Sentinel: Early Detection and Response for Global Catastrophes

By rai, NunoSempere @ 2025-11-18T03:48 (+71)

tl;dr: Sentinel is an open-source intelligence organization that rapidly identifies and reacts to global risks, particularly ones that are difficult to anticipate over longer time horizons. We have filled $700K of our ~$1.6M budget and are looking to fill the rest to expand and sustain our large-scale open-source monitoring of global catastrophic risks (GCRs).

About Sentinel

Sentinel is an open-source intelligence organization that focuses on rapidly identifying and reacting to global risks. During times of relative calm we build tooling and use the judgment of world-class forecasters to monitor events that could escalate into global catastrophes on relatively short timescales. We then distribute our resulting analysis through our weekly brief and on Twitter, which builds the distribution capacity and reputation that will allow for high leverage during times of crisis. During times of turmoil we focus on fast, permissionless action, like that of VaccinateCA.

Over the next few years, as AI becomes more capable and more widely integrated, dangers will become more chaotic and more common, and observe-orient-decide-act loops around new dangers (or even just the observe step) will need to become faster to make a difference. Big-picture strategic thinking is valuable when it works, but it often misses key details that a dedicated foresight effort working at a cadence of days and weeks, rather than months and years, would be able to catch and leverage.

Humanity’s portfolio of approaches to mitigating the potentially existential risks of a rapidly changing future needs to include a sufficiently large component that operates rapidly on short time horizons, makes comparatively few assumptions about the future, and thrives in uncertain and volatile conditions. In our view, too little is allocated to global-catastrophic-risk mitigation approaches that surf the wave of uncertainty as it happens, rather than trying to condition on a specific picture of the world ex ante.

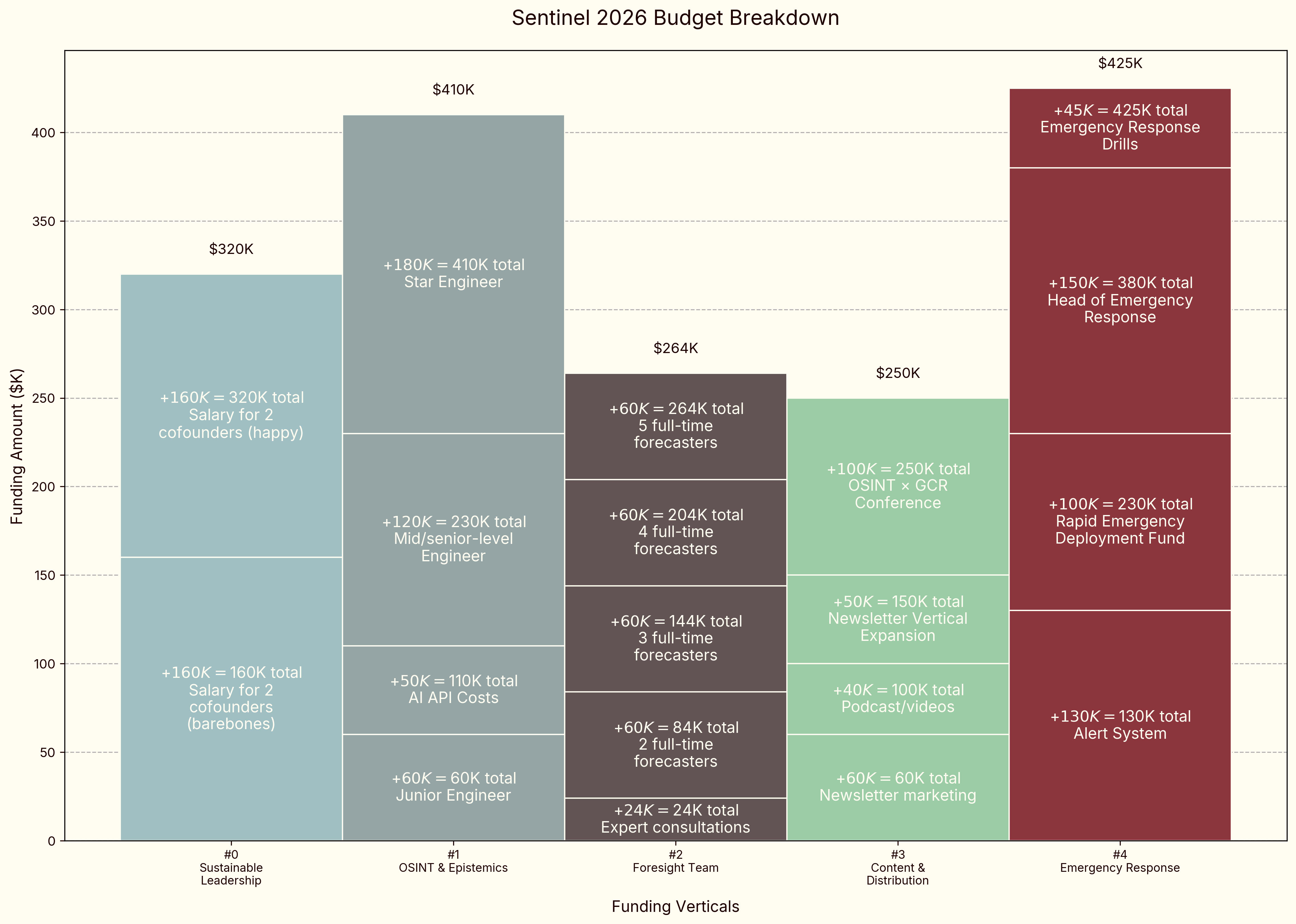

Where we're at. We have ~$700K on our balance sheet, which means we have partially funded the Sustainable Leadership, AI OSINT, Foresight Team, and Content & Distribution verticals. We have not yet funded the Emergency Response vertical. You can choose to provide general funding for us to distribute across the verticals as we see fit, fund a subset of verticals, or even fund a single project within a vertical.

Verticals & Concrete Projects

OSINT & Epistemics Software

The more engineering resources we have, the more of the following we can deliver:

- On the OSINT side:

  - Reddit/4chan monitoring: Track ~100K subreddits for early signals of AI-related incidents, novel pathogens, social unrest, or technical risks. At ~50¢ per subreddit for shallow parsing, covering all of them would come to roughly $50K in API usage, although we are finding that we can still get signal by having a cheaper model do an initial pass and using the more expensive models only for refinement.

  - Chinese military news monitoring: Automated translation and analysis of Chinese military and state media to detect escalation signals or policy shifts.

  - Pandemic symptom tracking: Monitor social media for unusual symptom clusters or discussion of novel diseases, like this discussion (in English) of chikungunya in Cuba.

  - Advanced real-time dashboards: Build interactive maps and visualizations (in the style of https://liveuamap.com/ or https://birdflurisk.com/) for crisis situations.

  - Specific signals depending on new threat models, e.g., Telegram channels, Weibo, satellite imagery, etc. In general we are a bit more excited about meta-level sources like Reddit and Twitter; however, we also have a very long list of individual sources we would monitor if we had the capacity.

- On the epistemics side, we are still ideating, but having access to world-class forecasters opens up a lot of opportunities to improve the state of AI forecasting.
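The pandemic symptom-tracking item above boils down to noticing when discussion of a symptom jumps well above its usual level. A minimal sketch of one simple way to do that, assuming daily mention counts per symptom keyword have already been extracted from social media (the counts, window, and threshold below are hypothetical, not Sentinel's pipeline), is a rolling z-score against a trailing baseline:

```python
from statistics import mean, pstdev


def flag_spikes(daily_counts, window=14, z_threshold=3.0):
    """Return the indices of days whose count is unusually high compared with
    the preceding `window` days, using a rolling z-score."""
    flagged = []
    for day in range(window, len(daily_counts)):
        baseline = daily_counts[day - window:day]
        mu = mean(baseline)
        sigma = pstdev(baseline)
        if sigma == 0:
            # Flat baseline: any increase at all counts as a spike.
            if daily_counts[day] > mu:
                flagged.append(day)
            continue
        if (daily_counts[day] - mu) / sigma >= z_threshold:
            flagged.append(day)
    return flagged


# Hypothetical daily counts of posts mentioning a symptom combination in one region.
counts = [4, 5, 3, 6, 4, 5, 4, 3, 5, 6, 4, 5, 4, 5, 6, 5, 21, 30]
print(flag_spikes(counts))  # → [16, 17]
```

A rolling z-score assumes roughly stable background counts and ignores weekly seasonality and sudden changes in platform usage, so it is best read as a first-pass filter whose hits a forecaster then reviews.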

We could grow our engineering team to 3 FTEs ($360K) and our API spending to $50K, up from half an FTE and around $500/month in API costs. So far, we have hired a junior Latin American developer at $3K/month.
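The Reddit item and the API figures above rest on a two-stage cascade: a cheap model screens everything, and an expensive model refines only what the cheap model flags. A back-of-the-envelope cost model shows why this helps; the 5¢ first-pass price and the 10% escalation rate are hypothetical placeholders, and only the ~100K subreddits and ~50¢ figure come from the text:

```python
def cascade_cost(n_items, cheap_cost, expensive_cost, pass_rate):
    """Cost of screening every item with a cheap model and sending only the
    fraction `pass_rate` that it flags on to an expensive model."""
    if not 0.0 <= pass_rate <= 1.0:
        raise ValueError("pass_rate must be between 0 and 1")
    return n_items * cheap_cost + n_items * pass_rate * expensive_cost


n_subreddits = 100_000
# Baseline: expensive model on everything, at the ~50 cents/subreddit quoted above.
expensive_only = n_subreddits * 0.50
# Hypothetical cascade: 5-cent first pass, 10% of subreddits escalated.
with_cascade = cascade_cost(n_subreddits, cheap_cost=0.05,
                            expensive_cost=0.50, pass_rate=0.10)
print(f"expensive only: ${expensive_only:,.0f}")  # → expensive only: $50,000
print(f"with cascade:   ${with_cascade:,.0f}")    # → with cascade:   $10,000
```

The saving comes entirely from a low pass-through rate: if the cheap model escalates most items, the cascade ends up costing more than running the expensive model alone.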

Foresight Team

We currently employ 5 forecasters part-time, which already gives us relatively broad coverage. They have been working below market rate because they believe in the mission, and we have not yet reached diminishing returns on their time.

More funding would allow us to:

- Buy more of their time to better integrate them into tooling

- Maintain the benefits of a stable forecasting team working together through time

- Track multiple escalation sources simultaneously rather than having to prioritize as ruthlessly

- Increase our early-detection delta, i.e., how far ahead of others we spot developments

- Increase forecasting quality by adding more forecasters

We could increase our forecasting spending from ~$85K a year to $264K a year by moving forecasters from part-time to full-time, increasing their number, or supplementing them with a research assistant. Note that, in general, forecasters are better value for money when part-time.

In addition, we could pay something like $2K/month for experts in areas where our forecasters could use deeper expertise, e.g.:

- China military analysis and geopolitical dynamics

- AI and AI safety specific talent

- Pandemic monitoring and biosecurity threats

- OSINT techniques and satellite imagery manipulation

- Other domains as risks evolve and new threats emerge

Emergency response

Once the foresight team encounters news or events that increase their estimated probability of a large catastrophe, we need to be able to realize the benefits of that signal by effecting action.

We think it’s relatively self-evident that coordination can be a limiting factor in the public's response to a crisis. For example, in the case of VaccinateCA, no one was responsible for ensuring that people could quickly and reliably find out where unspent vaccine doses were located. So, despite the vials being some of the most valuable stuff on earth, and this being common knowledge, they were being thrown away at the end of the day. VaccinateCA alleviated this bottleneck in a relatively permissionless way, with no official designation.

Another dynamic at play is the trend-following aspect of action. In Seeing the Smoke, the primary contribution Jacob Falkovich intended to make to the discourse was not facts about epidemiology, but rather permission to take COVID seriously. He was using his reputation to predictably trigger actions he thought were sane for the circumstances. We hope Sentinel will be early to gain conviction about legitimate risks so that we, too, can stake our reputation to spur action.

We want to learn the meta-lesson from instances of coordination scarcity by preparing for these kinds of actions during times of calm. To that end, we are excited about the following projects.

Alert System ($130K for development and first 12 months of operation)

In the past, we had a notion of a “reserve team” that we would call upon in an emergency. We still think flexible capacity that has opted in to being relied upon in an emergency is a great idea, but the way we went about it was unnecessarily limiting and not scalable. With our readership growing significantly, we want to create a multi-channel alert system for crisis awareness and coordination that elicits volunteers' capabilities at sign-up time.

This enables three things.

- Projects and individuals that wish to have crisis alerts of various kinds can sign up for those via SMS, voice calls, webhooks, Signal, WhatsApp, email, and any others we may wish to support in the future. We have a few individuals and organizations that have expressed interest in getting these alerts.

- We are no longer a bottleneck for recruiting since the system would be self-serve.

- In a crisis we would be able to quickly tap the individuals who have the most relevant skills, resources, and connections.

Funding this item would allow us to build the alerting system, AI-enabled onboarding for eliciting volunteer capabilities, and 12 months of support and operational costs.
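The alert system described above combines two pieces: fanning an alert out over each subscriber's chosen channels, and ranking opted-in volunteers by how well the capabilities they reported at sign-up match a crisis. A minimal in-memory sketch of that idea; the `Subscriber` and `AlertSystem` classes, topics, and skill tags are all hypothetical, and real delivery over SMS, Signal, webhooks, etc. would replace the stub sender functions:

```python
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class Subscriber:
    name: str
    topics: set[str]      # alert categories, e.g. {"pandemic"}
    channels: list[str]   # delivery channels, e.g. ["sms", "email"]
    skills: set[str] = field(default_factory=set)  # elicited at sign-up


class AlertSystem:
    def __init__(self, senders: dict[str, Callable[[str, str], None]]):
        # channel name -> function(recipient_name, message)
        self.senders = senders
        self.subscribers: list[Subscriber] = []

    def subscribe(self, sub: Subscriber) -> None:
        self.subscribers.append(sub)

    def broadcast(self, topic: str, message: str) -> int:
        """Deliver `message` to every subscriber of `topic` on each of their
        channels that has a configured sender; return the delivery count."""
        sent = 0
        for sub in self.subscribers:
            if topic not in sub.topics:
                continue
            for channel in sub.channels:
                sender = self.senders.get(channel)
                if sender is not None:
                    sender(sub.name, message)
                    sent += 1
        return sent

    def best_volunteers(self, needed: set[str], k: int = 3) -> list[str]:
        """Return up to k volunteers with the most of the needed skills,
        ties broken alphabetically; volunteers with none are skipped."""
        scored = [(len(sub.skills & needed), sub.name) for sub in self.subscribers]
        scored = [(score, name) for score, name in scored if score > 0]
        scored.sort(key=lambda pair: (-pair[0], pair[1]))
        return [name for _, name in scored[:k]]


# Usage with stub senders that just record deliveries in a list.
log = []
system = AlertSystem({
    "sms": lambda who, msg: log.append(("sms", who, msg)),
    "email": lambda who, msg: log.append(("email", who, msg)),
})
system.subscribe(Subscriber("alice", {"pandemic"}, ["sms", "email"], {"logistics"}))
system.subscribe(Subscriber("bob", {"ai-incident"}, ["email"], {"ml"}))
# carol's "signal" channel has no sender configured, so she gets no delivery.
system.subscribe(Subscriber("carol", {"pandemic"}, ["signal"], {"sequencing", "logistics"}))
print(system.broadcast("pandemic", "Unusual fever cluster reported"))  # → 2
print(system.best_volunteers({"logistics", "sequencing"}))  # → ['carol', 'alice']
```

Keeping channels behind a plain name-to-function mapping means adding a new channel later is a matter of registering one more sender, without touching subscription or matching logic.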

Head of Emergency Response ($150K/year)

There are large differences in people’s problem-solving creativity and the effectiveness of the solutions they implement during a crisis. Minds built for these fast-moving and high-stakes tasks end up achieving things like setting a record for time from company formation to first patient dosing of a vaccine.

We think an operational savant who develops emergency protocols, runs training exercises, and lays the groundwork for frictionless action during a catastrophe would be very valuable. Such a person is probably not cheap, however.

They could spend peacetime identifying potential partners, running drills, pre-negotiating collaboration agreements, mapping available resources and capabilities, and developing playbooks for different crisis scenarios.

Rapid Emergency Deployment Fund ($100K)

Relative to the speed at which events unfold in a crisis, it is plausible that getting funding could be a bottleneck, especially if the risk is too speculative for others (so there isn't common knowledge that costly action should be taken) but very bad in expectation as judged by Sentinel. Even waiting for a wire transfer might be too slow in some cases. We'd likely hold this fund in a mix of currencies and stablecoins.

This $100K buffer would be held for emergencies and kept in forms that make it easily deployable within 24 hours. Here are some things these funds could be used for:

- Enabling responders to travel to crisis locations for on-the-ground assessment and coordination

- Equipment and supplies (PPE, testing equipment, communications gear)

- Sequencing for novel pathogens

- Hiring contractors on short notice (translators, local fixers, technical specialists)

- Renting facilities or infrastructure

If you would like to fund this rapid deployment fund, please don’t hesitate to contact us.

Emergency Response Drills ($45K for 3 drills)

Making contact with reality is very important for learning. Even in the absence of a global catastrophe, mundane failures in communication, organization, and resource allocation can be flushed out relatively cheaply by running drills and exercises on smaller-scale issues.

For example, we could:

- Test communication protocols and alert system functionality

- Benchmark decision-making speed and coordination effectiveness

- Practice volunteer activation and task assignment

- Compensate participants for engaging

Content & Distribution

Once we have produced a forecasting or analysis product, it needs to be distributed in order to have an impact. To do this, we can hire fractional contractors for social media, design, podcasting, strategy, and advertising, totaling one to three FTEs at $60K to $150K.

Podcasts in particular seem like a good and popular way to reach a large audience in a high-bandwidth way. Rai spent one full-time month this year setting up the pipeline to produce them, which seems to have been worthwhile, although editing is expensive.

We could also organize a conference on Global Catastrophic Risks for about $100K, bringing together attendees from the OSINT community and the GCR space. We might get the resources needed for this gifted in kind by a funder who is offering us his luxurious villa for a weekend, though this could fall through for a variety of reasons.

Team

Our leadership team is composed of:

- Nuño Sempere. Cofounder of Samotsvety. He has consulted with large institutions like major AI labs, has experience managing teams of 10-20 forecasters, and a strong open source background.

- Rai Sur. Cofounder and CTO of the crypto fintech startup Alongside (now Universal), which raised over $13M from a16z and is still operating five years later. Systems he designed and whose development he oversaw secured $6M of assets in smart contracts with no hacks. He has experience managing large budgets and multiple employees. He also worked at Microsoft on a library that distributed the training and evaluation of AI models over clusters.

Our forecasting team is composed of:

- Lisa Murillo. Superforecaster with a focus on biological and geopolitical risks. In addition to Sentinel, works with the Swift Centre, Samotsvety, and RAND Forecasting Initiative.

- Tolga Bilge. Superforecaster and AI Policy Researcher at ControlAI.

- Vidur Kapur. Superforecaster at Good Judgment, Swift Centre, Samotsvety, RAND and a hedge fund.

- and an expert and extremely well connected geopolitics forecaster who prefers to remain anonymous.

This forecasting team is on board with building software that captures their current skill set, and with transitioning toward meta-level work: e.g., instead of spending time on X directly, forecasters can spend time deciding what intelligent tooling should be paying attention to.

We also recently hired a talented yet junior Latin American developer.

We are supported by Impact Ops for operations, which has allowed us to register as a 501(c)(3).

Track Record & Recent Progress

Foresight

At our current $300K/year funding level, we've been making sense of large-scale risks both periodically in our weekly brief and during singular, more worrying developments:

- Making Sense of Israel's Strike on Iran: rapid-response geopolitical analysis

- The US Executive vs Supreme Court: constitutional crisis scenario analysis

- Forecasting the Future of Recommender Systems: AI companions and addictive potential

- H5N1: Much More Than You Wanted To Know: contributed to Scott Alexander's analysis

- How Strategic AI Will Revolutionize Warfare: expert interview on drone warfare

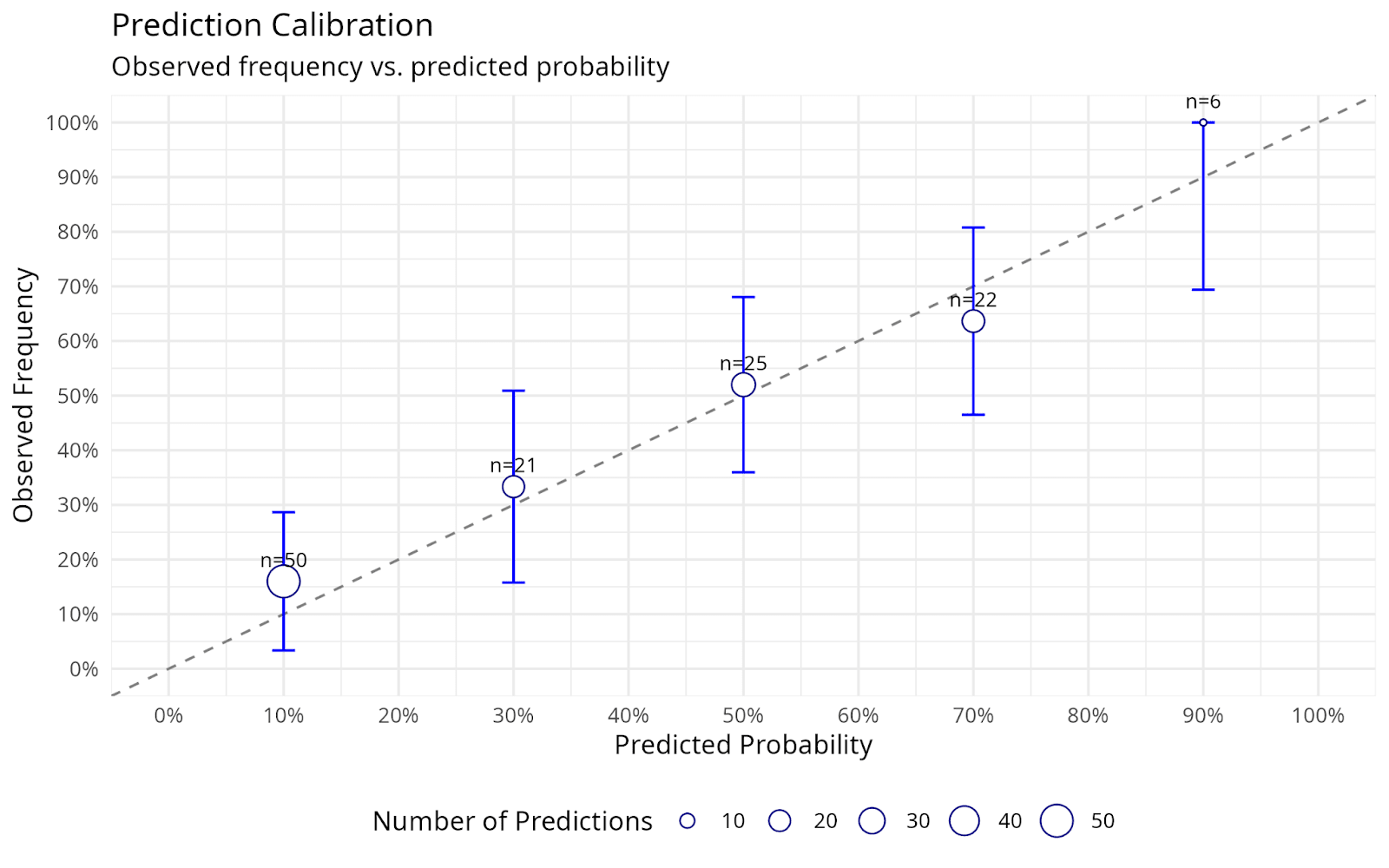

Our calibration chart looks as follows:

Our Brier score is 0.1777, but it’s unclear what to compare it to, since we don’t select for question difficulty. A reasonable comparison point might be Metaculus’s score on AI questions, or the Brier scores of individual Samotsvety forecasters.
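For readers less familiar with the metric: in its common binary form, the Brier score is the mean squared difference between each forecast probability and the outcome (1 if the event happened, 0 otherwise), so lower is better and always answering 50% scores exactly 0.25. A calibration chart bins forecasts by stated probability and compares each bin's average forecast with how often those events actually occurred. A minimal sketch of both, using made-up forecasts rather than Sentinel's data; the equal-width binning is one common choice, not necessarily how Sentinel's chart was produced:

```python
from collections import defaultdict


def brier_score(forecasts, outcomes):
    """Mean squared difference between probabilities and 0/1 outcomes."""
    if not forecasts or len(forecasts) != len(outcomes):
        raise ValueError("need non-empty inputs of equal length")
    return sum((p - o) ** 2 for p, o in zip(forecasts, outcomes)) / len(forecasts)


def calibration_table(forecasts, outcomes, n_bins=10):
    """Bin forecasts into equal-width probability bins and return, for each
    non-empty bin: (bin index, mean forecast, observed frequency, count)."""
    bins = defaultdict(list)
    for p, o in zip(forecasts, outcomes):
        # min() keeps p == 1.0 in the top bin rather than an extra bin.
        bins[min(int(p * n_bins), n_bins - 1)].append((p, o))
    rows = []
    for b in sorted(bins):
        probs, hits = zip(*bins[b])
        rows.append((b, sum(probs) / len(probs), sum(hits) / len(hits), len(probs)))
    return rows


# Made-up forecasts and resolutions, purely for illustration.
forecasts = [0.9, 0.8, 0.1, 0.3, 0.7, 0.05]
outcomes = [1, 1, 0, 1, 1, 0]
print(f"Brier: {brier_score(forecasts, outcomes):.3f}")  # → Brier: 0.107
for row in calibration_table(forecasts, outcomes, n_bins=5):
    print(row)
```

Because the Brier score depends on which questions are asked, a set of easy questions yields a low score regardless of skill, which is why comparing against an external baseline is hard.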

Software

Our "eye of sauron" repository contains our code for parsing generic sources; its README provides more details. We have also built more specialized tooling for tweet monitoring (see outputs here), since there are many small sources, which require their own specialized abstractions. We were also relatively early in demoing AI-enabled wargaming at the beginning of the year. Recently, we have started parsing Reddit.

Distribution

Since our last fundraise in November 2024, we've substantially improved our content quality and distribution pipeline:

- Twitter: 21.9K followers, getting 1K-30K views/week (higher when we detect more alarming signals)

- Newsletter: Grew 7.1x from 552 to 3,936 subscribers

- Revenue: $26.1K/year from 39 paid subscribers, despite having no paywalled material (this demonstrates genuine value to readers)

- Notable readers/endorsers: We have readers from DOGE, DeepMind, OpenAI, Anthropic, capital allocators, US Gov, diplomats, and safety engineers. Neel Nanda, Seb Krier, Malcolm Nance, Brian Potter, Peter Wildeford, PauseAI, Rob Wiblin, and David Manheim have endorsed or amplified us on Twitter.

Emergency response

This year we de-emphasized emergency response due to funding constraints. However, we think that a big chunk of our impact depends on how our forecasts are used in scenarios where we detect a large-scale catastrophe, so it would be valuable to spend more effort here, as outlined above in the concrete projects section.

Impact estimate

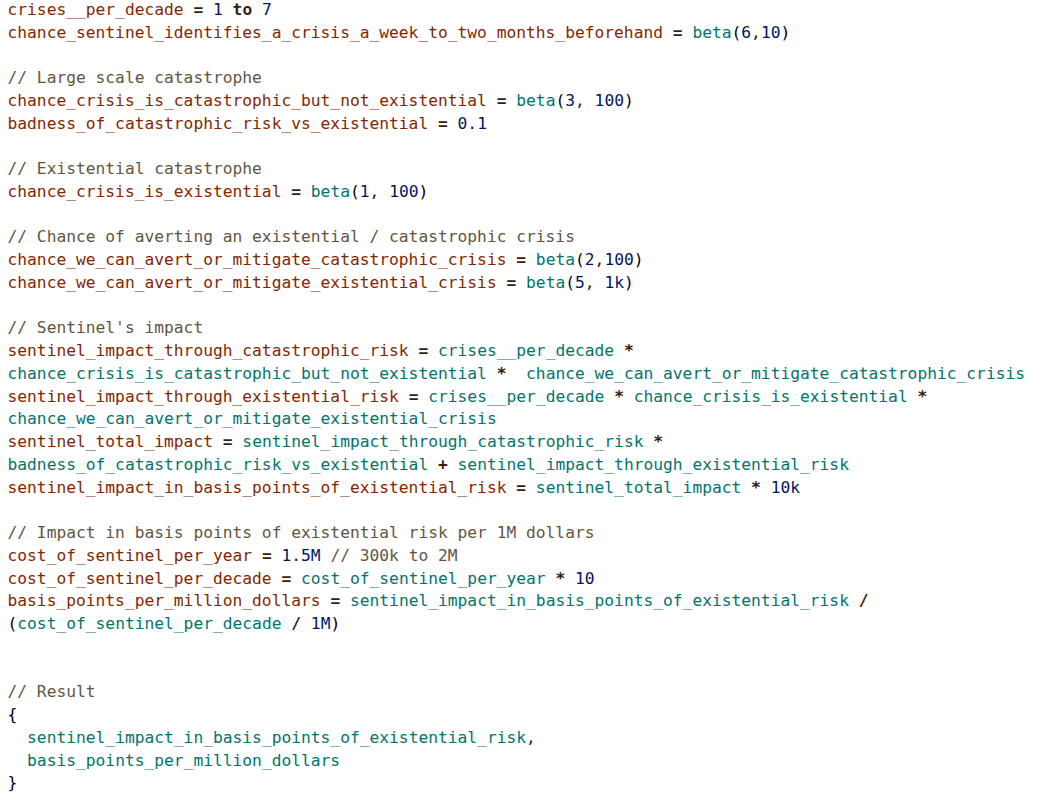

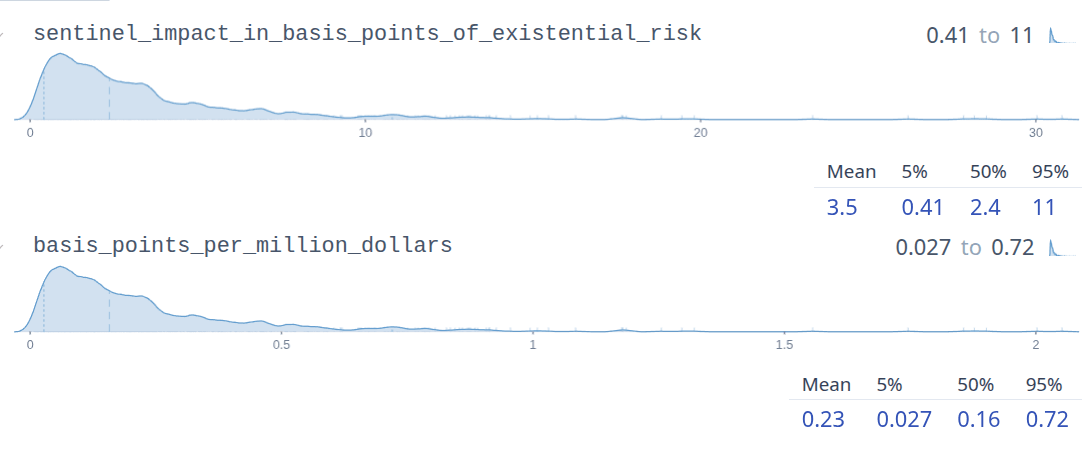

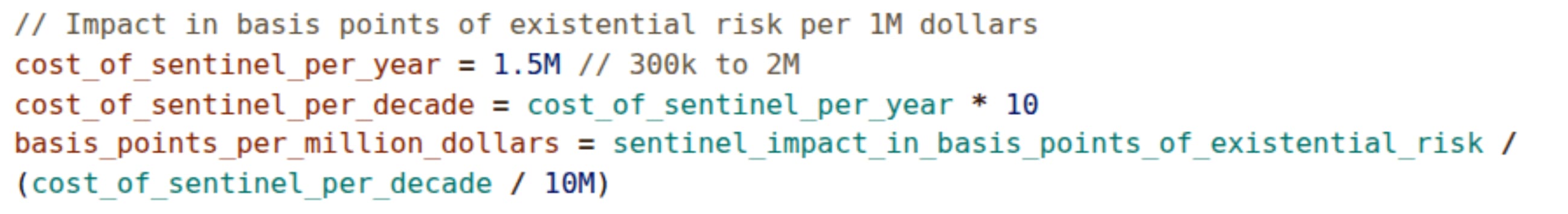

At the full funding level, we estimate Sentinel’s impact at 3.5 (0.4 to 11) basis points of existential risk averted, corresponding to 0.27 to 7.2 basis points of existential risk averted per million dollars:

We think a meaningful threshold is total risk divided by total funding available to prevent it: if $20B is working to prevent a 10% existential risk, that corresponds to $2B per 1%, or $20M per basis point. You can read more about the virtue of this approach here. Sentinel is cheaper than that threshold. In one sense, the above estimate is a lower bound on the value of the project as a whole, since it doesn't attempt to model, e.g., value of information or the non-risk sensemaking derived from our projects. In another sense, since this is our own estimate, it might also be too optimistic. We encourage you to play around with the model and form your own view. (Tip: use this tool to quickly translate your probabilities over [0,1] into beta distributions.)
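The threshold comparison above is plain division. Spelled out with the post's illustrative $20B and 10% figures and the ~$1.6M full budget from the tl;dr (the Sentinel ratios below are simple arithmetic on the post's point estimates, not outputs of Sentinel's model):

```python
BASIS_POINTS_PER_UNIT = 10_000  # 1 basis point = 0.01% = 1/10,000


def cost_per_basis_point(funding_usd, risk):
    """Dollars per basis point when `funding_usd` is spent against a risk of
    probability `risk` (between 0 and 1)."""
    return funding_usd / (risk * BASIS_POINTS_PER_UNIT)


# Illustrative field-wide threshold from the text: $20B against a 10% risk.
threshold = cost_per_basis_point(20e9, 0.10)  # $20M per basis point

# Point estimates from the post: ~3.5 bp averted on a ~$1.6M budget.
sentinel_usd_per_bp = 1.6e6 / 3.5
sentinel_bp_per_million = 3.5 / 1.6

print(f"threshold: ${threshold:,.0f} per bp")           # → threshold: $20,000,000 per bp
print(f"Sentinel: ${sentinel_usd_per_bp:,.0f} per bp")  # → Sentinel: $457,143 per bp
print(f"Sentinel: {sentinel_bp_per_million:.2f} bp per $1M")  # → Sentinel: 2.19 bp per $1M
```

Dividing point estimates gives roughly 2.2 bp per $1M, inside the 0.27 to 7.2 range quoted above; that range presumably comes from propagating the model's full distributions rather than from dividing interval endpoints by a fixed budget.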

How Sentinel fits with other projects in the space

vs. Epoch/METR: Epoch and METR focus on trend lines and benchmarks, which provide a good, broad, general foundation for what to expect if those trends continue. In contrast, we monitor specific threats as they arise, e.g., AI psychosis cases on Reddit, autonomous drone warfare developments, and AI-enabled influence operations. We view their work as immensely valuable in some futures but too brittle in a large swath of others.

vs. Forecasting Research Institute: FRI does valuable work on forecasting methodology and expert aggregation. In contrast, we monitor events in real time with much faster action loops. We may also have better forecaster selection, and we place more emphasis on figuring things out ourselves rather than polling experts. We view FRI's focus on a strong middle-management layer producing academic publications, which take months or years, as a bet on attaining a particular kind of influence and legible expertise that might be too costly in rapidly developing situations.

vs. AI Futures Project: The AI Futures Project develops detailed scenarios through more top-down reasoning. We work bottom-up, processing signals as they arise in the environment rather than starting from a theoretical framework. We think that top-down reasoning has a poor track record when stacking many conditionals. The likelihood of some surprising factor outside their model is very high: public sentiment against AI's water usage slowing AI down the way public worries stopped nuclear power in Germany, AI becoming important earlier but in a different way (through autonomous drones rather than LLMs), or some other gray swan. Having broad situational awareness of the near-term future is more likely to pay off than deep consideration of narrow medium-term scenarios.

Distribution strategy: We think there are already many people schmoozing in DC and SF. We see ourselves as feeding into superconnectors, not as being those superconnectors ourselves. We believe that putting out really good material on the internet, as Gwern has, is a viable path to influence.

Political balance: About half of our forecasters lean left-wing politically, and about half lean right-wing. As a result, our briefings tend to present both partisan perspectives on contentious topics, which gains us credibility with readers on the right and means that when we do give a warning, it comes across as more legitimate. In contrast, our sense is that the EA ecosystem leans left-wing, which has led it to make bets that cost it influence with the current administration and in the current zeitgeist.

Donate and Follow Us

Ways to donate:

- GiveButter. Probably the easiest for card donations.

- Manifund. If you already have funds there or want to donate a lump sum to a platform with features to discover other cool projects.

- Wire.

- Other. For example, if you donate appreciated stock or crypto, you can get a tax benefit, at least in the US. Contact Rai.

To follow our work, see our weekly brief and our Twitter.

MaxRa @ 2025-11-18T14:00 (+7)

Huge fan of your work, one of the few newsletters I read every week.

Random question, I wonder whether prediction markets are a potentially promising income stream for the team? E.g. Polymarket seems to have a bunch of overlap with the topics you're covering.

Also, thanks for making your news-parsing code open source; I was often curious what it looks like under the hood.

NunoSempere @ 2025-11-18T19:40 (+4)

I think doing it successfully would take too large a chunk of my time, but I was considering it in case Sentinel fails

Joey Bream @ 2025-11-18T14:15 (+6)

Nice touch including the funding verticals. Great work!

NunoSempere @ 2025-11-18T19:43 (+2)

:)

Vasco Grilo🔸 @ 2025-12-15T10:41 (+2)

Thanks for the update, Nuno and Rai! I read the summary of the weekly brief every week.

The last 10 M should be 1 M?

NunoSempere @ 2025-12-16T00:13 (+4)

Thanks!!!