Saving lives in normal times is better to improve the longterm future than doing so in catastrophes?

By Vasco Grilo🔸 @ 2024-04-20T08:37 (+13)

Summary

- Interventions in the effective altruism community are usually assessed under 2 different frameworks: existential risk mitigation and nearterm welfare improvement. It looks like 2 distinct frameworks are needed given the difficulty of comparing nearterm and longterm effects. However, I do not think this is quite the right comparison under a longtermist perspective, where most of the expected value of one’s actions results from influencing the longterm future, and the indirect longterm effects of saving lives outside catastrophes cannot be neglected.

- In this case, I believe it is better to use a single framework for assessing interventions saving human lives in catastrophes and normal times. One way of doing this, which I consider in this post, is supposing the benefits of saving one life are a function of the population size.

- Assuming the benefits of saving a life are proportional to the ratio between the initial and final population, and that the cost to save a life does not depend on this ratio, it looks like saving lives in normal times is better to improve the longterm future than doing so in catastrophes.

- Many in the effective altruism community argue for focussing on existential risk mitigation given its high neglectedness relative to its importance, i.e. high ratio between potential (astronomical) benefits and (tiny) amount of resources currently being dedicated to it. However, this does not appear to be the right measure of whether a problem is neglected in the relevant sense. One has to consider not only the benefits of solving a problem, but also multiply these by their probability, and arguably expect spending to be proportional to the product.

- The above is in contrast to Nick Bostrom’s maxipok rule.

- I question this as follows under my framework:

- The benefits of saving a life tend to infinity as the post-catastrophe population goes to 0, i.e. as one is increasingly confident it would cause human extinction.

- Yet, the probability of the severity of a catastrophe tends to 0 as severity increases.

- The 2 points above have opposing effects in terms of the expected value of saving a human life, and one cannot consider each of them in isolation because they are not independent.

- I think the expected value of saving a human life tends to 0 under reasonable assumptions as the severity of the catastrophe increases.

- As a 1st approximation, I believe all interventions could be fairly assessed based on a single metric like the number of disability-adjusted life years averted per dollar, as calculated through a standard cost-effectiveness analysis.

Introduction

Interventions in the effective altruism community are usually assessed under 2 different frameworks:

- Existential risk mitigation, where the goal is often decreasing extinction or global catastrophic risk associated with catastrophes in the next few decades. For example, the interventions supported by the Long-Term Future Fund (LTFF).

- Nearterm welfare improvement, where the goal is improving the lives of animals or humans in the next few decades. For example, the interventions supported by the Animal Welfare Fund and GiveWell’s funds.

In agreement with the above and its commitment to worldview diversification, Open Philanthropy says:

So far, the focus areas we have selected fall into one of two broad categories: Global Health and Wellbeing (GHW) and Global Catastrophic Risks (GCR). We summarize the key differences between these portfolios as follows:

- While GCR grants tend to be evaluated based on something like “How much this grant reduces the chance of a catastrophic event that endangers billions of people”, GHW grants tend to be evaluated based on something like “How much this grant increases health (denominated in e.g. life-years) and/or wellbeing, worldwide.”

- The GHW team places greater weight on evidence, precedent, and track record in its giving; the GCR team tends to focus on problems and interventions where evidence and track records are often comparatively thin. (That said, the GHW team does support a significant amount of low-probability but high-upside work like policy advocacy and scientific research.)

- The GCR team’s work could be hugely important, but it’s very hard to answer questions like “How will we know whether this work is on track to have an impact?” We can track intermediate impacts and learn to some degree, but some key premises likely won’t become very clear for decades or more. (Our primary goal is for catastrophic events not to happen, and to the extent we succeed, it can be hard to learn from the absence of events.) By contrast, we generally expect the work of the GHW team to be more likely to result in recognizable impact on a given ~10-year time frame, and to be more amenable to learning and changing course as we go.

It looks like 2 distinct frameworks are needed given the difficulty of comparing nearterm and longterm effects. However, I do not think this is quite the right comparison under a longtermist perspective, where most of the expected value of one’s actions results from influencing the longterm future, and the indirect longterm effects of saving lives outside catastrophes cannot be neglected. In this case, I believe it is better to use a single framework for assessing interventions saving human lives in catastrophes and normal times. One way of doing this, which I consider in this post, is supposing the benefits of saving one life are a function of the population size.

Note Rethink Priorities’ cross-cause cost-effectiveness model (CCM) also estimates the cost-effectiveness of interventions in the 2 aforementioned categories under the same metric (DALY/$). Nevertheless, I would say it underestimates the benefits of saving lives in normal times by neglecting their indirect longterm effects, which should be considered if one holds a longtermist view.

Expected value density of the benefits and cost-effectiveness of saving a life

Denoting the population at the start and end of any period of 1 year (e.g. a calendar year) by $P_i$ and $P_f$, which I will refer to as the initial and final population, I assume:

- $P_i/P_f$ follows a Pareto distribution (power law), which means its probability density function (PDF) is $f \propto (P_i/P_f)^{-(\alpha + 1)}$, where $\alpha$ is the tail index.

- Given the probability of $P_i/P_f$ being higher than a given ratio $r$ is $p \propto r^{-\alpha}$, one can determine $\alpha$ from $\alpha = \ln(p_1/p_2)/\ln(r_2/r_1)$, where $p_1$ and $p_2$ are the tail risks respecting the ratios $r_1$ and $r_2$.

- I would not agree with assuming a Pareto distribution for the reduction in population as a fraction of the initial population ($1 - P_f/P_i$).

- This fraction is limited to 1, whereas a Pareto distribution can take an arbitrarily large value.

- So assuming a Pareto would mean a reduction in population could exceed the initial population, which does not make sense.

- The benefits of saving a life are $B = B_0 (P_i/P_f)^{\epsilon_B}$, where:

- $B_0$ are the benefits of saving a life in normal times.

- $\epsilon_B$ is the elasticity of the benefits with respect to the ratio between the initial and final population.

- $\epsilon_B > 0$ for the benefits to increase as the final population decreases.

- The cost of saving a life is $C = C_0 (P_i/P_f)^{\epsilon_C}$, where:

- $C_0$ is the cost to save a life in normal times.

- $\epsilon_C$ is the elasticity of the cost with respect to the ratio between the initial and final population.

Consequently, the expected value density of:

- The benefits of saving a life is $f B \propto (P_i/P_f)^{\epsilon_B - \alpha - 1}$.

- The cost-effectiveness of saving a life is $f B/C \propto (P_i/P_f)^{\epsilon_B - \epsilon_C - \alpha - 1}$.

I guess:

- $\epsilon_B = 1$, as Carl Shulman seems to suggest in a post on the flow-through effects of saving a life that the respective indirect longterm effects, in terms of the time by which humanity’s trajectory is advanced, are inversely proportional to the population when the life is saved[1].

- $\epsilon_C = 0$, since I feel like the effect of having more opportunities to save lives over periods with lower final population is roughly offset by the greater difficulty of preparing to take advantage of those opportunities.

As a result, the expected value densities of the benefits and cost-effectiveness of saving a life are both proportional to $(P_i/P_f)^{-\alpha}$, which tends to 0 as the final population decreases. So it looks like saving lives in normal times is better to improve the longterm future than doing so in catastrophes.
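The scaling argument above can be sketched numerically. This is only an illustration under stated assumptions, not code from the post: the function name `relative_ev_density` and its parameter names are hypothetical, the PDF of the ratio between the initial and final population is taken to be proportional to the ratio raised to minus (tail index + 1), and the benefits and cost of saving a life are taken to scale as powers of that ratio.

```python
def relative_ev_density(ratio, alpha, eps_benefit=1.0, eps_cost=0.0):
    """Expected value density of the cost-effectiveness of saving a life,
    relative to its value at ratio = 1 (normal times).

    Assumptions (all names here are illustrative):
      - ratio is the initial population divided by the final population
      - the PDF of ratio is proportional to ratio**-(alpha + 1)
      - benefits are proportional to ratio**eps_benefit
      - cost is proportional to ratio**eps_cost
    """
    if ratio <= 0:
        raise ValueError("ratio must be positive")
    exponent = eps_benefit - eps_cost - (alpha + 1)
    return ratio ** exponent


# With eps_benefit = 1 and eps_cost = 0 the exponent reduces to -alpha,
# so the density shrinks as the final population gets smaller.
for ratio in (1, 10, 100, 1000):
    print(ratio, relative_ev_density(ratio, alpha=0.153))
```

Changing `eps_benefit` or `eps_cost` shows how sensitive the conclusion is to the two elasticities: the density only decreases with severity while `eps_benefit - eps_cost` stays below `alpha + 1`.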

I got values for the tail index $\alpha$ of 0.153 (= ln(0.0905/0.01)/ln(2.02*10^6/1.11)) and 0.0835 (= ln(0.20/0.06)/ln(2.02*10^6/1.11)) based on the predictions of the superforecasters and experts of the Existential Risk Persuasion Tournament (XPT) for the global catastrophic and extinction risk between 2023 and 2100:

- Global catastrophic risk refers to more than 10 % of humans dying, so the corresponding ratio $r_1$ is 1.11 (= 1/(1 - 0.1)).

- Extinction risk refers to less than 5 k people surviving, and the global population is projected to be 10.1 billion in 2061 ((2023 + 2100)/2 = 2061.5), which is in the middle of the relevant period, so the corresponding ratio $r_2$ is 2.02 M (= 10.1*10^9/(5*10^3)).

- Superforecasters predicted a global catastrophic and extinction risk of 9.05 % and 1 %, and experts 20 % and 6 % (see Table 1).
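The tail index arithmetic above can be reproduced in a few lines. This is a minimal sketch that assumes the tail risk is proportional to the ratio raised to minus the tail index; the function name `tail_index` and the variable names are hypothetical, and the risk values are the ones appearing in the calculations above.

```python
import math


def tail_index(p1, p2, r1, r2):
    """Tail index implied by two tail risks p1 > p2 at ratios r1 < r2,
    assuming P(ratio > r) is proportional to r**-alpha."""
    return math.log(p1 / p2) / math.log(r2 / r1)


r_catastrophe = 1 / (1 - 0.1)  # more than 10 % of humans dying
r_extinction = 10.1e9 / 5e3    # fewer than 5 k survivors out of 10.1 billion

alpha_superforecasters = tail_index(0.0905, 0.01, r_catastrophe, r_extinction)
alpha_experts = tail_index(0.20, 0.06, r_catastrophe, r_extinction)

print(round(alpha_superforecasters, 3), round(alpha_experts, 4))
```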

For the tail indices I mentioned, the expected value densities of the benefits and cost-effectiveness of saving a life are 34.8 % (= (10^3)^-0.153) and 56.2 % (= (10^3)^-0.0835) as high for a final population 0.1 % (= 10^-3) as large. Nonetheless, I would say the above tail indices are overestimates, as I suspect extinction risk was overestimated in the XPT. The annual risk of human extinction from nuclear war from 2023 to 2050 estimated by the superforecasters, domain experts, general existential risk experts, and non-domain experts of the XPT is 602 k, 7.23 M, 10.3 M and 4.22 M times mine.

The tail index would be 0.313 (= ln(0.0905/0.001)/ln(2.02*10^6/1.11)) for my guess of an extinction risk from 2023 to 2100 of around 0.1 % (essentially from transformative artificial intelligence), and superforecasters’ global catastrophic risk of 9.05 %. In this case, the expected value densities of the benefits and cost-effectiveness of saving a life would be 11.5 % (= (10^3)^-0.313) as high for a final population 0.1 % as large.
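As a quick check of the percentages quoted for the three tail indices, the sketch below evaluates the ratio raised to minus the tail index for a final population 0.1 % as large as the initial one. The dictionary labels and variable names are illustrative only.

```python
tail_indices = {
    "XPT superforecasters": 0.153,
    "XPT experts": 0.0835,
    "author's guess": 0.313,
}
ratio = 1e3  # initial population / final population

# Expected value density relative to normal times, assuming it is
# proportional to ratio**-alpha.
shares = {label: ratio ** -alpha for label, alpha in tail_indices.items()}

for label, share in shares.items():
    print(f"{label}: {share:.1%}")
```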

Existential risk mitigation is not neglected?

Many in the effective altruism community argue for focussing on existential risk mitigation given its high neglectedness relative to its importance, i.e. high ratio between potential (astronomical) benefits and (tiny) amount of resources currently being dedicated to it. However, this does not appear to be the right measure of whether a problem is neglected in the relevant sense. One has to consider not only the benefits of solving a problem, but also multiply these by their PDF, and arguably expect spending to be proportional to the product, i.e. to the expected value density of the benefits. Focussing on this has implications for what is fairly/unfairly neglected. To illustrate:

- In the context of war deaths, the tail index is “1.35 to 1.74, with a mean of 1.60”. So the PDF of the benefits is proportional to “deaths”^-2.6 (= “deaths”^-(“tail index” + 1)).

- So I think spending should a priori be proportional to “deaths”^-1.6 (= “deaths”*“deaths”^-2.6).

- As a consequence, if the goal is minimising war deaths[2], spending to save lives in wars 1 k times as deadly should be 0.00158 % (= (10^3)^(-1.6)) as large.
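The spending rule in the list above can be written as a one-line function. This is a sketch under the stated assumptions (PDF of deaths proportional to deaths^-(tail index + 1), spending proportional to deaths times the PDF); `relative_spending` is a hypothetical name.

```python
TAIL_INDEX = 1.6  # mean tail index for war deaths quoted above


def relative_spending(severity_multiple, tail_index=TAIL_INDEX):
    """Spending on wars `severity_multiple` times as deadly, relative to baseline.

    Assumes spending is proportional to deaths * PDF(deaths), with the PDF
    proportional to deaths**-(tail_index + 1), i.e. to deaths**-tail_index.
    """
    return severity_multiple ** -tail_index


print(f"{relative_spending(1e3):.5%}")
```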

Here is an example of a discussion which apparently does not account for the probability of the benefits being realised. In Founders Pledge’s report Philanthropy to the Right of Boom, Christian Ruhl says:

suppose for the sake of argument that a nuclear terrorist attack could kill 100,000 people, and an all-out nuclear war could kill 1 billion people. All else equal, in this scenario it would be 10,000 times more effective to focus on preventing all-out war than it is to focus on nuclear terrorism.[8]

Here is footnote 8:

All else, obviously, is not equal. Questions about the tractability of escalation management are crucial.

Here is the paragraph following what I quoted above:

Generalizing this pattern, philanthropists ought to prioritize the largest nuclear wars (again, all else equal) when thinking about additional resources at the margin.

However, as I showed, the Pareto distribution describing war deaths is such that the expected value density of deaths decreases with war severity. So, all else equal except for the probability of a war having a given size, it may well be better to address smaller wars.

Here is another example where the probability of the benefits being realised is seemingly not adequately considered. Cotton-Barratt 2020 says “it’s usually best to invest significantly into strengthening all three defence layers”:

- Prevention. “First, how does it start causing damage?”.

- Response. “Second, how does it reach the scale of a global catastrophe?”.

- Resilience. “Third, how does it reach everyone?”.

“This is because the same relative change of each probability will have the same effect on the extinction probability”. I agree with this, but I wonder whether tail risk is the relevant metric. I think it is better to look into the expected value density of the cost-effectiveness of saving a life, accounting for indirect longterm effects as I did. I predict this expected value density to be higher for the 1st layers, which correspond to a lower severity, but are more likely to be needed. So, to equalise the marginal cost-effectiveness of additional investments across all layers, it may well be better to invest more in prevention than in response, and more in response than in resilience.

Maxipok is not ok?

My conclusion that saving lives in normal times is better to improve the longterm future than doing so in catastrophes is in contrast to Nick Bostrom’s maxipok rule:

Maximize the probability of an “OK outcome,” where an OK outcome is any outcome that avoids existential catastrophe.

I question the above as follows under my framework:

- The benefits of saving a life tend to infinity as the post-catastrophe population goes to 0, i.e. as one is increasingly confident it would cause human extinction.

- Yet, the PDF of the severity of the catastrophe (ratio between the pre- and post-catastrophe population) tends to 0 in that case.

- The 2 points above have opposing effects in terms of the expected value of saving a human life, and one cannot consider each of them in isolation because they are not independent.

- The 2 points are encapsulated in the expected value density of the benefits of saving a life, which I think tends to 0 under reasonable assumptions as the severity of the catastrophe increases.

I wonder which of the following heuristics is better to improve the longterm future. Maximising:

- The probability of nearterm human welfare not dropping to zero (roughly as in maxipok).

- Nearterm human welfare.

- Nearterm welfare (not only of humans, but all sentient beings, including animals and beings with artificial sentience).

Maxipok matches the 1st of these to the extent nearterm risk of human extinction is a good proxy for nearterm existential risk[3], so I guess Nick would suggest the 1st of the above is the best heuristic. Nevertheless, it naively seems to me that the last one is broader:

- It depends on the whole distribution of welfare instead of just its left tail.

- It encompasses all sentient beings instead of just humans.

So a priori I would conclude maximising nearterm welfare is the best heuristic among the above. Accordingly, as a 1st approximation, I believe all interventions could be fairly assessed based on a single metric like the number of disability-adjusted life years (DALYs) averted per dollar, as calculated through a standard cost-effectiveness analysis[4] (CEA). Ideally, cost-effectiveness analyses would account for effects on non-human beings too.

[1] Here is the relevant excerpt:

For example, suppose one saved a drowning child 10,000 years ago, when the human population was estimated to be only in the millions. For convenience, we’ll posit a little over 7 million, 1/1000th of the current population. Since the child would add to population pressures on food supplies and disease risk, the effective population/economic boost could range from a fraction of a lifetime to a couple of lifetimes (via children), depending on the frequency of famine conditions. Famines were not annual and population fluctuated on a time scale of decades, so I will use 20 years of additional life expectancy.

So, for ~ 20 years the ancient population would be 1/7,000,000th greater, and economic output/technological advance. We might cut this to 1/10,000,000 to reflect reduced availability of other inputs, although increasing returns could cut the other way. Using 1/10,000,000 cumulative world economic output would reach the same point ~ 1/500,000th of a year faster. An extra 1/500,000th of a year with around our current population of ~7 billion would amount to an additional ~14,000 life -years, 700 times the contemporary increase in life years lived. Moreover, those extra lives on average have a higher standard of living than their ancient counterparts.

Readers familiar with Nick Bostrom’s paper on astronomical waste will see that this is a historical version of the same logic: when future populations will be far larger, expediting that process even slightly can affect the existence of many people. We cut off our analysis with current populations, but the greater the population this growth process will reach, the greater long-run impact of technological speedup from saving ancient lives.

[2] This is a decent goal in the model I presented previously if the post-catastrophe population is not too different from the pre-catastrophe one.

[3] Human extinction may not be an existential catastrophe if it is followed by the emergence of another intelligent species or caused by benevolent artificial intelligence.

[4] I prefer focusing on wellbeing-adjusted life years (WELLBYs) instead of DALYs, but both work for the point I am making as long as they are calculated through a standard CEA. Elliott Thornley and Carl Shulman argued longtermists should commit to acting in accordance with a catastrophe policy driven by standard cost-benefit analysis (CBA), but only in the political sphere, whereas I think the same holds in other contexts.

Owen Cotton-Barratt @ 2024-04-20T14:09 (+12)

I'm worried that modelling the tail risk here as a power law is doing a lot of work, since it's an assumption which makes the risk of very large events quite small (especially since you're taking a power law in the ratio, aside from the threshold from requiring a certain number of humans to have a viable population, the structure of the assumption essentially gives that extinction is impossible).

But we know from (the fancifully named) dragon king theory that the very largest events are often substantially larger than would be predicted by power law extrapolation.

Vasco Grilo @ 2024-04-20T22:03 (+2)

Thanks for the critique, Owen! I strongly upvoted it.

I'm worried that modelling the tail risk here as a power law is doing a lot of work, since it's an assumption which makes the risk of very large events quite small (especially since you're taking a power law in the ratio

Assuming the PDF of the ratio between the initial and final population follows a loguniform distribution (instead of a power law), the expected value density of the cost-effectiveness of saving a life would be constant, i.e. it would not depend on the severity of the catastrophe. However, I think assuming a loguniform distribution for the ratio between the initial and final population majorly overestimates tail risk. For example, I think a population loss (over my period length of 1 year[1]) of 90 % to 99 % (ratio between the initial and final population of 10 to 100) is more likely than a population loss of 99.99 % to 99.999 % (ratio between the initial and final population of 10 k to 100 k), whereas a loguniform distribution would predict both of these to be equally likely.
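The contrast between the two distributions can be made concrete by comparing the two population-loss bands mentioned above. This is only a sketch: the function names are hypothetical, the bounds `R_MIN` and `R_MAX` of the loguniform support are arbitrary assumptions, and the power-law case reuses the superforecaster-based tail index of 0.153 from the post.

```python
import math

ALPHA = 0.153            # superforecaster-based tail index from the post
R_MIN, R_MAX = 1.0, 1e6  # hypothetical support of the loguniform ratio


def loguniform_band_probability(low, high, r_min=R_MIN, r_max=R_MAX):
    """P(low < ratio < high) for a ratio that is loguniform on [r_min, r_max]."""
    return math.log(high / low) / math.log(r_max / r_min)


def power_law_band_weight(low, high, alpha=ALPHA):
    """P(low < ratio < high), up to a constant factor, when P(ratio > r)
    is proportional to r**-alpha."""
    return low ** -alpha - high ** -alpha


# Population loss of 90 % to 99 % (ratio 10 to 100) versus
# 99.99 % to 99.999 % (ratio 10 k to 100 k).
mild, severe = (10, 100), (1e4, 1e5)

loguniform_odds = loguniform_band_probability(*mild) / loguniform_band_probability(*severe)
power_law_odds = power_law_band_weight(*mild) / power_law_band_weight(*severe)

print(f"loguniform: mild band is {loguniform_odds:.2f} times as likely as the severe band")
print(f"power law:  mild band is {power_law_odds:.2f} times as likely as the severe band")
```

Because the two bands are 3 orders of magnitude apart, the power-law odds equal 1000 raised to the tail index, whereas the loguniform odds are 1 regardless of the chosen support.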

aside from the threshold from requiring a certain number of humans to have a viable population

My reduction in population is supposed to refer to a period of 1 year, but the above only decreases population over longer horizons.

the structure of the assumption essentially gives that extinction is impossible

I think human extinction over 1 year is extremely unlikely. I estimated 5.93*10^-12 for nuclear wars, 2.20*10^-14 for asteroids and comets, 3.38*10^-14 for supervolcanoes, a prior of 6.36*10^-14 for wars, and a prior of 4.35*10^-15 for terrorist attacks.

But we know from (the fancifully named) dragon king theory that the very largest events are often substantially larger than would be predicted by power law extrapolation.

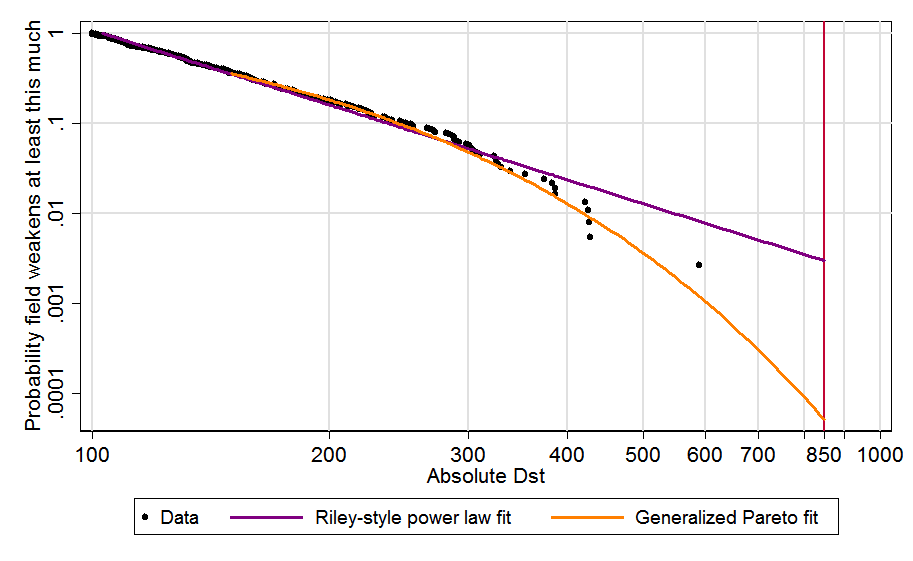

Interesting! I did not know about that theory. On the other hand, there are counterexamples. David Roodman has argued the tail risk of solar storms decreases faster than predicted by a power law:

I have also found the tail risk of wars decreases faster than predicted by a power law:

Do you have a sense of the extent to which the dragon king theory applies in the context of deaths in catastrophes?

[1] I have now clarified this in the post.

Owen Cotton-Barratt @ 2024-04-20T22:45 (+5)

I think human extinction over 1 year is extremely unlikely. I estimated 5.93*10^-12 for nuclear wars, 2.20*10^-14 for asteroids and comets, 3.38*10^-14 for supervolcanoes, a prior of 6.36*10^-14 for wars, and a prior of 4.35*10^-15 for terrorist attacks.

Without having dug into them closely, these numbers don't seem crazy to me for the current state of the world. I think that the risk of human extinction over 1 year is almost all driven by some powerful new technology (with residues for the wilder astrophysical disasters, and the rise of some powerful ideology which somehow leads there). But this is an important class! In general dragon kings operate via something which is mechanically different than the more tame parts of the distribution, and "new technology" could totally facilitate that.

Do you have a sense of the extent to which the dragon king theory applies in the context of deaths in catastrophes?

Unfortunately, for the relevant part of the curve (catastrophes large enough to wipe out large fractions of the population) we have no data, so we'll be relying on theory. My understanding (based significantly just on the "mechanisms" section of that wikipedia page) is that dragon kings tend to arise in cases where there's a qualitatively different mechanism which causes the very large events but doesn't show up in the distribution of smaller events. In some cases we might not have such a mechanism, and in others we might. It certainly seems plausible to me when considering catastrophes (and this is enough to drive significant concern, because if we can't rule it out it's prudent to be concerned, and risk having wasted some resources if we turn out to be in a world where the total risk is extremely small), via the kind of mechanisms I allude to in the first half of this comment.

Vasco Grilo @ 2024-04-21T09:29 (+2)

I think that the risk of human extinction over 1 year is almost all driven by some powerful new technology (with residues for the wilder astrophysical disasters, and the rise of some powerful ideology which somehow leads there). But this is an important class! In general dragon kings operate via something which is mechanically different than the more tame parts of the distribution, and "new technology" could totally facilitate that.

To clarify, my estimates are supposed to account for unknown unknowns. Otherwise, they would be many orders of magnitude lower.

Unfortunately, for the relevant part of the curve (catastrophes large enough to wipe out large fractions of the population) we have no data, so we'll be relying on theory.

I found the "Unfortunately" funny!

My understanding (based significantly just on the "mechanisms" section of that wikipedia page) is that dragon kings tend to arise in cases where there's a qualitatively different mechanism which causes the very large events but doesn't show up in the distribution of smaller events. In some cases we might not have such a mechanism, and in others we might.

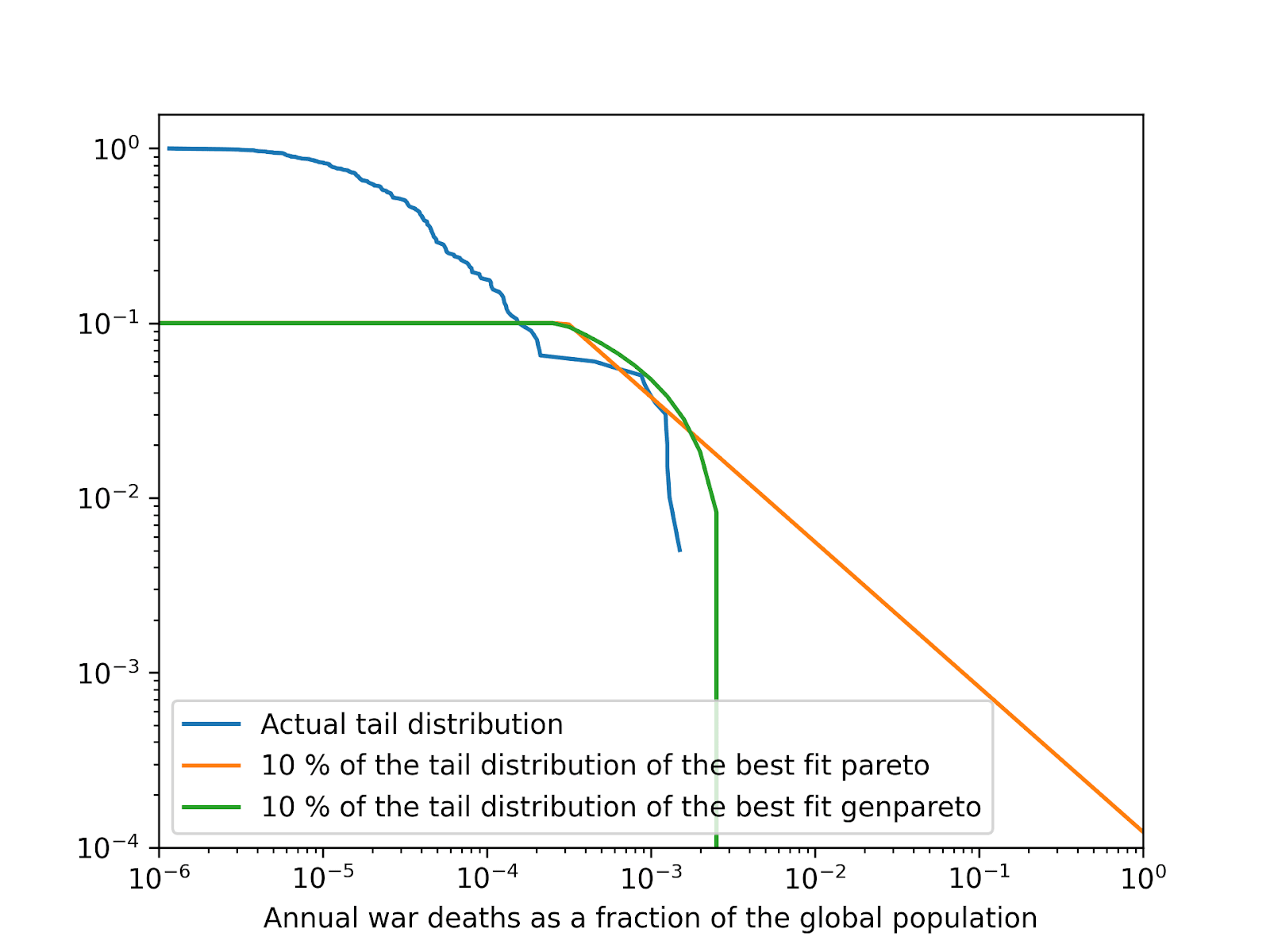

Makes sense. We may even have both cases in the same tail distribution. The tail distribution of the annual war deaths as a fraction of the global population is characteristic of a power law from 0.001 % to 0.01 %, then it seems to have a dragon king from around 0.01 % to 0.1 %, and then it decreases much faster than predicted by a power law. Since the tail distribution can decay slower and faster than a power law, I feel like this is still a decent assumption.

It certainly seems plausible to me when considering catastrophes (and this is enough to drive significant concern, because if we can't rule it out it's prudent to be concerned, and risk having wasted some resources if we turn out to be in a world where the total risk is extremely small), via the kind of mechanisms I allude to in the first half of this comment.

I agree we cannot rule out dragon kings (flatter sections of the tail distribution), but this is not enough for saving lives in catastrophes to be more valuable than in normal times. At least for the annual war deaths as a fraction of the global population, the tail distribution still ends up decaying faster than a power law despite the presence of a dragon king, so the expected value density of the cost-effectiveness of saving lives is still lower for larger wars (at least given my assumption that the cost to save a life does not vary with the severity of the catastrophe). I concluded the same holds for the famine deaths caused by the climatic effects of nuclear war.

One could argue we should not only put decent weight on the existence of dragon kings, but also on the possibility that they will make the expected value density of saving lives higher than in normal times. However, this would be assuming the conclusion.

Stan Pinsent @ 2024-04-22T07:54 (+11)

Using PDF rather than CDF to compare the cost-effectiveness of preventing events of different magnitudes here seems off.

You show that preventing (say) all potential wars next year with a death toll of 100 is 1000^1.6 = 63,000 times better in expectation than preventing all potential wars with a death toll of 100k.

More realistically, intervention A might decrease the probability of wars of magnitude 10-100 deaths and intervention B might decrease the probability of wars of magnitude 100,000 to 1,000,000 deaths. Suppose they decrease the probability of such wars over the next n years by the same amount. Which intervention is more valuable? We would use the same methodology as you did except we would use the CDF instead of the PDF. Intervention A would be only 1000^0.6 = 63 times as valuable.

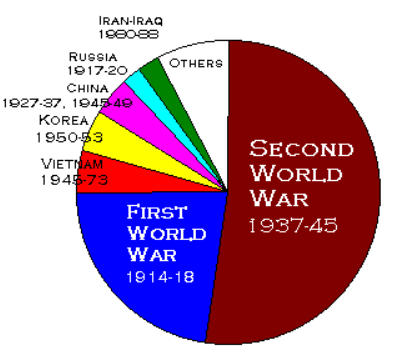

As an intuition pump we might look at the distribution of military deaths in the 20th century. Should the League of Nations/UN have spent more effort preventing small wars and less effort preventing large ones?

The data actually makes me think that even the 63x from above is too high. I would say that in the 20th century, great-power conflict > interstate conflict > intrastate conflict should have been the order of priorities (if we wish to reduce military deaths). When it comes to things that could be even deadlier than WWII, like nuclear war or a pandemic, it's obvious to me that the uncertainty about the death toll of such events increases at least linearly with the expected toll, and hence the "100-1000 vs 100k-1M" framing is superior to the PDF approach.

Vasco Grilo @ 2024-04-22T17:20 (+3)

Thanks for the comment, Stan!

Using PDF rather than CDF to compare the cost-effectiveness of preventing events of different magnitudes here seems off.

Technically speaking, the way I modelled the cost-effectiveness:

- I am not comparing the cost-effectiveness of preventing events of different magnitudes.

- Instead, I am comparing the cost-effectiveness of saving lives in periods of different population losses.

Using the CDF makes sense for the former, but the PDF is adequate for the latter.

You show that preventing (say) all potential wars next year with a death toll of 100 is 1000^1.6 = 63,000 times better in expectation than preventing all potential wars with a death toll of 100k.

I agree the above follows from using my tail index of 1.6. It is just worth noting that the wars have to involve exactly, not at least, 100 and 100 k deaths for the above to be correct.

More realistically, intervention A might decrease the probability of wars of magnitude 10-100 deaths and intervention B might decrease the probability of wars of magnitude 100,000 to 1,000,000 deaths. Suppose they decrease the probability of such wars over the next n years by the same amount. Which intervention is more valuable? We would use the same methodology as you did except we would use the CDF instead of the PDF. Intervention A would be only 1000^0.6 = 63 times as valuable.

This is not quite correct. The expected deaths from wars with $d_1$ to $d_2$ deaths is $\frac{\alpha}{\alpha - 1} d_{min}^{\alpha} (d_1^{1 - \alpha} - d_2^{1 - \alpha})$, where $d_{min}$ are the minimum war deaths. So, for a tail index of $\alpha = 1.6$, intervention A would be 251 (= (10^-0.6 - 100^-0.6)/((10^5)^-0.6 - (10^6)^-0.6)) times as cost-effective as B. As the upper bounds of the severity ranges of A and B get increasingly close to their lower bounds, the cost-effectiveness of A tends to 63 k times that of B. In any case, the qualitative conclusion is the same. Preventing smaller wars averts more deaths in expectation assuming war deaths follow a power law.
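The factor of 251 can be checked with a short sketch. It assumes war deaths follow a power law with PDF proportional to deaths^-(tail index + 1), so that the expected deaths within a range are proportional to the difference of the bounds raised to (1 - tail index); `expected_deaths_weight` is a hypothetical name, and the constant factor cancels in the ratio.

```python
def expected_deaths_weight(low, high, alpha):
    """Expected deaths from wars with `low` to `high` deaths, up to a constant.

    Integrating deaths * PDF, with the PDF proportional to deaths**-(alpha + 1),
    gives a result proportional to low**(1 - alpha) - high**(1 - alpha).
    """
    if alpha <= 1:
        raise ValueError("this closed form assumes a tail index above 1")
    return low ** (1 - alpha) - high ** (1 - alpha)


alpha = 1.6
ratio_a_to_b = (
    expected_deaths_weight(10, 100, alpha)
    / expected_deaths_weight(1e5, 1e6, alpha)
)
print(round(ratio_a_to_b))
```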

As an intuition pump we might look at the distribution of military deaths in the 20th century. Should the League of Nations/UN have spent more effort preventing small wars and less effort preventing large ones?

I do not know. Instead of relying on past deaths alone, I would rather use cost-effectiveness analyses to figure out what is more cost-effective, as the Centre for Exploratory Altruism Research (CEARCH) does. I just think it is misleading to directly compare the scale of different events without accounting for their likelihood, as in the example from Founders Pledge’s report Philanthropy to the Right of Boom I mention in the post.

When it comes to things that could be even deadlier than WWII, like nuclear war or a pandemic, it's obvious to me that the uncertainty about the death toll of such events increases at least linearly with the expected toll, and hence the "100-1000 vs 100k-1M" framing is superior to the PDF approach.

I am also quite uncertain about the death toll of catastrophic events! I used the PDF to remain consistent with Founders Pledge's example, which compared discrete death tolls (not ranges).

Stan Pinsent @ 2024-04-23T16:34 (+3)

Thanks for the detailed response, Vasco! Apologies in advance that this reply is slightly rushed and scattershot.

I agree that you are right with the maths - it is 251x, not 63,000x.

- I am not comparing the cost-effectiveness of preventing events of different magnitudes.

- Instead, I am comparing the cost-effectiveness of saving lives in periods of different population losses.

OK, I did not really get this!

In your example on wars you say

- As a consequence, if the goal is minimising war deaths[2], spending to save lives in wars 1 k times as deadly should be 0.00158 % (= (10^3)^(-1.6)) as large.

Can you give an example of what might count as "spending to save lives in wars 1k times as deadly" in this context?

I am guessing it is spending money now on things that would save lives in very deadly wars. Something like building a nuclear bunker vs making a bullet proof vest? Thinking about the amounts we might be willing to spend on interventions that save lives in 100-death wars vs 100k-death wars, it intuitively feels like 251x is a way better multiplier than 63,000. So where am I going wrong?

When you are thinking about the PDF of P_i/P_f, are you forgetting that ∇(P_i/P_f) is not proportional to ∇P_f?

To give a toy example: suppose .

Then if we have

If we have

The "height of the PDF graph" will not capture these differences in width. This won't matter much for questions of 100 vs 100k deaths, but it might be relevant for near-existential mortality levels.
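The point about widths can be illustrated with a small sketch. The numbers below are hypothetical (they are not the ones from the toy example above, which did not survive), and the sketch assumes the quantity of interest is the ratio between the initial and final population: equal-width intervals of final population map to very different widths of that ratio.

```python
def ratio_interval(p_initial: float, p_final_low: float, p_final_high: float):
    """Map an interval of final population to the matching interval of
    the ratio initial / final (a larger final population gives a smaller ratio)."""
    return p_initial / p_final_high, p_initial / p_final_low


P_INITIAL = 100.0  # hypothetical initial population

# Two intervals of final population with the same width (10 people).
mild = ratio_interval(P_INITIAL, 90.0, 100.0)   # small population loss
severe = ratio_interval(P_INITIAL, 10.0, 20.0)  # large population loss

mild_width = mild[1] - mild[0]
severe_width = severe[1] - severe[0]

print(f"mild:   ratio in [{mild[0]:.2f}, {mild[1]:.2f}], width {mild_width:.2f}")
print(f"severe: ratio in [{severe[0]:.2f}, {severe[1]:.2f}], width {severe_width:.2f}")
```

Under these assumed numbers, the same 10-person band of final population covers a much wider band of the ratio at high severity, so probability mass (height times width) and PDF height can diverge.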

Vasco Grilo @ 2024-04-23T18:50 (+2)

Can you give an example of what might count as "spending to save lives in wars 1k times as deadly" in this context?

For example, if one was comparing wars involving 10 k or 10 M deaths, the latter would be more likely to involve multiple great powers, in which case it would make more sense to improve relationships between NATO, China and Russia.

Thinking about the amounts we might be willing to spend on interventions that save lives in 100-death wars vs 100k-death wars, it intuitively feels like 251x is a way better multiplier than 63,000. So where am I going wrong?

You may be right! Interventions to decrease war deaths may be better conceptualised as preventing deaths within a given severity range, in which case I should not have interpreted literally the example in Founders Pledge’s report Philanthropy to the Right of Boom. In general, I think one has to rely on cost-effectiveness analyses to decide what to prioritise.

When you are thinking about the PDF of P_i/P_f, are you forgetting that ∇(P_i/P_f) is not proportional to ∇P_f?

I am not sure I got the question. In my discussion of Founders Pledge's example about war deaths, I assumed the value of saving one life to be the same regardless of population size, because this is what they were doing. So I did not use the ratio between the initial and final population.

Denkenberger @ 2024-04-21T01:39 (+6)

I think that saving lives in a catastrophe could have more flow-through effects, such as preventing collapse of civilization (from which we may not recover), reducing the likelihood of global totalitarianism, and reducing the trauma of the catastrophe, perhaps resulting in better values ending up in AGI.

Vasco Grilo @ 2024-04-21T08:30 (+2)

Thanks for the comment, David! I agree all those effects could be relevant. Accordingly, I assume that saving a life in catastrophes (periods over which there is a large reduction in population) is more valuable than saving a life in normal times (periods over which there is a minor increase in population). However, it looks like the probability of large population losses is sufficiently low to offset this, such that saving lives in normal times is more valuable in expectation.

MichaelStJules @ 2024-04-20T18:21 (+4)

Expected value density of the benefits and cost-effectiveness of saving a life

You're modelling the cost-effectiveness of saving a life conditional on catastrophe here, right? I think it would be best to be more explicit about that, if so. Typically x-risk interventions aim at reducing the risk of catastrophe, not the benefits conditional on catastrophe. Also, it would make it easier to follow.

Denoting the pre- and post-catastrophe population by P_i and P_f, I assume

Also, to be clear, this is supposed to be ~immediately pre-catastrophe and ~immediately post-catastrophe, right? (Catastrophes can probably take time, but presumably we can still define pre- and post-catastrophe periods.)

Vasco Grilo @ 2024-04-20T20:46 (+2)

Thanks for the comment, Michael!

Also, to be clear, this is supposed to be ~immediately pre-catastrophe and ~immediately post-catastrophe, right? (Catastrophes can probably take time, but presumably we can still define pre- and post-catastrophe periods.)

I have updated the post changing "pre- and post-catastrophe population" to "population at the start and end of a period of 1 year", which I now also refer to as the initial and final population.

You're modelling the cost-effectiveness of saving a life conditional on catastrophe here, right?

No. It is supposed to be the cost-effectiveness as a function of the ratio between the initial and final population.

Typically x-risk interventions aim at reducing the risk of catastrophe, not the benefits conditional on catastrophe.

Yes, interpreting catastrophe as a large population loss. In my framework, x-risk interventions aim to save lives over periods whose initial population is significantly higher than the final one.

MichaelStJules @ 2024-04-20T23:30 (+4)

Oh, I didn't mean for you to define the period explicitly as a fixed interval period. I assume this can vary by catastrophe. Like maybe population declines over 5 years with massive crop failures. Or, an engineered pathogen causes massive population decline in a few months.

I just wasn't sure what exactly you meant. Another interpretation would be that P_f is the total post-catastrophe population, summing over all future generations, and I just wanted to check that you meant the population at a given time, not aggregating over time.

Vasco Grilo @ 2024-04-21T09:41 (+2)

Oh, I didn't mean for you to define the period explicitly as a fixed interval period. I assume this can vary by catastrophe. Like maybe population declines over 5 years with massive crop failures. Or, an engineered pathogen causes massive population decline in a few months.

Hi @MichaelStJules, I am tagging you because I have updated the following sentence. If there is a period longer than 1 year over which population decreases, the power laws describing the ratio between the initial and final population of each of the years following the 1st could have different tail indices, with lower tail indices for years in which there is a larger population loss. I do not think the duration of the period is too relevant for my overall point. For short and long catastrophes, I expect the PDF of the ratio between the initial and final population to decay faster than the benefits of saving a life, such that the expected value density of the cost-effectiveness decreases with the severity of the catastrophe (at least for my assumption that the cost to save a life does not depend on the severity of the catastrophe).

I just wasn't sure what exactly you meant. Another interpretation would be that P_f is the total post-catastrophe population, summing over all future generations, and I just wanted to check that you meant the population at a given time, not aggregating over time.

I see! Yes, both P_i and P_f are population sizes at a given point in time.

Owen Cotton-Barratt @ 2024-04-20T14:11 (+4)

Re.

Cotton-Barratt 2020 says “it’s usually best to invest significantly into strengthening all three defence layers”:

“This is because the same relative change of each probability will have the same effect on the extinction probability”. I agree with this, but I wonder whether tail risk is the relevant metric. I think it is better to look into the expected value density of the cost-effectiveness of saving a life, accounting for indirect longterm effects as I did. I predict this expected value density to be higher for the 1st layers, which respect a lower severity, but are more likely to be requested. So, to equalise the marginal cost-effectiveness of additional investments across all layers, it may well be better to invest more in prevention than in response, and more in response than in resilience.

That paper was explicitly considering strategies for reducing the risk of human extinction. I agree that relative to the balance you get from that, society should skew towards prioritizing response and especially prevention, since these are also important for many of society's values that aren't just about reducing extinction risk.
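The quoted claim that "the same relative change of each probability will have the same effect on the extinction probability" can be checked with a minimal sketch. It assumes extinction requires all three layers (prevention, response, resilience) to fail, so that its probability is the product of the three failure probabilities; the probabilities themselves are hypothetical.

```python
from math import prod

# Hypothetical failure probabilities for each defence layer.
LAYER_NAMES = ["prevention", "response", "resilience"]
BASELINE = [0.1, 0.2, 0.5]
RELATIVE_CUT = 0.10  # a 10 % relative reduction in one layer's failure probability


def extinction_probability(layer_failure_probs):
    """Probability that every layer fails, assuming the product model."""
    return prod(layer_failure_probs)


baseline_risk = extinction_probability(BASELINE)
risk_after_cut = {}
for i, name in enumerate(LAYER_NAMES):
    improved = list(BASELINE)
    improved[i] *= 1 - RELATIVE_CUT  # strengthen only this layer
    risk_after_cut[name] = extinction_probability(improved)

print(f"baseline: {baseline_risk:.4f}")
for name, risk in risk_after_cut.items():
    print(f"after strengthening {name}: {risk:.4f}")
```

Under the product model, each layer's 10 % relative improvement lowers the extinction probability by the same 10 %. This says nothing about which layer is cheapest to improve, nor about the non-extinction benefits raised in the reply above.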

Vasco Grilo @ 2024-04-20T20:54 (+2)

Thanks for all your comments, Owen!

That paper was explicitly considering strategies for reducing the risk of human extinction.

My expected value density of the cost-effectiveness of saving a life, which decreases as catastrophe severity increases, is supposed to account for longterm effects like decreasing the risk of human extinction.

Owen Cotton-Barratt @ 2024-04-20T20:59 (+6)

I think if you're primarily trying to model effects on extinction risk, then doing everything via "proportional increase in population" and nowhere directly analysing extinction risk, seems like a weirdly indirect way to do it -- and leaves me with a bunch of questions about whether that's really the best way to do it.

Vasco Grilo @ 2024-04-20T22:23 (+2)

if you're primarily trying to model effects on extinction risk

I am not necessarily trying to do this. I intended to model the overall effect of saving lives, and I have the intuition that saving a life in a catastrophe (period over which there is a large reduction in population) conditional on it happening is more valuable than saving a life in normal times, so I assumed the value of saving a life increases with the severity of the catastrophe. One can assume preventing extinction is especially important by selecting a higher value for "the elasticity of the benefits [of saving a life] with respect to the ratio between the initial and final population".

Owen Cotton-Barratt @ 2024-04-20T22:30 (+4)

Sorry, I understood that you primarily weren't trying to model effects on extinction risk. But I understood you to be suggesting that this methodology might be appropriate for what we were doing in that paper -- which was primarily modelling effects on extinction risk.

Owen Cotton-Barratt @ 2024-04-20T13:59 (+4)

I'm confused by some of the set-up here. When considering catastrophes, your "cost to save a life" represents the cost to save that life conditional on the catastrophe being due to occur? (I'm not saying "conditional on occurring" because presumably you're allowed interventions which try to avert the catastrophe.)

Understood this way, I find this assumption very questionable:

, since I feel like the effect of having more opportunities to save lives in catastrophes is roughly offset by the greater difficulty of preparing to take advantage of those opportunities pre-catastrophe.

Or is the point that you're only talking about saving lives via resilience mechanisms in catastrophes, rather than trying to make the catastrophes not happen or be small? But in that case the conclusions about existential risk mitigation would seem unwarranted.

Vasco Grilo @ 2024-04-20T20:59 (+2)

I'm confused by some of the set-up here. When considering catastrophes, your "cost to save a life" represents the cost to save that life conditional on the catastrophe being due to occur? (I'm not saying "conditional on occurring" because presumably you're allowed interventions which try to avert the catastrophe.)

My language was confusing. By "pre- and post-catastrophe population", I meant the population at the start and end of a period of 1 year, which I now also refer to as the initial and final population. I have now clarified this in the post.

I assume the cost to save a life in a given period is a function of the ratio between the initial and final population of the period.

Or is the point that you're only talking about saving lives via resilience mechanisms in catastrophes, rather than trying to make the catastrophes not happen or be small? But in that case the conclusions about existential risk mitigation would seem unwarranted.

I meant to refer to all mechanisms (e.g. prevention, response and resilience) which affect the variation in population over a period.

Owen Cotton-Barratt @ 2024-04-20T21:06 (+4)

Sorry, this isn't speaking to my central question. I'll try asking via an example:

- Suppose we think that there's a 1% risk of a particular catastrophe C in a given time period T which kills 90% of people

- We can today make an intervention X, which costs $Y, and means that if C occurs then C will only kill 89% of people

- We pay the cost $Y in all worlds, including the 99% in which C never occurs

- When calculating the cost to save a life for X, do you:

- A) condition on C, so you save 1% of people at the cost of $Y; or

- B) don't condition on C, so you save an expected 0.01% of people at a cost of $Y?

I'd naively have expected you to do B) (from the natural language descriptions), but when I look at your calculations it seems like you've done A). Is that right?
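The difference between A) and B) can be made concrete with a short sketch of the example above. The population size and the cost $Y are hypothetical placeholders; only the 1% risk and the 90% vs 89% death fractions come from the example.

```python
POPULATION = 8_000_000_000      # hypothetical population at the start of period T
COST_Y = 1_000_000_000.0        # hypothetical cost of intervention X, paid in all worlds

P_CATASTROPHE = 0.01            # risk of catastrophe C in period T
DEATH_FRACTION_BASELINE = 0.90  # fraction killed by C without X
DEATH_FRACTION_WITH_X = 0.89    # fraction killed by C with X

# A) Condition on C occurring.
fraction_saved_a = DEATH_FRACTION_BASELINE - DEATH_FRACTION_WITH_X
cost_per_life_a = COST_Y / (fraction_saved_a * POPULATION)

# B) Do not condition on C: weight the lives saved by the probability of C.
fraction_saved_b = P_CATASTROPHE * fraction_saved_a
cost_per_life_b = COST_Y / (fraction_saved_b * POPULATION)

print(f"A) fraction saved: {fraction_saved_a:.2%}, cost per life: ${cost_per_life_a:,.2f}")
print(f"B) fraction saved: {fraction_saved_b:.2%}, cost per life: ${cost_per_life_b:,.2f}")
```

Whatever values are assumed for the population and $Y, the cost per life under B) is 1 / 0.01 = 100 times the cost under A).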

Vasco Grilo @ 2024-04-20T22:37 (+2)

Thanks for clarifying! I agree B) makes sense, and I am supposed to be doing B) in my post. I calculated the expected value density of the cost-effectiveness of saving a life from the product between:

- A factor describing the value of saving a life.

- The PDF of the ratio between the initial and final population, which is meant to reflect the probability of a catastrophe.
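The product described above can be sketched as follows. This is only an illustration under stated assumptions: the benefits of saving a life are taken to be the ratio raised to an elasticity, the tail of the ratio is taken to follow a Pareto distribution starting at 1, and both parameter values are hypothetical. Since the ratio is usually below 1 in normal years, the Pareto form is meant to describe only the catastrophic tail.

```python
def benefit(ratio: float, elasticity: float) -> float:
    """Assumed value of saving a life: (initial / final population) ** elasticity."""
    return ratio ** elasticity


def pareto_pdf(ratio: float, tail_index: float, ratio_min: float = 1.0) -> float:
    """Pareto PDF for the ratio; zero below ratio_min (tail-only assumption)."""
    if ratio < ratio_min:
        return 0.0
    return tail_index * ratio_min ** tail_index / ratio ** (tail_index + 1)


def expected_value_density(ratio: float, elasticity: float, tail_index: float) -> float:
    """Value of saving a life times the PDF of the ratio."""
    return benefit(ratio, elasticity) * pareto_pdf(ratio, tail_index)


ELASTICITY = 1.0  # hypothetical: benefits proportional to the ratio
TAIL_INDEX = 1.6  # hypothetical tail index, for illustration only

ratios = [1.0, 2.0, 10.0, 100.0]
densities = [expected_value_density(r, ELASTICITY, TAIL_INDEX) for r in ratios]

for r, d in zip(ratios, densities):
    print(f"ratio {r:>6.1f}: expected value density {d:.3e}")
```

With these assumed values the density scales as ratio^(ELASTICITY - TAIL_INDEX - 1), so it falls with severity whenever the elasticity is below the tail index plus 1. A high enough elasticity would reverse that.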

Owen Cotton-Barratt @ 2024-04-20T23:04 (+4)

I'm worried I'm misunderstanding what you mean by "value density". Could you perhaps spell this out with a stylized example, e.g. comparing two different interventions protecting against different sizes of catastrophe?

Vasco Grilo @ 2024-04-21T10:32 (+2)

By "pre- and post-catastrophe population", I meant the population at the start and end of a period of 1 year, which I now also refer to as the initial and final population.

I guess you are thinking that the period of 1 year I mention above is one over which there is a catastrophe, i.e. a large reduction in population. However, I meant a random unconditioned year. I have now updated "period of 1 year" to "any period of 1 year (e.g. a calendar year)". Population has been growing, so my ratio between the initial and final population will have a high chance of being lower than 1.