An experiment to evaluate the value of one researcher's work

By NunoSempere @ 2020-12-01T09:01 (+57)

Introduction

EA has lots of small research projects of uncertain value. If we improved our ability to assess value, we could redistribute funding to more valuable projects after the fact (e.g. using certificates of impact), or start to build forecasting systems which predict their value beforehand, and then choose to carry out the most valuable projects.

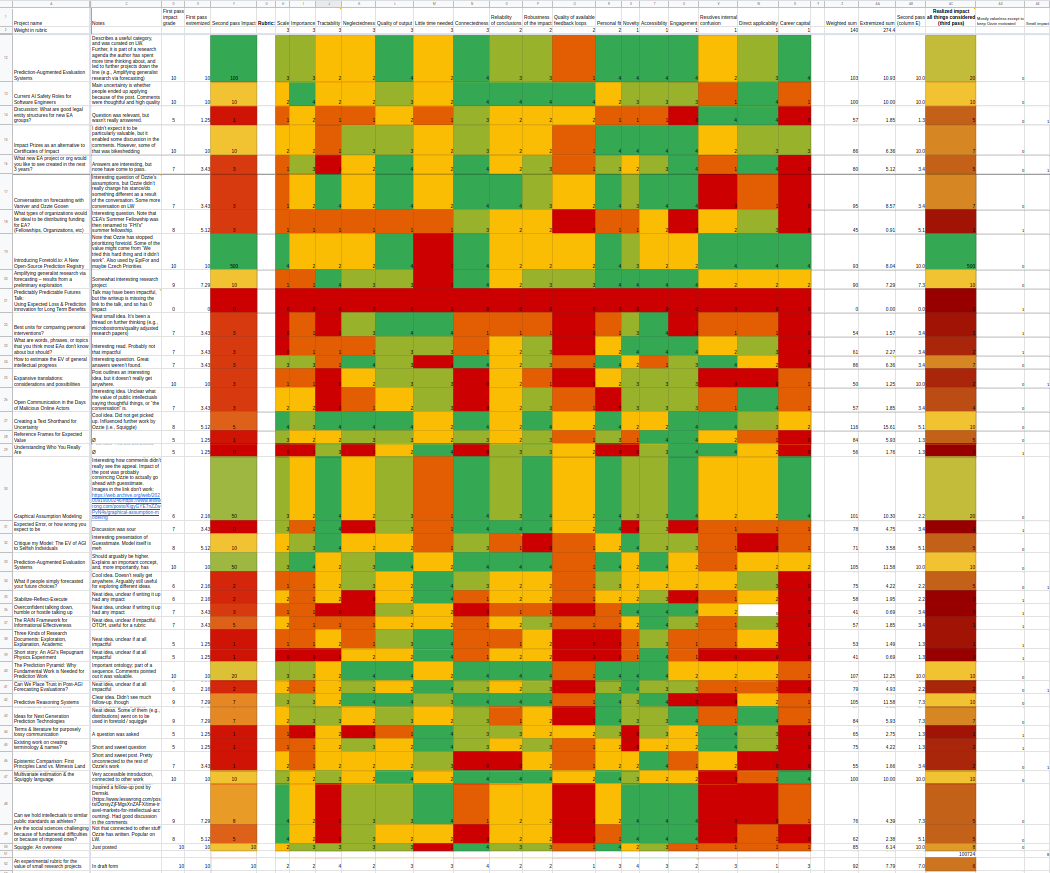

With the goal of understanding how difficult it is to assess the value of research after the fact, I went through all of Ozzie Gooen’s research output on the EA Forum and on LessWrong, using an ad-hoc rubric. I’d initially asked more authors, but I found that Ozzie was particularly accessible and agreeable, and he already had a large enough number of posts for a preliminary project.

As my primary conclusion, I found that assessing the relative value of projects was doable. I was also left with the impression that Ozzie’s work is divided between, on the one hand, a smaller number of projects that build upon one another and that seem fairly valuable, and on the other hand a larger number of posts which don’t seem to have brought about much value and aren’t related to any other posts before or after.

The reader can see my results here, though I’d like to note that they’re preliminary and experimental, as is the rest of this post. Towards the end of this post, there is a section which mentions more caveats.

Related work:

- Charity Entrepreneurship's beautiful weighted factor model

- Prediction Augmented Evaluation Systems

- Predicting the Value of Small Altruistic Projects: A Proof of Concept Experiment

Thanks to Ozzie Gooen and Elizabeth Van Nostrand for thoughtful comments, and to Ozzie Gooen for suggesting the project.

Method

I rated each project using two distinct methods. First, I rated each project using my holistic intuition, taking care that their relative values made preliminary sense (i.e., that I'd be willing to exchange one project worth $10x$ units for 10 projects worth $x$ units.) I then rated them with a rubric, using a 0-4 Likert scale for each of the rubric’s elements. I then combined each rubric score with my intuition to come up with a final estimate of expected value, paying particular attention to the projects for which the rubric score and my intuition initially produced different answers.

Rubric elements

I produced this rubric by babbling, and by borrowing from previous rubrics I knew about, seeking to not spend too much time on its design. Note that the rubric contains many elements, whereas a final rubric would perhaps contain fewer. Here is a more cleaned up and easier to read — yet still very experimental — version of the rubric, though not exactly the one I used.

- Scale: Does this project affect many people?

- Importance: How important is the issue for the people affected?

- Tractability: How likely is the project to succeed in its goals?

- Neglectedness: How neglected is the area on which this project focuses?

- Reliability: How much can we trust the conclusions of the project?

- Robustness: Is the project likely to be a good idea under many different models? Are there any models under which this project has a value of 0 or net negative?

- Novelty: Are the ideas presented novel? Is this project pursuing a novel direction?

- Accessibility: How understandable is this project to a motivated reader?

- Time needed: How long did it take to write the post? How long did it take to carry out the project of which the post is a write up?

- Quality of feedback-loops available: Will the authors know if the project is going wrong?

- Quality of output.

- Connectedness: Is this project related to previous work? Does it inspire future work? Is this project part of a concerted effort to solve a larger problem?

- Engagement: Does this project elicit positive and constructive comments from casual readers? Is this project upvoted a lot?

- Resolves internal confusion: Does this project resolve any of the author’s personal confusions? Is its value of information high?

- Direct applicability: Is this project directly applicable to making the world better, or does it require many steps until impact is realized?

- Career capital: Does this project generate career capital for the one who carries it out?

- Personal fit: Was the project a good personal fit for the author?

In hindsight,

- Some of the elements of the rubric didn't really match up with research oriented projects, e.g., it's difficult to say whether theorizing about the shape of Predictive Systems is tractable (it’s in general hard to estimate the tractability of a research direction before the fact.)

- It's not clear what the a priori probability of success of a project which ultimately succeeded is.

- Although most of the projects I looked into probably didn't generate much career capital, maybe many together did.

- I could have borrowed more heavily from Charity Entrepreneurship’s rubric, but I wanted to see what I could come up with on my own.

Unit: Quality Adjusted Research Papers

My arbitrary and ad-hoc unit was a "Quality Adjusted Research Paper" (QARP). For illustration purposes:

- ~0.1-1 mQ: A particularly thoughtful blog post comment, like this one

- ~10 mQ: A particularly good blog post, like Extinguishing or preventing coal seam fires is a potential cause area

- ~100 mQ: A fairly valuable paper, like Categorizing Variants of Goodhart's Law.

- ~1Q: A particularly valuable paper, like The Vulnerable World Hypothesis

- ~10-100 Q: The Global Priorities Institute's Research Agenda.

- ~100-1000+ Q: A New York Times Bestseller on a valuable topic, like Superintelligence (cited 2,651 times), or Thinking, Fast and Slow (cited 30,439 times.)

- ~1000+ Q: A foundational paper, like Shannon’s "A Mathematical Theory of Communication." (cited 131,736 times.)

This spans six orders of magnitude (1 to 1,000,000 mQ), but I do find that my intuitions agree with the relative values, i.e., I would probably sacrifice each example for 10 equivalents of the preceding type (and vice-versa).

Throughout, a unit — even if it was arbitrary or ad-hoc — made relative comparison easier, because instead of comparing all the projects with each other, I could just compare them to a reference point. It also made working with different orders of magnitude easier: instead of asking how valuable a blog post is compared to a foundational paper I could move up and down in steps of 10x, which was much more manageable.
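As a rough illustration of moving "up and down in steps of 10x", the sketch below writes the reference points above in mQ and counts the factor-of-ten steps between two of them. The labels and the single value picked from each stated range are assumptions made for illustration, not part of the original scale.

```python
import math

# Reference points from the list above, in milli-QARPs (mQ).
# Where the post gives a range, one value from that range is picked (an assumption).
REFERENCE_POINTS_MQ = {
    "thoughtful comment": 1,                     # top of ~0.1-1 mQ
    "good blog post": 10,
    "fairly valuable paper": 100,
    "particularly valuable paper": 1_000,        # 1 Q
    "research agenda": 10_000,                   # low end of ~10-100 Q
    "bestseller on a valuable topic": 100_000,   # low end of ~100-1000+ Q
    "foundational paper": 1_000_000,             # 1000 Q
}


def steps_of_ten(lower_mq: float, higher_mq: float) -> float:
    """Number of 10x steps needed to get from the lower value to the higher one."""
    return math.log10(higher_mq / lower_mq)


span = steps_of_ten(
    REFERENCE_POINTS_MQ["thoughtful comment"],
    REFERENCE_POINTS_MQ["foundational paper"],
)
print(round(span))  # 6 orders of magnitude
```

Comparing a new project against the nearest reference point then only requires deciding whether it sits one step above or below it, rather than ranking it against every other project.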

Example: Rating this project using its own rubric

The last row of the results rates this project. It initially gets a 10 mQ based on my holistic intuition (connected to previous work, takes relatively little time, resolves some of my internal confusions, etc.) But after seeing that my rubric gives it a 7.5 mQ and looking at other projects, I downgraded this to a 6 mQ, i.e., between What if people simply forecasted your future choices? (5) and Ideas for Next Generation Prediction Technologies (7).

Technical details: aggregation and extremizing

The problem of aggregating the elements of a rubric felt to me to be multiplicative, rather than additive. That is, I think that the value of a project looks more like

$$\text{Scale} \times \text{Tractability} \times \text{Neglectedness}$$

than like

$$\text{Scale} + \text{Tractability} + \text{Neglectedness}$$

Further, I also had the sense that for these variables, my brain thinks logarithmically. That is, I don’t perceive Scale, Tractability, Neglectedness; I perceive something closer to log(Scale), log(Tractability), log(Neglectedness).

| What I perceive | What I care about | How it’s aggregated |
| --- | --- | --- |
| log(Scale) | Scale | Total value ~ Scale × Tractability × Neglectedness |

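Thus, I was planning to exponentiate the sum of the (weighted) rubric variables, because

$$\exp\big(\log(\text{Scale}) + \log(\text{Tractability}) + \log(\text{Neglectedness})\big) = \text{Scale} \times \text{Tractability} \times \text{Neglectedness}$$

These variables would then be weighted, so that introducing a less important variable, like, say, novelty, with a weight $w < 1$ would translate to

$$\text{Scale} \times \text{Tractability} \times \text{Neglectedness} \times \text{Novelty}^{w}$$

which in my mind made sense. Sadly, this did not work out; that is, aggregating Likert ratings that way didn’t produce a result which matched my intuitions.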
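The idea of exponentiating a weighted sum of log-scale ratings can be sketched as follows. This is a minimal illustration, not the spreadsheet formula actually used: the function name, the ratings, and the weights are all hypothetical, and the ratings are assumed to be natural logs of the underlying quantities.

```python
import math


def aggregate_log_scores(log_scores: dict[str, float], weights: dict[str, float]) -> float:
    """Exponentiate the weighted sum of log-scale ratings.

    This equals the product of each underlying quantity raised to its weight:
    exp(sum(w_i * log x_i)) == prod(x_i ** w_i).
    """
    total = sum(weights.get(name, 1.0) * value for name, value in log_scores.items())
    return math.exp(total)


# Hypothetical ratings, read as natural logs of the underlying quantities.
ratings = {"scale": 3.0, "tractability": 2.0, "neglectedness": 1.0, "novelty": 2.0}
# Hypothetical weights: novelty counts half as much as the core variables.
weights = {"scale": 1.0, "tractability": 1.0, "neglectedness": 1.0, "novelty": 0.5}

value = aggregate_log_scores(ratings, weights)

# Cross-check against the explicit weighted product.
product = math.prod(math.exp(r) ** weights[name] for name, r in ratings.items())
assert math.isclose(value, product)
```

Because the result grows exponentially in the rating sum, a one-point change in any Likert rating multiplies the output by a constant factor, which may be part of why the raw form produced values that did not match intuition.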

I tried different transformations, and in the end, one which did somewhat match my intuitions was , where s is the sum of the rubric variables (in our previous example, and the transformation was). This produced relative values which made sense to me, and were normalized such that 10 mQ was roughly equal to a particularly good EA forum post.

I think that the theoretically elegant part of that transformation is that it will still be correlated with impact, but will give different errors than my intuition, so that combining them with my intuition will produce a more accurate picture.

An alternative might have been to simply add up the z-scores (subtract the mean, divide by the standard deviation), which is the solution used by Charity Entrepreneurship.
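A minimal sketch of the z-score approach, assuming each rubric element is standardized across projects and the standardized values are then summed per project. The function names and ratings are hypothetical, and the use of the population standard deviation is an assumption; this is not a description of Charity Entrepreneurship's actual implementation.

```python
from statistics import mean, pstdev


def z_scores(values: list[float]) -> list[float]:
    """Standardize values: subtract the mean, divide by the standard deviation."""
    mu = mean(values)
    sigma = pstdev(values)  # population standard deviation (an assumption)
    if sigma == 0:
        # Every project got the same rating, so this element carries no information.
        return [0.0 for _ in values]
    return [(v - mu) / sigma for v in values]


def summed_z_scores(ratings: dict[str, list[float]]) -> list[float]:
    """`ratings` maps each rubric element to one rating per project, in the same order."""
    per_element = [z_scores(column) for column in ratings.values()]
    return [sum(project) for project in zip(*per_element)]


# Hypothetical 0-4 Likert ratings for three projects.
ratings = {
    "scale": [0, 2, 4],
    "novelty": [1, 1, 4],
}
totals = summed_z_scores(ratings)
print(totals)  # one summed score per project; higher is better
```

Standardizing first means an element on which all projects score similarly contributes little to the ranking, regardless of the raw scale it was rated on.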

Another approach might have been to try to actually consider the multiplicative factors for each element in the rubric. For example, one could have units that naturally multiply together, like importance for each person affected (in e.g., QALYs), number of people, and probability of success (0 to 100%). When one multiplies these together, one gets total expected QALYs. In any case, this gets complicated quickly as one adds more elements to a rubric.
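The multiplication described above can be sketched with units that combine naturally. The function name and the input numbers are hypothetical and only illustrate the arithmetic.

```python
def expected_qalys(qalys_per_person: float, people_affected: int, p_success: float) -> float:
    """Expected QALYs = importance per person x number of people x probability of success."""
    if not 0.0 <= p_success <= 1.0:
        raise ValueError("p_success must be between 0 and 1")
    return qalys_per_person * people_affected * p_success


# Hypothetical inputs: 0.5 QALYs per person, 1,000 people, 25% chance of success.
print(expected_qalys(0.5, 1_000, 0.25))  # 125.0
```

Each additional rubric element would need its own unit and its own place in the product, which is where the complexity mentioned above comes from.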

Results and comments

The reader can see the results here. My main takeaways are:

Many projects don’t seem to have brought about much value

Many projects don’t seem to have brought about much value, and might have been valuable only to the extent that they helped the author stay motivated. Some examples to which I gave very low scores are here, here, here, and in general I/the rubric gave many projects a fairly low score. Ozzie comments:

One obvious question that comes from this is a difference between expected value and apparent value. It could be the case that the expected value of these tiny things was quite high because they were experimental. New posts in areas outside of an author's main area might have a small probability of becoming the author's next main area.

Further, though these posts were higher in number, they might have comprised a lower proportion of total research time than more time intensive projects.

Lastly, this conclusion is lightly held. For example, Ozzie gives a higher value to Can We Place Trust in Post-AGI Forecasting Evaluations? than either myself or the rubric did, and given that he’s spent more time thinking about it than I did, it wouldn't surprise me if he was right.

Being part of a concerted effort was important

Projects which seemed valuable to me usually belonged to a cluster, either Prediction Systems or the more recent Epistemic Progress. Despite often not having that much engagement in the comments, Ozzie has kept working on them based on personal intuition.

In particular, consider Graphical Assumption Modeling (archive link with images) and Creating a Text Shorthand for Uncertainty, which outlined what would later become Guesstimate. These posts had few upvotes, and the comments are broadly positive but not that informative, and bike-shed a lot. A similar example of a valuable project (by another author) which was only recognized as such after the fact is Fundraiser: Political initiative raising an expected USD 30 million for effective charities (which went on to become EAF’s ballot initiative doubled Zurich’s development aid). Although both examples are the product of a selection effect, this marginally increases the weight I give to personal intuition vs people's opinions.

There was also a cluster of "community building" posts, whose impact was harder to evaluate. However, at least one of them seemed fairly valuable, namely Current AI Safety Roles for Software Engineers.

Overall I’d give a lot of weight to "connectedness", i.e., whether a project is part of a concerted effort to solve a given problem, as opposed to a one-shot. The post Extinguishing or preventing coal seam fires is a potential cause area does really poorly in this area, because although it prepares the ground for e.g., a coal seam fires charity, the idea wasn't picked up; there was no follow-up.

The most valuable project seems to outweigh everything else combined

Guesstimate so far seems to outweigh every other project in the list together, with an estimated value of ~10-100+ Q. This is similar in magnitude to how valuable I think The Global Priorities Institute's Research Agenda is, though given that my reference unit is 10 mQ, I'm very uncertain. I arrived at this by attempting to guess the average value (in Q) of each Guesstimate model, of which there are currently 17k+ (Guesstimate model here). If we take out Guesstimate, the next most valuable project, Foretold, also outweighs everything else combined.
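As a back-of-the-envelope check, the stated total of ~10-100 Q can be turned around to see what average value per model it implies. The post does not state the per-model figure, so the sketch below only divides the total range by the model count, treating "17k+" as a round 17,000 (an assumption) and using 1 Q = 1000 mQ.

```python
MODEL_COUNT = 17_000  # "17k+" models per the post; treated here as a round figure


def implied_value_per_model_mq(total_q: float, model_count: int = MODEL_COUNT) -> float:
    """Average value per model in mQ, given a total value in Q (1 Q = 1000 mQ)."""
    return total_q * 1_000 / model_count


low = implied_value_per_model_mq(10)
high = implied_value_per_model_mq(100)
print(f"{low:.2f} to {high:.2f} mQ per model")  # 0.59 to 5.88 mQ per model
```

On the scale above, that would put the average model somewhere between a particularly thoughtful comment and a particularly good blog post.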

As an aside, Ozzie also has a meta-project, something like “building expertise in predictive systems”, and a community around it, which I’d rate more valuable than Foretold, but which didn’t have a clear post to assign it to. More generally, I’d imagine that not all of Ozzie’s research is captured in his posts.

Caveats and warnings

As I was writing this project, Ozzie left some thoughtful comments emphasizing the uncertainty intrinsic in this kind of project. I could have incorporated them into the main text, but then I’d wonder whether I would have used fewer caveats if the results had been different, so instead I reproduce them below:

I also would suggest stressing/acknowledging the messiness that goes into this sort of work. I think we should be pretty uncertain about the results of this, and if it seems to readers that we’re not, that seems bad. Generally, rubrics and similar instill overconfidence in readers, from what I’ve seen in this area before.

So far in this document it sounds like you could be expecting this to be a perfectly final measure, and I don’t think that’s the case. For example, you added so many rubric elements arguably in large part as an experiment for future better rubrics.

This [that many projects don’t seem to have brought about much value, originally worded as "most projects are fairly valueless"] seems like a pretty bold statement given the relatively short amount of time it took for this piece. It doesn't seem like you've done any empirical work, for example, going out in the world and interviewing people about the results.

I'm fine with you suggesting bold things but it should be really clear that they aren't definitive. I really don't like overconfidence and I'm also worried about people thinking that you're overconfident.

Even if it's clear to you that you mean a lot of this with uncertainty, it seems common that readers don't pick up on that, so we need to be extra cautious.

One obvious question that comes from this is a difference between expected value and apparent value. It could be the case that the expected value of these tiny things was quite high because they were experimental. New posts in areas outside of an author's main area might have a small probability of becoming the author's next main area.

I would suggest thanking or at least mentioning that I reviewed this and gave feedback, both because I did and because it's about me, so I feel like readers might find it important to know. It is also in part a disclaimer that this work may have been a bit biased because of it.

Conclusion

Ideally, we would want to think in terms of the amount of, say, utilons, for each intervention, and have objective currency conversion between different cause areas, such as human and animal welfare. We'd also want perfect foresight to determine the expected utilons for each project. Absent that, I'll settle for one unit for each cause area (QALYs, tonnes of CO2 emitted, QARPs, etc), uncertain probabilistic currency conversion, and imperfect yet really good forecasting systems.

Future work

I’ve asked several people if I could use their posts, and several said yes, with the common sentiment being that they are already being rated in terms of upvotes. If you wouldn't mind your own posts being rated as well, do leave a comment!

In private conversation, Ozzie makes the point that better evaluation systems might be the solution to some coordination problems. For example, if we could measure the value of projects better, we could assign status more directly to those who carry out more valuable projects, or only implement the most valuable projects. But the case for better evaluation systems does need to be made.

Lastly, there is of course work to be done to improve the ad-hoc rubric in this post (see here for my current version). For this, examples of past rubrics might prove useful; see Prize: Interesting Examples of Evaluations if you have any suggestions.

Ozzie Gooen @ 2020-12-02T01:03 (+16)

I have a bunch of thoughts on this, and would like to spend time thinking of more. Here are a few:

---

I’ve been advising this effort and gave feedback on it (some of which he explicitly included in the post in the “Caveats and warnings” section). Correspondingly, I think it’s a good early attempt, but definitely feel like things are still fairly early. Doing deep evaluation of some of this and getting more empirical data (for instance, surveying people to see which ones might have taken this advice, or having probing conversations with Guesstimate users) seems necessary to get a decent picture. However, it is a lot of work. This effort was much more of Nuño intuitively estimating all the parameters, which can get you kind of far, but shouldn’t be understood to be substantially more. Rubrics like these can be seen as much more authoritative than they actually are.

Reasons to expect these estimates to be over-positive

I tried my best to encourage Nuño to be fair and unbiased, but I’m sure he felt incentivized to give positive grades. I don’t believe I gave feedback to encourage him to change the scores favorably, but I did request that he make the uncertainty clearer in this post. This wasn’t because I thought I did poorly in the rankings; it was more because I thought that this was just a rather small amount of work for the claims being made. I imagine this will be an issue going forward with evaluation, especially if the people being evaluated might be seen as possibly holding grudges or similar later on. It is not enough for them to not retaliate; the problem is that, from an evaluator’s perspective, there’s a chance that they might retaliate.

Also, I imagine there is some selection pressure towards a positive outcome. One of the reasons why I have been advising his efforts is because they are very related to my interests, so it would make sense that he might be more positive towards my previous efforts than would others with different interests. This is one challenging thing about evaluation; typically the people who best understand the work have the advantage of better understanding its quality, but the disadvantage of typically being biased towards how good this type of work is.

Note that none of the projects wound up with a negative score, for example. I’m sure that at least one really should if we were clairvoyant, although it’s not obvious to me which one at this point.

Reasons to expect these estimates to be over-negative

I personally care a whole lot more about being able to be neutral, and also about seeming neutral, than I do about my projects being evaluated favorably at this stage. I imagine this could have been the case for Nuño as well. So it’s possible there was some over-compensation here, but my guess is that you should expect things to be biased on the positive side regardless.

Tooling

I think this work brings to light how valuable improved tooling (better software solutions) could be. A huge spreadsheet can be kind of a mess, and things get more complicated if multiple users (like myself) would try to make rankings. I’ve been inspecting no-code options and would like to do some iteration here.

One change that seems obvious would be for reviews to be posted on the same page as the corresponding blog post. This could be done in the comments or in the post itself, like a GitHub status icon.

Decision Relevance

I’m hesitant to update much due to the rather low weight I place on this. I was very uncertain about the usefulness of my projects before this, and I’m also uncertain about it afterwards. I agree that most of it is probably not going to be valuable at all unless I specifically, or much less likely someone else, continue this work into a more accessible or valuable form.

If it’s true that Guesstimate is actually far more important than anything else I’ve worked on, it would probably update me to focus a lot more on software. Recently I’ve been more focused on writing and mentorship than on technical development, but I’m considering changing back.

I think I would have paid around $1,000 or so for a report like this for my own usefulness. Looking back, the main value perhaps would come from talking through the thoughts with the people doing the rating. We haven’t done this yet, but might going forward. I’d probably pay at least $10,000 or so if I was sure that it was “fairly correct”.

The value of research in neglected areas

I think one big challenge with research is that you either focus on an active area or a neglected one. In active areas, marginal contributions may be less valuable because others are much more likely to come up with them. There’s one model where there is basically a bunch of free prestige lying around, and if you get there first you are taking zero-sum gains directly from someone else. In the EA community in particular I don’t want to play zero-sum games with other people. However, for neglected work, it seems very possible that almost no one will continue with it. My read is that neglected work is generally a fair bit more risky. There are instances where it goes well and this could actually encourage a whole field to emerge (though this takes a while). There are other instances where no one happens to be interested in continuing this kind of research, and it dies before being able to be useful at all.

I think of my main research as being in areas I feel are very neglected. This can be exciting, but has the obvious challenge that it is difficult for the work to be adopted by others, and so far this has been the case.

MichaelA @ 2020-12-05T07:09 (+9)

Doing deep evaluation of some of this without getting more empirical data (for instance, surveying people to see which ones might have taken this advice, or having probing conversations with Guesstimate users) seems necessary to get a decent picture.

(I assume you mean something like "and getting more empirical data", not "without"?)

I think it'd indeed be interesting and useful to combine the sort of intuitive estimation approach Nuño adopted here with some gathering of empirical data. Nuño (or whoever) could perhaps randomly select a subset of posts/outputs to gather empirical data on, to reduce how time-consuming/costly the data collection is.

Two potential methods of data collection that come to mind are:

- Surveys

    - E.g., Rethink Priorities' "impact survey", my survey which was inspired by Rethink's, 80k's annual survey, and a recent Happier Lives Institute survey

    - Some extra discussion here

- Interviews

    - E.g., Rethink do "structured interviews with key decision-makers and leaders at EA organizations[, and seek] interviewees’ feedback on the general importance of our work for them and for the community, what they have and have not found helpful in what we’ve done, what we can do in the future that would be useful for them, and ways we can improve."

In fact, one possibility would be to use the intuitive estimation approach on the work of one of the orgs/people who already have a bunch of this sort of data relevant to that work (after checking that the org/people are happy to have their work used for this process), and then look at the empirical data, and see how they compare.

(I recently started working for Rethink, but the views in this comment are just my personal views.)

Ozzie Gooen @ 2020-12-07T03:59 (+4)

That's quite useful, thanks

In fact, one possibility would be to use the intuitive estimation approach on the work of one of the orgs/people who already have a bunch of this sort of data relevant to that work (after checking that the org/people are happy to have their work used for this process), and then look at the empirical data, and see how they compare.

This seems like a neat idea to me. We'll investigate it.

NunoSempere @ 2020-12-02T10:01 (+4)

Note that none of the projects wound up with a negative score, for example. I’m sure that at least one really should if we were clairvoyant, although it’s not obvious to me which one at this point.

Expected Error, or how wrong you expect to be ended up with a -1, because of the negative comments.

Ozzie Gooen @ 2020-12-07T04:00 (+2)

Good catch

EdoArad @ 2020-12-02T16:00 (+2)

Regarding tooling, it may be very helpful to input subjective distributions - uncertainty seems to me to be very important here, mostly if we expect this kind of tool to be used by a low number of people

Ozzie Gooen @ 2020-12-02T17:17 (+4)

Yea, I'd love to see things like this, but it's all a lot of work. The existing tooling is quite bad, and it will probably be a while before we could rig it up with Foretold/Guesstimate/Squiggle.

EdoArad @ 2020-12-02T16:34 (+4)

Another idea - to be able to use units in cells such that the end result will depend on them. Say, for scale one can write "20000 QALYs" or "400 BCLs" (Broiler Chicken Lives) or "2% XpC" (X-risk per Century)

Ozzie Gooen @ 2020-12-07T04:01 (+2)

Yep, I think this is quite useful/obvious. (If I understand it correctly). Work though :)

Joey @ 2020-12-01T11:47 (+13)

Happy to have my posts used for this. One thing I would love to see integrated would be a willingness to pay metric as we have been experimenting with this a bit in our research process and have found it quite useful.

Ozzie Gooen @ 2020-12-02T01:12 (+5)

One challenge with willingness to pay is that we need to be clear who the money would be coming from. For instance, I would pay less for things if the money were coming from the budget of EA Funds than I would if it came from Open Phil, and less again than if it came from the US Government. This seems doable to me, but is tricky. Ideally we could find a measure that wouldn't vary dramatically over time. For instance, the EA Funds budget might be desperate for cash some years and have too much in others, changing the value of the marginal dollar dramatically.

NunoSempere @ 2020-12-01T20:20 (+3)

Thanks! A willingness to pay is an interesting proxy; will keep in mind. In particular, I imagine that it consolidates some intuitions, or makes them more apparent, though it probably won't help if your intuitions are just wrong.

Davidmanheim @ 2020-12-01T21:21 (+11)

This sounds great, and happy if you want to use my posts for this.

I also am super-happy that the Goodhart paper was used as an example of a "fairly valuable" paper! I should look at my other non-forum-post output and consider the score-to-time-and-effort ratio, to see if I can maximize the ratio by doing more of specific types of work, or emphasizing different projects.

Ben_West @ 2020-12-01T17:57 (+6)

This is a cool idea. Thanks Nuno for doing this evaluation, and thanks Ozzie for being willing to participate!

MichaelA @ 2020-12-05T07:12 (+4)

Thanks for this interesting post.

I'm also happy to have my posts used for this process. (Though some were written with or on behalf of people/orgs who I can't speak for, so if you would be interested in using my posts for this and making the results public, just let me know so I can check with those people/orgs first.)

If you do use my posts for this, you could perhaps also compare the results from your process to the results of my survey of the quality and impact of things I've written (as I sort-of suggest in another comment).

NunoSempere @ 2020-12-05T18:13 (+2)

Though some were written with or on behalf of people/orgs who I can't speak for

Are there any posts for which this is the case but where this isn't stated in the post?

MichaelA @ 2020-12-07T04:22 (+4)

No - for all posts where this is the case, the post will say so near the top or bottom (usually in italics).

Also, btw, I think if it's just that I acknowledge a person for helpful comments and discussions, there'd be no need to check with them. I just feel it'd be worth checking in cases where a person is acknowledged as a coauthor (or as having written an earlier draft that my post is based on), or where I say a post is "written for [organisation]".

NunoSempere @ 2020-12-07T10:29 (+2)

Cheers, thanks

alexherwix @ 2020-12-02T15:30 (+3)

Thanks for the interesting post.

One consideration that comes to my mind is if something like this type of evaluation further reinforces a "success to the successful" feedback loop which is inherently sensitive to initial conditions. As in people might be able to produce great work given the right support and conditions but don't have them in the beginning. Someone else is more lucky and gets picked up, then more supported, which then reinforces further success.

Thus, it seems generally pretty hard to use something like this kind of system to achieve "optimal" outcomes or, rather, let's say you have to be careful about how you implement such rating systems and be aware of such feedback loops.

What do you think about this?

NunoSempere @ 2020-12-02T18:26 (+3)

Yeah, I agree that for forecasting setups self-fulfilling prophecies/feedback loops can be a problem, but it seems likely that they can be mitigated with a certain amount of exploration (e.g., occasionally try things you'd expect to fail in order to test your system.)

It's also not clear that this type of evaluation is worse than the alternative informal evaluation system. For example, with a formal evaluation system you'd be able to pick up high quality outputs even if they come from otherwise low status people (and then give them further support.)

alexherwix @ 2020-12-03T13:00 (+1)

Thanks for the thoughtful answer. I agree that it's not clear that it is worse than other alternatives, in my comment I didn't give a reference solution to compare it to after all.

I just wanted to highlight the potential for problems that ought to be looked at while designing such solutions. So, if you consider working more on this in the future, it might be fruitful to think about how it would influence such feedback loops.

EdoArad @ 2020-12-02T16:06 (+3)

Do you mean that people who had past successes (even if by external support) would be more likely to score higher here?

I intuitively think that this kind of rubric would actually be more robust against such feedback loops. Most of the factors are not specific to the person, so I think that there would be less bias there.

alexherwix @ 2020-12-03T12:55 (+3)

In essence, I think that the act of adding quantitative measures may lend a veil of "objectivity" to assessments of people's work, which is intrinsically vulnerable to the success to the successful feedback loop.

Based on your comment, I had another look at the specific criteria of the rubric and agree that it seems possible that it could help to counteract something like the dynamic I outlined above, however, it would still have to be applied with care and recognizing the possibility of such dynamics.

The main problem I wanted to highlight is that something like this might obscure those dynamics and might be employed for political purposes such as justifying existing status hierarchies which might be simply circumstantial and not based on merit.