Expected value estimates you can take (somewhat) literally

By Gregory Lewis🔸 @ 2014-11-24T15:55 (+23)

Summary

A geometric interpretation of tail divergence offers a pseudo-robust mechanism for quantifying the expected 'regression to the mean' of a cost effectiveness estimate (i.e. expected value given estimated value). With caveats, this seems to be superior to current attempts at guesstimation and 'calling the bottom' of anticipated regression to the mean. There are a few pleasing corollaries: 1) it vindicates a 'regressive mindset' (particularly championed by Givewell), and underscores the value of performing research to achieve more accurate estimation; 2) it suggests (and provides a framework for quantifying) how much an estimated expected value should be discounted by how speculative it is; 3) it suggests empirical approaches one can use to better quantify degree of regression, using variance in expected-value estimates.

[Cross-posted here. I'm deeply uncertain about this, and wonder if this is an over-long elaboration on a very simple error. If so, please show me!]

Introduction

Estimates of expected value regress to the mean - something that seems much more valuable than average will generally look not-quite-so-valuable when one has a better look. This problem is particularly acute for effective altruism and its focus on the highest expected value causes, as these are likely to regress the most. Quantifying how much one expects an estimate to regress is not straightforward, and this has in part led to groups like Givewell warning against explicit expected value estimates. More robust estimation has obvious value, especially when comparing between diverse cause areas, but worries about significant and differential over-estimation make these enterprises highly speculative.

'In practice', I have seen people offer discounts to try and account for this effect (e.g. "Givewell think AMF averts a DALY for $45-ish, so let's say it's $60 to try and account for this"), or mention it as a caveat without quantification. It remains very hard to get an intuitive handle on how big one should expect this effect to be, especially when looking at different causes: one might expect more speculative causes in fields like animal welfare or global catastrophic risks to 'regress more' than relatively well-studied ones in public health, but how much more?

I am unaware of any previous attempts to directly quantify this effect. I hope this essay can make some headway on this issue, and thus allow more trustworthy expected value estimates.

A geometric explanation of regression to the mean

(A related post here)

Why is there regression to the mean for expected value calculations? For a toy model, pretend there are some well-behaved normally distributed estimates of expected value, and that the estimation technique captures 90% of the variance in expected value. A stylized scatter plot might look something like this, with a fairly tight ellipse covering the points.

Despite the estimates of expected value being closely correlated to the actual expected values, there remains some scatter, and so the tails come apart: the estimated-to-be highest expected value things are unlikely to have the highest actual expected value (although it should still be pretty high). The better the estimate, the tighter the correlation, and therefore the tails diverge less:

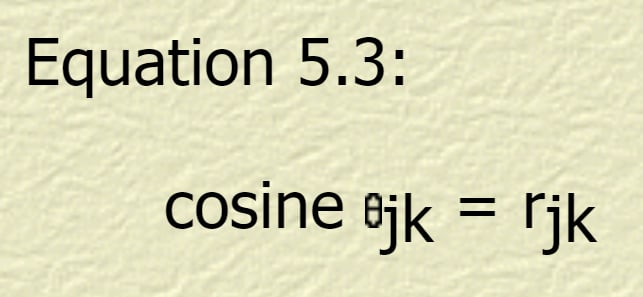

Another way of representing what is going on is using two vectors instead of Cartesian coordinates. If the set of estimates and actual expected values were plotted as vectors in n-dimensional space (normalized to a mean of zero), the cosine between them would be equal to the r-squared of their correlation. [1] So our estimates correlated to the actual cost effectiveness values with an r-square of 0.9 looks like this:

The two vectors lie in a similar direction to one another, as the arccos of 0.9 is ~26 degrees (in accord with intuition, an r-square of 1 - perfect correlation - leads to vectors parallel, an r-square of zero vectors orthogonal, and an r-square of -1 vectors antiparallel). It also supplies a way to see how one can estimate the 'real' expected value given an estimated expected value - just project the estimated value vector onto the 'actual' value vector, and the rest is basic trigonometry: multiply the hypotenuse (the estimate) by the cosine of the angle (the r-square) to arrive at the actual value. So, in our toy model with an R-square of 0.9, an estimate which is 1SD above the mean estimate puts the expected value at 0.9SD above the mean expected value.
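The projection picture can be checked numerically. The following is a minimal sketch, not part of the original argument: it simulates standardized actual values and estimates, treating 0.9 as the cosine between the mean-centred vectors (which is how the toy model uses it; computed this way the cosine is the Pearson correlation coefficient between the two sets of values). The names `RHO`, `N`, `centre` and `cosine` are illustrative choices.

```python
import math
import random

random.seed(0)
RHO = 0.9      # assumed cosine between the estimate and actual-value vectors
N = 200_000    # number of simulated interventions (illustrative)

# Actual values are standard normal; estimates are constructed so that they
# are also standard normal and correlate with the actual values at RHO.
actual = [random.gauss(0.0, 1.0) for _ in range(N)]
estimate = [RHO * a + math.sqrt(1.0 - RHO ** 2) * random.gauss(0.0, 1.0)
            for a in actual]

def centre(xs):
    """Subtract the mean so the vector is 'normalized to a mean of zero'."""
    m = sum(xs) / len(xs)
    return [x - m for x in xs]

def cosine(u, v):
    """Cosine of the angle between two equal-length vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)

cos_angle = cosine(centre(estimate), centre(actual))
angle_deg = math.degrees(math.acos(cos_angle))

# Average actual value among interventions estimated at roughly 1 SD above the mean.
near_one_sd = [(e, a) for e, a in zip(estimate, actual) if 0.9 <= e <= 1.1]
mean_estimate = sum(e for e, _ in near_one_sd) / len(near_one_sd)
mean_actual = sum(a for _, a in near_one_sd) / len(near_one_sd)

print(f"cosine = {cos_angle:.3f}, angle = {angle_deg:.1f} degrees")
print(f"mean estimate {mean_estimate:.3f} -> mean actual {mean_actual:.3f}")
```

Under these assumptions the interventions estimated at about 1 SD above the mean turn out, on average, to sit at roughly 0.9 SD above the mean, which is the shrinkage the projection describes.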

There are further dividends to this conceptualization: it helps explain why regression to mean happens across the entire range, but it is particularly noticeable at the tails: the absolute amount of regression to the mean (taken as estimate minus actual value given estimate) grows linearly with the size of the estimate. [2] Reassuringly, it is order preserving: although E > A|E if E is above the mean, A|E1 > A|E2 if E1>E2, and so among a set of estimates of expected value, the highest estimate has the highest expected value.

Given this fairly neat way of getting from an estimate to the expected value given an estimate, can this be exploited to give estimates that 'pre-empt' their own regression?

A quick and fairly dirty attempt at 'regression-proofing' an estimate

A natural approach would be to try and estimate the expected value given an estimate by correcting for the correlation between the estimated expected value and the actual expected value. Although the geometry above was neat, there are several obstacles in the way of application to 'real' cost-effectiveness estimates. In ascending order of difficulty:

1) The variances of the two distributions need to be standardized.

Less of a big deal, as we'd generally hope and aim for our estimates of expected value to be drawn from a similar distribution to the actual expected values - if they're not, our estimates are systematically wrong somehow.

2) We need to know the means of the distribution to do the standardization - after all, if an intervention was estimated to be below the mean, we should anticipate it to regress upwards.

Trickier for an EA context, as the groups that do evaluation focus their efforts on what appear to be the most promising things, so there isn't a clear handle on the 'mean global health intervention' which may be our distribution of interest. To some extent though, this problem solves itself if the underlying distributions of interest are log normal or similarly fat tailed and you are confident your estimate lies far from the mean (whatever it is): log (X - something small) approximates to log(X)

3) Estimate errors should be at-least-vaguely symmetrical.

If you expect your estimates to be systematically 'off', this sort of correction has limited utility. That said, perhaps this is not as burdensome as it first appears: although we should expect our estimates (especially in more speculative fields) to be prone to systematic error, this can be modelled as symmetrical error if we are genuinely uncertain of what sign the systematic error should be. And, if you expect your estimate to be systematically erring one way or another, why haven't you revised it accordingly?

4) How do you know the r-square between your estimates and their true values?

Generally, these will remain hard to estimate, especially so for more speculative causes, as the true expected values will depend on recondite issues in the speculative cause itself. Further, I don't think many of us have a good 'handle' on what an r-square = 0.9 distribution looks like compared to an r-square of 0.5, and our adjustments (especially in fat-tailed distributions) will be highly sensitive to it. However, there is scope for improvement - one can use various approaches of benchmarking (if you think your estimator has an r-square of 0.9, you could ask yourself whether you think it really should track 90% of the variance in expected value, and one could gain a better 'feel' for the strength of various correlations by seeing some representative examples, and then use these to anchor an intuition about where the estimator should fall compared to these). Also, at least in cases with more estimates available, there would be pseudo-formal approaches to estimating the relevant angle between estimated and actual expected value (on which more later).

5) What's the underlying distribution?

Without many estimates, this is hard, and there is a risk of devolving into reference class tennis with different offers as to what underlying distributions 'should' be (broadly, fat tailed distributions with low means 'hurt' an estimate more, and vice-versa). This problem gets particularly acute when looking between cause areas, necessitating some shared distribution of actual expected values. I don't have any particularly good answers to offer here. Ideas welcome!

With all these caveats duly caveated, let's have a stab at estimating the 'real' expected value of malaria nets, given Givewell's estimate: [3]

- (One of) Givewell's old estimates for malaria nets was $45 per DALY averted. This rate is the 'wrong way around', as we are interested in Unit/Cost rather than Cost/Unit. So convert it to something like 'DALYs averted per $100000': 2222 DALYs/100000$ [4]

- I aver (and others agree) that developing world interventions are approximately log-normally distributed, with a correspondingly fat tail to the right. [5] So take logs to get to normality: 3.347

- I think pretty highly of Givewell, and so I rate their estimates pretty highly too. I think their estimation captures around 90% of the variance in actual expected value, so they correlate with an R-square of 0.9. So multiply by this factor: 3.012.

- Then we do everything in reverse, so the 'revised' expected value estimate is 1028 DALYs/100000$, or back in the headline figure, $97 per DALY averted.
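The steps above can be sketched in a few lines. This is only an illustration of the arithmetic as described: the function name `regress_cost_per_daly` and its parameters are hypothetical, and the choice of $100000 as the unit (which places the implicit mean at 1 DALY per $100000) is carried over from the example rather than justified here.

```python
import math

def regress_cost_per_daly(cost_per_daly, shrink, dollars=100_000):
    """Shrink a cost-effectiveness estimate towards the mean in log10 space.

    Illustrative assumptions: values are log-normally distributed, and the
    mean of the distribution sits at 1 DALY per `dollars` (log10 = 0).
    """
    dalys = dollars / cost_per_daly          # DALYs averted per `dollars`
    log_estimate = math.log10(dalys)         # move to the (assumed) normal scale
    log_regressed = shrink * log_estimate    # multiply by the shrinkage factor
    regressed_dalys = 10 ** log_regressed    # undo the log
    return dollars / regressed_dalys         # back to dollars per DALY

amf = regress_cost_per_daly(45, shrink=0.9)
print(f"${amf:.0f} per DALY averted")
```

With the inputs from the list (a $45 estimate and a factor of 0.9) this gives about $97 per DALY averted.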

Some middling reflections

Naturally, this 'attempt' is far from rigorous, and it may take intolerable technical liberties. That said, I 'buy' this bottom line estimate more than my previous attempts to guesstimate the 'post regression' cost effectiveness: I had previously been worried I was being too optimistic, whereas now my fears about my estimate are equal and opposite: I wouldn't be surprised if this was too optimistic, but I'd be similarly unsurprised if I was too pessimistic either.

It also has some salutary lessons attached: the correction turns out to be greater than a factor of two, even when you think the estimate is really good. This vindicates my fear we are prone to under-estimate the risk, and how strongly regression can bite on the right of a fat-tailed distribution.

The 'post regression' estimate of expected value depends a lot on the r-square - the 'angle' between the two vectors (e.g., if you thought Givewell were 'only' as good as a 0.8 correlation to real effect, then the revised estimate becomes $210 per DALY averted). It is unfortunate to have an estimation method sensitive to a variable that is hard for us to grasp. It suggests that although our central measure for expected value may be $97/DALY, this should have wide credence bounds.
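To get a feel for this sensitivity, here is a small sketch that sweeps the shrinkage factor for the $45 estimate. It assumes the same log10 scale and $100000 unit as the worked example; `regressed_cost` and the particular factors swept are illustrative choices, not values anyone has proposed.

```python
import math

def regressed_cost(cost_per_daly, shrink, dollars=100_000):
    """Dollars per DALY after shrinking log10(DALYs per `dollars`) by `shrink`."""
    log_dalys = math.log10(dollars / cost_per_daly)
    return dollars / 10 ** (shrink * log_dalys)

# Post-regression cost per DALY for a range of assumed shrinkage factors.
sensitivity = {s: regressed_cost(45, s) for s in (1.0, 0.9, 0.8, 0.7, 0.6)}

for s, cost in sensitivity.items():
    print(f"factor {s:.1f}: ${cost:,.0f} per DALY")
```

Each step of 0.1 in the factor roughly doubles the post-regression cost per DALY for this estimate, which is why the credence bounds end up so wide.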

Comparing estimates of differing quality

One may be less interested in the absolute expected value of something than in how it compares to something else. This problem gets harder when comparing across diverse cause areas: one intuition may be that a more speculative estimate should be discounted when 'up against' a more robust estimate when comparing two causes or two interventions. A common place I see this is in comparing interventions between human and animal welfare. A common line of argument is something like:

For $1000 you might buy 20 or so QALYs, or maybe save a life (though that is optimistic) if you gave to AMF. In comparison, our best guess for an animal charity like The Humane League is that it might save 3400 animals from a life in agriculture. Isn't that even better?

Bracketing all the considerable uncertainties of the right 'trade off' between animal and human welfare, anti- or pro-natalist concerns, trying to cash out 'a life in agriculture' in QALY terms, etc. etc. there's an underlying worry that ACE's estimate will be more speculative than Givewell's, and so their bottom line figures should be adjusted downwards compared to Givewell's as they have 'further to regress'. I think this is correct:

Suppose there's a more speculative source of expected value estimates, and you want to compare these to your current batch of higher quality expected value estimates. Say you think the speculative estimates correlate to real expected value with an r-square of 0.4 - they leave most of the variance unaccounted for. Geometrically, this means your estimates and 'actual' value diverge quite a lot - the angle is around 66 degrees, and as a consequence your expected value given the estimate is significantly discounted. This can mean an intervention with a high (but speculative) estimate still has a lower expected value than an intervention with a lower but more reliable estimate. (Plotted above: E2 > E1, but A2|E2 < A1|E1)

This correction wouldn't be a big deal (it's only going to be within an order of magnitude) except that we generally think the distributions are fat tailed and so these vectors are on something like a log scale. Going back to the previous case, if you thought that Animal Charity Evaluators' estimates were 'only' correlated to real effects with an R-square of 0.4, your 'post regression' estimate of how good The Humane League is should be around 25 animals saved per $1000, a reduction of two orders of magnitude.
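A sketch of that arithmetic, assuming the same log10 treatment as the malaria-net example and an implicit mean of 1 animal per $1000 (that mean is an assumption of the sketch; the post does not state one). The helper `regress_log10` is a hypothetical name.

```python
import math

def regress_log10(estimate, shrink):
    """Shrink log10(estimate) towards 0, i.e. towards an assumed mean of 1 unit."""
    return 10 ** (shrink * math.log10(estimate))

animals_per_1000_dollars = 3400   # headline figure quoted in the argument above
post_regression = regress_log10(animals_per_1000_dollars, shrink=0.4)

# How many orders of magnitude the estimate has lost.
orders_lost = math.log10(animals_per_1000_dollars / post_regression)

print(f"{post_regression:.0f} animals per $1000")
print(f"{orders_lost:.1f} orders of magnitude lower")
```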

Another fast and loose example would be something like this: suppose you are considering donating to a non-profit that wants to build a friendly AI. Your estimate suggests it would be very good, but you know it is highly speculative, so estimates about its value might only be very weakly correlated with the truth (maybe R-square 0.1). How would it stack up to the AMF estimate made earlier? If you take Givewell to correlate much better to the truth (r-square 0.9, as earlier), then you can run the mathematics in reverse to see how 'good' your estimate of the AI charity has to be to still 'beat' Givewell's top charity after regression. The estimate for the friendly AI charity would need to be something greater than 10^25 DALYs averted per dollar donated for you to believe it a 'better deal' than AMF - at least when Givewell were still recommending it. [6]
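Running the mathematics in reverse can be sketched as follows. The variable names are illustrative, and the sketch assumes both estimates are shrunk on the same log10 scale with the same $100000 unit used for the AMF example.

```python
import math

DOLLARS = 100_000   # same unit scale as the malaria-net example

# Post-regression log10 value of the AMF estimate ($45 per DALY, factor 0.9).
amf_log = 0.9 * math.log10(DOLLARS / 45)

# A speculative estimate E shrunk by 0.1 beats AMF only if 0.1 * log10(E) > amf_log.
speculative_shrink = 0.1
required_log_per_100k = amf_log / speculative_shrink

# Convert from 'per $100000' to 'per dollar' by subtracting log10(100000) = 5.
required_log_per_dollar = required_log_per_100k - math.log10(DOLLARS)

print(f"required estimate: about 10^{required_log_per_dollar:.1f} DALYs per dollar")
```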

A vindication of the regressive mindset

Given the above, regression to the mean, particularly on the sort of distributions Effective Altruists are dealing with, looks significant. If you are like me, you might be surprised at how large the downward adjustment is, and how much bigger it gets as your means of estimation get more speculative.

This vindicates the 'regressive mindset', championed most prominently by Givewell: not only should we not take impressive sounding expected value estimates literally, we often should adjust them downwards by orders of magnitude. [7] Insofar as you are surprised by the size of correction required in anticipation of regression to the mean, you should be less confident of more speculative causes (in a manner commensurate with their speculativeness), you should place a greater emphasis on robustness and reliability of estimation, and you should be more bullish about the value of cause prioritization research: improving the correlation of our estimates with actuality can massively increase the expected value of the best causes or interventions subsequently identified. [8] Givewell seem 'ahead of the curve', here, for which they deserve significant credit: much of this post can be taken as a more mathematical recapitulation of principles they already espouse. [9]

Towards more effective cost-effectiveness estimates

These methods are not without problems: principally, a lot depends on the estimate of the correlation, and estimating this is difficult and could easily serve as a fig leaf to cover prior prejudice (e.g. I like animals but dislike far future causes, so I'm bullish on how good we are at animal cause evaluation but talk down our ability to estimate far future causes, possibly giving orders of magnitude of advantage to my pet causes over others). Pseudo-rigorous methods can supply us a false sense of security, both in our estimates, but also in our estimation of our own abilities.

In this case I believe the dividends outweigh the costs. It underscores how big an effect regression to the mean can be, and (if you are anything like me) prompts you to be more pessimistic when confronted with promising estimates. It appears to offer a more robust framework to allow our intuitions on these recondite matters to approach reflective equilibrium, and to better entrain them to the external world: the r-squares I offered in the examples above are in fact my own estimates, and on realizing their ramifications my outlook on animal and targeted far future interventions has gone downwards, but my estimate of research has gone up. [10] If you disagree with me, we could perhaps point to a more precise source of our disagreement (maybe I over-egg how good Givewell is, but under-egg groups like ACE), and although much of our judgement of these matters would still rely on intuitive judgement calls, there's greater access for data to change our minds (maybe you could show me some representative associations with an r-square of 0.9 and 0.4 respectively, and suggest these are too strong/too weak to be analogous to the performance of Givewell and ACE). Prior to the mathematics, it is not clear what we could appeal to if you held that ACE should 'regress down' by ~10% and I held it should regress down by >90%. [11]

Besides looking for 'analogous data' (quantitative records of prediction of various bodies would be an obvious - and useful! - place to start) there are well-worn frequentist methods to estimate error that could be applied here, albeit with caveats: if there were multiple independent estimates of the same family of interventions, the correlation between them could be used to predict the correlation between them (or their mean) and the 'true' effect sizes (perhaps the 'repeat' of the Disease Control and Prevention Priorities project may provide such an opportunity, although I'd guess they wouldn't be truly independent, nor would what they are estimating be truly stationary). Similarly, but with greater difficulty, one could try and look at the track record of how far your estimates have previously regressed to anticipate how much ones in the future will. Perhaps these could be fertile avenues for further research. More qualitatively, it speaks in favour of multiple independent approaches to estimation, and so supports 'cluster-esque' thinking approaches.
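One way the 'multiple independent estimates' idea could work is sketched below, under strong and explicitly assumed conditions: each estimator equals the true value plus unbiased noise, the two estimators' errors are independent, and both have the same error variance. Under those assumptions the correlation between the two estimators equals the square of each estimator's correlation with the truth, so the latter can be inferred without ever observing the truth. `NOISE_SD` and the other names are hypothetical.

```python
import math
import random

random.seed(1)
N = 100_000
NOISE_SD = 0.75   # hypothetical error SD shared by both estimators

def pearson(xs, ys):
    """Pearson correlation coefficient of two equal-length sequences."""
    mx, my = sum(xs) / len(xs), sum(ys) / len(ys)
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    var_x = sum((x - mx) ** 2 for x in xs)
    var_y = sum((y - my) ** 2 for y in ys)
    return cov / math.sqrt(var_x * var_y)

true_value = [random.gauss(0.0, 1.0) for _ in range(N)]
estimate_1 = [t + random.gauss(0.0, NOISE_SD) for t in true_value]
estimate_2 = [t + random.gauss(0.0, NOISE_SD) for t in true_value]

# Observable in practice: how well the two estimators agree with each other.
between_estimates = pearson(estimate_1, estimate_2)

# Inferred correlation with the truth, using only the agreement above.
implied_vs_truth = math.sqrt(between_estimates)

# Only available inside a simulation: the actual correlation with the truth.
observed_vs_truth = pearson(estimate_1, true_value)

print(f"between estimators: {between_estimates:.3f}")
print(f"implied vs truth:   {implied_vs_truth:.3f}")
print(f"observed vs truth:  {observed_vs_truth:.3f}")
```

If the errors are positively correlated (for example because both evaluators rely on the same studies), the agreement between estimators overstates how well either tracks the truth, which is the independence caveat raised above.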

[2] Again, recall that this is with the means taken as zero - consequently, estimates below the mean will regress upwards.

[3] Given Givewell's move away from recommending AMF, this 'real' expected value might be more like 'expected value a few years ago', depending on how much you agree with their reasoning.

[4] It is worth picking a big multiple, as it gets fiddly dealing with logs that are negative.

[5] There are further lines of supporting evidence: it looks like the factors that contribute to effectiveness multiply rather than add, and correspondingly errors in the estimate tend to range over multiples as well.

[6] This ignores worries about 'what distribution': we might think that the large uncertainties about poor meat-eating, broad-run convergence, value of yet-to-exist beings etc. etc. mean both Givewell estimates and others are going to be prone to a lot of scatter - these considerations might comprise the bulk of variance in expected value. You can probably take out sources of uncertainty that are symmetric when comparing between two estimators, but it can be difficult to give 'fair dealing' to both, and not colour one's estimates of estimate accuracy with sources of variance you include for one intervention but not another. Take this as an illustration rather than a careful attempt to evaluate directed far future interventions.

There is an interesting corollary here, though. You might take the future seriously (e.g. you're a total utilitarian), but also hold there is some convergence between good things now and good things in the future thanks to flow-through effects. You may be uncertain about whether you should give to causes with proven direct impact now (and therefore likely good impact into the future thanks to flow through) or to a cause with a speculative but very large potential upside across the future.

The foregoing suggests a useful heuristic for these decisions. If you hold there is broad run convergence, then you could phrase it as something like, "Impact measured over the near term and total impact are positively correlated". The crucial question is how strong this correlation is: roughly, if you think measured near-term impact correlates more strongly with total impact than your estimation of far future causes correlates with total impact, this weighs in favour of giving to the 'good thing now' cause over the 'speculative large benefit in the future' cause, and vice versa.

[7] This implies that the sort of 'back of the envelope' comparisons EAs are fond of making are probably worthless, and better refrained from.

[8] My hunch is this sort of surprise should also lead one to update towards 'broad' in the 'broad versus targeted' debate, but I'm not so confident about that.

[9] Holden Karnofsky has also offered a mathematical gloss to illustrate Givewell's scepticism and regressive mindset (but he is keen to point out this is meant as illustration, that it is not Givewell's 'official' take, and that Givewell's perspective on these issues does not rely on the illustration he offers). Although I am not the best person to judge, and with all due respect meant, I believe this essay improves on his work:

The model by Karnofsky has the deeply implausible characteristic that your expected value should start to fall as your estimated expected value rises, and so the mapping of likelihood onto posterior is no longer order-preserving: if A has a mean estimate of 10 units value and B an estimate of 20 units value, you should think A has a higher expected value than B. The reason the model demonstrates this pathological feature is that it stipulates the SD of the estimate should always equal its mean: as a consequence for their model, no matter how high the mean estimate, you should assign ~37% credence (>-1SD) that the real value should be negative - worse, as the mean estimate increases, lower percentiles (e.g. the 5% ~-2SD confidence bound) continue to fall, so that whilst an intervention of mean expected value of 5 Units has <<1% of its probability mass at -10 or less, this ceases to be the case with an intervention with a mean expected value of 50 Units or 500. Another way of looking at what is going on is the steep increase in the SD means that the estimate becomes less informative far more rapidly than its mean rises, and so the update ignores the new information more and more as the mean rises (given updating is commutative, it might be clearer the other way around: you have this very vague estimate, and then you attend to the new estimate with 'only' an SD of 1 compared to 500 or 1000 or so, and strongly update to that).

This seems both implausible on its face and doesn't concord with our experience as to how estimators work in the wild either. The motivating intuition (that your expected error/credence interval/whatever of an estimate should get bigger as your estimate grows) is better captured with using fatter tailed distributions (e.g. the confidence interval grows in absolute terms with log-normal distributions).

I also think this is one case where the frequentist story gives an easier route to something robust and understandable than talk of Bayesian updating. It is harder to get 'hard numbers' (i.e. 'so how much do I adjust downwards from the estimate?') out of the Bayesian story than out of the geometric one provided above, and the importance (as we'll see later) of multiple - preferably independent - estimates can be seen more easily, at least by my lights.

[10] These things might overlap: it may be the best way of doing research in cause prioritization is trialing particular causes and seeing how they turn out, and - particularly in far future directed causes - the line between 'intervention' and 'research' could get fuzzy. However, it suggests in many cases the primary value of these activities is the value of information gains, rather than the putative 'direct' impact (c.f. 'Giving to Learn').

[11] It also seems fertile ground to better analyse things like search strategy, and the trade off between evaluating many things less accurately or fewer things more rigorously in terms of finding the highest expected value causes.

undefined @ 2014-11-24T16:34 (+4)

I'm really glad to see an attack on this problem, so thanks for having a go. It's an important issue that can be easy to lose track of.

Unfortunately I think there are some technical issues with your attempt to address it. To help organise the comment thread, I'm going to go into details in comments that are children of this one.

Edit: I originally claimed that the technical issues were serious. I'm now less confident -- perhaps something in this space will be useful, although the difficulty in estimating some of the parameters makes me wary of applying it as is.

undefined @ 2014-11-25T20:06 (+3)

the difficulty in estimating some of the parameters makes me wary of applying it as is.

I agree that these expected-value estimates shouldn't be taken (even somewhat) literally. But I think toy models like this one can still be important for checking the internal consistency of one's reasoning. That is: if you can create a model that says X, this doesn't mean you should treat X as true; but if you can't create a reasonable model that says X, this is pretty strong evidence that X isn't true.

In this case, the utility would be in allowing you to inspect the sometimes-unintuitive interplay between your guesses at an estimate's R^2, the distributional parameters, and the amount of regression. While you shouldn't plug in guesses at the parameters and expect the result to be correct, you can still use such a model to constrain the parameter space you want to think about.

undefined @ 2014-11-24T17:16 (+1)

2) We need to know the means of the distribution to do the standardization - after all, if an intervention was estimated to be below the mean, we should anticipate it to regress upwards.

Trickier for an EA context, as the groups that do evaluation focus their efforts on what appear to be the most promising things, so there isn't a clear handle on the 'mean global health intervention' which may be our distribution of interest. To some extent though, this problem solves itself if the underlying distributions of interest are log normal or similarly fat tailed and you are confident your estimate lies far from the mean (whatever it is): log (X - something small) approximates to log(X)

Sadly I don't think a log-normal distribution solves this problem for you, because to apply your model I think you are working entirely in the log-domain, so taking log(X) - log(something small), rather than log(X - something small). Then the choice of the small thing can have quite an effect on the answer.

For example when you regressed the estimate of cost-effectiveness of malaria nets, you had an implicit mean cost-effectiveness of 1 DALY/$100,000. If you'd assumed instead 1 DALY/$10,000, you'd have regressed to $77/DALY instead of $97/DALY.
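The figures in this comment can be reproduced with a short sketch that makes the implicit mean an explicit parameter. The function `regressed_cost` and its argument names are hypothetical; the 0.9 factor and $45 estimate are the ones used in the post.

```python
import math

def regressed_cost(cost_per_daly, shrink, mean_dollars_per_daly):
    """Shrink in log10 space towards an assumed mean cost-effectiveness.

    `mean_dollars_per_daly` is the assumed cost per DALY of the mean
    intervention, which fixes where log10 = 0 sits.
    """
    # log10 of how many times more cost-effective than the assumed mean.
    log_ratio = math.log10(mean_dollars_per_daly / cost_per_daly)
    return mean_dollars_per_daly / 10 ** (shrink * log_ratio)

cost_mean_100k = regressed_cost(45, 0.9, 100_000)   # mean of 1 DALY per $100,000
cost_mean_10k = regressed_cost(45, 0.9, 10_000)     # mean of 1 DALY per $10,000

print(f"mean at $100,000/DALY: ${cost_mean_100k:.0f} per DALY")
print(f"mean at $10,000/DALY:  ${cost_mean_10k:.0f} per DALY")
```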

undefined @ 2014-11-24T19:45 (+3)

Hey Greg, an estimate can be really highly correlated with reality and still be terribly biased. For instance, I can estimate that I will save 100, 200 and 300 lives in each of the respective next three years. In reality, I will actually save 1, 2 and 3 lives. My estimates explain 100% of the variance there, but there's a huge upward bias. This could be similar to the situation with GiveWell's estimates, which have dropped by an order of magnitude every couple of years. Does your approach guard against this? If not, I don't think it would deserve to be taken literally.
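The example in this comment is easy to verify: a minimal sketch using the three pairs of numbers given, showing a perfect correlation alongside a hundredfold overestimate. The variable names are illustrative.

```python
import math

predicted = [100, 200, 300]   # lives the estimator expects to save each year
realised = [1, 2, 3]          # lives actually saved

def pearson(xs, ys):
    """Pearson correlation coefficient of two equal-length sequences."""
    mx, my = sum(xs) / len(xs), sum(ys) / len(ys)
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    var_x = sum((x - mx) ** 2 for x in xs)
    var_y = sum((y - my) ** 2 for y in ys)
    return cov / math.sqrt(var_x * var_y)

correlation = pearson(predicted, realised)
overestimate_factor = sum(predicted) / sum(realised)

print(f"correlation = {correlation:.2f}")
print(f"estimates exceed reality by a factor of {overestimate_factor:.0f}")
```

A correction that only multiplies by a correlation-based factor leaves this kind of multiplicative bias untouched, since correlation is unchanged by rescaling the estimates.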

undefined @ 2014-11-27T05:10 (+2)

which have dropped by an order of magnitude every couple of years

This seems like a really important point, and I wonder if anyone has blogged on this particular topic yet. In particular:

- How should we expect this trend to continue?

- Does it increase the activation energy for getting involved in EA? (my interest in EA was first aroused by GW and how cheap they reckoned it was to save a life via VillageReach)

- Does it affect the claim that a minority of charities are orders of magnitude more effective than the rest?

- If we become able to put numbers to the effectiveness of a new area, such as xrisk or meta, would we expect to see the same exponential drop-off in our estimates even if we're aware of this problem?

Vasco Grilo @ 2023-07-11T11:17 (+2)

Hi Gregory,

If the set of estimates and actual expected values were plotted as vectors in n-dimensional space (normalized to a mean of zero), the cosine between them would be equal to the r-squared of their correlation.

Not R-square, just R:

Vasco Grilo @ 2023-02-18T18:35 (+2)

Nice post!

It looks like I cannot open the figures :(.

undefined @ 2016-05-21T17:11 (+1)

I just realized that I wrote a post with almost the exact same title as this one! Sorry mate, didn't mean to steal your title.