What We Owe the Past

By Austin @ 2022-05-05T12:06 (+55)

TL;DR: We have ethical obligations not just towards people in the future, but also people in the past.

Imagine the issue that you hold most dear, the issue that you have made your foremost cause, the issue that you have donated your most valuable resources (time, money, attention) to solving. For example: imagine you’re an environmental conservationist whose dearest value is the preservation of species and ecosystem biodiversity across planet Earth.

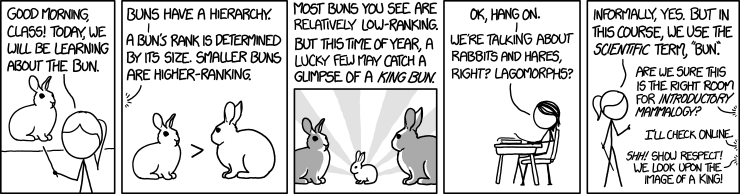

Now imagine it’s 2100. You’ve died, and your grandchildren are reading your will — and laughing. They’re laughing because they have already tiled over the earth with one of six species chosen for maximum cuteness (puppies, kittens, pandas, polar bears, buns, and axolotl) plus any necessary organisms to provide food.

Cuteness optimization is the driving issue of their generation; biodiversity is wholly ignored. They’ve taken your trust fund set aside for saving rainforests, and spent it on the systematic extinction of 99.99% of the world’s species. How would that make you, the ardent conservationist, feel?

Liberals often make fun of conservatives by pointing out how backwards conservative beliefs are. “Who cares about what a bunch of dead people think? We’ve advanced our understanding of morality in all these different ways, the past is stuck in bigoted modes of thinking.”

I don’t deny that we’ve made significant moral progress, that we’ve accumulated wisdom through the years, that a civilization farther back in time is younger, not older. But to strengthen the case for conservatism: the people in the past were roughly as intellectually capable as you are. The people in the past had similar modes of thought, similar hopes and dreams to you. And there are a lot more people in the past than the present.

In The Precipice, Toby Ord describes how there have been 100 billion people who have ever lived; the 7 billion alive today represent only 7% of all humans to date.

Ord goes on to describe the risks of extinction, with an eye towards why and how we might try to prevent them. But this got me thinking: assume that our species WILL go extinct in 10 years. If you are a utilitarian, whose utility should you then try to maximize?

One straightforward answer is “let’s make people as happy as possible over the next 10 years”. But that seems somewhat unsatisfactory. In 2040, the people we’ve made happy in the interim will be just as dead as the people in 1800 are today. Of course, we have much more ability to satisfy people who are currently alive[1] — but there may be cheap opportunities to honor the wishes of people in the past, eg by visiting their graves, upholding their wills, or supporting their children.

Even if you are purely selfish, you should care about what you owe the past. This is not contingent on what other people will think, whether your parents and ancestors in the past or your descendants and strangers in the future. It is because your own past self also lives in the past. And your current self lives in the past of your future self.

Austin at 17 made a commitment: he went through the Catholic sacrament of Confirmation. Among other things, this entails spending one hour every Sunday attending Catholic mass, for the rest of his life. At the time, this was a no-brainer; being Catholic was the top value held by 17!Austin.

Austin at 27 has... a more complicated relationship with the Catholic church. But he still aims to attend Catholic mass every week — with a success rate of 95-98%. Partly because mass is good on rational merits (the utility gained from meeting up with fellow humans, thinking about ethics, meditating through prayer, singing with the congregation). But partly because he wants Austin at 37 to take seriously 27!Austin’s commitments, ranging from his GWWC pledge to the work and relationships he currently values.

And because if 27!Austin decides to ignore the values of 17!Austin, then that constitutes a kind of murder. Austin at 17 was a fully functioning human, with values and preferences and beliefs and motivations that were completely real. 17!Austin is different in some regards, but not obviously a worse, dumber, less ethical person. If Austin at 27 chooses to wantonly forget or ignore those past values, then he is effectively erasing any remaining existence of 17!Austin.[2]

Of course, this obligation is not infinite. Austin at 27 has values that matter too! But again, it’s worth thinking through what cheap opportunities exist to honor 17!Austin - one hour a week seems reasonable. And it’s likely that 27!Austin already spends too much effort satisfying his own values, much more than would be ideal - call it “temporal discounting”, except backwards instead of forwards.[3]

So tell me: what do you owe the past? How will you pay that debt?

Inspirations

- Gwern, The Narrowing Circle: Ancestors

- Holden Karnofsky, What counts as death

> Kinship with past and future selves. My future self is a different person from me, but he has an awful lot in common with me: personality, relationships, ongoing projects, and more. Things like my relationships and projects are most of what give my current moment meaning, so it's very important to me whether my future selves are around to continue them.
>
> So although my future self is a different person, I care about him a lot, for the same sorts of reasons I care about friends and loved ones (and their future selves)

- Joe Carlsmith, Can you control the past?

- Kelsey Piper, Young people have a stake in our future. Let them vote.

- Alexander Wales, Worth the Candle (on Soul Magic aka value alteration)

- Peter Barnett, What the Future Owes Us

Thanks to Sinclair, Vlad, and Kipply for conversations on this subject, and Justis for feedback and edits to this piece.

1. ^

Justis: Many readers will react with something like "well, you just can't score any utils anymore in 2040 - it doesn't matter whose values were honored when at that point; utils can only be accrued by currently living beings."

This was a really good point, thanks for flagging! I think this is somewhat compelling, though I also have an intuition that "utils can only be accrued by the present" is incomplete. Think again on the environmental conservationist; your utils in the present derive from the expected future, so violating those expectations in the future is a form of deception. Analogous to how wireheading/being a lotus-eater/sitting inside a pleasure machine is deceptive.

2. ^

Justis: Calling breaking past commitments "a kind of murder" strikes me as like, super strong, as does the claim that doing so erases all traces of the past self-version. To me it seems past selves "live on" in a variety of ways, and the fulfillment of their wishes is only one among these ways.

Haha I take almost the opposite view, that "murder" really isn't that strong of a concept because we're dying all the time anyways, day-by-day and also value-by-value changed. But I did want to draw upon the sense of outrage that the word "murder" invokes.

The ways that the dead live on (eg memories in others, work they've achieved, memes they've shared) are important, but I'd claim they're important (to the dead) because those effects in the living are what the dead valued. Just as commitments are important because they represent what the dead valued. Every degree of value ignored constitutes a degree of existence erased; but it's true that commitments are only a portion of this.

3. ^

Justis: I think another interesting angle/frame for honoring the past (somewhat, both in the broader cultural sense and in the within-an-individual sense) is acausal trade. So one way of thinking about honoring your past self's promises is that you'd like there to be a sort of meta promise across all your time-slices that goes like "beliefs or commitments indexed strongly at time t will be honored, to a point, at times greater than t." This is in the interests of each time slice, since it enables them to project some degree of autonomy into the future at the low price of granting that autonomy to the past. Start dishonoring too many past commitments, and it's harder to credibly commit to more stuff.

I love this framing, it does describe some of the decision theory that motivates honoring past commitments. I hesitate to use the words "acausal trade" because it's a bit jargon-y (frankly, I'm still not sure I understand "acausal trade"); and this post is already weird enough haha

MichaelA @ 2022-05-06T06:40 (+46)

For what it's worth, I get a sense of vagueness from this post, like I don't have a strong understanding of what specific claims are being made and like I predict that different readers will spot or interpret different claims from this.

I think attempting to provide a summary of the key points in the form of specific claims and arguments for/against them would be a useful exercise, to force clarity of thought/expression here. So what follows is one possible summary. Note that I think many of the arguments in this attempted summary are flawed, as I'll explain below.

"I think we should base our ethical decision-making in part on the views that people from the past (including past versions of currently living people) would've held or did hold. I see three reasons for this:

- Those past people may have been right and we may be wrong

- Those past people's utility matters, and our decisions can affect their utility

- A norm of respecting their preferences could contribute to future people respecting our preferences, which is good from our perspective"

I think (1) is obviously true, and it does seem worth people bearing it in mind. But I don't see any reason to think that people on average currently under-weight that point - i.e., that people pay less attention to past views than they should given how often past views will be better than present views. I also don't think that this post provided such arguments. So I don't think that merely stating this basic point seems very useful. (Though I do think a post providing some arguments or evidence on whether people should change how much or when they pay attention to past views would be useful.)

I think (2) is just false, if by utility we have in mind experiences (including experiences of preference-satisfaction), for the obvious reason that the past has already happened and we can't change it. This seems like a major error in the post. Your footnote 1 touches on this but seems to me to conflate arguments (2) and (3) in my above attempted summary.

Or perhaps you're thinking of utils in terms of whether preferences are actually satisfied, regardless of whether people know or experience that and whether they're alive at that time? If so, then I think that's a pretty unusual form of utilitarianism, it's a form I'd give very little weight to, and that's a point that it seems like you should've clarified in the main text.

I think (3) is true, but to me it raises the key questions "How good (if at all) is it for future people to respect our preferences?", "What are the best ways to get that to happen?", and "Are there ways to get our preferences fulfilled that are better than getting future people to respect them?" And I think that:

- It's far from obvious that it's good for future people to respect present-people-in-general's preferences.

- It's not obvious but more likely that it's good for them to respect EAs' preferences.

- It's unlikely that the best way to get them to respect our preferences is to respect past people's preferences to build a norm (alternatives include e.g. simply writing compelling materials arguing to respect our preferences, or shifting culture in various ways).

- It's likely that there are better options for getting our preferences fulfilled (relative to actively working to get future people to choose to respect our preferences), such as reducing x-risk or maybe even things like pursuing cryonics or whole-brain emulation to extend our own lifespans.

So here again, I get a feeling that this post:

- Merely flags a hypothesis in a somewhat fuzzy way

- Implies confidence in that hypothesis and in the view that this means we should spend more resources fulfilling or thinking about past people's preferences

- But it doesn't really make this explicit enough or highlight in-my-view relatively obvious counterpoints, alternative options, or further questions

...I guess this comment is written more like a review than like constructive criticism. But what I'd say on the latter front (if you're interested!) is that it seems worth trying to make your specific claims and argument structures more explicit, attempting to summarize all the key things (both because summaries are useful for readers and as an exercise in forcing clear thought), and spending more thought on alternative options and counterpoints to whatever you're initially inclined to propose.

[Note that I haven't read other comments.]

Pablo @ 2022-05-06T13:36 (+21)

> Or perhaps you're thinking of utils in terms of whether preferences are actually satisfied, regardless of whether people know or experience that and whether they're alive at that time? If so, then I think that's a pretty unusual form of utilitarianism, it's a form I'd give very little weight to, and that's a point that it seems like you should've clarified in the main text.

Although I find this version of utilitarianism extremely implausible, it is actually a very common form of it. Discussions of preference-satisfaction theories of wellbeing presupposed by preference utilitarianism often explicitly point out that "satisfaction" is used in a logical rather than a psychological sense, to refer to the preferences that are actually satisfied rather than the subjective experience of satisfaction. For example, Shelly Kagan writes:

> Second, there are desire or preference theories, which hold that being well-off is a matter of having one's (intrinsic) desires satisfied. What is intended here, of course, is "satisfaction" in the logician's sense: the question is simply whether or not the states of affairs that are the objects of one's various desires obtain; it is irrelevant whether or not one realizes it, or whether one gets some psychological feeling of satisfaction.

So conditional on preference utilitarianism as it is generally understood, I think (2) is true. (But, to repeat, I don't find this version of utilitarianism in the least plausible. I think the only reasons for respecting past people's preferences are instrumental reasons (societies probably function more smoothly if their members have a justified expectation that others will put some effort into satisfying their preferences posthumously) and perhaps reasons based on moral uncertainty, although I'm skeptical about the latter.)

ClaireZabel @ 2022-05-07T01:37 (+19)

I put a bunch of weight on decision theories which support 2.

A mundane example: I get value now from knowing that, even if I died, my partner would pursue certain Claire-specific projects I value being pursued because it makes me happy to know they will get pursued even if I die. I couldn't have that happiness now if I didn't believe he would actually do it, and it'd be hard for him (a person who lives with me and who I've dated for many years) to make me believe that he actually would pursue them even if it weren't true (as well as seeming sketchy from a deontological perspective).

And, +1 to Austin's example of funders; funders occasionally have people ask for retroactive funding, and say that they only did the thing because their model of the funders suggested the funder would pay.

Austin @ 2022-05-06T12:47 (+14)

Thank you so, so much for writing up your review & criticism! I think your sense of vagueness is very justified, mostly because my own post is more "me trying to lay out my intuitions" and less "I know exactly how we should change EA on account of these intuitions". I had just not seen many statements from EAs, and even less among my non-EA acquaintances, defending the importance of (1), (2), or (3) - great breakdown, btw. I put this post up in the hopes of fostering discussion, so thank you (and all the other commenters) for contributing your thoughts!

I actually do have some amount of confidence in this view, and do think we should think about fulfilling past preferences - but totally agree that I have not made those counterpoints, alternatives, or further questions available. Some of this is: I still just don't know - and to that end your review is very enlightening! And some is: there's a tradeoff between post length and clarity of argument. On a meta level, EA Forum posts have been ballooning to somewhat hard-to-digest lengths as people try to anticipate every possible counterargument; I'd push for a return to more of Sequences-style shorter chunks.

> I think (2) is just false, if by utility we have in mind experiences (including experiences of preference-satisfaction), for the obvious reason that the past has already happened and we can't change it. This seems like a major error in the post. Your footnote 1 touches on this but seems to me to conflate arguments (2) and (3) in my above attempted summary.

I still believe in (2), but I'm not confident I can articulate why (and I might be wrong!). Once again, I'd draw upon the framing of deceptive or counterfeit utility. For example, I feel that involuntary wireheading or being tricked into staying in a simulation machine is wrong, because the utility provided is not a true utility. The person would not actually realize that utility if they were cognizant that this was a lie. So too would the conservationist laboring to preserve biodiversity feel deceived/not gain utility if they were aware of the future supplanting their wishes.

Can we change the past? I feel like the answer is not 100% obviously "no" -- I think this post by Joe Carlsmith lays out some arguments for why:

> Overall, rejecting the common-sense comforts of CDT, and accepting the possibility of some kind of “acausal control,” leaves us in strange and uncertain territory. I think we should do it anyway. But we should also tread carefully.

(but it's also super technical and I'm at risk of having misunderstood his post to service my own arguments.)

In terms of one specific claim: Large EA Funders (OpenPhil, FTX FF) should consider funding public goods retroactively instead of prospectively. More bounties and more "this was a good idea, here's your prize", and less "here's some money to go do X".

I'm not entirely sure what % of my belief in this comes from "this is a morally just way of paying out to the past" vs "this will be effective at producing better future outcomes"; maybe 20% compared to 80%? But I feel like many people would only state 10% or even less belief in the first.

To this end, I've been working on a proposal for equity for charities -- still in a very early stage, but as you work as a fund manager, I'd love to hear your thoughts (especially your criticism!)

Finally (and to put my money where my mouth is): would you accept a $100 bounty for your comment, paid in Manifold Dollars aka a donation to the charity of your choice? If so, DM me!

Larks @ 2022-05-05T14:03 (+17)

Great, thank you so much for writing this and saving me from having to do it.

I think it's worthwhile comparing obligations to the past with those to the future. In many but not all ways, obligations to the past look more plausible:

- Future people are separated from us in time and their existence is uncertain; past people may be temporally distant but their existence is certain, which matters for the person-affecting view.

- There are some ways of helping people (e.g. ensuring they have enough food) that work for future people but not past people.

- We have better information about the values of people in the past. We don't know what religions people will believe in the future, but we know past people would prefer we didn't dynamite the Hagia Sophia.

- There are a lot more people in the past than the present, but many more again in the future (hopefully).

- Past people were much poorer than current people (and, conditional on non-extinction, likely also future people) and hence egalitarianism pushes in favour of helping them.

Austin @ 2022-05-06T01:17 (+3)

Thanks - this comparison was clarifying to me! The point about past people being poorer was quite novel to me.

Intuitively for me, the strongest weights are for "it's easier to help the future than the past" followed by "there are a lot of possible people in the future", so on balance longtermism is more important than "pasttermism" (?). But I'd also intuit that pasttermism is under-discussed compared to long/neartermism on the margin - basically the reason I wrote this post at all.

Larks @ 2022-05-06T01:26 (+2)

Yeah I basically agree with that. I think pasttermism is basically interesting because there are plausibly some very low hanging fruit, like respecting graves, temples and monuments. The scope seems much smaller than longtermism.

Jay Bailey @ 2022-05-06T03:09 (+3)

Has this low-hanging fruit remained unpicked, however? I feel like "respecting graves, temples, and monuments" is already something most people do most of the time. Are there particularly neglected things you think we ought to do that we as a society currently don't?

Ramiro @ 2022-05-05T14:59 (+16)

Thanks for the post. Following along with the other comments, I think this community sometimes overemphasizes the utilitarian case for longtermism (that, even if sound, may be unpersuasive for non-utilitarians), and often neglects the possibility of basing it on a theory of intergenerational cooperation / justice (something that, in my opinion, is underdeveloped among contractualist political philosophers). That's why I think this discussion is quite relevant, and I commend you for bringing it up.

I agree with Justis's comment that acausal trade might be a good way to frame the relationship between agents through different time slices... but you need something even weaker than that - instead of framing it as a cooperation between two agents, think about a chain of cooperation and add backward induction: if 27!Austin finds out he'll not comply with 17!Austin's commitments, he'll have strong evidence that 37!Austin won't comply with his commitments, too - which threatens plans lasting more than a decade. And this is not such weird reasoning: individuals often ponder whether they will follow their own plans (or whether they will change their minds, or be driven away by temptation...), and some financial structures depend on analogous "continuing commitments" (think about long-term debt and pension funds).

(actually, I suspect acausal trade might have stronger implications than what most people here would feel comfortable with... because it may take you to something very close to a universalization principle akin to a Kantian categorical imperative - but hey, Parfit has already convinced me we're all "climbing the same mountain")

Jay Bailey @ 2022-05-05T13:50 (+13)

This is an interesting read, but I believe I disagree with the premise. I've still upvoted it, however.

I actually DO consider 20!Jay to be a dumber and less ethical person than present-day 30!Jay. And I can only hope 40!Jay is smarter and more ethical than I am. (Using "dumb" and "smart" in the colloquial sense - 20!Jay presumably had just as much IQ as I do, but he was far less knowledgeable and effective, even at pursuing his own goals - I have every confidence that I could pursue 20!Jay's goals far better than he could, even though I think 30!Jay's goals are better.)

This idea with the future and past selves is interesting, but it isn't one I think I share. I don't think 40!Jay has any obligation to 30!Jay. He should consider 30!Jay's judgement, but overall 40!Jay wins. For the same reason, I consider myself consistent in not considering 20!Jay's wishes to be worth following. What 20!Jay thought is worth taking into consideration as evidence, but does not carry moral weight in and of itself because he thought it.

With one exception - I would consider any pledge or promise made by 20!Jay to be, if not absolutely binding, at least fairly significant, in the same way you consider 17!Austin's commitment to mass to be. The reason the GWWC pledge is lifelong is because it is designed to bind your future self to a value system they may no longer share. I explicitly knew and accepted that when I pledged it. I don't think 40!Jay should be bound absolutely by this pledge, but he should err on the side of keeping it. Perhaps a good metric would be to ask "If 30!Jay understood what 40!Jay understands now, would HE have signed the pledge?" and then break it only if the answer is no, even if 40!Jay would not sign the pledge again.

Similarly, I believe the case for conservatism is best put the way you did - the people in the past were just as intellectually capable (Flynn effect notwithstanding) as we are. We shouldn't automatically dismiss their wisdom, just like we should consider our past selves to have valuable insights. But that's evidence, as opposed to moral weight. To be fair, I'm biased here - the people of the past believed a lot of things that I don't want to give moral weight today. People fifty years ago believed homosexuality was a form of deviancy - I don't think we owe that perspective any moral weight just because people once thought it. I have taken their wisdom into consideration, dutifully considered the possibility, and determined it to be false - that's the extent I owe them. I can only hope that when I am gone, people will seriously consider my moral views and judge them on their merits. After that, if they choose to ignore them...well, it's not my world any more, and that's their right. Hopefully they're smarter than me.

Austin @ 2022-05-06T01:27 (+5)

I deeply do not share the intuition that younger versions of me are dumber and/or less ethical. Not sure how to express this but:

- 17!Austin had much better focus/less ADHD (possibly as a result of not having a smartphone all the time), and more ability to work through hard problems

- 17!Austin read a lot more books

- 17!Austin was quite good at math

- 17!Austin picked up new concepts much more quickly, had more fluid intelligence

- 17!Austin had more slack, ability to try out new things

- 17!Austin had better empathy for the struggles of young people

This last point is a theme in my all-time favorite book, Ender's Game - that the lives of children and teenagers are real lives, but society kind of systematically underweights their preferences and desires. We stick them into compulsory schooling, deny them the right to vote and the right to work, and prevent them from making their own choices.

Jay Bailey @ 2022-05-06T03:34 (+1)

I fully believe you when you say that 17!Austin was just as smart and selfless as 27!Austin. The same pattern is not the case for 20!Jay and 30!Jay, including all your points about 17!Austin. (except the one on slack, but 20!Jay did not meaningfully use it)

That said, I don't think we're actually in disagreement on this. I believe what you say about 17!Austin, and I assume you believe what I say about 20!Jay - neither of us have known each other's past selves, so we have no reason to believe that our current selves are wrong about them.

Given that, I'm curious if there are any specific points in my original comment that you disagree with and why. I think that'd be a constructive point of discussion. Alternatively, if you agree with what I wrote, but you don't think that is a sufficient argument against what you said, that'd be interesting to hear about too.

Denis Drescher @ 2022-05-06T11:21 (+7)

Thanks for writing this up! I feel like this overlaps in places with some of the research directions that are relevant to evidential cooperation in large worlds.

- How should we idealize preferences, or how would past you or the past conservationist have wanted their preferences to be idealized? Did they want to optimize for the preferences of individual animals and just thought that conservation was a way to achieve that? Brian Tomasik could perhaps convince them otherwise. Maybe the maximally cute world optimizes for the preferences of the animals that are left much better than the real world does? Maybe it is biased toward mammals, with few offspring, so the happiness/suffering ratio is better? Even if the past conservationist thinks that it’s not optimal, maybe it is better than the past world even by their lights. In other contexts, the preferences of the past people may be conditioned on the environments they found themselves in. If today’s environments are different, maybe they’d think that their past priorities are no longer applicable or necessary.

- How do the mechanics of the trade work? Superrationality hasn’t been around for long, so even the more EDT-leaning Calvinists may not actually be superrational cooperation partners. It’s also unclear how much weight past cooperators will have in the acausal bargaining solution. Maybe it’s not so much a trade as a commitment that we hope to make strong enough to also bind future generations to it. Then it’s more a question of how to design particularly permanent institutions.

- If it’s a commitment that we hope to bind future generations to, we’ll have to make various tradeoffs: how much commitment maximizes the expected commitment, given that too much could cause future generations to abandon it altogether? There are also all the questions of preference idealization that trade off the risk of self-serving bias against the risk of acting against the informed preferences of the people you want to help.

- There’s also an interesting phenomenon where the belief in moral progress (whether justified or not) will bias everyone to think that future generations will have an easier time implementing the commitment, at least if they conceive of moral progress as converging on some optimum. The difference from the past generation will continually shrink, so the further you are in the future, the less your commitments diverge from what you would’ve wanted anyway.

- I also wonder how I should conceive of dead people and past versions of myself compared to people who are very set in their ways. If there’s a person I like who has weird beliefs and explicitly refuses to update away from them no matter how much contradictory evidence they see, my respect for the person’s epistemic opinions will wane to some extent. A dead person or a past version of myself is (de facto and de jure respectively) a particularly extreme version of such a person. So I want to respect them and their preferences, but I’ll probably discount the weight of their views in my moral compromise because I don’t trust their epistemics much.

- I find this all very interesting and would in particular be interested in any examples of preferences of past people that are (1) strongly held even after slight idealization, (2) strong preferences, and (3) fairly easy to satisfy today. Past people are probably rather few, but some of them might be quite powerful because of their leverage over the future, and generally I want to protect the strong preferences of minorities in my moral compromise.

Marcel D @ 2022-05-05T20:16 (+5)

"How would that make you, the ardent conservationist, feel?"

Do you mean "how would that make the dead version of you feel"? The answer is "the dead person does not feel anything." Why should we care?

Let's be very clear: it's valid to think that the experiences of people in the past theoretically "matter" in the same fundamental way that the wellbeing of people in the future "matters": a reality in which people in the past did not suffer is a better reality than one in which people in the past did suffer. But their suffering/wellbeing while they were alive is not affected by what we choose to do in the present/future once they have died. Our choice to disregard their wishes is not causing them to roll in their graves; they went to the grave with some belief that their wishes would or wouldn't be well regarded, and our actions now cannot affect that.

"Even if you are purely selfish, you should care about what you owe the past. [...] because your own past self also lives in the past. And your current self lives in the past of your future self."

Now we're more in the motte, it seems: yes, technically it's possible that your actions now will cause you to believe that your future self will betray your current self's wishes, which causes you suffering.

Additionally, it might be the case that in some scenarios, adopting an epistemically irrational mindset regarding temporal causality and moral obligations is instrumentally rational (utility/preference-maximizing); utilitarianism does not inherently prescribe thinking through everything with a "utilitarian mindset" or framework.

However, while I don't exactly love being the temporal Grinch here, I think it's probably for the best that people don't get too carried away by this thinking. After all, we are probably deeply betraying many of our ancestors who likely would have hated things such as respect for black people and tolerance of homosexuality, and our descendants may (hopefully!) betray many current people's desires such as by banning factory farms and perhaps even outright banning most meat consumption.

Miranda_Zhang @ 2022-05-07T00:56 (+4)

Upvoted because I thought this was a novel contribution (in the context of longtermism) and because I feel some intuitive sympathy with the idea of maintaining-a-coherent-identity.

But I also agree with other commenters that this argument seems to break down when you consider the many issues that much of society has since shifted its views on (cf. the moral monsters narrative).

I still think there's something in this idea that could be relevant to contemporary EA, though I'd need to think for longer to figure out what it is. Maybe something around option value? A lot of longtermist thought is anchored around preserving option value for future generations, but perhaps there's some argument that we should maintain the choices of past generations (which is why, for example, codifying things in laws and institutions can be so impactful).

Larks @ 2022-06-19T18:38 (+2)

Somewhat related Robin Hanson post on reciprocity obligations to ancestors to have children.

MaxRa @ 2022-06-04T12:29 (+1)

I had a somewhat related random stream of thoughts the other day regarding the possibility of bringing past people back to life to allow them to live the life they would like.

While I'm fairly convinced of hedonistic utilitarianism, I found the idea of "righting past wrongs" very appealing. For example, allowing a person who died prematurely to live out the fulfilled life that they would wish for themselves would feel very morally good to me.

That idea made me wonder if it makes sense to distinguish between persons who were born, and persons that could have existed but didn't, as it seemed somewhat arbitrary to distinguish based on random fluctuations that led to the existence of one kind of person over the other. So at the end of the stream of thought I thought "Might as well spend some infinitely small fraction of our cosmic endowment on instantiating all possible kinds of beings and allow them to live the life they most desire." :D

Tyner @ 2022-05-05T20:55 (+1)

I'm not sure I understand your argument.

Are you saying (a) we have some non-zero ethical obligation to the past? Or (b) we have some non-zero ethical obligation to the past AND many people, specific people, EA, or some other group is not sufficiently meeting that obligation?

Claim (a) seems quite weak, in the sense that just about everyone already agrees. I don't think I have encountered people suggesting all past should be disavowed. We have a system of wills which very explicitly honors their wishes. Culturally, there are countless examples of people paying deference to ancestors with museums, works of art, naming of children and places etc. etc.

Claim (b) seems to be a bolder claim, and this is what Gwern implies. It does seem that the level of ethical concern for ancestors/past is dropping, but I am not at all convinced that it is dropping too far. If anything I would say that people in the past have been massively too deferential to their ancestors' wishes and this downward correction is needed and insufficient. It also seems to me that it takes substantial effort to work against honoring our past selves due to sunk cost and status quo biases.

Thanks for any clarification you can offer. 👍

Antoine de Scorraille @ 2022-05-05T17:56 (+1)

"Austin at 17 was a fully functioning human"

17? I laughed, thinking about my past self.

More seriously, great post I don't fully agree with but upvoted (cf Jay's comment), which I accept within the limits of updateless(-ish) light. As Jay said:

"I actually DO consider 20!Jay to be a dumber and less ethical person than present-day 30!Jay."