Should the main text of the write-ups of Open Philanthropy’s large grants be longer than 1 paragraph?

By Vasco Grilo🔸 @ 2024-03-31T09:04 (+55)

On 17 February 2024, the mean length of the main text of the write-ups of Open Philanthropy’s largest grants in each of its 30 focus areas was only 2.50 paragraphs, whereas the mean amount was 14.2 M 2022-$[1]. For 23 of the 30 largest grants, it was just 1 paragraph. The calculations and information about the grants are in this Sheet.

Should the main text of the write-ups of Open Philanthropy’s large grants (e.g. at least 1 M$) be longer than 1 paragraph? I think greater reasoning transparency would be good, so I would like it if Open Philanthropy had longer write-ups.

In terms of other grantmakers aligned with effective altruism[2]:

- Charity Entrepreneurship (CE) produces an in-depth report for each organisation it incubates (see CE’s research).

- Effective Altruism Funds has write-ups of 1 sentence for the vast majority of the grants of its 4 funds.

- Founders Pledge has write-ups of 1 sentence for the vast majority of the grants of its 4 funds.

- Future of Life Institute’s grants have write-ups roughly as long as Open Philanthropy’s.

- Longview Philanthropy’s grants have write-ups roughly as long as Open Philanthropy’s.

- Manifund's grants have write-ups (comments) of a few paragraphs.

- Survival and Flourishing Fund has write-ups of a few words for the vast majority of its grants.

I encourage all of the above except for CE to have longer write-ups. I focussed on Open Philanthropy in this post given it accounts for the vast majority of the grants aligned with effective altruism.

Some context:

- Holden Karnofsky posted about how Open Philanthropy was thinking about openness and information sharing in 2016.

- There was a discussion in early 2023 about whether Open Philanthropy should share a ranking of grants it produced then.

- ^

Open Philanthropy has 17 broad focus areas, 9 under global health and wellbeing, 4 under global catastrophic risks (GCRs), and 4 under other areas. However, its grants are associated with 30 areas.

I define the main text as everything besides headings, excluding paragraphs of the type:

- “Grant investigator: [name]”.

- “This page was reviewed but not written by the grant investigator. [Organisation] staff also reviewed this page prior to publication”.

- “This follows our [dates with links to previous grants to the organisation] support, and falls within our focus area of [area]”.

- “The grant amount was updated in [date(s)]”.

- “See [organisation's] page on this grant for more details”.

- “This grant is part of our Regranting Challenge. See the Regranting Challenge website for more details on this grant”.

- “This is a discretionary grant”.

I count lists of bullets as 1 paragraph.

- ^

The grantmakers are ordered alphabetically.

MathiasKB @ 2024-03-31T12:04 (+140)

I think it's a travesty that so many valuable analyses are never publicly shared, but due to unreasonable external expectations it's currently hard for any single organization to become more transparent without incurring enormous costs.

If Open Phil actually were to start publishing their internal analyses behind each grant, I will bet you at good odds that the following scenario is going to play out on the EA Forum:

- Somebody digs deep into a specific analysis carried out. It turns out Open Phil’s analysis has several factual errors that any domain expert could have alerted them to; additionally, they entirely failed to consider some important aspect which may change the conclusion.

- Somebody in the comments accuses Open Phil of shoddy and irresponsible work: that they are making such large donation decisions based on work filled with errors proves their irresponsibility. Moreover, why have they still not responded to the criticism?

- A new meta-post argues that the EA movement needs reform, and uses the above as one of several examples showing the incompetence of ‘EA leadership’.

Several things would be true about the above hypothetical example:

- Open Phil’s analysis did, in fact, have errors.

- It would have been better for Open Phil’s work not to have those errors.

- The errors were only found because they chose to make the analysis public.

- The costs for Open Phil to reduce the error rate of analyses, would not be worth the benefits.

- These mistakes were found, and at no cost (outside of reputation) to the organization.

Criticism shouldn’t have to warrant a response if it takes time away from work which is more important. The internal analyses from open phil I’ve been privileged to see were pretty good. They were also made by humans, who make errors all the time.

In my ideal world, every one of these analyses would be open to the public. As in open-source programming, people would be able to contribute to every analysis, fixing bugs, adding new insights, and updating old analyses as new evidence comes out.

But like an open-source programming project, there has to be an understanding that no repository is ever going to be bug-free or have every feature.

If open phil shared all their analyses and nobody was able to discover important omissions or errors, my main conclusion would be they are spending far too much time on each analysis.

Some EA organizations are held to impossibly high standards. Whenever somebody points this out, a common response is: “But the EA community should be held to a higher standard!”. I’m not so sure! The bar is where it’s at because it takes significant effort to raise it. EA organizations are subject to the same constraints the rest of the world is subject to.

More openness requires a lowering of expectations. We should strive for a culture that is high in criticism, but low in judgement.

cata @ 2024-03-31T23:48 (+43)

I think you are placing far too little faith in the power of the truth. None of the events you list above are bad. It's implied that they are bad because they will cause someone to unfairly judge Open Phil poorly. But why presume that more information will lead to worse judgment? It may lead to better judgment.

As an example, GiveWell publishes detailed cost-effectiveness spreadsheets and analyses, which definitely make me take their judgment way more seriously than I would otherwise. They also provide fertile ground for criticism (a popular recent magazine article and essay did just that, nitpicking various elements of the analyses that it thought were insufficient.) The idea that GiveWell's audience would then think worse of them in the end because of the existence of such criticism is not credible to me.

David T @ 2024-04-01T14:43 (+21)

Agreed. GiveWell has revised their estimates numerous times based on public feedback, including dropping entire programmes after evidence emerged that their initial reasons for funding were excessively optimistic, and is nevertheless generally well-regarded including outside EA. Most people understand its analysis will not be bug free.

OpenPhil's decision to fund Wytham Abbey, on the other hand, was hotly debated before they'd published even the paragraph summary. I don't think declining to make any metrics available except the price tag increased people's confidence in the decision making process, and participants in it appear to admit that with hindsight they would have been better off doing more research and/or more consideration of external opinion. If the intent is to shield leadership from criticism, it isn't working.

Obviously GiveWell exists to advise the public so sharing detail is their raison d'être, whereas OpenPhil exists to advise Dustin Moskovitz and Cari Tuna, who will have access to all the detail they need to decide on a recommendation. But I think there are wider considerations to publicising more on the project and the rationale behind decisions even if OpenPhil doesn't expect to find corrections to its calculations useful:

- Increased clarity about funding criteria would reduce time spent (on both sides) on proposals for projects OpenPhil would be highly unlikely to fund, and probably improve the relevance and quality of the average submission.

- There are a lot of other funders out there and many OpenPhil supported causes have room for additional funding.

- Publicly-shared OpenPhil analysis could help other donors conclude particular organizations are worth funding (just as I imagine OpenPhil itself is happy to use assessments by organizations it trusts), ultimately leading to its favoured causes having more funds at their disposal.

- Or EA methodologies could in theory be adopted by other grantmakers doing their own analysis. It seems private foundations are much happier borrowing more recent methodological ideas from MacKenzie Scott, but generally have a negative perception of EA. Adoption of TBF might be mainly down to its relative simplicity, but you don't exactly make a case for the virtues of the ITN framework by hiding the analysis...

- Lastly, whilst OpenPhil's primary purpose is to help Dustin and Cari give their money away, it's also the flagship grantmaker of EA, so the signals it sends about effectiveness, rigour, transparency and willingness to update have an outsized effect on whether people believe the movement overall is living up to its own hype. I think that alone is a bigger reputational issue than a grantmaker using a disputed figure or getting their sums wrong.

The non-reputational costs matter too and it'd be unreasonable to expect enormously time-consuming GiveWell and CE style analysis for every grant, especially with the grants already made and recipients sometimes not even considering additional funding sources. But there's a happy medium between elaborate reasoning/spreadsheets and a single paragraph. Even publishing sections from the original application (essentially zero additional work) would be an improvement in transparency.

Habryka @ 2024-03-31T23:40 (+27)

As a critic of many institutions and organizations in EA, I agree with the above dynamic and would like people to be less nitpicky about this kind of thing (and I tried to live up to that virtue by publishing my own quite rough grant evaluations in my old Long Term Future Fund writeups)

Vasco Grilo @ 2024-03-31T15:44 (+22)

Thanks for the thoughtful reply, Mathias!

I think it's a travesty that so many valuable analyses are never publicly shared, but due to unreasonable external expectations it's currently hard for any single organization to become more transparent without occurring enormous costs.

I think this applies to organisations with uncertain funding, but not Open Philanthropy, which is essentially funded by a billionaire quite aligned with their strategy?

The internal analyses from open phil I’ve been privileged to see were pretty good. They were also made by humans, who make errors all the time.

Even if the analyses do not contain errors per se, it would be nice to get clarity on morals. I wonder whether Open Philanthropy's prioritisation among human and animal welfare interventions in their global health and wellbeing (GHW) portfolio considers 1 unit of welfare in humans as valuable as 1 unit of welfare in animals. It does not look like it, as I estimate the cost-effectiveness of corporate campaigns for chicken welfare is 680 times Open Philanthropy's GHW bar.

The costs for Open Phil to reduce the error rate of analyses, would not be worth the benefits.

[...]

Criticism shouldn’t have to warrant a response if it takes time away from work which is more important.

On the one hand, I agree it is important to be mindful of the time it would take to improve decisions. On the other, I think it would be quite worth it for Open Philanthropy to make the main text of the write-ups of its million-dollar grants longer than 1 paragraph, and to explain how they prioritise between human and animal interventions. Hundreds of millions of dollars are at stake in these decisions. Open Philanthropy also has great researchers who could (relatively) quickly provide adequate context for their decisions. My sense is that transparency is not among Open Philanthropy's priorities.

Transparency also facilitates productive criticism.

Jason @ 2024-03-31T23:19 (+15)

There's a lot of room between publishing more than ~1 paragraph and "publishing their internal analyses." I didn't read Vasco as suggesting publication of the full analyses.

Assertion 4 -- "The costs for Open Phil to reduce the error rate of analyses, would not be worth the benefits" -- seems to be doing a lot of work in your model here. But it seems to be based on assumptions about the nature and magnitude of errors that would be detected. If a number of errors were material (in the sense that correcting them would have changed the grant/no grant decision, or would have seriously changed the funding level), I don't think it would take many errors for assertion 4 to be incorrect.

Moreover, if an error were found in -- e.g., a five-paragraph summary of a grant rationale -- the odds of the identified error being material / important would seem higher than the average error found in (say) a 30-page writeup. Presumably the facts and conclusions that made the short writeup would be ~the more important ones.

NickLaing @ 2024-04-01T14:39 (+6)

What you say is true. One thing to keep in mind is that academic data, analysis and papers are usually all made public these days. Yes, with OpenPhil, funding is involved rather than just academic rigor, but I feel like we should aim to at least have the same level of transparency as academia...

DPiepgrass @ 2024-04-14T01:27 (+4)

What if, instead of releasing very long reports about decisions that were already made, there were a steady stream of small analyses on specific proposals, or even parts of proposals, to enlist others to aid error detection before each decision?

Linch @ 2024-04-05T23:01 (+34)

(I work at EA Funds)

- Charity Entrepreneurship (CE) produces an in-depth report for each organisation it incubates (see CE’s research).

- Effective Altruism Funds has write-ups of 1 sentence for the vast majority of the grants of its 4 funds.

These seem like pretty unreasonable comparisons unless I'm missing something. Like entirely different orders of magnitude. For context, Long-Term Future Fund (which is one of 4 EA Funds) gives out about 200 grants a year.

If I understand your sources correctly, CE produces like 4 in-depth reports a cycle (and I think they have 1-2 cycles a year)? So 4-8 grants/year[1]

I would guess that EA Funds gives out more grants (total number, not dollar amount) than all the other orgs in your list combined except OP[2].

Of course it's a lot easier to write in-depth reports of 8 grants than for 400! Like you could write a report every month and still have some time left over to proofread. [3]

- ^

I think they also have more employees.

- ^

I'm more confident if you exclude SFF, which incidentally also probably gives out more grants than all the other orgs combined on this list if you exclude OP and EA Funds.

- ^

When LTFF "only" gave out 27 grants in a cycle, they were able to write pretty detailed reasoning for each of their grants.

Vasco Grilo @ 2024-04-08T10:43 (+11)

Hi Linch,

I estimated CE shares 52.1 (= 81.8/1.57) times as much information per amount granted as EA Funds:

- For CE's charities, the ratio between the mean length of the reports about their top ideas in 2023 in global health and development and the mean seed funding per charity incubated in 2023 was 81.8 word/k$ (= 11.7*10^3/(143*10^3)).

- The mean length of the reports about their top ideas in 2023 in global health and development was 11.7 k words[1] (= (12,294 + 9,652 + 14,382 + 10,385)/4). I got the number of words by clicking on "Tools" and "Word count" on Google Docs.

- The mean seed funding per charity incubated in 2023 was 143 k$ (= (190 + 110 + 130 + 130 + 185 + 150 + 155 + 93)*10^3/8).

- Ideally, I would get the seed funding per length of the report for each of the charities incubated in 2023, and then take the mean, but this would take some time to match each charity to a given report, and I do not think it would qualitatively change my main point.

- For EA Funds' grants, the mean length of the write-up per amount granted is 1.57 word/k$. I am only accounting for the write-ups in the database, but:

- I am also excluding CE's documents linked in their main reports.

- EA Funds' EA Forum posts only cover a tiny minority of their grants, and the number above would not be affected much if there were a few grants with higher ratios.

The mean length of the write-up of EA Funds' grants is 14.4 words, so it would have to increase to 750 words (= 14.4*52.1) in order for EA Funds to share as much information per amount granted as CE. 750 words is around 2 pages, so I reiterate my suggestion of EA Funds having write-ups of "a few paragraphs to 1 page instead of 1 sentence for more grants, or a few retrospective impact evaluations".
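The arithmetic in this comment can be re-run with a short Python sketch. It uses only the figures quoted above (variable names are illustrative, not from any real dataset). Computing from the unrounded means gives about 81.7 word/k$ rather than 81.8, which leaves the roughly 52x ratio and the ~750-word figure unchanged.

```python
from statistics import mean

# Inputs quoted in the comment above; nothing here is new data.
ce_report_words = [12_294, 9_652, 14_382, 10_385]        # CE 2023 GHD top-idea reports
ce_seed_k_usd = [190, 110, 130, 130, 185, 150, 155, 93]  # seed funding per 2023 charity, k$
ea_funds_words_per_k_usd = 1.57  # EA Funds database write-ups, word/k$
ea_funds_mean_words = 14.4       # mean EA Funds database write-up length, words

mean_words = mean(ce_report_words)      # mean report length, words
mean_seed_k_usd = mean(ce_seed_k_usd)   # mean seed funding, k$

# Dividing words by k$ gives word/k$ directly.
ce_words_per_k_usd = mean_words / mean_seed_k_usd
ratio = ce_words_per_k_usd / ea_funds_words_per_k_usd
required_ea_funds_words = ea_funds_mean_words * ratio

print(f"CE: {ce_words_per_k_usd:.1f} word/k$")
print(f"CE / EA Funds: {ratio:.1f}x")
print(f"EA Funds write-up length needed to match: {required_ea_funds_words:.0f} words")
```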

- ^

There were no top ideas in 2023 in animal welfare or EA meta.

Linch @ 2024-04-08T20:57 (+4)

EDIT: I think there's a database issue, when I try to delete this comment I think it might also delete a comment in a different thread. To be clear I still endorse this comment, just not its location.

This analysis can't be right. The most recent LTFF payout report alone is 13000 words, which covered 327 grantees, or an average of 40 words/grant (in addition to the other information in eg the database).

EDIT: You say:

EA Funds' EA Forum posts only cover a tiny minority of their grants, and the number above would not be affected much if there were a few grants with higher ratios.

But this isn't true if you actually look at the numbers, since our non-database writings are in fact more extensive, enough to change the averages substantially[1].

- ^

Also what you call a "tiny minority" is still more grants than total CE incubated charities that year!

Vasco Grilo @ 2024-04-08T21:06 (+2)

Thanks for following up, Linch!

I have replaced "I am excluding EA Forum posts [to calculate the mean length of the write-up per amount granted for EA Funds]" by "I am only accounting for the write-ups in the database", which was what I meant. You say that report covered 327 grantees, but it is worth clarifying you only have write-ups of a few paragraphs for 15 grants, and of 1 sentence for the rest.

In any case, EA Funds' mean amount granted is 76.0 k$, so the 40 words/grant you mentioned would result in 0.526 word/k$ (= 40/(76.0*10^3)), which is lower than the 1.57 word/k$ I estimated above. I do not think it would be fair to add both estimates, because I would be double counting information, as you reproduce in this section of the write-up you linked the 1 sentence write-ups which are also in the database.

Here is an easy way of seeing the Long-Term Future Fund (LTFF) shares way less information than CE. The 2 grants you evaluated for which there is a "long" write-up have 1058 words (counting the titles in bold), i.e. 529 words/grant (= 1058/2). So, even if EA Funds had similarly "long" write-ups for all grants, the mean length of the write-up per amount granted would be 6.96 word/k$ (= 529/(76.0*10^3)), which is still just 8.51 % (= 6.96/81.8) of CE's 81.8 word/k$. Given this, I (once again) reiterate my suggestion of EA Funds having write-ups of "a few paragraphs to 1 page instead of 1 sentence for more grants, or a few retrospective impact evaluations".
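A similar Python sketch reproduces the figures traded in this exchange (Linch's ~40 words/grant, and the 0.526 word/k$, 6.96 word/k$ and 8.51 % figures above). Again it uses only numbers quoted in the thread, the variable names are illustrative, and it only checks the arithmetic; it takes no side on whether word count is a good proxy for information shared.

```python
# Inputs quoted in the comments above; nothing here is new data.
ltff_report_words = 13_000      # most recent LTFF payout report
ltff_report_grantees = 327
mean_grant_k_usd = 76.0         # EA Funds' mean amount granted, k$
long_writeup_words = 1_058      # the 2 grants with "long" write-ups
long_writeup_grants = 2
ce_words_per_k_usd = 81.8       # CE figure estimated earlier in the thread

# Linch's figure: report words spread over every grantee it covers (~40).
words_per_grant = ltff_report_words / ltff_report_grantees
report_words_per_k_usd = round(words_per_grant) / mean_grant_k_usd  # word/k$

# Vasco's bound: pretend every grant got a "long" write-up.
long_words_per_grant = long_writeup_words / long_writeup_grants
long_words_per_k_usd = long_words_per_grant / mean_grant_k_usd
share_of_ce = long_words_per_k_usd / ce_words_per_k_usd

print(f"Report: {words_per_grant:.0f} words/grant -> {report_words_per_k_usd:.3f} word/k$")
print(f"Long write-ups for all grants: {long_words_per_k_usd:.2f} word/k$ ({share_of_ce:.2%} of CE)")
```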

Linch @ 2024-04-08T22:35 (+17)

Thanks for engaging as well. I think I disagree with much of the framing of your comment, but I'll try my best to only mention important cruxes.

- I don't think wordcount is a good way to measure information shared

- I don't think "per amount granted" is a particularly relevant denominator when different orgs have very different numbers of employees per amount granted.

- I don't think grantmakers and incubators are a good like-for-like comparison.

- As a practical matter, I neither want to write 500-1000 pages/year of grants nor think it's the best use of my time.

Here is an easy way of seeing LTFF shares way less information than CE. The 2 grants you evaluated for which there is a "long" write-up have 1058 words[...]

I don't think wordcount is a good way to measure information shared.

I don't think wordcount is a fair way to estimate (useful) information shared. I mean it's easy to write many thousands of words that are uninformative, especially in the age of LLMs. I think to estimate useful information shared, it's better to see how much people actually know about your work, and how accurate their beliefs are.

As an empirical crux, I predict the average EAF reader, or EA donor, knows significantly more about LTFF than they do about CE, especially when adjusting for number of employees[1]. I'm not certain that this is true since I obviously have a very skewed selection, so I'm willing to be updated otherwise. I also understand that "EAF reader" is probably not a fair comparison since a) we crosspost often on the EA Forum and maybe CE doesn't as much, and b) much of CE's public output is in global health, which at least in theory has a fairly developed academic audience outside of EA. I'd update towards the "CE shares a lot more information than EA Funds" position if either of the following turned out to be true:

- In surveys, people, and especially donors, are more empirically knowledgeable about CE work than LTFF work.

- CE has many more views/downloads of their reports than LTFF.

- I looked at my profile again and I think I wrote a post about EA Funds work ~once a month, which I think makes for a fair comparison with the 4-8 reports/year CE writes

- For context, skimming analytics, we have maybe 1k-5k views/post.

- EA Forum has a "reads" function for how many people spend over x minutes on the post, for my posts I think it's about 1/3 - 1/2 of views.

- (To be clear, quantity =/= quality or quality-adjusted quantity. I'd also update towards your position if somebody from CE or elsewhere tells me that they have slightly fewer views but their reports are much more decision-relevant for viewers).

I don't think "per amount granted" is a particularly relevant denominator when different orgs have very different numbers of employees per amount granted.

I don't know how many employees CE has. I'd guess it's a lot (e.g. 19 people on their website). EA Funds has 2 full-time employees and some contractors (including for grantmaking and grants disbursement). I'm ~ the only person at EA Funds who has both the time and inclination to do public writing.

Obviously if you have more capacity, you can write more.

I would guess most EA grantmakers (the orgs you mentioned, but also GiveWell) will have a closer $s granted/FTEs ratio to EA Funds than to CE.

If anything, looking at the numbers again, I suspect CE should be devoting more efforts to fundraising and/or finding more scalable interventions. But I'm an outsider and there is probably a lot of context I'm missing.

I don't think grantmakers and opinionated incubators are a good like-for-like comparison.

Does that mean I think CE staff aren't doing useful things? Of course not! They're just doing very different things. CE calls itself an incubator but much of their staff should better be understood as "researchers" trying to deeply understand an issue. (Like presumably they understand the interventions their incubees work on much better than say YC does). It makes a lot of sense to me that researchers for an intervention can and will go into a lot of depth about an intervention[2]. Similarly, EA Funds' grantees also can and often do go into a lot of depth about their work.

The main difference between EA Funds and CE is that, as a superstructure, we don't provide research support for our grantees, whereas you can think of CE as an org that provides initial research support for their incubees so the incubees can think more about strategy and execution.

Just different orgs doing different work.

As a practical matter, I neither want to write 500-1000 pages/year of grants nor think it's the best use of my time.

Nobody else at EA Funds has time/inclination to publicly write detailed reports. If we want to see payout reports for any of the funds this year, most likely I'd have to write it myself. I personally don't want to write up upwards of a thousand grants this year. It frankly doesn't sound very fun.

But I'm being paid for impact, not for having fun, so I'm willing to make such sacrifices if the utility gods demand it. So concretely, I'd be interested in what projects I ought to drop to write up all the grants. Eg, to compare like-for-like, I'd find it helpful if you or others can look at my EA Funds' related writings and tell me which posts I ought to drop so I can spend more time writing up grants.

- ^

EA Funds has ~2 full-time employees, and maybe 5-6 FTEs including contractors.

- ^

And indeed when I did research I had a lot more time to dive into specific interventions than I do now.

Vasco Grilo @ 2024-04-09T12:24 (+4)

Thanks for the detailed comment. I strongly upvoted it.

I don't think wordcount is a good way to measure information shared.

I don't think wordcount is a fair way to estimate (useful) information shared. I mean it's easy to write many thousands of words that are uninformative, especially in the age of LLMs. I think to estimate useful information shared, it's better to see how much people actually know about your work, and how accurate their beliefs are.

I agree the number of words per grant is far from an ideal proxy. At the same time, the median length of the write-ups on the database of EA Funds is 15.0 words, and accounting for what you write elsewhere does not impact the median length because you only write longer write-ups for a small fraction of the grants, so the median information shared per grant is just a short sentence. So I assume donors do not have enough information to assess the median grant.

On the other hand, donors do not necessarily need detailed information about all grants because they could infer how much to trust EA Funds based on longer write-ups for a minority of them, such as the ones in your posts. I think I have to recheck your longer write-ups, but I am not confident I can assess the quality of the grants with longer write-ups based on these alone. I suspect trusting the reasoning of EA Funds' fund managers is a major reason for supporting EA Funds. I guess others and I like longer write-ups because transparency is often a proxy for good reasoning, but we had better look into the longer write-ups, and assess EA Funds based on them rather than the median information shared per grant.

I don't think "per amount granted" is a particularly relevant denominator when different orgs have very different numbers of employees per amount granted.

At least a priori, I would expect the information shared about a grant to be proportional to the amount of effort put into assessing it, and this to be proportional to the amount granted, in which case the information shared about a grant would be proportional to the amount granted. The grants you assessed in LTFF's most recent report were of 200 and 71 k$, and you wrote a few paragraphs about each of them. In contrast, CE's seed funding per charity in 2023 ranged from 93 to 190 k$, but they wrote reports of dozens of pages for each of them. This illustrates CE shares much more information about the interventions they support than EA Funds shares about the grants for which there are longer write-ups. So it is possible to have a better picture of CE's work than EA Funds'. This is not to say CE's donors actually have a better picture of CE's work than EA Funds' donors have of EA Funds' work. I do not know whether CE's donors look into their reports. However, I guess it would still be good for EA Funds to share some in-depth analyses of their grants.

I don't think grantmakers and opinionated incubators are a good like-for-like comparison.

Does that mean I think CE staff aren't doing useful things? Of course not! They're just doing very different things. CE calls itself an incubator but much of their staff should better be understood as "researchers" trying to deeply understand an issue.

I guess EA Funds would benefit from having researchers in that sense. I like that Founders Pledge produces lots of research informing the grantmaking of their funds.

As a practical matter, I neither want to write 500-1000 pages/year of grants nor think it's the best use of my time.

How about just making some applications public, as Austin suggested? I actually think it would be good to make public the applications of all grants EA Funds makes, and maybe even rejected applications. Information which had better remain confidential could be removed from the public version of the application.

Linch @ 2024-04-09T23:22 (+6)

(Appreciate the upvote!)

At a high level, I'm of the opinion that we practice better reasoning transparency than ~all EA funding sources outside of global health, e.g. a) I'm responding to your thread here and other people have not, b) (I think) people can have a decent model of what we actually do rather than just an amorphous positive impression, and c) I make an effort to politely deliver messages that most grantmakers are aware of but don't say because they're worried about flak.

It's really not obvious that this is the best use of limited resources compared to e.g. engaging with large donors directly or having very polished outwards-facing content, but I do think criticizing our lack of public output is odd given that we invest more in it than almost anybody else.

(I do wonder if there's an effect where because we communicate our overall views so much, we become a more obvious/noticeable target to criticize.)

This illustrates CE shares much more information about the interventions they support than EA Funds shares about the grants for which there are longer write-ups. So it is possible to have a better picture of CE's work than EA Funds'. This is not to say CE's donors actually have a better picture of CE's work than EA Funds' donors have of EA Funds' work. I do not know whether CE's donors look into their reports.

Well, I haven't read CE's reports. Have you?

I think you have a procedure-focused view where the important thing is that articles are written, regardless of whether they're read. I mostly don't personally think it's valuable to write things people don't read. (though again for all I know CE's reports are widely read, in which case I'd update!) And it's actually harder to write things people want to read than to just write things.

(To be clear, I think there are exceptions. Eg all else equal, writing up your thoughts/cruxes/BOTECs are good even if nobody else reads them because it helps with improving quality of thinking).

How about just making some applications public, as Austin suggested? I actually think it would be good to make public the applications of all grants EA Funds makes, and maybe even rejected applications.

We've started working on this, but no promises. My guess is that making public the rejected applications is more valuable than accepted ones, eg on Manifund. Note that grantees also have the option to upload their applications as well (and there are fewer privacy concerns if grantees choose to reveal this information).

Vasco Grilo @ 2024-04-13T14:13 (+2)

We've started working on this [making some applications public], but no promises. My guess is that making public the rejected applications is more valuable than accepted ones, eg on Manifund. Note that grantees also have the option to upload their applications as well (and there are fewer privacy concerns if grantees choose to reveal this information).

Manifund already has quite a good infrastructure for sharing grants. However, have you considered asking applicants to post a public version of their applications on EA Forum? People who prefer to remain anonymous could use an anonymous account, and anonymise the public version of their application. At a higher cost, there would be a new class of posts[1] which would mimic some of the features of Manifund, but this is not strictly necessary. The posts with the applications could simply be tagged appropriately (with new tags created for the purpose), and include a standardised section with some key information, like the requested amount of funding, and the status of the grant (which could be changed over time by editing the post).

The idea above is inspired by some thoughts from Hauke Hillebrandt.

- ^

As of now, there are 3 types: normal posts, question posts, and linkposts/crossposts.

Linch @ 2024-04-13T22:24 (+4)

Grantees are obviously welcome to do this. That said, my guess is that this will make the forum less enjoyable/useful for the average reader, rather than more.

David T @ 2024-04-13T22:59 (+3)

I think a dedicated area would minimise the negative impact on people who aren't interested, whilst potentially adding value (to prospective applicants in understanding what did and didn't get accepted, and possibly also to grant assessors if commenters occasionally offered additional insight).

I'd expect there would be some details of some applications that wouldn't be appropriate to share on a public forum, though.

Linch @ 2024-04-14T02:57 (+4)

I'd expect there would be some details of some applications that wouldn't be appropriate to share on a public forum, though.

Hopefully grantees can opt in/out as appropriate! They don't need to share everything.

Vasco Grilo @ 2024-04-14T09:12 (+2)

Grantees are obviously welcome to do this.

Right, but they have not been doing it. So I assume EA Funds would have to at least encourage applicants to do it, or even make it a requirement for most applications. There can be confidential information in some applications, but, as you said below, applicants do not have to share everything in their public version.

That said, my guess is that this will make the forum less enjoyable/useful for the average reader, rather than more.

I guess the opposite, but I do not know. I am mostly in favour of experimenting with a few applications, and then deciding whether to stop or scale up.

Vasco Grilo @ 2024-04-10T09:07 (+2)

(I do wonder if there's an effect where because we communicate our overall views so much, we become a more obvious/noticeable target to criticize.)

To be clear, the criticisms I make in the post and comments apply to all grantmakers I mentioned in the post except for CE.

Well, I haven't read CE's reports. Have you?

I have skimmed some, but the vast majority of my donations have gone to AI safety interventions (via LTFF). I may read CE's reports in more detail in the future, as I have been moving away from AI safety and towards animal welfare as the most promising cause area.

I think you have a procedure-focused view where the important thing is that articles are written, regardless of whether they're read.

I do not care about transparency per se[1], but I think there is usually a correlation between it and cost-effectiveness (for reasons like the ones you mentioned inside parentheses). So, a priori, lower transparency updates me towards lower cost-effectiveness.

We've started working on this, but no promises.

Cool!

My guess is that making public the rejected applications is more valuable than accepted ones, eg on Manifund.

I can see this being the case, as people currently get to know about most accepted applications, but nothing about the rejected ones.

- ^

I fully endorse expected total hedonistic utilitarianism, so I only intrinsically value/disvalue positive/negative conscious experiences.

Linch @ 2024-04-08T22:41 (+5)

Less importantly,

In any case, EA Funds' mean amount granted is 76.0 k$, so 52 words/grant would result in 0.684 word/k$ (= 52/76.0), which is lower than the 1.57 word/k$ I estimated above.

You previously said:

> The mean length of the write-up of EA Funds' grants is 14.4 words

So I'm a bit confused here.

Also for both LTFF and EAIF, when I looked at mean amount granted in the past, it was under $40k rather than $76k. I'm not sure how you got $76k. I suspect at least some of the difference is skewed upwards by our Global Health and Development fund. Our Global Health and Development fund has historically ~only given to GiveWell-recommended projects, and

- GiveWell is often considered the gold standard in EA transparency.

- It didn't seem necessary for our GHD grant managers (who also work at GiveWell) to justify their decisions twice since they already wrote up their thinking at GiveWell.

Vasco Grilo @ 2024-04-09T10:11 (+2)

You previously said:

> The mean length of the write-up of EA Funds' grants is 14.4 words

This is the mean number of words of the write-ups in EA Funds' database. The 52 words in my last comment were meant to be the words per grant in the payout report you mentioned. I see now that you said 40 words, so I have updated my comment above (the specific value does not affect the point I was making).
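The unit bookkeeping in this exchange is easy to trip over: dividing a per-grant word count by a mean grant expressed in dollars gives words per dollar, whereas dividing by the mean in k$ gives words per k$. A minimal sketch of the conversion follows. The function name is hypothetical, and it assumes, as in the comments above, that the same 76.0 k$ mean grant applies to both the database write-ups (14.4 words) and the payout report (40 or 52 words).

```python
def words_per_kusd(words_per_grant: float, mean_grant_usd: float) -> float:
    """Mean write-up length per thousand dollars granted (word/k$)."""
    mean_grant_kusd = mean_grant_usd / 1_000  # convert $ to k$ before dividing
    return words_per_grant / mean_grant_kusd


MEAN_GRANT_USD = 76.0e3  # mean amount granted across EA Funds' 4 funds, as quoted above

for label, words in [
    ("database write-ups", 14.4),
    ("payout report, 52 words/grant", 52),
    ("payout report, 40 words/grant", 40),
]:
    print(f"{label}: {words_per_kusd(words, MEAN_GRANT_USD):.3f} word/k$")
```

This prints 0.189, 0.684 and 0.526 word/k$ respectively, so the comparison with another grantmaker's word/k$ depends on which write-up length is used.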

Also for both LTFF and EAIF, when I looked at mean amount granted in the past, it was under $40k rather than $76k. I'm not sure how you got $76k. I suspect at least some of the difference is skewed upwards by our Global Health and Development fund.

Makes sense. For LTFF and the Effective Altruism Infrastructure Fund (EAIF), I get a mean amount granted of 42.9 k$. For these 2 funds plus the Animal Welfare Fund (AWF), 47.4 k$, so as you say the Global Health and Development Fund (GHDF) pushes up the mean across all 4 funds.

I am calculating the mean amount granted based on the amounts provided in the database, without any adjustments for inflation.

Our Global Health and Development fund has historically ~only given to GiveWell-recommended projects, and

- GiveWell is often considered the gold standard in EA transparency.

- It didn't seem necessary for our GHD grant managers (who also work at GiveWell) to justify their decisions twice since they already wrote up their thinking at GiveWell.

I agree. However, the 2nd point also means donating to GHDF has basically the same effect as donating to GiveWell's funds, so I think GHDF should be doing something else. Given that Caleb Parikh seems to dispute this a little, I think it would be worth writing about it publicly.

Linch @ 2024-04-09T23:11 (+2)

Hmm, I still think your numbers are not internally consistent but I don't know if it's worth getting into.

Austin @ 2024-04-05T23:20 (+6)

For sure, I think a slightly more comprehensive comparison of grantmakers would include the stats for the number of grants, median check size, and amount of public info for each grant made.

Also, perhaps # of employees, or ratio of grants per employee? Like, OpenPhil is ~120 FTE, Manifund/EA Funds are ~2, this naturally leads to differences in writeup-producing capabilities.

Vasco Grilo @ 2024-04-06T09:32 (+2)

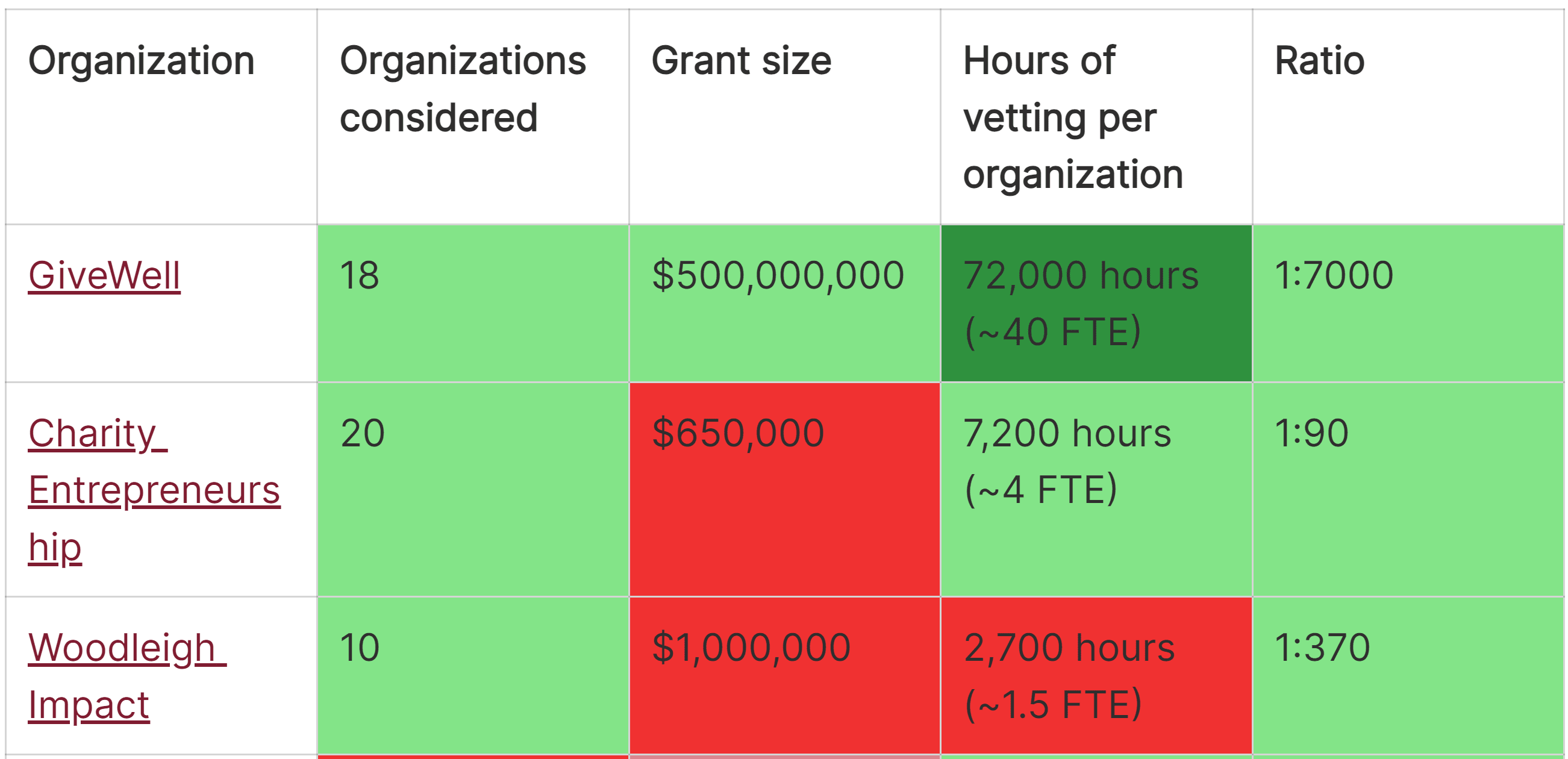

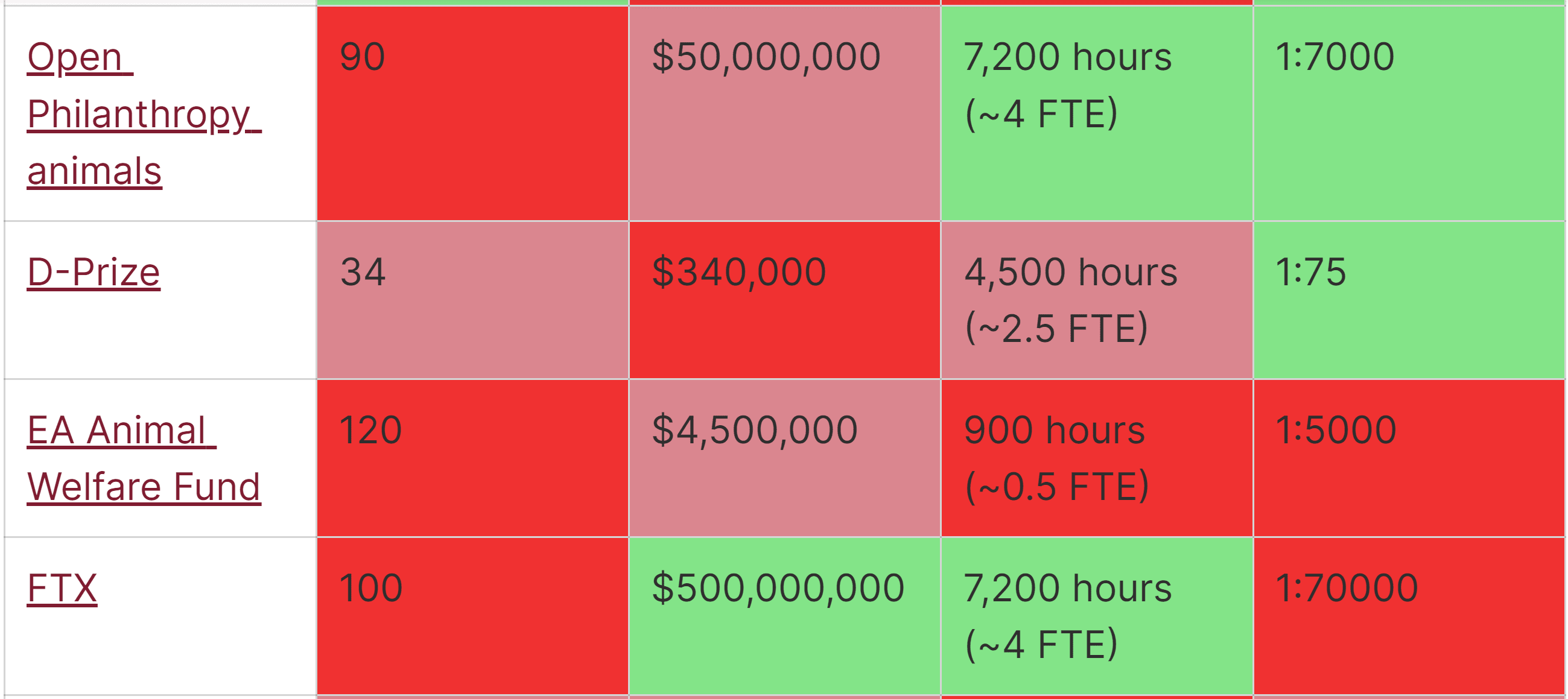

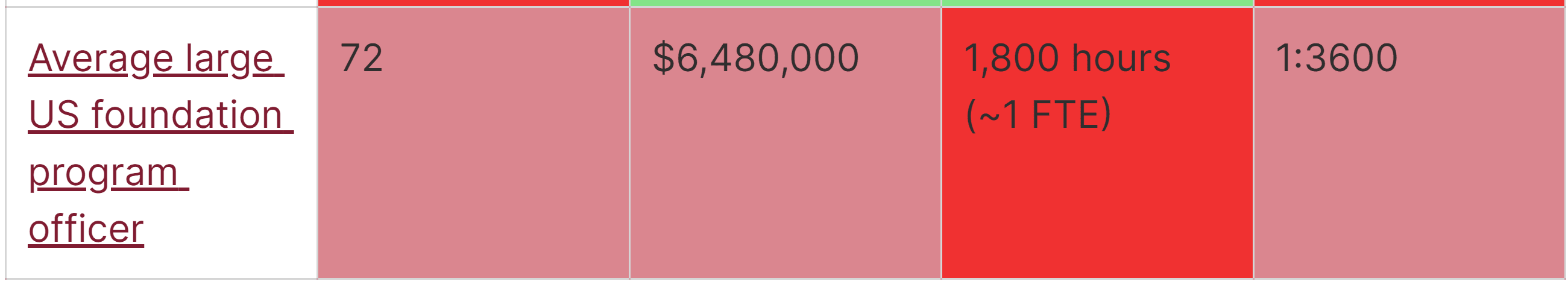

Thanks, Austin. @Joey did an analysis 2 years ago (published on 21 June 2022) where he estimated the ratio between total hours of vetting and dollars granted for various organisations. Here is the table with the results:

I am a little confused by the colour coding. In the last column, I think "1:5000" and "1:3600" should be in green, given that "1:7000" is in green.

It would be nice to have an updated table for 2023 with total amount granted, total words in public write-ups, total cost (excluding grants), ratio between total amount granted and cost, and ratio between total amount granted and words in public write-ups. Maybe @Sjir Hoeijmakers and @Michael Townsend could do this as part of Giving What We Can's project to evaluate the evaluators.

Linch @ 2024-04-05T23:03 (+4)

I'm also not sure your list is comprehensive, eg Longview only has 12 write-ups on their website and you say they "have write-ups roughly as long as Open Philanthropy," but I'm pretty sure they gave out more grants than that (and have not written about them at all).

Elizabeth @ 2024-04-10T19:22 (+2)

Comparing write-ups of incubatees (that CE has invested months in and would like to aid in fundraising), to grants seems completely out of left field to me.

Vasco Grilo @ 2024-04-06T09:09 (+2)

Thanks for the comment, Linch!

I said:

I encourage all of the above except for CE to have longer write-ups.

I was not clear, but I did not mean to encourage all the grantmakers I listed to have write-ups as long as CE's reports, which I agree would make little sense. I just meant longer write-ups relative to their current length. For EA Funds, I guess write-ups of a few paragraphs to 1 page (instead of 1 sentence) for more grants, or a few retrospective impact evaluations, would still be worth it.

Austin @ 2024-04-01T23:04 (+17)

Manifund is pretty small in comparison to these other grantmakers (we've moved ~$3m to date), but we do try to encourage transparency for all of our grant decisions; see for example here and here.

A lot of our transparency just comes from the fact that we have our applicants post their applications in public. The applications have something like 70% of the context that the grantmaker has. This is a pretty cheap win; I think many other grantmakers could do this if they just got permission from the grantees. (Obviously, not all applications are suited for public posting, but I would guess ~80%+ of EA apps would be.)

Vasco Grilo @ 2024-04-02T09:10 (+2)

Thanks for commenting, Austin! I think it is great that Manifund shares more information about its grants than all grantmakers I mentioned except for CE (which is incubating organisations, so it makes sense they have more to share).

Sorry for not having mentioned Manifund. I have now added:

Manifund's grants have write-ups (comments) of a few paragraphs, and the applications for funding are made public.

I have been donating to the Long-Term Future Fund (LTFF), but, if I was going to donate now[1], I think I would either pick specific organisations, or Manifund's regrantors or projects.

- ^

I usually make my annual donations late in the year.

Austin @ 2024-04-04T19:57 (+4)

Thanks for updating your post and for the endorsement! (FWIW, I think the LTFF remains an excellent giving opportunity, especially if you're in less of a position to evaluate specific regrantors or projects.)

Ben Millwood @ 2024-04-03T10:33 (+15)

[edit: this is now linked from the OP] Relevant blog post from OpenPhil in 2016: Update on How We’re Thinking about Openness and Information Sharing

Vasco Grilo @ 2024-04-03T12:59 (+2)

Thanks, Ben! Strongly upvoted. I did not know about that, but have now added it to the post:

Holden Karnofsky posted about how Open Philanthropy was thinking about openness and information sharing in 2016.

Jason @ 2024-04-11T15:09 (+10)

I'm late to the discussion, but I'm curious how much of the potential value would be unlocked -- at least for modest size / many grants orgs like EA Funds -- if we got a better writeup for a random ~10 percent of grants (with the selection of the ten percent happening after the grant decisions were made).

If the idea is to see the quality of the median grant, not assess individual grants, then a random sample should work ~as well as writing and polishing for dozens and dozens of grants a year.

Vasco Grilo @ 2024-04-11T18:01 (+4)

I'm late to the discussion, but I'm curious how much of the potential value would be unlocked -- at least for modest size / many grants orgs like EA Funds -- if we got a better writeup for a random ~10 percent of grants (with the selection of the ten percent happening after the grant decisions were made).

Great suggestion, Jason! I think that would be over 50 % as valuable as detailed write-ups for all grants.

Actually, the grants described in this post on the Long-Term Future Fund (LTFF) and this one on the Effective Altruism Infrastructure Fund (EAIF) were randomly selected after being divided into multiple tiers according to their cost-effectiveness[1]. I think this procedure was great. I would just make the probability of a grant being selected proportional to its size. The 5th and 95th percentile amounts granted are 2.00 k$ and 234 k$, which is a wide range, so it is especially important to make larger grants more likely to be picked if one is analysing just a few grants as opposed to dozens (as was the case for the posts). Otherwise, there is a risk of picking small grants which are not representative of the mean grant.

There is still the question about how detailed the write-ups of the selected grants should be. They are just a few paragraphs in the posts I linked above, which in my mind is not enough to make a case for the value of the grants without many unstated background assumptions.

If the idea is to see the quality of the median grant, not assess individual grants, then a random sample should work ~as well as writing and polishing for dozens and dozens of grants a year.

Nitpick. I think we should care about the quality of the mean (not median) grant weighted by grant size, which justifies picking each grant with a probability proportional to its size.
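The nitpick can be made concrete. If each grant is drawn with probability proportional to its amount, the plain average of the drawn grants' scores targets the size-weighted mean score rather than the unweighted one. A minimal sketch, assuming draws with replacement (the simplest probability-proportional-to-size design; without-replacement designs exist but need more care). The function names, the per-grant "score" (standing in for any quality or cost-effectiveness rating), and the portfolio below are all hypothetical.

```python
import random


def size_weighted_mean(amounts, scores):
    """Mean of the per-grant scores, weighting each grant by its amount."""
    total = sum(amounts)
    return sum(a * s for a, s in zip(amounts, scores)) / total


def pps_estimate(amounts, scores, k, rng):
    """Estimate the size-weighted mean from k grants drawn with probability proportional to size.

    Draws are with replacement, so each draw picks grant i with probability
    amounts[i] / sum(amounts); the plain average of the sampled scores is then
    an unbiased estimate of size_weighted_mean(amounts, scores).
    """
    picked = rng.choices(range(len(amounts)), weights=amounts, k=k)
    return sum(scores[i] for i in picked) / k


# Hypothetical portfolio: 9 grants of 2 k$ and 1 grant of 234 k$, with made-up scores.
amounts = [2_000] * 9 + [234_000]
scores = [1.0] * 9 + [5.0]

print(size_weighted_mean(amounts, scores))  # size-weighted target
print(sum(scores) / len(scores))  # unweighted mean, dominated by the many small grants
print(pps_estimate(amounts, scores, 10_000, random.Random(0)))  # should land close to the target
```

With these made-up numbers, the size-weighted mean is about 4.71, whereas the unweighted mean is 1.4; a uniform sample of a few grants would mostly pick 2 k$ grants and estimate something near the latter.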

- ^

I know you are aware of this, since you commented on the post on LTFF, but I am writing this here for readers who did not know about the posts.

Jason @ 2024-04-11T20:33 (+10)

On the nitpick: After reflection, I'd go with a mixed approach (somewhere between even odds and weighted odds of selection). If the point is donor oversight/evaluation/accountability, then I am hesitant to give the grantmakers too much information ex ante on which grants are very likely/unlikely to get the public writeup treatment. You could do some sort of weighted stratified sampling, though.
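One way to implement a mixed approach is to blend even odds and size-weighted odds with a single parameter, and only draw the sample after grant decisions are final. A minimal sketch, assuming Poisson sampling (each grant included independently with its own probability), which is one sampling design among several and not the weighted stratified sampling mentioned above. The parameter name `lam`, the ~10 % target, and the grant amounts are hypothetical.

```python
import random


def mixed_inclusion_probs(amounts, expected_n, lam):
    """Per-grant probability of being selected for a public write-up.

    lam = 1.0 gives every grant even odds; lam = 0.0 gives odds proportional
    to amount; values in between blend the two.
    """
    n = len(amounts)
    total = sum(amounts)
    probs = []
    for a in amounts:
        share = lam / n + (1 - lam) * a / total  # blended shares sum to 1 across grants
        probs.append(min(1.0, expected_n * share))  # scale to the target sample size, capped at 1
    return probs


def poisson_sample(probs, rng):
    """Include each grant independently with its own probability, after grant decisions are made."""
    return [i for i, p in enumerate(probs) if rng.random() < p]


# Hypothetical portfolio: 90 grants of 10 k$ and 10 grants of 200 k$, aiming to write up ~10 %.
amounts = [10_000] * 90 + [200_000] * 10
for lam in (1.0, 0.5, 0.0):
    probs = mixed_inclusion_probs(amounts, expected_n=10, lam=lam)
    print(f"lam={lam}: small grant {probs[0]:.3f}, large grant {probs[-1]:.3f}")

selected = poisson_sample(mixed_inclusion_probs(amounts, 10, 0.5), random.Random(0))
```

With these numbers, a large grant's selection probability rises from 0.100 (even odds) to about 0.395 (lam = 0.5) and 0.690 (size-weighted), while a small grant's falls from 0.100 to about 0.067 and 0.034. A larger `lam` keeps the odds of small and large grants closer together, giving grantmakers less advance information about which grants are likely to be written up. When the cap at 1 binds (very large grants), the expected sample size falls below `expected_n`.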

I think grant size also comes into play on the detail level of the writeup. I don't think most people want more than a paragraph, maximum, on a $2K grant. I'd hope for considerably more on $234K. So the overweighting of small grants relative to their percentage of the dollar-amount pie would be at least somewhat counterbalanced by them getting briefer writeups if selected. So the expected-words-per-dollar figures might be somewhat similar throughout the range of grant sizes.
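The counterbalancing argument can be written out. Let $x_i$ be grant $i$'s amount, $\pi_i$ its probability of being selected for a public write-up, $m$ the expected number of grants written up, and $w_i$ the length of the write-up if grant $i$ is selected. Assuming, purely for illustration, that write-up length scales linearly with grant size ($w_i = c\,x_i$ for some hypothetical constant $c$) and ignoring the cap $\pi_i \le 1$, the expected public words per dollar for grant $i$ are:

$$
\frac{\pi_i\, w_i}{x_i} =
\begin{cases}
\pi\, c, & \text{even odds, } \pi_i = \pi,\\[4pt]
\dfrac{m\, c\, x_i}{\sum_j x_j}, & \text{size-proportional odds, } \pi_i = \dfrac{m\, x_i}{\sum_j x_j}.
\end{cases}
$$

Under even odds, expected words per dollar are the same for every grant size; under size-proportional odds, they grow with grant size; a blend of the two falls in between.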

Vasco Grilo @ 2024-04-11T20:58 (+4)

If the point is donor oversight/evaluation/accountability, then I am hesitant to give the grantmakers too much information ex ante on which grants are very likely/unlikely to get the public writeup treatment.

Great point! I had not thought about that. On the other hand, I assume grantmakers already spend more time assessing larger grants. So I wonder whether the distribution of the granted amount is heavy-tailed enough for grantmakers to be nudged into spending too much time on larger grants because of their higher chance of being selected for longer write-ups.

I think grant size also comes into play on the detail level of the writeup.

Another nice point. I agree the level of detail of the write-up should be proportional to the granted amount.

Jason @ 2024-04-12T14:04 (+4)

I think I have an older discussion about managing conflicts of interest in grantmaking in the back of my mind. I think that's part of why I would want to see a representative sample of small-to-midsize grant write-ups.