How binary is longterm value?

By Vasco Grilo🔸 @ 2022-11-01T15:21 (+13)

Knowing the shape of future (longterm) value appears to be important to decide which interventions would more effectively increase it. For example, if future value is roughly binary, the increase in its value is directly proportional to the decrease in the likelihood/severity of the worst outcomes, in which case existential risk reduction seems particularly useful[1]. On the other hand, if value is roughly uniform, focussing on multiple types of trajectory changes would arguably make more sense[2].

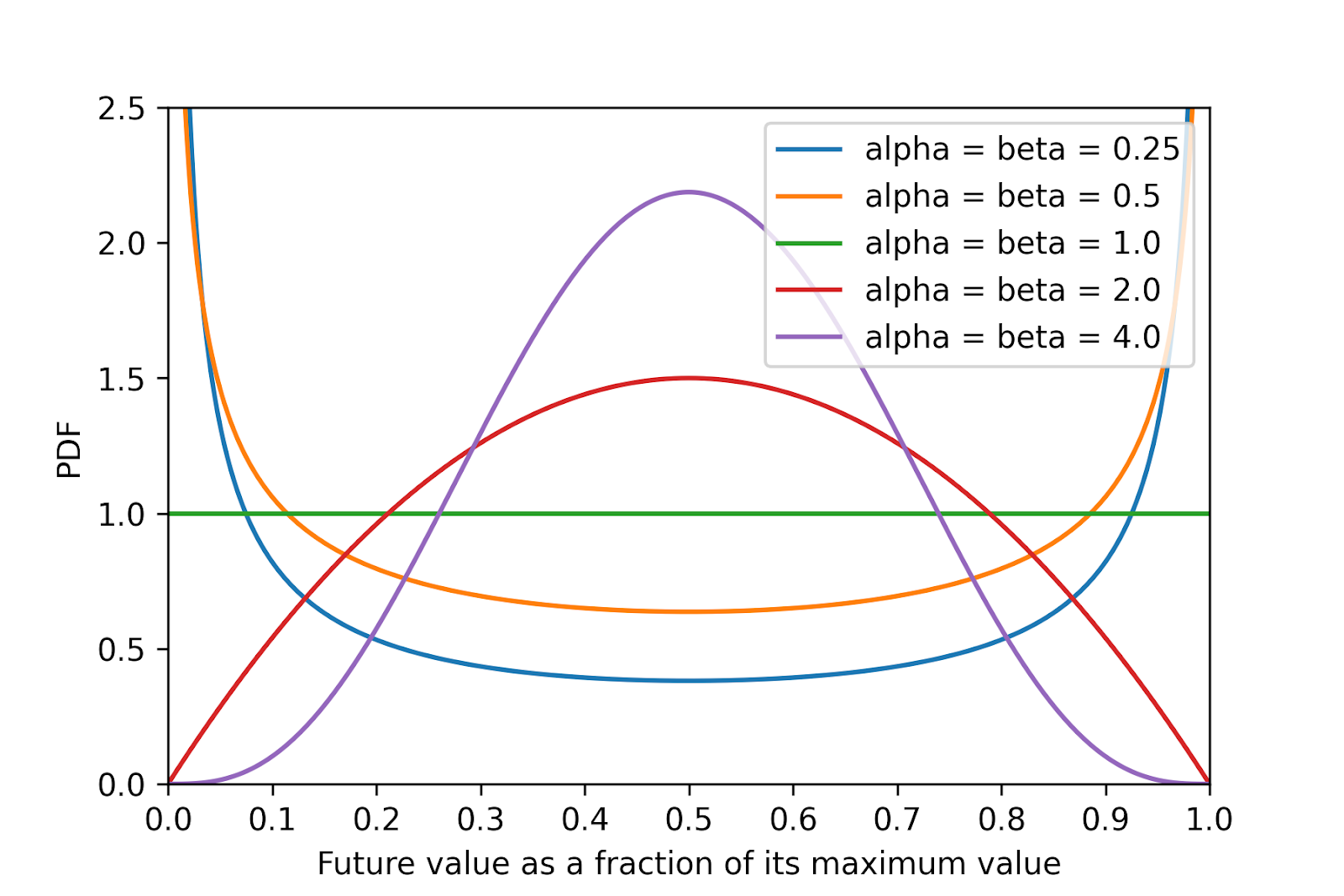

So I wonder what the shape of future value is. To illustrate the question, I have plotted in the figure below the probability density function (PDF) of various beta distributions representing the future value as a fraction of its maximum value[3].

For simplicity, I have assumed future value cannot be negative. The mean is 0.5 for all distributions, which is Toby Ord’s guess for the total existential risk given in The Precipice[4], and implies the distribution parameters alpha and beta have the same value[5]. As this common parameter value tends to 0, the probability mass concentrates near 0 and 1, so future value becomes more binary.
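As a rough illustration of the last point, the sketch below estimates how much probability mass a symmetric beta distribution puts near the two extremes. It is not the code from the Colab: the function names, the 0.1 width, the sample size and the parameter values are all assumptions chosen for illustration, and the tail mass is a Monte Carlo estimate rather than an exact figure.

```python
import random


def symmetric_beta_variance(a: float) -> float:
    """Variance of Beta(a, a), which simplifies to 1 / (4 * (2a + 1))."""
    return 1.0 / (4.0 * (2.0 * a + 1.0))


def extreme_mass(a: float, width: float = 0.1, n: int = 20_000, seed: int = 0) -> float:
    """Monte Carlo estimate of P(X < width or X > 1 - width) for X ~ Beta(a, a)."""
    rng = random.Random(seed)  # fixed seed so the estimate is reproducible
    hits = 0
    for _ in range(n):
        x = rng.betavariate(a, a)
        if x < width or x > 1.0 - width:
            hits += 1
    return hits / n


# Illustrative parameter values (assumptions, not the ones used in the post's figure).
for a in (0.1, 0.5, 1.0, 5.0):
    print(
        f"alpha = beta = {a}: variance = {symmetric_beta_variance(a):.3f}, "
        f"mass within 0.1 of an extreme = {extreme_mass(a):.3f}"
    )
```

The variance approaches 0.25 as the parameter tends to 0, which is the variance of a coin flip between 0 and 1, while alpha = beta = 1 recovers the uniform distribution.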

- ^

Existential risk was originally defined in Bostrom 2002 as:

One where an adverse outcome would either annihilate Earth-originating intelligent life or permanently and drastically curtail its potential.

- ^

Although trajectory changes encompass existential risk reduction.

- ^

The calculations are in this Colab.

- ^

If forced to guess, I’d say there is something like a one in two chance that humanity avoids every existential catastrophe and eventually fulfills its potential: achieving something close to the best future open to us.

- ^

According to Wikipedia, the expected value of a beta distribution is alpha / (alpha + beta), which equals 0.5 for alpha = beta.

WilliamKiely @ 2022-11-01T18:42 (+9)

I think longterm value is quite binary in expectation.

I think a useful starting point is to ask: how many orders of magnitude does value span?

If we use the life of one happy individual today as one unit of goodness, then I think maximum value (originating from Earth in the next billion years, which is probably several orders of magnitude low) is at least around 10^50 units of goodness per century.

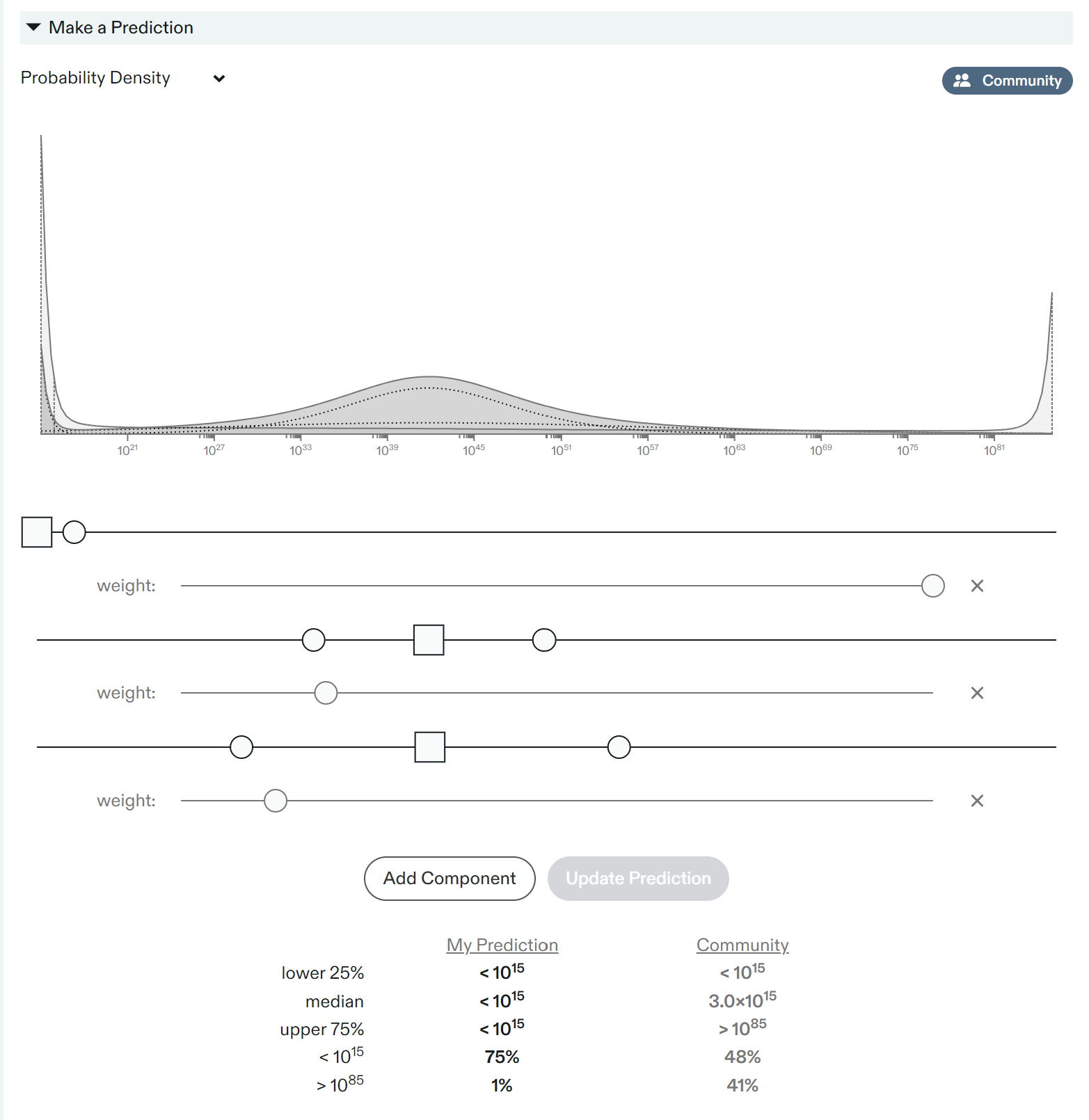

My forecast on this Metaculus question reflects this: Highest GWP in the next Billion Years:

My current forecast:

How I got 10^50 units of goodness (i.e. happy current people) per century from my forecast

I converted 10^42 trillion USD to 10^50 happy people by saying today's economy is composed of about 10^10 happy people, and such a future economy would be about 10^40 times larger than today's economy. If happy people today live 100 years, that gives 10^50 units of goodness per century as the optimal future a century from now.
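The conversion above can be checked with a few lines of exponent arithmetic. This is only a sketch: the variable names are made up for illustration, and the figure of roughly 10^2 trillion USD for today's GWP is an assumption that makes the "10^40 times larger" ratio come out.

```python
# All quantities are base-10 exponents, so multiplication becomes addition.
max_gwp_exp = 42        # forecast maximum GWP: 10^42 trillion USD (from the comment)
today_gwp_exp = 2       # ASSUMPTION: today's GWP is roughly 10^2 trillion USD
people_today_exp = 10   # ~10^10 happy people in today's economy (from the comment)

# How many times larger the future economy is than today's: 10^(42 - 2).
growth_exp = max_gwp_exp - today_gwp_exp

# Scale today's population by the same factor, assuming value per unit of GWP
# stays constant (the comment itself flags this assumption as dubious).
happy_people_exp = people_today_exp + growth_exp

print(f"future economy is ~10^{growth_exp} times larger than today's")
print(f"equivalent to ~10^{happy_people_exp} happy 100-year lives per century")
```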

I also of course assumed the relationship between GWP and value of people stays constant, which of course is highly dubious, but that's also how I came up with my dubious forecast for future GWP.

Is Value roughly Binary?

Yes, I think. My forecast of maximum GWP in the next billion years (and thus my forecast of maximum value from Earth-originating life in the next billion years) appears to be roughly binary. I have a lot of weight around what I think the maximum value is (~10^42 trillion 2020 USD), and then a lot of weight on <10^15 trillion 2020 USD (i.e. near zero), but much less weight on the orders of magnitude in between. If you plot this on a linear scale, I think the value looks even more binary. If my x-axis was not actual value, but fraction of whatever the true maximum possible value is, it would look even more binary (since the vast majority of my uncertainty near the top end would go away).

Note that this answer doesn't explain why my maximum GWP forecast has the shape it does, so it doesn't actually make the case for the answer much; it just reports what I believe. Rather than explain why my GWP forecast has the shape it does, I'd invite anyone who doesn't think value is binary to show their corresponding forecast for maximum GWP (one that implies value is not binary) and explain why it looks like that. Given that potential value spans so many orders of magnitude, I think it's quite hard to make a plausible-seeming forecast in which value is not approximately binary.

Charlie_Guthmann @ 2022-11-02T05:30 (+4)

Slightly pedantic note, but shouldn’t the Metaculus GWP question be phrased as the GWP in our lightcone? We can’t reach most of the universe, so unless I’m misunderstanding, this would become a question about aliens and stuff that is completely unrelated to, and out of the control of, humans.

Also somewhat confused about what money even means when you have complete control of all the matter in the universe. Is the idea to translate our levels of production into what they would be valued at today? Do people today value billions of sentient digital minds? Not saying this isn’t useful to think about, but just trying to wrap my head around it.

WilliamKiely @ 2022-11-02T06:52 (+3)

Good questions. Re the first, from memory I believe I required that the economic activity be causally the result of Earth's economy today, so that rules out alien economies from consideration.

Re the second, I think it's complicated and the notion of gross product may break down at some point. I don't have some very clear answer for you other than that I could think of no better metric to measure the size of the future economy's output than GWP. (I'm not an economist.)

Robin Hanson's post "The Limits of Growth" may be useful for understanding how to compare potential future economies of immense size to today's economy. IIRC he makes comparisons using consumers today having some small probability of achieving something that could be had in the very large future economy. (He's an economist. In short, I'd ask the economists, rather than me, for help with interpreting what GWP means in far-future contexts.)

Vasco Grilo @ 2022-11-02T11:25 (+1)

Thanks for sharing!

Given that potential value spans so many orders of magnitude, I think it's quite hard to make a plausible-seeming forecast in which value is not approximately binary.

In theory, it seems possible to have future value span lots of orders of magnitude while not being binary. For example, one could have a lognormal/loguniform distribution with median close to 10^15, and 95th percentile around 10^42. Even if one thinks there is a hard upper bound, there is the possibility of selecting a truncated lognormal/loguniform (I guess not in Metaculus).
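To make the lognormal example concrete, the sketch below backs out the spread implied by a median of 10^15 and a 95th percentile of 10^42, working in log10 space where a lognormal is just a normal distribution. The variable names are illustrative assumptions, and no truncation is applied.

```python
from statistics import NormalDist

median_exp = 15.0  # log10 of the median, from the example above
p95_exp = 42.0     # log10 of the 95th percentile, from the example above

# For a normal distribution, the 95th percentile sits z95 standard deviations
# above the median, so the implied standard deviation (in orders of magnitude) is:
z95 = NormalDist().inv_cdf(0.95)
sigma_exp = (p95_exp - median_exp) / z95

# Distribution of log10(value) under these assumptions.
log10_value = NormalDist(mu=median_exp, sigma=sigma_exp)

print(f"implied spread: {sigma_exp:.1f} orders of magnitude")
for q in (0.05, 0.25, 0.5, 0.75, 0.95):
    print(f"{q:.0%} percentile: 10^{log10_value.inv_cdf(q):.1f}")
```

Under these assumptions the distribution is spread smoothly across dozens of orders of magnitude on a log scale, rather than piling up at two ends.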

Zach Stein-Perlman @ 2022-11-01T16:11 (+9)

I think my post "Value is binary" is relevant.

Charlie_Guthmann @ 2022-11-01T17:56 (+8)

I have substantial credence that the future is at least 99% as good as the optimal future.[3] I do not claim much certainty about what the optimal future looks like — my baseline assumption is that it involves increasing and improving consciousness in the universe, but I have little idea whether that would look like many very small minds or a few very big minds

Overall, I don't really agree with the above quote, depending on what you mean by substantial (edit: it's also possible I just don't see the above statement as well defined, as I don't presuppose there is a true "value"). The tl;dr of my disagreement is that (1) superintelligence doesn't automatically mean there won't be (internal and interstellar) political struggles, (2) I don't think superintelligence will "solve ethics", because I don't believe there is a correct solution, and (3) we would need to do everything very quickly to hit the 99% mark.

I'm gonna talk below under the assumption that value = total utility (with an existing weighting map or rule and a clear definition of what utility is) just for the purpose of clarity, but I don't think it would matter if you substituted another definition for value.

In order to get 99% of the potential utility:

- One society probably needs to control its entire lightcone. They (who is they? next bullet point) also need to have total utilitarian values. If they don't control the lightcone, then all other societies in the lightcone also need to have similar total utilitarian values to get to this 99%. For this to be the case there would need to be strong convergent CEVs, but I don't see why this is at all obvious. If they don't have utilitarian values then of course it seems extremely unlikely that they are going to create an optimal world.

- This dominant society needs to have complete agreement on what value is. Let's assume that a single society rules the entire light cone and is ASI boosted. We still need all the sub agents (or the people who control the resources) to agree on total utilitarianism. Disagreement with each other could cause infighting or just a split value system. So unless you then accept the split value system is what value is, you have already lost way more than 1% of the value. If a single agent rules this entire society, how did they come to power, and why do we think they would especially care about maxing value or have the correct values?

- We would need to do all of this in less than ~1/100 of the time until the world ended. It's a bit more complicated than this but the intuition should be clear.
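The intuition in the last bullet can be sketched with a toy model. It rests on a strong simplifying assumption that is not in the comment: nothing of value accrues during the setup period, and value then accrues at a constant rate until the end. The function and parameter names are hypothetical.

```python
def fraction_of_value_captured(setup_time: float, total_time: float) -> float:
    """Share of maximum value obtained under the toy model.

    ASSUMPTION: zero value accrues during setup_time, then value accrues at a
    constant rate until total_time. Real dynamics are more complicated.
    """
    if total_time <= 0 or not 0 <= setup_time <= total_time:
        raise ValueError("need 0 <= setup_time <= total_time and total_time > 0")
    return (total_time - setup_time) / total_time


# Spending 1/100 of the available time on setup leaves exactly 99% of the value.
print(fraction_of_value_captured(setup_time=1.0, total_time=100.0))
# Spending any longer drops below the 99% mark.
print(fraction_of_value_captured(setup_time=2.0, total_time=100.0))
```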

Yeah, you can get around points 1 and 2 by just defining value as whatever the future people figure out with the help of AGI, but that seems somewhat uninteresting to me. If you are a moral realist then this is more interesting because it feels less tautological. At the same time, moral realism being true would not mean that any society + an AGI would agree on it. In fact I still think most wouldn't, although I have trouble conceiving of what it even means for a moral fact to be correct, so my intuitions may be heavily off.

Almost all of the remaining probability to near-zero futures.

This claim is bolder, I think. Even if it seems reasonable to expect a substantial fraction of possible futures to converge to near-optimal, it may seem odd to expect almost all of the rest to be near-zero. But I find it difficult to imagine any other futures.

This seems significantly less bold to me.

Zach Stein-Perlman @ 2022-11-03T23:06 (+2)

Briefly:

- Yeah, "optimal future" should mean something like "future where humanity acts optimally."

- Yeah, I expect strong coordination in the future.

- Yeah, I expect stuff to happen fast, on the astronomical scale.

Stefan_Schubert @ 2022-10-27T11:05 (+7)

For example, if future value is roughly binary, the increase in its value is directly proportional to the decrease in the likelihood/severity of the worst outcomes, in which case existential risk reduction seems particularly useful. On the other hand, if value is roughly uniform, focussing on trajectory changes would make more sense.

That depends on what you mean by "existential risk" and "trajectory change". Consider a value system that says that future value is roughly binary, but that we would end up near the bottom of our maximum potential value if we failed to colonise space. Proponents of that view could find advocacy for space colonisation useful, and some might find it more natural to view that as a kind of trajectory change. Unfortunately it seems that there's no complete consensus on how to define these central terms. (For what it's worth, the linked article on trajectory change seems to define existential risk reduction as a kind of trajectory change.)

WilliamKiely @ 2022-11-01T18:19 (+3)

(For what it's worth, the linked article on trajectory change seems to define existential risk reduction as a kind of trajectory change.)

FWIW I take issue with that definition, as I just commented in the discussion of that wiki page here.

Vasco Grilo @ 2022-10-27T11:15 (+1)

I would agree existential risk reduction is a type of trajectory change (as I mentioned in this footnote). That being said, depending on the shape of future value, one may want to focus on some particular types of trajectory changes (e.g. x-risk reduction). To clarify, I have added "multiple types of " before "trajectory changes".

Stefan_Schubert @ 2022-10-27T11:25 (+5)

I don't think that change makes much difference.

It could be better to be more specific - e.g. to talk about value changes, human extinction, civilisational collapse, etc. Your framing may make it appear as if a binary distribution entails that, e.g. value change interventions have a low impact, and I don't think that's the case.

Vasco Grilo @ 2022-10-28T04:54 (+1)

In my view, we should not assume that value change interventions are not an effective way of reducing existential risk, so they may still be worth pursuing if future value is binary.

Charlie_Guthmann @ 2022-10-27T17:52 (+3)

I have two thoughts here.

First, I'm not sure I like Bostrom's definition of x-risk. It seems to dismiss the notion of aliens. You could imagine a scenario with a ton of independently popping up alien civilizations being very uniform, regardless of what we do. Second, I think the binaryness of our universe is going to be dependent on the AI we make and/or our expansion philosophy.

AI 1: Flies around the universe dropping single-celled organisms on every livable planet.

AI 2: Flies around the universe setting up colonies that suck up all the energy in the area and convert it into simulations/digital people.

If AI 2 expands through the universe, then the valence of sentience in our lightcone would seemingly be much more correlated than if AI 1 expands. So the AI 1 scenario would look more uniform and the AI 2 scenario would look more binary.

WilliamKiely @ 2022-11-01T18:40 (+2)

[Moved to an answer instead of a comment.]

Vasco Grilo @ 2022-11-01T16:23 (+1)

Quick disclaimer: I had already published this some days ago, but accidentally republished it on the front page. I moved the post to my drafts, and, when I published it after unchecking the front page box, it still appeared on the front page. I may be missing something.