My Skeptical Opinion on the Repugnant Conclusion

By Ozzie Gooen @ 2024-05-27T22:56 (+16)

Epistemic/Research Status

I'm not very well-read in population ethics. You can view this as a quick opinion piece. I know that points similar to mine have been made before in the literature, but I thought it could still be valuable to provide my personal take. I wrote this mainly because I keep hearing the Repugnant Conclusion brought up as an attack on what I see as very basic decision-making, and I wanted to better organize my thoughts on the matter. I used Claude to help rewrite this.

Summary

The Repugnant Conclusion has been a topic of frequent discussion and debate within the Effective Altruism (EA) community and beyond, with numerous dedicated posts on the EA Forum. It has often been used to cast doubt on what I believe are straightforward, fundamental principles for making trade-offs. However, I argue that the perceived "repugnancy" of the Repugnant Conclusion is often a result of either poorly chosen utility function parameters or misunderstandings about how simple utility functions work.

In my view, the undesirability associated with the Repugnant Conclusion is not inherent to the concept itself but rather arises from our intuitive discomfort with certain extreme scenarios.

Instead of dedicating significant effort to radical approaches designed to circumvent the Repugnant Conclusion, I suggest that the EA community and the wider intellectual community should focus on estimating realistic utility functions, leveraging the knowledge and methods found in economics and health science. I have been mostly unimpressed with what I've read of the debate on this topic within population ethics, and much more impressed by discussions of related topics within formal science and engineering.

A Dialogue

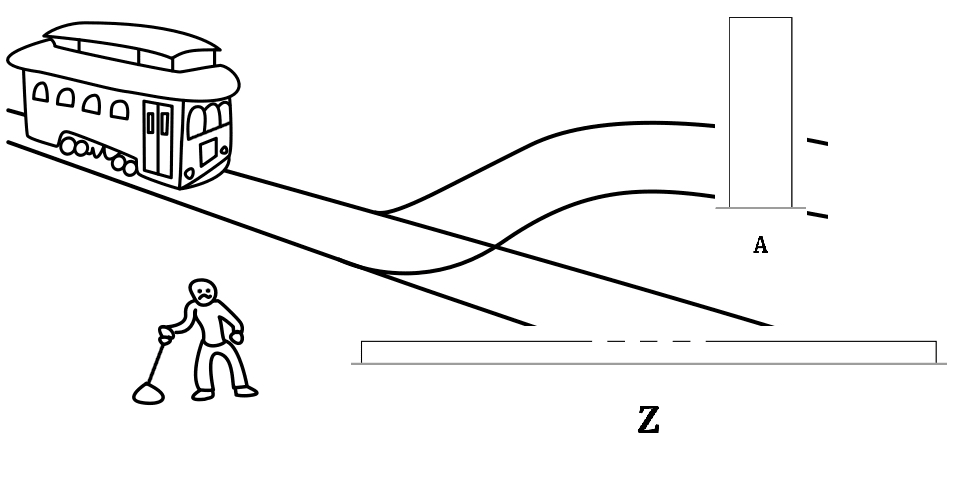

Alice: "So, here's a choice. We could either (A) create 10 humans that are happy millionaires, or (B) 100 humans that are pretty happy, but not quite as happy as the millionaires."

Bob: "Weird question, but sure. I'll take the 100 humans."

Alice: "That works. Now consider a new option, (C). Instead, we can make 1,000 humans that are fairly happy. Say, these are middle-income, decent lives, but not much fame. Maybe they would rate their lives at an 8.1/10, instead of an 8.5/10."

Bob: "That still sounds preferable. I'll go for the 1,000 humans then."

Alice: "Great. New question. We can actually go all the way to option (D), to create 1 million humans. These will be in mild poverty this time, to preserve resources. Their lives are fairly plain. They would rate their lives an average of 6.2/10. They would still prefer to live, but only by a slim margin."

Bob: "This sounds less great, but based on my intuitions and some math, I think it's still worthwhile."

…some time passes…

Bob: "You know, I actually hate this last option. It seems clearly worse than the previous ones."

Alice: "Okay, do you want to then choose one of the previous ones?"

Bob: "No."

Alice: "Um… so what do you want to do? Do you want to reconsider the specifics of how you should trade off population quantity vs. quality?"

Bob: "No. My dissatisfaction with my final choice here demonstrates that the entire system of me choosing options is flawed. The very idea that I could make choices has led me to this last choice that I dislike, so I've learned that we shouldn't be allowed to make choices like this."

Alice: "We do need you to choose some option."

Bob: "No. Instead, I'll request that philosophers come up with entirely new ways of comparing options. I think that D is better than C, but I also think that D is worse than C. There must be a flaw in the very idea of deciding between options."

Alice: "But let's start again. Do you think that option B is still better than option A?"

Bob: "Yes, I'm still sure."

Alice: "And you think that option C is still better than option B?"

Bob: "Yes, I'm still sure."

Alice: "So can you then choose your favorite between C and D?"

Bob: "No. I feel like I'm pressured to choose D, but C seems much better to me. Instead of choosing one or thinking through these trade-offs directly, I think we need a completely new theory of how to make decisions. It's the very idea of preferring some populations over others in ways like this that's probably the problem."

My (Quick) Take:

The Repugnant Conclusion presents a paradox in population ethics that challenges our intuitions about quality vs. quantity of life. As a utilitarian, I think it's important to address these concerns, but I don't see any need to discard the framework of welfare trade-offs entirely, as some might suggest.

The Trade-Off Problem

Most people intuitively understand the need for trade-offs. For instance, consider the extreme yet analogous question:

“Would you prefer to save one person at a wellbeing level of 9.4/10 or 1,000,000 people at a wellbeing level of 9.38/10?”

The obvious solution is to save the larger number of people with slightly lower wellbeing. This illustrates that trade-offs can be made even when dealing with high stakes.
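Here is a minimal sketch of the arithmetic. It assumes a simple additive (total) utility in which each life contributes its wellbeing score minus a threshold for a life worth living. The additive form, the `threshold` parameter, and the helper name `total_utility` are illustrative assumptions; the post does not specify them.

```python
def total_utility(count: int, wellbeing: float, threshold: float = 0.0) -> float:
    """Hypothetical additive utility: each life adds (wellbeing - threshold)."""
    return count * (wellbeing - threshold)


# One person at 9.4/10 versus 1,000,000 people at 9.38/10,
# checked under several assumed thresholds for a life worth living.
for threshold in (0.0, 5.0, 9.0, 9.3):
    one = total_utility(1, 9.4, threshold)
    many = total_utility(1_000_000, 9.38, threshold)
    print(f"threshold={threshold}: larger group preferred? {many > one}")
```

Each line prints True. Under this sketch, the larger group loses only if the assumed threshold sits within a hair of 9.38, where each of its lives adds almost nothing.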

Handling the Extremes

If you find yourself at option D (1 million people with lives barely worth living) and it feels wrong, you can simply revert to option C (1,000 people with decent lives). The discomfort with extreme options doesn't invalidate the entire concept of welfare trade-offs. In real-world scenarios, extreme options are rarely the optimal choice.
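Use the same kind of additive sketch, again assuming a threshold `t` for a life worth living. Under it, whether D beats C is not fixed; it depends on where the threshold sits. Setting 1,000 × (8.1 - t) equal to 1,000,000 × (6.2 - t) and solving for t gives the break-even point. The function names below are hypothetical.

```python
def total_utility(count: int, wellbeing: float, threshold: float) -> float:
    """Hypothetical additive utility: each life contributes (wellbeing - threshold)."""
    return count * (wellbeing - threshold)


def breakeven_threshold(n_small: int, w_small: float, n_large: int, w_large: float) -> float:
    """Threshold t at which n_small * (w_small - t) == n_large * (w_large - t)."""
    return (n_large * w_large - n_small * w_small) / (n_large - n_small)


# Option C: 1,000 people at 8.1/10. Option D: 1,000,000 people at 6.2/10.
t_star = breakeven_threshold(1_000, 8.1, 1_000_000, 6.2)
print(f"D beats C only if the threshold is below {t_star:.3f}")  # 6.198

for t in (0.0, 5.0, 6.0, 6.2):
    c = total_utility(1_000, 8.1, t)
    d = total_utility(1_000_000, 6.2, t)
    print(f"threshold={t}: prefer {'D' if d > c else 'C'}")
```

With the assumed threshold at 0, 5, or 6, this prefers D. At 6.2 it prefers C. On this reading, the disagreement between C and D is largely a disagreement about where the baseline sits.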

The Utility Function

I think it is worthwhile to be precise about specific properties of the utility function we use to rank populations for decision-making. Note that there are many kinds of utility functions, so a "best-guess utility function made for this specific problem, to be used for decisions" doesn't need to have much at all to do with other utility functions, like one's "terminal utility function".

Key Specific Claims

- Monotonically Increasing Utility: The relationship between the number of happy people and total utility is monotonically increasing, if not strictly increasing, all other things being equal.

- Average Happiness and Utility: The relationship between average happiness and total utility is monotonically increasing, if not strictly increasing, all other things being equal.

- Threshold of Preferability: There exists a threshold of welfare below which bringing a human life into existence is not preferable. For example, if asked, “Would you prefer a human come into existence with endless pain at level X, all other things being equal?”, there is some level X for which we would say no.

- Axiom of Trade-offs: For any given level of individual welfare above the threshold of preferability, there exists some number of individuals at that level whose existence would be preferable to a single individual at a higher level of welfare, all else being equal.

- Extreme Conclusions: If certain conclusions seem odd at the extremes, it’s more likely that the specific parameters or intuitions are mistaken rather than the claims above.

Note that a von Neumann-Morgenstern utility function would imply the Axiom of Trade-offs (the fourth claim). I think that such a function is an easy thing to accept, though I know there are those who disagree.
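To make the Axiom of Trade-offs concrete, here is a minimal sketch. It assumes the simplest utility function consistent with the claims above: total utility as the sum of each person's wellbeing minus a fixed threshold of preferability. The additive form, the 0-10 scale, and the helper name `min_count_preferred` are illustrative assumptions; the post does not commit to a specific functional form.

```python
import math


def min_count_preferred(w_low: float, w_high: float, threshold: float) -> int:
    """Smallest n such that n lives at w_low outweigh one life at w_high.

    Assumes a hypothetical additive utility where each life contributes
    (wellbeing - threshold), and that w_low is above the threshold.
    """
    if w_low <= threshold:
        raise ValueError("w_low must be above the threshold of preferability")
    # n * (w_low - t) > (w_high - t)  <=>  n > ratio, so take the next integer.
    ratio = (w_high - threshold) / (w_low - threshold)
    return math.floor(ratio) + 1


# Lives at 6.2/10 versus one life at 8.1/10, under two assumed thresholds.
print(min_count_preferred(6.2, 8.1, threshold=0.0))  # 2
print(min_count_preferred(6.2, 8.1, threshold=6.0))  # 11
```

For any `w_low` above the threshold, the returned count is finite, so a function of this form satisfies the Axiom of Trade-offs. The closer `w_low` is to the threshold, the more lives it takes.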

Note: This is arguably just a weaker version of Arrhenius's impossibility theorems. I suggest reading about those if you're curious about this topic. Here's a summary, from Claude.

Arrhenius' Impossibility Theorem states that no population axiology can simultaneously satisfy the following six intuitively plausible desiderata:

- The Egalitarian Dominance Condition: If population A has higher welfare than population B at all levels, then A is better than B.

- The Dominance Addition Condition: Adding people with positive welfare is better than adding people with negative welfare.

- The Non-Sadism Condition: An addition of any number of people with negative welfare is worse than an addition of any number of people with positive welfare.

- The Mere Addition Condition: Adding people with positive welfare does not make a population worse, other things being equal.

- The Normalization Condition: If two populations are of the same size, the one with higher average welfare is better.

- The Avoidance of the Repugnant Conclusion: A large population with lives barely worth living is not better than a smaller population with very high positive welfare.

Arrhenius proves that these conditions are incompatible, meaning that any population axiology must violate at least one of them. This impossibility result poses a significant challenge to population ethics, as it suggests that our moral intuitions in this domain may be irreconcilable.

Other philosophers, such as Tyler Cowen and Derek Parfit, have also proposed similar impossibility theorems, each based on a slightly different set of moral principles.

Some Quick Takes on Population Ethics and Population Axiology

Some claim that the "Repugnant Conclusion" shows a need for fundamental changes in the way that we make trade-offs. I find this unlikely. We make decisions using simple von Neumann-Morgenstern utility-function trade-offs in dozens of industries, with lots of money and lives at stake, and I think we can continue to use those techniques here.

Again, I see the key question as one of where to set specific parameters, like the human baseline. This is a fairly contained question.

I think that a lot of the proposed "solutions" in Population Ethics are very unlikely to be true. They mostly seem so bad to me that I don't understand why they continue to be argued, for or against. I'm paranoid that Effective Altruists have spent too much time debating bad theories here.

See an overview of these proposals at the Stanford Encyclopedia of Philosophy, or in Population Axiology by Hilary Greaves.

- Averagism seems clearly false.

- Variable value principles seem very weird and unlikely. However, they could still fit within a fairly conventional utility function, so I won't complain too much.

- Critical level principle. "The idea is that a person’s life contributes positively to the value of a population only if the quality of the person’s life is above a certain positive critical level." The basic idea that there is some baseline makes sense to me. Again, I'm happy to discuss where exactly it is set. I would see this as a minor change, so minor that it seems almost, if not exactly, like arguing about semantics.

- Person-affecting theories. I find them unlikely, and I also don't think they address the actual "repugnant conclusion" question. One can just change "you can create population X" to statements like, "Imagine that population X exists, and you are asked about killing them."

- Rejections of transitivity. This seems very radical to me, and therefore unlikely. I understand this to be basically throwing away our ability to make many simple trade-offs, like choosing between options A and B above. I don't think we need an incredibly radical take that would require us to throw out lots of basic assumptions in economics in order to get around the basic weirdness of the Repugnant Conclusion.

- Accepting the impossibility of a satisfactory population ethics. One listed option seems to be to give up and assume that there's just a fundamental problem in ethics. From what I can tell, the basic argument is, "It seems very clear that the premises that lead to the Repugnant Conclusion are true. We also think that the Repugnant Conclusion represents a severe problem with morality." I agree that some form of the Repugnant Conclusion is likely to be the optimal decision, but I don't think it represents a severe problem at all.
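To show how some of these proposals differ in practice, here is a rough sketch. It scores the dialogue's options C and D under three simplified views from the list: averagism, a plain total view, and a critical-level view. The critical level of 6.5, the 0-10 scale, and all function names are arbitrary assumptions for illustration. Published versions of these theories are more nuanced.

```python
# Each population is a list of (count, wellbeing) groups on an assumed 0-10 scale.
Population = list[tuple[int, float]]


def average_view(pop: Population) -> float:
    """Averagism: value is mean wellbeing across everyone."""
    people = sum(n for n, _ in pop)
    return sum(n * w for n, w in pop) / people


def total_view(pop: Population) -> float:
    """Total view with the threshold at 0: value is summed wellbeing."""
    return sum(n * w for n, w in pop)


def critical_level_view(pop: Population, critical: float = 6.5) -> float:
    """Critical-level view: each life adds (wellbeing - critical); 6.5 is an assumption."""
    return sum(n * (w - critical) for n, w in pop)


option_c: Population = [(1_000, 8.1)]
option_d: Population = [(1_000_000, 6.2)]

views = [("average", average_view), ("total", total_view), ("critical-level", critical_level_view)]
for name, view in views:
    winner = "D" if view(option_d) > view(option_c) else "C"
    print(f"{name}: prefers {winner}")

# Averagism also says adding a clearly good life (8/10) to a population
# averaging 9/10 makes things worse, while the total view says it helps.
base: Population = [(10, 9.0)]
with_extra: Population = [(10, 9.0), (1, 8.0)]
print("average view: addition is better?", average_view(with_extra) > average_view(base))
print("total view: addition is better?", total_view(with_extra) > total_view(base))
```

The average and critical-level views prefer C here, while the total view prefers D. The last two lines illustrate one commonly cited objection to averagism: it can rank the addition of a good life as a loss.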

Perhaps worse than these are some solutions I hear from non-philosophers. I've seen many people think about the Repugnant Conclusion briefly and assume that the logical implication is to reject many of the basic premises of making decisions to maximize human welfare. To me, this follows the failure mode of replacing one unintuitive premise with a dramatically more unintuitive one.

Ben Millwood @ 2024-05-28T10:29 (+9)

I think some of what makes the repugnant conclusion counter-intuitive to some people might simply be that when they hear "life barely worth living" they think of a life that is quite unpleasant, and feel sad about lots of people living that life. This might either be:

- People actually thinking of a net-negative life, e.g. because people with net-negative lives often still want to live because of survival instincts and/or hope for improvement, so people are thinking of "a life barely worth suffering through in the hope that it gets better", which is not worth living for its own sake,

- People thinking of lives that are net positive, but are easily instinctively compared to lives that are better, causing them to seem sad / tragic as an outcome for what they fail to be, even though they are good for what they are.

If I'm right, then you may already see surprising results when people compare things like "a small population of people with lives barely worth living" and "a large population of people with lives barely worth living", which I expect people to be much more ambivalent about than "a small population of people with good lives" vs "a large population of people with good lives".

(Obviously it makes sense to feel less strongly about the former comparison, but I also think people will feel more negatively rather than just more indifferent towards it.)

(To be clear, I'm conjecturing reasons why someone might be biased / irrational in rejecting the repugnant conclusion, but I don't mean to imply that all rejections are of this kind. People may have other, more principled reasons as well / instead.)

MichaelStJules @ 2024-05-29T17:29 (+5)

> People actually thinking of a net-negative life, e.g. because people with net-negative lives often still want to live because of survival instincts and/or hope for improvement, so people are thinking of "a life barely worth suffering through in the hope that it gets better", which is not worth living for its own sake,

Ya, this would be denying the hypothetical. There may be ways to prevent this, though, by making descriptions of lives more explicit and suffering-free, like extremely short joyful lives, or happy animal lives, for animals with much narrower welfare ranges each.

> People thinking of lives that are net positive, but are easily instinctively compared to lives that are better, causing them to seem sad / tragic as an outcome for what they fail to be, even though they are good for what they are.

This could depend on what you (or they) mean by "net positive" here. Or, they may just have intuitions about "net positive" and other things that are incompatible with these instinctive comparisons to better lives, but that doesn't mean they should abandon the instinctive comparisons to better lives instead of abandoning their interpretation of net positive. It could be that their intuitions about "net positive" are the biased ones, or, more plausibly, in my view, there's no objective fact of the matter (denying moral realism).

Instinctively comparing to better lives doesn't seem necessarily biased or irrational (or no more so than any other intuitions). This could just be how people can compare outcomes. People with explicit person-affecting intuitions do something like this fairly explicitly. People who do so instinctively/implicitly may have (partially) person-affecting intuitions they haven't made explicit.

If we describe this as biased, I'd say all preferences and moral intuitions are biased. I think proving otherwise requires establishing a stance-independent moral fact, i.e. moral realism, relative to which we can assess bias. Every other view looks biased relative to a view that disagrees with it in some case. Those who accept the Repugnant Conclusion are biased relative to views rejecting it.

Ben Millwood @ 2024-05-29T18:08 (+4)

> Ya, this would be denying the hypothetical. There may be ways to prevent this, though, by making descriptions of lives more explicit and suffering-free, like extremely short joyful lives, or happy animal lives, for animals with much narrower welfare ranges each.

Yeah these are good ideas, although they come with their own complications. (A related thought experiment is how you feel about two short lives vs. one long life, with the same total lifetime and the same moment-to-moment quality of experience. I think they're equally valuable, but I sympathise with people finding this counterintuitive, especially as you subdivide further.)

> It could be that their intuitions about "net positive" are the biased ones, or, more plausibly, in my view, there's no objective fact of the matter (denying moral realism).

The sense in which I'd want to call the view I described "objectively" biased / irrational, is that it says "this state of affairs is undesirable because a better state is possible", but in fact the better state of affairs is not possible. Again, it's denying the hypothetical, but may be doing so implicitly or subconsciously. The error is not a moral error but an epistemic one, so I don't think you need moral realism.

MichaelStJules @ 2024-05-28T00:05 (+8)

> Person-affecting theories. I find them unlikely, and I also don't think they address the actual "repugnant conclusion" question. One can just change "you can create population X" to statements like, "Imagine that population X exists, and you are asked about killing them."

Killing people would be bad for them under some person-affecting accounts, and probably the kind that most people with person-affecting views hold: their lifetime aggregate welfares will be lower than otherwise (if they would have otherwise had good futures), or you'll frustrate preferences, and we can think either is bad even if they won't experience the deprivation or frustration.

I'm curious about what you mean by not addressing the actual question. Person-affecting views avoid making each of the tradeoffs in your sequence. But there's still a fundamental problem of aggregation, which doesn't go away by taking a person-affecting view, e.g. you can fix a huge population, and consider

- tiny benefits or harms, one each to a huge number of people vs

- large benefits or harms each to a much smaller set of people.

E.g. Scanlon's transmitter room,[1] Yudkowsky's torture vs dust specks, Spears and Budolfson, 2021. To avoid this, I think you'd need to take a view that gives up full aggregation, and so gives up either transitivity or the independence of irrelevant alternatives. (Person-affecting views also typically give up transitivity, the independence of irrelevant alternatives or completeness/full comparability.)

[1] Transmitter Room. The World Cup final is currently being played. Jones, a technician in the room containing the equipment that is causing the game’s worldwide television broadcast, has inadvertently come into contact with some exposed wires that are causing him very painful electric shocks. He is unable to extricate himself from his situation, but you can help him by turning off the machine with the exposed wires. Unfortunately, if you do this, then the World Cup broadcast will be shut down, and it won’t be able to be restarted for 10 minutes.

Ozzie Gooen @ 2024-05-28T00:55 (+4)

> (Person-affecting views also typically give up transitivity, the independence of irrelevant alternatives or completeness/full comparability.)

These views seem quite strange to me. I'd be curious to understand who these people are that believe this. Are these views common among groups of Effective Altruists, or philosophers, or perhaps other groups?

MichaelStJules @ 2024-05-28T03:27 (+8)

I'd guess person-affecting intuitions are common (like at least a substantial minority), including among EAs, but I'd also guess most people with them don't have very specific views worked out, and working out person-affecting theories that are intuitive even to those with person-affecting intuitions seems hard, e.g. my post here and this one (although see also responses in the comments). It's probably easier for some than others, depending on their other intuitions.

Person-affecting intuitions and views are probably more common among people with more contractualist (e.g. Finneron-Burns, 2017) or Kantian leanings.

A couple posts defending person-affecting intuitions, mostly the procreation asymmetry,[1] have been well-received on the EA Forum:

- Critique of MacAskill’s “Is It Good to Make Happy People?” by Magnus Vinding (high karma, many votes)

- Population Ethics Without Axiology: A Framework by Lukas_Gloor (one of the top prize winners for the EA Criticism and Red Teaming Contest)

Also some discussion in Confused about "making people happy" vs. "making happy people" and the comments.

I would say I have asymmetric person-affecting views. This post kind of describes where I'm at, ignoring the asymmetry. (And I'm working on another post.)

[1] Although the procreation asymmetry is compatible with negative utilitarianism, which isn't really person-affecting at all, and doesn't violate transitivity, IIA or completeness.

Ozzie Gooen @ 2024-05-28T00:46 (+4)

> I'm curious about what you mean by not addressing the actual question.

I just meant that my impression was that person-affecting views seem fairly orthogonal to the Repugnant Conclusion specifically. I imagine that many person-affecting believers would agree with this. Or, I assume that it's very possible to do any combination of [strongly care about the repugnant conclusion] | [not care about it], and [have person-affecting views] and [not have them].

The (very briefly explained) example I mentioned is meant as something like,

Say there's a trolley problem. You could either accept scenario (A): 100 people with happy lives are saved, or (B) 10000 people with sort of decent lives are saved.

My guess was that this would still be an issue in many person-affecting views (I might well be wrong here though, feel free to correct me!). To me, this question is functionally equivalent to the Repugnant Conclusion.

Your examples with aggregation also seem very similar.

David Mathers @ 2024-05-28T08:29 (+5)

Just a guess, but I think many people who reject the repugnant conclusion in its original form would be happy to save far more people with less good but positive lives, over fewer people with better lives. Recall the recent piece on bioethicists where lots of them don't even think you have more reason to save the life of a 20-year-old than a 70-year-old. Or consider how offensive it is to say "let's save the lives of people in rich countries, all things being equal, because their lives will likely contain less suffering". In general, people seem to reject the idea that the size of the benefit conferred on someone by saving their life affects how strong the reason to save their life is, so long as their remaining life will be net positive and something like a "normal" human life, and isn't ludicrously short. (Note: I'm not defending this position; I think you should obviously save a 20-year-old over a 70-year-old because the benefit to them is so much larger.)

On the other hand, most of these people would probably save a few humans over many more animals, which is kind of like rejecting the repugnant conclusion in a life-saving rather than life-creating context.

Lukas_Gloor @ 2024-05-28T12:30 (+4)

> I just meant that my impression was that person-affecting views seem fairly orthogonal to the Repugnant Conclusion specifically. I imagine that many person-affecting believers would agree with this. Or, I assume that it's very possible to do any combination of [strongly care about the repugnant conclusion] | [not care about it], and [have person-affecting views] and [not have them].

> The (very briefly explained) example I mentioned is meant as something like,

> Say there's a trolley problem. You could either accept scenario (A): 100 people with happy lives are saved, or (B) 10000 people with sort of decent lives are saved.

> My guess was that this would still be an issue in many person-affecting views (I might well be wrong here though, feel free to correct me!). To me, this question is functionally equivalent to the Repugnant Conclusion.

I'm pretty confident you're wrong about this. (Edit: I mean, you're right if you call it "repugnant conclusion" whenever we talk about choosing between a small very happy population and a sufficiently larger less happy one; however, my point is that it's no coincidence that people most often object to favoring the larger population over the smaller one in contexts of population ethics, i.e., when the populations are not already both in existence.)

I've talked to a lot of suffering-focused EAs. Of the people who feel strongly about rejecting the repugnant conclusion in population ethics, at best only half feel that aggregation is altogether questionable. More importantly, even among those who feel that aggregation is altogether questionable, I'm pretty sure that's a separate intuition for them (and it's only triggered when we compare something as mild as dust specks to extremes like torture). Meaning, they might feel weird about "torture vs dust specks," but they'll be perfectly okay with "there comes a point where letting a trolley run over a small paradise is better than letting it run over a sufficiently larger population of less happy (but still overall happy) people on the other track." By contrast, the impetus of their reaction to the original repugnant conclusion comes from the following. When they hear a description of "small-ish population with very high happiness," their intuition goes "hmm, that sounds pretty optimal," so they're not interested in adding costs just to add more happiness moments (or net happy lives) to the total.

To pass the Ideological Turing test for most people who don't want to accept the repugnant conclusion, you IMO have to engage with the intuition that it isn't morally important to create new happy people. (This is also what person-affecting views try to build on.)

I haven't done explicit surveys of this, but I'm still really confident that I'm right about this being what non-totalists in population ethics base their views on, and I find it strange that pretty much* every time totalists discuss the repugnant conclusion, they don't seem to see this.

(For instance, I've pointed this out here on the EA forum at least once to Gregory Lewis and Richard Yetter-Chappell (so you're in good company, but what is going on?))

*For an exception, this post by Joe Carlsmith doesn't mention the repugnant conclusion directly, but it engages with what I consider to be more crux-y arguments and viewpoints in relation to it.

Ozzie Gooen @ 2024-05-29T18:41 (+4)

Thanks for that explanation.

>I've talked to a lot of suffering-focused EAs. Of the people who feel strongly about rejecting the repugnant conclusion in population ethics, at best only half feel that aggregation is altogether questionable.

I think this is basically agreeing with my point on "person-affecting views seem fairly orthogonal to the Repugnant Conclusion specifically", in that it's possible to have any combination.

That said, you do make it sound like suffering-focused people have a lot of thoughtful and specific views on this topic.

My naive guess would have been that many suffering-focused total utilitarians would simply have a far higher bar for what the utility baseline is than, say, classical total utilitarians. So in some cases, perhaps they would consider most groups of "a few people living 'positive' lives" to still be net-suffering, and would therefore just straightforwardly prefer many options with fewer people. But I'd also assume that in this theory, the repugnant conclusion would basically not be an issue anyway.

I realize that this wasn't clear in my post, but when I wrote it, it wasn't with suffering-focused people in mind. My impression is that the vast majority of people worried about the Repugnant Conclusion are not suffering focused, and would have different thoughts on this topic and counterarguments. I think I'm fine not arguing against the suffering-focused people on this topic, like the ones you've mentioned, because it seems like they're presenting different arguments than the main ones I disagree with.

Charlie_Guthmann @ 2024-05-29T22:04 (+5)

There's been lots of discussion about people misinterpreting what "barely worth living" means on the individual utility axis, which I do think could be part of the issue. I also believe that there is a hidden assumption most people make here: that all the lives barely worth living are "similar" (or alternatively: it's easier to imagine 10000 people being similar than 10). I think there is another dimension in population utility functions that most people care about: the diversity of the world. It's easy to imagine the very good lives as a variety of distinct lives and the millions of just barely good lives all being the same sort of potato farmer. If you clarified that all of the barely worth living lives were extremely different, and gave people time to reflect on and believe that it is possible for 1 million lives to be very different, I wonder if many people would feel the conclusion is less repugnant. Whether diversity should be an input to your population utility function is a separate question.

Also, I found your post about utility functions to be helpful, thanks.

lincolnq @ 2024-05-28T07:30 (+4)

One of the things that seems intuitively repugnant is the idea of "lives barely worth living". The word barely is doing a lot of work in driving my intuition of repugnancy, since a life "barely" worth living seems to imply some level of fragility -- "if something goes even slightly wrong, then that life is no longer worth living".

I think this may simply be a marketing problem though. Could we use some variation of "middle class"? This is essentially the standard of living that developed-world politics accepts as both sustainable and achievable, and sounds a lot nicer than "lives barely worth living". In reality, even lives "barely worth living" will build in a margin of individual slack to avoid the problem of individual fragility which has huge negative societal consequences, so it will probably seem much closer to middle-class than our current impression of poverty anyway.

MichaelStJules @ 2024-05-28T15:50 (+3)

What if you consider a population of beings with much narrower (expected) welfare ranges or much shorter lives? How many nematodes eating their preferred foods are better than a population of a billion flourishing humans?

See also Sebo, 2023 https://doi.org/10.1080/21550085.2023.2200724

Or, you could imagine if humans had extremely short lives, but joyful nonetheless, and in huge numbers.

ThePlanetaryNinja108 @ 2024-06-06T16:33 (+3)

A lot of people (including me) lean towards empty individualism.

From an empty individualistic perspective, there is no difference between creating 1 billion people who experience 100 years of bliss and creating 100 billion people who experience 1 year of bliss.

So that version of the RC is easy to bite the bullet on.

I am a negative utilitarian so both would be neutral, in my opinion.

Ben Millwood @ 2024-05-28T10:25 (+3)

Not sure what you're getting at here -- the whole point of the repugnant conclusion is that the people in the large branch have lives barely worth living. If you replace those people with people whose lives are a generous margin above the threshold, it's not the same question anymore.

Lukas_Gloor @ 2024-05-28T13:12 (+4)

My guess is that, even then, there'll be a lot of people for whom it remains counterintuitive. (People may no longer use the strong word "repugnant" to describe it, but I think many will still find it counterintuitive.)

Which would support my point that many people find the repugnant conclusion counterintuitive not (just) because of aggregation concerns, but also because they have the intuition that adding new people doesn't make things better.

SummaryBot @ 2024-05-28T14:09 (+1)

Executive summary: The perceived repugnancy of the Repugnant Conclusion in population ethics is often due to poorly chosen utility function parameters or misunderstandings, and the focus should be on estimating realistic utility functions using knowledge from economics and health science rather than seeking radical approaches to circumvent it.

Key points:

- The undesirability of the Repugnant Conclusion arises from intuitive discomfort with extreme scenarios, not inherent flaws in the concept.

- The author argues for a utility function with monotonically increasing relationships between total utility and both population size and average happiness, a threshold for a life worth living, and the existence of welfare tradeoffs.

- If conclusions seem odd at extremes, it's more likely due to mistaken parameters or intuitions than fundamental issues with the utility function.

- The author finds many proposed "solutions" in population ethics, such as averagism, person-affecting theories, and rejecting transitivity, to be unlikely or to not actually address the Repugnant Conclusion.

- The author disagrees with the view that the Repugnant Conclusion represents a severe problem for ethics and decision-making.

This comment was auto-generated by the EA Forum Team. Feel free to point out issues with this summary by replying to the comment, and contact us if you have feedback.