A Critical Review of Open Philanthropy’s Bet On Criminal Justice Reform

By NunoSempere @ 2022-06-16T16:40

Epistemic status: Dwelling on the negatives.

Hello AstralCodexTen readers! You might also enjoy this series on estimating value, or my forecasting newsletter. And for the estimation language used throughout this post, see Squiggle.—Nuño.

Summary

From 2013 to 2021, Open Philanthropy donated $200M to criminal justice reform. My best guess is that, from a utilitarian perspective, this was likely suboptimal. In particular, I am fairly sure that it was possible to realize sooner that the area was unpromising, and to act on that earlier.

In this post, I first present the background for Open Philanthropy's grants on criminal justice reform, and the abstract case for considering it a priority. I then estimate that criminal justice grants were distinctly worse than other grants in the global health and development portfolio, such as those to GiveDirectly or AMF.

I speculate about why Open Philanthropy donated to criminal justice in the first place, and why it continued donating. I end up uncertain about the extent to which this was a sincere play based on considerations around the value of information and learning, and the extent to which it was determined by other factors, such as the idiosyncratic preferences of Open Philanthropy's funders, human fallibility and slowness, paying too much to avoid social awkwardness, “worldview diversification” being an imperfect framework imperfectly applied, or the difficulty of maintaining a balance between conventional morality and expected utility maximization. In short, I started out skeptical that a utilitarian, left alone, would spontaneously start exploring criminal justice reform in the US as a cause area, and to some degree I still hold that view after further investigation, though with significant uncertainty.

I then outline my updates about Open Philanthropy. Personally, I updated downwards on Open Philanthropy’s decision speed, rationality and degree of openness, from a very high starting point. I also provide a shallow analysis of Open Philanthropy’s worldview diversification strategy and suggest that they move to a model where regular rebalancing roughly equalizes the marginal expected values of the grants in each cause area. Open Philanthropy already does this within its global health and development portfolio.

Lastly, I brainstorm some mechanisms which could have accelerated and improved Open Philanthropy's decision-making and suggest red teams and monetary bets or prediction markets as potential avenues of investigation.

Throughout this piece, my focus is aimed at thinking clearly and expressing myself clearly. I understand that this might come across as impolite or unduly harsh. However, I think that providing uncertain and perhaps flawed criticism is still worth it, in expectation. I would like to note that I still respect Open Philanthropy and think that it’s one of the best philanthropic organizations around.

Open Philanthropy staff reviewed this post prior to publication.

Index

- Background information

- What is the case for Criminal Justice Reform?

- What is the cost-effectiveness of criminal justice grants?

- Why did Open Philanthropy donate to criminal justice in the first place?

- Why did Open Philanthropy keep donating to criminal justice?

- What conclusions can we reach from this?

- Systems that could have optimized Open Philanthropy’s impact

- Conclusion

Background information

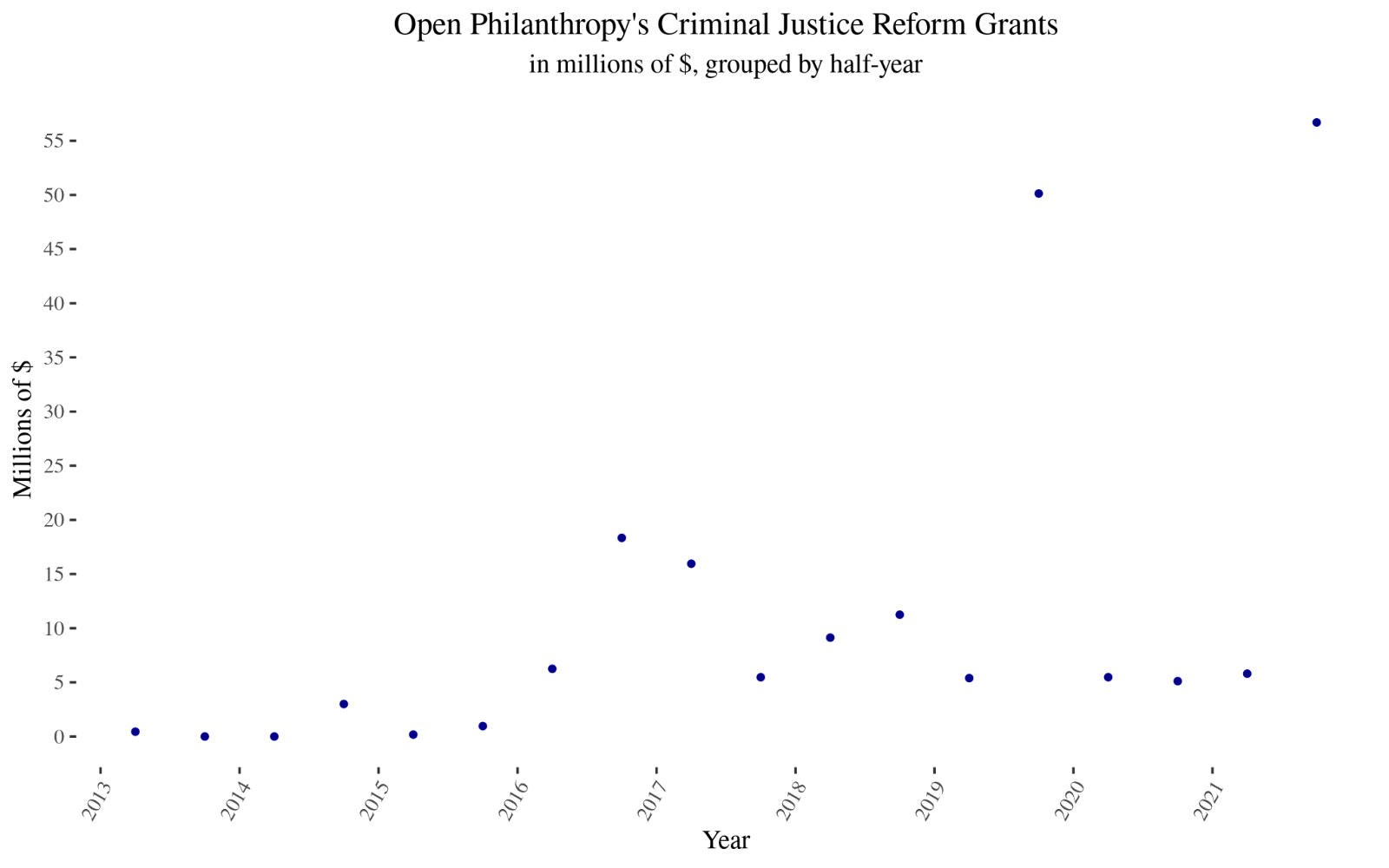

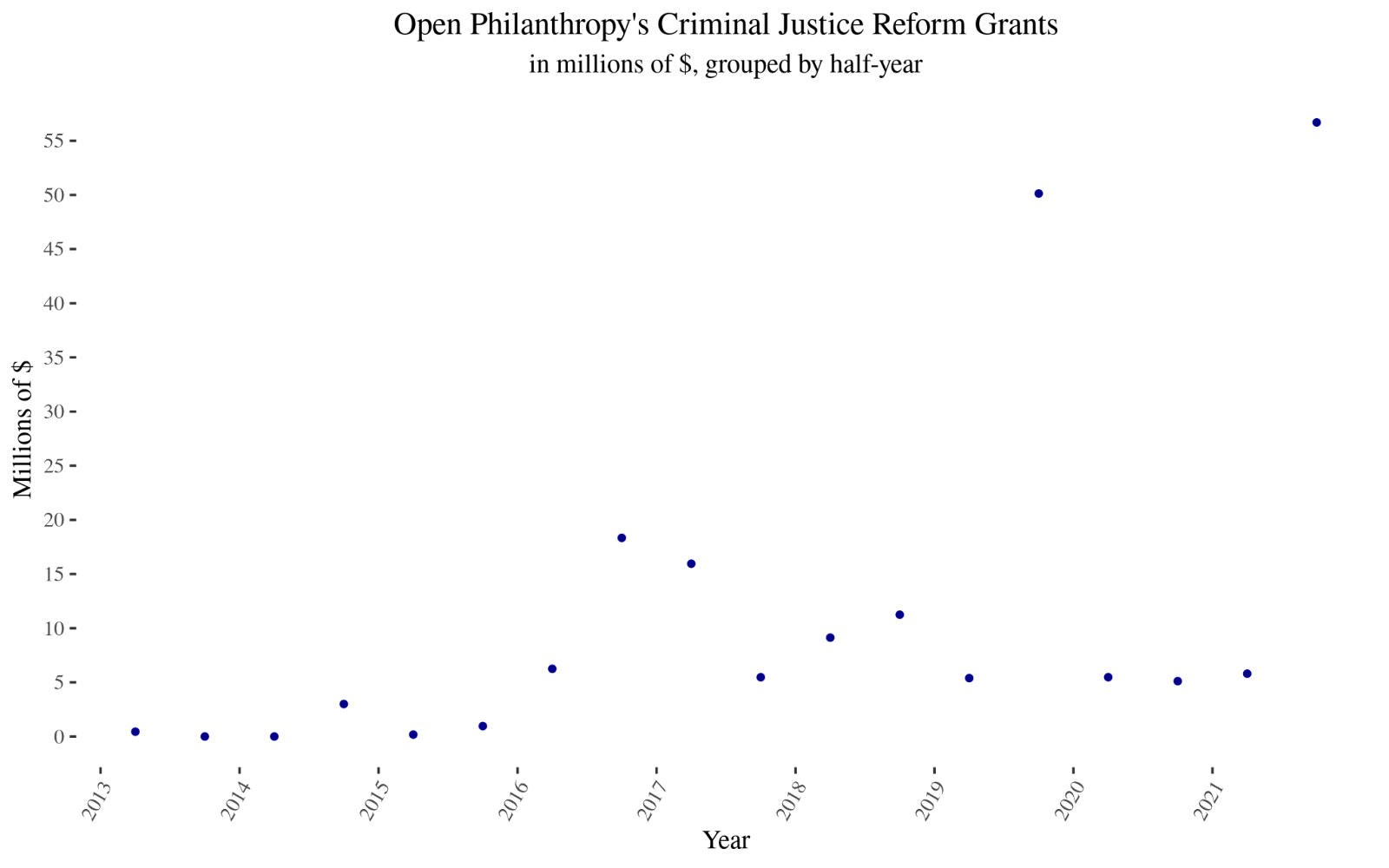

From 2013 to 2021, Open Philanthropy distributed $199,574,123 to criminal justice reform [0]. In 2015, they hired Chloe Cockburn as a program officer, following a “stretch goal” for the year. They elaborated on their method and reasoning in The Process of Hiring our First Cause-Specific Program Officer.

In that blog post, they described their expansion into the criminal justice reform space as substantially a “bet on Chloe”. Overall, the post was very positive about Chloe (more on red teams below). But the post expressed some reservations because “Chloe has a generally different profile from the sorts of people GiveWell has hired in the past. In particular, she is probably less quantitatively inclined than most employees at GiveWell. This isn’t surprising or concerning - most GiveWell employees are Research Analysts, and we see the Program Officer role as calling for a different set of abilities. That said, it’s possible that different reasoning styles will lead to disagreement at times. We think of this as only a minor concern.” In hindsight, it seems plausible to me that this relative lack of quantitative inclination played a role in Open Philanthropy making comparatively suboptimal grants in the criminal justice space [1].

In mid-2019, Open Philanthropy published a blog post titled GiveWell’s Top Charities Are (Increasingly) Hard to Beat. It explained that, with GiveWell’s expansion into researching more areas, Open Philanthropy expected that there would be enough room for more funding for charities that were as good as GiveWell’s top charities. Thus, causes like Criminal Justice Reform looked less promising.

In the months following that blog post, Open Philanthropy's donations to criminal justice reform spiked, with multi-million, multi-year grants going to Impact Justice ($4M), Alliance for Safety and Justice ($10M), National Council for Incarcerated and Formerly Incarcerated Women and Girls ($2.25M), Essie Justice Group ($3M), Texas Organizing Project ($4.2M), Color Of Change Education Fund ($2.5M) and The Justice Collaborative ($7.8M).

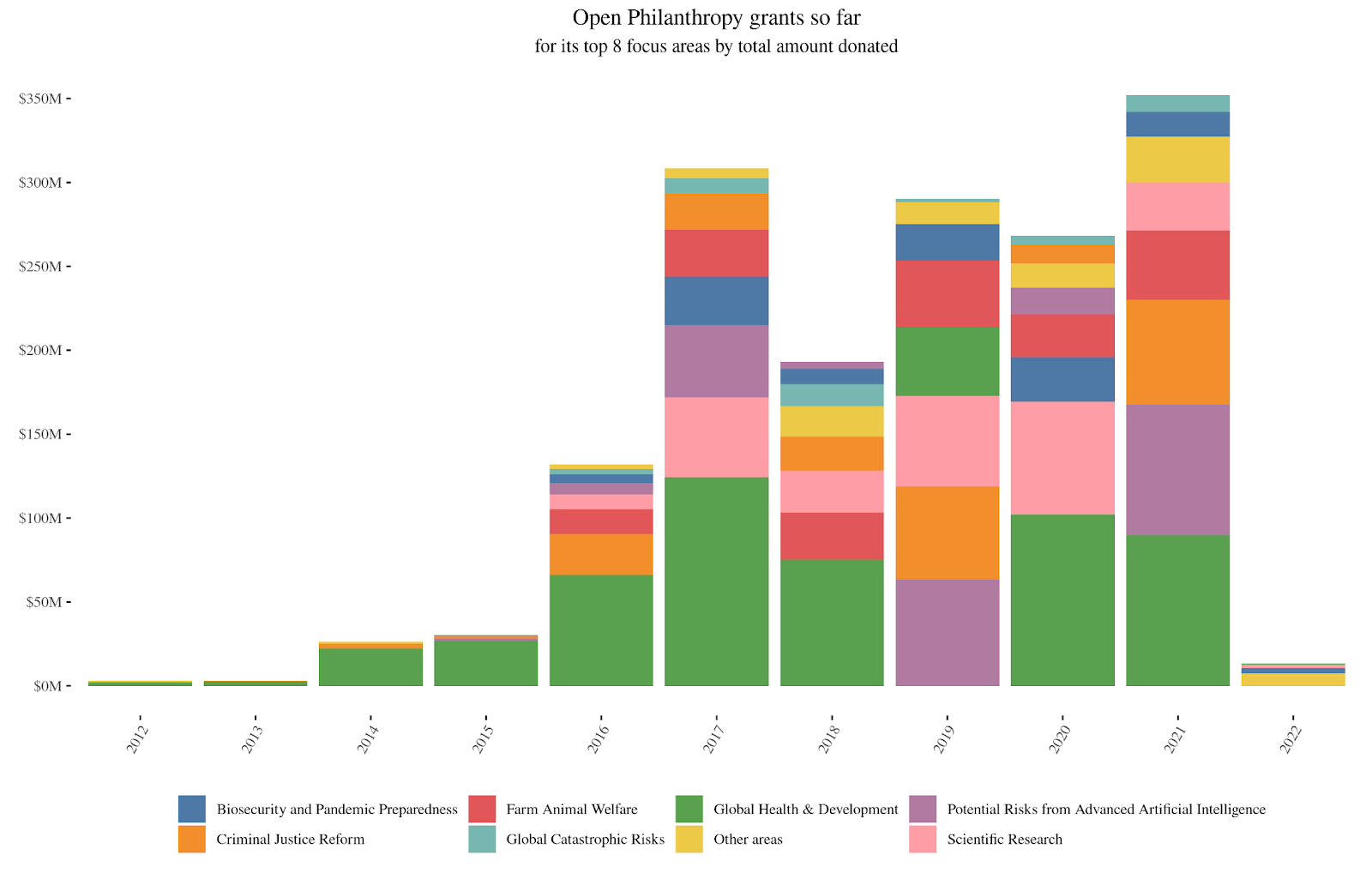

Initially, I thought that might be because of an expectation of winding down. However, other Open Philanthropy cause areas also show a similar pattern of going up in 2019, perhaps at the expense of spending on Global Health and Development for that year:

In 2021, Open Philanthropy spun out its Criminal Justice Reform department as a new organization: Just Impact. Open Philanthropy seeded Just Impact with $50M. Their parting blog post explains their thinking: that Global Health and Development interventions have significantly better cost-effectiveness.

What is the case for Criminal Justice Reform?

Note: This section briefly reviews my own understanding of this area. For a more canonical source, see Open Philanthropy’s strategy document on criminal justice reform.

There are around 2M people in US prisons and jails. Some are highly dangerous, but a glance at a map of prison population rates per 100k people suggests that the US incarcerates a significantly larger share of its population than most other countries:

Outlining a positive vision for reform remains an area of active work. A first approximation might be as follows:

Criminals should be punished in proportion to an estimate of the harm they have caused, times a factor to account for a less than 100% chance of getting caught, to ensure that crimes are not worth it in expectation. This is in opposition to otherwise disproportionate jail sentences caused by pressures on politicians to appear tough on crime. In addition, criminals then work to provide restitution to the victim, if the victim so desires, per some restorative justice framework [2].
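The proportionality rule above can be made concrete with a small Python sketch. It assumes, for illustration only, that harm and punishment are measured in the same arbitrary units and that the proportionality constant is 1. The function names are hypothetical. Scaling the punishment by 1/p_caught makes the expected punishment equal to the harm caused, which is what "not worth it in expectation" requires.

```python
def proportional_punishment(harm: float, p_caught: float) -> float:
    """Punishment scaled up by 1/p_caught (proportionality constant assumed to be 1)."""
    if not 0 < p_caught <= 1:
        raise ValueError("p_caught must be in (0, 1]")
    return harm / p_caught


def expected_punishment(punishment: float, p_caught: float) -> float:
    """Average punishment a would-be offender faces, given a p_caught chance of being caught."""
    return p_caught * punishment


# Hypothetical crime: 10 units of harm, 25% chance of being caught.
sentence = proportional_punishment(10.0, 0.25)
print(sentence)                             # 40.0
print(expected_punishment(sentence, 0.25))  # 10.0, equal to the harm
```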

In a best-case scenario, criminal justice reform could achieve somewhere between a 25% reduction in incarceration in the short term and a 75% reduction in the longer term, bringing the incarceration rate down to only twice that of Spain [4], while keeping the crime rate constant. Say that $2B to $20B, or 10x to 100x the amount that Open Philanthropy has already spent, would have a 1 to 10% chance of succeeding at that goal [5].

What is the cost-effectiveness of criminal justice grants?

Estimation strategy

In this section, I come up with some estimates of the impact of criminal justice reform, and compare them with some estimates of the impact of GiveWell-style global health and development interventions.

Throughout, I am making the following modelling choices:

- I am primarily looking at the impact of systemic change

- I am looking at the first-order impacts

- I am using subjective estimates

I am primarily looking at the impact of systemic change because many of the largest Open Philanthropy donations were aiming for systemic change, and their individual cost-effectiveness was extremely hard to estimate. For completeness, I do estimate the impacts of a standout intervention as well.

I am looking at the first-order impacts on prisoners and GiveWell recipients, rather than at the effects on their communities. My strong guess is that accounting for second-order impacts (e.g., harms to the community from death or reduced earnings in the case of malaria, and harms from absence and reduced earnings in the case of imprisonment) wouldn’t change the relative values of the two cause areas.

After presenting my estimates, I discuss their limitations.

Simple model for systemic change

Using what I consider to be optimistic assumptions about first-order effects, I come up with the following Squiggle model:

initialPrisonPopulation = 1.5M to 2.5M # Data for 2022 prison population has not yet been published, though this estimate is perhaps too wide.

reductionInPrisonPopulation = 0.25 to 0.75

badnessOfPrisonInQALYs = 0.2 to 6 # 80% as good as being alive to 5 times worse than living is good

counterfactualAccelerationInYears = 5 to 50

probabilityOfSuccess = 0.01 to 0.1 # 1% to 10%.

counterfactualImpactOfGrant = 0.5 to 1 ## other funders, labor cost of activism

estimateQALYs = initialPrisonPopulation * reductionInPrisonPopulation * badnessOfPrisonInQALYs * counterfactualAccelerationInYears * probabilityOfSuccess * counterfactualImpactOfGrant

cost = 2B to 20B

costPerQALY = cost / estimateQALYs

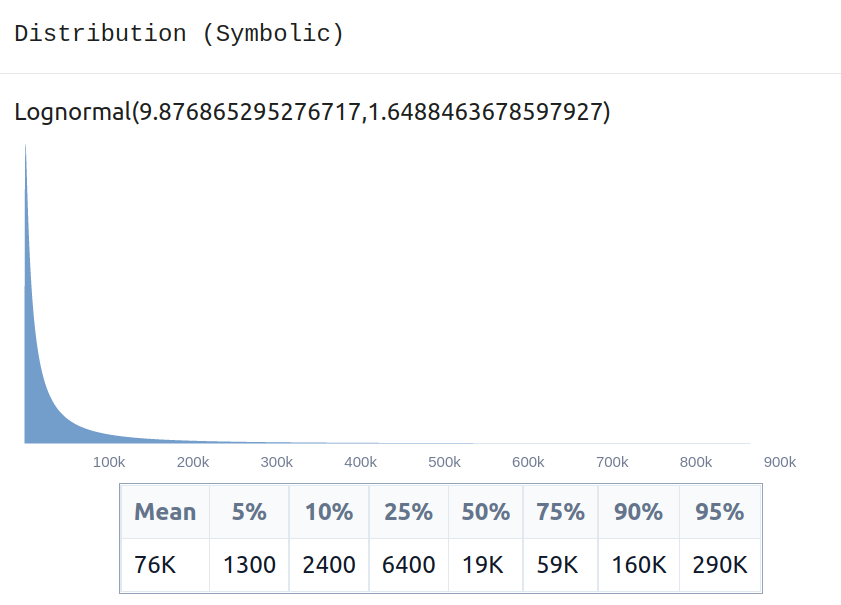

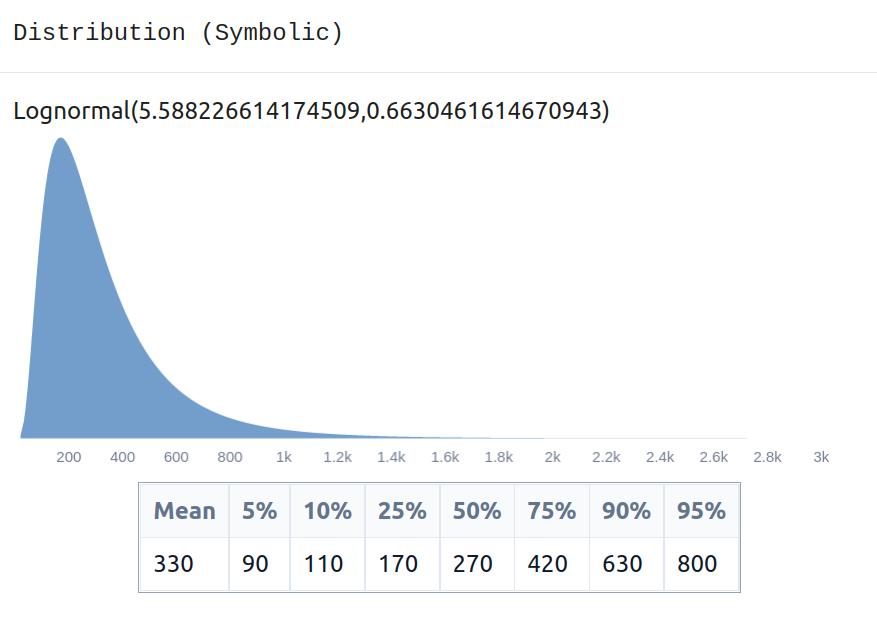

costPerQALYThat model produces the following distribution:

mean(cost)/mean(estimateQALYs) is equal to $8160/QALYThis model estimates that criminal justice reform buys one QALY [6](quality-adjusted life year) for $76k, on average. But the model is very uncertain, and its 90% confidence interval is $1.3k to ~$290k per QALY. It assigns a 50% chance to it costing less than ~$19k. For a calculation that instead looks at more marginal impact, see here.

EDIT 22/06/2022: Commenters pointed out that the mean of cost / estimateQALYs in the chart above isn't the right quantity to look at. mean(cost)/mean(estimateQALYs) is probably a better representation of "expected cost per QALY". That quantity is $8160/QALY for the above model. If one looks at 1/mean(estimateQALYs/cost), it is $5k per QALY. Overall, I would instead recommend looking at the 90% confidence intervals rather than at the means. See this comment thread for discussion. I've added notes below each model.
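The competing "means" in the EDIT above can be reproduced without sampling. This is a minimal Python sketch. It assumes, as in Squiggle, that `a to b` with positive bounds denotes a lognormal whose 5th and 95th percentiles are a and b. It also assumes all inputs are independent. Under those assumptions, products and quotients of the inputs are again lognormal, so every summary statistic has a closed form. The helper names are hypothetical, and Squiggle's own sampled figures may differ slightly.

```python
import math
from statistics import NormalDist

# In log space, a 90% interval runs from the 5th to the 95th percentile: +/- ~1.645 sigma.
Z95 = NormalDist().inv_cdf(0.95)


def lognormal_from_ci(low: float, high: float) -> tuple[float, float]:
    """(mu, sigma) of the lognormal whose 5th/95th percentiles are `low` and `high`."""
    mu = (math.log(low) + math.log(high)) / 2
    sigma = (math.log(high) - math.log(low)) / (2 * Z95)
    return mu, sigma


def lognormal_mean(mu: float, sigma: float) -> float:
    return math.exp(mu + sigma ** 2 / 2)


# Inputs of the systemic-change model: the six factors of estimateQALYs, then cost.
qaly_factor_cis = [(1.5e6, 2.5e6), (0.25, 0.75), (0.2, 6), (5, 50), (0.01, 0.1), (0.5, 1)]
mu_c, s_c = lognormal_from_ci(2e9, 20e9)

# Multiplying independent lognormals adds their mus and their variances.
factors = [lognormal_from_ci(lo, hi) for lo, hi in qaly_factor_cis]
mu_q = sum(mu for mu, _ in factors)
s_q = math.sqrt(sum(s ** 2 for _, s in factors))

# costPerQALY = cost / estimateQALYs is lognormal as well.
mu_r, s_r = mu_c - mu_q, math.hypot(s_c, s_q)

ratio_of_means = lognormal_mean(mu_c, s_c) / lognormal_mean(mu_q, s_q)
mean_of_ratio = lognormal_mean(mu_r, s_r)
inverse_mean_of_inverse = 1 / lognormal_mean(-mu_r, s_r)  # 1 / mean(estimateQALYs / cost)
median = math.exp(mu_r)
ci = (math.exp(mu_r - Z95 * s_r), math.exp(mu_r + Z95 * s_r))

print(f"mean(cost)/mean(estimateQALYs): ${ratio_of_means:,.0f}")
print(f"mean(cost/estimateQALYs):       ${mean_of_ratio:,.0f}")
print(f"1/mean(estimateQALYs/cost):     ${inverse_mean_of_inverse:,.0f}")
print(f"median cost per QALY:           ${median:,.0f}")
print(f"90% interval:                   ${ci[0]:,.0f} to ${ci[1]:,.0f}")
```

The printed values should land close to the figures quoted above: roughly $8.2k, $76k, $5k, a median near $19k, and a 90% interval of about $1.3k to $290k. The mean of the ratio is much larger than the ratio of the means because dividing by a very uncertain QALY estimate produces a heavy right tail.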

Simple model for a standout criminal justice reform intervention

Some grants in criminal justice reform might beat systemic reform. I think this might be the case for closing Rikers, bail reform, and prosecutorial accountability:

- Rikers is a large and particularly bad prison.

- Bail reform seems like a well-defined objective that could affect many people at once.

- Prosecutorial accountability could get a large multiplier over systemic reform by focusing on the prosecutors in districts that hold very large prison populations.

For instance, for the case of Rikers, I can estimate:

```squiggle
initialPrisonPopulation = 5000 to 10000
reductionInPrisonPopulation = 0.25 to 0.75
badnessOfPrisonInQALYs = 0.2 to 6 // 80% as good as being alive to 5 times worse than living is good
counterfactualAccelerationInYears = 5 to 20
probabilityOfSuccess = 0.07 to 0.5
counterfactualImpactOfGrant = 0.5 to 1 // other funders, labor cost of activism
estimatedImpactInQALYs = initialPrisonPopulation * reductionInPrisonPopulation * badnessOfPrisonInQALYs * counterfactualAccelerationInYears * probabilityOfSuccess * counterfactualImpactOfGrant
cost = 5000000 to 15000000
costPerQALY = cost / estimatedImpactInQALYs
costPerQALY
```

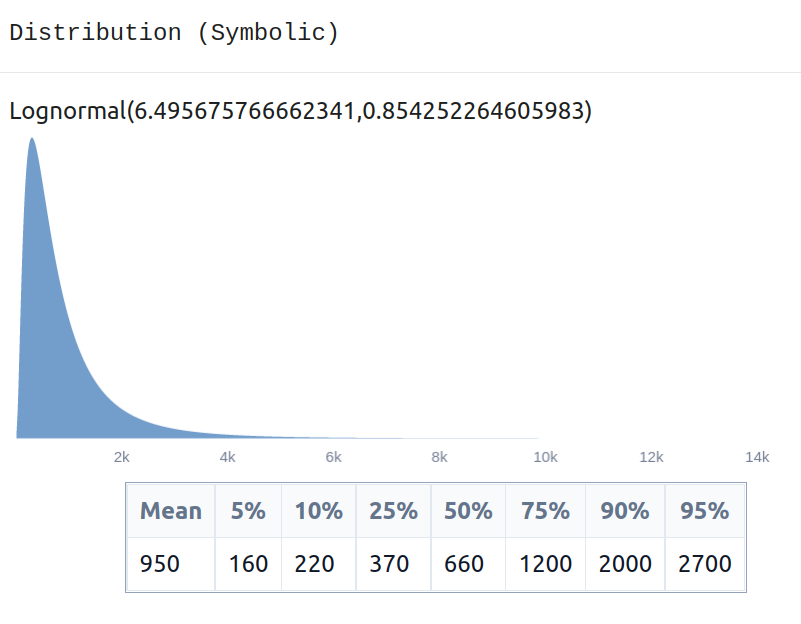

mean(cost)/mean(estimatedImpactInQALYs) is $837/QALY.

Simple model for GiveWell charities

Against Malaria Foundation

Using a similar estimation for the Against Malaria Foundation:

```squiggle
costPerLife = 3k to 10k
lifeDuration = 30 to 70
qalysPerYear = 0.2 to 1 // feeling unsure about this
valueOfSavedLife = lifeDuration * qalysPerYear
costEffectiveness = costPerLife / valueOfSavedLife
costEffectiveness
```

mean(costPerLife)/mean(valueOfSavedLife) is $245/QALY.

Its 90% confidence interval is $90 to ~$800 per QALY, and I validated this with Simple Squiggle. Notice that this interval is disjoint from the estimate for criminal justice reform of $1.3k to $290k.
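Squiggle-style models can also be evaluated by Monte Carlo sampling. The Python sketch below does this for the AMF model, reading each `a to b` as an independent lognormal with those 5th and 95th percentiles. It uses a fixed seed and 100,000 draws, both arbitrary choices. It then checks that the resulting 90% interval ends below the $1.3k lower bound of the systemic-reform interval. Because of sampling noise, the printed figures will only approximately match $245/QALY and $90 to ~$800.

```python
import math
import random
from statistics import NormalDist, fmean, quantiles

Z95 = NormalDist().inv_cdf(0.95)


def draw(low: float, high: float, rng: random.Random) -> float:
    """One sample from the lognormal with 5th/95th percentiles `low` and `high`."""
    mu = (math.log(low) + math.log(high)) / 2
    sigma = (math.log(high) - math.log(low)) / (2 * Z95)
    return rng.lognormvariate(mu, sigma)


rng = random.Random(2022)  # fixed seed so reruns give the same numbers
N = 100_000

cost_per_life = [draw(3e3, 10e3, rng) for _ in range(N)]
# valueOfSavedLife = lifeDuration * qalysPerYear, each sampled independently
value_of_saved_life = [draw(30, 70, rng) * draw(0.2, 1, rng) for _ in range(N)]
cost_per_qaly = [c / v for c, v in zip(cost_per_life, value_of_saved_life)]

ratio_of_means = fmean(cost_per_life) / fmean(value_of_saved_life)
cuts = quantiles(cost_per_qaly, n=20)  # 19 cut points: 5th, 10th, ..., 95th percentile
low, high = cuts[0], cuts[-1]

CJR_LOW = 1_300  # lower end of the systemic criminal justice reform interval
print(f"mean(costPerLife)/mean(valueOfSavedLife): ${ratio_of_means:,.0f}")
print(f"90% interval: ${low:,.0f} to ${high:,.0f}")
print("disjoint from the criminal justice interval:", high < CJR_LOW)
```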

GiveDirectly

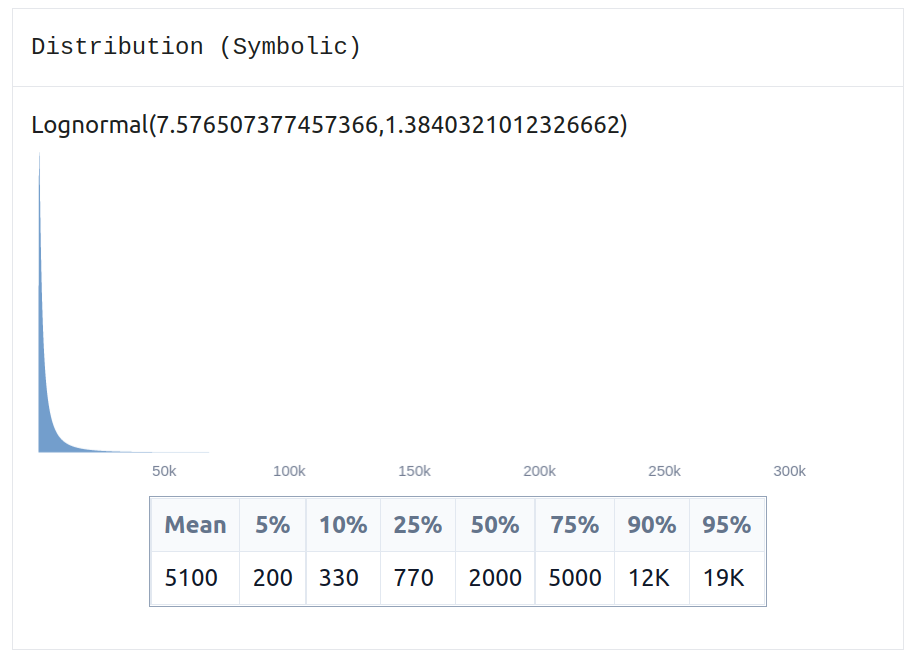

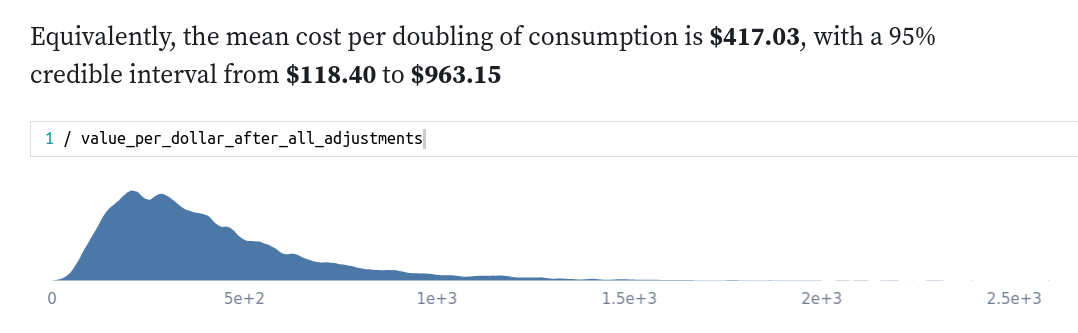

One might argue that AMF is too strict a comparison and that one should instead compare criminal justice reform to the marginal global health and development grant. Recently, my colleague Sam Nolan quantified the uncertainty in GiveDirectly’s estimate of impact. He arrived at a final estimate of ~$120 to ~$960 per doubling of consumption for one year.

The conversion between a doubling of consumption and a QALY is open to some uncertainty. For instance:

- GiveWell estimates it about equal based on the different weights given to saving people of different ages—a factor of ~0.8 to 1.3, based on some eye-balling from this spreadsheet.

- GiveWell recently updated their weightings to give a DALY (similar to a QALY) a value of around 2 doublings of income.

- Commenters pointed out that few people would trade half their life to double their income, and that for them a conversion factor around 0.2 might be more appropriate. But they are much wealthier than the average GiveDirectly recipient.

Using a final adjustment of 0.2 to 1.3 QALYs per doubling of consumption (which has a mean of 0.6 QALYs/doubling), I come up with the following model:

```squiggle
costPerDoublingOfConsumption = 118.4 to 963.15
qalysPerDoublingOfConsumption = 0.2 to 1.3
costEffectiveness = costPerDoublingOfConsumption / qalysPerDoublingOfConsumption
costEffectiveness
```

mean(costPerDoublingOfConsumption)/mean(qalysPerDoublingOfConsumption) is $690/QALY.

This has a 90% confidence interval between $160 and $2700 per QALY.
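The GiveDirectly model is small enough to check in closed form. This Python sketch uses the same lognormal reading of `a to b` and assumes the two inputs are independent. It recovers the ~0.6 mean conversion factor, the ratio of means, and the 90% interval stated above. The `fit` helper is a hypothetical name.

```python
import math
from statistics import NormalDist

Z95 = NormalDist().inv_cdf(0.95)


def fit(low: float, high: float) -> tuple[float, float]:
    """(mu, sigma) of the lognormal with 5th/95th percentiles `low` and `high`."""
    return (math.log(low) + math.log(high)) / 2, (math.log(high) - math.log(low)) / (2 * Z95)


mu_d, s_d = fit(118.4, 963.15)  # costPerDoublingOfConsumption, dollars
mu_q, s_q = fit(0.2, 1.3)       # qalysPerDoublingOfConsumption

mean_d = math.exp(mu_d + s_d ** 2 / 2)
mean_q = math.exp(mu_q + s_q ** 2 / 2)

# The quotient of two independent lognormals is lognormal.
mu_r, s_r = mu_d - mu_q, math.hypot(s_d, s_q)
ci = (math.exp(mu_r - Z95 * s_r), math.exp(mu_r + Z95 * s_r))

print(f"mean QALYs per doubling:  {mean_q:.2f}")
print(f"mean(cost)/mean(QALYs):   ${mean_d / mean_q:,.0f}")
print(f"90% interval:             ${ci[0]:,.0f} to ${ci[1]:,.0f}")
```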

Discussion

My estimate for the impact of AMF ($90 to $800 per QALY) does not overlap with my estimate for systemic criminal justice reform ($1.3k to $290k per QALY). I think this is informative, and good news for uncertainty quantification: even though both estimates are very uncertain (the first spans a factor of about 9, the second a factor of over 200), we can still tell which one is better.

When comparing GiveDirectly ($160 to $2700 per QALY; mean of $900/QALY) against one standout intervention in the space ($200 to $19K per QALY, with a mean of $5k/QALY), the estimates do overlap, but GiveDirectly is still much better in expectation.

EDIT 22/06/2022: Using the better mean, the above paragraph would read: When comparing GiveDirectly ($160 to $2700 per QALY; mean of $690/QALY) against one standout intervention in the space ($200 to $19K per QALY, with a mean of $837/QALY), the estimates do overlap, but GiveDirectly is still better in expectation.
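The comparison in the paragraph above can be checked with a closed-form Python sketch of the Rikers model. Again, it treats each `a to b` as an independent lognormal with those 5th and 95th percentiles. It reports both the mean of the ratio (about $5k) and the ratio of the means (about $837). It then tests whether the resulting interval overlaps GiveDirectly's $160 to $2700. The `fit` and `overlaps` helpers are hypothetical.

```python
import math
from statistics import NormalDist

Z95 = NormalDist().inv_cdf(0.95)


def fit(low: float, high: float) -> tuple[float, float]:
    """(mu, sigma) of the lognormal with 5th/95th percentiles `low` and `high`."""
    return (math.log(low) + math.log(high)) / 2, (math.log(high) - math.log(low)) / (2 * Z95)


def overlaps(a: tuple[float, float], b: tuple[float, float]) -> bool:
    """Hypothetical helper: do the closed intervals a and b share any point?"""
    return a[0] <= b[1] and b[0] <= a[1]


# Factors of estimatedImpactInQALYs in the Rikers model, then cost in dollars.
factor_cis = [(5000, 10000), (0.25, 0.75), (0.2, 6), (5, 20), (0.07, 0.5), (0.5, 1)]
factors = [fit(lo, hi) for lo, hi in factor_cis]
mu_q = sum(mu for mu, _ in factors)
s_q = math.sqrt(sum(s ** 2 for _, s in factors))
mu_c, s_c = fit(5e6, 15e6)

# cost / estimatedImpactInQALYs is lognormal too.
mu_r, s_r = mu_c - mu_q, math.hypot(s_c, s_q)
rikers_ci = (math.exp(mu_r - Z95 * s_r), math.exp(mu_r + Z95 * s_r))
mean_of_ratio = math.exp(mu_r + s_r ** 2 / 2)
ratio_of_means = math.exp(mu_c + s_c ** 2 / 2) / math.exp(mu_q + s_q ** 2 / 2)

givedirectly_ci = (160, 2700)  # 90% interval quoted above
print(f"Rikers 90% interval: ${rikers_ci[0]:,.0f} to ${rikers_ci[1]:,.0f}")
print(f"mean(cost/QALYs): ${mean_of_ratio:,.0f}; mean(cost)/mean(QALYs): ${ratio_of_means:,.0f}")
print("overlaps GiveDirectly:", overlaps(rikers_ci, givedirectly_ci))
```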

One limitation of these estimates is that they only model first-order effects. GiveWell does have some estimates of second-order effects (avoiding malaria cases that don’t lead to death, longer-term income increases, etc.). However, for criminal justice interventions, these are harder to estimate. Nonetheless, my strong sense is that the second-order effects of death from malaria or of cash transfers are similar to or greater than the second-order effects of temporary imprisonment, and don’t change the relative value of the two cause areas all that much.

Some other sources of model error might be:

- QALYs being an inadequate modelling choice: QALYs intuitively have a bound of 1 QALY/year, and might not be the right way to think about certain interventions.

- I ignored the cost to the US of keeping someone in prison, as opposed to how that money could have been spent otherwise

- I didn’t model the increased productivity of someone outside prison

- I didn’t estimate recidivism or increased crime from lower incarceration

- I didn’t estimate the cost of pushback, such as lobbying for opposite policies

- My estimates of the cost of reform were pretty optimistic.

Of these, I think that not modelling the cost to the US of keeping someone in prison and not modelling recidivism are among the weakest aspects of my current model. For a model which tries to incorporate these, see the appendix. So overall, there is likely a degree of model error. But I still think that the small models point to something meaningful.

We can also compare the estimates in this post with other estimates. A lengthy report commissioned by Open Philanthropy on the impacts of incarceration on crime mostly concludes that marginal reduction in crime through more incarceration is non-existent—because the effects of reduced crime while prisoners are in prison are compensated by increased crime when they get out, proportional to the length of their sentence. But the report reasons about short-term effects and marginal changes, e.g., based on RCTs or natural experiments, rather than considering longer-term incentive landscape changes following systemic reform. So for the purposes of judging systemic reform rather than marginal changes, I am inclined to almost completely discount it [7]. That said, my unfamiliarity with the literature is likely one of the main weaknesses of this post.

Open Philanthropy’s own initial casual cause estimates are much more optimistic. In a 2020 interview, Chloe Cockburn mentions that Open Philanthropy estimates criminal justice reform to be around 1/4th as valuable as donations to top GiveWell charities, but that her personal estimate is higher, based on subjective factors [8].

For illustration, here are a few grants that I don’t think meet the funding bar of being comparable to AMF or GiveDirectly, based on casual browsing of their websites:

- $600k: Essie Justice Group — General Support

- $500k: LatinoJustice — Work to End Mass Incarceration

- $261k: The Soze Agency — Returning Citizens Project

- $255k: Mijente — Criminal Justice Reform

- $200k: Justice Strategies — General Support

- $100k: ReFrame Mentorship — General Support

- $100k: Cosecha, general support. (part 1, part 2)

- $10k: Photo Patch Foundation — General Support

The last one struck me as being both particularly bad and relatively easy to evaluate: A letter costs $2.5, about the same as deworming several kids at $0.35 to $0.97 per deworming treatment. But sending a letter intuitively seems significantly less impactful.
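The letter-versus-deworming comparison is simple division. Here is a short Python sketch that uses only the figures in the paragraph above:

```python
LETTER_COST = 2.5                # dollars per letter
DEWORMING_COST = (0.35, 0.97)    # dollars per deworming treatment: low and high estimates

most = LETTER_COST / DEWORMING_COST[0]    # treatments per letter at the cheapest rate
fewest = LETTER_COST / DEWORMING_COST[1]  # treatments per letter at the most expensive rate
print(f"One letter costs as much as {fewest:.1f} to {most:.1f} deworming treatments")
```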

Conversely, larger grants, such as a $2.5M grant to Color Of Change, are harder to casually evaluate. For example, that particular grant was given to support prosecutorial accountability campaigns and to support Color Of Change’s work with the film Just Mercy. And because the grant was 50% of Color of Change’s budget for one year, I imagine it also subsidized its subsequent activities, such as the campaigns currently featured on its website [10], or the $415k salary of its president [11]. So to the extent that the grant’s funds were used for prosecutorial accountability, they may have been more cost-effective, and to the extent that they were used for other purposes, less so. Overall, I don’t think that estimating the cost-effectiveness of larger grants as the cost-effectiveness of systemic change would be grossly unfair.

Why did Open Philanthropy donate to criminal justice in the first place?

Epistemic status: Speculation.

I will first outline a few different hypotheses about why Open Philanthropy donated to criminal justice, without regard to plausibility:

- The Back of the Envelope Calculation Hypothesis

- The Value of Information Hypothesis

- The Leverage Hypothesis

- The Strategic Funder Hypothesis

- The Progressive Funders Hypothesis

- The “Politics is The Mind Killer” Hypothesis

- The Non-Updating Funders Hypothesis

- The Moral Tension Hypothesis

I obtained this list by talking to people about my preliminary thoughts when writing this draft. After outlining them, I will discuss which of these I think are most plausible.

The Back of the Envelope Calculation Hypothesis

As highlighted in Open Philanthropy blog posts, early on, it wasn’t clear that GiveWell was going to find as many opportunities as it later did. It was plausible that the bar could have gone down with time. If so, and if one has a rosier outlook on the tractability and value of criminal justice reform, it could plausibly have been competitive with other areas.

For instance, per Open Philanthropy’s estimations:

Each grant is subject to a cost-effectiveness calculation based on the following formula:

Number of years averted x $50,000 for prison or $100,000 for jail [our valuation of a year of incarceration averted] / 100 [we aim to achieve at least 100x return on investment, and ideally much more] - discounts for causation and implementation uncertainty and multiple attribution of credit > $ grant amount. Not all grants are susceptible to this type of calculation, but we apply it when feasible.

That is, before discounts, Open Philanthropy’s bar for funding criminal justice reform was a cost of at most $500 per year of prison, or $1,000 per year of jail, averted. Per this bar, criminal justice reform would be roughly as cost-effective as GiveDirectly. But this bar is much more optimistic than my estimates of the cost-effectiveness of criminal justice reform grants above.
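The quoted bar can be written as a function. This Python sketch is an illustration, not Open Philanthropy's actual tooling. The quote subtracts "discounts for causation and implementation uncertainty and multiple attribution of credit" without saying how they are computed. Here, as a labeled assumption, they are folded into a single multiplicative fraction. With no discount, one averted year supports a grant of $500 (prison) or $1,000 (jail).

```python
# Open Philanthropy's quoted valuation of one year of incarceration averted, in dollars.
VALUE_PER_YEAR_AVERTED = {"prison": 50_000, "jail": 100_000}
TARGET_RETURN = 100  # "we aim to achieve at least 100x return on investment"


def max_grant(years_averted: float, facility: str, discount: float = 0.0) -> float:
    """Largest grant that passes the bar.

    Assumption (not in the quote): all discounts are folded into one fraction in [0, 1).
    """
    if not 0.0 <= discount < 1.0:
        raise ValueError("discount must be in [0, 1)")
    return years_averted * VALUE_PER_YEAR_AVERTED[facility] / TARGET_RETURN * (1 - discount)


def passes_bar(grant: float, years_averted: float, facility: str, discount: float = 0.0) -> bool:
    """The quoted rule: discounted value / 100 must exceed the grant amount."""
    return max_grant(years_averted, facility, discount) > grant


print(max_grant(1, "prison"), max_grant(1, "jail"))  # 500.0 1000.0
# Hypothetical grant: $300k expected to avert 1,000 prison-years, with a 50% discount.
print(passes_bar(300_000, 1_000, "prison", discount=0.5))  # False: the bar is 250000.0
```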

The Value of Information Hypothesis

In 2015, when Open Philanthropy hadn’t yet invested much in criminal justice reform, it might have seemed plausible that relatively little investment could lead to systemic reform. It might also have seemed plausible that, if the area was found promising, an order of magnitude more funding could have been directed to it.

Commenters in a draft pointed out a second type of information gain: Open Philanthropy might gain experience in grantmaking, learn information, and acquire expertise that would be valuable for other types of giving. In the case of criminal justice reform, I would guess that the specific cause officers—rather than Open Philanthropy as an institution—would gain most of the information. I would also guess that the lessons learnt haven’t generalized to, for instance, pandemic prevention funding advocacy. So my best guess is that the information gained would not make this cause worth it if it otherwise would not have been. But I am uncertain about this.

The Leverage Hypothesis

Even if systemic change itself is not cost-effective, criminal justice reform and adjacent issues attract a large amount of attention anyway. By working in this area, one could gain leverage, for instance:

- Leverage over other people’s attention and political will, by investing early in leaders who will be in a position to channel somewhat ephemeral political wills.

- Leverage over the grantmaking in the area, by seeding Just Impact

The Strategic Funder Hypothesis

My colleagues raised the hypothesis that Open Philanthropy might have funded criminal justice reform in part because they wanted to look less weird. E.g., “Open Philanthropy/the EA movement has donated to global health, criminal justice reform, preventing pandemics and averting the risks of artificial intelligence” sounds less weird than “...donated to global health, preventing pandemics and averting the risks of artificial intelligence”.

The Progressive Funders Hypothesis

Dustin Moskovitz and Cari Tuna likely have other goals beyond expected utility maximization. Some of these goals might align with the mores of the current left-wing of American society. Or, alternatively, their progressive beliefs might influence and bias their beliefs about what maximizes utility.

On the one hand, I think this would be a mistake. Cause impartiality is one of EA’s major principles, and I think it catalyzes an important part of what we’ve found out about doing good better. But on the other hand, these are not my billions. On the third hand, it seems suboptimal if politically-motivated giving were post-hoc argued to be utility-optimal. If this was the case, I would have appreciated it if their research had been upfront about it.

The “Politics is The Mind Killer” Hypothesis

In the domain of politics, reasoning degrades, and principal-agent problems arise. And so another way to look at the grants under discussion is that Open Philanthropy flew too close to politics, and was sucked in.

To start, there is a selection effect of people who think an area is the most promising going into it. In addition, there is a principal-agent problem where people working inside a cause area are not really incentivized to look for arguments and evidence that they should be replaced by something better. My sense is that people will tend to give very, very optimistic estimates of impact for their own cause area.

These considerations are general arguments, and they could apply to, for instance, community building or forecasting, with similar force. Though perhaps the warping effects would be stronger for cause areas adjacent to politics.

The Moral Tension Hypothesis

My sense is that Open Philanthropy funders lean a bit more towards conventional morality, whereas philosophical reflection leans more towards expected utility maximization. Managing the tension between these two approaches seems pretty hard, and it shouldn’t be particularly surprising that a few mistakes were made from a utilitarian perspective.

Discussion

In conversation with Open Philanthropy staff, they mentioned that the first three hypotheses (Back of the Envelope, Value of Information and Leverage) sounded most true to them. In conversation with a few other people, mostly longtermists, some thought that the Strategic Funder and Progressive Funders hypotheses were more likely.

I would make a distinction between what the people who made the decision were thinking at the time, and the selection effects that chose those people. And so, I would think that early on, Open Philanthropy leadership was mainly thinking about back-of-the-envelope calculations, value of information, and leverage. But I would also expect them to have done so within some constraints. And I expect some of the other hypotheses, particularly the “progressive funders hypothesis” and the “moral tension hypothesis”, to explain those constraints at least a little.

I am left uncertain about whether and to what extent Open Philanthropy was acting sincerely. It could be that criminal justice reform was just a bet that didn’t pay off. But it could also be the case that some factor put a thumb on the scale and greased the choice to invest in criminal justice reform. In the end, Open Philanthropy is probably heterogeneous; it seems likely that some people were acting sincerely, and others with a bit of motivated reasoning.

Why did Open Philanthropy keep donating to criminal justice?

Epistemic status: More speculation

The Inertia Hypothesis

Open Philanthropy wrote about GiveWell’s Top Charities Are (Increasingly) Hard to Beat in 2019. They stopped investing in criminal justice reform in 2021, after giving an additional $100M to the cause area. I’m not sure what happened in the meantime.

In a 2016 blog post explaining worldview diversification, Holden Karnofsky writes:

Currently, we tend to invest resources in each cause up to the point where it seems like there are strongly diminishing returns, or the point where it seems the returns are clearly worse than what we could achieve by reallocating the resources - whichever comes first

Under some assumptions explained in that post, namely that the amounts given to each cause area are balanced to ensure that the values of the marginal grants to each area are similar, worldview diversification would be approximately optimal even from an expected value perspective [12]. My impression is that this monitoring and rebalancing did not happen fast enough in the case of criminal justice reform.

Incongruous as it might ring to my ears, it is also possible that optimizing the allocation of an additional $100M might not have been the most valuable thing for Open Philanthropy’s leadership to have been doing. For instance, exploring new areas, convincing or coordinating with additional billionaires or optimizing other parts of Open Philanthropy’s portfolio might have been more valuable.

The Social Harmony Hypothesis

Firing people is hard. When you structured your bet on a cause area as a bet on a specific person, I imagine that resolving that bet as a negative would be awkward [14].

The Soft Landing Hypothesis

Abruptly stopping funding can really be detrimental for a charity. So Open Philanthropy felt the need to give a soft roll-off that lasts a few years. On the one hand, this is understandable. But on the other hand, it seems that Open Philanthropy might have given two soft landings, one of $50M in 2019, and another $50M in 2021 to spin-off Just Impact.

The Chessmaster Hypothesis

There is probably some calculation or some factor that I am missing. There is nothing disallowing Open Philanthropy from making moves based on private information. In particular, see the discussion on information gains above. Information gains are particularly hard for me to estimate from the outside.

What conclusions can we reach from this?

On Open Philanthropy’s Observe–Orient–Decide–Act loops

Open Philanthropy took several years and spent an additional $100M on a cause that they could have known was suboptimal. That feels like too much time.

They also arguably gave two different “golden parachutes” when leaving criminal justice reform. The first, in 2019, gave a number of NGOs in the area generous parting donations. The second, in 2021, gave the outgoing program officers $50 million to continue their work.

This might make similar experimentation—e.g., hiring a program officer for a new cause area, and committing to it only if it goes well—much more expensive. It’s not clear to me that Open Philanthropy would have agreed beforehand to give $100M in “exit grants”.

On Moral Diversification

Open Philanthropy’s donations to criminal justice were part of its global health and development portfolio, and thus, in theory, not subject to Open Philanthropy’s worldview diversification framework. But in practice, I get the impression that one of the obstacles to noticing sooner that criminal justice reform was likely suboptimal might have had to do with worldview diversification.

In Technical Updates to Our Global Health and Wellbeing Cause Prioritization Framework, Peter Favaloro and Alexander Berger write:

Overall, having a single “bar” across multiple very different programs and outcome measures is an attractive feature because equalizing marginal returns across different programs is a requirement for optimizing the overall allocation of resources

Prior to 2019, we used a “100x” bar based on the units above, the scalability of direct cash transfers to the global poor, and the roughly 100x ratio of high-income country income to GiveDirectly recipient income. As of 2019, we tentatively switched to thinking of “roughly 1,000x” as our bar for new programs, because that was roughly our estimate of the unfunded margin of the top charities recommended by GiveWell

We’re also updating how we measure the DALY burden of a death; our new approach will accord with GiveWell’s moral weights, which value preventing deaths at very young ages differently than implied by a DALY framework. (More)

This post focuses exclusively on how we value different outcomes for humans within Global Health and Wellbeing; when it comes to other outcomes like farm animal welfare or the far future, we practice worldview diversification instead of trying to have a single unified framework for cost-effectiveness analysis. We think it’s an open question whether we should have more internal “worldviews” that are diversified over within the broad Global Health and Wellbeing remit (vs everything being slotted into a unified framework as in this post).

Speaking about Open Philanthropy’s portfolio as a whole rather than about criminal justice: instead of strict worldview diversification, one could compare the different cause areas as best one can, strive to find better comparisons, and set the marginal impact of grants in each area to be roughly equal. This would better approximate expected value maximization, and it is in fact not too dissimilar to (part of) the original reasoning for worldview diversification. As explained in the original post, worldview diversification makes the most sense in some contexts and under some assumptions: diminishing returns to each cause, and similar marginal values to more funding.
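The condition "set the marginal impact of grants in each area to be roughly equal" is the standard result for splitting a fixed budget. As a sketch, assume each cause area i has a concave, differentiable value function V_i of its funding x_i, and a total budget B. These symbols are illustrative and are not Open Philanthropy's notation.

```latex
\begin{aligned}
&\max_{x_1,\dots,x_n \ge 0} \; \sum_{i=1}^{n} V_i(x_i) \quad \text{subject to} \quad \sum_{i=1}^{n} x_i = B \\
&\mathcal{L} = \sum_{i=1}^{n} V_i(x_i) - \lambda \Big( \sum_{i=1}^{n} x_i - B \Big) \\
&V_i'(x_i^{*}) = \lambda \ \text{ if } x_i^{*} > 0, \qquad V_i'(0) \le \lambda \ \text{ if } x_i^{*} = 0
\end{aligned}
```

With diminishing returns, if one area's marginal value sits above λ and another's below it, moving a dollar from the second to the first raises the total. That is the cost of marginal values drifting out of sync.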

But somehow, I get the weak impression that worldview diversification (partially) started as an approximation to expected value, and ended up being more of a peace pact between different cause areas. This peace pact disincentivizes comparisons between giving in different cause areas, which in turn lets their marginal values drift out of sync.

Instead, I would like to see:

- further analysis of alternatives to moral diversification,

- more frequent monitoring of whether the assumptions behind moral diversification still make sense,

- and a more regular rebalancing of the proportion of funds assigned to each cause according to the value of their marginal grants [14].
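A minimal sketch of what rebalancing by marginal value could look like. It assumes, hypothetically, that each cause's value curve is logarithmic in funding. The cause names, scales and budget are made up for illustration. The greedy loop hands each $1M increment to whichever cause currently has the highest marginal value. For concave curves, that approximately equalizes marginal values across the causes that get funded.

```python
# Hypothetical value curves: V_i(x) = scale_i * ln(1 + x / saturation_i), x in $M.
# These parameters are illustrative, not real estimates for any cause area.
CAUSES = {
    "cause_a": {"scale": 1000.0, "saturation": 50.0},
    "cause_b": {"scale": 400.0, "saturation": 20.0},
    "cause_c": {"scale": 250.0, "saturation": 100.0},
}


def marginal_value(params, x):
    """Derivative of scale * ln(1 + x / saturation) with respect to x."""
    return params["scale"] / (params["saturation"] + x)


def rebalance(causes, budget, step=1.0):
    """Greedily give each `step` of funding to the cause with the highest marginal value."""
    allocation = {name: 0.0 for name in causes}
    remaining = budget
    while remaining >= step:
        best = max(causes, key=lambda n: marginal_value(causes[n], allocation[n]))
        allocation[best] += step
        remaining -= step
    return allocation


alloc = rebalance(CAUSES, budget=300.0)
for name, x in alloc.items():
    print(f"{name}: ${x:.0f}M, marginal value {marginal_value(CAUSES[name], x):.2f}")
```

With these made-up numbers, cause_c gets nothing: its marginal value at zero funding is already below the level at which the other two equalize. Rerunning the loop periodically with updated curves is one way to picture "regular rebalancing".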

On Open Philanthropy’s Openness

After a shallow investigation and reading a few of its public writings, I’m still unsure why exactly Open Philanthropy invested a relatively large amount into this cause area. My impression is that there are some critical details about this that they have not yet written about publicly.

Open Philanthropy’s Rationality

I used to implicitly model Open Philanthropy as a highly intelligent unified agent to which I should likely defer. I now get the impression that there might be a fair amount of politicking, internal division, and some suboptimal decision-making.

I think that this update was larger for me than it might be for others, perhaps because I initially thought very highly of Open Philanthropy. Others who started from a more moderate prior should make a smaller update, if any.

I still believe that Open Philanthropy is likely one of the best organizations working in the philanthropic space.

Systems that could improve Open Philanthropy’s decision-making

While writing this piece, the uncomfortable thought struck me that if someone had realized in 2017 that criminal justice was suboptimal, it might have been difficult for them to point this out in a way which Open Philanthropy would have found useful. I’m also not sure people would have been actively incentivized to do so.

Once the question is posed, it doesn’t seem hard to design systems that incentivize people to bring potential mistakes to Open Philanthropy’s attention. Below, I consider two options, and I invite commenters to suggest more.

Red teaming

When investing substantial amounts in a new cause area, putting a large monetary bounty on red teams seems a particularly cheap intervention. For instance, one could offer a prize for the best red-teaming effort, and a larger bounty for any red-teaming output that leads to a change in plans. The recent Criticism Contest is a one-off example which could in theory be directed at Open Philanthropy.

Forecasting systems

Per this recent writeup, Open Philanthropy has predictions made and graded by each cause’s officer, who average about 1 prediction per $1 million moved. The focus of their prediction setup seems to be on learning from past predictions, rather than on using prediction setups to inform decisions before they are made. And it seems like staff tend to make predictions on individual grants, rather than on strategic decisions.

This echoes the findings of a previous report on Prediction Markets in the Corporate Setting: organizations are hesitant to use prediction setups in situations where this would change their most important decisions, or where this would lead to social friction. But this greatly reduces the usefulness of predictions. And in fact, we do know that Open Philanthropy's prediction setup failed to avoid the pitfalls outlined in this post.

Instead, I would suggest a forecasting system which is not restricted to Open Philanthropy staff, which has real-money bets, and which focuses on using predictions to change decisions rather than on learning after the fact. Such a system would ask things such as:

- whether a key belief underlying the favourable assessment of a grant will later be estimated to be false,

- whether Open Philanthropy will regret having made a given grant, or

- whether Open Philanthropy will regret some strategic decision, such as going into a cause area or setting up such-and-such a disbursement schedule.

These questions might be operationalized as:

- “In year [x], what probability will [some mechanism] assign to [some belief]?”

- “In year [x], what will Open Philanthropy’s best estimate of the value of grant [y] be?” + “In year [x], what will Open Philanthropy’s bar for funding be?”

- Or, simpler still, asking directly: “In year [x], will Open Philanthropy regret having made grant [y]?”

- “In year [x], will Open Philanthropy regret having made decision [y]?”
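A minimal sketch of how questions like these could be recorded and scored once they resolve. It assumes the Brier score, one common proper scoring rule, as the scoring method. The forecaster names, probabilities and resolution are hypothetical, and the [x]/[y] placeholders are left unfilled as in the text.

```python
from dataclasses import dataclass, field
from typing import Dict, Optional


@dataclass
class Question:
    text: str
    # forecaster name -> probability that the question resolves "yes"
    forecasts: Dict[str, float] = field(default_factory=dict)
    outcome: Optional[bool] = None  # stays None until the question resolves


def brier(probability: float, outcome: bool) -> float:
    """Brier score: squared error between forecast and the 0/1 outcome (lower is better)."""
    return (probability - (1.0 if outcome else 0.0)) ** 2


def score(question: Question) -> Dict[str, float]:
    """Score every forecast on a resolved question."""
    if question.outcome is None:
        raise ValueError("question has not resolved yet")
    return {name: brier(p, question.outcome) for name, p in question.forecasts.items()}


q = Question(
    text="In year [x], will Open Philanthropy regret having made grant [y]?",
    forecasts={"forecaster_1": 0.7, "forecaster_2": 0.2},
)
q.outcome = True  # hypothetical resolution
print(score(q))
```

Because the score is proper, forecasters do best by reporting their true beliefs. That matters if the forecasts are meant to change decisions before they are made.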

There would be a few challenges in creating such a forecasting system in a way that would be useful to Open Philanthropy:

- It would be difficult to organize this at scale.

- If the system were open to the public and Open Philanthropy acted on its forecasts, participants might find it easy and tempting to manipulate them.

- If structured as a prediction market, it might not be worth it to participate unless the market also yielded interest.

- If Open Philanthropy had enough bandwidth to create a forecasting system, it would also have been capable of monitoring the criminal justice reform situation more closely (?)

- It would be operationally or legally complex

- Prediction markets are mostly illegal in the US
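The point about interest can be made concrete with a rough calculation. Suppose, hypothetically, that a market on a question resolving in T years is priced at p, and a trader believes the true probability is q > p. Buying a contract that pays $1 on "yes" has an expected gross return of q/p over T years, so the expected annualized return is (q/p)^(1/T) - 1. If that is below what the money would earn elsewhere, correcting the price is not worth it.

```python
def annualized_expected_return(price, belief, years):
    """Expected annualized return from buying a $1 'yes' contract at `price`
    when you believe the true probability is `belief`.
    Ignores fees, risk aversion and any interest paid by the market itself."""
    expected_gross = belief / price
    return expected_gross ** (1.0 / years) - 1.0


# Hypothetical: a 5-percentage-point mispricing on a question resolving in 4 years.
r = annualized_expected_return(price=0.50, belief=0.55, years=4)
print(f"{r:.2%} per year")  # prints: 2.41% per year
```

A 10% expected edge spread over four years is only about 2.4% a year, which can easily lose to ordinary savings. Long-horizon questions about grants are exactly this kind.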

In 2018, the best way to structure this may have been as follows: Open Philanthropy decides on a probability and a metric of success and offers a trusted set of advisors to bet against the metric being satisfied. Note that the metric can be fuzzy, e.g., “Open Phil employee X will estimate this grant to have been worth it”.

With time, advisors who can predict how Open Philanthropy will change its mind would acquire more money and thus more independent influence in the world. This isn’t bullet-proof—for instance, advisors would have an incentive to make Open Philanthropy be wrong so that they can bet against them—but it’d be a good start.
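A minimal sketch of the betting arrangement. The contract mechanics here are our assumption and are not specified in the text. Open Philanthropy states a probability p that the success metric will be met, and sells advisors contracts that pay $1 if the metric is not met, priced at 1 - p. An advisor who thinks failure is more likely than 1 - p expects to profit. Over many bets, advisors who better anticipate how Open Philanthropy will change its mind accumulate more money.

```python
def advisor_profit(stake, op_probability, metric_met):
    """Realized profit for an advisor who bets `stake` dollars against the metric.

    The advisor buys contracts paying $1 if the metric is NOT met, priced at
    Open Philanthropy's implied probability of failure, 1 - op_probability.
    """
    price = 1.0 - op_probability
    contracts = stake / price
    payout = 0.0 if metric_met else contracts
    return payout - stake


def expected_advisor_profit(stake, op_probability, advisor_failure_belief):
    """Expected profit from the advisor's own point of view."""
    price = 1.0 - op_probability
    return stake * (advisor_failure_belief / price - 1.0)


# Hypothetical: Open Philanthropy says 80% chance the grant meets its metric;
# an advisor thinks failure is 40% likely and stakes $1,000.
print(expected_advisor_profit(1000, 0.8, 0.4))
print(advisor_profit(1000, 0.8, metric_met=False), advisor_profit(1000, 0.8, metric_met=True))
```

The expected profit is positive only when the advisor's failure probability exceeds 1 - p, so money flows toward advisors whose disagreement with Open Philanthropy turns out to be right.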

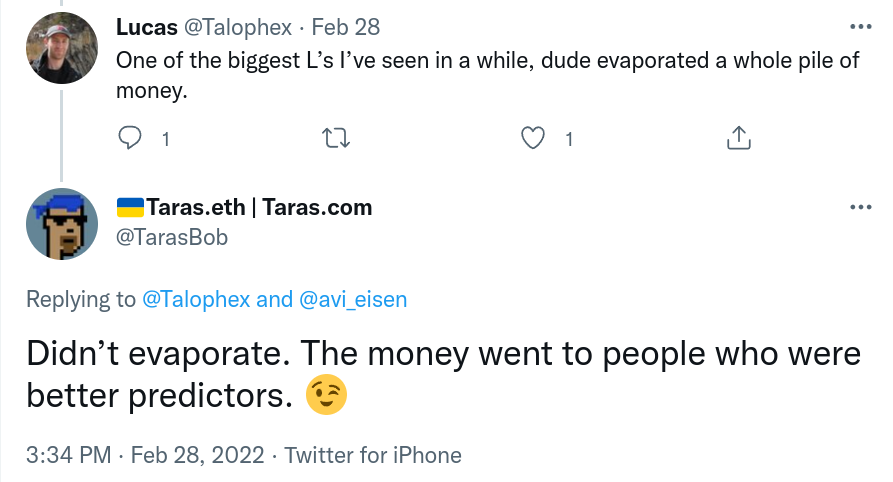

Note that the pathway to impact of making monetary bets wouldn’t only be to change Open Philanthropy’s decisions—which past analysis suggests would be difficult—but also to transfer wealth to altruistic actors that have better models of the world.

As of mid-2022, there still aren’t great forecasting systems that could deal with this problem. The closest might be Manifold Markets, which allows for the fast creation of different markets and for the transfer of funds to charities; the latter gives its tokens some monetary value. In any case, because setting up such a system might be laborious, one could instead offer to set it up only upon request.

I am also excited about a few projects that will provide possibly scalable prediction markets, which are set to launch in the next few months and could be used for that purpose. My forecasting newsletter will have announcements when these projects launch.

Conclusion

Open Philanthropy spent $200M on criminal justice reform, $100M of which came after their own estimates concluded that it wasn’t as effective as other global health and development interventions. I think Open Philanthropy could have done better.

And I am left confused about why Open Philanthropy did not in fact do better. Part of this may have been their unique approach of worldview diversification. Part of this may have been the political preferences of their funders. And part of this may have been their more optimistic Fermi estimates. I oscillate between thinking “I, a young grasshopper, do not understand”, and “this was clearly suboptimal from the beginning, and obviously so”.

Still, Open Philanthropy did end up parting ways with their criminal justice reform team. Perhaps forecasting systems or red teams would have accelerated their decision-making on this topic.

Acknowledgements

Thanks to Linch Zhang, Max Ra, Damon Pourtahmaseb-Sasi, Sam Nolan, Lawrence Newport, Eli Lifland, Gavin Leech, Alex Lawsen, Hauke Hillebrandt, Ozzie Gooen, Aaron Gertler, Joel Becker and others for their comments and suggestions.

This post is a project by the Quantified Uncertainty Research Institute (QURI). The language used to express the probability distributions throughout the post is Squiggle, which is being developed by QURI.

Appendix: Incorporating savings and the cost of recidivism.

Epistemic status: These models are extremely rough, and should be used with caution. A more trustworthy approach would use the share of the prison population by type of crime, the chance of recidivism for each crime, and the cost of new offenses by type. Nonetheless, the general approach might be as follows:

```squiggle
// First section: Same as before
initialPrisonPopulation = 1.8M to 2.5M // Data for the 2022 prison population has not yet been published, though this estimate is perhaps too wide.
reductionInPrisonPopulation = 0.25 to 0.75
badnessOfPrisonInQALYs = 0.2 to 6 // 80% as good as being alive to 5 times worse than living is good
accelerationInYears = 5 to 50
probabilityOfSuccess = 0.01 to 0.1 // 1% to 10%
estimateQALYs = initialPrisonPopulation * reductionInPrisonPopulation * badnessOfPrisonInQALYs * accelerationInYears * probabilityOfSuccess
cost = 2B to 20B
costEffectivenessPerQALY = cost / estimateQALYs

// New section: Costs and savings
// Note: this counts prisoner-years freed, not a head count.
numPrisonersFreed = initialPrisonPopulation * reductionInPrisonPopulation * accelerationInYears * probabilityOfSuccess
savedCosts = numPrisonersFreed * (14k to 70k) // yearly cost of incarcerating one person, in dollars
savedQALYsFromCosts = savedCosts / 50k // valuing a QALY at $50k
probabilityOfRecidivism = 0.3 to 0.7
numIncidentsUntilCaughtAgain = 1 to 10 // uncertain; look at what percentage of different types of crimes are reported and solved.
costPerIncident = 1k to 50k
lostCostsFromRecidivism = numPrisonersFreed * probabilityOfRecidivism * numIncidentsUntilCaughtAgain * costPerIncident
lostQALYsFromRecidivism = lostCostsFromRecidivism / 50k
costPerQALYIncludingCostsAndIncludingRecidivism = truncateLeft(cost / (estimateQALYs + savedQALYsFromCosts - lostQALYsFromRecidivism), 0)
// ^ truncateLeft is needed because the division is very numerically unstable (the denominator can approach zero or go negative).

// Display
// costPerQALYIncludingCostsAndIncludingRecidivism
// ^ increase the number of samples to 10000 and uncomment this line
```

A review from Open Philanthropy on the impacts of incarceration on crime concludes by saying that "The analysis performed here suggests that it is hard to argue from high-credibility evidence that at typical margins in the US today, decarceration would harm society". But “high-credibility evidence” does a lot of the heavy lifting: I have a pretty strong prior that incentives matter, and the evidence is weak. In particular, the evidence provided is a) mostly at the margin, and b) mostly based on short-term changes. So I’m slightly convinced that for small changes, the short-term effect (e.g., within one generation) is small. But if prison sentences are marginally reduced in length or in number, I still end up with the impression that crime would marginally rise in the longer term, as crimes become marginally more worth it. And if sentences are reduced by more than a marginal amount, common sense suggests that crime will increase, as observed in, for instance, San Francisco (note: or not; see this comment and/or this investigation).
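For readers who want to experiment with the appendix model outside Squiggle, here is a rough Python Monte Carlo translation. It treats `a to b` as a lognormal with 5th and 95th percentiles at a and b, which is how Squiggle interprets positive bounds. It mirrors `truncateLeft(..., 0)` by discarding draws with non-positive net QALYs. It also multiplies lost costs by `numIncidentsUntilCaughtAgain`, which the Squiggle model defines; that multiplication is an assumption. Helper names such as `to` and `sample_model` are hypothetical, and results will differ slightly from Squiggle's because of sampling noise.

```python
import math
import random
import statistics

# z-score of the 95th percentile (~1.645): a 90% interval spans mu +/- Z90 * sigma.
Z90 = statistics.NormalDist().inv_cdf(0.95)


def to(low, high, rng):
    """Draw from a lognormal with 5th/95th percentiles at low/high (like Squiggle's `low to high`)."""
    mu = (math.log(low) + math.log(high)) / 2
    sigma = (math.log(high) - math.log(low)) / (2 * Z90)
    return rng.lognormvariate(mu, sigma)


def sample_model(rng):
    """One joint draw; returns ($/QALY first section only, $/QALY with savings and recidivism)."""
    population = to(1.8e6, 2.5e6, rng)
    reduction = to(0.25, 0.75, rng)
    badness_qalys = to(0.2, 6, rng)
    acceleration_years = to(5, 50, rng)
    p_success = to(0.01, 0.1, rng)
    cost = to(2e9, 20e9, rng)

    prisoner_years = population * reduction * acceleration_years * p_success
    qalys = prisoner_years * badness_qalys
    saved_qalys = prisoner_years * to(14e3, 70e3, rng) / 50e3  # $50k per QALY
    # Assumption: lost costs scale with numIncidentsUntilCaughtAgain (1 to 10).
    lost_costs = (prisoner_years * to(0.3, 0.7, rng)
                  * to(1, 10, rng) * to(1e3, 50e3, rng))
    lost_qalys = lost_costs / 50e3
    return cost / qalys, cost / (qalys + saved_qalys - lost_qalys)


rng = random.Random(0)  # fixed seed so reruns give the same numbers
samples = [sample_model(rng) for _ in range(20_000)]
base = [first for first, _ in samples]
# Mirror truncateLeft(..., 0): keep only draws where net QALYs are positive.
net = [full for _, full in samples if full > 0]

print(f"median $/QALY, first section: {statistics.median(base):,.0f}")
print(f"median $/QALY, with savings and recidivism: {statistics.median(net):,.0f}")
print(f"share of draws with non-positive net QALYs: {1 - len(net) / len(samples):.1%}")
```

Sampling jointly per draw, rather than combining finished distributions, keeps each draw's terms consistent. That is what makes it possible to see how often recidivism costs outweigh the gains.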

Footnotes

[0]. This number is different from the $138.8M given on Open Philanthropy's website, which is probably not up to date with their grants database.

[1]. Note that this paragraph is written from my perspective doing a postmortem, rather than aiming to summarize what they thought at the time.

[2]. Note that restorative justice is normally suggested as a total replacement for punitive justice. But I think that pushing back punitive justice until it is incentive compatible and then applying restorative justice frameworks would also work, and would encounter less resistance.

[3]. Subjective estimate based on the US having many more guns, a second amendment, a different culture, and more of a drug problem.

[4]. Subjective estimate; I think it would take 1-2 orders of magnitude more investment than the already given $2B.

[5]. Note that QALY refers to a specific construct. This has led people to come up with extensions and new definitions, e.g., the WALY (wellbeing-adjusted), HALY (happiness-adjusted), DALY (disability-adjusted), and SALY (suffering-adjusted) life years. But throughout this post, I’m stretching that definition and mostly thinking about “QALYs as they should have been”.

[6]. Initially, Squiggle was making these calculations using Monte Carlo simulations. However, operations multiplying and dividing lognormals can be done analytically. I extracted the functionality to do so into Simple Squiggle, and then helped the main Squiggle branch compute the model analytically.

Simple Squiggle does validate the model as producing an interval of $1.3k to $290k. To check this, feed `1000000000 * (2 to 20) / ((1000000 * 1.5 to 2.5) * 0.25 to 0.75 * 0.2 to 6 * 5 to 50 * 0.01 to 0.1 * 0.5 to 1 )` into it.
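The analytic shortcut works because a lognormal is a normal in log space. Multiplying independent lognormals adds their log-means and their log-variances, and dividing subtracts the log-means while still adding the variances. A minimal Python sketch of the same check follows. It assumes `a to b` means a lognormal with a 90% interval from a to b, and that `to` binds tighter than `*`, so `1000000 * 1.5 to 2.5` reads as `1000000 * (1.5 to 2.5)`. The helpers `to`, `scale`, `times` and `divide` are hypothetical and are not Simple Squiggle's API.

```python
import math
import statistics

Z90 = statistics.NormalDist().inv_cdf(0.95)  # ~1.645


def to(low, high):
    """(mu, sigma) in log space for a lognormal with 5th/95th percentiles low/high."""
    return ((math.log(low) + math.log(high)) / 2,
            (math.log(high) - math.log(low)) / (2 * Z90))


def scale(k, d):
    """k * X for a constant k > 0 shifts mu by ln(k)."""
    return (d[0] + math.log(k), d[1])


def times(*dists):
    """Product of independent lognormals: mus add, variances add."""
    return (sum(mu for mu, _ in dists), math.sqrt(sum(s * s for _, s in dists)))


def divide(a, b):
    """Ratio of independent lognormals: mus subtract, variances add."""
    return (a[0] - b[0], math.hypot(a[1], b[1]))


numerator = scale(1e9, to(2, 20))
denominator = times(scale(1e6, to(1.5, 2.5)), to(0.25, 0.75), to(0.2, 6),
                    to(5, 50), to(0.01, 0.1), to(0.5, 1))
mu, sigma = divide(numerator, denominator)
low, high = math.exp(mu - Z90 * sigma), math.exp(mu + Z90 * sigma)
print(f"90% interval: ${low:,.0f} to ${high:,.0f}")
```

This should print an interval of roughly $1.3k to $290k, in line with the figure above, with no sampling noise.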

[7]. To elaborate on this: as far as I understand, to estimate the impact of incarceration, the report's best sources of evidence are randomized trials or natural experiments, e.g., harsher judges being randomly assigned, arbitrary threshold changes resulting from changes in guidelines or policy, etc. But these methods will tend to estimate short-term changes rather than longer-term (e.g., intergenerational) ones.

And I would give substantial weight to lighter sentencing in fact making it more worth it to commit crime. See Lagerros' Unconscious Economics.

This topic also has a very large number of researcher degrees of freedom (e.g., see p. 133 on lowballing the cost of murder on account of it being rare), which I am inclined to be suspicious of.

The report has a "devil's advocate case". But I think that it could have been much harsher, by incorporating hard-to-estimate long-term incentive changes.

[8]. Excerpt, with some light editing to exclude stutters:

With a lot of hedging and assumptions and guessing, I think that we can show that we were at around 250x, versus GiveWell, which is at more like 1000x [9]. So according to Open Philanthropy, if you're just like, what's the place where I can put my dollar that does the most good, you should give to GiveWell, I think.

That said, I would say well, first of all, if you feel that now's the time, now's a particular unique and important time to be working on this when there is a lot of traction, that puts a thumb on the scale more towards this. Deworming was very important 10 years ago, will be very important in 10 years. I think that's different than this issue, where you have these moments where we can actually make a lot of change, where a boost of cash is good.

And then second, that there is a lot that's not captured in that 250x.

And then third, that 250x is based on the assumption that a year of freedom from prison is worth $50k, and a year of freedom from jail is worth $100k. I think a jail bed gone empty for a year could be worth $250k, for example.

So, I'm telling you this, I don't say this to normal people, I have no idea what I'm talking about. But for EA folks, I think we're closer to 1000x than I've been able to show thus far. But if you want to be like "I'm helping the most that I can be certain about" yeah, for sure, go give your money to deworming, that's still probably true.

[9]. "1000x" (resp. 250x) refers to being 1000 times (resp. 250 times) more cost-effective than giving a dollar to someone with $50k of annual income; see here.
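Assuming logarithmic utility of income, these units can be spelled out. Log utility is consistent with the “roughly 100x ratio of high-income country income to GiveDirectly recipient income” in the quoted framework. The $500 income below is simply the figure implied by that 100x ratio, not a sourced number.

```latex
\begin{aligned}
u(c) &= \ln c \quad\Rightarrow\quad u'(c) = \frac{1}{c} \\
\frac{u'(c)}{u'(\$50{,}000)} &= \frac{\$50{,}000}{c}, \qquad c = \$500 \;\Rightarrow\; 100\text{x} \\
\frac{1000\text{x}}{250\text{x}} &= 4
\end{aligned}
```

On this scale, the 250x estimate quoted in footnote [8] is about a quarter as cost-effective as the roughly 1000x bar.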

[10]. As I was writing this, it featured campaigns calling for common carriers to drop Fox, and for Amazon and Twitch to carry out racial equity audits. But these have since cycled through.

[11]. It rose from $216k in 2016 to $415k in 2019. Honestly, I'm not even sure this is unjustified; he could probably be a very highly paid political consultant, and a high salary is in fact a strong signal that his funders think that he shouldn't be.

[12]. This excludes considerations around how much to donate each year.

[13]. A side effect of spinning off Just Impact with a very sizeable initial endowment is that the careers of the Open Philanthropy officers involved appear to continue progressing. Commenters pointed out that this might make it easier to hire talent. But coming from a forecasting background with some emphasis on proper scoring rules, this seems personally unappealing.

[14]. Technically, according to the shape of the values of their grants and the expected future shape, not just the values of the marginal grant.

I also considered suggesting a ruthless Hunger Games-style fight between the representatives of different cause areas, with the winner getting all the resources regardless of diminishing returns. But I concluded that this was likely not possible in practice, and also that the neartermists would probably be in better shape.

Ozzie Gooen @ 2022-06-16T22:46 (+135)

I previously gave a fair bit of feedback to this document. I wanted to quickly give my take on a few things.

Overall, I found the analysis interesting and useful. However, my overall take is somewhat different from Nuno's.

On OP:

- Aaron Gertler / OP were given a previous version of this that was less carefully worded. He recommended going forward with publishing it, for the sake of community discourse. This surprised me, and I’m really thankful.

- This analysis didn’t get me to change my mind much about Open Philanthropy. I thought fairly highly of them before and after, and expect that many others who have been around would think similarly. I think they’re a fair bit away from being an “idealized utilitarian agent” (in part because they explicitly claim not to be), but still much better than most charitable foundations and the like.

On this particular issue:

- My guess is that in the case of criminal justice reform, there were some key facts of the decision-making process that aren’t public and are unlikely to ever be public. It’s very common in large organizations for compromises to be made for various political or social reasons, for example. I’ve previously written a bit about similar things [here](https://twitter.com/ozziegooen/status/1456992079326978052).

- I think Nuno’s quantitative estimates were pretty interesting, but I wouldn’t be too surprised if other smart people would come up with numbers that are fairly different. For those reading this, I’d take the quantitative estimates with a lot of uncertainty.

- My guess is that a “highly intelligent idealized utilitarian agent” probably would have invested a fair bit less in criminal justice reform than OP did, if at all.

On evaluation, more broadly:

- I’ve found OP to be a very intimidating target of critique or evaluation, mainly just because of their position. Many of us are likely to want funding from them in the future (or from people that listen to them), so the risk of getting people at OP upset is very high. From a cost-benefit position, publicly critiquing OP (or other high-status EA organizations) seems pretty risky. This is obviously unfortunate; these groups are often appreciative of feedback, and of course, they are some of the most useful groups to give feedback to. (Sometimes prestigious EAs complain about getting too little feedback; I think this is one reason why.)

- I really would hate for this post to be taken as “ammunition” by people with agendas against OP. I’m fairly paranoid about this. That wasn’t the point of this piece at all. If future evaluations are mainly used as “ammunition” by “groups with grudges”, then that makes it far more hazardous and costly to publish them. If we want lots of great evaluations, we’ll need an environment that doesn’t weaponize them.

- Similarly to the above point, I prefer these sorts of analyses and the resulting discussions to be fairly dispassionate and rational. When dealing with significant charity decisions, I think it’s easy for some people to get emotional (“$200M could have saved X lives!”). But in the scheme of things, there are many decisions like this to make, and there will definitely be large mistakes made. Our main goals should be to learn quickly and continue to improve in our decisions going forward.

- One huge set of missing information is OP’s internal judgements of specific grants. I’m sure they’re very critical now of some groups they’ve previously funded (in all causes, not just criminal justice). However, it would likely be very awkward and unprofessional to actually release this information publicly.

- For many of the reasons mentioned above, I think we can rarely fully trust the public reasons for large actions by large institutions. When a CEO leaves to “spend more time with family”, there’s almost always another good explanation. I think OP is much better than most organizations at being honest, but I’d expect that they still face this issue to an extent. As such, I think we shouldn’t be too surprised when some decisions they make seem strange when evaluating them based on their given public explanations.

NunoSempere @ 2022-06-17T02:01 (+27)

I really appreciate this comment; it feels like it's drawing from models deeper than my own.

Agrippa @ 2022-06-17T03:30 (+12)

It's interesting that you say that given what is in my eyes a low amount of content in this comment. What is a model or model-extracted part that you liked in this comment?

NunoSempere @ 2022-06-17T04:13 (+4)

Some of my models feel like they have a mix of reasonable stuff and wanton speculation, and this comment sort of makes it a bit more clear which of the wanton speculation is more reasonable, and which is more on the deep end.

For instance:

in the case of criminal justice reform, there were some key facts of the decision-making process that aren’t public and are unlikely to ever be public

.

My guess is that a “highly intelligent idealized utilitarian agent” probably would have invested a fair bit less in criminal justice reform than OP did, if at all.

.

I think we can rarely fully trust the public reasons for large actions by large institutions. When a CEO leaves to “spend more time with family”, there’s almost always another good explanation. I think OP is much better than most organizations at being honest, but I’d expect that they still face this issue to an extent. As such, I think we shouldn’t be too surprised when some decisions they make seem strange when evaluating them based on their given public explanations.

.

Agrippa @ 2022-06-17T18:50 (+3)

Well this is still confusing to me

in the case of criminal justice reform, there were some key facts of the decision-making process that aren’t public and are unlikely to ever be public

Seems obviously true and in fact a continued premise of your post is that there are key facts absent that could explain or fail to explain one decision or the other. Is this particularly true in criminal justice reform? Compared to IDK orgs like AMF (which are hyper transparent by design) maybe, compared to stuff around AI risk I think not.

My guess is that a “highly intelligent idealized utilitarian agent” probably would have invested a fair bit less in criminal justice reform than OP did, if at all.

This is like the same thesis as your post, does not actually convey much information (it is what anyone I assume would have already guessed Ozzie thought).

I think we can rarely fully trust the public reasons for large actions by large institutions. When a CEO leaves to “spend more time with family”, there’s almost always another good explanation. I think OP is much better than most organizations at being honest, but I’d expect that they still face this issue to an extent. As such, I think we shouldn’t be too surprised when some decisions they make seem strange when evaluating them based on their given public explanations.

Yeah I mean, no kidding. But it's called Open Philanthropy. It's easy to imagine there exists a niche for a meta-charity with high transparency and visibility. It also seems clear that Open Philanthropy advertises as a fulfillment of this niche as much as possible and that donors do want this. So when their behavior seems strange in a cause area and the amount of transparency on it is very low, I think this is notable, even if the norm among orgs is to obfuscate internal phenomena. So I don't rlly endorse any normative takeaway from this point about how orgs usually obfuscate information.

Linch @ 2022-06-17T19:57 (+13)

Yeah I mean, no kidding. But it's called Open Philanthropy. It's easy to imagine there exists a niche for a meta-charity with high transparency and visibility. It also seems clear that Open Philanthropy advertises as a fulfillment of this niche as much as possible and that donors do want this.

I don't understand this point. Can you spell it out?

From my perspective, Open Phil's main legible contribution is a) identifying great donation opportunities, b) recommending Cari Tuna and Dustin Moskovitz to donate to such opportunities, and c) building up an apparatus to do so at scale.

Their donors are specific people, not hypothetical "donors who want transparency." I assume Open Phil is quite candid/transparent with their actual donors, though of course I don't have visibility here.

Ozzie Gooen @ 2022-06-19T15:06 (+13)

In fairness, the situation is a bit confusing. Open Phil came from GiveWell, which is meant for external donors. In comparison, as Linch mentioned, Open Phil mainly recommends donations just to Good Ventures (Cari Tuna and Dustin Moskovitz). My impression is that OP's main concern is directly making good grants, not recommending good grants to other funders. Therefore, a large amount of public research is not particularly crucial.

I think the name is probably not quite ideal for this purpose. I think of it more like "Highly Effective Philanthropy"; it seems their comparative advantage / unique attribute is much more their choices of focus and their talent pool, than it is their openness, at this point.

If there is frustration here, it seems like the frustration is a bit more "it would be nice if they could change their name to be more reflective of their current focus", than "they should change their work to reflect the previous title they chose".

Agrippa @ 2022-06-28T05:10 (+2)

Sorry I did not realize that OP doesn't solicit donations from non megadonors. I agree this recontextualizes how we should interpret transparency.

Given the lack of donor diversity, tho, I am confused why their cause areas would be so diverse.

Guy Raveh @ 2022-06-17T20:34 (+5)

How do you balance your high opinion of OpenPhil with the assumption that there's information that cannot be made public, and which tips the scale in important decisions? How can you judge OpenPhil's decisions in this case?

Ozzie Gooen @ 2022-06-19T15:11 (+5)

How do you balance your high opinion of OpenPhil with the assumption that there's information that cannot be made public, and which tips the scale in important decisions?

This is almost always the case for large organizations. All CEOs or government officials have a lot of private information that influences their decision making.

This private information does make it much more difficult for external evaluators to evaluate them. However, there's often still a lot that can be inferred. It's really important that these evaluators stay humble about their analysis in light of the fact that there's a lot of private information, but it's also important that evaluators still try, given the information available.

Aaron Gertler @ 2022-06-16T19:51 (+108)

(Writing from OP’s point of view here.)

We appreciate that Nuño reached out about an earlier draft of this piece and incorporated some of our feedback. Though we disagree with a number of his points, we welcome constructive criticism of our work and hope to see more of it.

We’ve left a few comments below.

*****

The importance of managed exits

We deliberately chose to spin off our CJR grantmaking in a careful, managed way. As a funder, we want to commit to the areas we enter and avoid sudden exits. This approach:

- Helps grantees feel comfortable starting and scaling projects. We’ve seen grantees turn down increased funding because they were reluctant to invest in major initiatives; they were concerned that we might suddenly change our priorities and force them to downsize (firing staff, ending projects half-finished, etc.)

- Helps us hire excellent program officers. The people we ask to lead our grantmaking often have many other good options. We don’t want a promising candidate to worry that they’ll suddenly lose their job if we stop supporting the program they work on.

Exiting a program requires balancing:

- the cost of additional below-the-bar spending during a slow exit;

- the risks from a faster exit (difficulty accessing grant opportunities or hiring the best program officers, as well as damage to the field itself).

We launched the CJR program early in our history. At the time, we knew that committing to causes was important, but we had no experience in setting expectations about a program’s longevity or what an exit might look like. When we decided to spin off CJR, we wanted to do so in a way that inspired trust from future grantees and program staff. In the end, we struck what felt to us like an appropriate balance between “slow” and “fast”.[1]

It’s plausible that we could have achieved this trust by investing less money and more time/energy. But at the time, we were struggling to scale our organizational capacity to match our available funding; we decided that other capacity-strained projects were a priority.

*****

Open Phil is not a unitary agent

Running an organization involves making compromises between people with different points of view — especially in the case of Open Phil, which explicitly hires people with different worldviews to work on different causes. This is especially true for cases where an earlier decision has created potential implicit commitments that affect a later decision.

I would avoid trying to model Open Phil (or other organizations) as unitary agents whose actions will match a single utility function. The way we handle one situation may not carry over to other situations.

If this dynamic leads you to put less “trust” in our decisions, we think that’s a good thing! We try to make good decisions and often explain our thinking, but we don’t think others should be assuming that all of our decisions are “correct” (or would match the decisions you would make if you had access to all of the relevant info).

*****

“By working in this area, one could gain leverage, for instance [...] leverage over the grantmaking in the area, by seeding Just Impact.”

Indeed, part of our reason for seeding Just Impact was that it could go on to raise a lot more money, resulting in a lot of counterfactual impact. That kind of leverage can take funding from below the bar to above it.

*****

Open Philanthropy might gain experience in grantmaking, learn information, and acquire expertise that would be valuable for other types of giving. In the case of criminal justice reform, I would guess that the specific cause officers—rather than Open Philanthropy as an institution—would gain most of the information. I would also guess that the lessons learnt haven’t generalized to, for instance, pandemic prevention funding advocacy.

This doesn’t accord with our experience. Over six years of working closely with Chloe, we learned a lot about effective funding in policy and advocacy in ways we do expect to accrue to other focus areas. She was also a major factor when we updated our grantmaking process to emphasize the importance of an organization's leadership for the success of a grant.

It’s possible that we would have learned these lessons otherwise, but given that Chloe was our first program officer, a disproportionate amount of organizational learning came from our early time working with her, and those experiences have informed our practices.


Note that when we launched our programs in South Asian Air Quality and Global Aid Policy, we explicitly stated that we "expect to work in [these areas] for at least five years". This decision comes from the experience we’ve developed around setting expectations.

NunoSempere @ 2022-06-16T20:06 (+35)

The importance of managed exits

So one of the things I'm still confused about is the two spikes in funding, one in 2019 and the other in 2021, both of which can be interpreted as parting grants.

So OP gave half of the funding to criminal justice reform ($100M out of $200M) after writing "GiveWell’s Top Charities Are (Increasingly) Hard to Beat", and this makes me less likely to think about this in terms of exit grants and more in terms of, idk, some sort of nefariousness/shenanigans.

Aaron Gertler @ 2022-06-17T10:34 (+42)

The 2019 'spike' you highlight doesn't represent higher overall spending — it's a quirk of how we record grants on the website.

Each program officer has an annual grantmaking "budget", which rolls over into the next year if it goes unspent. The CJR budget was a consistent ~$25 million/year from 2017 through 2021. If you subtract the Just Impact spin-out at the end of 2021, you'll see that the total grantmaking over that period matches the total budget.

So why does published grantmaking look higher in 2019?

The reason is that our published grants generally "frontload" payment amounts — if we're making three payments of $3 million in each of 2019, 2020, and 2021, that will appear as a $9 million grant published in 2019.

In the second half of 2019, the CJR team made a number of large, multi-year grants — but payments in future years still came out of their budget for those years, which is why the published totals look lower in 2020 and 2021 (minus Just Impact). Spending against the CJR budget in 2019 was $24 million — slightly under budget.

So the actual picture here is "CJR's budget was consistent from 2017-2021 until the spin-out", not "CJR's budget spiked in the second half of 2019".
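To make the accounting point concrete, here is a minimal Python sketch, assuming each grant is recorded as a mapping from payment year to amount (in $M) and is published in the year of its first payment. Apart from the three-payments-of-$3M example in the comment above, the grant figures are hypothetical, and the function names are illustrative, not anything Open Phil actually uses. It shows how frontloaded publishing concentrates multi-year grants in the publication year, while spending against the budget is spread across the years the payments are made.

```python
from collections import defaultdict

# Hypothetical grant records (NOT Open Phil's real data).
# Each grant maps payment year -> payment amount in $M.
grants = [
    {2019: 3, 2020: 3, 2021: 3},  # the three-payment example from the comment
    {2019: 5},                    # a hypothetical single-year grant
]

def published_totals(grants):
    """Frontloaded view: a grant's full amount counts in its first payment year."""
    totals = defaultdict(float)
    for payments in grants:
        totals[min(payments)] += sum(payments.values())
    return dict(totals)

def spending_by_year(grants):
    """Budget view: each payment counts in the year it is actually paid out."""
    totals = defaultdict(float)
    for payments in grants:
        for year, amount in payments.items():
            totals[year] += amount
    return dict(totals)

print(published_totals(grants))  # {2019: 14.0}
print(spending_by_year(grants))  # {2019: 8.0, 2020: 3.0, 2021: 3.0}
```

Both views sum to the same total; only the year-by-year attribution differs, which is the distinction drawn above between published grant totals and spending against the CJR budget.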

NunoSempere @ 2022-06-17T14:39 (+22)

So this doesn't really dissolve my curiosity.

In dialog form, because otherwise this would have been a really long paragraph:

NS: I think that the spike in funding in 2019, right after the "GiveWell’s Top Charities Are (Increasingly) Hard to Beat" blog post, is suspicious.

AG: Ah, but it's not higher spending. Because of our accounting practices, it's rather an increase in future funding commitments. So your chart isn't about "spending"; it's about "locked-in spending commitments". And in fact, in the next few years, spending-as-recorded goes down because the locked-in funding is spent.

NS: But why the increase in locked-in funding commitments in 2019? It still seems suspicious, even if marginally less so.

AG: Because we frontload our grants; many of the grants in 2019 were for grantees to use for 2-3 years.

NS: I don't buy that. I know that many of the grants in 2019 were multi-year (frontloaded), but previous grants in the space were not as frontloaded, or not as frontloaded in that volume. So I think there is still something I'm curious about, even if the mechanistic aspect is more clear to me now.

AG: ¯\_(ツ)_/¯ (I don't know what you would say here.)

AppliedDivinityStudies @ 2022-06-17T03:53 (+34)

If this dynamic leads you to put less “trust” in our decisions, I think that’s a good thing!

I will push back a bit on this as well. I think it's very healthy for the community to be skeptical of Open Philanthropy's reasoning ability, and to be vigilant about trying to point out errors.

On the other hand, I don't think it's great if we have a dynamic where the community is skeptical of Open Philanthropy's intentions. Basically, there's a big difference between "OP made a mistake because they over/underrated X" and "OP made a mistake because they were politically or PR motivated and intentionally made sub-optimal grants."

Linch @ 2022-06-18T20:27 (+22)

Basically, there's a big difference between "OP made a mistake because they over/underrated X" and "OP made a mistake because they were politically or PR motivated and intentionally made sub-optimal grants."

The synthesis position might be something like "some subset of OP made a mistake because they were subconsciously politically or PR motivated and unintentionally made sub-optimal grants."

I think this is a reasonable candidate hypothesis, and should not be that much of a surprise, all things considered. We're all human.

MichaelDickens @ 2022-08-28T04:22 (+5)

FWIW I would be surprised to see you, Linch, make a suboptimal grant out of PR motivation. I think Open Phil is capable of being in a place where it can avoid making noticeably-suboptimal grants due to bad subconscious motivations.

orellanin @ 2022-06-18T01:04 (+12)

I agree that there's a difference in the social dynamics of being vigilant about mistakes vs being vigilant about intentions. I agree with your point in the sense that worlds in which the community is skeptical of OP's intentions tend to have worse social dynamics than worlds in which it isn't.

But you seem to be implying something beyond that; that people should be less skeptical of OP's intentions given the evidence we see right now, and/or that people should be more hesitant to express that skepticism. Am I understanding you correctly, and what's your reasoning here?

My intuition is that a norm against expressing skepticism of orgs' intentions wouldn't usefully reduce community skepticism, because community members can just see this norm and infer that there's probably some private skepticism (just like I update when reading your comment and the tone of the rest of the thread). And without open communication, community members' level of skepticism will be noisier (for example, Nuño starting out much more trusting and deferential than the EA average before he started looking into this).

Guy Raveh @ 2022-06-17T19:58 (+2)

I agree with you, but unfortunately I think it's inevitable that people doubt the intentions of any privately-managed organisation. This is perhaps an argument for more democratic funding (though one could counter-argue about the motivations of democratically chosen representatives).

brb243 @ 2022-06-16T20:57 (+10)

At the time, we knew that committing to causes was important

Did you also think that breadth of cause exploration is important?

I think [the commitment to causes and hiring expert staff] model makes a great deal of sense ... Yet I’m not convinced that this model is the right one for us. Depth comes at the price of breadth.

It seems that you have been conducting shallow and medium-depth investigations since late 2014. So, if there were some suboptimal commitments early on, these should have been revealed by alternatives that the staff would probably be excited about, since I assume that everyone aims for high impact, given their specific expertise.

So, it would depend on the nature of the commitments that earlier decisions created: if these were to create high impact within one's expertise, then that should be great, even if the expertise is US criminal justice reform, specifically.[1] If multiple such focused individuals exchange perspectives, a set of complementary[2] interventions that covers a wide cause landscape emerges.

If this dynamic leads you to put less “trust” in our decisions, I think that’s a good thing!

If you think that not trusting you is good, because you are liable to certain suboptimal mechanisms established early on, then are you acknowledging that your recommendations are suboptimal? Where would you suggest that impact-focused donors in EA look?

Indeed, part of our reason for seeding Just Impact was that it could go on to raise a lot more money, resulting in a lot of counterfactual impact. That kind of leverage can take funding from below the bar to above it.

Are you sure that the counterfactual impact is positive, or more positive without your "direct oversight"? For example, it could be that Just Impact donors would otherwise have donated to crime prevention abroad,[3] if another organization had influenced them before they learned about Just Impact, which solicits a commitment. Or, it could be that US CJR donors would not have donated to other effective causes had they not first been introduced to effective giving by Just Impact. Further, do you think that Just Impact can take less advantage of communication with experts in other OPP cause areas (which could create important leverage) when it is an independent organization?

Ozzie Gooen @ 2022-06-16T21:53 (+27)

I appreciate the response here, but would flag that this came off, to me, as a bit mean-spirited.

One specific part:

> If you think that not trusting you is good, because you are liable to certain suboptimal mechanisms established early on, then are you acknowledging that your recommendations are suboptimal? Where would you suggest that impact-focused donors in EA look?

1. He said "less trust", not "not trust at all". I took that to mean something like, "don't place absolute reverence in our public messaging."

2. I'm sure anyone reasonable would acknowledge that their recommendations are less than optimal.

3. "Where would you suggest that impact-focused donors in EA look" -> There's not one true source that you should only pay attention to. You should probably look at a diversity of sources, including OP's work.

brb243 @ 2022-06-16T22:20 (+9)

"less trust", not "not trust at all". I took that to mean something like, "don't place absolute reverence in our public messaging." ... look at a diversity of sources, including OP's work.

That makes sense; that's probably the solution.

IanDavidMoss @ 2022-06-16T18:00 (+63)

One context note that doesn't seem to be reflected here is that in 2014, there was a lot of optimism for a bipartisan political compromise on criminal justice reform in the US. The Koch network of charities and advocacy groups had, to some people's surprise, begun advocating for it in their conservative-libertarian circles, which in turn motivated Republican participation in negotiations on Capitol Hill. My recollection is that Open Phil's bet on criminal justice reform funding was not just a "bet on Chloe," but also a bet on tractability: i.e., that a relatively cheap investment could yield a big win on policy because the political conditions were such that only a small nudge might be needed. This seems to have been an important miscalculation in retrospect, as (unless I missed something) a limited-scope compromise bill took until the end of 2018 to get passed. I'm not aware of any other significant criminal justice legislation that has passed in that time period. [Edit: while this is true at the national level, arguably there has been a lot of progress on CJR at state and local levels since 2014, much of which could probably be traced back to advocacy by groups like those Open Phil funded.]

This information strongly supports the "Leverage Hypothesis," which was cited by Open Phil staff themselves, so I think it ought to be weighted pretty strongly in your updates.

NunoSempere @ 2022-06-16T18:06 (+41)

So this is good context. What are your thoughts on why they kept donating?

IanDavidMoss @ 2022-06-16T19:48 (+24)

I don't have any inside info here, but based on my work with other organizations, I think each of your first three hypotheses is plausible, either alone or in combination.

Another consideration I would mention is that it's just really hard to judge how to interpret advocacy failures over a short time horizon. Given that your first try failed, does that mean the situation is hopeless and you should stop throwing good money after bad? Or does it mean that you meaningfully moved the needle on people's opinions and the next campaign is now likelier to succeed? It's not hard for me to imagine that in 2016-17 or so, having seen some intermediate successes that didn't ultimately result in legislation signed into law, OP staff might have held out genuine hope that victory was still close at hand. Or after the First Step Act was passed in 2018 and signed into law by Trump, maybe they thought they could convert Trump into a more consistent champion on the issue and bring the GOP along with him. Even as late as 2020, when the George Floyd protests broke out, Chloe's grantmaking recommendations ended up being circulated widely and presumably moved a lot of money; I could imagine there was hope at that time for transformative policy potential. Knowing when to walk away from sustained but not-yet-successful efforts at achieving low-probability, high-impact results, especially when previous attempts have unknown correlations with the probability of future success, is intrinsically a very difficult estimation problem. (Indeed, if someone at QURI could develop a general solution to this, I think that would be a very useful contribution to the discourse!)

CW @ 2022-06-16T19:44 (+24)