Famine deaths due to the climatic effects of nuclear war

By Vasco Grilo🔸 @ 2023-10-14T12:05 (+40)

The views expressed here are my own, not those of Alliance to Feed the Earth in Disasters (ALLFED), for which I work as a contractor. Please assume this is always the case unless stated otherwise.

Summary

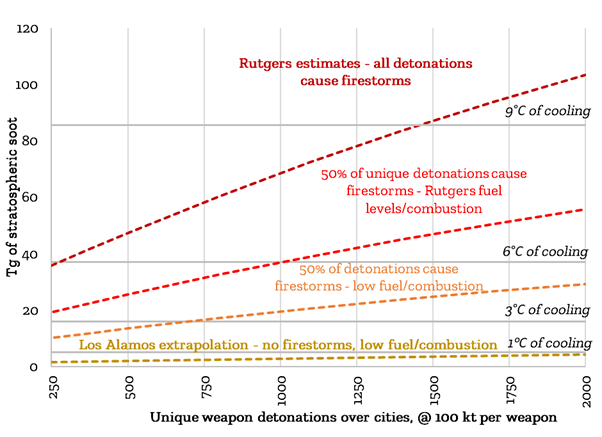

- The initial motivation for my analysis was combining the results of 2 views about nuclear winter:

- One linked to Alan Robock (Rutgers University), Michael Mills (National Center for Atmospheric Research), and Brian Toon (University of Colorado), which is illustrated in Xia 2022. “We estimate more than 2 billion people could die from nuclear war between India and Pakistan, and more than 5 billion could die from a war between the United States and Russia”.

- Another linked to Jon Reisner (Los Alamos National Laboratory), which is illustrated in Reisner 2018. “Our analysis demonstrates that the probability of significant global cooling from a limited exchange scenario as envisioned in previous studies is highly unlikely, a conclusion supported by examination of natural analogs, such as large forest fires and volcanic eruptions”.

- I estimate 12.9 M expected famine deaths due to the climatic effects of nuclear war before 2050, multiplying:

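- 3.30 % probability of large nuclear war before 2050, multiplying a 32 % probability of at least one offensive nuclear detonation before 2050 by a 10.3 % probability of escalation into a large nuclear war given at least one such detonation.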

- 392 M famine deaths due to the climatic effects of a large nuclear war, multiplying:

- 4.43 % famine death rate due to the climatic effects for 22.1 Tg (22.1 trillion grams, i.e. million tonnes[1]) of soot injected into the stratosphere in a large nuclear war, multiplying:

- 2.09 k offensive nuclear detonations in a large nuclear war.

- 21.5 % countervalue nuclear detonations.

- 0.0491 Tg per countervalue nuclear detonation, multiplying:

- 189 kt of yield per countervalue nuclear detonation.

- 2.60*10^-4 Tg/kt of soot injected into the stratosphere per countervalue yield.

- 8.86 G people (8.86 billion[2]).

- My expected annual famine deaths due to the climatic effects of nuclear war before 2050 are 496 k, and my 5th and 95th percentile are 0 and 30.9 M. My 95th percentile is 62.3 times my best guess, which means there is lots of uncertainty. Bear in mind my estimates only refer to the famine deaths due to the climatic effects. I exclude famine deaths resulting directly or indirectly from infrastructure destruction, and heat mortality.

- I obtained my best guess for the soot injected into the stratosphere per countervalue yield giving the same weight to results I inferred from Reisner’s and Toon’s views, but they differ substantially. If I attributed all weight to the result I deduced from Reisner’s (Toon’s) view, my estimates for the expected mortality would become 0.121 (8.27) times as large. In other words, my best guess is hundreds of millions of famine deaths due to the climatic effects, but tens of millions putting all weight in the result I deduced from Reisner’s view, and billions putting all weight in the one I deduced from Toon’s view. Further research would be helpful to figure out which view should be weighted more heavily.

- My expected famine deaths due to the climatic effects of a large nuclear war are 17.7 M/Tg (per soot injected into the stratosphere) and 0.992 M/Mt (per total yield). These are 32.3 % and 7.81 % of the 54.8 M/Tg and 12.7 M/Mt of Xia 2022, which I deem too pessimistic.

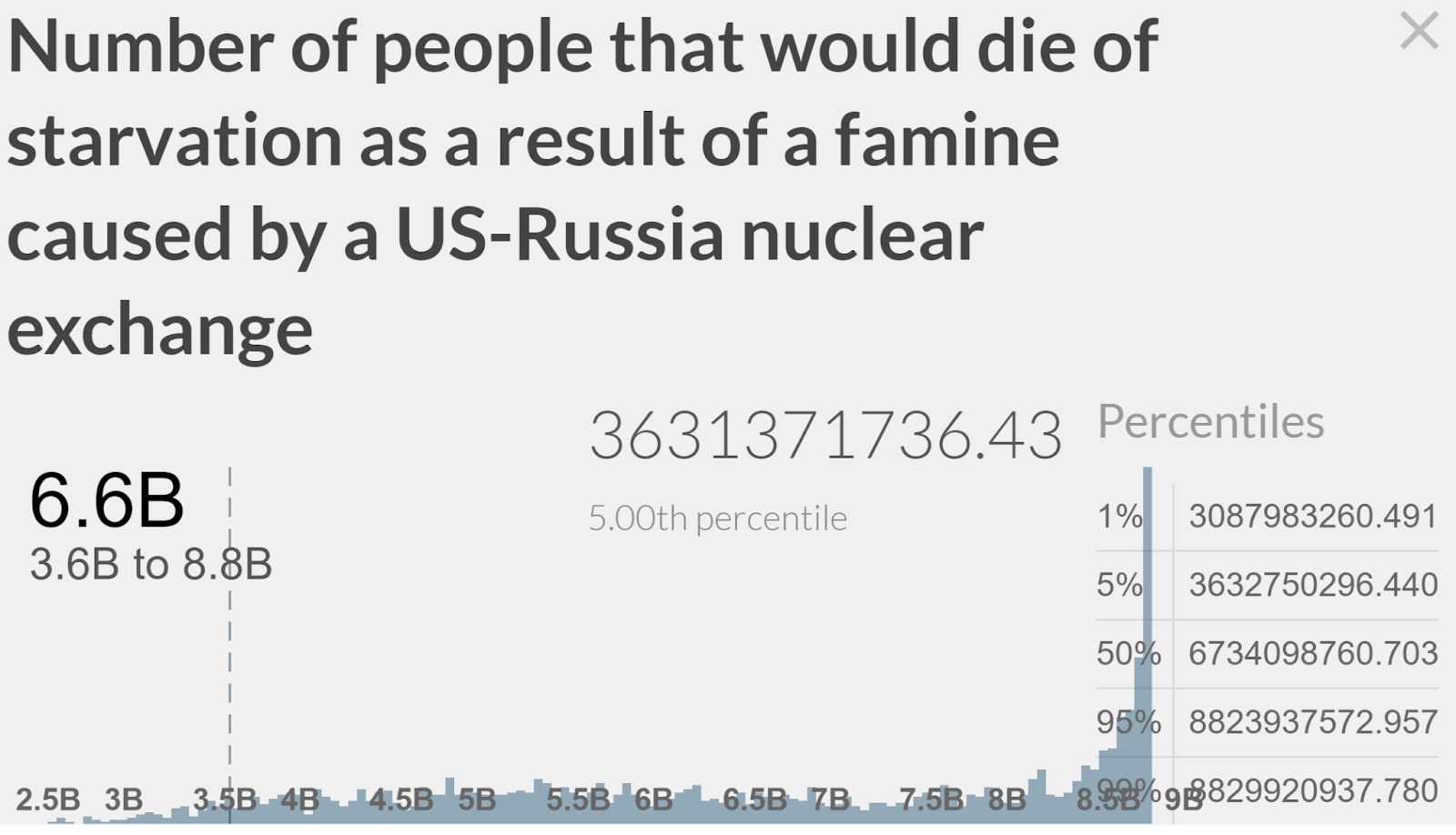

- My estimate of 12.9 M expected famine deaths due to the climatic effects of nuclear war before 2050 is 2.05 % of the 630 M implied by Luisa Rodriguez’s results for nuclear exchanges between the United States and Russia, so I would say they are significantly pessimistic[3]. I am also surprised by Luisa’s distribution for the famine death rate due to the climatic effects given at least one offensive nuclear detonation in the United States or Russia. Her 5th and 95th percentiles are 41.0 % and 99.6 %, which I think are too close together and too high.

- I believe Mike underweighted Reisner’s view.

- I guess the famine deaths due to the climatic effects of a large nuclear war would be 1.16 times the direct deaths. Putting all the weight in the soot injected into the stratosphere per countervalue yield I inferred from Reisner’s (Toon’s) view, the famine deaths due to the climatic effects would be 0.140 (9.59) times the direct deaths. In other words, my best guess is that famine deaths due to the climatic effects are of the same order of magnitude as the direct deaths, but 1 order of magnitude lower putting all weight in the result I inferred from Reisner’s view, and 1 higher putting all weight in the one I inferred from Toon’s view.

- Combining my mortality estimates with data from Denkenberger 2016, I estimate the expected cost-effectiveness of planning, research and development of resilient food solutions is 28.7 $/life, which is 2 orders of magnitude more cost-effective than GiveWell’s top charities. Nevertheless, I suspect the values from Denkenberger 2016 are very optimistic, such that I am greatly overestimating the cost-effectiveness. I guess the true cost-effectiveness is of the same order of magnitude as that of GiveWell’s top charities, although this adjustment is not resilient. Furthermore, I have argued corporate campaigns for chicken welfare are 3 orders of magnitude more cost-effective than GiveWell’s top charities.

- I do not think activities related to resilient food solutions are cost-effective at increasing the longterm value of the future. By not cost-effective, I mostly mean I do not see those activities being competitive with the best opportunities to decrease AI risk, and improve biosecurity and pandemic preparedness at the margin, like Long-Term Future Fund’s marginal grants.

- It is often hard to find interventions which are robustly beneficial. In my mind, decreasing the famine deaths due to the climatic effects of nuclear war is no exception, and I think it is unclear whether that is beneficial or harmful from both a nearterm and longterm perspective (although I strongly oppose killing people, including via nuclear war).

- Feel free to check my personal recommendations for funders.

Introduction

I have been assuming the importance of the climatic effects of nuclear war is roughly in agreement with Denkenberger 2018 and Luisa’s post, but I had not looked much into the relevant literature myself. I got interested in doing so following some of the discussion in my global warming post, and Bean’s and Mike’s analyses.

The initial motivation for my analysis was combining the results of 2 views about nuclear winter:

- One linked to Alan Robock (Rutgers University), Michael Mills (National Center for Atmospheric Research), and Brian Toon (University of Colorado), which is illustrated in Xia 2022. “We estimate more than 2 billion people could die from nuclear war between India and Pakistan, and more than 5 billion could die from a war between the United States and Russia”.

- Another linked to Jon Reisner (Los Alamos National Laboratory), which is illustrated in Reisner 2018. “Our analysis demonstrates that the probability of significant global cooling from a limited exchange scenario as envisioned in previous studies is highly unlikely, a conclusion supported by examination of natural analogs, such as large forest fires and volcanic eruptions”.

Denkenberger 2018 did not integrate the results of Reisner 2018, which was published afterwards[4]. Luisa says:

As a final point, I’d like to emphasize that the nuclear winter is quite controversial (for example, see: Singer, 1985; Seitz, 2011; Robock, 2011; Coupe et al., 2019; Reisner et al., 2019; Pausata et al., 2016; Reisner et al., 2018; Also see the summary of the nuclear winter controversy in Wikipedia’s article on nuclear winter). Critics argue that the parameters fed into the climate models (like, how much smoke would be generated by a given exchange) as well as the assumptions in the climate models themselves (for example, the way clouds would behave) are suspect, and may have been biased by the researchers’ political motivations (for example, see: Singer, 1985; Seitz, 2011; Reisner et al., 2019; Pausata et al., 2016; Reisner et al., 2018). I take these criticisms very seriously — and believe we should probably be skeptical of this body of research as a result. For the purposes of this estimation, I assume that the nuclear winter research comes to the right conclusion. However, if we discounted the expected harm caused by US-Russia nuclear war for the fact that the nuclear winter hypothesis is somewhat suspect, the expected harm could shrink substantially.

I also felt like Bean’s analysis underweighted Rutgers’ view, and Michael Hinge’s underweighted Los Alamos’ (see my comments).

My goal is estimating the famine deaths due to the climatic effects of nuclear war, not all famine deaths, nor heat mortality (related to hot or cold exposure). I also:

- Do a very shallow analysis of the cost-effectiveness of activities related to resilient food solutions.

- Discuss potential negative effects of decreasing famine deaths.

Famine deaths due to the climatic effects

Overview

I arrived at 12.9 M (= 0.0330*392*10^6) famine deaths due to the climatic effects of nuclear war before 2050, multiplying:

- 3.30 % probability of a large nuclear war before 2050.

- 392 M famine deaths due to the climatic effects of a large nuclear war, which I determined by multiplying:

- Famine death rate due to the climatic effects of a large nuclear war, which I obtained from the soot injected into the stratosphere in a large nuclear war[5]. I calculated this from the product between:

- Offensive nuclear detonations in a large nuclear war.

- Countervalue nuclear detonations as a fraction of the total.

- Soot injected into the stratosphere per countervalue nuclear detonation.

- Global population.

Unlike Denkenberger 2018 and Luisa, I did not run a Monte Carlo simulation modelling all non-probabilistic variables as distributions, but I do not think that would meaningfully move my estimate of the expected deaths:

- Assuming all 4 factors describing the soot injected into the stratosphere before 2050 given at least one offensive nuclear detonation before 2050 are independent, as I would do for simplicity anyway in a Monte Carlo simulation, the product between their expected values would be the expected product (E(X Y) = E(X) E(Y) if X and Y are independent).

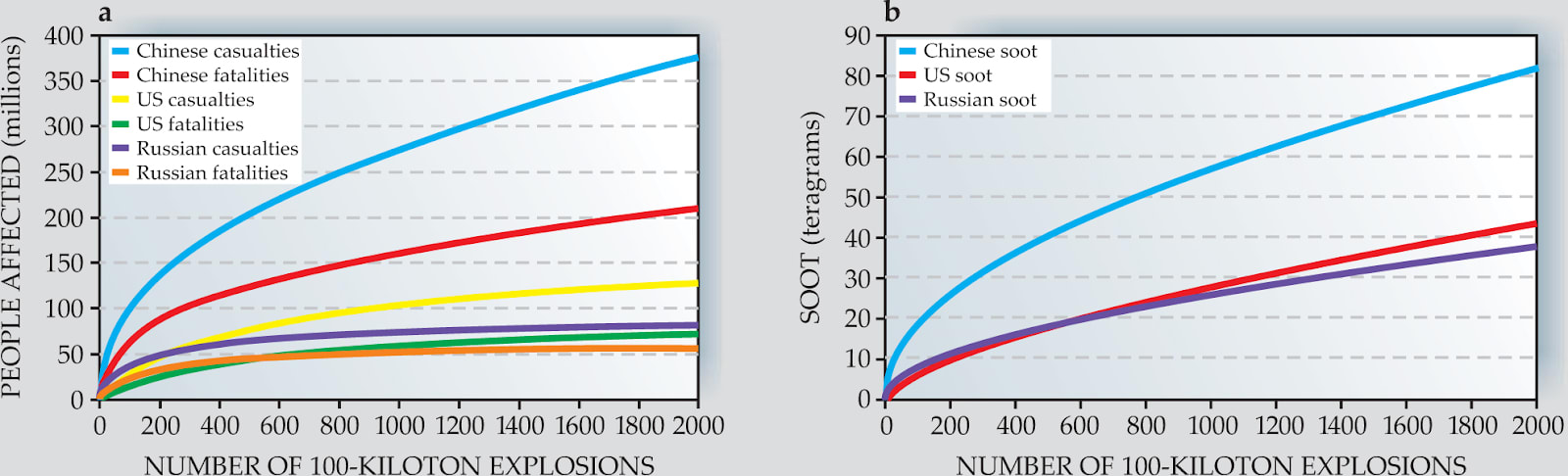

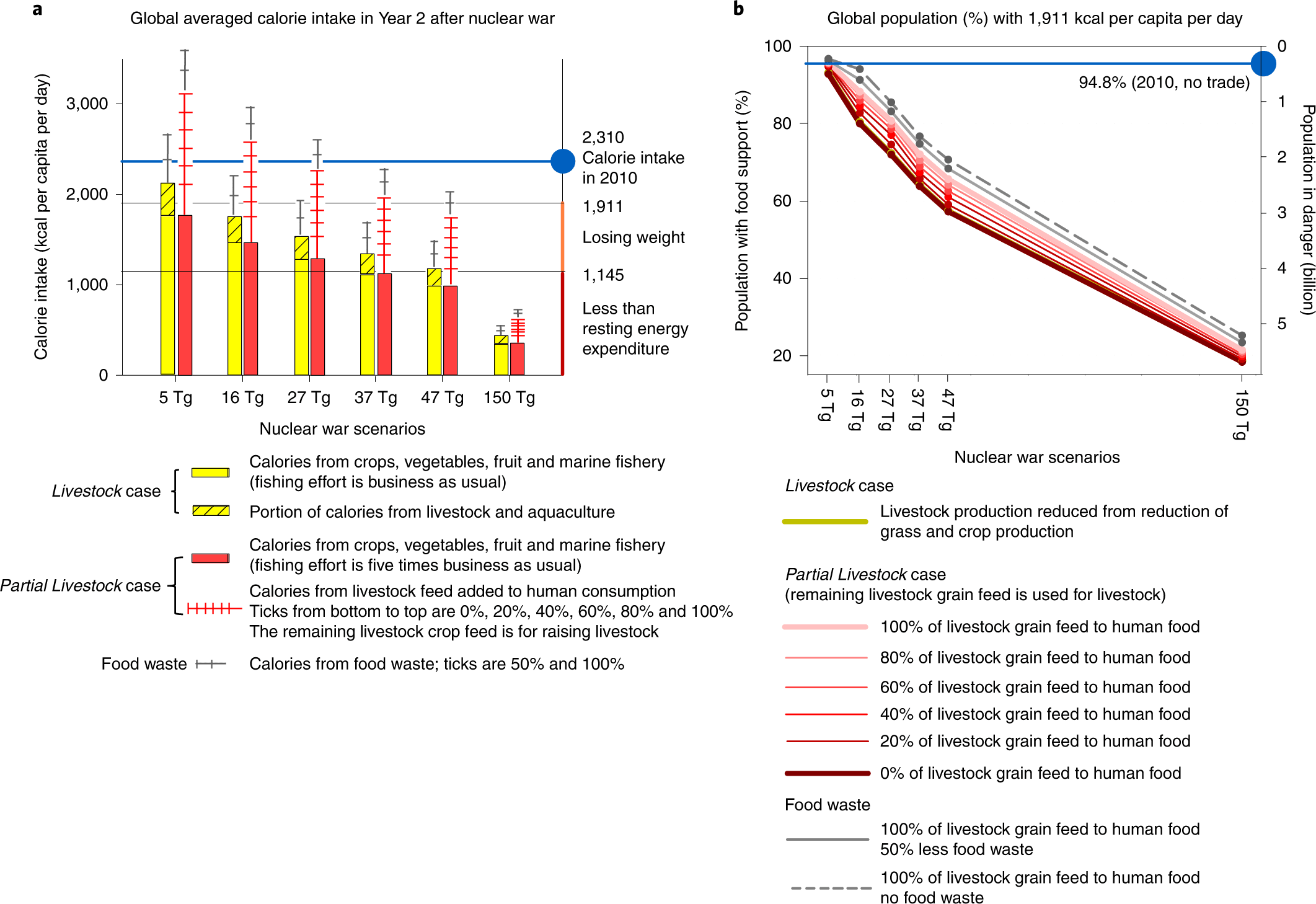

- From Fig. 5b of Xia 2022, the number of people without food in year 2 is roughly proportional to the soot injected into the stratosphere[6].

- To be precise, from the data on Table 1, the linear regression with null intercept of the former on the latter has a coefficient of determination (R^2) of 96.8 %.

- Therefore, since the mean is a linear operator (E(a X + b) = a E(X) + b), one can obtain the expected number of people without food in year 2 from the expected soot injected into the stratosphere.

- Christian Ruhl argues for the non-linearity of nuclear war effects. I agree, as I guess starvation deaths increase logistically with the soot injected into the stratosphere, but I believe injections of soot into the stratosphere for large nuclear wars fall in its roughly linear part.

- I defined such wars as having at least 1.07 k offensive nuclear detonations, and Figure 2b of Toon 2008, presented below, suggests emitted soot increases linearly with the number of detonations in that case.

- If the linear part of the logistic curve starts sooner/later, the starvation resulting from small nuclear wars will tend to be larger/smaller, and therefore I would be underestimating/overestimating expected mortality.

- My point estimates correspond to the expected values, not medians, of the variables to which the result of interest is proportional.
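The headline figure of this overview can be reproduced as a plain product of point estimates. The sketch below uses only numbers quoted in the post; the variable names are mine, and dividing by the 26 years to 2050 to recover the annual figure is my assumption about how the 496 k in the summary was obtained.

```python
# Point estimates quoted in the post (variable names are illustrative).
p_detonation = 0.32         # P(at least one offensive nuclear detonation before 2050)
p_escalation = 0.103        # P(large nuclear war | at least one detonation)
famine_death_rate = 0.0443  # famine death rate for 22.1 Tg of soot
population = 8.86e9         # global population

# Expected values multiply because the factors are treated as independent.
p_large_war = p_detonation * p_escalation
deaths_given_large_war = famine_death_rate * population
expected_deaths = p_large_war * deaths_given_large_war

# Assumption: the annual figure is the total spread evenly over 2024-2050.
expected_annual_deaths = expected_deaths / (2050 - 2024)

print(f"P(large nuclear war before 2050): {p_large_war:.2%}")
print(f"Famine deaths given a large war: {deaths_given_large_war / 1e6:.0f} M")
print(f"Expected famine deaths before 2050: {expected_deaths / 1e6:.1f} M")
print(f"Expected annual famine deaths: {expected_annual_deaths / 1e3:.0f} k")
```

Small differences from the rounded figures in the text come from carrying unrounded intermediates.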

Probability of large nuclear war

I put the probability of large nuclear war before 2050 at 3.30 % (= 0.32*0.103), which is the product between:

- 32 % probability of at least one offensive nuclear detonation before 2050.

- 10.3 % probability of large nuclear war conditional on the above.

I motivate these values below.

Probability of at least one offensive nuclear detonation

I placed the probability of at least one offensive nuclear detonation before 2050 at 32 %, in agreement with Metaculus’ community prediction on 31 August 2023[7]. This is reasonable based on:

- The base rate:

- There have been offensive nuclear detonations in 1 year (1945) out of the 79 years (= 2023 - 1945 + 1) during which they could have occurred. This suggests an annual probability of at least one offensive nuclear detonation of 1.27 % (= 1/79).

- There are still 26 years (= 2050 - 2024) before 2050.

- So the base rate implies a probability of at least one offensive nuclear detonation before 2050 of 28.3 % (= 1 - (1 - 0.0127)^26), which is 88.4 % (= 28.3/32) of Metaculus’ community prediction.

- Luisa’s prediction[8]:

- 1.1 %/year (see table).

- 25.0 % (= 1 - (1 - 0.011)^26) before 2050, which is 78.1 % (= 25.0/32) of Metaculus’ community prediction.
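Both sanity checks above convert a constant annual probability into a cumulative probability over the 26 years to 2050. A minimal sketch, assuming independent years (the helper name is hypothetical):

```python
def prob_at_least_once(annual_prob: float, years: int) -> float:
    """P(at least one event in `years` years), assuming independent years."""
    return 1 - (1 - annual_prob) ** years

years_left = 2050 - 2024                   # 26 years
base_rate_annual = 1 / (2023 - 1945 + 1)   # 1 year with detonations out of 79
luisa_annual = 0.011                       # Luisa's 1.1 %/year
metaculus = 0.32                           # Metaculus' community prediction

base_rate = prob_at_least_once(base_rate_annual, years_left)
luisa = prob_at_least_once(luisa_annual, years_left)

print(f"Base rate: {base_rate:.1%}, i.e. {base_rate / metaculus:.1%} of Metaculus")
print(f"Luisa:     {luisa:.1%}, i.e. {luisa / metaculus:.1%} of Metaculus")
```

The base rate comes out marginally below the 28.3 % in the text because the code does not round 1/79 to 1.27 % before compounding.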

Probability of escalation into large nuclear war

I presupposed a beta distribution for the fraction of nuclear warheads being detonated before 2050 given at least one offensive nuclear detonation before then. I defined it from the 61st and 89th percentiles being equal to 1.06 % (= 100/(9.43*10^3)) and 10.6 % (= 1*10^3/(9.43*10^3)), given:

- Metaculus’ community predictions on 26 September 2023 of 39 % (= 1 - 0.61) and 11 % (= 1 - 0.89) for the probability of at least 100 and 1 k offensive nuclear detonations before 2050 given at least one offensive nuclear detonation before then.

- 9.43 k (= (9.50 + (9.22 - 9.50)/(2052 - 2032)*(2037 - 2032))*10^3 - 1) expected nuclear warheads minus 1[9], which I obtained:

- For 2037 (= (2024 + 2050)/2), which is midway between now and 2050[10].

- Linearly interpolating between the mean of Metaculus’ 25th and 75th percentile community predictions on 11 September 2023 for[11]:

- 2032, 9.50 k (= (8.29 + 10.7)*10^3/2).

- 2052, 9.22 k (= (4.84 + 13.6)*10^3/2).
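The warhead count and the two percentile fractions can be sketched as a linear interpolation between the 2032 and 2052 forecasts. Function and variable names are illustrative, not from the post.

```python
def lerp(x: float, x0: float, y0: float, x1: float, y1: float) -> float:
    """Linear interpolation of y at x between (x0, y0) and (x1, y1)."""
    return y0 + (y1 - y0) / (x1 - x0) * (x - x0)

# Mean of Metaculus' 25th and 75th percentile predictions for each year.
warheads_2032 = (8.29e3 + 10.7e3) / 2
warheads_2052 = (4.84e3 + 13.6e3) / 2

mid_year = (2024 + 2050) / 2  # 2037, midway between now and 2050
warheads_mid = lerp(mid_year, 2032, warheads_2032, 2052, warheads_2052)

# One warhead is already accounted for by the "at least one detonation" condition.
available_warheads = warheads_mid - 1

fraction_100 = 100 / available_warheads   # fraction matching 100 detonations
fraction_1k = 1e3 / available_warheads    # fraction matching 1 k detonations

print(f"Warheads minus 1 in {mid_year:.0f}: {available_warheads:.0f}")
print(f"Fractions: {fraction_100:.2%} and {fraction_1k:.1%}")
```

The result differs from 9.43 k by a few warheads because the text rounds the 2032 value to 9.50 k before interpolating.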

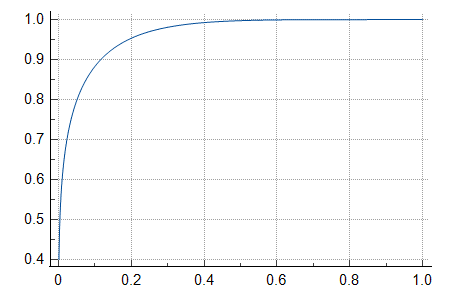

The alpha and beta parameters of the beta distribution are 0.189 and 5.03, and its cumulative distribution function (CDF) is below. The horizontal axis is the fraction of nuclear warheads being detonated, and the vertical one the probability of less than a certain fraction being detonated. The probability of escalation into a large nuclear war, which I defined as at least 1.07 k offensive nuclear detonations, corresponding to 11.3 % (= 1.07*10^3/(9.43*10^3)) of nuclear warheads being detonated, is 10.3 %[12].

Soot injected into the stratosphere

I expect 22.1 Tg (= 2.09*10^3*0.215*0.0491) of soot being injected into the stratosphere in a large nuclear war. This is the product between:

- 2.09 k offensive nuclear detonations in a large nuclear war.

- 21.5 % countervalue nuclear detonations[13].

- 0.0491 Tg (= 189*2.60*10^-4) per countervalue nuclear detonation, multiplying:

- 189 kt yield per countervalue nuclear detonation.

- 2.60*10^-4 Tg/kt of soot injected into the stratosphere per countervalue yield.
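As a quick check, the product above and the per-Tg and per-Mt mortality figures from the summary can be recomputed together. This is a sketch using the post's rounded inputs; the names are mine.

```python
detonations = 2.09e3           # offensive detonations in a large nuclear war
countervalue_fraction = 0.215  # share of detonations that are countervalue
yield_kt = 189.0               # yield per countervalue detonation (kt)
soot_per_kt = 2.60e-4          # Tg of stratospheric soot per kt of countervalue yield
famine_deaths = 392e6          # famine deaths in a large nuclear war (from the post)

soot_per_detonation = yield_kt * soot_per_kt                       # Tg
soot_total = detonations * countervalue_fraction * soot_per_detonation

total_yield_mt = detonations * yield_kt / 1e3  # all detonations, in Mt
deaths_per_tg = famine_deaths / soot_total
deaths_per_mt = famine_deaths / total_yield_mt

print(f"Soot per countervalue detonation: {soot_per_detonation:.4f} Tg")
print(f"Soot injected into the stratosphere: {soot_total:.1f} Tg")
print(f"Famine deaths: {deaths_per_tg / 1e6:.1f} M/Tg, {deaths_per_mt / 1e6:.3f} M/Mt")
```

Note that the per-Mt figure divides by the yield of all offensive detonations, whereas the soot comes only from the countervalue ones.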

I explain the above estimates in the next sections. I neglected counterforce nuclear detonations because:

- From Figure 4 of Wagman 2020, the soot injected into the stratosphere for an available fuel per area of 5 g/cm^2 is negligible[14].

- I estimated an available fuel per area for counterforce nuclear detonations of 3.07 g/cm^2, which is lower than the above 5 g/cm^2.

Offensive nuclear detonations

I expect 2.09 k (= 1 + 0.221*9.43*10^3) offensive nuclear detonations in a large nuclear war. This is 1 plus the product between:

- 22.1 % of nuclear warheads being offensively detonated in a large nuclear war, which I computed:

- Generating 1 M Monte Carlo samples of the beta distribution describing the fraction of nuclear warheads being detonated before 2050 given at least one offensive nuclear detonation before then.

- Taking the mean of the above samples larger than or equal to 11.3 %, which is the minimum fraction for a large nuclear war.

- 9.43 k expected nuclear warheads minus 1.

The 5th and 95th percentile fraction of nuclear warheads being detonated in a large nuclear war are 11.8 % and 43.6 %, which correspond to 1.11 k (= 1 + 0.118*9.43*10^3) and 4.11 k (= 1 + 0.436*9.43*10^3) offensive nuclear detonations.
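The conditional mean and percentiles above come from sampling the beta distribution and keeping only the samples at or above the large-war threshold. Here is a minimal sketch with Python's standard library, assuming the rounded parameters 0.189 and 5.03, and using 200 k samples instead of 1 M to keep it fast. The seed and names are arbitrary choices of mine.

```python
import random

ALPHA, BETA = 0.189, 5.03            # rounded beta parameters from the post
WARHEADS = 9.43e3                    # expected nuclear warheads minus 1
THRESHOLD = 1.07e3 / WARHEADS        # minimum fraction for a large nuclear war
N_SAMPLES = 200_000                  # the post uses 1 M

rng = random.Random(0)               # fixed seed for reproducibility
samples = [rng.betavariate(ALPHA, BETA) for _ in range(N_SAMPLES)]

# Keep only the samples that qualify as a large nuclear war.
large = sorted(s for s in samples if s >= THRESHOLD)

p_escalation = len(large) / N_SAMPLES
mean_fraction = sum(large) / len(large)
p5 = large[int(0.05 * len(large))]
p95 = large[int(0.95 * len(large))]
expected_detonations = 1 + mean_fraction * WARHEADS

print(f"P(large war | at least one detonation): {p_escalation:.1%}")
print(f"Mean fraction detonated in a large war: {mean_fraction:.1%}")
print(f"5th and 95th percentile fractions: {p5:.1%}, {p95:.1%}")
print(f"Expected detonations in a large war: {expected_detonations:.0f}")
```

Because this is a Monte Carlo estimate with rounded parameters, the outputs should land near, not exactly on, the 10.3 %, 22.1 % and 2.09 k in the text.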

I compared the offensive nuclear detonations, given at least one before 2050, implied by my beta distribution with those of a Metaculus question whose predictions I ended up not using. The 5th, 50th and 95th percentiles of the beta distribution are 1.84*10^-6 %, 0.362 % and 19.2 %[15], and the respective detonations given at least one are:

- 1.00 (= 1 + 1.84*10^-8*9.43*10^3), which is 90.1 % (= 1.00/1.11) of Metaculus’ 5th percentile community prediction of 1.11.

- 35.1 (= 1 + 0.00362*9.43*10^3), which is 3.97 (= 35.1/8.84) times Metaculus’ median community prediction of 8.84[16] (= (8.56 + 9.11)/2).

- 1.81 k (= 1 + 0.192*9.43*10^3), which is 21.5 % (= 1.81/8.42) of Metaculus’ 95th percentile community prediction of 8.42 k[17] (= (7.18 + 9.66)/2*10^3).

The mean of my beta distribution is 3.62 % (= 0.189/(0.189 + 5.03)), and therefore I expect 342 (= 1 + 0.0362*9.43*10^3) offensive nuclear detonations given one offensive nuclear detonation before 2050, which is 9.74 (= 342/35.1) times my median detonations. Additionally, my 95th percentile is 1.81 k (= 1.81*10^3/1.00) times my 5th percentile. Such high ratios illustrate nuclear war is predicted to be heavy-tailed, as has been the case for non-nuclear wars.

From the above bullets, the predictions for the number of detonations I arrived at by fitting a beta distribution to the forecasts for 2 Metaculus questions about the probability of escalation to large nuclear wars (100 and 1 k detonations) are not quite in line with the forecasts for another Metaculus question explicitly about the number of detonations. The large difference for the 95th percentile is relevant because the right tail has a significant influence on the expected detonations, as can be seen from the high ratio between my mean and median detonations. I decided to rely on the 2 Metaculus questions about escalation because:

- Of the importance of the right tail. The other requires forecasters to estimate the entire probability distribution, which I expect to lead to less accurate forecasts for the right tail.

- I would have to arbitrarily select 2 quantiles from the other in order to define the beta distribution.

Countervalue nuclear detonations

I assumed 21.5 % of the offensive nuclear detonations to be countervalue. This was Metaculus’ median community prediction on 30 September 2023 for the fraction of offensive nuclear detonations before 2050 which will be countervalue.

I presumed the total burned area to be 100 % of what it would be if different detonations did not compete for fuel, i.e. that the overlap between burned areas is negligible. David Denkenberger commented that some additional area would be burned thanks to the combined effects of multiple detonations. I tend to agree, but:

- This is not discussed in Reisner 2018 nor Toon 2008.

- For this effect to be significant, I guess there would have to be a meaningful overlap between the burned areas of countervalue detonations, whereas I am assuming it is negligible.

- I think the areas which would burn thanks to the combined effects of countervalue detonations would have low fuel load, thus not emitting much soot, because they would tend to be far away from the city centre:

- The detonation points would presumably be near the dense city centres, and therefore population density and fuel load would tend to decrease with the distance from the detonation point.

- The radius of my burned area is 7.23 km.

Yield

I considered a yield per countervalue nuclear detonation of 189 kt (= (600*335 + 200*300 + 1511*90 + 25*8 + 384*455 + 500*(5*150)^0.5 + 288*400 + 200*(0.3*170)^0.5)/3708). This is the mean yield of the United States nuclear warheads in 2023 (deployed or in reserve, but not retired), which I got from data in Table 1 of Kristensen 2023. For the rows for which a range was provided for the yield, I used the geometric mean between its lower and upper bound[18].
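The mean yield above is a warhead-weighted average in which rows with a yield range are replaced by the geometric mean of their bounds. A sketch using the counts and yields from the formula in the text (the data structure and names are mine):

```python
from math import sqrt

# (number of warheads, yield in kt or (lower, upper) range in kt),
# in the order the terms appear in the post's formula.
US_WARHEADS = [
    (600, 335),
    (200, 300),
    (1511, 90),
    (25, 8),
    (384, 455),
    (500, (5, 150)),
    (288, 400),
    (200, (0.3, 170)),
]

def point_yield(y) -> float:
    """Return the yield itself, or the geometric mean of a (lower, upper) range."""
    if isinstance(y, tuple):
        lower, upper = y
        return sqrt(lower * upper)
    return float(y)

total_warheads = sum(count for count, _ in US_WARHEADS)
mean_yield_kt = sum(count * point_yield(y) for count, y in US_WARHEADS) / total_warheads

print(f"Warheads: {total_warheads}, mean yield: {mean_yield_kt:.0f} kt")
```

Using the geometric mean keeps wide ranges such as 0.3 to 170 kt from being dominated by their upper bound.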

For context, my yield of 189 kt is:

- 47.2 % (= 189/400) of the 400 kt mentioned by Bean “for a typical modern strategic nuclear warhead”.

- 1.14 (= 189/166) times the yield of 166 kt (= 30.2^(3/2)) linked to the mean yield to the power of 2/3 implied by the data in Table 1 of Kristensen 2023[19]. Bean argues for an exponent of 2/3, but the difference does not seem to matter much, as 1.14 is a small factor.

- 1.89 (= 189/100) times that of Toon 2008.

- 12.6 (= 189/15) times that of Hiroshima’s nuclear detonation.

For the 2.09 k offensive nuclear detonations I expect in a large nuclear war, the minimum and maximum mean yield are 66.1 kt (= (200*(0.3*170)^0.5 + 25*8 + 500*(5*150)^0.5 + 1365*90)/(2.09*10^3)) and 290 kt (= (384*455 + 288*400 + 600*335 + 200*300 + 618*90)/(2.09*10^3)).
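These bounds correspond to detonating the 2.09 k lowest-yield or highest-yield warheads of the arsenal. The greedy selection below is my reading of the two formulas, not something the post states explicitly, and the names are illustrative.

```python
from math import sqrt

# (number of warheads, point yield in kt); ranges use the geometric mean of their bounds.
US_WARHEADS = [
    (200, sqrt(0.3 * 170)),
    (25, 8.0),
    (500, sqrt(5 * 150)),
    (1511, 90.0),
    (200, 300.0),
    (600, 335.0),
    (288, 400.0),
    (384, 455.0),
]

def extreme_mean_yield(warheads, n_detonations: int, highest: bool) -> float:
    """Mean yield when the n lowest-yield (or highest-yield) warheads are used."""
    ordered = sorted(warheads, key=lambda w: w[1], reverse=highest)
    remaining, total_yield = n_detonations, 0.0
    for count, yield_kt in ordered:
        used = min(count, remaining)
        total_yield += used * yield_kt
        remaining -= used
        if remaining == 0:
            break
    return total_yield / n_detonations

N_DETONATIONS = 2090  # 2.09 k offensive detonations in a large nuclear war
min_mean_kt = extreme_mean_yield(US_WARHEADS, N_DETONATIONS, highest=False)
max_mean_kt = extreme_mean_yield(US_WARHEADS, N_DETONATIONS, highest=True)

print(f"Minimum mean yield: {min_mean_kt:.1f} kt, maximum: {max_mean_kt:.0f} kt")
```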

I investigated the relationship between the burned area and yield a little, but, as I said just above, I do not think it is that important whether the area scales with yield to the power of 2/3 or 1. Feel free to skip to the next section. In short, an exponent of:

- 2/3 makes sense if the energy released by the detonation is uniformly distributed in a spherical region (centred at the detonation point). This is apparently the case for blast/pressure energy, so an exponent of 2/3 is appropriate for the blasted area.

- 1 makes sense if the energy released by the detonation propagates outwards with negligible losses, like the Sun's energy radiating outwards into space. This is seemingly the case for thermal energy, so an exponent of 1 is appropriate for the burned area.

The emitted soot is proportional to the burned area. So using the mean yield as I did presupposes burned area is proportional to yield, which is what is supposed in Toon 2008. “In particular, since the area within a given thermal energy flux contour varies linearly with yield for small yields, we assume linear scaling for the burned area”. I guess this is based on the following passage of this chapter of The Medical Implications of Nuclear War (the source provided in Toon 2008):

Thermal energy, unlike blast energy [which “fills the volume surrounding it”], instead radiates out into the surroundings. Thermal energy from a detonation will therefore be distributed over a hypothetical sphere that surrounds the detonation point. If the sphere's area is larger in direct proportion to the yield of a detonation, then the amount of energy per unit area passing through its surface would be unchanged. The radius of this hypothetical sphere varies as the square root of its area. Hence, the range at which a given amount of thermal energy per unit area is deposited varies as the square root of the yield.

Presumably, Toon 2008 assumes the burned area is defined by this range, and therefore it is proportional to yield (since a circular area is proportional to the square of its radius). With respect to this, Bean said:

Nor is the assumption that burned area will scale linearly with yield a particularly good one. I couldn’t find it in the source they cite, and it flies in the face of all other scaling relationships around nuclear weapons.

[...]

per Glasstone p.108, blast radius typically scales with the 1/3rd power of yield, so we can expect damaged area from fire as well as blast to scale with the yield^2/3 [since area is proportional to the square of the radius].

According to The Medical Implications of Nuclear War (see quotation above), the blasted area is indeed proportional to yield to the power of 2/3, but the same may not apply to burned area (see quotation above starting with “Thermal energy”). In fact, the results of Nukemap seem to be compatible with the assumption that the ground area enclosed by a spherical surface of a given energy flux is proportional to yield. For 0.1, 1 and 10 times my yield of 189 kt, i.e. 18.9, 189 and 1.89 k kt, the ground area enclosed by a spherical surface whose energy flux is 146 J/cm^2, for which “dry wood usually burns”, are:

- For an airburst height of 0 (just above the surface), 4.11, 37.1 and 314 km^2. Based on the 1st and last pairs of these estimates, burned area would be proportional to yield to the power of 0.956 (= log10(37.1/4.11)) and 0.928 (= log10(314/37.1)).

- For airburst heights of 0.832, 1.83 and 3.93 km, which maximise the radius of the overpressure ring of 5 psi[20] (0.34 atm) of each yield, 1.94, 26.6 and 268 km^2. Based on the 1st and last pairs of these estimates, burned area would be proportional to yield to the power of 1.14 (= log10(26.6/1.94)) and 1.00 (= log10(268/26.6)).

The mean of the above 4 exponents is 1.01[21] (= (0.956 + 0.928 + 1.14 + 1.00)/4), which suggests a value of 1 is appropriate. Nevertheless, I do not know how the above areas are estimated in Nukemap.
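The 4 exponents are slopes in log-log space between consecutive (yield, area) points. Since the yields are a factor of 10 apart, each exponent reduces to log10 of the area ratio. A sketch using the areas that appear in the exponent formulas of the text (names are mine):

```python
from math import log10

yields_kt = [18.9, 189.0, 1890.0]          # 0.1, 1 and 10 times 189 kt
areas_surface_km2 = [4.11, 37.1, 314.0]    # airburst height of 0
areas_airburst_km2 = [1.94, 26.6, 268.0]   # heights maximising the 5 psi ring

def scaling_exponents(yields, areas):
    """Exponent b in area ~ yield^b for each pair of consecutive points."""
    points = list(zip(yields, areas))
    return [
        log10(a1 / a0) / log10(y1 / y0)
        for (y0, a0), (y1, a1) in zip(points, points[1:])
    ]

exponents = (
    scaling_exponents(yields_kt, areas_surface_km2)
    + scaling_exponents(yields_kt, areas_airburst_km2)
)
mean_exponent = sum(exponents) / len(exponents)

print("Exponents:", ", ".join(f"{e:.3f}" for e in exponents))
print(f"Mean exponent: {mean_exponent:.2f}")
```

An exponent near 1 supports linear scaling of burned area with yield; an exponent near 2/3 would have matched the blast-like scaling Bean argues for.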

Energy flux following an inverse-square law, as described in The Medical Implications of Nuclear War, makes sense if atmospheric losses are negligible, like with the Sun’s energy radiating outwards into space. Intuitively, I would have thought the losses were sufficiently high for the exponent to be lower than 1, and GPT-4 also guessed an exponent of 2/3 would be a better approximation. However, Nukemap’s results do support an exponent of 1.

Soot injected into the stratosphere per countervalue yield

I set the soot injected into the stratosphere per countervalue yield to 2.60*10^-4 Tg/kt (= (3.15*10^-5*0.00215)^0.5). This is the geometric mean between 3.15*10^-5 and 0.00215 Tg/kt[18], which I arrived at by adjusting results from Reisner 2018 and Reisner 2019, and Toon 2008 and Toon 2019. I describe how I did this in the next 2 sections, and discuss some considerations I did not cover in these sections in the one after them.
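The geometric mean, and the factors by which the estimate would change under each view alone, can be checked in a few lines. The names are illustrative.

```python
from math import sqrt

soot_reisner = 3.15e-5  # Tg/kt, inferred from Reisner 2018 and Reisner 2019
soot_toon = 0.00215     # Tg/kt, inferred from Toon 2008 and Toon 2019

# Geometric mean: equal weight to both views in log space.
soot_best_guess = sqrt(soot_reisner * soot_toon)

# Factors applied to the best guess when all weight goes to one view.
factor_reisner = soot_reisner / soot_best_guess
factor_toon = soot_toon / soot_best_guess

print(f"Best guess: {soot_best_guess:.2e} Tg/kt")
print(f"All weight on Reisner: x{factor_reisner:.3f}; on Toon: x{factor_toon:.2f}")
```

By construction the two factors are reciprocals of each other, which is why the best guess sits roughly 1 order of magnitude from each view.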

There are other studies which have analysed how much of the emitted soot is injected into the stratosphere, but I think only Reisner 2018, Reisner 2019 and Wagman 2020 modelled the whole causal chain. From Wagman 2020:

An analysis of whether fires ignited by a nuclear war will cause global climatic and environmental consequences must address the following:

- The characteristics of the fires ignited by nuclear weapons (e.g., intensity, spread, and whether they generate sufficient buoyancy for lofting emissions to high altitudes); these are a function of many factors, including number and yield of weapons, target type, fuel availability, meteorology, and geography.

- The composition of the fire emissions (whether emissions include significant amounts of black carbon [BC] and organic carbon [OC] aerosols, and gases affecting atmospheric chemistry); these are a function of the fuel type, carbon loading, oxygen availability, and other factors.

- Whether the emissions are self-lofted by the absorption of solar radiation and to what heights; this is a function primarily of meteorology and particle size, composition, and absorption of solar radiation.

- The physical and chemical evolution of BC and other aerosol species in the stratosphere; this is a function of stratospheric chemistry and dynamics.

[...]

The Reisner et al. (2018) approach deviates from previous efforts by modeling aspects of all four bullet points above

[...]

Motivated by the different conclusions that have been reached for this scenario, we make our own assessment, which also uses numerical models to address aspects of all four factors bulleted above.

I did not integrate evidence from Wagman 2020 (whose main author is affiliated with Lawrence Livermore National Laboratory), because, rather than estimating the emitted soot as Reisner 2018 and Reisner 2019 do, it sets it to the soot injected into the stratosphere in Toon 2007:

Finally, we choose to release 5 Tg (5·10^12 g) BC into the climate model per 100 fires, for consistency with the studies of Mills et al. (2008, 2014), Robock et al. (2007), Stenke et al. (2013), Toon et al. (2007), and Pausata et al. (2016). Those studies use an emission of 6.25 Tg BC and assume 20% is removed by rainout during the plume rise, resulting in 5 Tg BC remaining in the atmosphere.

I did not include direct evidence from the atomic bombings of Hiroshima and Nagasaki because I did not find empirical data about the resulting injections of soot into the stratosphere. Relatedly, Robock 2019 says:

- Between 3 February and 9 August 1945, an area of 461 km^2 in 69 Japanese cities, including Hiroshima and Nagasaki, was burned during the U.S. B-29 Superfortress air raids, producing massive amounts of smoke

- Because of multiple uncertainties in smoke injected to the stratosphere, solar radiation observations, and surface temperature observations, it is not possible to formally detect a cooling signal from World War II smoke

- These results do not invalidate nuclear winter theory that much more massive smoke emissions from nuclear war would cause large climate change and impacts on agriculture

I also excluded evidence from Tambora’s eruption. There were global impacts according to Oppenheimer 2003, but their magnitude is unclear, and I think the world has evolved too much in the last 200 years for me to extrapolate.

Reisner 2018 and Reisner 2019

I estimated a soot injected into the stratosphere per countervalue yield of 3.15*10^-5 Tg/kt (= 0.0473/(1.50*10^3)) for Reisner 2018 and Reisner 2019. I calculated it from the ratio between:

- 0.0473 Tg (= 0.224*0.211) of soot injected into the stratosphere, multiplying:

- 0.224 Tg of emitted soot.

- 21.1 % of emitted soot being injected into the stratosphere.

- Total yield of 1.50 k kt (= 100*15), given “100 low-yield weapons of 15 kilotons”.

I got 0.224 Tg (= 12.3*0.855*0.0213) of emitted soot, multiplying:

- 12.3 Tg (= 8.454 + (23.77 - 8.454)/(72.62 - 5.24)*(22.1 - 5.24)) of emitted soot if there were no rubble, which I determined:

- For my available fuel per area for countervalue nuclear detonations of 22.1 g/cm^2.

- Linearly interpolating the no-rubble results of Reisner 2019 (see Table 1), which are 8.454 and 23.77 Tg for 5.24 and 72.62 g/cm^2.

- 85.5 % (= 3.158/3.692) to adjust for the presence of rubble. This is the ratio between the emitted soot of the rubble and no-rubble results of Reisner 2018 (see Table 1 of Reisner 2019).

- 2.13 % to account for the overestimation of emitted soot per burned fuel. Reisner 2019 says their “BC [black carbon, i.e. soot] emission factor is high by a factor of 10–100”, and Denkenberger 2018 models the “percent of combustible material that burns that turns into soot” as a lognormal distribution with 2.5th and 97.5th percentiles equal to 1 % and 4 % (see Table 2), whose mean is 2.13 %[22]. The production of soot would ideally be determined via chemical modelling of the combustion of fuel in the conditions of a firestorm, but I do not think we have that[23].
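The 2.13 % mean follows from fitting a lognormal to the 2 quoted percentiles. Below is a sketch with the standard library, assuming the 2.5th and 97.5th percentiles are matched exactly (the function name is hypothetical).

```python
from math import exp, log
from statistics import NormalDist

def lognormal_mean(p_low: float, p_high: float,
                   q_low: float = 0.025, q_high: float = 0.975) -> float:
    """Mean of the lognormal whose q_low and q_high quantiles are p_low and p_high."""
    z_low = NormalDist().inv_cdf(q_low)
    z_high = NormalDist().inv_cdf(q_high)
    sigma = (log(p_high) - log(p_low)) / (z_high - z_low)
    mu = log(p_low) - sigma * z_low
    return exp(mu + sigma**2 / 2)

soot_fraction_mean = lognormal_mean(0.01, 0.04)
print(f"Mean share of burned fuel turned into soot: {soot_fraction_mean:.2%}")
```

The mean exceeds the 2 % median (the geometric mean of 1 % and 4 %) because the lognormal is right-skewed.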

I concluded 21.1 % (= 0.0621*3.39) of emitted soot is injected into the stratosphere, multiplying:

- 6.21 % (= 0.196/3.158) of emitted soot being injected into the stratosphere in the 1st 40 min, which is implied by the results of Reisner 2018 (see Table 1 of Reisner 2019). I estimated it from the ratio between the 0.196 Tg of soot injected into the stratosphere in the 1st 40 min, and 3.158 Tg of emitted soot in the rubble case. I must note:

- The 0.196 Tg is referred to in Reisner 2019 as being injected “above 12 km”, not into the stratosphere. Nonetheless, I am assuming the stratosphere starts there, as Reisner 2018 attributes that height to the tropopause (which marks the start of the stratosphere). “Note that a majority of black carbon is found significantly below the tropopause (roughly 12 km) and hence can be easily washed away by precipitation produced by the climate model”. Interestingly, the stratosphere only starts at 16.6 km according to Figure 4 of Wagman 2020[24] (eyeballing the dashed black lines).

- Reisner 2019 does not explicitly say the 0.196 Tg refers to the 1st 40 min, but I think it does[25]:

- Reisner 2018’s discussion of the fire simulation for the no-rubble case is compatible with 0.23 Tg (= 3.69 - 3.46) of soot being injected into the stratosphere in the 1st 40 min, which is quite similar to 0.236 Tg in Table 1 of Reisner 2019. “The total amount of BC produced is in line with previous estimates (about 3.69 Tg from no-rubble simulation); however, the majority of BC resides below the stratosphere (3.46 Tg below 12 km) and can be readily impacted by scavenging from precipitation either via pyrocumulonimbus produced by the fire itself (not modeled) or other synoptic weather systems”.

- Reisner 2019 only discusses the fire simulations, which only last 40 min. From Reisner 2018, “HIGRAD-FIRETEC simulations for this domain used 5,000 processors and took roughly 96 h to complete for 40 min of simulated time”.

- 3.39 (= 0.8/0.236) times as much soot being injected into the stratosphere in total as in the 1st 40 min. This respects the no-rubble case of Reisner 2018, and is the ratio between:

- 0.8 Tg of soot injected into the stratosphere in total. “The BC aerosol that remains in the atmosphere, lifted to stratospheric heights by the rising soot plumes, undergoes sedimentation over a time scale of several years (Figures 8 and 9). This mass represents the effective amount of BC that can force climatic changes over multiyear time scales. In the forced ensemble simulations, it is about 0.8 Tg after the initial rainout, whereas it is about 3.4 Tg in the simulation with an initial soot distribution as in Mills et al. (2014)”.

- 0.236 Tg of soot injected into the stratosphere in the 1st 40 min, in line with the last row of Table 1 of Reisner 2019.
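As a rough check, the 2 factors above can be recomputed from the quoted Reisner values. This is a minimal sketch with illustrative variable names. With unrounded intermediate values the product is about 21.0 %, marginally below the 21.1 % obtained from the rounded factors.

```python
# Values in Tg, as quoted above from Table 1 of Reisner 2019 and from Reisner 2018.
emitted_soot_rubble = 3.158
above_12_km_rubble_40_min = 0.196
stratospheric_total_no_rubble = 0.8
above_12_km_no_rubble_40_min = 0.236

# Fraction of emitted soot above 12 km after the 40 min fire simulation (rubble case).
fraction_first_40_min = above_12_km_rubble_40_min / emitted_soot_rubble

# Soot in the stratosphere in total relative to the 1st 40 min (no-rubble case).
total_to_40_min_ratio = stratospheric_total_no_rubble / above_12_km_no_rubble_40_min

fraction_injected = fraction_first_40_min * total_to_40_min_ratio

print(f"1st 40 min: {fraction_first_40_min:.2%}")
print(f"total / 1st 40 min: {total_to_40_min_ratio:.2f}")
print(f"injected into the stratosphere: {fraction_injected:.1%}")
```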

The estimate of 6.21 % of emitted soot being injected into the stratosphere in the 1st 40 min is derived from the rubble case of Reisner 2018, which did not produce a firestorm. However, in response to Robock 2019, Reisner 2019 ran:

Two simulations at higher fuel loading that are in the firestorm regime (Glasstone & Dolan, 1977): the first simulation (4X No-Rubble) uses a fuel load around the firestorm criterion (4 g/cm2) and the second simulation (Constant Fuel) is well above the limit (72 g/cm2).

These simulations led to a soot injected into the stratosphere in the 1st 40 min per emitted soot of 5.45 % (= 0.461/8.454) and 6.44 % (= 1.53/23.77), which are quite similar to the 6.21 % of Reisner 2018 I used above. Reisner 2019 also notes:

Of note is that the Constant Fuel case is clearly in the firestorm regime with strong inward and upward motions of nearly 180 m/s during the fine-fuel burning phase. This simulation included no rubble, and since no greenery (trees do not produce rubble) is present, the inclusion of a rubble zone would significantly reduce BC production and the overall atmospheric response within the circular ring of fire.

This suggests a firestorm is not a sufficient condition for a high soot injected into the stratosphere per emitted soot.

Toon 2008 and Toon 2019

I deduced a soot injected into the stratosphere per countervalue yield of 0.00215 Tg/kt (= 945/(440*10^3)) for Toon 2008 and Toon 2019. I computed it from the ratio between:

- 945 Tg (= 1.35*10^3*0.700) of soot injected into the stratosphere, multiplying:

- 1.35 k Tg of emitted soot.

- 70.0 % of emitted soot being injected into the stratosphere.

- “440-Mt total yield [4.4 k detonations of 100 kt]”.

I got 1.35 k Tg (= 180*7.52) of emitted soot, multiplying:

- “180 Tg of [“generated”] soot”.

- 7.52 (= 22.1/2.94) to adjust for the available fuel per area:

- Emitted soot is proportional to available fuel per area, in agreement with the 2nd equation of Toon 2008.

- I estimated an available fuel per area for countervalue nuclear detonations of 22.1 g/cm^2.

- I think the results of Toon 2008 imply 0.0294 Tg/km^2 (= 11.2*10^3/(4.4*10^3*86.6)) of available fuel per area, i.e. 2.94 g/cm^2 (= 0.0294*10^(12 - 5*2)), given:

- 11.2 k Tg (= 180/0.016) of fuel, which is the ratio between the above soot and “0.016 kg of soot per kg of fuel”.

- “A SORT conflict with 4400 nuclear explosions”.

- A burned area per detonation of 86.6 km^2. “In our model we considered 100-kt weapons, since that is the size of many of the submarine-based weapons in the US, British, and French arsenals. In that case we assume a burned area of 86.6 km2 per weapon”.

I concluded 70.0 % (= (1 - 0.20)*(1 - 0.125)) of emitted soot is injected into the stratosphere, in agreement with Toon 2019. This stems from:

- “On the basis of limited observations of pyrocumulus clouds (16) [Toon 2007], we assume that 20% of the BC is removed by rainfall during injection into the upper troposphere”.

- “Further smoke is rained out by the climate model before the smoke is lofted into the stratosphere by solar heating of the smoke. The fraction of the injected mass that is present in the model over 15 years is shown in fig. S5. In the first few days after the injection, 10 to 15% of the smoke is removed in the climate model before reaching the stratosphere”. So I considered an additional soot removal of 12.5 %[21] (= (0.10 + 0.15)/2).
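The chain of calculations in this section can be sketched in Python as follows. The inputs are the figures quoted above; the variable names are illustrative. The sketch carries unrounded intermediate values, so its outputs differ from the figures in the text in the 3rd significant figure (for example, about 2.95 instead of 2.94 g/cm^2 for the implied fuel load).

```python
# Inputs quoted above from Toon 2008 and Toon 2019.
generated_soot = 180.0             # Tg
soot_per_fuel = 0.016              # kg of soot per kg of fuel
detonations = 4400
burned_area_per_detonation = 86.6  # km^2
yield_per_detonation = 100.0       # kt
my_fuel_load = 22.1                # g/cm^2, the fuel load estimated in this post

# Fuel load implied by Toon 2008, converted from Tg/km^2 to g/cm^2.
fuel = generated_soot / soot_per_fuel  # Tg
implied_fuel_load = fuel / (detonations * burned_area_per_detonation) * 1e12 / 1e10

# Emitted soot scaled to the fuel load estimated in this post.
fuel_load_factor = my_fuel_load / implied_fuel_load
emitted_soot = generated_soot * fuel_load_factor  # Tg

# 20 % prompt removal, then the midpoint of the 10 % to 15 % removal in the climate model.
fraction_injected = (1 - 0.20) * (1 - (0.10 + 0.15) / 2)
injected_soot = emitted_soot * fraction_injected  # Tg

injected_per_kt = injected_soot / (detonations * yield_per_detonation)  # Tg/kt
print(f"{implied_fuel_load:.2f} g/cm^2, {emitted_soot:.0f} Tg emitted, "
      f"{injected_soot:.0f} Tg injected, {injected_per_kt:.5f} Tg/kt")
```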

You might have noticed that I discounted the results of Reisner 2018 to account for their overestimation of the emitted soot per burned fuel, but that I did not do that for Toon 2008. I think this is right because, right after “how much of the fuel is converted into soot”, there is a reference to Turco 1990, which estimates an emitted soot per burned fuel very similar to what I assumed in the previous section[22].

Toon 2019 justifies the 20 % soot removal during injection into the upper troposphere citing Toon 2007, which in turn backs it up citing Turco 1990[26], but I noted this does not justify the value that well. From the header of Table 2 of Turco 1990, “the prompt soot removal efficiency [i.e. soot removal during injection into the upper troposphere[27]] is taken to be 20% (range of 10 to 25%)”, which checks out, but it is mentioned that:

Originally, we (2) [Turco 1983] estimated that 25 to 50% of the smoke mass would be immediately scrubbed from urban fires by induced precipitation. However, based on current data, it is more reasonable to assume that, on average, <=10 to 25% of the soot emission is likely to be removed in such a manner.

Nevertheless, as far as I can tell, the “current data” is not discussed in Turco 1990. I would have expected to see a justification for the update, as the 20 % prompt soot removal assumed in Turco 1990 is lower than the lower bound of 25 % attributed to Turco 1983. In addition, I was not able to confirm the soot removal of 25 % to 50 % quoted above, searching in Turco 1983 for “%”, “25 percent”, “50 percent”, “0.25”, “0.5” and “rain”. It is possible a soot removal of 25 % to 50 % is implied by the assumptions or results of Turco 1983, although it is not explicitly mentioned, but it looks like this might not be so. Turco 1983 appears to have used a soot removal of 20 % as Turco 1990. From Table 2, “80 percent [of the soot was assumed to be injected] in the stratosphere”. I did not find an explanation of this value searching for “80 percent” and “0.8”.

Brian Toon, the 1st author of Toon 2007, Toon 2008 and Toon 2019, and 2nd of Turco 1983 and Turco 1990, clarified the 20 % prompt soot removal in Toon 2007 was calculated from (1 minus) the ratio between the concentration of smoke and carbon monoxide at the stratosphere and near natural fires. I tried to obtain the 20 % with this approach, but did not have success. I assume Brian’s clarification refers to the following passage of Toon 2007:

According to Andreae et al. (2001) in natural fires the ratio of injected smoke aerosol larger than 0.1 µm to enhanced carbon monoxide concentrations is in the range 5–20 cm^3/ppb near the fires. Jost et al. (2004) found ratios ∼7 [cm^3/ppb] in smoke plumes deep within the stratosphere over Florida that had originated a few days earlier in Canadian fires, implying that the smoke particles had not been significantly depleted during injection into the stratosphere (or subsequent transport over thousands of kilometers in the stratosphere). Such evidence is consistent with the choice of R=0.8 for smoke removal in pyroconvection.

On the one hand, I agree with the last sentence, as the quoted evidence is consistent with a smoke removal in pyroconvection between 0 (7 > 5) and 65 % (= 1 - 7/20), which encompasses 20 % (= 1 - 0.8). On the other hand, this value seems to be pessimistic. Assuming a ratio between the concentration of smoke and carbon monoxide near the fires of 12.5 cm^3/ppb[21] (= (5 + 20)/2), R = 56.0 % (= 7/12.5) of smoke would be injected into the upper troposphere, which suggests a prompt soot removal of 44.0 % (= 1 - 0.560), 2.20 (= 0.440/0.20) times as high as the value supposed in Toon 2007.
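The bounds in the paragraph above follow from a single ratio, which the Python sketch below makes explicit. It assumes, as in the reasoning above, that the prompt removal equals 1 minus the ratio between the smoke to carbon monoxide ratio in the stratosphere and that near the fires, floored at 0. The names are illustrative.

```python
NEAR_FIRE_LOW, NEAR_FIRE_HIGH = 5.0, 20.0  # cm^3/ppb, range from Andreae et al. (2001)
STRATOSPHERE_RATIO = 7.0                   # cm^3/ppb, from Jost et al. (2004)
TOON_2007_REMOVAL = 0.20


def prompt_removal(near_fire_ratio):
    """Fraction of smoke removed during injection, floored at 0 (no depletion)."""
    return max(0.0, 1 - STRATOSPHERE_RATIO / near_fire_ratio)


removal_min = prompt_removal(NEAR_FIRE_LOW)    # 0, since 7 > 5
removal_max = prompt_removal(NEAR_FIRE_HIGH)   # 65 %
removal_mid = prompt_removal((NEAR_FIRE_LOW + NEAR_FIRE_HIGH) / 2)  # 44 %

print(f"range: {removal_min:.0%} to {removal_max:.0%}")
print(f"midpoint: {removal_mid:.1%}, {removal_mid / TOON_2007_REMOVAL:.2f} times the Toon 2007 value")
```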

I shared the above reasoning with Brian, but his best guess continues to be 20 % soot removal during the injection into the upper troposphere. So I relied on that value to estimate the soot injected into the stratosphere per countervalue yield at the start of this section.

As a side note, Turco 1983 presents an emitted soot per yield of land near-surface and surface detonations of 1.0*10^-4 and 3.3*10^-4 Tg/kt (see Table 2), which are 3.26 % (= 1.0*10^-4/0.00307) and 10.7 % (= 3.3*10^-4/0.00307) of the 0.00307 Tg/kt (= 0.00215/0.7) I inferred from Toon 2008[28]. Brian Toon clarified the lower soot emissions in Turco 1983 are explained by that study considering less fuel per area owing to more detonations with larger yield, which imply a larger burned area with lower population density. I think this makes sense.

Considerations influencing the soot injected into the stratosphere

There are a number of considerations I have not covered influencing the soot injected into the stratosphere per countervalue yield. I have little idea about their net effect, but I point out some of them below. Relatedly, feel free to check Hess 2021, and the comments on Bean’s and Mike’s post.

Overestimating soot injected into the stratosphere

Besides the pessimistic assumption regarding the soot emissions per burned fuel, which I corrected for, Reisner 2018 says:

For the vertical transport of the BC, very calm ambient winds are assumed in the model, so to prevent rapid dispersion of the BC in the plume. The height of burst is determined as twice the fallout-free height, so to minimize building damage and to maximize the number of ignited locations. Fire propagation in the model occurs primarily via convective heat transfer and spotting ignition due to firebrands, and the spotting ignition model employs relatively high ignition probabilities as another worst case condition

[...]

The wind speed profile was chosen to be high enough to maintain fire spread but low enough to keep the plume from tilting too much to prevent significant plume rise (worst case). Wind direction is set as 270° (west-to-east, +x direction) for all heights, with no directional shear, and a weakly stable atmosphere was used below the tropopause to assist plume rise (worst case).

David:

- Thinks one does not need wind to maintain fire spread if one includes secondary ignitions, or the fireball ignites everything at once.

- Commented the worst case would be an unstable atmosphere (rather than a “weakly stable” one), like in a thunderstorm.

Underestimating soot injected into the stratosphere

Secondary ignitions were neglected in Reisner 2018:

The impact of secondary ignitions, such as gas line breaks, is not considered and research is still needed to determine their impact on a mass fire's intensity. For example, evidence of secondary ignitions in the Hiroshima conflagration ensuing the nuclear bombing (National Research Council, 1985), or utilization of incendiary bombs in Dresden and Hamburg (Hewitt, 1983), led to unique conditions that resulted in significantly enhanced fire behavior.

David commented “existing heating/cooking fires spreading” “is all that was required for the San Francisco earthquake firestorm”. Bean noted “urban fires are down 50% since the 1940s and way more since 1906”, when the San Francisco earthquake and firestorm happened. GPT-4 very much agreed urban fires are now less likely to occur[29]. On the other hand, David commented:

- Urban fires have decreased mostly due to the installation of sprinkler systems, smoke detectors, and reductions in smoking and the combustibility of certain materials (e.g. mattresses).

- The above would not help much to mitigate the house fires caused by nuclear detonations, which have multiple ignition points.

As noted in Robock 2019, fires, and therefore soot production and elevation, were only modelled for 40 min:

Reisner et al. stated that their fires were of surprisingly short duration, “because of low wind speeds and hence minimal fire spread, the fires are rapidly subsiding at 40 min.” However, they do not show the energy release rate so that we can tell if the fuel has been consumed within 40 minutes. And their claims of low wind speed are erroneous, as they choose wind speeds higher than typically observed in Atlanta. Real-world experience with firestorms such as in Hiroshima or Hamburg during World War II or in San Francisco after the 1906 earthquake (London, 1906), and of conflagrations, such as after the bombing of Tokyo during World War II (Caidan, 1960), suggests that a 40-minute mass fire is a dramatic underestimate; most of these fires last for many hours. A longer fire would make available more heat and buoyancy to inject soot to higher altitudes. If their fire had a short duration, and did not simply blow off their grid, it was likely due to the low fuel load assumed in their target area and combustion that did not consume all of the available fuel.

Reisner 2019 replied that:

Another important point concerning these simulations is that the rapid burning of the fine fuels leads to both a reduction in oxygen that limits combustion and a large upward transport of heat and mass that stabilizes the upper atmosphere above and downwind of the firestorm. These dynamical and combustion processes help limit fire activity and BC production once the fine material has been consumed (timescale < 30 min). Hence, the primary time period for BC injection that could impact climate occurs during a relatively short time period compared to the entirety of the fire or the continued burning and/or smoldering of thicker fuels.

[...]

While the full duration is not modeled, we argue that the primary atmospheric response from a nuclear detonation is the rapid burning of the fine fuels. Thick fuels will take longer to burn but will induce less atmospheric response and produce and inject less BC to upper atmosphere. Further, during the later time period, the upper atmosphere stabilizes from the large injection of heat and mass. Firestorms such as Dresden were maintained not only by burning of thick fuels but also by the injection of highly flammable fuel from the incendiary bombs, which we believe acted as fine fuel replacement.

In any case, it still seems to me Robock 2019 might have a valid point:

- From the legend of Figure 6 of Reisner 2018, the soot emissions in the rubble case for 40 min are 1.32 (= 3.16/2.39) times those for 20 min, so it is not obvious that soot emissions after 40 min would be negligible.

- From Figure 7, soot continues to be injected into the stratosphere in the climate simulation (run after the fire simulation), which means soot not injected into the stratosphere in the 1st 40 min can still do it afterwards. Nevertheless, I guess the initial conditions of the climate simulation, which I think are supposed to represent a random typical atmosphere, are less favourable to soot being injected into the stratosphere than the final ones of the fire simulation. If true, this would result in underestimating the injection of soot into the stratosphere.

I guess these 2 arguments are stronger for firestorms, which were not produced in Reisner 2018. The 2 simulations of Reisner 2019 concern firestorms, but I would like to see:

- On the 1st point above, data on soot emissions for a longer fire simulation demonstrating they are negligible after 40 min.

- On the 2nd, climate simulations demonstrating the soot injected into the stratosphere in total as a fraction of that in the 1st 40 min is similar to the ratio of 3.39 respecting the no-rubble case of Reisner 2018.

Overestimating/Underestimating soot injected into the stratosphere

Robock 2019 contended that:

Water vapor allows for latent heat release when clouds form. Numerous studies have shown that sensible and latent heat release is essential to lofting smoke in either firestorms (e.g., Penner et al., 1986) or conflagrations (Luderer et al., 2006). Reisner et al. stated “A dry atmosphere was utilized, and pyrocumulus impacts or precipitation from pyro-cumulonimbus were not considered. While latent heat released by condensation could lead to enhanced vertical motions of the air, increased scavenging of soot particles by precipitation is also possible. These processes will be examined in future studies using HIGRAD-FIRETEC.” By not considering pyrocumulonimbus clouds, which by the latent heat of condensation can inject soot into the stratosphere, they have eliminated a major source of buoyancy that would loft the soot. They seem to suggest that any lofting of soot would be balanced by significant precipitation scavenging, but there is no evidence for that assumption. In fact, forest fires triggered pyrocumulonimbus clouds that lofted soot into the lower stratosphere in August 2017 over British Columbia, Canada. Over the succeeding weeks, the soot was lofted many more kilometers, as observed by satellites, because it was heated by the Sun (Yu et al., 2019). This fire is direct evidence of the self-lofting process Robock et al. (2007) and Mills et al. (2014) modeled before. It also shows that precipitation in the cloud still allowed massive amounts of smoke to reach the stratosphere.

Reisner 2019 replied that:

The latent heat release may or may not lead to enhanced smoke lofting depending on the complex microphysical and mesoscale processes. Robock et al. (2019) cite wildfires in extremely dry conditions that prevent precipitation formation and do not model the process. Precipitation scavenging of BC can be much higher than is currently assumed (20%) (Yu 2018). We and the community agree that research is needed to quantify the role latent heat plays in BC movement and washout.

Meanwhile, Tarshish 2022 concluded:

Direct numerical and large-eddy simulations indicate that dry firestorm plumes possess temperature anomalies that are less than the requirements for stratospheric ascent by a factor of two or more. In contrast, moist firestorm plumes are shown to reach the stratosphere by tapping into the abundant latent heat present in a moist environment. Latent heating is found to be essential to plume rise, raising doubts about the applicability of past work [namely, Reisner 2018 and Reisner 2019] that neglected moisture.

Nonetheless, as hinted by Reisner 2019, moisture not only helps the emitted soot reach the stratosphere, but it also contributes to it being rained out. This latter process is not modelled in Tarshish 2022:

A limitation of the theory and simulations presented here is the absence of soot microphysics. Soot aerosols provide cloud condensation nuclei that may alter the drop size distribution and impact auto-conversion. This aerosol effect is expected to invigorate convection (Lee et al., 2020), lofting the plume higher. Coupling soot to microphysics, however, also enables soot to rain out, which could remove much of the soot from the rising plume as suggested in Penner et al. (1986). Given the essential role of moisture in lofting firestorm plumes we identified here, future research should investigate how these second-order microphysical effects impact firestorm soot transport. Another aspect not addressed here and deserving of future study is the radiative lofting of plumes, which has been observed to substantially lift wildfire plume soot for months after the fire (Yu et al., 2019).

Available fuel

Available fuel for counterforce

For counterforce, I calculated an available fuel per burned area of 3.07 g/cm^2 (= (11*10^6*2.06*10^3 + 8*10^9)*10^(-5*2)). I got this from the 1st equation in Box 1 of Toon 2008:

- The equation respects a linear regression of the fuel load (available fuel per area) on population density, relying on 1 data point for San Jose, 5 for the United States, and 3 for Hamburg (see Fig. 9 of Toon 2007).

- The slope is 11 Mg/person.

- The fuel load for null population density is 8 Gg/km^2.

- I used a population density of 2.06 k person/km^2 (= ((0.492*1.69 + 0.675*2.90 + 0.921*2.21 + 0.492*2.02 + 0.860*1.47)/(0.492 + 0.675 + 0.921 + 0.492 + 0.860))*10^3). This is a weighted mean with:

- Weights proportional to the counterforce nuclear detonations in each of 5 countries as a fraction of total. I guess the vast majority of offensive nuclear detonations will be (launched) by these countries. I obtained the weights supposing the offensive nuclear detonations by each country is the same, and using Metaculus’ median community predictions on 30 August 2023 for the fraction of countervalue offensive nuclear detonations before 2050 by these countries[30]. I got the following weights[31]:

- 49.2 % (= (1 - 0.0154)/2) for China, considering it is targeted by half of the counterforce nuclear detonations by the United States.

- 67.5 % (= 1 - 0.325) for India, considering it is targeted by all of the counterforce nuclear detonations by Pakistan.

- 92.1 % (= 1 - 0.079) for Pakistan, considering it is targeted by all of the counterforce nuclear detonations by India.

- 49.2 % (= (1 - 0.0154)/2) for Russia, considering it is targeted by half of the counterforce nuclear detonations by the United States.

- 86.0 % (= 1 - 0.118 - 0.0218) for the United States, considering it is targeted by all of the counterforce nuclear detonations by China and Russia.

- The following urban population densities. For:

- China, 1.69 k person/km^2 (= 883*10^6/(522*10^3)), respecting an urban population in 2021 of 883 M, and an urban land area in 2015[32] of 522 k km^2.

- India, 2.90 k person/km^2 (= 498*10^6/(172*10^3)), respecting an urban population in 2021 of 498 M, and an urban land area in 2015 of 172 k km^2.

- Pakistan, 2.21 k person/km^2 (= 86.6*10^6/(39.1*10^3)), respecting an urban population in 2021 of 86.6 M, and an urban land area in 2015 of 39.1 k km^2.

- Russia, 2.02 k person/km^2 (= 107*10^6/(52.9*10^3)), respecting an urban population in 2021 of 107 M, and an urban land area in 2015 of 52.9 k km^2.

- The United States, 1.47 k person/km^2 (= 275*10^6/(187*10^3)), respecting an urban population in 2021 of 275 M, and an urban land area in 2015 of 187 k km^2.

- Relying on the urban population density presupposes the burned area by counterforce nuclear detonations is uniformly distributed across urban land area, which I guess makes sense a priori.
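The counterforce fuel load can be reproduced with the Python sketch below. It takes the urban populations, urban land areas, and weights quoted above, and applies the regression with a slope of 11 Mg/person and an intercept of 8 Gg/km^2. The dictionary layout and names are illustrative.

```python
# country: (urban population in 2021, urban land area in 2015 in km^2), as quoted above.
URBAN = {
    "China": (883e6, 522e3),
    "India": (498e6, 172e3),
    "Pakistan": (86.6e6, 39.1e3),
    "Russia": (107e6, 52.9e3),
    "United States": (275e6, 187e3),
}

# Weights as computed in the bullets above.
WEIGHTS = {
    "China": (1 - 0.0154) / 2,
    "India": 1 - 0.325,
    "Pakistan": 1 - 0.079,
    "Russia": (1 - 0.0154) / 2,
    "United States": 1 - 0.118 - 0.0218,
}

SLOPE = 11e6        # g/person (11 Mg/person)
INTERCEPT = 8e9     # g/km^2 (8 Gg/km^2)
CM2_PER_KM2 = 1e10


def fuel_load(density):
    """Fuel load in g/cm^2 for a population density in person/km^2."""
    return (SLOPE * density + INTERCEPT) / CM2_PER_KM2


weighted_density = sum(
    WEIGHTS[country] * population / area for country, (population, area) in URBAN.items()
) / sum(WEIGHTS.values())
counterforce_fuel_load = fuel_load(weighted_density)

print(f"{weighted_density:.0f} person/km^2, {counterforce_fuel_load:.2f} g/cm^2")
```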

Available fuel for countervalue

For countervalue, I considered an available fuel per burned area of 21.1 g/cm^2 (= (0.00770*34.6 + 0.325*27.9 + 0.079*13.9 + 0.00770*13.0 + 0.140*8.95)/(0.00770 + 0.325 + 0.079 + 0.00770 + 0.140)). This is a weighted mean with:

- Weights proportional to the countervalue nuclear detonations in each of the aforementioned 5 countries as a fraction of total. Once again, I obtained the weights supposing the offensive nuclear detonations by each country is the same, and using Metaculus’ median community predictions on 30 August 2023 for the fraction of countervalue offensive nuclear detonations before 2050 by these countries. I got the following weights[33]:

- 0.770 % (= 0.0154/2) for China, considering it is targeted by half of the countervalue nuclear detonations by the United States.

- 32.5 % for India, considering it is targeted by all of the countervalue nuclear detonations by Pakistan.

- 7.9 % for Pakistan, considering it is targeted by all of the countervalue nuclear detonations by India.

- 0.770 % (= 0.0154/2) for Russia, considering it is targeted by half of the countervalue nuclear detonations by the United States.

- 14.0 % (= 0.118 + 0.0218) for the United States, considering it is targeted by all of the countervalue nuclear detonations by China and Russia.

- Available fuel per burned area adjusting the values in Table 13 of Toon 2007 for population density and burned area:

- Toon 2007 used population density data from 2003[34], but it has generally been increasing due to population growth and urbanisation, thus increasing fuel load. So I multiplied the values in Table 13 by the ratio between the fuel loads computed with the 1st equation in Box 1 of Toon 2008 (see previous section) for urban population densities:

- In 2023 (numerator), given by the ones I determined in the previous section.

- In 2003 (denominator), dividing urban population in 2003 by urban land area in 2000[35]. For:

- China, 1.78 k person/km^2 (= 776*10^6/(437*10^3)), respecting an urban population of 776 M, and an urban land area of 437 k km^2.

- India, 2.53 k person/km^2 (= 319*10^6/(126*10^3)), respecting an urban population of 319 M, and an urban land area of 126 k km^2.

- Pakistan, 3.06 k person/km^2 (= 56.0*10^6/(18.3*10^3)), respecting an urban population of 56.0 M, and an urban land area of 18.3 k km^2.

- Russia, 2.00 k person/km^2 (= 106*10^6/(52.9*10^3)), respecting an urban population of 106 M, and an urban land area of 52.9 k km^2.

- The United States, 1.39 k person/km^2 (= 231*10^6/(166*10^3)), respecting an urban population of 231 M, and an urban land area of 166 k km^2.

- In addition, Toon 2007 refers to a yield per detonation of 15 kt, and burned area of 13 km^2[36], whose radius ($R$) is 2.03 km (= (13/3.14)^0.5). I assumed burned area is proportional to yield, so it is 164 km^2 (= 13*189/15) for my yield of 189 kt, and the respective radius is 7.23 km (= (164/3.14)^0.5). Since population density decreases as distance to the city centre increases, the fuel load has to be adjusted downwards. As I believe is usually the case in urban economics, I presumed population density ($\rho$) decreases exponentially with the distance to the city centre ($d$) according to a certain density gradient ($g$), such that $\rho(d) = \rho_0 e^{-g d}$, where $\rho_0$ is the population density at the city centre[37]. Consequently, the population density in a circle of radius $R$ centred at the city centre equals $\frac{2 \rho_0}{g R^2} \left(\frac{1}{g} - e^{-g R} \left(R + \frac{1}{g}\right)\right)$[38]. I set the density gradient to 0.1, which is the mean of those of the 47 cities analysed in Bertaud 2003 (see pp. 96 and 97 of the PDF). As a result, the population densities for the smaller and larger radii of 2.03 and 7.23 km are 0.874 (= 2/0.1/2.03^2*(1/0.1 - e^(-0.1*2.03)*(2.03 + 1/0.1))) and 0.627 (= 2/0.1/7.23^2*(1/0.1 - e^(-0.1*7.23)*(7.23 + 1/0.1))) times that at the city centre. So I also multiplied the values in Table 13 by 0.717 (= 0.627/0.874).

- I ended up with the following fuel loads:

- 34.6 g/cm^2 (= 50*0.964*0.717) for China, updating the original 50 g/cm^2 by a factor of 0.964 (= (11*1.69 + 8)/(11*1.78 + 8)) to account for population growth and urbanisation, and 0.717 to correct for different burned area.

- 27.9 g/cm^2 (= 35*1.11*0.717) for India, updating the original 35 g/cm^2 by factors of 1.11 (= (11*2.90 + 8)/(11*2.53 + 8)) and 0.717.

- 13.9 g/cm^2 (= 25*0.776*0.717) for Pakistan, updating the original 25 g/cm^2 by factors of 0.776 (= (11*2.21 + 8)/(11*3.06 + 8)) and 0.717.

- 13.0 g/cm^2 (= 18*1.01*0.717) for Russia, updating the original 18 g/cm^2 by factors of 1.01 (= (11*2.02 + 8)/(11*2.00 + 8)) and 0.717.

- 8.95 g/cm^2 (= 12*1.04*0.717) for the United States, updating the original 12 g/cm^2 by factors of 1.04 (= (11*1.47 + 8)/(11*1.39 + 8)) and 0.717.
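The 2 adjustments and the final weighted mean can be sketched in Python as follows. The sketch uses the rounded population densities and the weights quoted above, and a density gradient of 0.1 applied to radii in km. The function and variable names are illustrative, and small differences from the figures in the text come from rounding (for instance, pi instead of 3.14).

```python
import math


def mean_density_fraction(gradient, radius):
    """Mean density within `radius` of the city centre, as a fraction of the centre
    density, for a density falling as exp(-gradient * distance)."""
    return 2 / gradient / radius**2 * (
        1 / gradient - math.exp(-gradient * radius) * (radius + 1 / gradient)
    )


def regression_fuel_load(density_k):
    """Fuel load in Gg/km^2 for a density in k person/km^2 (11 Mg/person, 8 Gg/km^2)."""
    return 11 * density_k + 8


GRADIENT = 0.1  # per km, assuming the radii below are in km
radius_15_kt = math.sqrt(13 / math.pi)              # km, burned area of 13 km^2
radius_189_kt = math.sqrt(13 * 189 / 15 / math.pi)  # km, burned area scaled with yield
area_factor = mean_density_fraction(GRADIENT, radius_189_kt) / mean_density_fraction(
    GRADIENT, radius_15_kt
)

# country: (Table 13 fuel load in g/cm^2, recent density, 2003 density, weight).
COUNTRIES = {
    "China": (50, 1.69, 1.78, 0.0154 / 2),
    "India": (35, 2.90, 2.53, 0.325),
    "Pakistan": (25, 2.21, 3.06, 0.079),
    "Russia": (18, 2.02, 2.00, 0.0154 / 2),
    "United States": (12, 1.47, 1.39, 0.118 + 0.0218),
}

fuel_loads = {
    country: base * regression_fuel_load(new) / regression_fuel_load(old) * area_factor
    for country, (base, new, old, _) in COUNTRIES.items()
}
total_weight = sum(weight for *_, weight in COUNTRIES.values())
countervalue_fuel_load = (
    sum(fuel_loads[country] * COUNTRIES[country][3] for country in COUNTRIES) / total_weight
)

print(f"area factor: {area_factor:.3f}")
print(f"countervalue fuel load: {countervalue_fuel_load:.1f} g/cm^2")
```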

For context, my available fuel per area for countervalue nuclear detonations is:

- 1.32 (= 21.1/16) times the 16 g/cm^2 used in the “base case simulations” of Wagman 2020.

- 7.18 (= 21.1/2.94) times the 2.94 g/cm^2 I think is implied by Toon 2008.

- 20.1 (= 21.1/1.05) and 16.1 (= 21.1/1.31) times the 1.05 and 1.31 g/cm^2 related to the rubble and non-rubble cases of Reisner 2018 (see Table 1 of Reisner 2019).

Famine deaths due to the climatic effects

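I expect 392 M deaths (= 0.0443*8.86*10^9) following a nuclear war which resulted in 22.1 Tg of soot being injected into the stratosphere. I found this multiplying:

- 4.43 % famine death rate due to the climatic effects for 22.1 Tg of soot injected into the stratosphere in a large nuclear war.

- 8.86 G people.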

I explain these estimates in the next sections.

Famine death rate due to the climatic effects

Defining large nuclear war

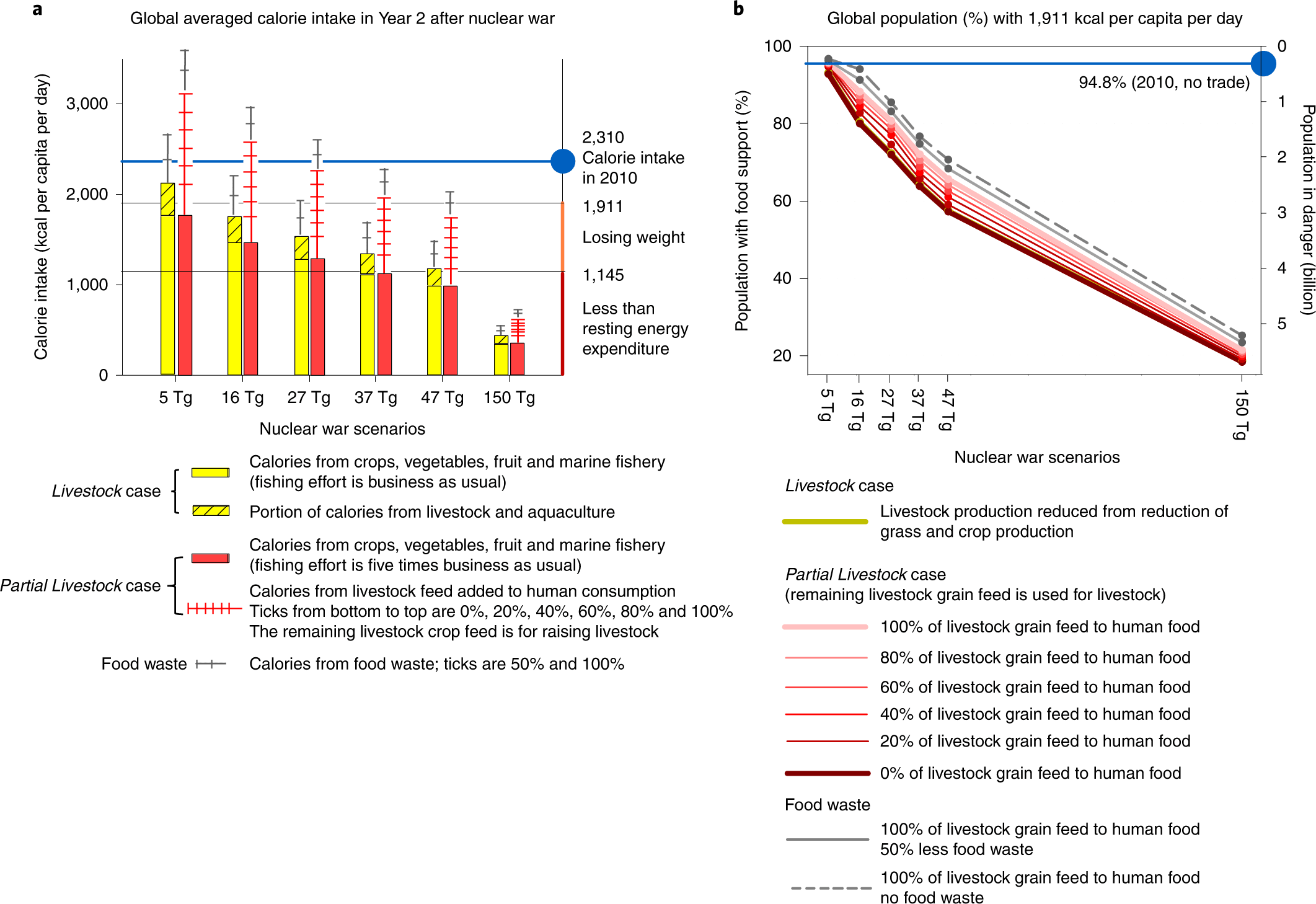

I agree with Christian that deaths in a nuclear war increase superlinearly with offensive nuclear detonations. Like Luisa, I guess famine deaths due to the climatic effects increase logistically with soot injected into the stratosphere. For simplicity, I approximate the logistic function as a piecewise linear function which is 0 for low levels of soot.

The minimum offensive nuclear detonations based on which I define a large nuclear war marks the end of the region for which famine deaths due to the climatic effects are 0. From Fig. 5b of Xia 2022, for the case in which there is no international food trade, all livestock grain is fed to humans, and there is no household food waste (top line), adjusted to include international food trade without equitable distribution dividing by 94.8 % food support “when food production does not change [0 Tg] but international trade is stopped”, there are no deaths for 10.5 Tg[39]. I guess the societal response will have an effect equivalent to assuming international food trade, all livestock grain being fed to humans, and no household food waste (see next section), so I supposed the famine deaths due to the climatic effects are negligible up to the climate change induced by 10.5 Tg of soot being injected into the stratosphere in Xia 2022.

I believe Xia 2022 overestimates the duration of the climatic effects, so I considered the linear part of the logistic function starts at 11.3 Tg (instead of 10.5 Tg):

- My estimate is that the e-folding time of stratospheric soot is 4.72 years (= (2*(1.4 + 2.3)/2 + 6 + 6.5 + (4.0 + 4.6)/2 + (8.4 + 8.7)/2 + 4)/(2 + 5)). This is a weighted mean of the estimates provided in Table 3 of Wagman 2020 for 6 different climate models[21], and a stratospheric soot injection of 5 Tg[40]. For the cases in which an interval was provided, I used the mean between the lower and upper bound[21]. I attributed 2 times as much weight to the “EAMv1” model introduced in that study as to each of the other models, because it sounds like it should be expected to be more accurate. “In this study, the global climate forcing and response is predicted by combining two atmospheric models, which together span the micro-scale to global scale processes involved”.

- In Xia 2022, “the atmospheric model is the Whole Atmosphere Community Climate Model version 4 [WACCM4]”, whose e-folding time is 8.55 years[21] (= (8.4 + 8.7)/2) according to Table 3 of Wagman 2020.

- If stratospheric soot decays exponentially with an e-folding time τ, the mean stratospheric soot over a time T, as a fraction of the initial soot S_0, is τ/T*(1 - e^(-T/τ))[41].

- In Xia 2022, “in all the simulations, the soot is arbitrarily injected during the week starting on May 15 of Year 1”, and 2010 is the baseline year. So the time from this week until the end of year 2 is T = 1.62 years (= (7.5 + 12)/12).

- For the e-folding time of Xia 2022 of 8.55 years, the mean stratospheric soot over the above time, as a fraction of the initial stratospheric soot, is 91.1 % (= 8.55/1.62*(1 - e^(-1.62/8.55))). So an initial stratospheric soot of 10.5 Tg results in a mean stratospheric soot over the above time of 9.57 Tg (= 0.911*10.5).

- For my e-folding time of 4.72 years, the mean stratospheric soot over the above time, as a fraction of the initial stratospheric soot, is 84.6 % (= 4.72/1.62*(1 - e^(-1.62/4.72))). So 11.3 Tg (= 9.57/0.846) of soot have to be injected into the stratosphere to induce the climate change associated with 10.5 Tg in Xia 2022.
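The steps above can be reproduced with a minimal Python sketch. The function and variable names are illustrative assumptions, not part of the original analysis, and the code keeps unrounded intermediate values, so results may differ slightly in the last digit from the rounded figures in the text. The mean fraction is the average of e^(-t/τ) over the interval from 0 to T.

```python
import math


def weighted_mean(values, weights):
    """Weighted arithmetic mean."""
    return sum(v * w for v, w in zip(values, weights)) / sum(weights)


def mean_soot_fraction(tau, T):
    """Mean of exp(-t/tau) for t in [0, T], i.e. tau/T*(1 - exp(-T/tau))."""
    return tau / T * (1 - math.exp(-T / tau))


# e-folding times (years) quoted in the text from Table 3 of Wagman 2020.
# Intervals are replaced by their midpoints. The first entry is the one
# given double weight in the text (EAMv1).
e_folding_times = [(1.4 + 2.3) / 2, 6, 6.5, (4.0 + 4.6) / 2, (8.4 + 8.7) / 2, 4]
weights = [2, 1, 1, 1, 1, 1]

tau_mine = weighted_mean(e_folding_times, weights)  # about 4.72 years
tau_xia = (8.4 + 8.7) / 2                           # 8.55 years (WACCM4)
T = (7.5 + 12) / 12                                 # about 1.62 years

frac_xia = mean_soot_fraction(tau_xia, T)    # about 0.911
frac_mine = mean_soot_fraction(tau_mine, T)  # about 0.846

# Injection needed under the shorter e-folding time to match the mean
# stratospheric soot of a 10.5 Tg injection in Xia 2022.
equivalent_injection = 10.5 * frac_xia / frac_mine  # about 11.3 Tg

print(round(tau_mine, 2), round(frac_xia, 3), round(frac_mine, 3))
print(round(equivalent_injection, 1))
```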

The similarity between the two soot injections just above (10.5 Tg and 11.3 Tg) means the shorter duration of the climatic effects makes only a minor difference. What matters is the severity of the worst initial years, and my e-folding time is still sufficiently long for these to be roughly as bad.

I estimated 0.0491 Tg of soot injected into the stratosphere per countervalue nuclear detonation, so I expect an injection of 11.3 Tg requires 230 (= 11.3/0.0491) countervalue nuclear detonations. Since I only expect 21.5 % of offensive nuclear detonations to be countervalue, I defined a large nuclear war as having at least 1.07 k (= 230/0.215) offensive nuclear detonations, and assume no famine deaths due to the climatic effects for fewer than that.
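As a quick check of this threshold, here is a small Python sketch using the inputs stated in the summary (189 kt per countervalue detonation and 2.60*10^-4 Tg/kt). The variable names are my own illustrative choices.

```python
# Inputs taken from the text; names are illustrative.
yield_per_countervalue_kt = 189
soot_per_yield_tg_per_kt = 2.60e-4
countervalue_share = 0.215
soot_threshold_tg = 11.3

# Soot per countervalue detonation, about 0.0491 Tg.
soot_per_countervalue_tg = yield_per_countervalue_kt * soot_per_yield_tg_per_kt

# Countervalue detonations needed to inject the threshold soot, about 230.
countervalue_needed = soot_threshold_tg / soot_per_countervalue_tg

# Offensive detonations defining a large nuclear war, about 1.07 k.
offensive_needed = countervalue_needed / countervalue_share

print(round(soot_per_countervalue_tg, 4))
print(round(countervalue_needed), round(offensive_needed))
```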

David thinks having famine deaths due to the climatic effects starting to increase linearly after an injection of soot into the stratosphere of 0 Tg is much more accurate than after 11.3 Tg, because there is already significant famine now. The deaths from nutritional deficiencies and protein-energy malnutrition were 252 k and 212 k in 2019, and I suspect the real death toll is about 1 order of magnitude higher[42]. Nevertheless, I am not trying to estimate all famine deaths. I am only attempting to arrive at the famine deaths due to the climatic effects, not those resulting directly or indirectly from infrastructure destruction. I expect infrastructure destruction will cause substantial disruptions to international food trade. As Matt Boyd commented:

Much of the catastrophic risk from nuclear war may be in the more than likely catastrophic trade disruptions, which alone could lead to famines, given that nearly 2/3 of countries are net food importers, and almost no one makes their own liquid fuel to run their agricultural equipment.

Relatedly, from Xia 2022:

Impacts in warring nations are likely to be dominated by local problems, such as infrastructure destruction, radioactive contamination and supply chain disruptions, so the results here apply only to indirect effects from soot injection in remote locations.

Famine death rate due to the climatic effects of large nuclear war

I would say the famine death rate due to the climatic effects of a large nuclear war would be 4.43 % (= 1 - (0.993 + (0.902 - 0.993)/(24.6 - 14.6)*(18.7 - 14.6))). I calculated this:

- For 22.1 Tg of soot injected into the stratosphere, i.e. a mean of 18.7 Tg (= 0.846*22.1) until the end of year 2.

- Supposing the famine death rate due to the climatic effects equals 1 minus the fraction of people with food support (1,911 kcal/person/d), which is plotted in Fig. 5b of Xia 2022.

- Getting the fraction of people with food support by linearly interpolating between the scenarios of Fig. 5b of Xia 2022 in which there is no international food trade, all livestock grain is fed to humans, and there is no household food waste (top line), adjusted to include international food trade without equitable distribution by dividing by the 94.8 % food support “when food production does not change [0 Tg] but international trade is stopped”[39]:

- 99.3 % (= 0.941/0.948) for an injection of soot into the stratosphere of 16 Tg, which corresponds to a mean of 14.6 Tg (= 0.911*16) until the end of year 2.

- 90.2 % (= 0.855/0.948) for an injection of soot into the stratosphere of 27 Tg, which corresponds to a mean of 24.6 Tg (= 0.911*27) until the end of year 2.
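The interpolation above can be sketched in Python as follows. The helper `interpolate` and the variable names are illustrative assumptions. Because the sketch keeps unrounded intermediate values, the result lands within about 0.1 percentage points of the 4.43 % obtained in the text from rounded values.

```python
def interpolate(x, x0, y0, x1, y1):
    """Linear interpolation between the points (x0, y0) and (x1, y1)."""
    return y0 + (y1 - y0) / (x1 - x0) * (x - x0)


TRADE_ADJUSTMENT = 0.948  # food support at 0 Tg without international trade
FRAC_XIA = 0.911          # mean soot fraction for Xia 2022's e-folding time
FRAC_MINE = 0.846         # mean soot fraction for the shorter e-folding time

# Food support from the top line of Fig. 5b of Xia 2022, adjusted for trade.
support_16 = 0.941 / TRADE_ADJUSTMENT  # about 0.993
support_27 = 0.855 / TRADE_ADJUSTMENT  # about 0.902

# Mean stratospheric soot until the end of year 2.
mean_16 = FRAC_XIA * 16        # about 14.6 Tg
mean_27 = FRAC_XIA * 27        # about 24.6 Tg
mean_mine = FRAC_MINE * 22.1   # about 18.7 Tg

support = interpolate(mean_mine, mean_16, support_16, mean_27, support_27)
famine_death_rate = 1 - support

print(f"{famine_death_rate:.2%}")
```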

Some reasons why my famine death rate due to the climatic effects may be too:

- Low:

- There would be disruptions to international food trade. I only assumed it would not in order to compensate for other factors, and because I guess it would mostly be a direct or indirect consequence of infrastructure destruction, not the climatic effects I am interested in.

- Xia 2022 assumes there is no disruption of national trade, nor of international non-food trade. This includes important inputs to agriculture, such as agricultural machinery, fertilisers, fuel, pesticides, and seeds.

- Not all livestock grain would be fed to humans. I only assumed it would in order to compensate for other factors.

- There would be some household food waste, but arguably not much. I also assumed it would not in order to compensate for other factors.

- Some food would go to people who would die. I assumed it would not (by getting the famine death rate due to the climatic effects from 1 minus the fraction of people with food support), for simplicity, and in order to compensate for other factors.

- Lower consumption of healthy food. “While this [Xia 2022’s] analysis focuses on calories, humans would also need proteins and micronutrients to survive the ensuing years of food deficiency (we estimate the impact on protein supply in Supplementary Fig. 3)”. On this topic, you can check Pham 2022.

- High:

- Foreign aid to the more affected countries, including international food assistance.

- Increase in meat production per capita from 2010, which is the reference year in Xia 2022, to 2037[43].

- Increase in real GDP per capita from 2010 to 2037 (see graph below).

- In Xia 2022:

- “Scenarios assume that all stored food is consumed in Year 1”, i.e. no rationing.

- “We do not consider farm-management adaptations such as changes in cultivar selection, switching to more cold-tolerating crops or greenhouses and alternative food sources such as mushrooms, seaweed, methane single cell protein, insects, hydrogen single cell protein and cellulosic sugar”.

- “Large-scale use of alternative foods, requiring little-to-no light to grow in a cold environment, has not been considered but could be a lifesaving source of emergency food if such production systems were operational”.

- “Byproducts of biofuel have been added to livestock feed and waste. Therefore, we add only the calories from the final product of biofuel in our calculations”. However, it would have been better to redirect to humans the crops used to produce biofuels.

- The minimum calorie supply is 1,911 kcal/person/d. In reality, lower values are possible with an apparently tiny death rate from malnutrition:

- The calorie supply in the Central African Republic (CAR) in 2015 was 1,729 kcal/person/d.

- The disease burden from nutritional deficiencies in that year was 143 kDALY, which corresponds to 2.80 k deaths (= 143*10^3/51) based on the 51 DALY/life implied by GiveWell’s moral weights[44].

- The above number of deaths amounts to 0.0581 % (= 2.80*10^3/(4.82*10^6)) of CAR’s population in 2015.

- Lower consumption of unhealthy food.

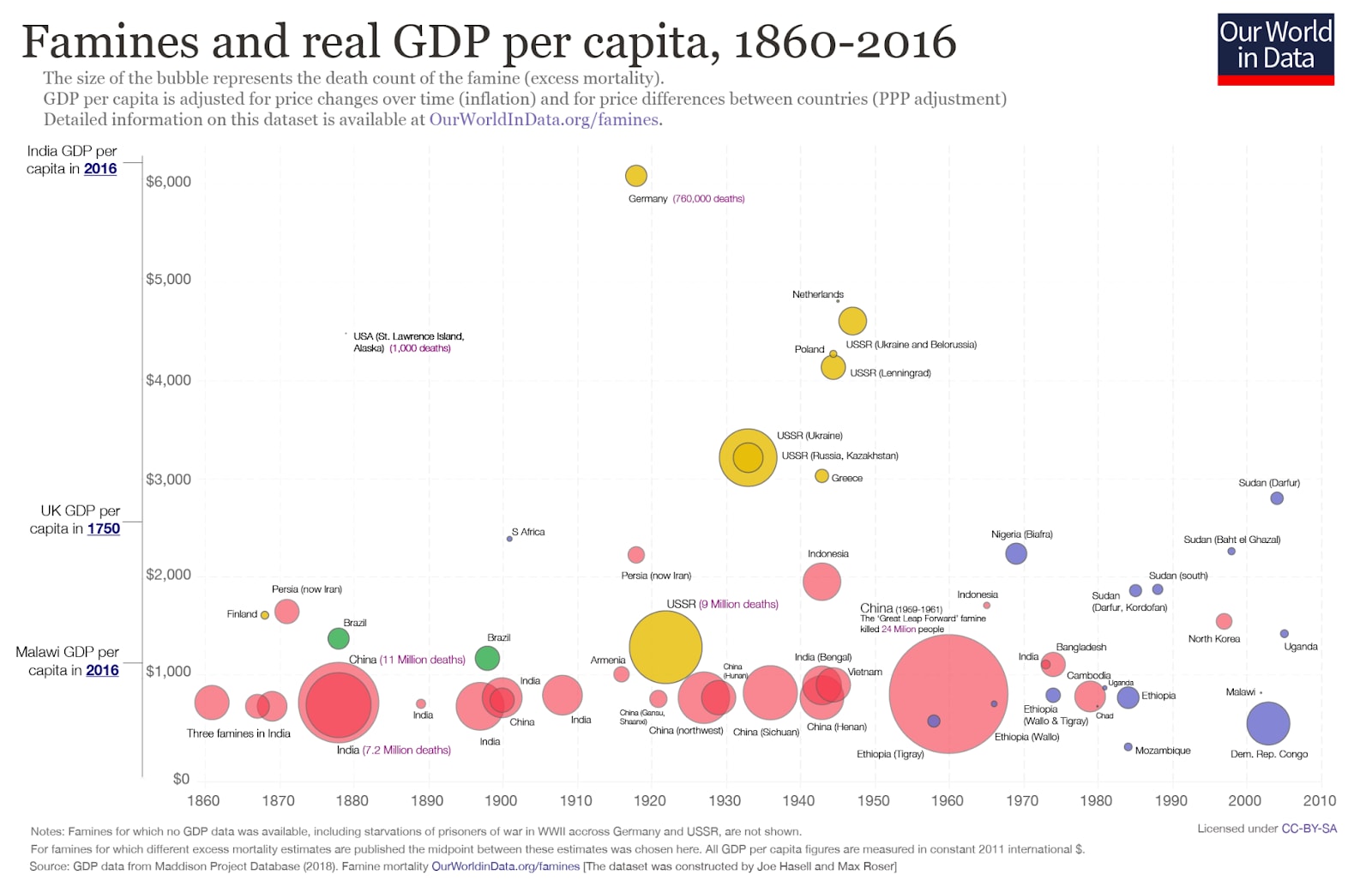

I stipulate the above roughly cancel out, although I am not so confident. I think high income countries without significant infrastructure destruction would respond particularly well. Historically, famines have only affected countries with low real GDP per capita.

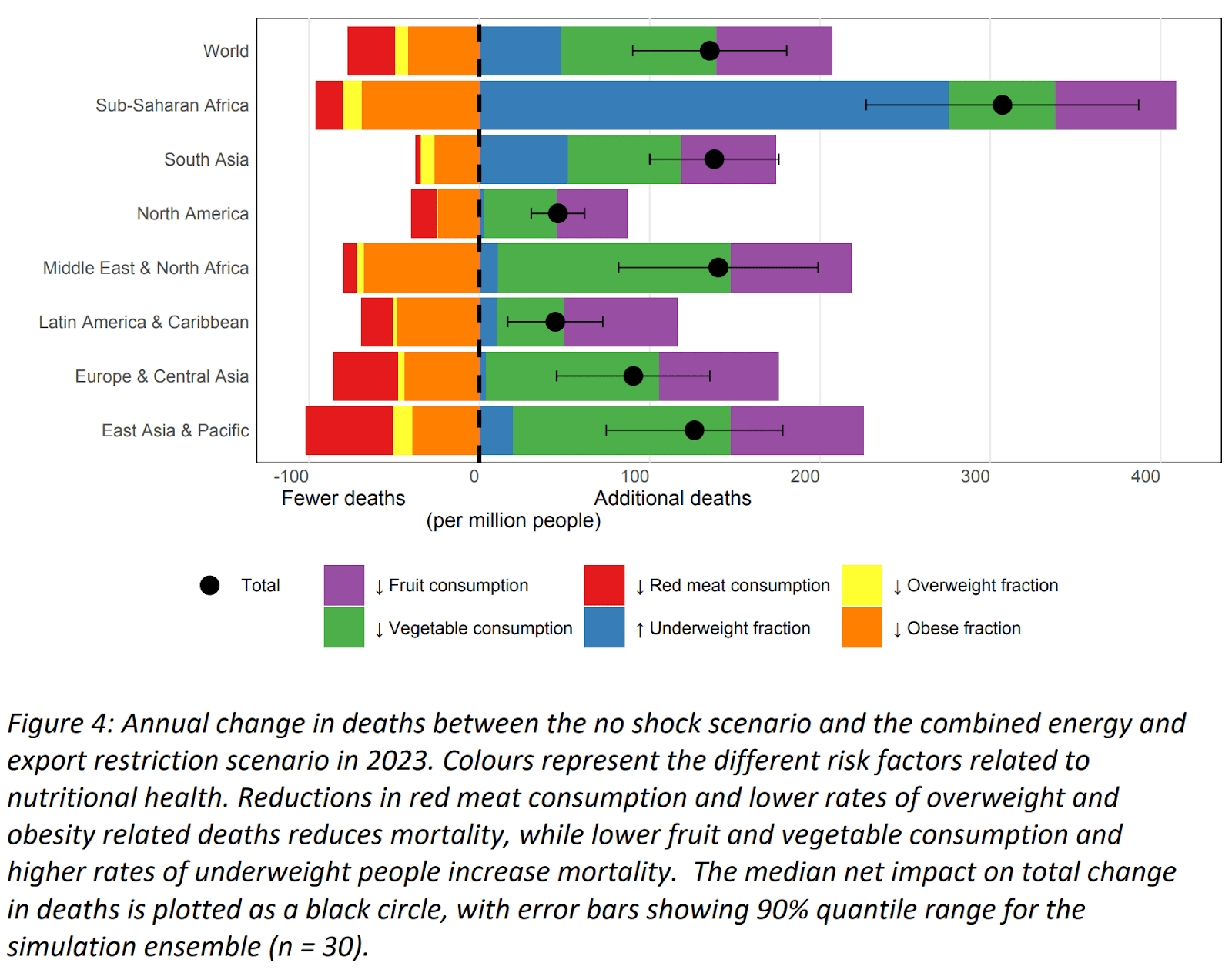

On the topic of lower consumption of healthy and unhealthy food, Alexander 2023 studies the effect of energy and export restrictions on deaths due to changes in the consumption of red meat, fruits and vegetables, and in the fraction of the population that is underweight, overweight and obese. Lower red meat consumption, and fewer people being overweight and obese, decrease deaths. Lower consumption of fruits and vegetables, and more people being underweight, increase deaths. The results of the study are below.

The figure suggests the net effect corresponds to an increase in deaths. I am confident this would be the case for Sub-Saharan Africa, but not so much for other regions. The fraction of calories coming from animals increases with GDP per capita, so cheaper diets have a lower fraction of calories coming from meat, and the relative reduction in meat consumption would be higher than that in fruits and vegetables. I think Alexander 2023 takes this into account:

As prices increase, the model represents a consumption shift away from ‘luxury’ goods such as meat, fruit, and vegetables back towards staple crops, as well as lower consumption overall.

Alexander 2023 still concludes higher prices would lead to more deaths, but I wonder whether rationing efforts would ensure sufficient consumption of fruits and vegetables. I sense the deaths owing to decreased consumption of fruits and vegetables are overestimated in the figure above, but I have barely looked into the question.

Population

I considered a global population of 8.86 G (= (8.61 + (9.59 - 8.61)/(2052 - 2032)*(2037 - 2032))*10^9):

- For 2037 (= (2024 + 2050)/2), which is midway from now until 2050.

- Linearly interpolating between Metaculus’ median community predictions on 3 September 2023 for:

- 2032, 8.61 G.

- 2052, 9.59 G.
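The population figure is a plain linear interpolation, which can be sketched as below. The variable names are illustrative.

```python
# Metaculus' median community predictions quoted in the text (3 September 2023).
year_a, pop_a = 2032, 8.61e9
year_b, pop_b = 2052, 9.59e9

# Midpoint between 2024 and 2050.
target_year = (2024 + 2050) / 2  # 2037

population = pop_a + (pop_b - pop_a) / (year_b - year_a) * (target_year - year_a)

print(f"{population / 1e9:.3f} G")  # about 8.86 G after rounding
```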
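The population figure is a plain linear interpolation, which can be sketched as below. The variable names are illustrative.

```python
# Metaculus' median community predictions quoted in the text (3 September 2023).
year_a, pop_a = 2032, 8.61e9
year_b, pop_b = 2052, 9.59e9

# Midpoint between 2024 and 2050.
target_year = (2024 + 2050) / 2  # 2037

population = pop_a + (pop_b - pop_a) / (year_b - year_a) * (target_year - year_a)

print(f"{population / 1e9:.3f} G")  # about 8.86 G after rounding
```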

Uncertainty

To obtain a distribution for the famine death rate due to the climatic effects of a large nuclear war, without running a Monte Carlo simulation, I assumed a beta distribution with a ratio between the 95th and 5th percentiles equal to 702 (= e^((ln(3.70)^2 + ln(4.39)^2 + ln(68.3)^2 + ln(100)^2)^0.5)). This is the result of supposing the following factors follow independent lognormal distributions with ratios between the 95th and 5th percentiles equal to[45]:

- 3.70 (= 4.11*10^3/(1.11*10^3)), which is the ratio between my 95th and 5th percentile offensive nuclear detonations for a large nuclear war.

- 4.39 (= 290/66.1), which is the ratio between the maximum and minimum mean yield of the United States nuclear warheads in 2023 for a large nuclear war.

- 68.3 (= 0.00215/(3.15*10^(-5))), which is the ratio between the soot injected into the stratosphere per countervalue yield I inferred for (not directly retrieved from) Reisner 2018 and Reisner 2019, and Toon 2007 and Toon 2008.

- 100, which is my out of thin air guess for the ratio between the 95th and 5th percentile famine death rate due to the climatic effects for an actual (not expected) injection of soot into the stratosphere of 22.1 Tg. A key contributing factor to such a high ratio is uncertainty in societal response. If I changed the ratio to:

- 10 (10 % as large), the overall ratio would become 181, i.e. 25.8 % (= 181/702) as large.

- 1 k (10 times as large), the overall ratio would become 4.16 k, i.e. 5.93 (= 4.16*10^3/702) times as large.

Simpler approaches to determine the ratio would lead to significantly different results:

- The maximum of the above ratios is 14.2 % (= 100/702) of my ratio. Using the maximum would only be fine if the factors were more like normal distributions.

- The product of the above ratios is 158 (= 3.70*4.39*68.3*100/702) times as large as mine. Using this product would only be correct if all the factors were perfectly correlated.
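The combination rule used above follows from the fact that, for a lognormal distribution, the logarithm of the ratio between the 95th and 5th percentiles is proportional to the standard deviation of the underlying normal, and that variances of independent factors add under multiplication. A minimal Python sketch, with illustrative names, reproduces the overall ratio, the two sensitivity cases, and the two simpler approaches:

```python
import math


def combined_ratio(ratios):
    """95th/5th percentile ratio of a product of independent lognormals,
    given the 95th/5th percentile ratio of each factor."""
    return math.exp(math.sqrt(sum(math.log(r) ** 2 for r in ratios)))


# Ratios quoted in the text: detonations, yield, soot per yield, death rate.
ratios = [3.70, 4.39, 68.3, 100]

overall = combined_ratio(ratios)                      # about 702
with_10 = combined_ratio([3.70, 4.39, 68.3, 10])      # about 181
with_1k = combined_ratio([3.70, 4.39, 68.3, 1000])    # about 4.16 k

# Simpler approaches, relative to the overall ratio.
max_share = max(ratios) / overall                # about 14.2 %
product_multiple = math.prod(ratios) / overall   # about 158

print(round(overall), round(with_10), round(with_1k))
print(f"{max_share:.1%}", round(product_multiple))
```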

Ideally, I would have run a Monte Carlo simulation with my best guess distributions, instead of assuming just lognormals. Regardless, I would have used independent distributions for simplicity, so the results would arguably be similar.

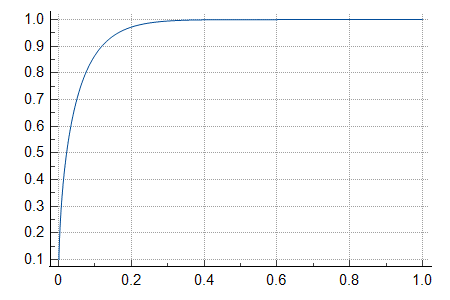

For an expected famine death rate due to the climatic effects of 4.43 %, a beta distribution with 95th percentile 702 times the 5th percentile has alpha and beta parameters equal to 0.522 and 11.3. The respective CDF is below. The horizontal axis is the famine death rate due to the climatic effects, and the vertical one the probability of less than a certain death rate. The 5th and 95th percentile famine death rate due to the climatic effects are 0.0233 % and 16.4 %, which correspond to 2.06 M (= 2.33*10^-4*8.86*10^9) and 1.45 G (= 0.164*8.86*10^9) deaths given at least one offensive nuclear detonation before 2050.

Given my 3.30 % probability of a large nuclear war before 2050, there is a 96.7 % (= 1 - 0.0330) chance of negligible famine deaths due to the climatic effects before then, thus my 5th percentile deaths before 2050 are 0 (0.05 < 0.967). My 95th percentile corresponds to the 84.4th percentile (= 1 - (1 - 0.95)/0.32) famine death rate due to the climatic effects given at least one offensive nuclear detonation before 2050[46], which is 9.06 %[47], equivalent to 803 M (= 0.0906*8.86*10^9) deaths.

Summarising, since there are 26 years (= 2050 - 2024) before 2050, my best guess for the annual famine deaths due to the climatic effects of nuclear war before then is 496 k (= 12.9*10^6/26), and my 5th and 95th percentile are 0 and 30.9 M (= 803*10^6/26). My 95th percentile is 62.3 (= 30.9*10^6/(496*10^3)) times my best guess, which means there is lots of uncertainty.
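The percentile mapping and the annualised figures can be checked with a short Python sketch. All inputs are the values quoted in the text, the variable names are illustrative, and the 9.06 % death rate is taken as given rather than recomputed from the beta distribution.

```python
P_DETONATION = 0.32    # probability of at least one offensive detonation before 2050
POPULATION = 8.86e9
EXPECTED_DEATHS = 12.9e6
YEARS = 2050 - 2024    # 26

# Unconditional 95th percentile mapped to the conditional distribution.
conditional_percentile = 1 - (1 - 0.95) / P_DETONATION  # about 0.844

# Deaths at that conditional percentile (9.06 % death rate from the text).
p95_deaths = 0.0906 * POPULATION  # about 803 M

annual_best_guess = EXPECTED_DEATHS / YEARS  # about 496 k
annual_p95 = p95_deaths / YEARS              # about 30.9 M
ratio = annual_p95 / annual_best_guess       # about 62

print(round(conditional_percentile, 3))
print(round(annual_best_guess), round(annual_p95), round(ratio, 1))
```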

For context, my best guess for the annual famine deaths due to the climatic effects is similar to the 415 k deaths caused by homicides in 2019, and my 95th percentile is similar to the 28.6 M (= (18.56 + 10.08)*10^6) caused by cardiovascular diseases and cancers in 2019.

Bear in mind my estimates only refer to the famine deaths due to the climatic effects. I exclude famine deaths resulting directly or indirectly from infrastructure destruction, and heat mortality.