Unflattering aspects of Effective Altruism

By NunoSempere @ 2024-03-15T10:47 (+192)

I've been writing a few posts critical of EA over at my blog. They might be of interest to people here:

- Unflattering aspects of Effective Altruism

- Alternative Visions of Effective Altruism

- Auftragstaktik

- Hurdles of using forecasting as a tool for making sense of AI progress

- Brief thoughts on CEA’s stewardship of the EA Forum

- Why are we not harder, better, faster, stronger?

- ...and there are a few smaller pieces on my blog as well.

I appreciate comments and perspectives anywhere, but prefer them over at the individual posts at my blog, since I have a large number of disagreements with the EA Forum's approach to moderation, curation, aesthetics or cost-effectiveness.

JWS @ 2024-03-15T21:56 (+25)

Important Update: I've made some changes to this comment given the feedback by Nuño & Arepo.[1] I was originally using strikethroughs, but this seemed to make it very hard to read, so I've instead edited it inline. The comment is therefore now fairly different from the original one (though I think that's for the better).

On reflection, I think that Nuño and I are very different people, with different backgrounds, experiences with EA, and approaches to communication. This leads to a large 'inferential distance' between us. For example:

Nuño wrote: "I disagree with the EA Forum's approach to life"

They meant: "I have a large number of disagreements with the EA Forum's approach to moderation, curation, aesthetics or cost-effectiveness"

I interpreted: "I have significant issues with the kind of people who run and use the EA Forum"

or

Nuño wrote: "I feel that EA leadership uses worries about the dangers of maximization to constrain the rank and file in a hypocritical way."

They might mean:[2] "OpenPhil's messaging has been inconsistent with their actions, and they're squandering the potential of the rank and file EA membership"

I interpreted: "OpenPhil staff are deliberately and knowingly deceptive in their communications when making non-maximalist/worldview diversification arguments, and are intentionally using them to maintain control of the community in a malicious way"

While some of my interpretations were obviously not what Nuño intended to communicate, I think this is partly due to Nuño's bellicose framings (his words, see Unflattering aspects of Effective Altruism footnote-3), which were unhelpful for productive communication on a charged issue. I still maintain that EA is primarily a set of ideas,[3] not institutions, and it's important to make this distinction when criticising EA organisations (or 'The EA Machine'). In retrospect, I wonder if it should have been titled something like "Unflattering Aspects of how EA is structured", which I'd have a lot of agreement with in many respects.

I wasn't sure what to make of this, personally. I appreciate a valued member of the community offering criticism of the establishment/orthodoxy, but some of this just seemed... off to me. I've weakly down-voted, and I'll try to explain some of the reasons why below:

Nuño's criticism of the EA Forum seems to be:

i) not worth the cost (which, fair),

ii) not as lean a web interface as it could be (which is more personal preference than a reason to step away from all EA)

and iii) overly heavy handed on personal moderation.

But the examples Nuño gives in the Brief thoughts on CEA’s stewardship of the EA Forum post, especially Sabs, seem to me to be people being incredibly rude and not contributing to the Forum in helpful or especially truth-seeking ways. Nuño does provide some explanation (see the links Arepo provides), but not in the 'Unflattering Aspects' post, and I think that causes confusion. Even in Nuño's comment on another chain, I don't understand summarising their disagreement as "I disagree with the EA Forum's approach to life". Nuño has since changed that phrasing, and I think the new wording is better.

Still, it seemed like a very odd turn of phrase to use initially, and one that was unproductive for getting their point across, which is one of my other main concerns about the post. To me, some of the language in Unflattering aspects of Effective Altruism appeared hostile and did not provide much context for readers. For example:[4] I don't think the Forum is "now more of a vehicle for pushing ideas CEA wants you to know about", and I don't think OpenPhil uses "worries about the dangers of maximization to constrain the rank and file in a hypocritical way". I don't think that one needs to "pledge allegiance to the EA machine" in order to be considered an EA. It's just not the EA I've been involved with, and I'm definitely not part of the 'inner circle' and have no special access to OpenPhil's attention or money. I think people will find a very similar criticism expressed more clearly and helpfully in Michael Plant's What is Effective Altruism? How could it be improved? post.

There are some parts of the essay where Nuño and I very much agree. I think the points about the leadership not making itself accountable to the community are very valid, and a key part of what Third Wave Effective Altruism should be. I think depicting it as a "leadership without consent" is pointing at something real, and in the comments on Nuño's blog Austin Chen is saying a lot that makes sense. I agree with Nuño that the 'OpenPhil switcheroo' phenomenon is concerning and bad when it happens. Maybe this is just a semantic difference in what Nuño and I mean by 'EA', but to me EA is more than OpenPhil. If tomorrow Dustin decided to wind down OpenPhil in its entirety, I don't think the arguments in Famine, Affluence, and Morality lose their force, or that factory farming becomes any less of a moral catastrophe, or that we should not act prudently on our duties toward future generations.

Furthermore, while criticising OpenPhil and EA leadership, Nuño appears to claim that these organisations need to do more 'unconstrained' consequentialist reasoning,[5] whereas my intuition is that many in the community see the failure of SBF/FTX as a case where that form of unconstrained consequentialism went disastrously wrong. While many of you may be very critical of EA leadership and OpenPhil, I suspect many of you will be critiquing that orthodoxy from exactly the opposite direction. This is probably the weakest concern I have with the piece though, especially on reflection.

- ^

The edits are still under construction - I'd appreciate everyone's patience while I finish them up.

- ^

I'm actually not sure what the right interpretation is

- ^

And perhaps the actions they lead to if you buy moral internalism

- ^

I think it's likely that Nuño means something very different with this phrasing than I do, but I think the mix of ambiguity/hostility can lead these extracts to be read in this way

- ^

Not to say that Nuño condones SBF or his actions in any way. I think this is just another case of where someone's choice to get off the 'train to crazy town' can be viewed as another's 'cop-out'.

NunoSempere @ 2024-03-15T22:36 (+24)

Hey, thanks for the comment. Indeed, something I was worried about with the later post was whether I was a bit unhinged (but the converse is, am I afraid to point out dynamics that I think are correct?). I dealt with this by first asking friends for feedback, then posting it but not distributing it very widely; then, once I got some comments (some of them private) saying that this also corresponded to other people's impressions, I decided to share it more widely.

The examples Nuño gives...

You are picking on the weakest example. The strongest one might be Sapphire. A more recent one might have been John Halstead, who had a bad day, and despite his longstanding contributions to the community was treated with very little empathy and left the forum.

Furthermore, while criticising OpenPhil/EA 'leadership', Nuňo doesn't make points that EA is too naïve/consequentialist/ignoring common-sense enough. Instead, they don't think we've gone far enough into that direction.[1] See in Alternate Visions of EA, the claim "if you aren’t producing an SBF or two, then your movement isn’t being ambitious enough". In a comment reply in Why are we not harder, better, faster, stronger?, they say "There is a perspective from which having a few SBFs is a healthy sign." While many of you may be very critical of EA leadership and OpenPhil, I suspect many of you will be critiquing that orthodoxy from exactly the opposite direction. Be aware of this if you're upvoting.

I think this paragraph misrepresents me:

- I don't claim that "if you aren’t producing an SBF or two, then your movement isn’t being ambitious enough". I explore different ways EA could look, and then write "From the creators of “if you haven’t missed a flight, you are spending too much time in airports” comes “if you aren’t producing an SBF or two, then your movement isn’t being ambitious enough.”". The first is a bold assertion, the second one is reasonable to present in the context of exploring varied possibilities.

- The full context for the other quote is "There is a perspective from which having a few SBFs is a healthy sign. Sure, you would rather have zero, but the extreme in which one of your members scams billions seems better than one in which your followers are bogged down writing boring plays, or never organize to do meaningful action. I'm not actually sure that I do agree with this perspective, but I think there is something to it." (bold mine). Another way to word this less provocatively is: even with SBF, I think the EA community has had positive impact.

- In general, I think picking quotes out of context just seems deeply hostile.

"First, their priorities are different from mine" (so what?)

So if leadership has priorities different from the rest of the movement, the rest of the movement should be more reluctant to follow. But this is for people to decide individually, I think.

"the EA machine has been making some weird and mediocre moves" (would like some actual examples on the object-level of this)

but without evidence to back this up

You can see some examples in section 5.

A view towards maximisation above all,

I think the strongest version of my current beliefs is that quantification is underdeployed on the margin and it can unearth Pareto improvements. This is joined with an impression that we should generally be much more ambitious. This doesn't require me to believe that more maximization will always be good, only that, at the current margin, more ambition is.

JWS @ 2024-03-16T10:01 (+3)

Really appreciate your reply Nuno, and apologies if I've misrepresented you, or if I'm coming across as overly hostile. I'll edit my original comment given your & Arepo's response. I think part of why I posted my comment (even though I was nervous to) is that you're a highly valued member of the community[1], and your criticisms are listened to and carry weight. I am/was just trying to do my part to kick the tires, and distinguish criticisms I think are valid/supported from those which are less so.

On the object level claims, I'm going to come over to your home turf (blog) and discuss it there, given you expressed a preference for it! Though if you don't think it'll be valuable for you, then by all means feel free to not engage. I think there are actually lots of points where we agree (at least directionally), so I hope it may be productive, or at least useful for you if I can provide good/constructive criticism.

- ^

I very much value you and your work, even if I disagree

Arepo @ 2024-03-16T03:29 (+14)

I think much of this criticism is off. There are things I would disagree with Nuno on, but most of what you're highlighting doesn't seem to fairly represent his actual concerns.

Nuño never argues for why the comments they link to shouldn't be moderated

He does. Also, I suspect his main concern is with people being banned rather than having their posts moderated.

Nuňo doesn't make points that EA is too naïve/consequentialist/ignoring common-sense enough. Instead, they don't think we've gone far enough into that direction. See in Alternate Visions of EA, the claim "if you aren’t producing an SBF or two, then your movement isn’t being ambitious enough". In a comment reply in Why are we not harder, better, faster, stronger?, they say "There is a perspective from which having a few SBFs is a healthy sign."

I don't know what Nuno actually believes, but he carefully couches both of these as hypotheticals, so I don't think you should cite them as things he believes. (in the same section, he hypothetically imagines 'What if EA goes (¿continues to go?) in the direction of being a belief that is like an attire, without further consequences. People sip their wine and participate in the petty drama, but they don’t act differently.' - which I don't think he advocates).

Also, you're equivocating between the claim that EA is too naive (which he certainly seems to believe), too consequentialist (which I suspect but don't know he believes), or ignores common sense (which I imagine he believes), what he's actually said he believes - that it should optimise more vigorously - and what the hypotheticals you quote say.

"the EA machine has been making some weird and mediocre moves" (would like some actual examples on the object-level of this)

I'm not sure what you want here - his blog is full of criticisms of EA organisations, including those linked in the OP.

"First, their priorities are different from mine" (so what?)

He literally links to why he thinks their priorities are bad in the same sentence.

a conflation of Effective Altruism with Open Philanthropy with a disregarding of things that fall in the former but not the latter

I don't think it's reasonable to assert that he conflates them in a post that estimates the degree to which OP money dominates the EA sphere, that includes the header 'That the structural organization of the movement is distinct from the philosophy', and that states 'I think it makes sense for the rank and file EAs to more often do something different from EA™'. I read his criticism as being precisely that EA, the non-OP part of the movement has a lot of potential value, which is being curtailed by relying too much on OP.

A view towards maximisation above all, and paranoia that concerns about unconstrained maximisation are examples of "EA leadership uses worries about the dangers of maximization to constrain the rank and file in a hypocritical way" but without evidence to back this up as an actual strategy.

I think you're misrepresenting the exact sentence you quote, which contains the modifier 'to constrain the rank and file in a hypocritical way'. I don't know how in favour of maximisation Nuno is, but what he actually writes about in that section is the ways OP has pursued maximising strategies of their own that don't seem to respect the concerns they profess.

You don't have to agree with him on any of these points, but in general I don't think he's saying what you think he's saying.

JWS @ 2024-03-16T09:54 (+10)

Hey Arepo, thanks for the comment. I wasn't trying to deliberately misrepresent Nuño, but I may have made inaccurate inferences, and I'm going to make some edits to clear up confusion I might have introduced. Some quick points of note:

- On the comments/Nuño's stance, I only looked at the direct comments (and parent comment where I could) in the ones he linked to in the 'EA Forum Stewardship' post, so I appreciate the added context. Having read that, though, I can't really square a disagreement about moderation policy with "I disagree with the EA Forum's approach to life" - like the latter seems so out-of-distribution to me as a response to the former.

- On the not going far-enough paragraph, I think that's a spelling/grammar mistake on my part - I think we're actually agreeing? I think many people's take away from SBF/FTX is the danger of reckless/narrow optimisation, but to me it seems that Nuno is pointing in the other direction. Noted on the hypotheticals, I'll edit those to make that clearer, but I think they're definitely pointing to an actually-held position that may be more moderate, but still directionally opposite to many who would criticise 'institutional EA' post FTX.

- On the EA machine/OpenPhil conflation - I somewhat get your take here, but on the other hand the post isn't titled "Unflattering things about the EA machine/OpenPhil-Industrial-Complex", it's titled "Unflattering things about EA". Since EA is, to me, a set of beliefs I think are good, then it reads as an attack on the whole thing which is then reduced to 'the EA machine', which seems to further reduce to OpenPhil. I think footnote 4 also scans as saying that the definition of an EA is someone who 'pledge[s] allegiance to the EA machine' - which doesn't seem right to me either. I think, again, that Michael's post raised similar concerns about OpenPhil dominance in a clearer way to me.

- Finally, on the maximisation above all point, I'm not really sure of this point? Like I think the 'constrain the rank and file' is just wrong? Maybe because I'm reading it as "this is OpenPhil's intentional strategy with this messaging" and not "functionally, this is the effect of the messaging even if unintended". I think the point where I agree with Nuño here is about the lack of responsiveness to feedback that some EA leadership shows, not in terms of 'deliberate constraints'.

Perhaps part of this is that, while I did read some of Nuño's other blogs/posts/comments, there's a lot of context which is (at least from my background) missing in this post. For some people it really seems to have captured their experience and so they can self-provide that context, but I don't, so I've had trouble doing that here.

NunoSempere @ 2024-03-16T12:04 (+23)

"Unflattering things about the EA machine/OpenPhil-Industrial-Complex", it's titled "Unflattering things about EA". Since EA is, to me, a set of beliefs I think are good, then it reads as an attack on the whole thing which is then reduced to 'the EA machine', which seems to further reduce to OpenPhil

I think this reduction is correct. Like, in practice, I think some people start with the abstract ideas but then suffer a switcheroo where it's like: oh well, I guess I'm now optimizing for getting funding from Open Phil/getting hired at this limited set of institutions/etc. I think the switcheroo is bad. And I think that conceptualizing EA as a set of beliefs is just unhelpful for noticing this dynamic.

But I'm repeating myself, because this is one of the main threads in the post. I have the weird feeling that I'm not being your interlocutor here.

JWS @ 2024-03-18T12:39 (+17)

Hey Nuño,

I've updated my original comment, hopefully to make it more fair and reflective of the feedback you and Arepo gave.

I think we actually agree in lots of ways. I think that the 'switcheroo' you mention is problematic, and a lot of the 'EA machinery' should get better at improving its feedback loops both internally and with the community.

I think at some level we just disagree with what we mean by EA. I agree that thinking of it as a set of ideas might not be helpful for this dynamic you're pointing to, but to me that dynamic isn't EA.[1]

As for not being an interlocutor here, I was originally going to respond on your blog, but on reflection I think I need to read (or re-read) the blog posts you've linked in this post to understand your position better and look at the examples/evidence you provide in more detail. Your post didn't connect with me, but it did for a lot of people, so I think it's on me to go away and try harder to see things from your perspective and do my bit to close that 'inferential distance'.

I wasn't intentionally trying to misrepresent you or be hostile, and to the extent I did I apologise. I very much value your perspectives and I hope to keep reading them in the future, and for the EA community to reflect on them and improve.

- ^

To me EA is not the EA machine, and the EA machine is not (though may be 90% funded by) OpenPhil. They're obviously connected, but not the same thing. In my edited comment, the 'what if Dustin shut down OpenPhil' scenario proves this.

NunoSempere @ 2024-03-16T11:35 (+5)

I see saying that I disagree with the EA Forum's "approach to life" rubbed you the wrong way. It seemed low cost, so I've changed it to something more wordy.

NunoSempere @ 2024-03-16T11:45 (+4)

I think people will find a very similar criticism expressed more clearly and helpfully in Michael Plant's What is Effective Altruism? How could it be improved? post

I disagree with this. I think that by reducing the ideas in my post to those of that previous one, you are missing something important in the reduction.

Eric Neyman @ 2024-03-16T05:42 (+12)

(Comment is mostly cross-posted comment from Nuño's blog.)

In "Unflattering aspects of Effective Altruism", you write:

Third, I feel that EA leadership uses worries about the dangers of maximization to constrain the rank and file in a hypocritical way. If I want to do something cool and risky on my own, I have to beware of the “unilateralist curse” and “build consensus”. But if Open Philanthropy donates $30M to OpenAI, pulls a not-so-well-understood policy advocacy lever that contributed to the US overshooting inflation in 2021, funds Anthropic13 while Anthropic’s President and the CEO of Open Philanthropy were married, and romantic relationships are common between Open Philanthropy officers and grantees, that is ¿an exercise in good judgment? ¿a good ex-ante bet? ¿assortative mating? ¿presumably none of my business?

I think the claim that Open Philanthropy is hypocritical re: the unilateralist's curse doesn't quite make sense to me. To explain why, consider the following two scenarios.

Scenario 1: you and 999 other smart, thoughtful people have a button. You know there are 1000 people with such a button. If anyone presses the button, all mosquitoes will disappear.

Scenario 2: you and you alone have a button. You know that you're the only person with such a button. If you press the button, all mosquitoes will disappear.

The unilateralist's curse applies to Scenario 1 but *not* Scenario 2. That's because, in Scenario 1, your estimate of the counterfactual impact of pressing the button should be your estimate of the expected utility of all mosquitoes disappearing, *conditioned on no one else pressing the button*. In Scenario 2, where no one else has the button, your estimate of the counterfactual impact of pressing the button should be your estimate of the (unconditional) expected utility of all mosquitoes disappearing.

So, at least the way I understand the term, the unilateralist's curse refers to the fact that taking a unilateral action is worse than it naively appears, *if other people also have the option of taking the unilateral action*.
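As a rough numerical illustration of the difference between the two scenarios, here is a minimal sketch under an assumed toy model that is not part of the original comment: each button-holder independently sees the true value of the action plus Gaussian noise, and presses if their own estimate is positive. The function names and the specific numbers are hypothetical.

```python
import math


def press_probability(true_value: float, noise_sd: float) -> float:
    """Chance that one agent's noisy estimate is positive.

    Assumed model: estimate ~ Normal(true_value, noise_sd).
    """
    return 0.5 * (1.0 + math.erf(true_value / (noise_sd * math.sqrt(2.0))))


def prob_anyone_presses(true_value: float, noise_sd: float, n_agents: int) -> float:
    """Chance that at least one of n independent agents presses the button."""
    p = press_probability(true_value, noise_sd)
    return 1.0 - (1.0 - p) ** n_agents


# Hypothetical case: the action is actually net negative (true value -1),
# and each agent's estimate has noise with standard deviation 1.
for n in (1, 10, 1000):
    print(n, round(prob_anyone_presses(-1.0, 1.0, n), 4))
```

In this toy model, a lone button-holder (Scenario 2) only presses a bad button when their own estimate happens to be wrong, whereas with many holders (Scenario 1) it becomes close to certain that someone's estimate is wrong enough to press. That is why the decision in Scenario 1 should be conditioned on nobody else having pressed.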

This relates to Open Philanthropy because, at the time of buying the OpenAI board seat, Dustin was one of the only billionaires approaching philanthropy with an EA mindset (maybe the only?). So he was sort of the only one with the "button" of having this option, in the sense of having considered the option and having the money to pay for it. So for him it just made sense to evaluate whether or not this action was net positive in expectation.

Now consider the case of an EA who is considering launching an organization with a potentially large negative downside, where the EA doesn't have some truly special resource or ability. (E.g., AI advocacy with inflammatory tactics -- think DxE for AI.) Many people could have started this organization, but no one did. And so, when deciding whether this org would be net positive, you have to condition on this observation.

NunoSempere @ 2024-03-16T11:32 (+2)

[Answered over on my blog]

Max Görlitz @ 2024-03-15T16:05 (+8)

I disagree with the EA Forum's approach to life

Could you elaborate on what you mean by this?

Thanks for referring to these blog posts!

NunoSempere @ 2024-03-15T19:29 (+44)

Over the last few years, the EA Forum has taken a few turns that have annoyed me:

- It has become heavier and slower to load

- It has added bells and whistles, and shiny notifications that annoy me

- It hasn't made space for disagreeable people I think would have a lot to add. Maybe they had a bad day, and instead of working with them the forum banned them.

- It has added banners, recommended posts, pinned posts, newsletter banners, etc., meaning that new posts are harder to find and get less attention.

- To me, getting positive, genuine exchanges in the forum as I was posting my early research was hugely motivating, but I think this is less likely to happen if the forum is steering readers somewhere else

- If I'm trying to build an audience as a researcher, the forum offers a larger audience in the short term in exchange for less control over the long-term, which I think ends up being a bad bargain

- It has become very expensive (in light of which this seems like a good move), and it just "doesn't feel right".

Initially I dealt with this by writing my own frontend, but I ended up just switching to my blog instead.

Habryka @ 2024-03-16T00:04 (+54)

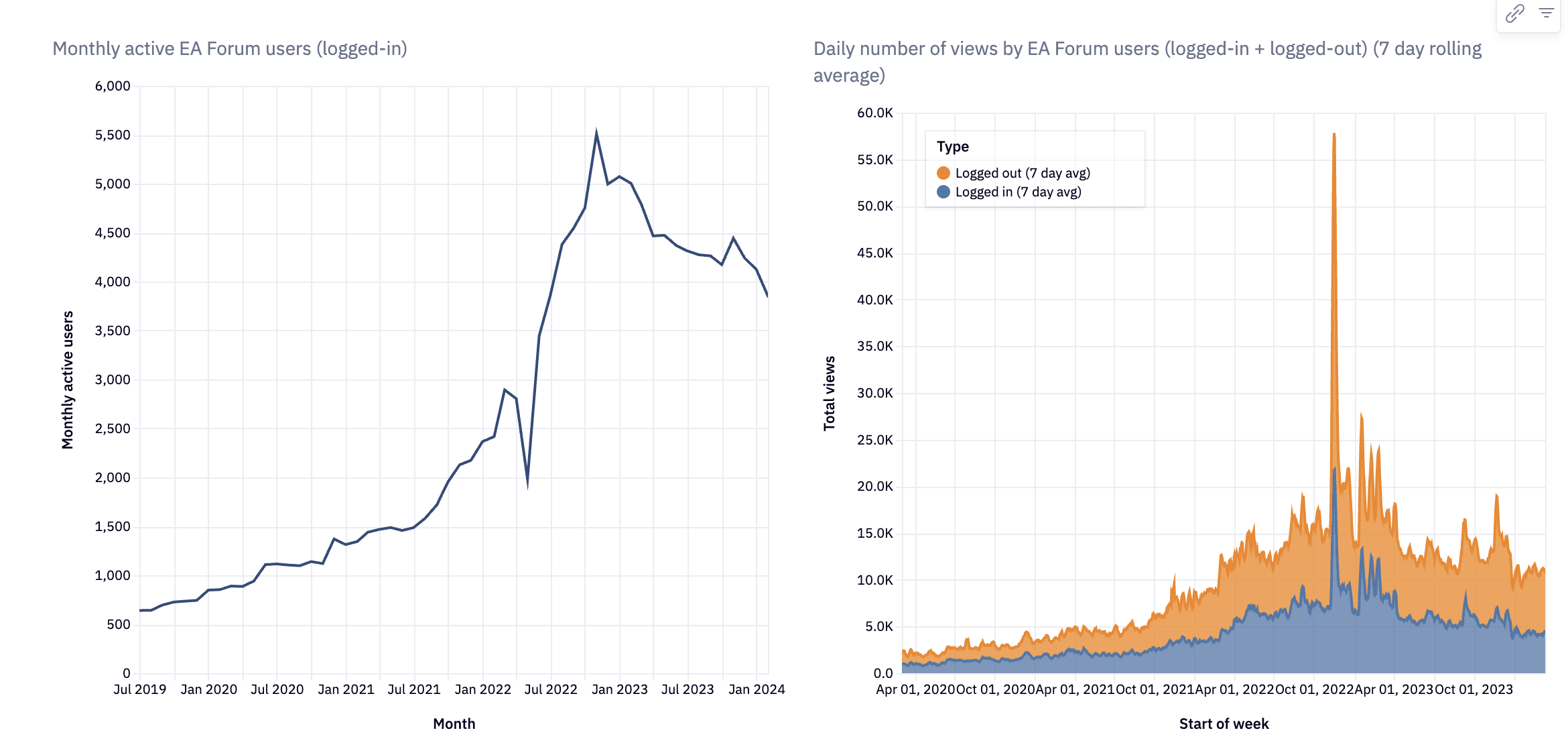

Just as a piece of context, the EA Forum now has about 8x more active users than it had at the beginning of those few years. I think it's uncertain how good growth of this type is, but it's clear that the forum development had a large effect in (probably) the intended direction of the people who run the forum, and it seems weird to do an analysis of the costs and benefits of the EA Forum without acknowledging this very central fact.

(Data: https://data.centreforeffectivealtruism.org/)

I don't have data readily available for the pre-CEA EA Forum days, but my guess is it had a very different growth curve (due to reaching the natural limit of the previous forum platform and not getting very much attention), similar to what LessWrong 1.0 was at before I started working on it.

John Salter @ 2024-03-16T09:04 (+8)

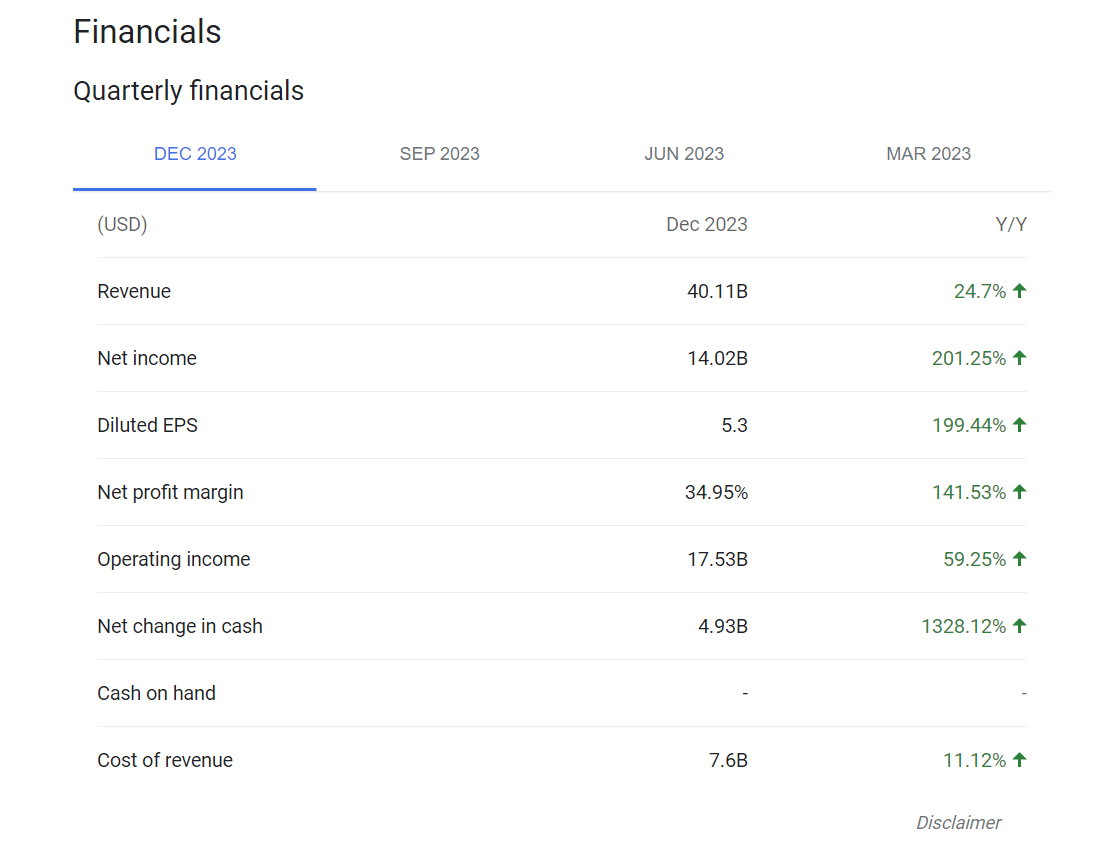

$2m / 4000 users = $500/user. They have every reason to inflate their figures, and there's no oversight, so it's not even clear these numbers can be trusted.

Many subreddits cost nothing, despite having 1000x more engagement. They could literally have just forked LessWrong and used volunteer mods.

No self-interested person is ever going to point this out because it pisses off the mods and CEA, who ultimately decide whose voices can be heard - collectively, they can quietly ban anyone from the forum / EAG without any evidence, oversight, or due process.

Will Bradshaw @ 2024-03-16T11:09 (+55)

No self-interested person is ever going to point this out because it pisses off the mods and CEA, who ultimately decide whose voices can be heard - collectively, they can quietly ban anyone from the forum / EAG without any evidence, oversight, or due process.

I've heard the claim that the EA Forum is too expensive, repeatedly, on the EA Forum, from diverse users including yourself. If CEA is trying to suppress this claim, they're doing a very bad job of it, and I think it's just silly to claim that making that first claim is liable to get you banned.

Habryka @ 2024-03-17T01:53 (+40)

$500/monthly user is actually pretty reasonable. As an example, Facebook revenue in the US is around $200/user/year, which is roughly in the same ballpark (and my guess is the value produced by the EA Forum for a user is higher than for the average Facebook user, though it's messy since Facebook has such strong network effects).

Also, 4000 users is an underestimate since the majority of people benefit from the EA Forum while logged out (on LW about 10-20% of our traffic comes from logged-in users, my guess is the EA Forum is similar, but not confident), and even daily users are usually not logged in. So it's more like $50-$100/user, which honestly seems quite reasonable to me.
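The arithmetic behind these per-user figures can be sketched as follows. This is only a back-of-the-envelope illustration: the $2m annual cost and 4000 logged-in users are the figures quoted upthread, the 10-20% logged-in share is the guess stated above, and the function name is hypothetical.

```python
def cost_per_user(annual_cost: float, logged_in_users: float, logged_in_share: float) -> float:
    """Annual cost divided by estimated total users.

    Total users are estimated by scaling the logged-in count by the
    assumed share of the audience that is logged in.
    """
    total_users = logged_in_users / logged_in_share
    return annual_cost / total_users


ANNUAL_COST = 2_000_000   # figure quoted upthread, not independently verified
LOGGED_IN_USERS = 4_000   # figure quoted upthread, not independently verified

# 1.0 means "count only logged-in users"; 0.20 and 0.10 are the guessed shares.
for share in (1.0, 0.20, 0.10):
    print(f"logged-in share {share:.0%}: ${cost_per_user(ANNUAL_COST, LOGGED_IN_USERS, share):.0f}/user")
```

The result is very sensitive to the assumed logged-in share, so a different share changes the per-user figure proportionally.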

No subreddit is free. If there is a great subreddit somewhere, it is probably the primary responsibility of at least one person. You can run things on volunteer labor but that doesn't make them free. I would recommend against running a crucial piece of infrastructure for a professional community of 10,000+ people on volunteer labor.

saulius @ 2024-03-22T09:34 (+13)

Also, 4000 users is an underestimate since the majority of people benefit from the EA Forum while logged out (on LW about 10-20% of our traffic comes from logged-in users, my guess is the EA Forum is similar, but not confident), and even daily users are usually not logged in.

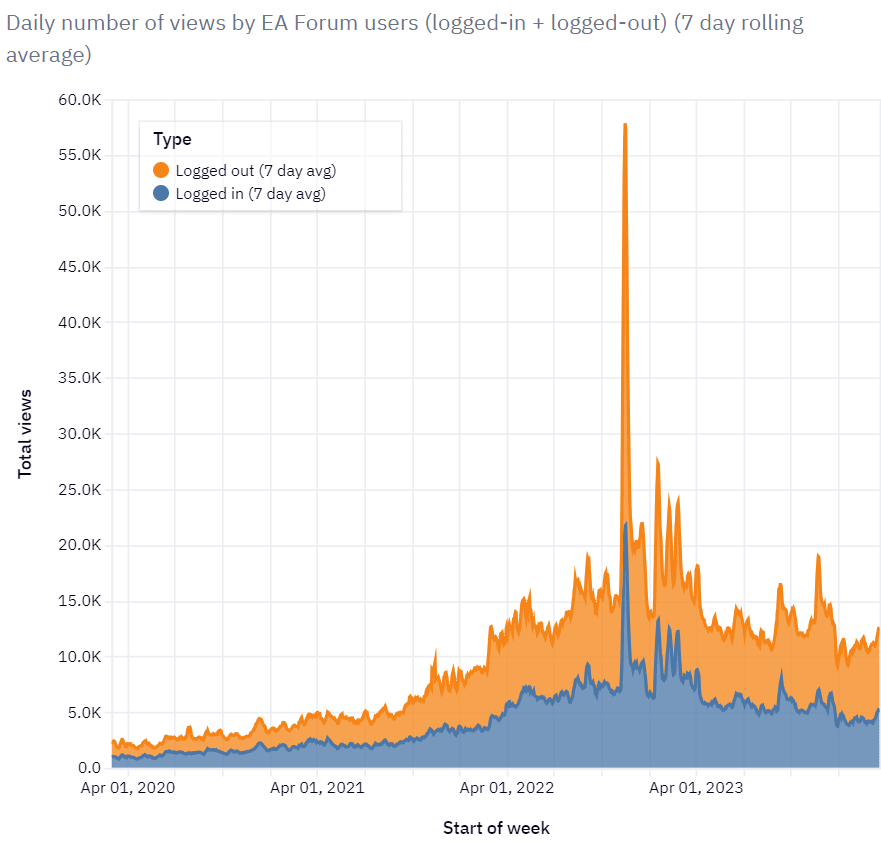

You posted this graph

If I understand it correctly, it shows that about 50% of EA forum traffic comes from logged-in users, not 10%-20%.

saulius @ 2024-03-22T09:45 (+21)

Also, it seems that EA forum gets about 14,000 views per day. So you spend about $2,000,000/(365*14,000) = $0.4 per view. That's higher than I would expect.
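The same calculation as a small sketch, using only the figures quoted in this thread ($2,000,000 per year and about 14,000 views per day); the function name is hypothetical.

```python
def cost_per_view(annual_cost: float, views_per_day: float, days_per_year: int = 365) -> float:
    """Annual cost divided by the estimated number of views in a year."""
    return annual_cost / (views_per_day * days_per_year)


value = cost_per_view(2_000_000, 14_000)
print(f"${value:.2f} per view")  # prints "$0.39 per view"
```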

Note that many of these views might not be productive. For me personally, most of the views are like "I open the frontpage automatically when I want to procrastinate, see that nothing is new & interesting or that I didn't even want to use the forum, and then close it". I also sometimes incessantly check if anyone commented or voted on my post or comment, and that sort of behaviour can drive up the view count.

Habryka @ 2024-03-23T20:57 (+4)

That is definitely relevant data! Looking at the recent dates (and hovering over the exact data at the link where the graphs are from) it looks like it's around 60% logged-out, 40% logged in.

I do notice I am surprised by this and kind of want confirmation from the EA Forum team they are not doing some kind of filtering on traffic here. When I compare these numbers naively to the Google Analytics data I have access to for those dates, they seem about 20%-30% too low, and it makes me think there is some filtering going on (though my guess is that 80%-90% logged-out traffic definitely still does not seem representative)

Elizabeth @ 2024-03-17T02:09 (+12)

I think you're comparing costs for EAF to revenue on FB.

Habryka @ 2024-03-17T02:13 (+4)

Yeah, that seems like the right comparison? Revenue is a proxy for value produced, so if you are arguing about whether something is worth funding philanthropically, revenue seems like the better comparison than costs. Though you can also look at costs, which I expect to not be more than a factor 2 off.

Elizabeth @ 2024-03-17T20:09 (+9)

Isn't a lot of FB's revenue generated by owning a cookie, and cooperating with other websites to track you across pages? I don't think it's fair to count that revenue as generated by the social platform, for these purposes.

Your argument also feels slippery to me in general. Registering that now in case you have a good answer to my specific criticism and the general motte-and-bailey feeling sticks around.

Habryka @ 2024-03-17T20:32 (+7)

I am not sure what you mean by the first. Facebook makes almost all of its revenue with ads. It also does some stuff to do better ad-targeting, for which it uses cookies and does some cross-site tracking, which I do think drives up profit, though my guess is that isn't responsible for a large fraction of the revenue (though I might be wrong here).

But that doesn't feel super relevant here. The primary reason why I brought up FB is to establish a rough order-of-magnitude reference class for what normal costs and revenue numbers are associated with internet platforms for a primarily western educated audience.

My best guess is the EA Forum could probably also finance itself with subscriptions, ads and other monetization strategies at its current burn rate, based on these numbers, though I would be very surprised if that's a good idea.

David T @ 2024-03-18T19:27 (+3)

I think the more relevant order of magnitude reference class would be the amount per user Facebook spent on core platform maintenance and moderation (and Facebook has a lot more scaling challenges to solve as well as users to spread costs over, so a better comparator would be the running expenses of a small professional forum)

I don't think FB revenues are remotely relevant to how much value the forum creates, which may be significantly more per user than Facebook if it positively influences decisions people make about employment, founding charities and allocating large chunks of money to effective causes. But the effectiveness of the use of the forum budget isn't whether the total value created is more than the total costs of running, it's decided at the margin by whether going the extra mile with the software and curation actually adds more value.

Or put another way, would people engage differently if the forum was run on stock software by a single sysadmin and some regular posters granted volunteer mod privileges?

Habryka @ 2024-03-19T03:01 (+7)

Or put another way, would people engage differently if the forum was run on stock software by a single sysadmin and some regular posters granted volunteer mod privileges?

Well, I mean it isn't a perfect comparison, but we know roughly what that world looks like because we have both the LessWrong and OG EA Forum datapoints, and both point towards "the Forum gets on the order of 1/5th the usage" and in the case of LessWrong to "the Forum dies completely".

I do think it goes better if you have at least one well-paid sysadmin, though I definitely wouldn't remotely be able to do the job on my own.

Jason @ 2024-03-17T13:29 (+2)

As to costs, I'd have to dig further but looking at the net profit margin for Meta as a whole suggests a fairly significant adjustment. Looking at the ratio between cost of revenue and revenue suggests an even larger adjustment, but is probably too aggressive of an adjustment.

If Meta actually spent $200 per user to achieve the revenue associated with Facebook, that would be a poor return on investment indeed (i.e., 0%). So I think comparing its revenue per user figure to the Forum's cost per user creates too easy of a test for the Forum in assessing the value proposition of its expenditures.

Lorenzo Buonanno @ 2024-03-17T10:30 (+5)

$500/monthly user is actually pretty reasonable. As an example, Facebook revenue in the US is around $200/user/year, which is roughly in the same ballpark

Facebook ARPU (average revenue per user) in North America is indeed crazy high, but I think it is misleading: for some reason they include revenue from WhatsApp and Instagram, but only count Facebook users as MAU in the denominator. (edit: I think this doesn't matter that much) Also, they seem to be really good at selling ads.

In any case:

- I don't think this is a good measure of value, I don't think the average user would pay $200/year for Facebook. (I actually think Facebook's value is plausibly negative for the average user. Some people are paying for tools that limit their use of Facebook/Instagram, but I guess that's beside the point)

- Reddit's revenue per user in the US is ~$20 / user / year, 10x less

- This doesn't take into account the value of the marginal dollar given to the EA Forum. (Maybe 90% of the value is from the first million/year?)

I'm not sure if $6000 is in the same ballpark as $200 (edit: oops, see comment below, the number is $500 not $6000)

But I strongly agree with your other points, and mostly I think this is a discussion for donors to the EA Forum, not for users. If someone wants to donate $2M to the EA Forum, I wouldn't criticize the forum for it or stop using it. It's not my money.

Users might worry about why someone would donate that much to the Forum, and what kind of influence comes with it, but I think that's a separate discussion, and I'm personally not that worried about it. (But of course, I'm biased as a volunteer moderator)

Jason @ 2024-03-17T13:52 (+33)

I think criticizing CEA for the Forum expenditures is fair game. If an expenditure is low-value, orgs should not be seeking funding for it. Donors always have imperfect information, and the act of seeking funding for an activity conveys the organization's tacit affirmation that the activity is indeed worth funding. I suppose things would be different if a donor gave an unsolicited $2MM/year gift that could only be used for Forum stuff, but that's not my understanding of EVF's finances.

I also think criticizing donors is fair game, despite agreeing that their funds are not our money. First, charitable donations are tax advantaged, so as a practical matter those of us who live in the relevant jurisdiction are affected by the choice to donate to some initiative rather than pay taxes on the associated income. I also think criticizing non-EA charitable donors for their grants is fair game for this reason as well.

Second, certain donations can make other EAs' work more difficult. Suppose a donor really wants to pay all employees at major org X Google-level wages. It's not our money, and yet such a policy would have real consequences on other orgs and initiatives. Here, I think a pattern of excessive spending on insider-oriented activities, if established, could reasonably be seen as harmful to community values and public perception.

(FWIW, my own view is that spending should be higher than ~$0 but significantly lower than $2MM.)

Jason @ 2024-03-17T13:19 (+10)

I think the $500 figure is derived from ($2MM annual spend / 4000 monthly active users). The only work monthly is doing there is helping define who is a user. So I don't think multiplying the figure by 12 is necessary to provide comparison to Facebook.

That being said, I think there's an additional reason the $200 Facebook figure is inflated. If we're trying to compare apples to apples (except for using revenue as an overstated proxy for expenditure), I suggest that we should only consider the fraction of implied expenses associated with the core Facebook experience that is analogous to the Forum. Thus, we shouldn't consider, e.g., the implied expenditures associated with Facebook's paid ads function, because the Forum has no real analogous function.
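The per-user arithmetic in this comment can be sketched as below. This is only an illustration using the rough figures quoted in the thread ($2MM annual spend, 4000 monthly active users, about $200/user/year for Facebook in the US, about $20/user/year for Reddit); the variable and function names are made up for the example, and the Facebook and Reddit numbers are revenue per user, not cost per user, so the ratios are a loose comparison at best.

```python
# Rough figures quoted in the thread; all approximate.
FORUM_ANNUAL_SPEND_USD = 2_000_000      # "$2MM annual spend"
FORUM_MONTHLY_ACTIVE_USERS = 4_000      # "4000 monthly active users"
FACEBOOK_REVENUE_PER_USER_YEAR = 200    # US revenue per user per year, as cited upthread
REDDIT_REVENUE_PER_USER_YEAR = 20       # US revenue per user per year, as cited upthread


def annual_cost_per_user(annual_spend: float, active_users: int) -> float:
    """Annual spend divided by the number of people who count as users.

    "Monthly" only defines who is a user, so the result is already
    a per-year figure and is not multiplied by 12.
    """
    return annual_spend / active_users


forum_cost = annual_cost_per_user(FORUM_ANNUAL_SPEND_USD, FORUM_MONTHLY_ACTIVE_USERS)
ratio_to_facebook = forum_cost / FACEBOOK_REVENUE_PER_USER_YEAR
ratio_to_reddit = forum_cost / REDDIT_REVENUE_PER_USER_YEAR

print(f"Forum cost per user per year: ${forum_cost:.0f}")
print(f"Multiple of Facebook revenue per user: {ratio_to_facebook:.1f}x")
print(f"Multiple of Reddit revenue per user: {ratio_to_reddit:.1f}x")
```

Multiplying by 12 is what produces the $6000 figure mentioned elsewhere in the thread, which treats the $500 as a monthly rather than an annual number.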

emre kaplan @ 2024-03-17T10:29 (+2)

Where does the "$200/user/year" figure come from? They report $68.44 average revenue per user for the US and Canada in their 2023 Q4 report.

Lorenzo Buonanno @ 2024-03-17T10:32 (+2)

ARPU is per quarter. $68.44/quarter or $200/year is really high but:

1. it includes revenues from Instagram and WhatsApp, but only counts Facebook users

2. Facebook is crazy good at selling ads, compared to e.g. Reddit (or afaik anything else)

emre kaplan @ 2024-03-17T10:36 (+2)

Thanks, many websites seem to report this without the qualifier "per quarter", which confused me.

Lorenzo Buonanno @ 2024-03-17T10:39 (+2)

Yeah I had the exact same reaction, I couldn't believe it was so high but it is

Jason @ 2024-03-17T02:59 (+4)

My understanding is that moderation costs comprise only a small portion of Forum expenditures, so you don't even need to stipulate volunteer mods to make something close to this argument.

(Also: re Reddit mods, you generally get what you pay for . . . although there are some exceptions)

Nathan_Barnard @ 2024-03-16T01:37 (+3)

This is really useful context!

Dawn Drescher @ 2024-03-26T08:43 (+6)

I've (so far) read the first post and love it! But when I was working full-time on trying to improve grantmaking in EA (with GiveWiki, aggregating the wisdom of donors), you mostly advised me against it. (And I am actually mostly back to ETG now.) Was that because you weren't convinced by GiveWiki's approach to decentralizing grantmaking or because you saw little hope of it succeeding? Or something else? (I mean, please answer from your current perspective; no need to try to remember last summer.)

NunoSempere @ 2024-03-27T01:39 (+2)

Iirc I was skeptical but uncertain about GiveWiki/your approach specifically, and so my recommendation was to set some threshold such that you would fail fast if you didn't meet it. This still seems correct in hindsight.

Dawn Drescher @ 2024-03-27T10:19 (+2)

Yep, failing fast is nice! So you were just skeptical on priors because any one new thing is unlikely to succeed?

NunoSempere @ 2024-03-27T18:00 (+2)

Yes, and also I was extra-skeptical beyond that because you were getting too little early traction.

Dawn Drescher @ 2024-03-27T20:13 (+2)

Yep, makes a lot of sense!

Jonas Hallgren @ 2024-03-16T08:06 (+4)

It makes sense for the dynamics of EA to naturally go in this way (Not endorsing). It is just applying the intentional stance plus the free energy principle to the community as a whole. I find myself generally agreeing with the first post at least and I notice the large regularization pressure being applied to individuals in the space.

I often feel the bad vibes that are associated with trying hard to get into an EA organisation. I'm doing for-profit entrepreneurship for AI safety adjacent to EA as a consequence and it is very enjoyable. (And more impactful in my view)

I will however say that the community in general is very supportive and that it is easy to get help with things if one has a good case and asks for it, so maybe we should make our structures more focused around that? I echo some of the things about making it more community focused, however that might look. Good stuff OP, peace.

anormative @ 2024-03-17T00:15 (+3)

FWIW, the "deals and fairness agreement" section of this blogpost by Karnofsky seems to agree about (or at least discuss) trade between different worldviews:

It also raises the possibility that such “agents” might make deals or agreements with each other for the sake of mutual benefit and/or fairness.

Methods for coming up with fairness agreements could end up making use of a number of other ideas that have been proposed for making allocations between different agents and/or different incommensurable goods, such as allocating according to minimax relative concession; allocating in order to maximize variance-normalized value; and allocating in a way that tries to account for (and balance out) the allocations of other philanthropists (for example, if we found two worldviews equally appealing but learned that 99% of the world’s philanthropy was effectively using one of them, this would seem to be an argument – which could have a “fairness agreement” flavor – for allocating resources disproportionately to the more “neglected” view). The “total value at stake” idea mentioned above could also be implemented as a form of fairness agreement. We feel quite unsettled in our current take on how best to practically identify deals and “fairness agreements”; we could imagine putting quite a bit more work and discussion into this question.

Different worldviews are discussed as being incommensurable here (under which maximizing expected choice-worthiness doesn't work). My understanding though is that the (somewhat implicit but more reasonable) assumption being made is that under any given worldview, philanthropy in that worldview's preferred cause area will always win out in utility calculations, which makes the sort of deals proposed in "A flaw in a simple version of worldview diversification" not possible/useful.

NunoSempere @ 2024-03-17T12:18 (+2)

In practice I don't think these trades happen, making my point relevant again.

My understanding though is that the (somewhat implicit but more reasonable) assumption being made is that under any given worldview, philanthropy in that worldview's preferred cause area will always win out in utility calculations

I'm not sure exactly what you are proposing. Say you have three incommensurable views of the world (say, global health, animals, xrisk), and each of them beats the others according to its idiosyncratic expected value methodology. But then you assign 1/3rd of your wealth to each. But then:

- What happens when you have more information about the world? Say there is a malaria vaccine, and global health interventions after that are less cost-effective.

- What happens when you have more information about what you value? Say you reflect and you think that animals matter more than before/that the animal worldview is more likely to be the case.

- What happens when you find a way to compare the worldviews? What if you have trolley problems comparing humans to animals, or you realize that units of existential risk avoided correspond to humans who don't die, or...

Then you either add the epicycles or you're doing something really dumb.

My understanding though is that the (somewhat implicit but more reasonable) assumption being made is that under any given worldview, philanthropy in that worldview's preferred cause area will always win out in utility calculations, which makes the sort of deals proposed in "A flaw in a simple version of worldview diversification" not possible/use

I think looking at the relative value of marginal grants in each worldview is going to be a good intuition pump for worldview diversification type stuff. Then even if, every year, every worldview prefers their marginal grants over those of other worldviews, you can/will still have cases where the worldviews can shift money between years and get more than what they all want.
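A toy sketch of that last point, with entirely made-up numbers: two hypothetical worldviews, A and B, each get $1 per year for two years, and each values its own marginal grants in its own units. No comparison across worldviews is needed. The sketch also assumes a constant value per dollar within a year (no diminishing returns), which is a simplification.

```python
# Hypothetical value per marginal dollar, by year, in each worldview's OWN units.
# These numbers are invented purely for illustration.
marginal_value = {
    "A": {1: 3.0, 2: 1.0},  # A has unusually good opportunities in year 1
    "B": {1: 1.0, 2: 3.0},  # B has unusually good opportunities in year 2
}


def total_value(worldview: str, spend_by_year: dict) -> float:
    """Value a worldview gets from its spending, measured in its own units."""
    return sum(
        marginal_value[worldview][year] * dollars
        for year, dollars in spend_by_year.items()
    )


# Baseline: each worldview spends its fixed $1 allocation every year.
baseline = {"A": {1: 1.0, 2: 1.0}, "B": {1: 1.0, 2: 1.0}}

# Trade: B hands its year-1 dollar to A, and A hands its year-2 dollar to B.
# Each worldview still spends $2 in total, and total spend per year is unchanged.
traded = {"A": {1: 2.0, 2: 0.0}, "B": {1: 0.0, 2: 2.0}}

for name in ("A", "B"):
    before = total_value(name, baseline[name])
    after = total_value(name, traded[name])
    print(f"Worldview {name}: {before} -> {after} (own units)")
```

In this toy case each worldview ends up with more of what it wants by its own lights, even though neither ever concedes that the other's grants are better than its own.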