Rethink Priorities needs your support. Here's what we'd do with it.

By Peter Wildeford @ 2023-11-21T17:55 (+211)

In honor of “Marginal Funding Week” for 2023 Giving Season on the EA Forum, I’d like to tell you what Rethink Priorities (RP) would do with funding beyond what we currently expect to raise from our major funders, and to emphasize that RP currently has a significant funding gap even after taking these major funders into account.

A personal appeal

Hi. I know it’s traditional in EA to stick to the facts and avoid emotional content, but I can’t help but interject and say that this fundraising appeal is a bit different. It is personal to me. It’s not just a list of things that we could take or leave; it’s a fight for RP to survive the way I want it to, as an organization that is intellectually independent and serves the EA community.

To be blunt, our funding situation is not where we want it to be. 2023 has been a hard year for fundraising. A lot of what we’ve been building over the past few years is at risk right now. If you like RP, my sense is that now is an unusually good time to donate.

We are at the point where receiving $1,000 - $10,000 each from a handful of individual donors would genuinely make an important difference to the future trajectory of RP and decide what we can and cannot do next year.

We are currently seeking to raise at least $110K total from donors donating under $100K each. We are already ~$25K towards that goal, so ~$85K remains to be raised. We also hope to receive more support from larger givers[1].

To be clear, this isn’t just about funding growth. An RP that does not receive additional funding right now will be worse in several concrete ways. Funding gaps may force us to:

- Focus more on non-published, client-driven work that will never be released to the community (because we cannot afford to publish it)

- Stop running the EA Survey, survey updates about FTX, and other community survey projects

- Do fewer of our own creative ideas (e.g., CURVE sequence, moral weights work)

- Be unable to run several of our most promising research projects (see below)

- Reduce things we think are important - like opportunities for research teams to meet in person and opportunities for staff to do further professional development.

- Spend significant amounts of time fundraising next year, distracting from our core work

For unfamiliar readers, some of our track record and impact to date includes:

- Contributing significantly to burgeoning fields, such as invertebrate welfare.

- Leading the way in exploring novel promising approaches to help trillions of animals, by launching the Insect Institute and uncovering the major scale of shrimp production

- Completing the Moral Weight Project to try to help funders decide how to best allocate resources across species.

- Producing >40 reports commissioned by Open Philanthropy and GiveWell answering their questions to inform their global health and development portfolios.

- Producing the EA Survey and surveys on the impact of FTX on the EA brand that were used by many EA orgs and local groups

- Conducting over 200 tailored surveys and data analysis projects to help many organizations working on global priorities.

- Launching projects such as Condor Camp and fiscally sponsoring organizations like Epoch and Apollo Research via our Special Projects team, which provides operational support.

- Setting up an Artificial Intelligence (AI) Governance and Strategy team and evolving it into a think tank that has already published multiple influential reports[2].

Please help us keep RP impactful with your support.

Why does RP need money from individuals when there are large donors supporting you?

It’s commonly assumed that RP must get all the money it needs from large institutions. But this is not the case – we’ve historically relied on individual donors too, and this year some important changes in the landscape[3] have made it more difficult to fundraise what we need. Thus we still have a gap.

But we get asked: if you need money, why don’t those large institutional funders just fund you more? And if they aren’t funding you more, why should I, in particular, fund you more?

…It’s a difficult question but the answer is some combination of:

- Sometimes we disagree with our funders about which things have the greatest value and we think it’s plausible that we’re right.

- What we think of as our most innovative work (e.g., invertebrate sentience, moral weights and welfare ranges, the cross-cause model, the CURVE sequence) or some of our most important work (e.g., 50% of the EA Survey[4], our EA FTX surveys and Public/Elite FTX surveys, and each of our three AI surveys) has historically not had institutional support and has relied on individual donors to make it happen.

- Our largest funders are often interested in only funding our work when it reaches a certain maturity level, so we rely a lot on unrestricted funding from smaller donors to try out new ideas, experiment, fail, and get ideas to a “proof of concept” stage.

- Additionally, some of our work doesn’t fall clearly enough within the scope of any particular grant evaluator for them to have the capacity to evaluate it, even if it might be good.

- Our work can be thought of as some public good that goes undersupplied - some of our work benefits multiple different groups/individuals/the community in a diffuse way without being enough of a core focus of any particular group, which makes it hard to get any single actor to cover it all or sometimes even large parts of it

- Some of our clients have funding gaps of their own (or are organizations with small budgets) and do value our work highly but cannot afford to commission us

- Given our size, we and our institutional funders often expect us to raise a decent portion of our money from individual donors[5]

Thus I appeal to you and the broader community to help solve an important collective action problem: a lot of our work is done in a consultancy arrangement, where we are tasked with producing value for a particular client. But our work produces surplus value to the wider community that isn’t valued by any one particular client enough for them to want to pay for it. So this value goes undersupplied.

For example, many of those who commissioned our work allow us to share our research publicly if we like but provide no money for us to do so, and converting the work to a public version takes a significant amount of additional effort.[6] Thus funding this is a surprisingly neglected and highly leveraged investment[7].

If you value the work we’ve been doing, I hope you’ll consider donating here. Any amount would help.

I also hope we can count on your vote for RP in the EA Forum Donation election. To put some skin in the game, I’ve personally donated $1000 to the EA Forum Donation election and I’m looking forward to doing everything I can to win as much of it back to RP as possible.

In the remainder of this post I will outline a number of ways in which RP could use additional funding.

General

$1K - $10K per research work to allow us to publish our backlog of research

To get concrete, we think that raising $1K - $10K per research work would be enough to allow us to publish our backlog of research that could be made public but currently isn’t due to constraints on our end. Time spent writing up these publications draws on unrestricted funding and is time not spent doing paid commissions to raise more money, so we cannot afford to allocate the amount of time it takes to publish this work. However, putting more of our work on the EA Forum allows for others to build on it in ways we don’t anticipate, has influence in ways that are hard to expect, provides positive examples of research for others to learn from, and helps make EA more of an open and equal ecosystem[8].

Some pieces of research we’d produce public versions of if we had money to do so:

- Survey and data analysis work

- The two reports mentioned by CEA here about attitudes towards EA post-FTX among the general public, elites, and students on elite university campuses.

- Followup posts about the survey reported here about how many people have heard of EA, to further discuss people's attitudes towards EA, and where members of the general public hear about EA (this differs systematically)

- Updated numbers on the growth of the EA community (2020-2022) extending this method and also looking at numbers of highly engaged longtermists specifically

- Several studies we ran to develop a reliable measure of how positively inclined towards longtermism people are, looking at different predictors of support for longtermism and how these vary in the population

- Reports on differences between non-longtermists and longtermists within the EA community and on how non-longtermist / longtermist efforts influence each other (e.g. to what extent does non-longtermist outreach, like GiveWell, Peter Singer articles about poverty, lead to increased numbers of longtermists)

- Whether the age at which one first engaged with EA predicts lower / higher future engagement with EA

- Global Health and Development

- Importance, tractability, and neglectedness of drowning in low- and middle-income countries. We investigated the global burden of drowning and attempted to determine its geographical distribution. We examined specific interventions to tackle the global burden, with a particular focus on crèches and swimming lessons for children. We also compiled information about organizations trying to address this issue and attempted to estimate the amount of funds devoted to drowning prevention.

- A report on fungal diseases that estimated total fungal disease deaths, the degree to which fungal diseases are neglected relative to malaria, and major barriers to their treatment. Interviews with experts highlighted potentially promising interventions that included funding R&D efforts to develop antifungal vaccines, supporting diagnostic efforts, and antifungal advocacy (e.g., banning some antifungals from use in agriculture).

- Impact, distribution, effectiveness, and potential improvements of priority review vouchers for tropical diseases. We cataloged information about the 13 issuances of Priority Review Vouchers (PRVs) under the United States’ Tropical Disease PRV Program and included a BOTEC of one case study. Several possible improvements to the program emerged from the literature and expert conversations.

- Our Global Health and Development team has a further ~20 unpublished reports that we would like to publish.

- Animal Welfare

- A new investigation into different possible pathways to help the animals most commonly used and killed for food production–shrimp. This work could significantly accelerate and strengthen the efforts of animal advocates and other players in helping billions of animals.

- Strategy diversification for wild animals: We've been studying the theories of change and some strategic gaps for helping wild animals. Additional funding could help us further communicate possible new avenues for diversifying the impact portfolio of wild animal welfare research and advocacy.

- Exploring innovative ways to improve conditions for farmed fish. This work aims to serve a dual purpose: firstly, to highlight promising new recommendations for improving the welfare of farmed and hatchery-reared fish that currently lack widespread implementation, and secondly, to identify critical gaps in knowledge that should direct impact-orientated research. We could then also further communicate about the tractability and cost-effectiveness of these ideas.

Worldview Investigations

$200K to do cause prioritization research and build on the cross-cause model

In just a few months, we have produced as much practical work on cause prioritization and valuing the long-term future as any public actor has done in the last few years. We think the cross-cause model we created is something that should’ve existed in EA long ago, but took many years of our research to create.

Prior to that, we produced major and novel contributions to questions about which animals matter (our invertebrate sentience work) and how much they matter (our moral weight work).

People may think this work was primarily institutionally funded. It wasn’t. It was our own idea and executed with our own unrestricted funding and the help of individual donors. We need more of this support to continue going. Frankly, a lot of this work may just not happen without more support.

We think the value of this work is high but diffuse and indirect. We think our work on invertebrate sentience and moral weight contributed to many EA actors taking invertebrates seriously[9], creating and funding charities to address them[10], whereas this likely wouldn’t be as much the case without our work. But it took years to get to this point. We need to keep up this investment.

We think our current work will do the same (helping actors consider the implications of taking risk aversion seriously, assess the value of the future more rigorously, and create more rationally defensible philanthropic portfolio allocations).

We have exciting plans for where to take this next, but we don’t have clear funding sources lined up, and more money from individual donors could make a difference.

With more support, we’d:

- Add the diverse future value growth trajectories we outlined here

- Add more interventions, including the (estimated high or low) value of movement building, plant-based and cultivated meat interventions, and fleshing out the global health portion of the model

We’d also like to create philanthropic portfolio analysis allowing everyone from $1B+ to <$20K donors to figure out how to allocate a philanthropic portfolio according to their beliefs:

- Create cost-curves for interventions across areas so that we can capture the marginal cost-effectiveness as spending rises and falls

- Implement a parliamentary approach that allows users to assign credences to different decision-theoretic views and see the resulting outputs for how they should give
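To make the idea of credence-weighted giving concrete, here is a minimal sketch. It is not RP's model: the view names, credences, cause labels, and the simple rule of giving each view a budget share proportional to its credence are all hypothetical assumptions chosen for illustration. A fuller parliamentary approach would likely also model bargaining between views.

```python
def allocate_by_credence(budget, credences, preferred_cause):
    """Split a budget across causes in proportion to credence in each view.

    credences: dict mapping view name -> credence (normalized here, so the
        values need not sum to 1).
    preferred_cause: dict mapping view name -> the cause that view would fund.
    Both inputs are hypothetical; nothing here comes from RP's actual tool.
    """
    total = sum(credences.values())
    if total <= 0:
        raise ValueError("credences must sum to a positive number")
    allocation = {}
    for view, credence in credences.items():
        cause = preferred_cause[view]
        # Each view directs its proportional share to its preferred cause.
        allocation[cause] = allocation.get(cause, 0.0) + budget * credence / total
    return allocation


# Hypothetical donor: three decision-theoretic views, two candidate causes.
example = allocate_by_credence(
    budget=10_000,
    credences={
        "expected_value_maximizer": 0.5,
        "risk_averse": 0.3,
        "ambiguity_averse": 0.2,
    },
    preferred_cause={
        "expected_value_maximizer": "cause_a",
        "risk_averse": "cause_b",
        "ambiguity_averse": "cause_b",
    },
)
print(example)
```

In this toy case the two more cautious views pool their shares behind the same cause, so the budget splits evenly between the two causes.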

We also think this could be an important leveraged investment as with more support we’d do user interviews for the cross-cause model and get it to a point where we can achieve product-market fit for impactful use cases, which we expect could then be used to make a case to institutional funders that want to see more of a clear track record.

We expect the portfolio analysis and CCM extension projects to take ~6 months at 2-4 FTE.

To be clear, any amount of money would be helpful towards making this goal or other goals listed in this post happen, even if you can't donate the full amount.

$500K to do more worldview cause exploration

We aren’t only interested in these immediate extensions of the CCM. We think there’s still considerable value in cause exploration, reconsidering our fundamental assumptions, and tackling large difficult problems in a public manner. We would also like to publicly write up whatever decision-making process we ultimately go with to act despite our normative and decision-theoretic uncertainty.

Further information on a few possible projects we are considering can be seen here.

Surveys and Data Analysis

$60K to run the next EA Survey

Raising $60K would give us confidence to run the next EA Survey. Despite a lot of people citing the EA Survey and finding it valuable, there is actually little institutional support for the EA Survey. We do get some institutional support for this, but not enough to cover our costs. Since it is a diffuse community public good, there is no particular funder interested in supporting it, and the many EA groups that value this work don’t have enough money to actually support it. Given that funding has been harder in 2023 than in previous years, it’s possible that we would not be able to run the EA Survey without dedicated funding.

We also provide a lot of private analyses pro bono to community members who request them, such as EA group organizers that don’t have the budget to commission these requests but nevertheless value our insights. We’d appreciate having more monetary support to be able to support these groups.

$40K-100K to more rigorously understand branding for EA and existential risk

There has been much debate about whether people engaged in EA and longtermism should frame their efforts and outreach in terms of ‘effective altruism’, ‘longtermism’, ‘existential risk’, ‘existential security’, ‘global priorities research’, or by only mentioning specific risks, such as AI Safety and pandemic prevention. For examples, see: (1)(2)(3)(4)(5)(6)(7).

However, these debates have largely been based on a priori speculation about which of these approaches would be most persuasive.

We propose to run experiments directly testing public responses to these different framings. This will allow us to assess how responses to these different approaches differ in different dimensions (e.g. popularity, persuasiveness, interest, dislike).

Our survey and data analysis team has a good track record of running rigorous survey experiments to get to the bottom of complex low salience issues (for one example, see “Are 1-in-5 Americans familiar with EA?”).

We’d do message/framing/branding testing of “effective altruism”, “global priorities”, “effective giving”, “longtermism”, “existential risk”, “existential security”, and concrete causes (e.g., AI risk). We’d build on our research mentioned by Will MacAskill in this comment.

This research could be divided up in various ways e.g.

- Assess how people respond to the brand “effective altruism” and see what they associate with it, what they understand or expect it to mean, what if anything they dislike about it

- Test whether people respond better to “principles-based EA” messaging vs specific causes

- Test whether people respond better to broader “effective altruism” or narrower longtermism/x-risk

- Test whether people respond better to “longtermism” or “existential risk” or “existential security” or concrete causes

$25K-$100K to more rigorously understand EA’s growth trajectory

As stated in one recent post:

It seems obvious to me that numerous stakeholders-- including organization leaders, donors of all sizes, group leaders, and entrepreneurs-- would all benefit from having an accurate understanding of EA’s growth trajectory. And it seems just as obvious that it would be tremendously inefficient for each of those parties to conduct their own analysis. It would be in everyone’s interest to receive a regular (every 3-6 months?) update from a reliable analyst. This wouldn’t be expensive (it wouldn’t require anything close to a full-time job initially, though the frequency and/or depth of the analyses could be scaled up if people saw value in doing so). As public goods go, this should be an easy one to provide.

We’d be happy to provide this service with sufficient funding.

$40K to more rigorously understand branding for AI risk outreach

We’d like to do some of the following to better understand AI risk outreach:

- Run messaging experiments to conduct fundamental research into what factors make AI risk cases more persuasive to different audiences (e.g. do people respond better or worse to strong ‘Yudkowsky-style’ messages which emphasize risk of extinction, or high urgency, or high probability of doom).

- Test whether providing certain information relevant to AI risk (e.g. X experts say Y; in the year before flight was developed, many experts said that it would be years off) changes people’s views

- Understand how the public thinks about risk from open source AI models

$50K to more rigorously understand why people drop out of EA

The EA Survey only captures those who currently are close enough to EA to see and want to fill out the survey. So far, no one has done much analysis of those who join the community and then leave. Using referrals and snowball sampling, we would recruit a small number of people who dropped out of or bounced off EA to assess what the main factors are leading people to not join or to leave EA.

Animal welfare

We believe that animal welfare is an important global problem that has been greatly neglected by charitable organizations and, to a lesser extent, by the effective altruist community. Nevertheless, we think that there are promising avenues for improving the lives of nonhuman animals and that further research can significantly contribute to optimizing current efforts and uncovering new impactful solutions to help these individuals.

Given their importance, relative neglectedness and tractability, and the strength of our research team, we believe we have a great opportunity to help animals by addressing some critical questions that affect farmed and wild animals, and other needs of the movement defending non-humans.

However, we don’t have much widespread support for this work and we are preparing for the possibility of getting less money in the future from Open Phil due to shifts in their priorities. Historically we have spent a large amount of unrestricted funding on our animal welfare work and we are looking for new individual donors to help.

Without your support, we suspect a lot of additional work here won’t happen, and much of what will happen will be private, and designed for specific donors.

We list below some potential directions we’d like to take our work.

$250K to create a review of interventions to reduce the consumption of animal products

Over the last five years, the volume of research on interventions to reduce edible animal product consumption has grown significantly. However, this research is dispersed and difficult to access. This project would provide the evidential basis for identifying classes of effective (or ineffective) interventions to reduce the consumption of animal-based products, from classroom education to plant-based analogues to changes in menu design. We'll review the literature for each, address some foundational uncertainties, and assess the cost-effectiveness of various approaches. We expect to produce intervention briefs summarizing critical evidence, a live and user-friendly database of evidence for various interventions, and reports with applied recommendations for advocates and other interested players.

$38K to create a Farmed Animals Impact Tracker

The Farmed Animals Impact Tracker would establish a comprehensive database for reliably assessing the cost-effectiveness of interventions, starting with cage-free corporate pledges and the Better Chicken Commitment. We expect this would produce a reliable source for assessing some of the most popular interventions aimed at helping hens and chickens. This work could later be expanded to include other interventions targeting other animals (see below).

$75K to understand interventions that would address fish welfare

Our work on promising new recommendations for improving the welfare of farmed and hatchery-reared fish has identified some improvements that currently lack widespread implementation. This project will further investigate such ideas, focusing on their potential tractability and cost-effectiveness so as to conclude whether they merit further promotion by advocacy groups, certifiers and others interested in improving fish welfare.

$60K to understand interventions that would address crustacean welfare

Based on the major welfare challenges faced by shrimp, this report will make recommendations for positively altering farming conditions and practices, and, whenever possible, point out already-available interventions and other strategies that might slow down the growth of this sector. It will also suggest critical future research directions to better understand shrimp welfare and uncover new and likely more impactful interventions in the future.

$50K for development and implementation of an insect farming welfare ask

In 20 years, if we look back to study missed opportunities to help animals, failing to help insects industrially farmed for food and feed production could very well be the largest mistake the effective animal advocacy community has made. This is a pivotal decade for the industry: regulations are being considered and written for the first time, and new factories are being designed and built. This welfare ask could greatly shape the industry’s practices.

It will require producers to commit to standards for breeding, hygiene, slaughter, and reporting. While the first draft and strategic approach are still being developed, it may include other requirements as well. RP research will support incremental improvements to this ask, especially with regard to rearing standards and slaughter methods.

$100K to develop a database of possible near-term interventions for wild animals

Recent technological progress suggests that there might be currently feasible methods to help wildlife populations at some scale. This project aims to identify and describe already available technologies and interventions to help wild animals, collect some initial evidence for each of them, and provide a shallow assessment of the potential impact of such interventions along with possible challenges. This information will be presented in a user-friendly database that might serve the wild animal welfare community in identifying the most promising interventions, current knowledge gaps, and other drawbacks, and guide further assessment or intervention implementation efforts.

$75K to do a theory of change status report for the animal advocacy movement

Various theories of change prevail in the farmed animal movement. It need not be the case that most organizations agree on one. Still, it is certainly possible to take stock of the evidence for and against each of them and, thus, discourage directing further resources into ones where the evidence suggests the theory fails to produce the expected results, or look into potential alternative drivers, if any. By breaking theories of change down into testable hypotheses and specific KPIs, and mapping interventions onto those hypotheses, evidence on the hypotheses' indicators would generate an overall health status of each theory. This work could inform strategic resource allocation and generate motivation to run interventions and research to fill critical gaps in the grid. Additionally, this project might identify potential failure modes in theories of change and then point to ways to mitigate them.

$300K to develop a better system similar to QALYs/DALYs but for animals

If we want to impartially do the most good for animals, we need to be able to translate benefits to them into units of measure that allow comparisons with other global priorities. In this regard, DALYs-averted is the common currency used by many to assess how much good they can do by helping humans. We can use the DALYs framework and treat it as a proxy for some amount of welfare for non-humans. With this project, we will attempt to translate species-specific animal welfare assessments into welfare estimates relative to each species under different conditions. Then, such calculations can be translated into a standard unit–DALYs. The estimates produced could be especially useful in guiding the allocation of resources as they provide a common denominator, allowing for the expression of utility in terms of dollar/DALY. Moreover, this would give a valuable summary that might be used to engage other stakeholders–like economists and policymakers–to visualize the impact of various farming systems on animals.

Global Health and Development

$405K to pilot our Value of Research model

Our Global Health and Development team is currently funded by both Open Philanthropy and GiveWell to do research for them[11]. However, we have a new value of research model that we’d like to try out that suggests we can be more cost-effective than most GHD interventions within the GHD-prioritizing worldview, but unfortunately this seems to be outside the scope of our current funders’ priorities.

This model suggests that we actually could have more impact with GHD research by taking a non-EA organization from low to medium cost-effectiveness, as opposed to working with Open Phil and GiveWell to find slightly more cost-effective interventions than what they are currently supporting. This seems to be true even if the likelihood of securing work with those organizations and updating their conclusions is lower, and the ultimate target intervention(s) we might get them to update to is not as cost-effective as GiveWell’s top charities.

We’d seek $405K for a pilot for six months – this would be a highly leveraged investment to buy us enough time to attract new clients and find new streams of funding from them. The wider global health and development community is large and we think there are many potential clients we could be working with. The more general support we have to attempt this, the lower hourly rate we can charge to our clients, which means we’ll have a greater chance of attracting potential clients.

RP’s GHD team is well-positioned to execute a plan to do this but without further funding we think there’s an uncomfortably high chance that we’ll fail before we get a chance to actually test this model. Our current major funders aren’t backing this as much as is needed in part because of differing mandates (i.e., making grants that are achieving impact via grantmaking directly on interventions or research rather than this more indirect route), and in part because our funders have been shifting away from the GHD space more generally. But without this funding, the team as it currently exists is unlikely to persist even though they are well set up to do very cost-effective work.[12]

AI Governance

$15K to write up learnings from spending a year attempting longtermist incubation

Our Existential Security team worked in 2023 towards researching projects and finding external founders to run them. We’re now pivoting to running those projects in-house instead.

However, we’d like to write an evaluation of our longtermist incubation efforts in 2023 and provide high-level lessons. We’d also like to assemble key info we gathered on a lot of our project ideas and explain why we ended up not pursuing them. We think this would be useful for people who might do or fund similar work in the future. We’d explain what happened to our 20 concrete project ideas.

$114K to train an additional AI policy researcher

We’ve been able to take skilled generalist researchers with not much experience in AI policy and skill them up to the point where they can do AI policy research that is high enough quality to be engaged with by labs, regulators, and other policymakers. Getting additional funding would also be valuable for maintaining intellectual independence, which is crucial for a think tank.

We estimate that we could train a marginal additional person for $69K in salary, $9K in tax and HR fees, $8K in benefits, $7K in travel and professional development support, and $21K in marginal spending on operations.
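As a quick check that the line items above add up to the $114K headline figure, here is a small sketch. The amounts are taken from the paragraph above; the dictionary keys are just illustrative labels.

```python
# Marginal cost components for one additional AI policy researcher,
# in thousands of USD, as quoted in the paragraph above.
cost_components_k = {
    "salary": 69,
    "tax_and_hr_fees": 9,
    "benefits": 8,
    "travel_and_professional_development": 7,
    "marginal_operations": 21,
}

total_k = sum(cost_components_k.values())

# Share of the total that each component represents.
shares = {name: amount / total_k for name, amount in cost_components_k.items()}

print(f"Total: ${total_k}K")
for name, share in shares.items():
    print(f"  {name}: {share:.0%}")
```

On these figures, salary is roughly three fifths of the total and marginal operations spending a little under one fifth.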

-

We also have more speculative plans that may be of interest to longtermist donors and we can take longtermist restricted support with minimal to no fungibility issues. Please inquire to peter@rethinkpriorities.org if you want to explore this.

Included with your donation: talent pipelines and field building

Another important benefit to funding the work above that goes under-discussed is the effect of our work on talent pipelines and field building. We can hire in most countries and are pretty agnostic about location, so this allows us to engage a much wider international community and find hidden talent.

For example, Rethink Priorities is an important institution for supporting the reduction of existential risk from AI. Between RP and other organizations we support (IAPS, Apollo, and Epoch), I estimate we employ directly or indirectly (via fiscal sponsorship) 40 people working to reduce existential risk from AI, which I take to be somewhere between 3% and 10% of the entire global workforce aimed at this. That’s a significant contribution to AI x-risk reduction capacity. We likewise employ and support a similarly significant fraction of the number of researchers oriented towards effective animal advocacy.
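The 3% to 10% range can be turned around to see what total workforce size it implies. A rough sketch of that arithmetic, using only the figures in the paragraph above (the variable names are illustrative):

```python
# Figures from the paragraph above.
rp_supported_staff = 40               # people employed directly or via fiscal sponsorship
share_low, share_high = 0.03, 0.10    # estimated share of the global AI x-risk workforce

# A smaller share implies a larger total workforce, and vice versa.
implied_workforce_max = rp_supported_staff / share_low
implied_workforce_min = rp_supported_staff / share_high

print(f"Implied global workforce: about {implied_workforce_min:.0f} "
      f"to {implied_workforce_max:.0f} people")
```

In other words, the estimate is consistent with a global workforce of somewhere between a few hundred and a bit over a thousand people.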

Many of our staff also had not engaged much, or not at all, with effective altruism prior to joining RP, but then engaged with the movement and its ideas heavily during and after their RP career. This makes RP a great way to expose talented professionals to EA and give them a lot of experience and orientation to working on it, launching their EA career.

While we don’t view our impact as solely coming from talent pipelines, many people get training at RP and then go on to do other impactful things. Staff that we’ve hired have gone on to have impactful jobs at Open Philanthropy, the Long-Term Future Fund, AI Safety Communications Centre, the EA Forum, major US think tanks, and US state-level government. We’ve also supported secondments into the UK government and are interested in exploring other ways to help our staff get involved in government work more directly.

It seems like management roles at many orgs largely get picked from people who have done management before. By building a strong and fairly large organization and by doing formal and informal management training, we’ve given a lot of people the opportunity to become experienced managers. Several of our staff have been trained up by us to take important management roles at Rethink Priorities or other organizations, including at Open Philanthropy.

Lastly, our staff support the wider EA community by attending EA Globals and other conferences and mentoring others in the field. Our staff have created many coordination vehicles, such as conferences, paper archives, and Slack channels.

All of this talent pipeline development, field building, and coordination comes simply from supporting RP in our object-level work. We hope you will keep these benefits in mind.

Conclusion

RP needs your support – and we need it to keep doing the work we have been doing, not just do more projects. This is not a proposal for growth – this is a proposal to keep RP what we want it to be. We want RP to be a project that helps the EA community as a whole, in addition to our individual clients and stakeholders.

At its core, RP wants to implement a principles-first EA: principled selections of areas to work in, principle-derived attempts to do good at scale, and principle-based updates about how to do the most good. The uncertainties involved in doing good can be daunting and changemaking is difficult. We need to rigorously quantify the value of different courses of action, we need to grapple with multiple decision theories or worldviews, and, in order to create change, we need to engage deeply with key stakeholders within priority areas to affect beliefs, actions, and outcomes.

We hope that this list shows you that RP is up to a wide variety of vibrant work and that we really need your support to keep this going. We’d be interested in you donating here or reaching out to us with offers to fund specific projects on this list. We can honor donor restrictions and preferences with minimal to no fungibility issues; simply reach out to us at development@rethinkpriorities.org.

We’d be especially keen to receive more unrestricted support from people who trust us most to allow us to innovate more with our own ideas and prototype ideas to attract the attention of larger funders. This donor diversity gives us a lot more flexibility, lets us solve collective action problems, gives us valuable intellectual independence, and just gives us peace of mind.

We tend to choose projects as and when we’re wrapping up other projects, rather than further in advance, due to rapid shifts in the field and our understanding. But this is very hard to do if we specify everything on a project-by-project basis and wait 6+ months between coming up with an idea and receiving funding. RP staff value and work best with a sense of security, and planning for the long term is more cost-effective than more shortsighted behavior. So trust is really helpful in allowing us to do our best work.

We are generally happy to expand further upon request. Prospective funders are welcome to have a call with team members or leadership to discuss any of the above further.

Interested readers are encouraged to refer to “Rethink Priorities’ 2023 Summary, 2024 Strategy, and Funding Gaps” for further details.

Please consider supporting us as much as you can. Thank you.

Acknowledgements

This was written by Peter Wildeford, with contributions from Kieran Greig, Marcus A. Davis, and David Moss. Thanks to Henri Thunberg, Janique Behman, Melanie Basnak, Abraham Rowe, and Daniela Waldhorn for their helpful feedback. It is a project of Rethink Priorities, a research and implementation group that identifies pressing opportunities to make the world better. We act upon these opportunities by developing and implementing strategies, projects and solutions to key issues. We do this work in close partnership with foundations and impact-focused non-profits. If you're interested in Rethink Priorities' work, please consider subscribing to our newsletter. You can explore our completed public work here.

- ^

The gap between our current funding and the funding we would need to achieve our 2024 plans is several hundred thousand dollars. To further quantify the size of our funding gaps, our recent report estimated that our non-Open Phil funding goals ranged from $300k to $700k in each area in which we are active. Note that we refer to those as goals rather than gaps at this point because there are multiple areas in which we are currently undergoing renewal negotiations with Open Phil or have other large outstanding grant applications that affect our fundraising, though we expect to have a gap regardless.

- ^

This new think tank has published multiple reports, including a report on AI chip smuggling into China: Potential paths, quantities, and countermeasures, which examines the prospect of large-scale smuggling of AI chips into China and proposes six interventions for mitigating that problem. The report was prominently mentioned in The Times online newspaper. Onni Aarne also co-authored a forthcoming paper with Tim Fist and Caleb Withers of the Center for a New American Security (CNAS). Their report focuses on using on-chip governance mechanisms to manage national security risks from AI and advanced computing. Both of these above-mentioned reports have received positive feedback and interest from researchers and senior US policymakers. The authors have been asked to deliver briefings on their findings to audiences, including through roundtable discussions co-hosted by CNAS and in the Foundation for American Innovation’s podcast.

- ^

Referring to the situation with FTX which we noted last year “does impact our long-term financial outlook” and that we “need to address the funding gap left by these changed conditions for the coming years.” We underestimated the degree to which this would also correlate with other declines in fundraising from other funders. Additionally, Open Philanthropy has announced a “lower GHW funding trajectory than [...] internally anticipated over the last couple of years [and] now expect [...] GHW spending over the next couple of years to be at roughly 2021 levels” and this also affects fundraising for our work.

- ^

Note that this includes a series of posts associated with the 2022 EA Survey:

- EA Survey 2022: What Helps People Have an Impact and Connect with Other EAs

- EA Survey 2022: Community Satisfaction, Retention, and Mental Health

- ^

This is also important for the IRS - see public support test.

- ^

Due to the need to spend time checking for and removing any non-public information, getting approval from cited experts to be quoted publicly, etc.

- ^

For example, I think the time needed to share some of our GHD work publicly is only 10-20% of the time to produce the piece in the first place, so we are producing a marginal publicly shared research piece with 10x less cost than producing it from nothing. Some of our survey work could be shared with just a few weeks of work per post. My guess is that there are many diffuse benefits to sharing work on the EA Forum, by changing the minds of organizations and people other than our clients, introducing new methods and ideas to build off of, as well as signaling that the effective altruism movement does vibrant work.

- ^

Some people complain that the EA Forum doesn’t have enough interesting and in-depth research… funding RP is a great way to address that.

- ^

E.g., Amanda Hungerford, Farm Animal Program Officer at Open Philanthropy, spoke on the How I Learned to Love Shrimp podcast about how our work led her to change her personal opinions on insects:

I think the big animal related view I’ve changed my mind on fairly recently is insects. I very much used to view them as these kind of unfeeling automatons, did not give them a second thought, was very happy to not worry about their wellbeing. But after seeing some of the Moral Weight Work that Rethink Priorities has put out, I now feel worried about the insects.

- ^

On the 80,000 Hours podcast, co-founder Andrés Jiménez Zorrilla stated how Rethink Priorities’s work had helped persuade him to start the Shrimp Welfare Project:

Rob Wiblin: One of the things you read early on that helped persuade you was an article written by Rethink Priorities, where they were basically just evaluating what we know about this topic, the basic facts. We’ll stick up a link to that article, if it’s online. Is that right?

Andrés Jiménez Zorrilla: That’s correct. All this work Rethink Priorities has done around sentience with many different species was very useful for my cofounder and I to decide whether the evidence pointed towards strong probability that these animals are sentient. Also, Rethink Priorities are some of the people who have done the best estimates of the number of individual animals that are involved in the shrimp business. So their work, in general, was very, very useful. FishEthoBase was also very good. I think some of this work might not be published though.

- ^

Given Open Phil's shift towards more global catastrophic risks, and Open Phil basing their funding of us in part on the belief we get others to fund us in the future, we need non-OP funders.

- ^

In addition to consulting with further clients, we are interested in exploring potential routes to impact through policy research, advocacy and technical assistance efforts to increase political support for cost-effective global health, development, and climate change programs. This might include, for example, advocating for new cost-effective programs, technical assistance to multilateral organizations, research to improve the cost-effectiveness of existing programs or on how to evaluate the effect of policy from a grantmaking perspective. However, unfortunately, us doing further work of this nature is also very constrained by funding at this point. We'd need funds to identify the best topics and avenues to get involved with this, identify and engage with the correct policy intermediaries, and curate our materials to suit that audience.

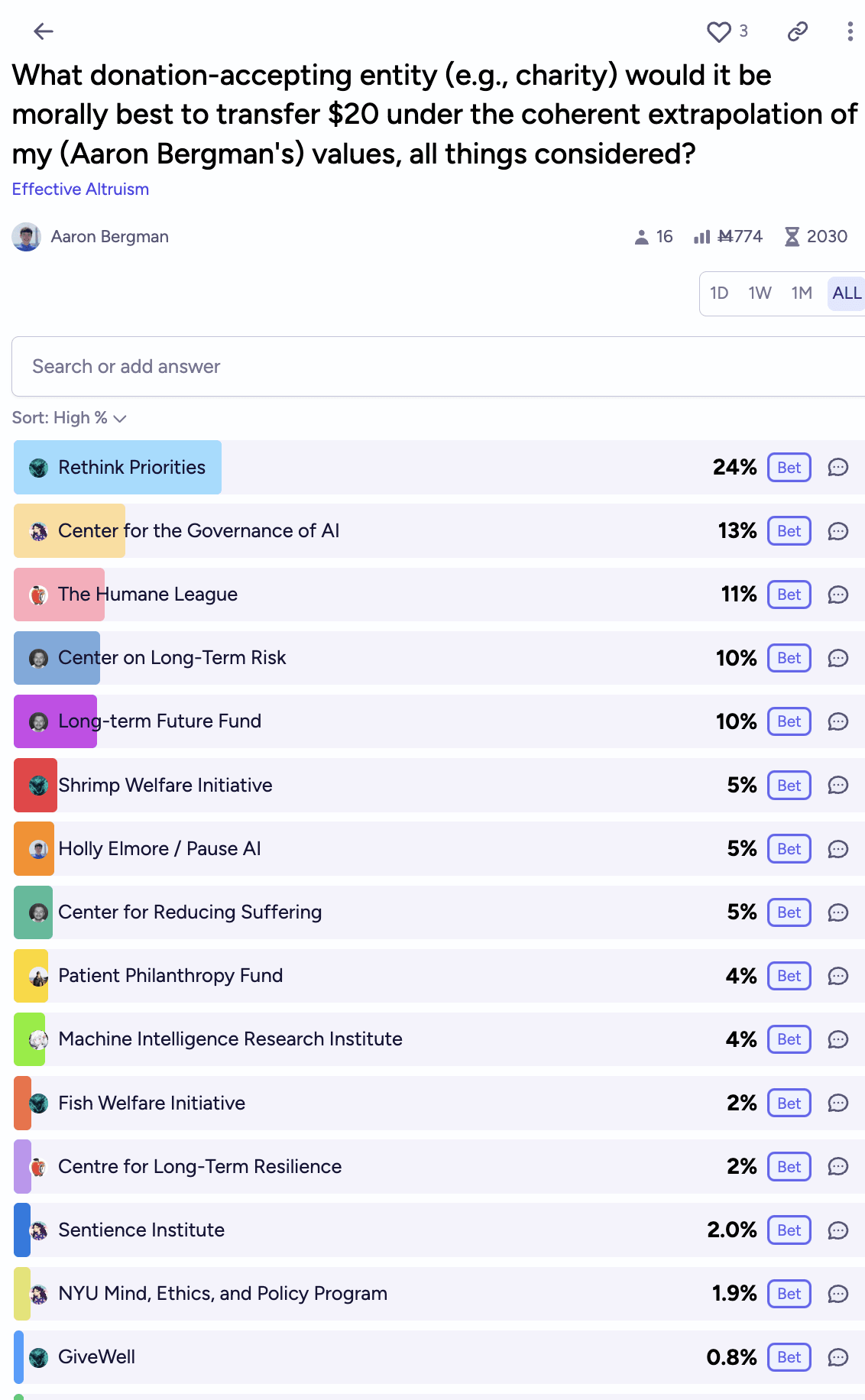

Aaron Bergman @ 2023-11-21T18:57 (+32)

For others considering whether/where to donate: RP is my current best guess of "single best charity to donate to all things considered (on the margin - say up to $1M)."

FWIW I have a Manifold market for this (which is just one source of evidence - not something I purely defer to. Also I bet in the market so grain of salt etc).

oscar @ 2023-12-21T19:09 (+24)

My impression is both that Rethink pays pretty decent salaries and that you guys find hiring additional researchers quite easy. Have you guys considered lowering your salaries? I know there are practical considerations that make this hard to do in the short term, but you could, say, offer lower salaries to new staff and give existing staff lower pay rises, which would lower your staff costs over the medium term.

weeatquince @ 2023-12-11T20:49 (+23)

Hi Peter, Rethink Priorities is towards the top of the places I'm considering giving this year. This post was super helpful. And these projects look incredible, and highly valuable.

That said, I have a bunch of questions and uncertainties I would love to get answers to before donating to Rethink.

1. What is your cost/benefit? Specifically, I would love to know any or all of:

- Rethink's cost per researcher year on average (i.e. the total org cost divided by total researcher years, not salary).

- Rethink's cost per published research report (again total org cost, not the amount spent on specific projects, divided by the number of published reports, where a research-heavy EA Forum post of typical Rethink quality would count as a published report).

- Rethink's cost per published research report that is not majority funded by "institutional support"

2. Can you assure me that Rethink's researchers are independent?

Rethink seems to be very heavily reliant for its existence on OpenPhil (and maybe some other very large institutional supporters?). I get the impression from a few people that Rethink's researchers sometimes may be restricted in their freedom to publish or freedom to say what they want to funders, where doing so would go against the wishes of Rethink's institutional funders (OpenPhil) or would in some way pose a risk to Rethink's funding stream.

This creates a risk of bias. E.g. a publication bias that skews Rethink to only publish information that OpenPhil are happy with. This is a key reason towards me feeling happier donating to support research from more independent sources (such as EA Infrastructure Fund or HLI or Animal Ask) rather than giving to Rethink.

I would be reassured if I saw evidence of Rethink addressing this risk of bias. This could look like any or all of:

- A public policy stating that researchers have the freedom to publish even where donors disagree, and setting out steps to minimize the risk of bias (e.g. ignoring the views of donors when deciding if something is an info-hazard) and the accountability mechanisms to ensure that the policy is followed (e.g. a whistleblowing process).

- Some case studies of how Rethink has made this publication bias / upset funders trade-off in the past, especially evidence of Rethink going against OpenPhil's direct wishes.

- An organisational risk register entry from Rethink setting out plans to mitigate these risks or why senior staff are not worried about these risks.

- A policy from OpenPhil setting out an aim not to bias Rethink in this way.

3. How will you prioritise amongst the projects listed here with unrestricted funds from small donors?

Most of these projects I find very exciting, but some more than others. Do you have a rough priority ordering or a sense of what you would do in different scenarios, like if you ended up with unrestricted funding of $0.25m/$0.5m/$1m/$2m/$4m etc how you would split it between the projects you list?

4. [Already answered] Can you clarify how much the examples you cite are funded by OpenPhil?

[This was one of my key questions but Marcus answered it on another thread, I included it as I thought it would be relevant to others.]

I noted that you say that some research "invertebrate sentience, moral weights and welfare ranges, the cross-cause model, the CURVE sequence" has "historically not had institutional support". But OpenPhil suggest here they funded some of this work.

Marcus clarifies here that the Moral Weights work was about ~50% OpenPhil and the CURVE work about ~10% OpenPhil funded.

Thank you for answering these questions. Keep up the wonderful work and keep improving our ability to do good in the world!!! <3

[Minor edits made to q2]

bruce @ 2023-12-12T00:18 (+21)

Can you assure me that Rethink's researchers are independent?

I no longer work at RP, but I thought I'd add a data point from someone who doesn't stand to benefit from your donations, in case it was helpful.

I think my take here is that if my experience doing research with the GHD team is representative of RP's work going forwards, then research independence should not be a reason not to donate.[1]

My personal impression is that of the work that I / the GHD team has been involved with, I have been afforded the freedom to look for our best guess of what the true answers are, and have personally never felt constrained or pushed into a particular answer that wasn't directly related to interpretation of the research. I have also consistently felt free to push back on lines of research that I feel would be less productive, or suggest stronger alternatives. I think credit here probably goes both to clients as well as the GHD team, though I'm not sure exactly how to attribute this.

I feel less confident about biases that may arise from the research agenda / selection of research questions or worldviews and assumptions of clients, but this could (for example) make one more inclined towards funding RP to do their own independent research, or specifying research you think is particularly important and neglected.

Edit: See thread by Saulius detailing his views.

- ^

Caveats: I can't speak for the teams outside of GHD, and I can't speak for RP's work in 2024. This comment should not be seen as an endorsement of the claim that RP is the best place to donate to all things considered, which obviously is influenced by other variables beyond research independence.

Peter Wildeford @ 2023-12-18T10:54 (+15)

- How will you prioritise amongst the projects listed here with unrestricted funds from small donors? Most of these projects I find very exciting, but some more than others. Do you have a rough priority ordering or a sense of what you would do in different scenarios, like if you ended up with unrestricted funding of $0.25m/$0.5m/$1m/$2m/$4m etc how you would split it between the projects you list?

I think views on this will vary somewhat within RP leadership. Here I am also reporting my own somewhat quick independent impressions which could update upon further discussing with teams and the rest of leadership. We're planning to do more to finalize plans next month so it's still a bit up in the air right now. Candidly, our priorities also depend on how our end of year fundraising campaign lands.

Here's roughly what I'm thinking right now:

Highest priority / first $250k (and I am not listing these by order of priority within each tier):

- ~$35k for publishing our backlog of completed work.

- ~$100k towards more cause prioritization work

- ~$30k towards running the next EA Survey (hoping to have that matched by institutional funders)

- ~$15k for writing up our learnings from spending a year attempting longtermist incubation (though we might try to 80/20 soon for less).

- ~$38k for establishing our farmed animals impact tracker

- ~$60k towards piloting our value of research model

Next highest priority / second $250k:

- ~$15k for publishing our backlog of work

- ~$75k towards more cause prioritization work / worldview investigation work

- ~$30k towards piloting our value of research model

- ~$40k towards more rigorously understanding branding for AI risk outreach

- ~$45k towards more rigorously understanding EA’s growth trajectory

Third highest priority / next $500k:

- ~$30k towards publishing our backlog of research

- ~$75k towards more cause prioritization work / worldview investigation work

- ~$50k towards piloting our value of research model

- ~$50k towards understanding why people drop out of EA

- ~$75k towards the theory of change status report for farmed animals

- ~$40k towards the farmed insect ask.

- ~$40k towards more rigorously understanding branding for EA and existential risk

- ~$30k towards understanding EA’s growth trajectory

- ~$60k towards QALYs/DALYs for animals

I am going to stop there as that first million is probably the most decision relevant, and right now that seems most likely where we will land with our funds raised.

With all this said, we also take donor preferences very seriously, including by taking restricted gifts. If there are particular options that are most exciting to you, then we might be able to further prioritize those if you or others were to offer support and indicate that preference. Relatedly, I am curious whether you are willing to share which projects you are most excited about, or conversely which are least appealing?

And thank you too for all that you do to improve the world and for these well-thought-out questions!! :)

Peter Wildeford @ 2023-12-18T10:51 (+15)

- Can you assure me that Rethink's researchers are independent?

Yes. Rethink Priorities is devoted to editorial independence. None of our contracts with our clients include editorial veto power (except that we obviously cannot publish confidential information) and we wouldn't accept these sorts of contracts.

I think our reliance on individual large clients is important, but overstated. Our single largest client made up ~47% of our income in 2023 and we're on track for this to land somewhere between 40% and 60% in 2024. This means in the unlikely event that we were to lose this large client completely, it would certainly suck, but some version of RP would still survive. Our leadership is committed to taking this path if necessary.

This is also why we are proactively working hard to diversify our client base and why we are soliciting individual funding. Funding from you and others would help us further safeguard our editorial independence. We're very lucky and grateful for our existing individual donors that collectively donate a significant portion of our expenses and preserve our independence.

Luckily, I am not aware of any instance where we wanted to publish work that any of our institutional clients did not want to publish. We try to work with clients that value our independence and aim to promote truth-seeking even if it may go against the initial views of our client. We do not work with clients where they only want to hear from us a confirmation of their pre-existing beliefs -- we have been asked to do something like this (though not in these exact words) many times actually and have always turned down this work.

There have sometimes been disagreements between individual staff and RP management about what to publish that did not involve anything about upsetting our clients, and in these cases we've always allowed the staff to still publish their work in their personal capacity. Rethink Priorities does not censor staff or prevent them from publishing.

If you have reason to believe that Rethink Priorities researchers are not independent, please let me know so I can correct any issues and reassure relevant staff.

saulius @ 2024-01-01T17:41 (+166)

When I was asked to resign from RP, one of the reasons given was that I wrote the sentence “I don't think that EAs should fund many WAW researchers since I don't think that WAW is a very promising cause area” in an email to OpenPhil, after OpenPhil asked for my opinion on a WAW (Wild Animal Welfare) grant. I was told that this is not okay because OpenPhil is one of the main funders of RP’s WAW work. That did not make me feel very independent. Though perhaps that was the only instance in the four years I worked at RP.

Because of this instance, I was also concerned when I saw that RP is doing cause prioritization work because I was afraid that you would hesitate to publish stuff that threatens RP funding, and would more willingly publish stuff that would increase RP funding. I haven’t read any of your cause prio research though, so I can’t comment on whether I saw any of that.

EDIT: I should've said that this was not the main reason I was asked to resign and that I had said that I would quit in three months before this happened.

Marcus_A_Davis @ 2024-01-02T20:16 (+63)

Hey Saulius,

I’m very sorry that you felt that way – that wasn’t our intention. We aren’t going to get into the details of your resignation in public, but as you mention in your follow-up comment, neither this incident nor our disagreement over WAW views was the reason for your resignation.

As you recall, you did publish your views on wild animal welfare publicly. Because RP leadership was not convinced by the reasoning in your piece, we rejected your request to publish it under the RP byline as an RP article representative of an RP position. This decision was based on the work itself; OP was not at all a factor involved in this decision. Moreover, we made no attempt to censor your views or prevent them from being shared (indeed I personally encouraged you to publish the piece if you wanted).

To add some additional context without getting into the details of this specific scenario, we can share some general principles about how we approach donor engagement.

We have ~40 researchers working across a variety of areas. Many of them have views about what we should do and what research should be done. By no means do we expect our staff to publicly or privately agree with the views of leadership, let alone with our donors. Still, we have a donor engagement policy outlining how we like to handle communication with donors.

One relevant dimension is that we think that if one of our researchers, especially while representing RP, is sending something to a funder that has a plausible implication that one of the main funders of a department should seriously reduce or stop funding that department, we should know they are planning to do so before they do so, and roughly what is being said so that we can be prepared. While we don’t want to be seen as censoring our researchers, we do think it’s important to approach these sorts of things with clarity and tact.

There are also times when we think it is important for RP to speak with a unified voice to our most important donors and represent a broader, coordinated consensus on what we think. Or, if minority views of one of our researchers that RP leadership disagrees with are to be considered, this needs to be properly contextualized and coordinated so that we can interact with our donors with full knowledge of what is being shared with them (for example, we don’t want to accidentally convey that the view of a single member of staff represents RP’s overall position).

With regard to cause prioritization, funders don’t filter or factor into our views in any way. They haven’t been involved in any way with setting what we do or don’t say in our cause prioritization work. Further, as far as I’m aware, OP hasn’t adopted the kind of approach we’ve suggested in any of our major cause prioritization work on moral weight or as seen in the CURVE sequence.

saulius @ 2024-01-03T11:43 (+128)

Thank you for your answer Marcus.

What bothers me is that if I said that I was excited about funding WAW research, no one would have said anything. I was free to say that. But to say that I’m not excited, I have to go through all these hurdles. This introduces a bias because a lot of the time researchers won’t want to go through hurdles and opinions that would indirectly threaten RP’s funding won’t be shared. Hence, funders would have a distorted view of researchers' opinions.

Put yourself into my shoes. OpenPhil sends an email to multiple people asking for opinions on a WAW grant. What I did was that I wrote a list of pros and cons about funding that grant, recommended funding it, and pressed “send”. It took like 30 minutes. Later OpenPhil said that it helped them to make the decision. Score! I felt energized. I probably had more impact in those 30 minutes than I had in three months of writing about aquatic noise.

Now imagine I knew that I had to inform the management about saying that I’m not excited about WAW. My manager was new to RP, he would’ve needed to escalate to directors. Writing my manager’s manager’s manager a message like “Can I write this thing that threatens the funding of our organisation?” is awkward. Also, these sorts of complex conversations can sometimes take a lot of time for both me and the upper management who are very busy. So I might not have done it. And I’m a very disagreeable employee who knows directors personally, so other researchers are probably less likely to do stuff like that. I also didn’t want to write OpenPhil an email that speaks frankly about the pros of funding the grant but not the cons. So it might have been easier to just ignore OpenPhil’s email and focus on finishing the aquatic noise report.

Maybe all of this is very particular to this situation. Situations like this didn’t arise when I worked at RP very often. I spent most of my time researching stuff where my conclusions had no impact on RP’s funding. But if RP grows, conflicts of interest are likely to arise more often. And such concerns might apply more often to cause-prioritisation research. If a cause prioritisation researcher concluded that say AI safety research is more important than all other causes, would they be able to talk about that freely to funders, even though it would threaten RP’s funding? If not, that’s a problem.

Vasco Grilo @ 2024-01-03T17:00 (+11)

Great points, Saulius! I think it would be pretty valuable to have a question post asking about how organisations are handling situations such as yours (you may be interested in doing it; if neither you nor anyone else does, I may do it myself). I would say employees/contractors should be free, and ideally encouraged, to share their takes with funders and publicly, more or less regardless of what the consequences are for their organisation, as long as they are not sharing confidential information.

saulius @ 2024-01-04T12:35 (+68)

Thanks Vasco. Personally, I won't do it. Actually, I pushed this issue here way further than I intended to and I don't want to talk about it more. I'm afraid that this might be one of those things that might seem like a big deal in theory but is rarely relevant in practice because reality is usually more complicated. And it's the sort of topic that can be discussed for too long, distracting busy EA executives from their actual work. Many people seem to have read this discussion, so arguments on both sides will likely be considered at RP and some other EA orgs. That is enough for me. I particularly hope it will be discussed by RP's cause prioritization team.

saulius @ 2024-01-04T12:36 (+16)

If you do decide to pose a question, I suggest focusing on whether employees at EA organizations feel any direct or indirect pressure to conform to specific opinions due to organizational policies and dynamics, or even due to direct pressure from management, or whether they just don't feel free to speak their minds on certain issues related to their job. People should have an option to answer anonymously. Basically, the aim would be to find out if my case was an isolated incident or not.

Habryka @ 2024-01-03T02:36 (+54)

One relevant dimension is that we think that if one of our researchers, especially while representing RP, is sending something to a funder that has a plausible implication that one of the main funders of a department should seriously reduce or stop funding that department, we should know they are planning to do so before they do so, and roughly what is being said so that we can be prepared. While we don’t want to be seen as censoring our researchers, we do think it’s important to approach these sorts of things with clarity and tact.

There are also times when we think it is important for RP to speak with a unified voice to our most important donors and represent a broader, coordinated consensus on what we think. Or, if minority views of one of our researchers that RP leadership disagrees with are to be considered, this needs to be properly contextualized and coordinated so that we can interact with our donors with full knowledge of what is being shared with them (for example, we don’t want to accidentally convey that the view of a single member of staff represents RP’s overall position).

FWIW, this sounds to me like a very substantial tax on sharing information with funders, and I am surprised by this policy.

As someone who is sometimes acting in a funder capacity I wasn't expecting my information to be filtered this way (and also, I feel very capable of not interpreting the statements of a single researcher as being non-representative of a whole organization, I expect other funders are similarly capable of doing that).

Indeed, I think I was expecting the opposite, which is that I can ask researchers openly and freely about their takes on RP programs, and they have no obligation to tell you that they spoke to me at all. Even just an obligation to inform you after the fact seems like it would frequently introduce substantial chilling effects. I certainly don't think my employees are required to tell me if they give a reference or give an assessment of any Lightcone project, and would consider an obligation to inform me if they do as quite harmful for healthy information flow (and if someone considers funding Lightcone, or wants to work with us in some capacity, I encourage you to get references from my employees or other people we've worked with, including offering privacy or confidentiality however you see fit about the information you gain this way).

Peter Wildeford @ 2024-01-03T16:14 (+23)

Hey, thanks for the feedback. I do think reasonable people can disagree about this policy and it entails an important trade-off.

To understand a bit about the other side of the trade-off, I would ask that you consider that we at RP are roughly an order of magnitude bigger than Lightcone Infrastructure and we need policies to be able to work well with ~70 people that I agree make no sense with ~7 (relatively independent, relatively senior) people.

Karthik Tadepalli @ 2024-01-04T07:31 (+29)

Could you say more about the other side of the tradeoff? As in, what's the affirmative case for having this policy? So far in this thread the main reason has been "we don't want people to get the impression that X statement by a junior researcher represents RP's views". I see a very simple alternative as "if individuals make statements that don't represent RP's views they should always make that clear up front". So is there more reason to have this policy?

Jason @ 2024-01-03T16:54 (+14)

Fair, but the flip side of that is that it's considerably less likely that a sophisticated donor would somehow misunderstand a junior researcher's clearly-expressed-as-personal views as expressing the institutional view of a 70-person org.

Habryka @ 2024-01-03T17:00 (+9)

Hmm, my sense is that the tradeoffs here mostly go in the opposite direction. It seems like the costs scale with the number of people, and it's clear that very large organizations (200+) don't really have a chance of maintaining such a policy anyways, as the cost of enforcement grows with the number of members (as well as the costs on information flow), while the benefits don't obviously scale in the same way.

It seems to me more reasonable for smaller organizations to have a policy like this, and worse the larger the organization is (in terms of how unhappy to be with the leadership of the organization for instituting such a policy).

Nathan Young @ 2024-01-24T08:16 (+2)

I think most larger orgs attempt to have even less information leave than this. So your statement seems wrong. Many large organisations have good boundaries in terms of information - Apple is very good at keeping upcoming product releases quiet.

Habryka @ 2024-01-24T08:47 (+3)

I think Apple is very exceptional here, and it does come at great cost as many Apple employees have complained about over the past years:

I think larger organizations are obviously worse than this, though I agree that some succeed nevertheless. I was mostly just making an argument about relative cost (and think that unless you put a lot of effort into it, at 200+ it usually becomes prohibitively expensive, though it of course depends on the exact policy). See Google and OpenAI for organizations that I think are more representative here (and are more what I was thinking about).

Nathan Young @ 2024-01-25T10:57 (+2)

Naah I think I still disagree. I guess the median large consultancy or legal firm is much more likely to go after you for sharing stuff than the median small business. Because they have the resources and organisational capital to do so, and because their hiring allows them to find people who probably won't mind and because they capture more of the downside and lose less to upside.

I'm not endorsing this but it's what I would expect from Rethink, OpenPhil, FTX, Manifold, 80k, Charity Entrepreneurship, Longview, CEA, Lightcone, MIRI. And looking at those orgs, it's what, Lightcone and Manifold that aren't normal to secretive in terms of internal information. Maybe I could be convinced to give MIRI/Longview a pass because their secrecy might be for non-institutional reasons but "organisations become less willing for random individuals to speak their true views about internal processes as they get larger/more powerful" seems a reasonable rule of thumb, inside and outside EA.

Ben_West @ 2024-01-04T20:27 (+7)

Peter, I'm not sure if it is worth your time to share, but I wonder if there are some additional policies RP has which are obvious to you but are not obvious to outsiders, and this is what is causing outsiders to be surprised.

E.g. perhaps you have a formal policy about how funders can get feedback from your employees without going through RP leadership which replaces the informal conversations that funders have with employees at other organizations. And this policy would alleviate some of the concerns people like Habryka have; we just aren't aware of it because we aren't familiar enough with RP.

Jason @ 2024-01-03T16:59 (+17)

Would you consider whipping this up into a publicly posted policy? I'm sure Lightcone employees don't need a formal policy, but I think it would be desirable for orgs to have legible published policies on this as a way to flag which ones are / are not insisting their staff filter their opinions through management. Donors/funders will be able to weigh opinions from organizational employees accordingly.

Habryka @ 2024-01-04T00:23 (+4)

I feel weird making a top-level post about it (and feel like I would need to polish it a bunch before I feel comfortable making it an official policy). Do feel free to link to my comments here.

If many people would find this helpful, I would be happy to do it, but it does seem like a bunch of work so wouldn't want to do it based on just the support expressed so far.

Vasco Grilo @ 2024-02-09T06:58 (+2)

Hi Oliver,

I feel very capable of not interpreting the statements of a single researcher as being non-representative

I think you meant "interpreting" instead of "not interpreting", given you write "non-representative" afterwards.

weeatquince @ 2024-01-03T16:55 (+44)

[Edit: as per Saulius' reply below I was perhaps too critical here, especially regarding the WAW post, and it sounds like Saulius thinks that was managed relatively well by RP senior staff]

I found this reply made me less confident in Rethink's ability to address publication bias. Some things that triggered my 'hmmm not so sure about this' sense were:

- The reply did not directly address the claims in Saulius's comment. E.g. "I'm sorry you feel that way" not "I'm sorry". No acknowledgement that if, as Saulius claimed, a senior staff told him that it was wrong to have expressed his views to OpenPhil (when asked by OpenPhil for his views), that this might have been a mistake by that staff. I guess I was expecting more of a 'huh that sounds bad, let us look into it' style response than a 'nothing to see here, all is great in Rethink land' style response.

- The story about Saulius' WAW post. Judging from Marcus' comment it sounds like Rethink stopped Saulius from posting a WAW post (within a work context) and it also looks like there was a potential conflict of interest here for senior staff as posting could affect funding. Marcus says that RP senior staff were only deciding based on the quality of the post. Now I notice the post itself (published a few months after Saulius left Rethink) seems to have significantly more upvotes than almost any of Rethink's posts (and lots of positive feedback). I recognise upvotes are not a great measure of research quality but it does make me worry about the possibility that this post was actually of sufficient quality and the conflict of interest may have biased senior Rethink staff to stop the post being published as a Rethink post.

- The stated donor engagement principles seem problematic. Eg: "there are also times when we think it is important for RP to speak with a unified voice to our most important donors" is exactly the kind of reason I would use if I was censoring staff's interaction with donors. It is not that "unified organisational voice" policies are wrong, just that without additional safeguards they have a risk of being abused, of facilitating the kinds of conflict of interest driven actions under discussion here. Also as Saulius mentions such policies can also be one-sided where staff are welcome to say anything that aligns with a certain worldview but need to get sign-off to disagree with that worldview, another source of bias.

I really really do like Rethink. And I do not think that this is a huge problem, or enough of a problem to stop donors giving to Rethink in most cases. But I would still be interested in seeing additional evidence of Rethink addressing this risk of bias.

saulius @ 2024-01-05T10:56 (+36)

It sounds like Rethink stopped Saulius from posting a WAW post (within a work context) and it also looks like there was a potential conflict of interest here for senior staff as posting could affect funding

It is true that I wasn’t allowed to publish some of my WAW work on behalf of RP. Note that this WAW work includes not only the short summary Why I No Longer Prioritize Wild Animal Welfare (which got a lot of upvotes) but also three longer articles that it summarises (this, this, and this). Some of these do not threaten RP’s funding in any way. That said, I was allowed to work on these articles in my work time which should count for something.

Let me give a bit of context because it might not make sense without it. My project was finding the best WAW intervention. I struggled with it a lot. Instead of doing what I was supposed to, I started writing about why I was struggling, which eventually turned into all those posts. I asked my manager (who was quite new to RP) if I could continue working on those posts and publish them on behalf of RP. My manager allowed it. Then Marcus read some of my drafts and gave detailed useful feedback. He said that while he was happy that I looked into this stuff, these articles couldn't be published on behalf of RP. As I remember it, the reason was that they were basically opinion pieces. He wanted RP to only post stuff that is closer to an academic publication. I asked why but I don’t want to share his answer publicly in case what he told me wasn’t public. I disagreed with his position but it’s the sort of thing that reasonable people can disagree on. We both thought that it wasn’t worth trying to bring my articles to the degree of polish (and perhaps rigour) that would meet RP’s publishing standards but that they were still worth publishing. Marcus said that I’m free to finish my posts in my work time, which was very kind of him. Note that this was financially disadvantageous for RP. I felt uncomfortable spending more time on non-RP stuff in my work time so I took an unpaid leave to finish my posts, but it was my own idea and Marcus explicitly told me that I don’t need to do that.

Jason @ 2024-01-03T16:52 (+31)

I get RP's concerns that an individual researcher's opinions not come across as RP's organizational position. However, equal care needs to be given to the flipside -- that the donor does not get the impression that a response fully reflects the researcher's opinion when it has been materially affected by the donor-communication policy.

I'm not suggesting that management interference is inappropriate . . . but the donor has the right to know when it is occurring. Otherwise, if I were a donor/funder, I would have to assume that all communications from RP researchers reflected management influence, and would adjust each response in an attempt to filter out any management influence and "recover" the researcher's original intent. For example, I would not update much on the omission of any statement that would have potential negative institutional effect on RP.

As an analogy, imagine that every statement by a professor in an academic department had to be filtered through a process to make sure there was no material adverse effect on the university's interests. I would view any professorial statement coming out of that university with a jaundiced eye, and I think most people would do. In contrast, as a practicing lawyer, everyone assumes that my statements at work are not really truth-seeking and are heavily influenced by my client's institutional interests. I submit that it is important for RP's effectiveness that donors/funders see RP researchers as closer to academics than the functional equivalent of RP's lawyers.

All of this is fairly process-heavy, but that may be OK given the representation that these sorts of situations are infrequent.

(1) The donor-engagement policy should be publicly posted, so that donors/funders are aware when communications they receive may have been impacted by it. As @Habryka explains, donors/funders may not expect "information to be filtered this way" and could affirmatively expect an absence of such filtering.