Defining "Bullshit": Limitations and Related Frameworks

By Ozzie Gooen @ 2025-06-12T22:43 (+9)

This is a linkpost to https://ozziegooen.substack.com/p/defining-bullshit-limitations-and

Meta

This was mainly a research investigation I did into the concept of "bullshit" and related potential formalizations. I expect this to be a bit formal and in-the-weeds for much of the Effective Altruism Forum, but thought that perhaps some people might find it interesting.

Summary

The term "bullshit" appears promising for epistemics / rationality work due to its familiarity, but ultimately proves too imprecise for serious analysis.

While Frankfurt's definition (indifference to truth) and Galef's soldier mindset offer starting points, they're overly broad, capturing many useful communications that happen to be truth-apathetic.

I introduce two frameworks to attempt formality: (1) accuracy impact analysis that categorizes communication by whether it improves or reduces audience correctness, and (2) evidence strength gaps that quantify the ratio between implied and actual evidence quality.

It seems like "bullshit" itself may exemplify the problem. It’s an emotionally charged term with unclear definitions that implies significant conclusions while operating on minimal evidence. But I still think it can be useful in certain situations, especially with the right context.

Motivation

Epistemics remains an unfamiliar term for most people, while "bullshit" enjoys broad recognition. Since quality epistemics often involves "avoiding or removing bullshit," framing epistemological work around “bullshit” might be pragmatic for gaining popularity.

Consider potential titles like "A Systematic Offensive Against Journalistic Bullshit" or "Bullshit Hunting: A Guide" versus academic-sounding alternatives using "epistemics."

This raises an important question: Can we define bullshit in ways that are both intuitive and carve reality at meaningful joints?

Definitional Challenges

The most obvious place to start is Harry Frankfurt's influential 1986 essay "On Bullshit", which was later republished as a popular book in 2005. Frankfurt's work is a philosophical attempt to define bullshit, and his analysis has become a standard reference point for academic discussions of the concept.

Frankfurt opens his essay with an observation: "One of the most salient features of our culture is that there is so much bullshit." Yet despite this ubiquity, he notes that "the phenomenon itself is so vast and amorphous that no crisp and perspicuous analysis of its concept can avoid being procrustean." Frankfurt acknowledges the inherent difficulty of his project, explaining that "the expression bullshit is often employed quite loosely - simply as a generic term of abuse, with no very specific literal meaning."

Frankfurt's central thesis distinguishes bullshit from lying based on the speaker's relationship to truth.

From Wikipedia’s summary of On Bullshit:

"Frankfurt determines that bullshit is speech intended to persuade without regard for truth. The liar cares about the truth and attempts to hide it; the bullshitter doesn't care whether what they say is true or false."

I think this is a decent start, but it isn’t very precise.

Related is Julia Galef’s work on mindsets. Galef defines the scout mindset as "the motivation to see things as they are, not as you wish they were" while the soldier mindset involves "motivated reasoning to defend one's existing beliefs."

So, is bullshit just anything that comes from the soldier mindset?

I think that one complicating factor is the audience’s standards for evidence. If the audience has high standards, then a process aimed at convincing the audience will also inform them in useful ways. A person with a soldier mindset would recognize that they need to approximate a scout mindset in order to accomplish their mission. This would represent a healthy alignment between the motivations of communicators and the correctness of the beliefs of the audience.

Or, stated differently, I think Harry Frankfurt’s definition of bullshit might be too broad to be very useful. I think there are many cases of truth-apathetic arguing that are still useful and wouldn’t be classified by most as bullshit.

I also recommend the open course Calling Bullshit from the University of Washington in 2017. Its authors share a similar definitional frustration, but don’t seem to have a clearly superior alternative.

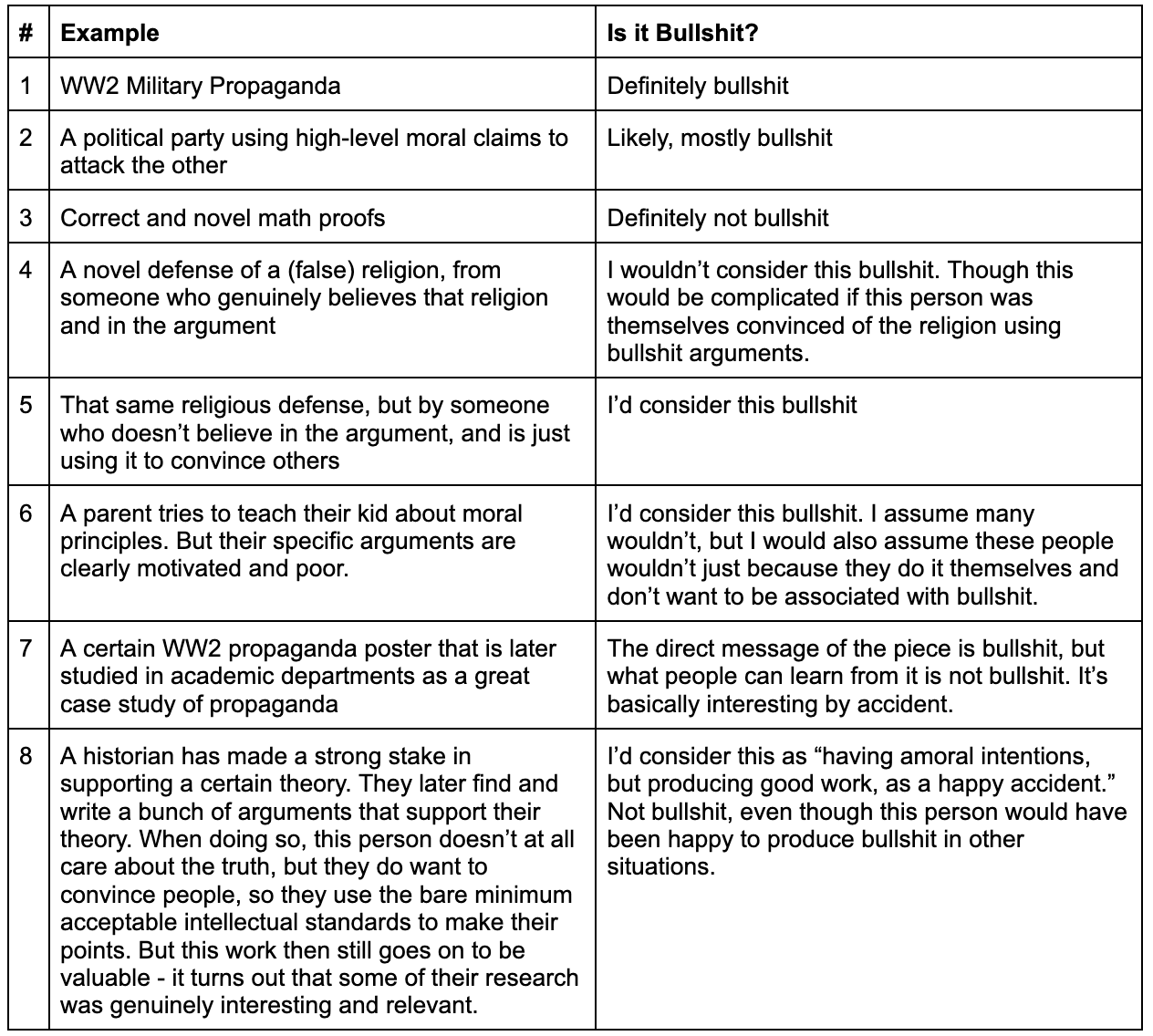

Ostensive Examples

When confused about definitions, it’s often a good idea to get precise. Let’s go through a few examples. I’ll grade each by how much like “bullshit” it naively seems.

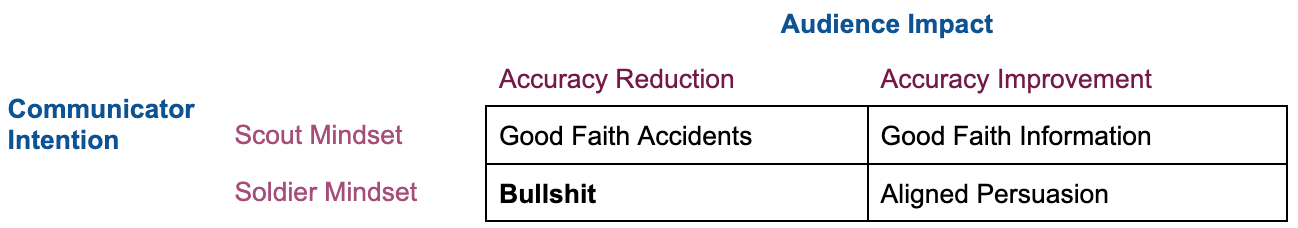

Alternative Framework 1: Accuracy Impact

We can distinguish between accuracy-improving and accuracy-reducing information based on whether audiences become more or less correct about matters important to them. This shifts some focus from speaker intent (which is often unknowable) to measurable outcomes.

Technically true information can still reduce accuracy through misunderstanding, misleading implications, or missing context. Consider carefully selected anecdotal evidence that supports a false general pattern, or precise statistics presented without necessary context about their limitations.
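As a minimal sketch of what "measurable outcomes" could look like, suppose an audience member's belief is a probability and we later learn whether the claim was true. One option is to compare squared error before and after the communication. The function names, the choice of squared error, and the example numbers are all assumptions made for illustration, not part of the framework itself.

```python
def squared_error(belief: float, truth: bool) -> float:
    """Squared distance between a probability and what actually happened."""
    outcome = 1.0 if truth else 0.0
    return (belief - outcome) ** 2


def accuracy_impact(before: float, after: float, truth: bool) -> float:
    """Reduction in error caused by a communication.

    Positive: the audience ended up more correct (accuracy-improving).
    Negative: the audience ended up less correct (accuracy-reducing).
    """
    return squared_error(before, truth) - squared_error(after, truth)


# Hypothetical case: a false general claim, supported by a vivid anecdote,
# moves a listener from 30% to 80% credence.
impact = accuracy_impact(before=0.3, after=0.8, truth=False)
print("accuracy-reducing" if impact < 0 else "accuracy-improving")
```

Note that nothing here asks whether the anecdote was technically true. Only the change in the listener's correctness matters.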

We can combine the accuracy distinction with the scout and soldier mindsets in a simple table.

I think this is a decent spot that matches a lot of the intuitions people have of bullshit. It sort of matches the examples provided above. But I don’t think this framework is perfect. It’s meant to help us describe how people use the term, not to be a prescriptive and final definition.

Alternative Framework 2: Evidence Strength Gaps

A potentially superior approach focuses on the gap between real and implied evidence strength, avoiding problematic reliance on unknowable intentions.

Most of the concerning bullshit that I can think of features dramatically insufficient real evidence for the confidence it assumes:

- Startups making civilization-scale claims ("We're helping humanity become a multiplanetary species") backed primarily by science fiction references and handwavy analogies

- Political parties hyping minor scandals as existential threats without consulting relevant experts or providing proportional context

- Propaganda films presenting carefully curated evidence for sweeping conclusions while omitting obvious counterevidence

- Academic papers that oversell preliminary findings through aggressive language while burying limitations in technical appendices

While evidence gaps don't perfectly correlate with bullshit, they frequently accompany soldier mindset approaches and create misleading effects that researchers should monitor.

This framework aligns with Bayesian thinking about evidence strength and belief updating. The key insight is that bullshit often involves presenting weak evidence (likelihood ratios close to 1) as if it were strong evidence, leading audiences to update their beliefs more dramatically than warranted by the actual information content.
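To make this concrete, here is a minimal sketch using the odds form of Bayes' rule, where posterior odds equal prior odds times the likelihood ratio. Evidence is weak when its likelihood ratio is close to 1. The function name `posterior` and the example numbers (a 10% prior, an actual ratio of 1.5, an implied ratio of 20) are illustrative assumptions, not figures from any study.

```python
def posterior(prior: float, likelihood_ratio: float) -> float:
    """Update a probability using the odds form of Bayes' rule."""
    if not 0.0 < prior < 1.0:
        raise ValueError("prior must be strictly between 0 and 1")
    if likelihood_ratio <= 0.0:
        raise ValueError("likelihood ratio must be positive")
    prior_odds = prior / (1.0 - prior)
    posterior_odds = prior_odds * likelihood_ratio
    return posterior_odds / (1.0 + posterior_odds)


# Hypothetical numbers: the audience starts at 10% credence.
prior = 0.10
warranted = posterior(prior, 1.5)   # what the evidence actually supports
induced = posterior(prior, 20.0)    # what the presentation implies

print(f"warranted belief: {warranted:.2f}")
print(f"induced belief:   {induced:.2f}")
```

With these made-up inputs, the warranted belief is about 14% while the induced belief is about 69%. The difference between the two is the over-update that the audience is being pushed into.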

If we wanted to formalize this as a measure of bullshit, we might use something like:

Bullshit Coefficient = (Implied Evidence Strength) / (Actual Evidence Strength)

Values significantly greater than 1 indicate problematic communication that inflates evidence quality. Values near 1 suggest honest communication about evidence limitations. Values less than 1 might indicate excessive modesty or strategic underselling.
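A rough sketch of how the ratio and its three regimes might be computed follows. It assumes both strengths are positive numbers on the same scale (for example, bits of evidence). The choice of scale, the function names, and the 25% tolerance band around 1 are assumptions for illustration, since the formula above doesn't pin any of them down.

```python
def bullshit_coefficient(implied_strength: float, actual_strength: float) -> float:
    """Ratio of implied to actual evidence strength.

    Both inputs are assumed to be positive and measured on the same scale.
    """
    if implied_strength <= 0 or actual_strength <= 0:
        raise ValueError("evidence strengths must be positive")
    return implied_strength / actual_strength


def describe(coefficient: float, tolerance: float = 0.25) -> str:
    """Map a coefficient onto the three rough regimes.

    The tolerance band is an arbitrary illustrative threshold.
    """
    if coefficient > 1 + tolerance:
        return "inflated"
    if coefficient < 1 - tolerance:
        return "undersold"
    return "roughly honest"


# Hypothetical example: a claim presented as if backed by 6 units of
# evidence when only 1.5 units are really there.
print(describe(bullshit_coefficient(6.0, 1.5)))
```

The hard part in practice is estimating the two inputs, not computing the ratio. A sketch like this is only as good as the judgments fed into it.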

This quantitative approach could enable more systematic analysis across domains. Academic papers could include evidence strength assessments alongside their conclusions. Journalism could adopt transparency standards about source quality and uncertainty levels.

The framework also suggests practical detection methods. Bullshit often exhibits characteristic patterns: confident language paired with weak methodology, broad claims supported by narrow evidence, complex phenomena explained through simple stories, or urgent calls to action based on preliminary findings. This seems like the sort of thing that LLMs could help hunt for and distinguish.

Final Assessment

In retrospect, I think that a lot of use of the term “bullshit” is probably bullshit. Bullshit is a highly charged word with what seems like a hard-to-pin-down definition. It comes with large implications, but is often used with minimal evidence.

I find it easy to imagine people using the term bullshit on claims they don’t like. But I’d expect that any precise and meaningful definition and use of the term would call out these exact people’s valued beliefs as well. And it seems safe to expect people to get defensive when their own beliefs are called bullshit.

So we have a term that’s widely known, yet difficult to pin down and perhaps dangerous (in that it gets used for bullshit) when used by the wrong people.

Going forward, I personally plan to continue to use the term in a limited capacity, especially when communicating with audience members who would have trouble with terms like epistemics or rationality. But I expect that it’s best to quickly steer the discussion toward other terminology where possible.