Reflecting on the Last Year — Lessons for EA (opening keynote at EAG)

By Toby_Ord @ 2023-03-24T15:35 (+274)

I recently delivered the opening talk for EA Global: Bay Area 2023. I reflect on FTX, the differences between EA and utilitarianism, and the importance of character. Here's the recording and transcript.

The Last Year

Let’s talk a bit about the last year.

The spring and summer of 2022 were a time of rapid change. A second major donor had appeared, roughly doubling the amount of committed money. There was a plan to donate this new money more rapidly, and to use more of it directly on projects run by people in the EA community. Together, this meant much more funding for projects led by EAs or about effective altruism.

It felt like a time of massive acceleration, with EA rapidly changing and growing in an attempt to find enough ways to use this money productively and avoid it going to waste. This caused a bunch of growing pains and distortions.

When there was very little money in effective altruism, you always knew that the person next to you couldn’t have been in it for the money — so they must have been in it because they were passionate about what they were doing for the world. But that became harder to tell, making trust harder.

And the most famous person in crypto had become the most famous person in EA. So someone whose views and actions were quite radical and unrepresentative of most EAs became the most public face of effective altruism, distorting public perception and even our self-perception of what it meant to be an EA. It also meant that EA became more closely connected to an industry that was widely perceived as sketchy. One that involved a product of disputed social value, and a lot of sharks.

One thing that especially concerned me was a great deal of money going into politics. We’d tried very hard over the previous 10 years to avoid EA being seen as a left or right issue — immediately alienating half the population. But a single large donor had the potential to change that unilaterally.

And EA became extremely visible: people who’d never heard of it all of a sudden couldn’t get away from it, prompting a great deal of public criticism.

From my perspective at the time, it was hard to tell whether or not the benefits of additional funding for good causes outweighed these costs — both were large and hard to compare. Even the people who thought it was worth the costs shared the feelings of visceral acceleration: like a white knuckled fairground ride, pushing us up to vertiginous heights faster than we were comfortable with.

And that was just the ascent.

Like many of us, I was paying attention to the problems involved in the rise, and was blindsided by the fall.

As facts started to become more clear, we saw that the companies producing this newfound income had been very poorly governed, allowing behaviour that appears to me to have been both immoral and illegal — in particular, it seems that when the trading arm had foundered, customers’ own deposits were raided to pay for an increasingly desperate series of bets to save the company.

Even if that strategy had worked and the money was restored to the customers, I still think it would have been illegal and immoral. But it didn’t work, so it also caused a truly vast amount of harm. Most directly and importantly to the customers, but also to a host of other parties, including the members of the EA community and thus all the people and animals we are trying to help.

I’m sure most of you have thought a lot about this over the last few months. I’ve come to think of my own attempts to process this as going through these four phases.

First, there’s: Understanding what happened.

- What were the facts on the ground?

- Were crimes committed?

- How much money have customers lost?

- A lot of this is still unknown.

Second, there’s: Working out the role EA played in it, and the effect it had on EA.

- Is EA to blame? Is EA a victim? —Both?

- How should we think about it when a few members of a very large and informal group do something wrong, when this is against the wishes of almost all the others, who are earnestly and conscientiously working to help the less fortunate?

- Yet we can’t just dismiss this as exceptionally rare and atypical actions. It is an important insight of EA that the total value of something can often be driven by a few outliers. We are happy to claim those atypical cases when they do great amounts of good, but we’ve seen that the harms can be driven by outliers too.

Third, there’s: Working out how best to move forward.

- This is clearly an opportunity to learn how to avoid things like this ever happening again.

- Which lessons should we be learning?

- It’s a time for thinking about new ideas for improving EA and revisiting some old ideas we’d neglected.

Finally, there’s: Getting back to projects for helping make the world a better place.

- This is what EA is fundamentally all about — what inspires us.

- It’s what we ultimately need to return to, and what many of us need to keep on doing, even while others help work out which lessons we should be learning.

Different people here will be at different stages of this processing. And that’s fine.

This morning, I want to explore some ideas about the third step — working out how to best move forward.

Utilitarianism & Effective Altruism

Let me begin by taking you back in time 20 years to the beginnings of effective altruism. In 2003 I arrived in Oxford from Australia, fresh-faced and excited to get to study philosophy at a university that was older than the Aztec empire. Over the next 6 years, I completed a masters and doctorate, specialising in ethics.

One of the things I studied was a moral theory called utilitarianism. At its heart, utilitarianism consists of two claims about the nature of ethics:

1. The only thing that matters, morally speaking, is how good the outcome is.

This is a claim about the structure of ethics. It says that ethics is just about outcomes (or consequences). On its own, we call this claim consequentialism.

Utilitarianism combines this with a claim about the content of ethics:

2. The value of an outcome is the total wellbeing of all individuals.

This was a radical moral theory. It is easy to forget, but one of the most radical ideas was that all beings mattered equally: women as well as men; people of all countries, religions and races; animals.

It was an attempt to free moral thinking from dogma and superstition, by requiring morality to be grounded in actual benefits or losses for individuals.

And it had practical reforming power. It wasn’t just a new way of explaining what we already believed, but could be used to find out what we were getting wrong — and fix it. In doing so, it was ahead of the curve on many issues:

- individual and economic freedom

- separation of church and state

- equal rights for women

- the right to divorce

- decriminalization of homosexuality

- abolition of slavery

- abolition of the death penalty

- abolition of corporal punishment

- respecting animal welfare

Utilitarians spoke out in favour of all these things you see here, long before they were socially accepted.

But for all that, it was, and remains, a very controversial theory. It has no limits on what actions can be taken if they promote the overall good. And no limit on what morality can demand of you. It offers no role for the intentions behind an act to matter, and makes no distinction between not helping people or actively harming them. Moreover, it allows no role for ultimate values other than happiness and suffering.

And ultimately, I don’t endorse it. The best versions are more sophisticated than the critics recognise and better than most people think. But there are still cases where I feel it reaches the wrong conclusion. And it is very brittle — imperfect attempts to follow it can lead to very bad outcomes.

However, there are also key parts of utilitarianism that are not controversial. It is not controversial that outcomes really matter. As the famous opponent of utilitarianism, John Rawls, put it:

‘All ethical doctrines worth our attention take consequences into account in judging rightness. One which did not would simply be irrational, crazy.’

And it is not controversial that a key part of these consequences is the effect on people’s wellbeing.

As I studied utilitarianism, I saw some key things that became clear through that lens, but which weren’t often discussed.

One was the moral importance of producing positive outcomes. Most ethical thought focuses on avoiding harm or mistreatment. It accepts that actively helping people is great, but says very little about how to do it, or that it could be truly important.

And another was caring about how much good we do: the idea that giving a benefit to ten people is ten times more important than giving it to one.

I saw that these two principles had surprising and neglected implications, especially when combined with empirical facts about cost-effectiveness in global health. They showed that the fact that each person living in a rich country could save about 100 lives if they really wanted to is a key part of our moral predicament.

And they showed that when people (or nations) donate to help others, it really matters where they give: it’s the difference between whether they end up fulfilling just 1% of their potential or 100%. It’s almost everything.

These principles were not especially controversial — but they were almost completely neglected by moral thinkers — even those focusing on global health or global poverty. I wondered: could I build a broad tent around these robustly good ideas that the utilitarians had found?

I could leave behind the controversial claims of utilitarianism:

- that only effects on wellbeing mattered

- that these should be simply added together

- and that wellbeing took only the form of happiness and suffering.

Instead, I could allow people to combine the ideas about the importance of doing good at scale with almost any other approach to moral thinking they may have started with. It was a place where one could draw surprisingly strong practical conclusions from surprisingly weak assumptions.

Because of the focus on the positive parts of moral life, I started calling this approach ‘positive ethics’ in 2010 and it was one of the main ingredients of what by 2012 we’d started calling ‘effective altruism’.

Unlike utilitarianism, effective altruism is not a moral theory. It is only a partial guide. It doesn’t say ‘that is all there is to ethics or value’. And it’s compatible with a real diversity of approaches to ethics: side-constraints, options, the distinction between acts and omissions, egalitarianism, allowing the intentions behind an act to matter, and so on.

Indeed, it is compatible with almost anything, just so long as we can still agree that saving a life is a big deal, and saving ten is a ten times bigger deal.

But if a way of helping a lot of people or animals conflicted with these other parts of ethics, you would want to stop and seriously think about what to do. Are there alternatives that get most of the value while avoiding these other problems? And how should one make the right trade-offs?

Effective altruism itself doesn’t have much to say about resolving such conflicts. Like similar guides to action such as environmentalism or feminism, it isn’t trying to be a complete theory. All three point to something important that others are neglecting, but don’t attempt to define how important all other facets of moral life are in comparison so that one can resolve these conflicts. Instead it is taken for granted that people from many different moral outlooks can join in the projects of environmentalism or feminism — or effective altruism.

To the extent that EA has been prescriptive about such things, it is generally to endorse widely held prohibitions on action. For example, in The Precipice I wrote:

Don’t act without integrity. When something immensely important is at stake and others are dragging their feet, people feel licensed to do whatever it takes to succeed. We must never give in to such temptation. A single person acting without integrity could stain the whole cause and damage everything we hope to achieve.

Others have made similar statements. I’d thought they were really quite explicit and clear. But I certainly wish we’d made them even more clear.

Lessons from the Philosophy of Doing Good

I think EA may still have more to learn from the centuries of philosophical thought on producing good outcomes.

A common definition of Utilitarianism is that:

An act is right if and only if it maximises total wellbeing.

There are two key problems with this way of defining it: the focus on maximising; and the sole focus on acts.

Here is a simplified case to help explain why maximisation isn’t quite the right idea, even in theory:

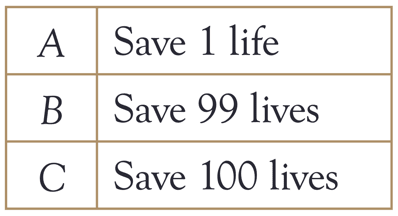

You have three options: A, save 1 life; B, save 99 lives; or C, save 100 lives. I think the big difference is between A and the others. Of these options, A is mediocre, while the other two are great. But maximisation says the big difference is between C and the others. C is maximal and therefore right, while the others are both wrong.

I don’t think that is a good representation of what is going on here morally speaking. Sure, if you can get the maximum — great; that’s even better. But it’s just not that important compared to getting most of the way there. The moral importance at stake is really scalar, not binary.
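One way to make the contrast concrete is a small Python sketch. The option labels and life counts come from the example above; the two scoring functions are assumptions made for illustration and are not part of the talk.

```python
# Illustrative sketch only. The options are from the example in the text;
# both scoring functions are hypothetical ways of modelling the two views.
options = {"A": 1, "B": 99, "C": 100}  # lives saved by each option

best = max(options.values())


def maximising_verdict(lives_saved: int) -> str:
    """Binary view: only the maximal option counts as right."""
    return "right" if lives_saved == best else "wrong"


def scalar_score(lives_saved: int) -> float:
    """Scalar view: fraction of the achievable good that was achieved."""
    return lives_saved / best


for name, lives in options.items():
    print(name, maximising_verdict(lives), f"{scalar_score(lives):.2f}")
```

On the binary view, B falls in the same category as A. On the scalar view, B sits almost exactly where C does, which matches the intuition that the big gap is between A and the rest.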

And here is a case that helps explain why maximisation can be dangerous in practice:

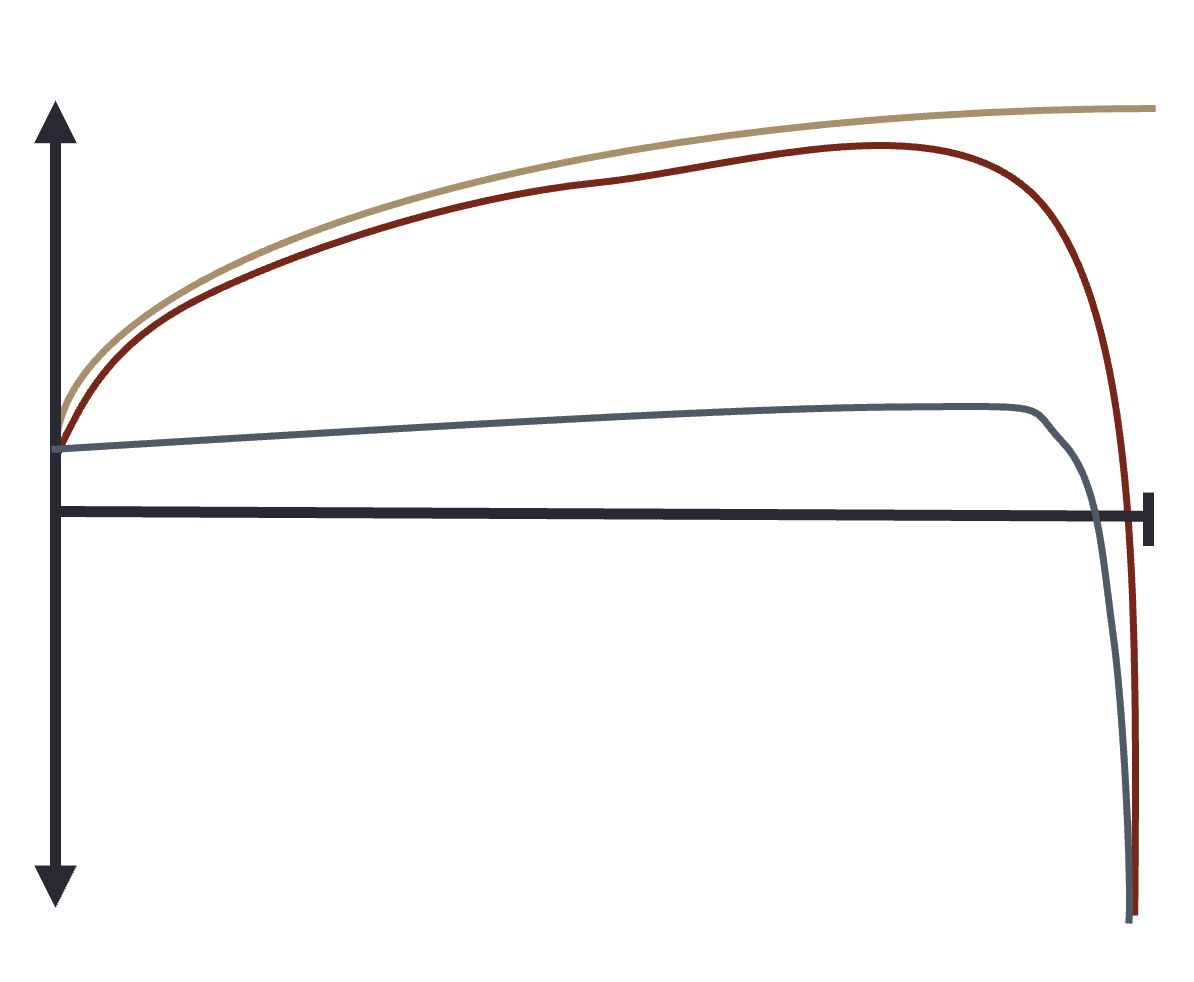

The vertical axis is supposed to represent how good the outcome will be, while the horizontal axis represents how much optimisation pressure is being brought to bear.

First let’s consider what happens if goodness is defined in terms of total happiness and you are optimising for total happiness. This is the gold curve: the situation gets better, but perhaps with diminishing returns. But what happens if you are still optimising for total happiness but we are considering things from a different value system, where happiness matters, but other things do too. This is the red curve: it starts off improving, but as you get close to full optimisation, lots of the other things are lost in order to eke out a bit more happiness. The same would be true for many other value systems (such as the grey curve).

You could think of this as an argument for the fragility of maximisation — if the thing you are maximising is even slightly off, it can go very wrong in the extremes. As Holden Karnofsky put it, in a rather prescient article: ‘Maximization is Perilous’.
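The shape of those curves can be reproduced with a toy model. Everything in the sketch below is an assumption chosen for illustration: the functional forms, the exponent, and the idea of measuring optimisation pressure on a 0 to 1 scale are not taken from the talk or from the figure.

```python
import math

# Toy model, all functional forms hypothetical.
# `pressure` runs from 0 (no optimisation) to 1 (full optimisation for happiness).


def happiness(pressure: float) -> float:
    """Happiness rises with optimisation pressure, with diminishing returns."""
    return math.sqrt(pressure)


def other_goods(pressure: float) -> float:
    """Other things of value survive until pressure gets extreme, then collapse."""
    return 1.0 - pressure ** 6


def happiness_only_value(pressure: float) -> float:
    """Value system where only total happiness matters (the gold curve)."""
    return happiness(pressure)


def pluralist_value(pressure: float) -> float:
    """Value system where happiness matters but other things do too (the red curve)."""
    return happiness(pressure) + other_goods(pressure)


pressures = [i / 20 for i in range(21)]
best_for_happiness = max(pressures, key=happiness_only_value)
best_for_pluralist = max(pressures, key=pluralist_value)

print("happiness-only view peaks at pressure", best_for_happiness)
print("pluralist view peaks at pressure", best_for_pluralist)
print("pluralist value at full optimisation:", pluralist_value(1.0))
```

Under these assumptions the happiness-only view keeps improving all the way to full optimisation, while the pluralist view peaks well before that and then falls back. The exact peak depends entirely on the made-up functional forms; the qualitative point is only that the two views agree for a long stretch and come apart at the extreme.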

This is a problem when you have any moral uncertainty at all. And even if you were dead certain that utilitarianism was right, it would be a problem if you are trying to work together in a community with other people who also want to do good, but have different conceptions of what that means — it is more cooperative and more robust to not go all the way. (Some of you will note that this is the same argument for how things can go wrong if an AI were to truly maximise its reward function.)

As it happens, some of the earliest formulations of utilitarianism, such as John Stuart Mill’s, were scalar rather than maximising, and more recent utilitarians seem to be converging on that being the best way. I think that effective altruism would do well to learn this lesson too. A focus on excellence rather than perfection. A focus on the idea that scale matters: that getting twice as much benefit matters as much as all that you achieved so far, so it is really worth seeing if you can reach that rather than settling for doing quite a lot of good. But not a fixation on the absolute maximum: on getting from 99% to 100%.

How should a utilitarian go about deciding what to do? The most common, unreflective, answer is to make all your decisions by constantly calculating which act has the best consequences. Most people who teach ethics at universities imply that this is part and parcel of what utilitarianism means. And they may then point out that this is self-defeating: due to a range of human imperfections and biases, it is likely to lead to a worse outcome, even by utilitarian measures.

But utilitarian philosophers don’t recommend it. Indeed for over 200 years, they have explicitly called it out as a mistaken interpretation of their theory, and it has come to be known as ‘Naïve Utilitarianism’. Instead, they recommend making decisions in a way that will lead to good outcomes — whatever that method happens to be. If following common-sense morality would lead to the best outcomes, then by all means use that.

They see utilitarianism as an idealised criterion that ultimately grounds what it means for one choice to be better than another: that it leads to a better outcome. But they distinguish this from a decision procedure for day-to-day use.

The same is true for motives. Universal benevolence sounds very utilitarian, but it is not always the best motive. Motives should be assessed in terms of the outcomes they lead to. Even Jeremy Bentham explicitly said this. It is OK to do things out of love for particular people or out of rage at injustice.

More recently, some consequentialist philosophers (those who are committed to outcomes being the only things that matter, but not to what makes an outcome good) have tried to systematize this thinking. They put forward an approach called global consequentialism that says to assess every kind of focal point in terms of the quality of outcome it leads to: acts, decision procedures, motives, character traits, institutions, laws — you name it.

Believe it or not, I’ve actually written a book about this.

This was the topic of my dissertation at Oxford. And while lots of dissertations are a pretty miserable read, I went to a lot of effort to write it as a readable book (albeit an academic book!). The idea was to publish it when I finished, but one month after I finished my thesis I met a student called William MacAskill, and got distracted.

I’m still distracted, and not sure when that will end, so as of this morning, I put the full text up on my website.

It starts by observing that the three main traditions in Western philosophy each emphasize a different focal point:

Consequentialism emphasises Acts.

And from a certain perspective it can seem like that covers everything in ethics: every single time you are deciding what to do you are assessing acts. Isn’t ethics about deciding what to do?

Deontology emphasises Rules.

And from a certain perspective it can seem like that covers everything in ethics: ethics is a system of rules governing action. Rules are closely related to what we called ‘decision procedures’ a moment ago. And isn’t ethics about how we should decide what to do? (i.e. which decision procedure to follow?)

Virtue ethics emphasises Character.

And from a certain perspective it can seem like that is what ethics is all about: what makes someone a good person? How can I live a more virtuous life?

But interestingly, these don’t really have to be in disagreement with each other. It is almost like they are talking past each other. So I thought: could there be a way of unifying these three traditions?

One thing I had to do was to provide a coherent theory of global consequentialism — one that ties up some loose ends in the earlier formulations and is logically sound. Then armed with that, I showed how it has the resources to capture many of the key intuitions about rules and character that make deontology and virtue ethics compelling. To use an analogy of my supervisor, Derek Parfit, it was like all three traditions had been climbing the same mountain, but from different sides.

If Naïve Utilitarianism leads to bad outcomes, what kind of decision procedure leads to good outcomes? Here’s my best guess:

- Stay close to common-sense on almost everything.

It encodes the accumulated wisdom of thousands of years of civilisation (and hundreds of thousands of years before that). Indeed, even when the stated reasons for some rule are wrong, the rule itself can still be right — preserved because it leads to good outcomes, even if we never found out why.

- Don’t trust common-sense morality fully — but trust your deviations from it even less.

It has survived thousands of years; your clever idea might not survive even one.

- Explore various ways common-sense might be importantly wrong.

Discuss them with friends and colleagues. There have been major changes to common-sense morality before, and finding them is extremely valuable.

- Make one or two big bets.

For example mine were that giving to the most cost-effective charities is a key part of a moral life and that avoiding existential risk is a key problem of our time.

- But then keep testing these ideas.

Listen to what critics say — most new moral ideas are wrong.

- And don’t break common-sense rules to fulfil your new ideas.

What about character? We can assess people’s characters (and individual character traits) by their tendency to produce good outcomes. The philosopher Julia Driver pioneered this approach.

We can go through various character traits and think about whether they are conducive to good outcomes, calling those that are conducive ‘virtues’. This will pick out character traits that have much in common with the classical conception of virtues. They are not knowable by pure reason or introspection, but require experience.

I think the importance of character is seriously neglected in EA circles. Perhaps one reason is that unlike many other areas, we don’t have comparative advantage when it comes to identifying virtues. This means that we should draw on the accumulated wisdom as a starting point.

Here are some virtues to consider.

There are virtues that help anyone achieve their aims, even if they were living on a desert island. These are less distinctively moral, but worth having. Things like: patience, determination, and prudence.

There are virtues relating to how we interact with others. Such as: generosity, compassion, humility, integrity, and honesty. I think we’re pretty good at generosity and compassion, actually. And sometimes good at humility too.

I think integrity deserves more focus than we give it. It is about consistently living up to your values; acting in a principled way in private — living up to your professed values even when no-one else is there to see. One of the features of integrity is that it allows others to trust your actions, just as honesty allows them to trust your words.

Another interesting set of character traits, not always identified as virtues, are things like: authenticity, earnestness, and sincerity. I think these are character traits we could stand to see more of in EA.

An earnest disposition allows your motivation to be transparent to others, so that everyone can see the values that guide you. I think most of us who are drawn to effective altruism start with a lot of earnestness, but then lose some over time: either to wanting to join the cool kids in their jaded cynicism, or to thinking it must be better to wear a poker face and choose your words carefully.

But the thing about earnestness is that it forces you to be honest and straightforward. If someone talks of something while brimming with earnest enthusiasm, it is transparent to all that they care about it. That it isn’t some kind of ploy. I think this is especially important for EA. Lots of people think we must have some kind of ulterior motives. But we often don’t. If we are cynical or detached, people can’t know either way. But earnestness and unabashed excitement is something that is hard to fake, so helps prove good intent.

Armed with this focus on character, one obvious thing to do is to try to inculcate such virtues in ourselves: to put effort into developing and maintaining these character traits. But that is not the whole story. We should also try to promote and reward good character in others. And we should avoid vouching for people with flawed characters, or joining their projects. Even if you aren’t sure that someone has a flawed character, if you have doubts, be careful. And we should put more focus on character into our community standards.

Is character really as important as other focal points, like selecting causes or interventions?

For some choices, the best options are hundreds of times better than the typical ones; thousands of times better than poor ones. This was one of the earliest results of EA: by really focusing on finding the very best charities, we can have outsized impact.

Am I saying that the same is true for character? That instead of trying to find the most amazing charity, we would do just as well if EA was all about having the most amazing character possible?

No. It’s important in a different way.

I think a really simple model of the impact of character goes like this:

The inherent quality of character doesn’t vary anywhere near as much. Imagine a factor that ranges from something like –5 to +5, where 1 is the typical value. And this factor acts as a multiplier for impact. The bigger the kind of impact someone is planning, the more important it is that they have good character. If they have an unusually good character, they might be able to create substantially more impact. But if they have a particularly flawed character, the whole thing could go into reverse. This is especially true if their impact goes via an indirect route like first accumulating money or power.
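The multiplier model can be written down in a few lines of Python. The range of –5 to +5 and the typical value of 1 come from the description above; the function name, the sample project scales, and the sample character factors are hypothetical.

```python
# Sketch of the simple multiplier model described above.
# The [-5, 5] range is from the text; all names and sample numbers are hypothetical.


def realised_impact(planned_scale: float, character_factor: float) -> float:
    """Impact actually produced: the planned scale times the character factor."""
    if not -5.0 <= character_factor <= 5.0:
        raise ValueError("character_factor is assumed to lie in [-5, 5]")
    return planned_scale * character_factor


# Compare a small project with a large one across three character factors.
for planned_scale in (10, 1_000):
    for character_factor in (-2.0, 1.0, 3.0):
        impact = realised_impact(planned_scale, character_factor)
        print(f"scale={planned_scale:>5}  character={character_factor:+.1f}  impact={impact:+.1f}")
```

Because the factor multiplies rather than adds, the same flawed character does a hundred times more damage on the larger project, which is why character matters more the bigger the planned impact.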

Now, charities can also end up having negative cost-effectiveness — where the more money you donate, the worse things are. But in our community we spend so much time on evaluating them, that we can usually avoid the negative ones and are trying to distinguish the good from the great. But we don’t have anything like the same focus on character, so we run a much greater risk of having people whose character is a negative multiplier on their impact. And the higher impact their work is, the more that matters.

Let’s take a moment to return to what happened at FTX last year.

I don’t think anyone fully understands what motivated Sam (or anyone else who was involved). I don’t know how much of it was greed, vanity, pride, shame, or genuinely trying to do good. But one thing we do know is that he was already a committed utilitarian before he even heard about EA.

And it increasingly seems he was that most dangerous of things — a naive utilitarian — making the kind of mistakes that philosophers (including the leading utilitarians) have warned of for centuries. He had heard of the various sophistications needed to get the theory to work but seems to have dismissed them as being ‘soft’.

If I’m right, then what he thought of as hardcore bullet biting bravado was really just dangerous naivety. And the sophistications that he thought were just a sop to conventional values were actually essential parts of the only consistent form of the theory he said he endorsed.

Conclusion

I hope it was useful to see some of the history, and how effective altruism was in part designed to capture some of the uncontroversially good ideas that utilitarians had found, without the controversial commitments — that doing good at scale really matters (but not that nothing else matters).

It has been a hard year — and we haven’t fully sorted through its import. But I’m becoming excited about some of these new ways of moving forward and improving the ideas and community of effective altruism. And I’m especially excited about getting back to the ultimate work of helping those who need us.

Toby_Ord @ 2023-03-24T16:05 (+59)

I should note that I don’t see a stronger focus on character as the only thing we should be doing to improve effective altruism! Indeed, I don’t even think it is the most important improvement. There have been many other suggestions for improving institutions, governance, funding, and culture in EA that I’m really excited about. I focused on character (and decision procedures) in my talk because it was a topic I hadn’t seen much in online discussions about what to improve, because I have some distinctive expertise to impart, and because it is something that everyone in EA can work on.

Nathan Young @ 2023-03-25T16:59 (+42)

This feels like a missed opportunity.

My sense is that this was an opportunity to give a "big picture view" rather than note a particular underrated aspect.

If you think there were more important improvements, why not say them, at least as context, in one of the largest forums on this topic?

Thanks for your work :)

jackva @ 2023-03-25T17:18 (+18)

I had the same impression, that a big picture view talk was expected but that the actual talk was focused on a single issue and in a fairly philosophical framing.

So I thought it was an excellent talk but it still gave me a strange feeling as the dominant and first EAG response to what happened (I know this made sense given other sessions covering other aspects, but I am unsure it will be perceived this way (one slice of the puzzle) given its keynote character).

MichaelPlant @ 2023-03-27T10:26 (+12)

I want to second this being a missed opportunity to talk about wider issues, including governance (I tweeted so at the time). Listeners are likely to interpret, from your focus on character, and given your position as a leading EA speaking on the most prominent platform in EA - the opening talk at EAG - that this is all effective altruists should think about. But we don't try to stop crimes just by encouraging people to have good character. And, if the latest Time article is to be believed, there was lots of evidence of SBF's bad character, but this seemingly wasn't sufficient to avert or mitigate disaster.

I still find it surprising and disappointing that there has been no substantive public discussion of governance reform from EA leaders (I keep asking people to point me to some, but no one has!). At the very least you'd have expected someone to do the normal academic thing of "we considered all these options, but we ruled them out, which is why we're sticking with the status quo".

matthew.vandermerwe @ 2023-03-27T16:29 (+45)

Listeners are likely to interpret, from your focus on character, and given your position as a leading EA speaking on the most prominent platform in EA - the opening talk at EAG - that this is all effective altruists should think about.

Really? I don't think I've ever encountered someone interpreting the topic of an EAG opening talk as being "all EAs should think about".

MichaelPlant @ 2023-03-27T17:08 (+3)

Maybe I should have phrased what I'd said somewhat differently, but I expect EAs to very heavily take their cues from what established community leaders say, particularly when they speak in the 'prime time' slots.

Jonathan Mannhart @ 2023-03-25T14:19 (+15)

I value the “it is something that everyone in EA can work on“-sentiment.

Particularly in these times, I think it is excellent to find things that (1) seem robustly good and (2) we can broadly agree on as a community to do more of. It can help alleviate feelings of powerlessness (and help with this is, I believe, one of the things we need.)

This seems to be one of those things. Thanks!

NewLeaf @ 2023-03-25T12:18 (+7)

I'm really grateful that you gave this address, especially with the addition of this comment. Would you be willing to say more about which other suggestions for improvement you would be excited to see adopted in light of the FTX collapse and other recent events? For the reasons I gave here, I think it would be valuable for leaders in the EA community to be talking much more concretely about opportunities to reduce the risk that future efforts inspired by EA ideas might cause unintended harm.

CristinaSchmidtIbáñez @ 2023-03-24T17:34 (+21)

The bigger the kind of impact someone is planning, the more important it is that they have a good character.

Loved this line. And I second Rockwell's opinion: It's one of my favorite presentations I've seen at an EAG.

Rockwell @ 2023-03-24T17:29 (+16)

Thanks for sharing this, including the text! It was one of my favorite presentations I've seen at an EAG. Also, I really loved the line, "And it increasingly seems he was that most dangerous of things — a naive utilitarian," and I'm waiting for the memes to roll in!

JP Addison @ 2023-03-26T16:43 (+15)

Hi Toby, thanks so much for posting a transcript of this talk, and for giving the talk in the first place. It has been one of my key desires in community building to see more leaders engaging with the EA community post-FTX. This talk strikes a really good tone, and I dunno, impact measures are hard, but I suspect it was pretty important for how good the vibes at EAG Bay Area felt overall, and I'm excited for the Forum community to read it.

I overall like the content of the talk, but will be expressing some disagreements. First, though, things I liked about the content: I conceptually love the "strive for excellence" frame, and the way you made "maximization is perilous" much more punchy for me than Holden's post did. I see the core of your talk as being about your dissertation. I overall think it was a valuable contribution to my models.

The core of my disagreement is about how we should think about naive utilitarianism/consequentialism. I'd like to introduce some new terms, which I find helpful when talking to people about this subject:

- Myopic consequentialism — e.g.: shoplifting to save money. Everyone can pretty easily see how this is going to go wrong pretty fast. It may be slightly nontrivial to work out how ("Nobody can see me! I'm really confident!"), but basically everyone agrees this is the bad kind of utilitarianism.

- Base-level consequentialism — My read is that you, and most people who ground their morality in a fundamentally consequentialist way, start from here. It's the consequentialism you see in intro philosophy definitions. Good consequences are good.

- Multi-layered consequentialism — We can start adding things on to our base-level consequentialism, to rescue it from the dangers of myopia that come from unrefined base-level consequentialism as a decision criterion.

I know many people who are roughly consequentialist. Everyone must necessarily use some amount of heuristics to guide decisions, instead of trying to calculate out the consequences of each breath they take. But in my experience, people vary substantially in how much they reify their heuristics into the realm of morality vs simply being useful ways to help make decisions.

For example, many people will have a pretty bright line around lying. Some might elevate that line into a moral dictum. Others might have it as a “really solid heuristic”, maybe even one that they commit to never violating. But lying is a pretty easy case. There’s a dispositional approach to “how much of my decision-making is done by thinking directly about consequences.”

My best guess is that Toby will be too quick to create ironclad rules for himself, or pay too much attention to how a given decision fits in with too many virtues. But I am really uncertain whether I actually disagree. The disagreement I have is more about, "how can we think about and talk about adding layers to our consequentialism", and, crucially, how much respect can we have for someone who is closer to the base-level.

I'm not suggesting that we be tolerant of people who lie or cheat or have very bad effects on important virtues in our community. In fact I want to say that the people in my life who are clear enough with themselves and with me about the layers on top of their consequentialism that they don't hold sacred, are generally very good models of EA virtues, and very easy to coordinate with, because they can take the other values more seriously.

Holly Morgan @ 2023-03-26T11:04 (+12)

"A focus on excellence rather than perfection."

I really like this.

(And I feel like it completes the aphorism "Perfect is the enemy of the good," which I believe you told me in 2010 when I was overstretching myself.)

Geoffrey Miller @ 2023-03-24T18:48 (+12)

Toby - thanks for sharing this wonderful, wise, and inspiring talk.

I hope EAs read it carefully and take it seriously.

HakonHarnes @ 2023-03-26T22:15 (+8)

To echo the general sentiment, I also want to express my gratitude and appreciation for this talk. I found it warm, inclusive and positive. Thanks!

AïdaLahlou @ 2026-02-19T10:57 (+1)

Strongly upvoted this. It's three years old but as relevant now as ever.

I really liked the nuance captured about the perils of maximisation, also very clearly expressed by @Holden Karnofsky in this post: EA is about maximization, and maximization is perilous

All of it really resonates with what I conceive to be the best way to do Effective Altruism, especially the bits about why naive utilitarianism is bad and about being respectful of common sense morality!

Clare G @ 2025-07-15T21:27 (+1)

I am interested to know more about this:

'I think the importance of character is seriously neglected in EA circles. Perhaps one reason is that unlike many other areas, we don’t have comparative advantage when it comes to identifying virtues. This means that we should draw on the accumulated wisdom as a starting point.'