Samotsvety Nuclear Risk Forecasts — March 2022

By NunoSempere, Misha_Yagudin, elifland @ 2022-03-10T18:52 (+155)

Thanks to Misha Yagudin, Eli Lifland, Jonathan Mann, Juan Cambeiro, Gregory Lewis, @belikewater, and Daniel Filan for forecasts. Thanks to Jacob Hilton for writing up an earlier analysis from which we drew heavily. Thanks to Clay Graubard for sanity checking and to Daniel Filan for independent analysis. This document was written in collaboration with Eli and Misha, and we thank those who commented on an earlier version.

Overview

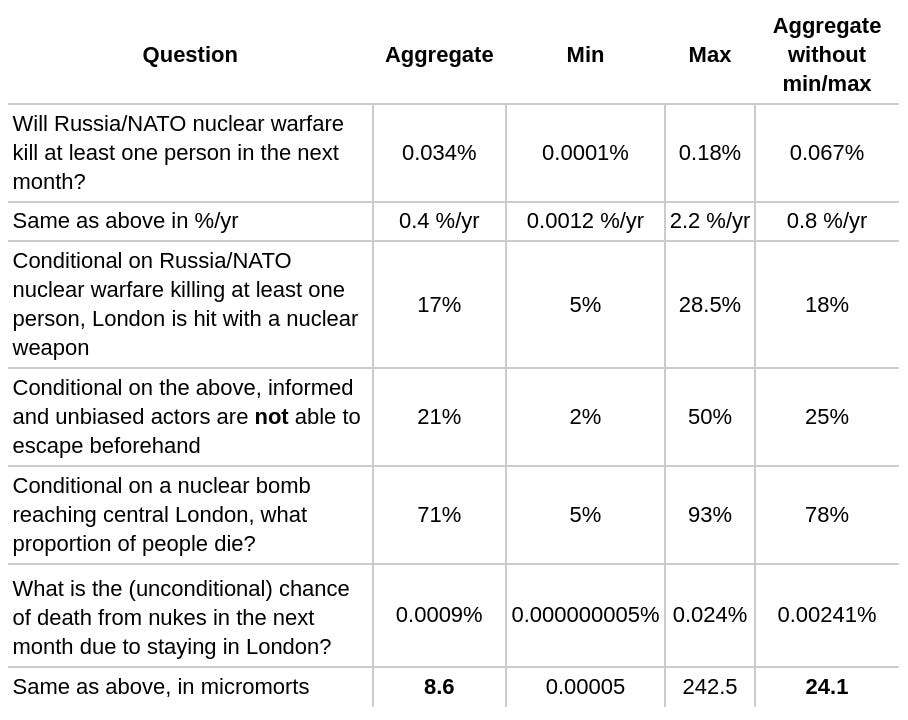

In light of the war in Ukraine and fears of nuclear escalation[1], we turned to forecasting to assess whether individuals and organizations should leave major cities. We aggregated the forecasts of 8 excellent forecasters for the question What is the risk of death in the next month due to a nuclear explosion in London? Our aggregate answer is 24 micromorts (7 to 61) when excluding the most extreme on either side[2]. A micromort is defined as a 1 in a million chance of death. Chiefly, we have a low baseline risk, and we think that escalation to targeting civilian populations is even more unlikely.

For San Francisco and most other major cities[3], we would forecast 1.5-2x lower probability (12-16 micromorts). We focused on London as it seems to be at high risk and is a hub for the effective altruism community, one target audience for this forecast.

Given an estimated 50 years of life left[4], this corresponds to ~10 hours lost. The forecaster range without excluding extremes was <1 minute to ~2 days lost. Because of productivity losses, hassle, etc., we are currently not recommending that individuals evacuate major cities.
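The conversion from micromorts to expected hours lost is plain arithmetic, and a minimal sketch of it follows. The function name and the 365-day year are illustrative assumptions; the 24 micromorts and 50 remaining years are the figures quoted above.

```python
HOURS_PER_YEAR = 365 * 24  # assumes a 365-day year, as in the Squiggle models in Appendix B


def expected_hours_lost(micromorts: float, remaining_years: float) -> float:
    """Expected hours of life lost for a given risk of death, in micromorts."""
    probability_of_death = micromorts * 1e-6  # 1 micromort = 1-in-a-million chance of death
    return probability_of_death * remaining_years * HOURS_PER_YEAR


aggregate_hours = expected_hours_lost(24, 50)
low_hours = expected_hours_lost(7, 50)
high_hours = expected_hours_lost(61, 50)
print(f"{aggregate_hours:.1f} hours (range {low_hours:.1f} to {high_hours:.1f})")
```

The same function can be reused with a different remaining life expectancy, which is the main personal parameter in this calculation.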

Methodology

We aggregated the forecasts from eight excellent forecasters between the 6th and the 10th of March. Eli Lifland, Misha Yagudin, Nuño Sempere, Jonathan Mann and Juan Cambeiro[5] are part of Samotsvety, a forecasting group with a good track record — we won CSET-Foretell’s first two seasons, and have great track records on various platforms. The remaining forecasters were Gregory Lewis[6], @belikewater, and Daniel Filan, who likewise had good track records.

The overall question we focused on was: What is the risk of death in the next month[7] due to a nuclear explosion in London? We operationalized this as: “If a nuke does not hit London in the next month, this resolves as 0. If a nuke does hit London in the next month, this resolves as the percentage of people in London who died from the nuke, subjectively down-weighted by the percentage of reasonable people that evacuated due to warning signs of escalation.” We roughly borrowed the question operationalization and decomposition from Jacob Hilton.

We broke this question down into:

1. What is the chance of nuclear warfare between NATO and Russia in the next month?

2. What is the chance that escalation sees central London hit by a nuclear weapon conditioned on the above question?

3. What is the chance of not being able to evacuate London beforehand?

4. What is the chance of dying if a nuclear bomb drops in London?

However, different forecasters preferred different decompositions. In particular, there were some disagreements about the odds of a tactical strike in London given a nuclear exchange with NATO, which led to some forecasters preferring to break down question 2 into multiple steps. Other forecasters also preferred to first consider the odds of direct Russia/NATO confrontation, and then the odds of nuclear warfare given that.
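As a rough illustration of how the four-question decomposition combines, the sketch below multiplies the conditional probabilities together. The function and parameter names are hypothetical; the input values are the point estimates from the simple model in Appendix B, not a separate forecast.

```python
def micromorts_from_decomposition(
    p_exchange: float,       # NATO/Russia nuclear exchange in the next month
    p_london_hit: float,     # London hit, given an exchange
    p_not_evacuated: float,  # not able to evacuate beforehand, given London is hit
    p_die_if_hit: float,     # dying, given presence in London when it is hit
) -> float:
    """Chain the conditional probabilities and express the result in micromorts."""
    probability_of_dying = p_exchange * p_london_hit * p_not_evacuated * p_die_if_hit
    return probability_of_dying * 1e6


# Point estimates taken from the simple Squiggle model in Appendix B.
risk = micromorts_from_decomposition(0.00067, 0.18, 0.25, 0.78)
print(f"{risk:.1f} micromorts")
```

Forecasters who preferred a finer decomposition would simply add more factors to the product, for example splitting the second question into several steps.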

Our aggregate forecast

We use the aggregate with min/max removed as our all-things-considered forecast for now given the extremity of outliers. We aggregated forecasts using the geometric mean of odds[8].
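For readers unfamiliar with this pooling method, here is a minimal sketch of the geometric mean of odds with the minimum and maximum forecasts dropped. The helper names are illustrative and the eight input probabilities are made-up placeholders, not the forecasters' actual numbers.

```python
import math


def to_odds(p: float) -> float:
    return p / (1 - p)


def from_odds(odds: float) -> float:
    return odds / (1 + odds)


def geometric_mean_of_odds(probabilities: list[float]) -> float:
    """Pool probabilities by averaging their log-odds, then convert back."""
    log_odds = [math.log(to_odds(p)) for p in probabilities]
    return from_odds(math.exp(sum(log_odds) / len(log_odds)))


def trimmed_geometric_mean_of_odds(probabilities: list[float]) -> float:
    """Drop the single lowest and highest forecast before pooling."""
    if len(probabilities) < 3:
        raise ValueError("need at least three forecasts to trim min and max")
    return geometric_mean_of_odds(sorted(probabilities)[1:-1])


# Hypothetical forecasts (probability of death), for illustration only.
hypothetical = [1e-9, 7e-6, 15e-6, 20e-6, 30e-6, 45e-6, 61e-6, 110e-6]
print(geometric_mean_of_odds(hypothetical))
print(trimmed_geometric_mean_of_odds(hypothetical))
```

With an extreme low outlier like the placeholder above, the untrimmed pool is pulled down sharply, which is the kind of effect that motivates removing the min and max.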

Note that we are forecasting one month ahead and it’s quite likely that the crisis will get less acute/uncertain with time. Unless otherwise indicated, we use “monthly probability” for our and readers' convenience.

Comparisons with previous forecasts

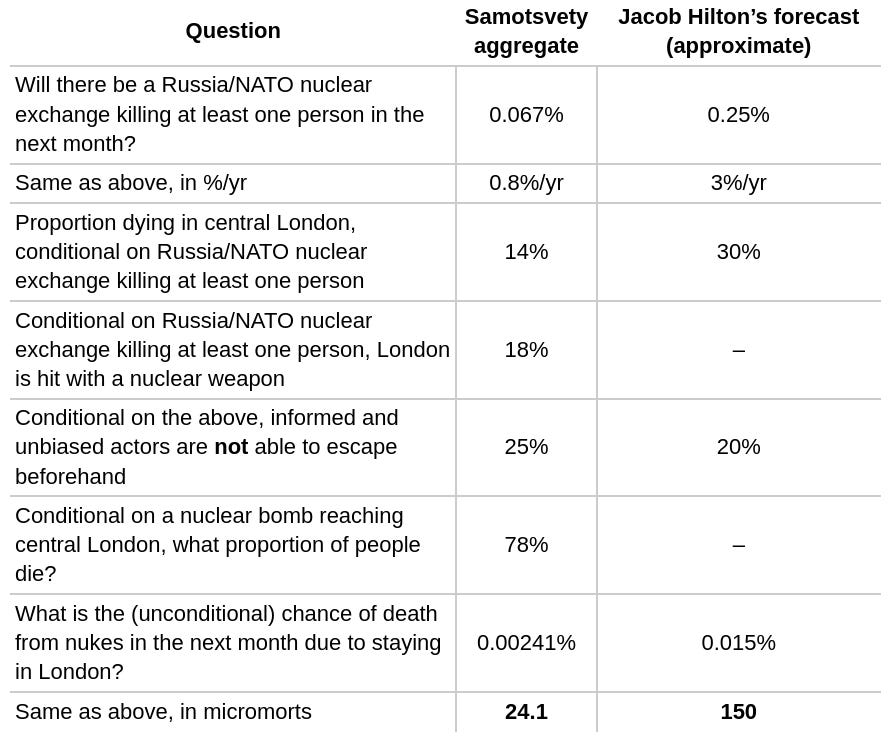

We compared the decomposition of our forecast to Jacob Hilton’s to understand the main drivers of the difference. We compare to Jacob’s revised forecast he made after reading comments on his document. Note that Jacob forecasted on the time horizon of the whole crisis then estimated 10% of the risk was incurred in the upcoming week. We guess that he would put roughly 25% over the course of a month which we forecasted (adjusting down some from weekly * 4), and assume so in the table below. The numbers we assign to him are also approximate in that our operationalizations are a bit different than his.

We are ~an order of magnitude lower than Jacob. This is primarily driven by (a) a ~4x lower chance of a nuclear exchange in the next month and (b) a ~2x lower chance of dying in London, given a nuclear exchange.

(a) may be due to having a lower level of baseline risk before adjusting up based on the current situation. For example, while Luisa Rodríguez’s analysis puts the chance of a US/Russia nuclear exchange at .38%/year, we think this seems too high for the post-Cold War era after new de-escalation methods have been implemented and lessons have been learnt from close calls. Additionally, we trust the superforecaster aggregate the most out of the estimates aggregated in the post.

(b) is likely driven primarily by a lower estimate of London being hit at all given a nuclear exchange. Commenters mentioned that targeting London would be a good example of a decapitation strike. However, we consider it less likely that the crisis would escalate to targeting massive numbers of civilians, and in each escalation step, there may be avenues for de-escalation. In addition, targeting London would invite stronger retaliation than meddling in Europe, particularly since the UK, unlike countries in Northern Europe, is a nuclear state.

A more likely scenario might be Putin saying that if NATO intervenes with troops, he would consider Russia to be "existentially threatened" and that he might use a nuke if they proceed. If NATO calls his bluff, he might then deploy a small tactical nuke on a specific military target while maintaining lines of communication with the US and others using the red phone.

Appendix A: Sanity checks

We commissioned a sanity check from Clay Graubard, who has been following the situation in Ukraine more closely. His somewhat rough comments can be found here.

Graubard estimates the likelihood of nuclear escalation in Ukraine to be fairly low (3%: 1 to 8%), but didn’t have a nuanced opinion on escalation beyond Ukraine to NATO (a very uncertain 55%: 10 to 90%). Taking his estimates at face value, this gives a 1.3%/yr chance of nuclear warfare between Russia and NATO, which is in line with our 0.8%/yr estimate.

He also highlighted further sources of uncertainty, like the likelihood that the US would send anti-long range ballistic missile interceptors, which the UK itself doesn’t have. He also pointed out that in case of a nuclear bomb dropping in a highly populated city, Putin might choose to give a warning.

Daniel Filan also independently wrote up his own thoughts on the matter. His more engagingly written reasoning can be found here (shared with permission); he arrives at an estimate of ~100 micromorts. We also incorporated his forecasts into our current aggregate.

We also got reviewed by a nuclear expert. Their estimate is an order of magnitude larger, but as we point out in a response, this comes down to thinking that “core EAs” would not be able to evacuate on time (3x difference) and using suboptimal aggregation methods for Luisa Rodríguez’s collection of estimates (another 1.5x to 3x reduction). After the first adjustment, their forecast is within our intra-group range; after the second adjustment, it is really close to our estimate. Some of our forecasters found this reassuring (but updated somewhat on other disagreements). Given that experts are generally more pessimistic and given selection effects, we think overall that the review is a good sign for us.

Update 2022/05/11: Zvi Mowshowitz also gives his own estimates, and ends up between the Samotsvety forecast and the above-mentioned nuclear expert.

Update 2022/05/01: Peter Wildeford looks at the chance of nuclear war.

Appendix B: Tweaking our forecast

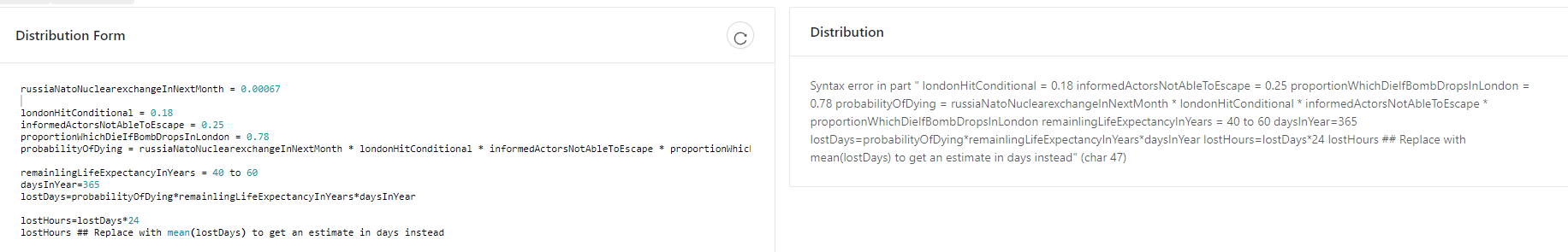

Here are a few models one can play around with by copy-and-pasting them into the Squiggle alpha.

Simple model

russiaNatoNuclearexchangeInNextMonth = 0.00067

londonHitConditional = 0.18

informedActorsNotAbleToEscape = 0.25

proportionWhichDieIfBombDropsInLondon = 0.78

probabilityOfDying = russiaNatoNuclearexchangeInNextMonth * londonHitConditional * informedActorsNotAbleToEscape * proportionWhichDieIfBombDropsInLondon

remainlingLifeExpectancyInYears = 40 to 60

daysInYear=365

lostDays=probabilityOfDying*remainlingLifeExpectancyInYears*daysInYear

lostHours=lostDays*24

lostHours ## Replace with mean(lostDays) to get an estimate in days instead

Overcomplicated models

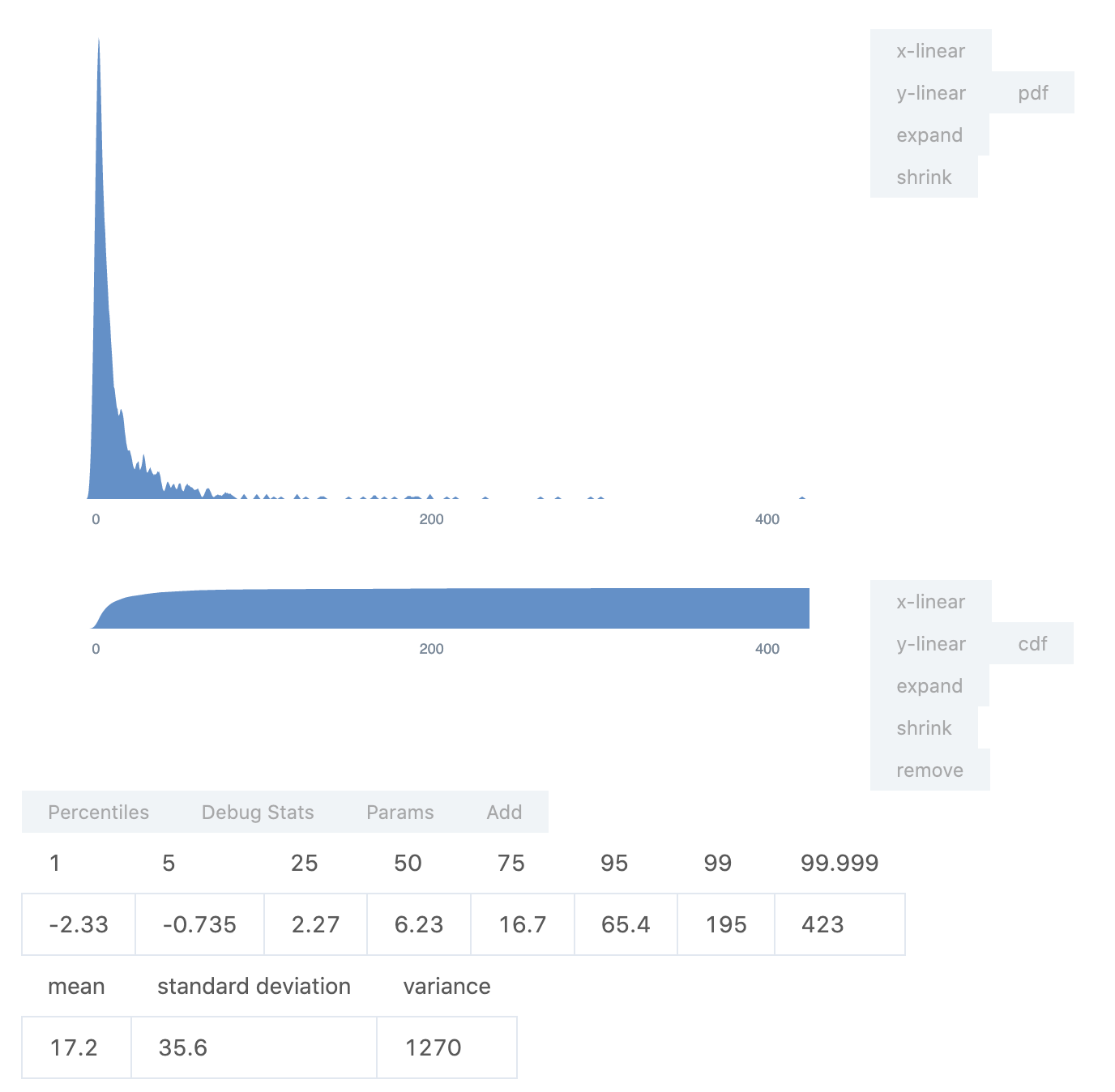

These models have the advantage that the number of informed actors not able to escape, and the proportion of Londoners who die in the case of a nuclear explosion are modelled by ranges rather than by point estimates. However, the estimates come from individual forecasters, rather than representing an aggregate (we weren’t able to elicit ranges when our forecasters were convened).
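To show what modelling an input as a range does to the output, here is a rough Monte Carlo sketch in Python. It assumes, as we understand Squiggle's `a to b` syntax, that a range denotes a lognormal distribution whose 5th and 95th percentiles are `a` and `b`. The inputs mix the simple model's point estimates with ranges of the kind used in the individual models, so the result is illustrative rather than anyone's forecast. Like the Squiggle versions, this allows sampled "probabilities" to slightly exceed 1.

```python
import math
import random

Z_95 = 1.6448536269514722  # 95th percentile of the standard normal distribution


def lognormal_from_90ci(low: float, high: float, rng: random.Random) -> float:
    """Sample a lognormal whose 5th/95th percentiles are `low` and `high`."""
    mu = (math.log(low) + math.log(high)) / 2
    sigma = (math.log(high) - math.log(low)) / (2 * Z_95)
    return rng.lognormvariate(mu, sigma)


def sample_lost_hours(rng: random.Random) -> float:
    p_exchange = 0.00067   # point estimate from the simple model
    p_london_hit = 0.18    # point estimate from the simple model
    p_not_escaped = lognormal_from_90ci(0.2, 0.8, rng)  # range, illustrative
    p_die = lognormal_from_90ci(0.6, 1.0, rng)          # range, illustrative
    years_left = lognormal_from_90ci(40, 60, rng)
    return p_exchange * p_london_hit * p_not_escaped * p_die * years_left * 365 * 24


rng = random.Random(0)  # fixed seed so the run is reproducible
samples = sorted(sample_lost_hours(rng) for _ in range(20_000))
mean_hours = sum(samples) / len(samples)
median_hours = samples[len(samples) // 2]
print(f"median {median_hours:.1f} h, mean {mean_hours:.1f} h")
```

Because the product of lognormals is right-skewed, the mean sits above the median, which is one reason to report which summary statistic is being quoted.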

Nuño Sempere

firstYearRussianNuclearWeapons = 1953

currentYear = 2022

laplace(firstYear, yearNow) = 1/(yearNow-firstYear+2)

laplacePrediction= (1-(1-laplace(firstYearRussianNuclearWeapons, currentYear))^(1/12))

laplaceMultiplier = 0.5 # Laplace tends to overestimate stuff

russiaNatoNuclearexchangeInNextMonth=laplaceMultiplier*laplacePrediction

londonHitConditional = 0.16 # personally at 0.05, but taking the aggregate here.

informedActorsNotAbleToEscape = 0.2 to 0.8

proportionWhichDieIfBombDropsInLondon = 0.6 to 1

probabilityOfDying = russiaNatoNuclearexchangeInNextMonth*londonHitConditional*informedActorsNotAbleToEscape*proportionWhichDieIfBombDropsInLondon

remainlingLifeExpectancyInYears = 40 to 60

daysInYear=365

lostDays=probabilityOfDying*remainlingLifeExpectancyInYears*daysInYear

lostHours=lostDays*24

lostHours ## Replace with mean(lostDays) to get an estimate in days instead
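The first few lines of the model above apply Laplace's rule of succession and then convert an annual probability to a monthly one. A small Python sketch of just that step, with illustrative function names, is:

```python
def laplace_annual_probability(first_year: int, current_year: int) -> float:
    """Rule of succession with zero events observed so far: 1 / (n + 2)."""
    return 1 / (current_year - first_year + 2)


def annual_to_monthly(p_annual: float) -> float:
    """Monthly probability that compounds to `p_annual` over 12 months."""
    return 1 - (1 - p_annual) ** (1 / 12)


p_annual = laplace_annual_probability(1953, 2022)  # 1/71
p_monthly = annual_to_monthly(p_annual)
laplace_multiplier = 0.5  # the model's discount for Laplace overestimating
adjusted_monthly = laplace_multiplier * p_monthly
print(f"annual {p_annual:.4%}, monthly {p_monthly:.4%}, adjusted {adjusted_monthly:.4%}")
```

The adjusted monthly figure comes out at roughly 0.06%, in the same region as the 0.00067 used in the simple model.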

Eli Lifland

Note that this model was made very quickly out of interest and I wouldn’t be quite ready to endorse it as my actual estimate (my current actual median is 51 micromorts so ~21 lost hours).

russiaNatoNuclearexchangeInNextMonth=.0001 to .003

londonHitConditional = .1 to .5

informedActorsNotAbleToEscape = .1 to .6

proportionWhichDieIfBombDropsInLondon = 0.3 to 1

probabilityOfDying = russiaNatoNuclearexchangeInNextMonth*londonHitConditional*informedActorsNotAbleToEscape*proportionWhichDieIfBombDropsInLondon

remainingLifeExpectancyInYears = 40 to 60

daysInYear=365

lostDays=probabilityOfDying*remainingLifeExpectancyInYears*daysInYear

lostHours=lostDays*24

lostHours ## Replace with mean(lostDays) to get an estimate in days instead

Footnotes

- ^

- ^

3.1 (0.0001 to 112.5) including the most extreme to either side.

- ^

Excluding those with military bases

- ^

This could be adjusted to consider life expectancy and quality of life conditional on nuclear exchange

- ^

who is also a Superforecaster®

- ^

Likewise a Superforecaster®

- ^

By April the 10th at the time of publication

- ^

See When pooling forecasts, use the geometric mean of odds. Since then, the author has proposed a more complex method that we haven’t yet fully understood, and is more at risk of overfitting. Some of us also feel that aggregating the deviations from the base rate is more elegant, but that method has likewise not been tested as much.

abukeki @ 2022-03-12T02:48 (+25)

You mentioned the successful SM-3 intercept test in 2020. While it's true it managed to intercept an "ICBM-representative target", and can be based from ships anywhere they sail (thus posing a major potential threat to the Chinese/NK deterrent in the future), I don't know if I (or the US military) would call it a meaningful operational capability yet. For one we don't even know its success rate. The more mature (and only other) system with ICBM intercept capability, the Ground-Based Interceptor, has barely 50%. [1] I'm not sure what you meant by "sending it to Europe"[2] because US Navy Aegis ships carrying the SM-3 interceptors would have to be positioned close to under the flight path to intercept Russian ICBMs in midcourse flight. That's the weakness of a midcourse BMD strategy, and why China is developing things like Fractional Orbital Bombardment which lets them fire an ICBM at the US from any direction, not just the shortest one, to avoid overflying sea-based midcourse interceptors. The thing is because Russian ICBMs would likely fly over the North Pole the ships would probably have to be in the Arctic Ocean, which I'm unsure is even practical due to ice there etc. Anyway there'd be no hope of intercepting all the hundreds of ICBMs, even ignoring Russia's SLBM force which can be launched from anywhere in the world's oceans.

That's all to say, I don't see how that's a possible event which could happen and be escalatory? Of course if the US could do something in Europe which would actually threaten Russia's second strike capability that would be massively destabilizing, but they can't at this point. [3] Russia's deterrence is quite secure, for the time being.

I basically agree with Graubard's estimate, btw. But, I mean, 3/5% is still a lot...

Edit: I'm not sure who mentioned the 60% probability of successful detonation over London after launch, but that's absurd? Modern thermonuclear warhead designs have been perfected and are extremely reliable, very unlikely to fail/fizzle. Same goes for delivery systems. The probability of successful detonation over target conditioning on launch and no intercept attempts is well over 95%. London doesn't even have a token BMD shield to speak of, while Moscow is at least protected by the only nuclear-tipped ABM system left in the world.

[1] There are lots of other details. Both GMD/GBI and Aegis BMD/Aegis Ashore are mainly intended to defend against crude ICBMs like those from NK, Russian/Chinese ones carry lots of decoys/penaids/countermeasures to make midcourse interception much harder and thus their success rates lower. Plus the claimed rate is in an ideal test environment, etc.

[2] Perhaps you meant construct more Aegis Ashore facilities? But there would have to be an AWFUL lot constructed to legitimately threaten the viability of Russia's deterrent, plus it would take tons of time (far, far longer than sailing a few Aegis destroyers over) and be very visible, plus I'm unsure they could even place them close enough to the northward flight trajectories of Russian ICBMs as mentioned to intercept them.

[3] Not for lack of trying since the dawn of the arms race. Thermonuke-pumped space x-ray lasers, bro.

abukeki @ 2022-03-13T01:58 (+30)

Also, not sure where best to post this, but here's a nice project on nuclear targets in the US (+ article). I definitely wouldn't take it at face value, but it sheds some light on which places are potential nuclear targets at least, non-exhaustively.

NunoSempere @ 2022-03-13T13:46 (+2)

Thanks!

NunoSempere @ 2022-03-12T04:36 (+5)

tl;dr: The 60% was because I didn't really know much about the ABM capabilities early on and gave it around a 50-50. I updated upwards as we researched this more, but not by that much. This doesn't end up affecting the aggregate that much because other forecasters (correctly, as it now seems) disagreed with me on this.

Hey, thanks for the thoughtful comment, it looks like you've looked into ABM much more than I/(we?) have.

The 60% estimate was mine. I think I considered London being targeted but not being hit as a possibility early on, but we didn't have a section on it. By the time we had looked into ICBMs more, it got incorporated into "London is hit given an escalation" and then "Conditional on Russia/NATO nuclear exchange killing at least one person, London is hit with a nuclear weapon".

But my probability for "Conditional on Russia/NATO nuclear exchange killing at least one person, London is hit with a nuclear weapon" is the lowest in the group, and I think this was in fact because I was thinking that they could be intercepted. I think I updated a bit when reading more about it and when other forecasters pushed against that, but not that much.

Concretely, I was at ~5% for "Conditional on Russia/NATO nuclear exchange killing at least one person, London is hit with a nuclear weapon", and your comment maybe moves me to ~8-10%. I was the lowest in the aggregate for that subsection, so the aggregate of 24 micromorts doesn't include it, and so doesn't change. Or, maybe your comment does shift other forecasters by ~20%, and so the aggregate moves from 24 to 30 micromorts.

Overall I'm left wishing I had modeled and updated the "launched but intercepted" probability directly throughout.

Thanks again for your comment.

Misha_Yagudin @ 2022-03-12T17:43 (+4)

Thanks that is useful and interesting! (re: edit — I agree but maybe at 90% given some uncertainty about readiness.)

Scott Alexander @ 2022-03-11T19:43 (+21)

Thank you for doing this. I know many people debating this question, with some taking actions based on their conclusions, and this looks like a really good analysis.

kokotajlod @ 2022-03-12T16:12 (+3)

FWIW I'd wildly guess this analysis underestimates by 1-2 orders of magnitude, see this comment thread. ETA: People (e.g. Misha) have convinced me that 2 is way too high, but I still think 1 is reasonable. ETA: This forecaster has a much more in-depth analysis that says it's 1 OOM.

elifland @ 2022-03-12T17:32 (+20)

The estimate being too low by 1-2 orders of magnitude seems plausible to me independently (e.g. see the wide distribution in my Squiggle model [1]), but my confidence in the estimate is increased by it being the aggregate of several excellent forecasters, who were reasoning independently to some extent. Given that, my all-things-considered view is that 1 order of magnitude off[2] feels plausible but not likely (~25%?), and 2 orders of magnitude seems very unlikely (~5%?).

- ^

EDIT: actually looking closer at my Squiggle model I think it should be more uncertain on the first variable, something like russiaNatoNuclearexchangeInNextMonth=.0001 to .01 rather than .0001 to .003

- ^

Compared to a reference of what's possible given the information we have, e.g. what a group of 100 excellent forecasters would get if spending 1000 hours each.

Misha_Yagudin @ 2022-03-12T20:04 (+8)

I will just note that 10x with 20% (= 25% - 5%) and 100x with 5% would/should dominate EV of your estimate. P = .75 * X + .20 * 10 * X + .05 * 100 * X = .75 X + 7 X = 7.75 X.
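The expected-value arithmetic above can be checked with a few lines of Python. This is only a sketch; the function name and the (probability, multiplier) representation are illustrative choices.

```python
def expected_multiplier(scenarios: list[tuple[float, float]]) -> float:
    """Expected multiple of the point estimate X, given (probability, multiplier) pairs."""
    total_probability = sum(p for p, _ in scenarios)
    if abs(total_probability - 1) > 1e-9:
        raise ValueError("scenario probabilities must sum to 1")
    return sum(p * m for p, m in scenarios)


# 75%: estimate is right; 20%: 10x too low; 5%: 100x too low.
multiplier = expected_multiplier([(0.75, 1), (0.20, 10), (0.05, 100)])
print(multiplier)
```

Most of the expected value comes from the two tail scenarios (2 X and 5 X), which is the point being made: small probabilities of being far too low dominate the mean.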

elifland @ 2022-03-12T20:24 (+4)

Great point. Perhaps we should have ideally reported the mean of this type of distribution, rather than our best guess percentages. I'm curious if you think I'm underconfident here?

Edit: Yeah I think I was underconfident, would now be at ~10% and ~0.5% for being 1 and 2 orders of magnitude too low respectively, based primarily on considerations Misha describes in another comment placing soft bounds on how much one should update from the base rate. So my estimate should still increase but not by as much (probably by about 2x, taking into account possibility of being wrong on other side as well).

kokotajlod @ 2022-03-12T23:32 (+6)

That makes sense. 2 OOMs is clearly too high now that you mention it. But I stand by my 1 OOM claim though, until people convince me that really this is much more like an ordinary business-as-usual month than I currently think it is. Which could totally happen! I am not by any means an expert on this stuff, this is just my hot take!

NunoSempere @ 2022-03-12T17:51 (+8)

FWIW I'd wildly guess this analysis underestimates by 1-2 orders of magnitude, see this comment thread.

A 2 order of magnitude underestimate would mean a 6.7% chance of a nuclear exchange between NATO and Russia in the next month, and potentially more over the next year. This seems implausible enough to us that we would be willing to bet[1] our $15k against your $1k[2] on this, over the next month.

- ^

One might think that one can't bet on a catastrophic event (or, in this case, a nuclear exchange between NATO and Russia in the next month). But in fact one can: the party that doesn't believe in the catastrophic event mails the money to the party which does, which spends it now that money is worth more.

If the catastrophic event happens, the one who was right about this doesn't have to repay. If the catastrophic event doesn't happen, the party which was right is repaid with interest.

You'd think that this was equivalent to just getting a loan, and in fact has worse conditions. But this doesn't disprove that this bet would have positive expected value, it only points out that the loan is even better, and you should get both.

- ^

This is on the higher end of your proposed range. But it is also worse than what we could get just exploiting prediction market inefficiencies. But we think it's important that people could put their money where their mouth is.
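For reference, the stakes in the proposed bet imply a break-even probability, sketched below. This treats it as a simple bet settled at the end of the month and ignores the up-front transfer and interest described in the footnote; the function name is illustrative.

```python
def break_even_probability(stake_against_event: float, stake_for_event: float) -> float:
    """Event probability at which the bet has zero expected value for both sides."""
    return stake_for_event / (stake_for_event + stake_against_event)


# $15k staked against a NATO/Russia nuclear exchange, $1k staked on it.
p_break_even = break_even_probability(15_000, 1_000)

# Probability implied by the aggregate being 2 orders of magnitude too low.
p_if_100x_too_low = 100 * 0.00067

print(f"break-even {p_break_even:.2%}, 100x scenario {p_if_100x_too_low:.2%}")
```

Someone who really held the 100x view (about 6.7%) would be slightly above the 6.25% break-even point, so the offered odds are roughly fair from that perspective.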

kokotajlod @ 2022-03-12T23:30 (+2)

That's why it was my upper bound. I too think it's pretty implausible. How would you feel about a bet on the +1 OOM odds?

Max_Daniel @ 2022-05-18T00:26 (+10)

I recommended some retroactive funding for this post (via the Future Fund's regranting program) because I think it was valuable and hadn't been otherwise funded. (Though I believe CEA agreed to fund potential future updates.)

I think the main sources of value were:

- Providing (another) proof of concept that teams of forecasters can produce decision-relevant information & high-quality reasoning in crisis situations on relatively short notice.

- Saving many people considerable amounts of time. (I know of several very time-pressed people who without that post would likely have spent at least an hour looking into whether they want to leave certain cities etc.).

- Providing a foil for expert engagement.

(And I think the case for retroactively funding valuable work roughly just is that it sets the right incentives. In an ideal case, if people are confident that they will be able to obtain retroactive funding for valuable work after the fact, they can just go and do that, and more valuable work is going to happen. This is also why I'm publicly commenting about having provided retroactive funding in this case.

Of course, there are a bunch of problems with relying on that mechanism, and I'm not suggesting that retroactive funding should replace upfront funding or anything like that.)

JanBrauner @ 2022-03-10T20:44 (+10)

Thanks so much for writing this! I expect this will be quite useful for many people.

I actually spent some time this week worrying a bit about a nuclear attack on the UK, bought some preparation stuff, figured out where I would seek shelter or when I’d move to the countryside, and so on. One key thing is that it’s just so hard to know which probability to assign. Is it 1%? Then I should GTFO! Is it 0.001%? Then I shouldn’t worry at all.

kokotajlod @ 2022-03-12T01:53 (+8)

Thanks for doing this. Why is the next-month chance of nuclear war so low? (0.034%) That seems like basically what the base rate should be, i.e. the chance of an average month in an average year resulting in nuclear war. But this is clearly not an average month, the situation is clearly much more risky. How much more, I dunno. But probably at least an order of magnitude more, maybe two? Did you model this, or do we just disagree that this is a significantly riskier month than average?

Misha_Yagudin @ 2022-03-12T21:51 (+16)

So to be 100x of the default rate, one should have put less than 1% on events in Ukraine unfolding as they are now. This feels too low to me (and I registered some predictions in personal communications about a year ago supporting that, though done in the hurry of an email exchange).

I think a reasonable forecaster working on nuclear risk should have put significant worsening of the situation at above 10% (just from crude base rates of Russia's "foreign policy" and past engagement in Ukraine). And I think among Russia-Ukrainian conflicts, this one, while surprising (to me subjectively) in a few ways, is not in the bottom 10% (and not even in the bottom third for me in terms of Russia-NATO tensions — based on the past forecasts). So one should go above baseline but no more than an order of magnitude, imo.
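One way to read the bound in this argument, sketched under the assumption that the long-run base rate is a probability-weighted average of the risk across possible situations (and that risk in other situations is non-negative): the risk in the current situation can be at most the base rate divided by the prior probability of ending up in that situation. The function name is illustrative.

```python
def max_risk_multiplier(prior_probability_of_situation: float) -> float:
    """Upper bound on (risk in this situation) / (base rate).

    Follows from: base_rate >= P(situation) * risk_in_situation.
    """
    if not 0 < prior_probability_of_situation <= 1:
        raise ValueError("prior probability must be in (0, 1]")
    return 1 / prior_probability_of_situation


print(max_risk_multiplier(0.01))  # a 100x update needs a prior of at most 1%
print(max_risk_multiplier(0.10))  # a prior of 10% caps the update at 10x
```

This is a soft bound rather than a forecast: it only says how far above baseline one can go while staying consistent with the base rate one started from.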

kokotajlod @ 2022-03-12T23:34 (+3)

Ah, that's a good argument, thanks! Updating downwards.

NunoSempere @ 2022-03-12T04:54 (+7)

So mechanistically, one of our forecasters was really low on that, as you can see on the min column. That's one of the reasons why we use the aggregate without min/max as our best guess.

Separately:

1. We are not talking about nuclear war in general, we are talking about NATO/Russia specifically. So if Russia drops a nuclear bomb in Ukraine, that doesn't per se resolve the relevant subquestion positively.

2. We probably do disagree with you on the base rate, and think it's a bit lower than what Laplace's law would imply, partially because Laplace tends to overestimate generically, and partially because nobody wants nuclear war. We each did some rudimentary modeling for this.

3. It's actually relatively late in the game, and it seems like Putin/NATO have figured out where the edges are (e.g., a no fly zone is not acceptable, but arming Ukrainians is).

kokotajlod @ 2022-03-12T15:20 (+9)

I don't think this is a situation where the per-month base rate applies. The history of nuclear war is not a flat rate of risk every month; instead, the risk is lower most months and then much higher on some months, and it averages out to whatever flat rate you think is appropriate. (Looking at the list of nuclear close calls it seems hard to believe the overall chance of nuclear war was <50% for the last 70 years. Individual incidents like the cuban missile crisis seem to contribute at least 20%.) So there should be some analysis of how this month compares to past months--is it more like one of the high-risk months, or more like a regular month?

ETA: Analogy: Just a few hours ago I was wondering how likely it was that my flight would be cancelled or delayed. The vast majority of flights are not cancelled or delayed. However, I didn't bother to calculate this base rate, because I have the relevant information that there is a winter storm happening right now around the airport. So instead I tried to use a narrower reference class--how often do flights get cancelled in this region during winter storms? Similarly, I think the reference class to use is something like "Major flare-up in tensions / crisis between NATO and Russia" not "average month in average year." We should be looking at the cuban missile crisis, the fall of the soviet union, and maybe a half-dozen other examples, and using those as our reference class. Unless we think the current situation is milder than that.

I take the point no 3 above that it's relatively late in the game, the edges seem mostly agreed-upon, etc. This is the sort of analysis that I think should be done. I would like to see a brainstorm of scenarios that could lead to war & an attempt to estimate their probability. Like, if Putin uses nukes in Ukraine, might that escalate? What if he uses chemical WMDs? What if NATO decides to ship in more warplanes and drones? What if they start going after each other's satellites, since satellites are so militarily important? What if there is an attempted assassination or coup?

(Re point 1: Sure, yeah, but probably NATO vs. Russia risk is within an order of magnitude of general nuclear war risk, and plausibly more than 50% as high. Most of the past nuclear close calls were NATO vs. Russia, the current crisis is a NATO vs. Russia crisis, and if there is a nuclear war right now it seems especially likely to escalate to London being hit compared to e.g. pakistan vs. India in a typical year etc.)

SammyDMartin @ 2022-03-13T11:57 (+10)

(Looking at the list of nuclear close calls it seems hard to believe the overall chance of nuclear war was <50% for the last 70 years. Individual incidents like the cuban missile crisis seem to contribute at least 20%.)

There's reason to think that this isn't the best way to interpret the history of nuclear near-misses (assuming that it's correct to say that we're currently in a nuclear near-miss situation, and following Nuno I think the current situation is much more like e.g. the Soviet invasion of Afghanistan than the Cuban missile crisis). I made this point in an old post of mine following something Anders Sandberg said, but I think the reasoning is valid:

Robert Wiblin: So just to be clear, you’re saying there’s a lot of near misses, but that hasn’t updated you very much in favor of thinking that the risk is very high. That’s the reverse of what we expected.

Anders Sandberg: Yeah.

Robert Wiblin: Explain the reasoning there.

Anders Sandberg: So imagine a world that has a lot of nuclear warheads. So if there is a nuclear war, it’s guaranteed to wipe out humanity, and then you compare that to a world where there are a few warheads. So if there’s a nuclear war, the risk is relatively small. Now in the first dangerous world, you would have a very strong deflection. Even getting close to the state of nuclear war would be strongly disfavored because most histories close to nuclear war end up with no observers left at all.

In the second one, you get the much weaker effect, and now over time you can plot when the near misses happen and the number of nuclear warheads, and you actually see that they don’t behave as strongly as you would think. If there was a very strong anthropic effect you would expect very few near misses during the height of the Cold War, and in fact you see roughly the opposite. So this is weirdly reassuring. In some sense the Petrov incident implies that we are slightly safer about nuclear war.

Essentially, since we did often get 'close' to a nuclear war without one breaking out, we can't have actually been that close to nuclear annihilation, or all those near-misses would be too unlikely (both on ordinary probabilistic grounds since a nuclear war hasn't happened, and potentially also on anthropic grounds since we still exist as observers).

Basically, this implies our appropriate base rate given that we're in something the future would call a nuclear near-miss shouldn't be really high.

However, I'm not sure what this reasoning has to say about the probability of a nuclear bomb being exploded in anger at all. It seems like that's outside the reference class of events Sandberg is talking about in that quote. FWIW Metaculus has that at 10% probability.

NunoSempere @ 2022-03-12T16:22 (+10)

What is the appropriate reference class:

We should be looking at the cuban missile crisis, the fall of the soviet union, and maybe a half-dozen other examples, and using those as our reference class. Unless we think the current situation is milder than that.

Yeah, we do think the situation is much milder than that. Reference class points might be the invasion of Georgia, the invasion of Crimea, the invasion of Afghanistan, the Armenia and Azerbaijan war, the invasion of Finland, etc.

kokotajlod @ 2022-03-12T16:32 (+2)

Seems less mild than the invasion of Georgia and the Armenia and Azerbaijan war. But more mild than the cuban missile crisis for sure. Anyhow, roughly how many examples do you think you'd want to pick for the reference class? If it's a dozen or so, then I think you'd get a substantially higher base rate.

NunoSempere @ 2022-03-12T16:56 (+4)

(As an aside, I think that the invasion of Finland is actually a great reference point)

kokotajlod @ 2022-03-12T23:56 (+2)

Agreed. Though that was a century ago & with different governments, as you pointed out elsewhere. Also no nukes; presumably nukes make escalation less likely than it was then (I didn't realize this until now when I just read the wiki--apparently the Allies almost declared war on the Soviet Union due to the invasion of Finland!).

kokotajlod @ 2022-03-12T16:37 (+2)

To be clear I haven't thought about this nearly as much as you. I just think that this is clearly an unusually risky month and so the number should be substantially higher than for an average month.

elifland @ 2022-03-12T17:25 (+12)

I agree the risk should be substantially higher than for an average month and I think most Samotsvety forecasters agree. I think a large part of the disagreement may be on how risky the average month is.

From the post:

(a) may be due to having a lower level of baseline risk before adjusting up based on the current situation. For example, while Luisa Rodríguez’s analysis puts the chance of a US/Russia nuclear exchange at .38%/year, we think this seems too high for the post-Cold War era after new de-escalation methods have been implemented and lessons have been learnt from close calls. Additionally, we trust the superforecaster aggregate the most out of the estimates aggregated in the post.

Speaking personally, I'd put the baseline risk at ~.1%/yr, then adjust up by a factor of 10 to ~1%/yr given the current situation, which gives me ~.08%/month. That is pretty close to the aggregate of ~.07%.

We also looked at it from alternative perspectives, e.g. decomposing Putin's decision-making process, which gave estimates in the same ballpark.
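For readers who want to check the arithmetic in the comment above, here is a minimal sketch of the yearly-to-monthly conversion. The function name and the choice to show both a simple division and a compounding conversion are assumptions for illustration; the comment itself only states the rounded figures.

```python
def annual_to_monthly(p_year: float, compound: bool = True) -> float:
    """Convert a yearly probability into a monthly one.

    compound=True assumes a constant monthly hazard over the year;
    compound=False is the simple divide-by-12 approximation.
    """
    if compound:
        return 1 - (1 - p_year) ** (1 / 12)
    return p_year / 12


baseline_per_year = 0.001   # ~0.1%/yr, the personal baseline quoted in the comment
adjustment_factor = 10      # upward adjustment for the current situation
adjusted_per_year = baseline_per_year * adjustment_factor  # ~1%/yr

monthly_compound = annual_to_monthly(adjusted_per_year)
monthly_simple = annual_to_monthly(adjusted_per_year, compound=False)

print(f"compounding: {monthly_compound:.4%} per month")
print(f"divide by 12: {monthly_simple:.4%} per month")
```

Both conversions land at roughly .08%/month, so the choice between them does not matter at this precision.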

kokotajlod @ 2022-03-12T23:38 (+2)

OK, thanks!

NunoSempere @ 2022-03-12T16:48 (+4)

Deeper analysis needed?

I take the point no 3 above that it's relatively late in the game, the edges seem mostly agreed-upon, etc. This is the sort of analysis that I think should be done. I would like to see a brainstorm of scenarios that could lead to war & an attempt to estimate their probability.

I agree that this would be good to have in the abstract. But it would also be much more expensive and time-consuming, and I expect not all that much better than our current guess and current more informal/intuitive/unwritten models.

And because I/we think that this is probably not a Cuban missile crisis situation, I think it would mostly not be worth it.

kokotajlod @ 2022-03-13T16:52 (+4)

Fair enough! I'm grateful for all the work you've already done and don't think it's your job to do more research in the areas that would be more convincing to me.

NunoSempere @ 2022-03-12T16:26 (+2)

NATO : Russia :: NATO : Soviet Union?

Most of the past nuclear close calls were NATO vs. Russia, the current crisis is a NATO vs. Russia crisis

This feels wrong. Like, an important feature of the current situation is that Ukraine is in fact not part of NATO. Further, previous crises were between the Soviet Union and the Western Capitalists, which were much more ideologically opposed.

DanielFilan @ 2022-03-14T19:38 (+5)

Not to toot my own horn too much, but I think that my post gives a decent explanation for why you shouldn't update too much on recent events - I'm still higher than the consensus, but I expected to be much higher.

Lukas_Gloor @ 2022-03-15T08:21 (+3)

Not sure this is a good place to comment on your blogpost, but I found myself having very different intuitions about this paragraph:

So: what’s the probability of something like the Ukraine situation given a nuclear exchange this year? I’d actually expect a nuclear exchange to be precipitated by somewhat more direct conflict, rather than something more proxy-like. For instance, maybe we’d expect Russia to talk about how Estonia is rightfully theirs, and how it shouldn’t even be a big deal to NATO, rather than the current world where the focus has been on Ukraine specifically for a while. So I’d give this conditional probability as 1/3, which is about 3/10.

1. I think the game theory around NATO precommitments and deterrence more generally gets especially messy in the "proxy-like" situation, so much so that I remember reading takes where people thought escalation was unusually likely in the sort of situation we're in. The main reason being that if the situation is messier, it's not obvious what each side considers unacceptable, so you increase the odds of falsely modeling the other player.

2. It's true that we can think of situations that would be even more dangerous than what we see now, but if those other situations were extremely unlikely a priori, then that still leaves more probability mass for things like the current scenario when you condition on "nuclear exchange this year." Out of all the dangerous things Russia was likely to do, isn't a large invasion into Ukraine pretty high on the list? (Maybe this point is compatible with what you write; I'm not sure I understood it exactly.)

3. Putin seems to have gone off the rails quite a bit and the situation in Russia for him is pretty threatening (he's got the country hostage but has to worry about attempts to oust him). Isn't that the sort of thing you'd expect to see before an exchange? Maybe game theory is overrated and you care more about the state of mind of the dictator and the sort of conditions of the country (whether people expect their family to be imprisoned if they object to orders that lead to more escalation, etc.). So I was surprised that you didn't mention points related to this.

DanielFilan @ 2022-03-15T19:53 (+1)

Re: 1, my impression is that Russia and NATO have been in a few proxy conflicts, and so have presumably worked out this deal.

Re: 2, that seems basically right.

Re: 3, I'd weight this relatively low, since it's the kind of 'soft' reporting I'd strongly expect to be less reliable in the current media environment where Putin is considered a villain.

Note that at most this will get you an order of magnitude above the base rate.

kokotajlod @ 2022-03-14T22:50 (+2)

Oh nice, thanks, this is the sort of thing I was looking for!

Alex319 @ 2022-03-12T20:09 (+6)

This was really helpful. I'm living in New York City and am also making the decision about when/whether to evacuate, so it was useful to see the thoughts of expert forecasters. I wouldn't consider myself an expert forecaster and don't really think I have much knowledge of nuclear issues, so here's a couple other thoughts and questions:

- I'm a little surprised that P(London being attacked | nuclear conflict) seemed so low since I would have expected that that would be one of the highest priority targets. What informed that and would you expect somewhere like NYC to be higher or lower than London? (NYC does have a military base, Fort Hamilton (https://en.wikipedia.org/wiki/Fort_Hamilton), although I'm not sure how much that should update my probability).

- It seems like a big contributor to the lower-than-expected risk is the fact that you could wait to evacuate if the situation looked like it was getting more serious, i.e. the "conditional on the above, informed/unbiased actors are not able to escape beforehand" step. I don't have a car, so I would have to get on a bus or plane out, which might take up to a day. I'm not sure how much that affects the calculation, as I don't know what time frame they were thinking of. Were they assuming you can just leave immediately whenever you want?

- It sounds like it does make sense to be monitoring the situation closely and be ready to evacuate on short notice if it looks like the risk of escalation has increased (after all that is what the calculation is based on). Does anyone have any suggestions of what I should be following/under what circumstances it would make sense to leave?

- Of course another factor here is whether lots of other people would be trying to leave at the same time. This might make it harder to leave especially if you were dependent on a bus, plane, uber, etc. to get out of there.

- Another question is where do you go? For instance in NYC, I could go to {a suburb of NY / upstate NY / somewhere even more remote in the US like northern Maine / a non-NATO country} all of which are more and more costly but might have more and more safety benefit. Are there reliable sources on what places would be the safest?

abukeki @ 2022-03-15T17:46 (+12)

See my comments here and here for a bit of analysis on targeting/risks of various locations.

Btw I want to add that it may be even more prudent to evacuate population centers preemptively than some think, as some have suggested countervalue targets are unlikely to be hit at the very start of a nuclear war/in a first strike. That's not entirely true since there are many ways cities would be hit with no warning. If Russia or China launches on warning in response to a false alarm, they would be interpreting that act as a (retaliatory) second strike and thus may aim for countervalue targets. Or if the US launches first for real, whether accidentally or because they genuinely perceived an imminent Russian/Chinese strike and wanted preemptive damage limitation, of course the retaliatory strike could happen quickly and you'd be unlikely to hear that the US even launched before the return strike lands. Etc. These plus a few other reasons mean cities may actually be among the first struck in a nuclear war with little to no warning.

Peter Wildeford @ 2022-03-12T02:38 (+5)

Dumb question but why the name "Samotsvety"? My Googling suggests this is a Soviet Vocal-Instrumental-Ensemble band formed in 1971?

Misha_Yagudin @ 2022-03-12T17:16 (+5)

One can pull off quite a bit of wordplay/puns (in Russian) with "Samotsvety" (about forecasting), which I find adorably cringy. Alas, even Nuño doesn't remember why the name was chosen.

NunoSempere @ 2022-03-12T04:56 (+5)

I find it funny that you got downvoted, because it is after the band. Misha is a fan, but nobody really knows if ironically or not.

SeanEngelhart @ 2022-03-14T01:53 (+4)

Thanks so much for posting this! Do you plan to update the forecast here / elsewhere on the forum at all? If not, do you have any recommendations for places to see high quality, up-to-date forecasts on nuclear risk?

Pablo @ 2022-03-10T19:41 (+4)

laplaceMultiplier = 0.5 # Laplace tends to overestimate stuff

It seems that a more principled way to do this, following Daniel Filan's analysis, is to ask yourself what your prior would be if you didn't know any history and then add this to your Laplace-given odds. For example, if Laplace gives 70:1 odds against nuclear war next year and in the absence of historical information you'd give 20:1 odds against nuclear war on a given year, your adjusted odds would be 90:1.

DanielFilan @ 2022-03-10T19:46 (+5)

So, that's one way to do things, but there's another perspective that looks more like the multiplier approach:

Suppose that in order for B to happen, A needs to happen first. Furthermore, suppose that you've never observed A or B. Then, instead of using Laplace to forecast B, you should probably use it to forecast A, and then multiply by p(B|A). I think this isn't such an uncommon scenario, and a US-Russia nuclear exchange probably fits (altho I wouldn't have my probability factor be as low as 0.5).
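A minimal sketch of the two-step approach described above: apply Laplace's rule to the precursor event A, then multiply by p(B|A). The function names and the example inputs (77 years without A, a conditional probability of 1/2) are hypothetical placeholders for illustration, not figures from this thread.

```python
from fractions import Fraction


def laplace_next(successes: int, trials: int) -> Fraction:
    """Laplace's rule of succession: P(success on the next trial)."""
    return Fraction(successes + 1, trials + 2)


def chained_forecast(periods_without_a: int, p_b_given_a: Fraction) -> Fraction:
    """Forecast B by applying Laplace to the precursor A, then scaling by p(B|A).

    Assumes A has never been observed in `periods_without_a` periods.
    """
    p_a = laplace_next(0, periods_without_a)
    return p_a * p_b_given_a


# Hypothetical inputs: 77 periods without A, and p(B|A) = 1/2.
example = chained_forecast(77, Fraction(1, 2))
print(example, float(example))
```

Seen this way, a multiplier such as laplaceMultiplier plays the role of p(B|A), which is one reading of why the multiplicative adjustment can be reasonable.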

NunoSempere @ 2022-03-11T03:12 (+2)

There is a sense in which making odds additive is deeply weird, so this doesn't yet feel natural to me. In particular, I'm more used to thinking in terms of bits, where, e.g., people not wanting to is ~one bit against nuclear war, Putin sort of threatening it is ~one bit in favor, and I generally expect the Laplace estimator to overshoot by ~one bit.

But it's a cool idea.

Misha_Yagudin @ 2022-03-11T03:59 (+12)

It's not about the odds; it's about Beta distribution. You are right to be suspicious about the addition of odds, but there is nothing wrong with adding shape parameters of Beta distributions.

I don't want to go into many details but a teaser for readers:

- One wants to figure out $\theta$, the probability of some event happening.

- One starts with some prior about $\theta$; if it's uniform over $[0, 1]$, the prior is $\mathrm{Beta}(1, 1)$.

- If one then observes $s$ successes and $f$ failures, one would update to $\mathrm{Beta}(1 + s, 1 + f)$.

- If one then wants to get the probability of success next time, one needs to integrate over possible $\theta$ (basically to take expected value). It would lead to $\frac{1 + s}{2 + s + f}$.

For Laplace's law of succession, you start with $\mathrm{Beta}(1, 1)$, observe $n$ failures and update to $\mathrm{Beta}(1, 1 + n)$. And your estimate is $\frac{1}{n + 2}$. In this context, Pablo suggests starting with a different prior of $\mathrm{Beta}(1, 20)$ (which corresponds to the probability of success $\frac{1}{21}$ and odds of $1:20$) to then update to $\mathrm{Beta}(1, 20 + n)$ after observing $n$ failures.
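The Beta-distribution bookkeeping sketched in this comment can be written out in a few lines. This is an illustrative sketch only: the function names are made up here, and the choice of 70 observed failures is an assumption picked to line up with Pablo's 70:1 and 20:1 example.

```python
from fractions import Fraction


def beta_update(a: int, b: int, successes: int, failures: int) -> tuple[int, int]:
    """Conjugate update: add observed counts to the Beta shape parameters."""
    return a + successes, b + failures


def predictive(a: int, b: int) -> Fraction:
    """Probability of success on the next trial (the mean of Beta(a, b))."""
    return Fraction(a, a + b)


def odds_against(p: Fraction) -> Fraction:
    """Odds against an event with probability p, as failures per success."""
    return (1 - p) / p


n_failures = 70  # assumed count of event-free periods

# Laplace: uniform Beta(1, 1) prior.
laplace_p = predictive(*beta_update(1, 1, 0, n_failures))

# Informed prior Beta(1, 20), i.e. 20:1 against before seeing any history.
informed_p = predictive(*beta_update(1, 20, 0, n_failures))

print("Laplace odds against:", odds_against(laplace_p))
print("Informed-prior odds against:", odds_against(informed_p))
```

Under these assumptions the uniform prior gives 71:1 against and the informed prior gives 90:1 against. In other words, "adding odds" amounts to adding the second shape parameter of the prior to the count of observed failures.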

t_adamczewski @ 2022-03-11T10:58 (+6)

I can confirm this is correct.

By the way, a similar modelling approach (Beta prior, binomial likelihood function) was used in this report.

NunoSempere @ 2022-03-11T05:04 (+4)

This (pointed me to something that) makes so much more sense, thanks Misha, strongly updated.

Greg_Colbourn @ 2022-03-11T23:07 (+1)

Meanwhile, in South Asia... India accidentally fires missile into Pakistan. A reminder that the threat from accidents is still all too apparent.

Misha_Yagudin @ 2022-03-12T00:08 (+5)

Yes, but you also should update on incidents not leading to a catastrophe. If Nuño and I scratched math correctly, you should feel times more doomed, where n is the number of incidents. If it's the 8th accident, you should only feel ~1% more doomed.

NunoSempere @ 2022-03-12T00:25 (+2)

(That comes from modeling incidents and catastrophe|incidents as two different beta distributions, and updating upwards on the prevalence of incidents, and a bit downwards on the prevalence of catastrophes given an incident. The update ends up such that the overall chance of catastrophes also rises, by the factor that Misha mentioned.)
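One way to reproduce the rough size of this update is sketched below. It is a reconstruction under stated assumptions, not necessarily the exact math Misha and Nuño used: both the incident rate and the chance of catastrophe given an incident get uniform Beta(1, 1) priors, no incident so far has led to a catastrophe, and the number of observed periods (77 here) is a hypothetical value that cancels out of the ratio.

```python
from fractions import Fraction


def beta_mean(a: int, b: int) -> Fraction:
    """Mean of a Beta(a, b) distribution."""
    return Fraction(a, a + b)


def catastrophe_rate(incidents: int, periods: int) -> Fraction:
    """Posterior-mean chance of catastrophe per period.

    Assumes uniform priors and that none of the incidents led to a catastrophe.
    """
    p_incident = beta_mean(1 + incidents, 1 + periods - incidents)
    p_catastrophe_given_incident = beta_mean(1, 1 + incidents)
    return p_incident * p_catastrophe_given_incident


def doom_factor(prior_incidents: int, periods: int = 77) -> Fraction:
    """Ratio of the catastrophe estimate after one more harmless incident to before it."""
    before = catastrophe_rate(prior_incidents, periods)
    after = catastrophe_rate(prior_incidents + 1, periods)
    return after / before


# Going from 7 to 8 harmless incidents.
factor = doom_factor(7)
print(factor, float(factor))
```

With these assumptions the eighth incident raises the estimate by a factor of 81/80, a bit over 1%, which is in line with the ~1% figure quoted above.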

Greg_Colbourn @ 2022-03-12T13:03 (+4)

Makes sense. I was thinking for a minute that it meant something like the more near misses, the lower the overall chance of catastrophe. That would be weird, but not impossible I guess.

Adam Binks @ 2022-03-11T15:25 (+1)

Thanks for the writeup! I tried to copy and paste the simple model into Squiggle but it gave an error, couldn't immediately spot what the cause is:

NunoSempere @ 2022-03-11T20:12 (+2)

I have no idea why this is, but I've reported it here. What operating system/browser are you using?

Adam Binks @ 2022-03-18T12:17 (+1)

Thanks, I'm using Chrome on Windows 10.