Manifund: what we're funding (week 1)

By Austin @ 2023-07-15T00:28 (+43)

This is a linkpost to https://manifund.substack.com/p/what-were-funding-week-1

We’re experimenting with a weekly newsletter format, to surface the best grants and activity on Manifund!

Overall reflections

It’s been a week since we announced our regrantor program. How are we feeling?

- Very happy with the publicity and engagement around our grant proposals

  - #1 on Hacker News, multiple good EA Forum posts, and 7 new grassroots donors

  - Feels like this validates our strategy of posting grant proposals in public…

- Happy with the caliber of regrantors who’ve applied for budgets

  - We’ve gotten 15 applications; have onboarded 2 and shortlisted 4 more

  - Now thinking about further fundraising, so we can give budgets to these awesome folks

- Happy with grantee experience for the handful of grants we’ve made

- Less happy with total grantmaking quantity and dollar volume so far

  - In total, we’ve committed about $70k across 6 large grants

  - Where’s the bottleneck? Some guesses: regrantors are busy people; writeups take a while; “funder chicken” when waiting for others to make grants

  - To address this, we’re running a virtual “grantathon” to work on grant writeups & reviews! Join us next Wed evening, 6-9pm PT on this Google Meet.

Grant of the week

This week’s featured grant is to Matthew Farrugia-Roberts, for introductory resources for Singular Learning Theory! In the words of Adam Gleave, the regrantor who initiated this:

There's been an explosion of interest in Singular Learning Theory lately in the alignment community, and good introductory resources could save people a lot of time. A scholarly literature review also has the benefit of making this area more accessible to the ML research community more broadly. Matthew seems well placed to conduct this, having already familiarized himself with the field during his MS thesis and collected a database of papers. He also has extensive teaching experience and experience writing publications aimed at the ML research community.

In need of funding

Some of our favorite proposals which could use more funding:

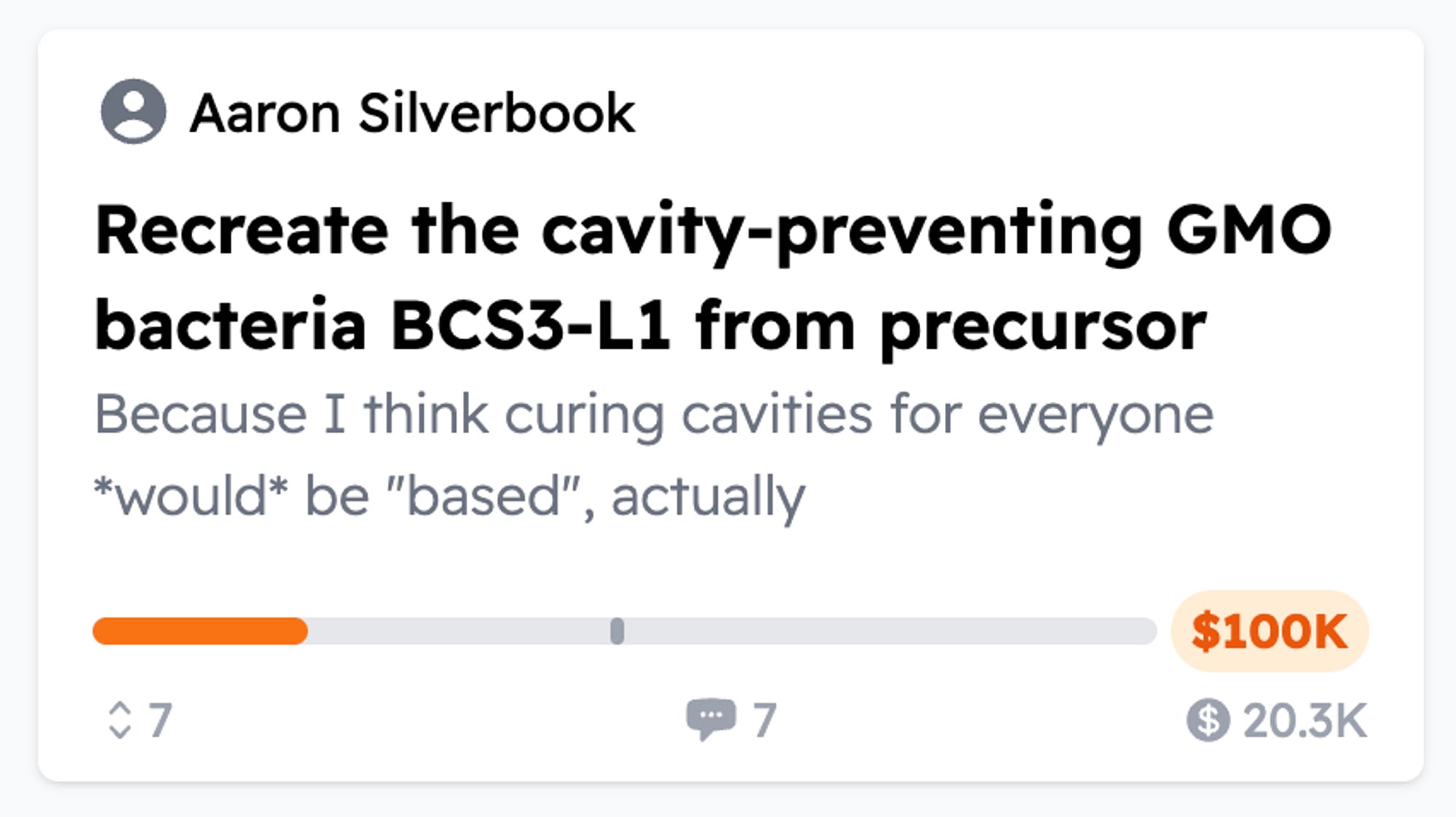

1. Aaron Silverbook: recreate the cavity-preventing GMO bacteria BCS3-L1 from precursor

A proposal to cure cavities, forever. Very out-of-left-field (Aaron: “it certainly isn't great for our Weirdness Points budget”), but I’m a fan of the ambition and the team behind it. We’re currently seeing how Manifund can make an equity investment in Lantern Bioworks.

Also: this proposal hit #1 on Hacker News, with 260 upvotes & 171 comments. Shoutout to our friend Evan Conrad for posting + tweeting this out!

2. Holly Elmore: Organizing people for a frontier AI moratorium

Holly has a stellar track record at Rethink Priorities and Harvard EA (not to mention a killer blog). She’s now looking to pivot to AI moratorium movement-organizing! Because this grant may include political advocacy, we’re still working out to what extent our 501c3 can fund it; in the meantime, Holly has set up a separate GoFundMe for individual donors.

3. Shrimp Welfare Project: Electrical stunners

Pilot of electrical stunners to reduce shrimp suffering. Recommended by regrantor Marcus Abramovitch as one of the most exciting & unfunded opportunities in the entire space of animal welfare.

In a thorough EA Forum post, Matt investigates the cost-effectiveness of this proposal — it’s a thoughtful writeup, take a look at the entire thing! One takeaway:

Electric shrimp stunning might be worth supporting as a somewhat speculative bet in the animal welfare space. Considerations that might drive donor decisions on this project include risk tolerance, credence in the undiluted experience model of welfare, and willingness to take a hits-based giving approach.

New regrantors: Renan Araujo and Joel Becker

Since putting out the call for regrantors last week, we’ve gotten quite the influx of interest. After speaking with many candidates, we’re happy to announce our two newest regrantors: Renan and Joel!

- We expect regranting to integrate smoothly with Renan’s work incubating longtermist projects at Rethink Priorities, and his strong network across Southeast Asia, India, and South America exposes him to giving opportunities that other grantmakers might miss.

- Joel is responsible for all kinds of awesome projects such as SHELTER and EA Bahamas (rip). He's got an extensive set of EA connections and the best karaoke voice I've ever heard. And he's unusually insightful, as evidenced by having been the #1 most profitable forecaster on Manifold ;)

We have a waitlist of regrantor candidates who we’re still looking to fundraise for, including experts in catastrophe preparedness, EU AI Policy, and AI moratorium advocacy. If you’d like to donate to one of these causes, reach out to austin@manifund.org!

Website updates

Rachel knocked out two improvements to the Manifund site:

- You can now upvote or downvote individual grants! Click into any grant proposal and vote on whether you find this grant exciting. This helps us & our regrantors prioritize among the applications from our open call.

- We’ve redesigned the card view for grants, emphasizing the room that applications have for funding. IMO, much cleaner than before!

Other links

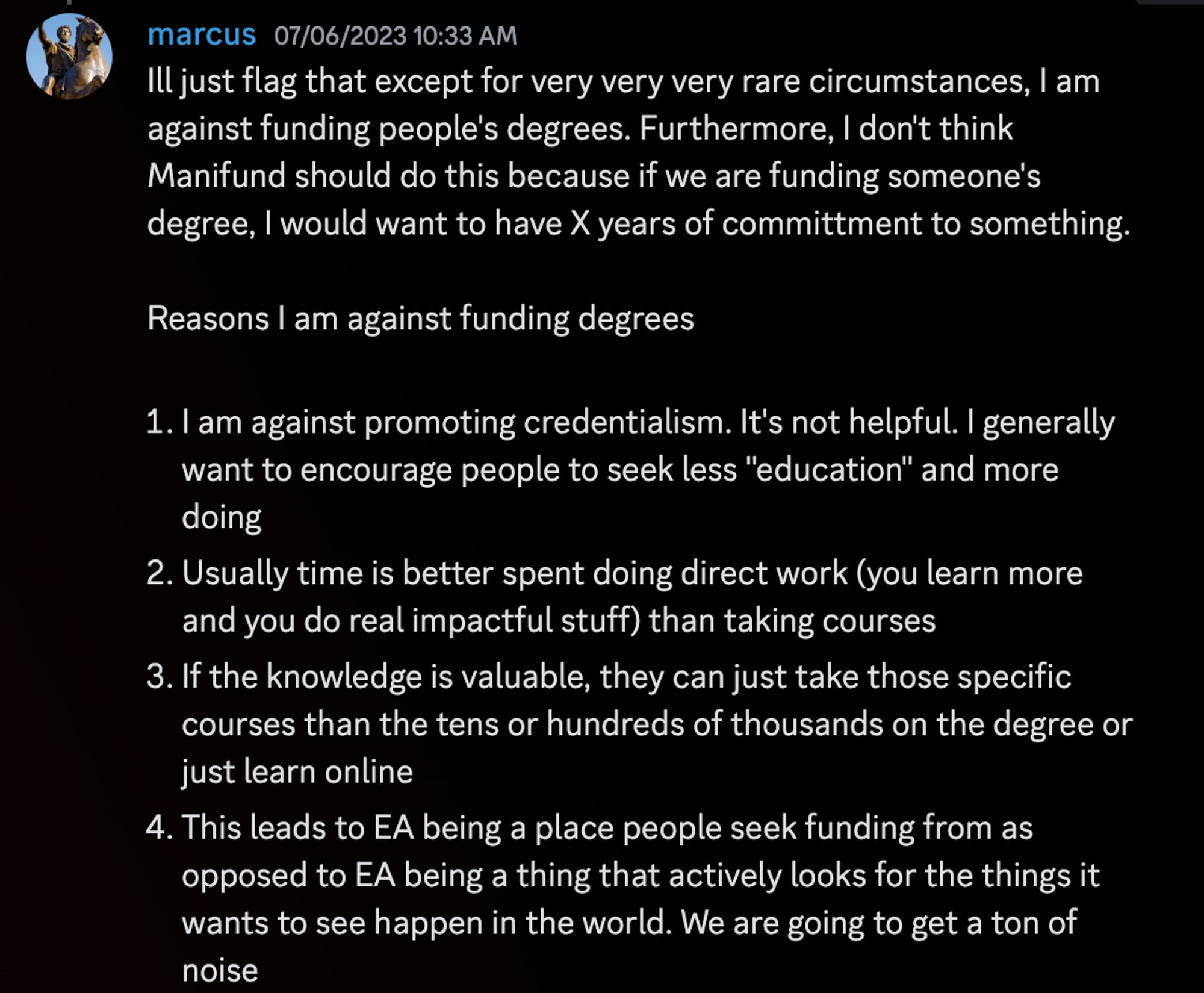

On Discord, Marcus leads with a spicy take: 13 reasons why EA funders should avoid paying for degrees…

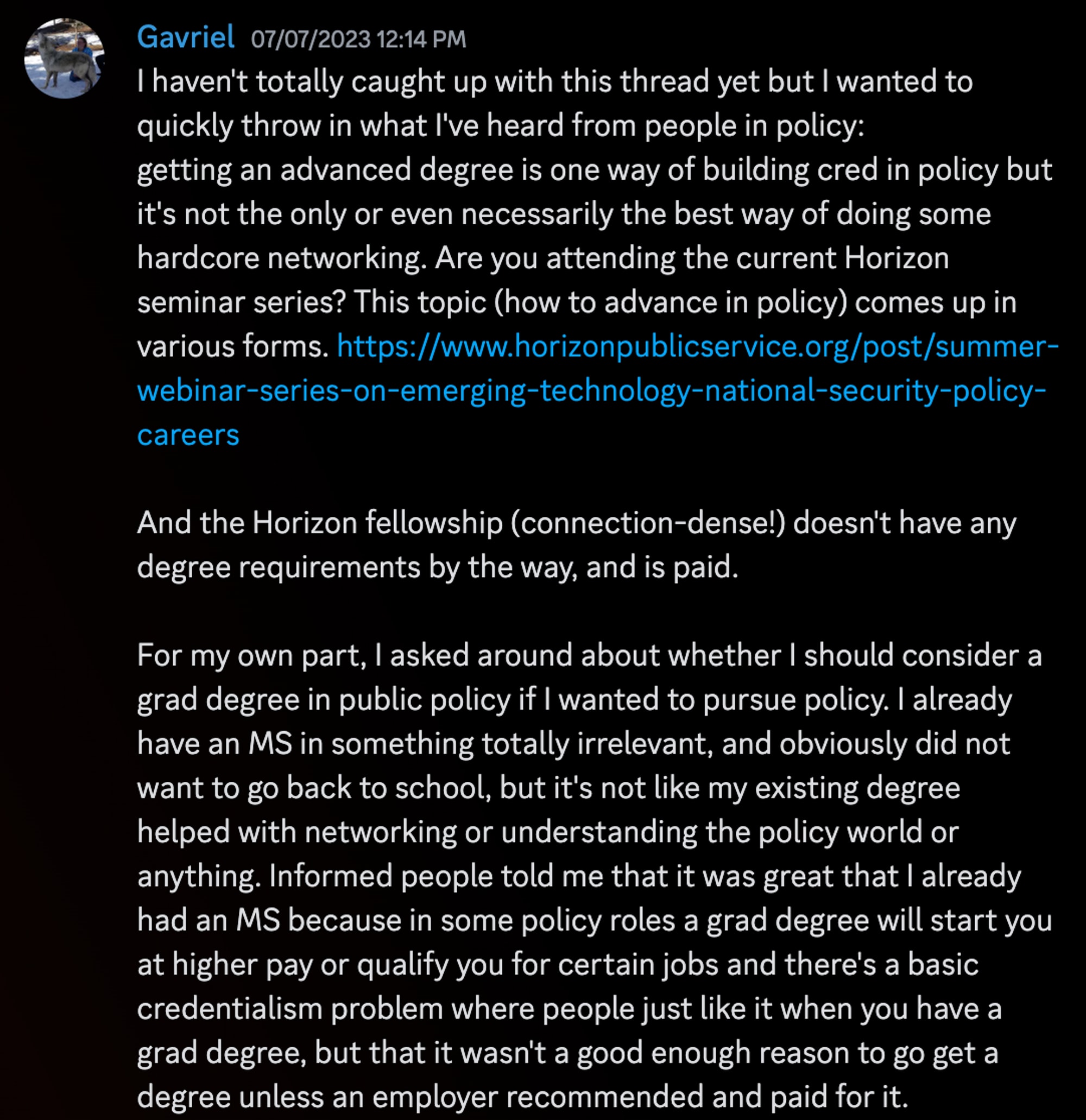

Gavriel weighs in based on her own experience in considering a policy degree:

Many other great comments interspersed; check out the full discussion on our Discord.

Meanwhile, Nathan Young writes about using futarchy for better regranting, on the EA Forum. For now, we’re still missing specific proposals on how futarchy could lead to better decisions, but I’m very grateful to Nathan for bringing up the topic. His writeup also inspired me to create a prediction market on whether Lantern Bioworks would succeed, if funded. Setting aside futarchy, just setting up predictions on specific grant outcomes is low-hanging fruit.

Thanks for reading!

— Austin

Zach Stein-Perlman @ 2023-07-15T01:05 (+45)

I'm a noted fan of Holly, but I disagree with your hagiography.

- Her published work at RP seems typical

- I'm not familiar with her work at Harvard EA and I doubt you are either

- Elsewhere you say "Holly has launched a GoFundMe to fund her work independently; it's this kind of entrepreneurial spirit that gives me confidence she'll do well as a movement organizer!"; I disagree that launching a GoFundMe is nontrivial evidence of success

dan.pandori @ 2023-07-15T19:00 (+30)

This comment came across as unnecessarily aggressive to me.

The original post is a newsletter that seems to be trying to paint everyone in their best light. That's a nice thing to do! The epistemic status of the post (hype) also feels pretty clear already.

Zach Stein-Perlman @ 2023-07-15T19:12 (+24)

Yeah, I hear you. [Edit: well, I think it was the least aggressive way of saying what I wanted to say.]

(I note that in addition to hyping, the post is kinda making an ask for funding for the three projects it mentions--"Some of our favorite proposals which could use more funding"--and I'm pretty uncomfortable with one-sided-ness in funding-asks.)

Austin @ 2023-07-15T01:18 (+16)

Thanks for the feedback. I'm not sure what our disagreement cashes out to - roughly, I would expect "if funded, Holly would do a good job such that 1 year later, we were happy to have funded her for this"?

Zach Stein-Perlman @ 2023-07-15T01:24 (+9)

I wasn't commenting on expectations, just your framing of the evidence.

(Conditional or counterfactually-conditional on Holly being funded, I expect her to mostly fail because I think most advocacy mostly fails, and 1 year later I agree you will probably still think it was a reasonable grant at the time.)

Holly_Elmore @ 2023-07-17T20:54 (+11)

😂

Fwiw I was not offended by this comment and I think it’s valid (important!) to question potential halo-ing behavior in the context of funding grants even if it’s totally normal and nice in any other context

Holly_Elmore @ 2023-07-17T21:23 (+8)

I see where Zach is coming from and I sort of agree with his assessment of my work at RP. While I'm proud of what I did (including unpublished/internal/supporting role stuff), ultimately I don't think my work at RP was unusually impactful within my cause area, and I think my manager will agree. (I felt conflicted about wild animal welfare as a cause area and part of the reason I was ready to leave RP when I did to do moratorium organizing is that I had lost confidence that WAW was tractable. My work that is public was on the best interventions within the cause area, which were way lower impact than, say, farmed animal welfare, so in EA absolute impact terms I think that work can only be so good.)

Holly_Elmore @ 2023-07-17T21:27 (+3)

And I also appreciate Austin’s vote of confidence and don’t think he did anything wrong hyping us, even if he finds it prudent to do things differently in the future