How I hope the EA community will respond to the AI bubble popping

By Yarrow Bouchard 🔸 @ 2025-12-05T09:26 (+36)

Keep this post on ice and uncork it when the bubble pops. It may mean nothing to you now; I hope it means something when the time comes.

This post is written with an anguished heart, from love, which is the only good reason to do anything.

I hope that the AI bubble popping is like the FTX collapse 2.0 for effective altruism. Not because it will make funding dry up — it won't. And not because it will have any relation to moral scandal — it won't. But it will be a financial wreck — orders of magnitude larger than the FTX collapse — that could lead to soul-searching for many people in effective altruism, if they choose to respond that way. (It may also have indirect reputational damage for EA by diminishing the credibility of the imminent AGI narrative — too early to tell.)

In the wake of the FTX collapse, one of the positive signs was the eagerness of people to do soul-searching. It was difficult, and it's still difficult, to know how to make sense of EA's role in FTX. Did powerful people in the EA movement somehow contribute to the scam? Or did they just get scammed too? Were people in EA accomplices or victims? What is the lesson? Is there one? I'll leave that to be sorted out another time. The point here is that people were eager to look for the lesson, if there was one to find, and to integrate it. That's good.

It's highly probable that there is an AI bubble.[1] Nobody can predict when a bubble will pop, even if they can correctly call that there is a bubble. So, we can only say that there is most likely a bubble and it will pop... eventually. Maybe in six months, maybe in a year, maybe in two years, maybe in three years... Who knows. I hope that people will experience the reverberations of that bubble popping — possibly even triggering a recession in the U.S., although it may be a bit like the straw that broke the camel's back in that case — and bring the same energy they brought to the FTX collapse. The EA movement has been incredibly bought-in on AI capabilities optimism and that same optimism is fueling AI investment. The AI bubble popping would be a strong signal that this optimism has been misplaced.

Unfortunately, it’s always possible to not learn lessons. The futurist Ray Kurzweil has made many incorrect predictions about the future. His strategy in many such cases is to find a way he can declare he was correct or "essentially correct" (see, e.g., page 132 here). Tesla CEO Elon Musk has been predicting every year for the past seven years or so that Teslas will achieve full autonomy — or something close to it — in a year, or next year, or by the end of the year. Every year it doesn't happen, he just pushes his prediction back a year. And he's done that about seven times. Every year since around 2018, Tesla's achievement of full autonomy (or something close) has been about a year away.

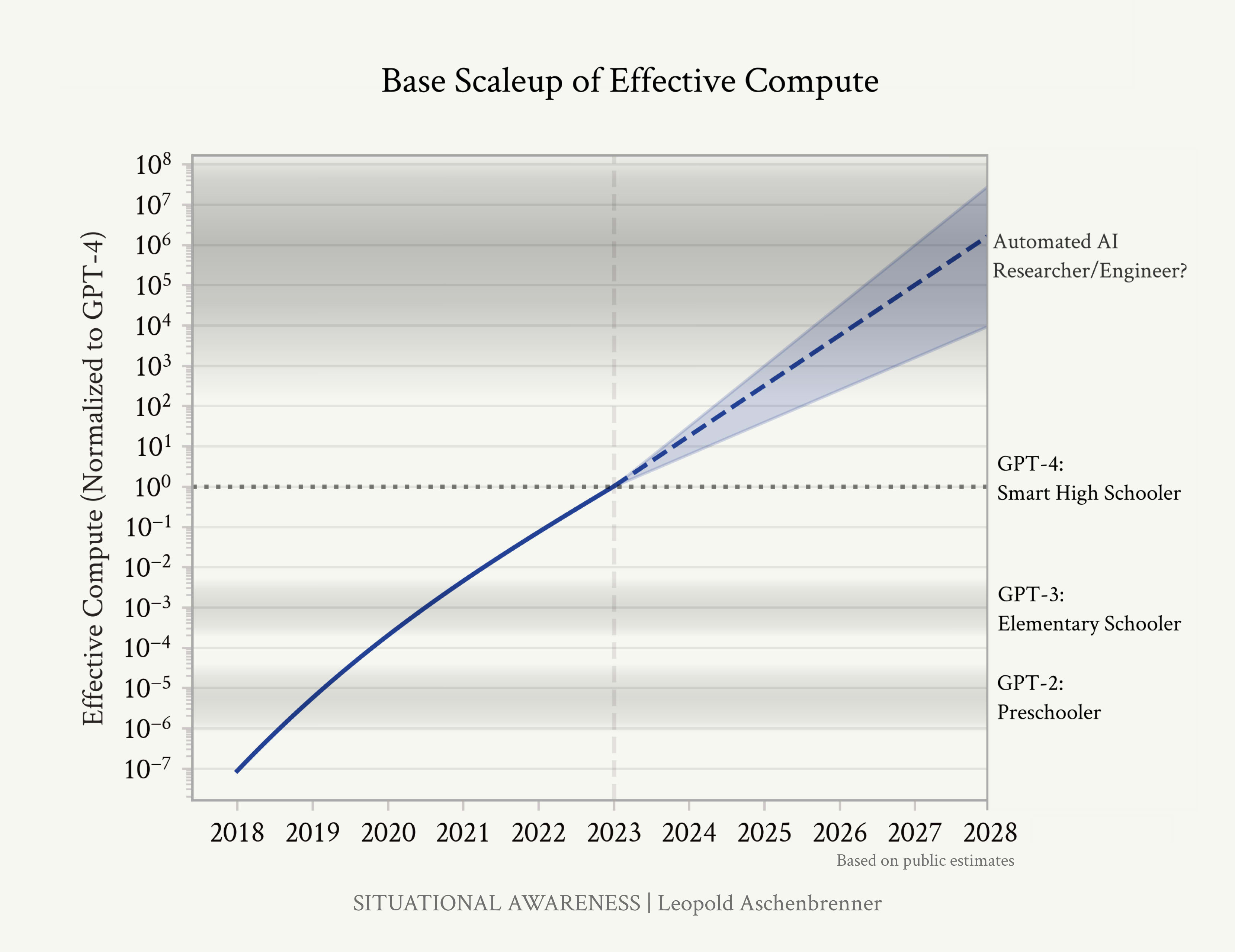

When the AI bubble pops, I fear both of these reactions. The Kurzweil-style reaction is to interpret the evidence in a way — any way — that allows one to be correct. There are a million ways of doing this. One way would be to tell a story where AI capabilities were indeed on the trajectory originally believed, but AI safety measures — thanks in part to the influence of AI safety advocates — led to capabilities being slowed down, held back, sabotaged, or left on the table in some way. This is not far off from the sorts of things people have already argued. In 2024, the AI researcher and investor Leopold Aschenbrenner published an extremely dubious essay, "Situational Awareness", which, in between made-up graphs, argues that AI models are artificially or unfairly "hobbled" in a way that makes their base, raw capabilities seem significantly less than they really are. By implementing commonsense, straightforward unhobbling techniques, models will become much more capable and reveal their true power. From here, it would only be one more step to say that AI companies deliberately left their models "hobbled" for safety reasons. But this is just one example. There are an unlimited number of ways you could try to tell a story like this.

Arguably, Anthropic CEO Dario Amodei engaged in Kurzweil-style obfuscation of a prediction this year. In mid-March, Amodei predicted that by mid-September 90% of code would be written by AI. When nothing close to this happened, Amodei said, "Some people think that prediction is wrong, but within Anthropic and within a number of companies that we work with, that is absolutely true now." When pressed, he clarified that this was only true "on many teams, not uniformly, everywhere". That's a bailey within a bailey.

The Musk-style reaction is just to kick the can down the road. People in EA or EA-adjacent communities have already been kicking the can down the road. AI 2027, which was actually AI 2028, is now AI 2029. And that's hardly the only example.[2] Metaculus was at 2030 on AGI early in the year and now it's at 2033.[3] The can is kicked.

There's nothing inherently wrong with kicking the can down the road. There is something wrong with the way Musk has been doing it. At what point does it cross over from making a reasonable, moderate adjustment to making the same mistake over and over? I don't think there's an easy way to answer this question. I think the best you can do is see repeated can kicks as an invitation to go back to basics, to the fundamentals, to adopt a beginner's mind, and try to rethink things from the beginning, over again. As you retrace your steps, you might end up in the same place all over again. But you might notice something you didn't notice before.

There are many silent alarms already ringing about the imminent AGI narrative. One of effective altruism's co-founders, the philosopher and AI governance researcher Toby Ord, wrote brilliantly about one of them. Key quote:

Grok 4 was trained on 200,000 GPUs located in xAI’s vast Colossus datacenter. To achieve the equivalent of a GPT-level jump through RL [reinforcement learning] would (according to the rough scaling relationships above) require 1,000,000x the total training compute. To put that in perspective, it would require replacing every GPU in their datacenter with 5 entirely new datacenters of the same size, then using 5 years worth of the entire world’s electricity production to train the model. So it looks infeasible for further scaling of RL-training compute to give even a single GPT-level boost.

The respected AI researcher Ilya Sutskever, who played a role in kicking off the deep learning revolution in 2012 and who served as OpenAI's Chief Scientist until 2024, has declared that the age of scaling in AI is over, and we have now entered an age of fundamental research. Sutskever highlights “inadequate” generalization as a flaw with deep neural networks and has previously called out reliability as an issue. A survey from earlier this year found that 76% of AI experts think it's "unlikely" or "very unlikely" that scaling will lead to AGI.[4]

And of course the signs of the bubble are also signs of trouble for the imminent AGI narrative. Generative AI isn't generating profit. For enterprise customers, it can't do much that's practically useful or financially valuable. Optimistic perceptions of AI capabilities are based on contrived, abstract benchmarks with poor construct validity, not hard evidence about real world applications.[5] Call it the mismeasurement of the decade!

My fear is that EA is going to barrel right into the AI bubble, ignoring these obvious warning signs. I'm surprised how little attention Toby Ord's post has gotten. Ord is respected by all of us in this community and therefore he has a big megaphone. Why aren't people listening? Why aren't they noticing this? What is happening?

It's like EA is a car blazing down the street at racing speeds, blowing through stop signs, running red lights... heading, I don't know where, but probably nowhere good. I don't know what can stop the momentum now, except maybe something on the scale that the macroeconomy of the United States will be shaken.

The best outcome would be for the EA community to deeply reflect and to reevaluate the imminent AGI narrative before the bubble pops; the second-best outcome would be to do this soul-searching afterward. So, I hope people will do that soul-searching, like the post-FTX soul-searching, but even deeper. 99%+ of people in EA had no direct personal connection to FTX. Evidence about what EA leaders knew and when they knew it was (and largely still is) scant, making it hard to draw conclusions, as much as people desperately (and nobly) wanted to find the lesson. Not so for AGI. For AGI, most people have some level of involvement, even if small, in shaping the community's views. Everyone's epistemic practices — not "epistemics", which is a made-up word that isn't used in philosophy — are up for questioning here, even for people who just vaguely think I don't really know anything about that but I'll just trust that the community is probably right.

The science communicator Hank Green has an excellent video from October where he explains some of the epistemology of science and why we should follow Carl Sagan's famous maxim that "extraordinary claims require extraordinary evidence". Hank Green is talking about evidence of intelligent alien life, but what he says applies equally well to intelligent artificial life. When we're encountering something unknown and unprecedented, our observations and measurements should be under a higher level of scrutiny than we accept for ordinary, everyday things. Perversely, the standard of evidence in AGI discourse is the opposite. Arguments and evidence that wouldn't even pass muster as part of an investment thesis are used to forecast the imminent, ultimate end of humanity and the invention of a digital God. What's the base rate of millennialist views being correct? 0.00%?

Watch the video and replace "aliens" with "AGI":

I feel crazy and I must not be the only one. None of this makes any sense. How did a movement that was originally about rigorous empirical evaluation of charity cost-effectiveness become a community where people accept eschatological arguments based on fake graphs and gut intuition? What?? What are you talking about?! Somebody stop this car!

And lest you misunderstand me, when I started my Medium blog back in 2015, my first post was about the world-historical, natural historical importance of the seemingly inevitable advent of AGI and superintelligence. On an older blog that no longer exists, posts on this theme go back even further. What a weird irony I find myself in now. The point is not whether AGI is possible in principle or whether it will eventually be created if science and technology continue making progress — it seems hard to argue otherwise — but that this is not the moment. It's not even close to the moment.

The EA community has a whiff of macho dunk culture at times (so does Twitter, so does life), so I want to be clear that's absolutely not my intention. I'm coming at this from a place of genuine maternal love and concern. What's going on, my babies? How did we get here? What happened to that GiveWell rigour?

Of course, nobody will listen to me now. Maybe when the bubble pops. Maybe. (Probably not.)

This post is not written to convince anyone today. It's written for the future. It's a time capsule for when the bubble pops. When that moment comes, it's an invitation for sober second thought. It's not an answer, but an unanswered question. What happened, you guys?

[1] See "Is the AI Industry in a Bubble?" (November 15, 2025).

[2] In 2023, 2024, and 2025, Turing Award-winning AI researcher Geoffrey Hinton repeated his low-confidence prediction of AGI in 5-20 years, but it might be taking him too literally to say he pushed back his prediction by 2 years.

[3] The median date of AGI has been slipping by 3 years per year. If you update all the way, by 2033, it will have slipped to 2057.[6]

[4] Another AI researcher, Andrej Karpathy, formerly at OpenAI and Stanford but best-known for playing a leading role in developing Tesla's Full Self-Driving software from 2017 to 2022, made a splash by saying that he thought effective "agentic" applications of AI (e.g. computer-using AI systems à la ChatGPT's Agent Mode) were about a decade away, because this implies Karpathy thinks AGI is at least a decade away. I personally didn't find this too surprising or particularly epistemically significant; Karpathy is far from the first, only, or most prominent AI researcher to say something like this. But I think this broke through a lot of people's filter bubbles because Karpathy is someone they listen to, and it surprised them because they aren't used to hearing even a modestly more conservative view than AGI by 2030, plus or minus two years.

[5] Edited on Monday, December 8, 2025 at 12:05pm Eastern to add: I just realized I’ve been lumping in criterion validity with construct validity, but they are two different concepts. Both are important in this context. Both concepts fall under the umbrella of measurement validity.

[6] If you think this meta-induction is ridiculous, you’re right.
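The meta-induction in the footnote on the slipping Metaculus median is plain arithmetic, and a minimal sketch makes its one assumption explicit: that the forecast keeps slipping at a constant rate. The function name and the linear model are illustrative assumptions; the 2033 forecast (as of 2025) and the slip of 3 years per year are the figures given in the post.

```python
def extrapolated_median(forecast_year: int, as_of_year: int,
                        slip_per_year: int, target_year: int) -> int:
    """Naively extend a forecast that slips by a constant amount each calendar year."""
    years_elapsed = target_year - as_of_year
    return forecast_year + slip_per_year * years_elapsed


# Forecast of 2033 made in 2025, slipping 3 years per year, evaluated in 2033.
print(extrapolated_median(2033, 2025, 3, 2033))  # 2057
```

The sketch only reproduces the number; it says nothing about whether a constant slip rate is a sensible model.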

Mjreard @ 2025-12-05T17:53 (+37)

I directionally agree that EAs are overestimating the imminence of AGI and will incur some credibility costs, but the bits of circumstantial evidence you present here don't warrant the confidence you express. 76% of experts saying it's "unlikely" the current paradigm will lead to AGI leaves ample room for a majority thinking there's a 10%+ chance it will, which is more than enough to justify EA efforts here.

And most of what EAs are working on is determining whether we're in that world and what practical steps you can take to safeguard value given what we know. It's premature to declare case closed when the markets and the field are still mostly against you (at the 10% threshold).

I wish EA were a bigger and broader movement such that we could do more hedging, but given that you only have a few hundred people and a few $100m/yr, it's reasonable to stake that on something this potentially important that no one else is doing effective work on.

I would like to bring back more of the pre-ChatGPT disposition where people were more comfortable emphasizing their uncertainty, but standing by the expected value of AI safety work. I'm also open to the idea that that modesty too heavily burdens our ability to have impact in the 10%+ of worlds where it really matters.

Arepo @ 2025-12-06T13:06 (+21)

I agree that the OP is too confident/strongly worded, but IMO this

> which is more than enough to justify EA efforts here.

could be dangerously wrong. As long as AI safety consumes resources that might have counterfactually gone to e.g. nuclear disarmament, stronger international relations, it might well be harmful in expectation.

This is doubly true for warlike AI 'safety' strategies like Aschenbrenner's call to intentionally arms race China, Hendrycks, Schmidt and Wang's call to 'sabotage' countries that cross some ill-defined threshold, and Yudkowsky calling for airstrikes on data centres. I think such 'AI safety' efforts are very likely increasing existential risk.

Mjreard @ 2025-12-07T02:09 (+6)

A 10% chance of transformative AI this decade justifies current EA efforts to make AI go well. That includes the opportunity costs of that money not going to other things in the 90% worlds. Spending money on e.g. nuclear disarmament instead of AI also implies harm in the 10% of worlds where TAI was coming. Just calculating the expected value of each accounts for both of these costs.

It's also important to understand that Hendrycks and Yudkowsky were simply describing/predicting the geopolitical equilibrium that follows from their strategies, not independently advocating for the airstrikes or sabotage. Leopold is a more ambiguous case, but even he says that the race is already the reality, not something he prefers independently. I also think very few "EA" dollars are going to any of these groups/individuals.

Arepo @ 2025-12-09T19:22 (+8)

> A 10% chance of transformative AI this decade justifies current EA efforts to make AI go well.

Not necessarily. It depends on

a) your credence distribution of TAI after this decade,

b) your estimate of annual risk per year of other catastrophes, and

c) your estimate of the comparative longterm cost of other catastrophes.

I don't think it's unreasonable to think, for example, that

- there's a very long tail to when TAI might arrive, given that its prospects of arriving in 2-3 decades are substantially related to its prospects of arriving this decade (e.g. if we scale current models substantially and they still show no signs of becoming TAI, that undermines the case for future scaling getting us there under the same paradigm); or

- the more pessimistic annual risk estimates I talked about in the previous essay of 1-2% per year are correct, and that future civilisations will face sufficiently increased difficulty that a collapse has nearly 50% of the expected cost of extinction

And either of these beliefs (and others) would suggest we're relatively overspending on AI.
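Points (b) and (c) can be made concrete with a rough sketch. It assumes catastrophe risk is independent from year to year, which is a simplification, and it plugs in the 1-2% annual risk and the roughly 50% relative cost mentioned above. The function names are illustrative, and nothing here settles how these figures compare with the stakes of TAI, which also depends on (a).

```python
def cumulative_risk(annual_risk: float, years: int) -> float:
    """Probability of at least one catastrophe over `years`, assuming independent years."""
    return 1 - (1 - annual_risk) ** years


def weighted_stakes(probability: float, relative_cost: float) -> float:
    """Probability times cost, with cost expressed as a fraction of the cost of extinction."""
    return probability * relative_cost


# Hypothetical inputs taken from the figures above: 1-2% per year, ~50% relative cost.
for annual in (0.01, 0.02):
    decade = cumulative_risk(annual, 10)
    print(f"annual {annual:.0%}: decade risk {decade:.1%}, "
          f"weighted stakes {weighted_stakes(decade, 0.5):.1%}")
```

A full comparison would put an equivalent figure for TAI alongside these, and that is exactly where the disagreement lies.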

> It's also important to understand that Hendrycks and Yudkowsky were simply describing/predicting the geopolitical equilibrium that follows from their strategies, not independently advocating for the airstrikes or sabotage.

This is grossly disingenuous. Yudkowsky frames his call for airstrikes as what we 'need' to do, and describes them in the context of the hypothetical 'if I had infinite freedom to write laws'. Hendrycks is slightly less direct in actively calling for it, claiming that it's the default, but the document clearly states the intent of supporting it: 'we outline measures to maintain the conditions for MAIM'.

These aren't the words of people dispassionately observing a phenomenon - they are both clearly trying to bring about the scenarios they describe when the lines they've personally drawn are crossed.

David T @ 2025-12-09T21:50 (+6)

I would add that it's not just extreme proposals to make "AI go well" like Yudkowsky's airstrike that potentially have negative consequences beyond the counterfactual costs of not spending the money on other causes. Even 'pausing AI' through democratically elected legislation enacted as a result of smart and well-reasoned lobbying might be significantly negative in its direct impact, if the sort of 'AI' restricted would have failed to become a malign superintelligence but would have been very helpful to economic growth generally and perhaps medical researchers specifically.

This applies if the imminent AGI hypothesis is false, and probably to an even greater extent if it is true.

(The simplest argument for why it's hard to justify all EA efforts to make AI go well based purely on its neglectedness as a cause is that some EA theories about what is needed for AI to go well directly conflict with others; to justify the course of action one needs to have some confidence not only that AGI is possibly a threat but that the proposed approach to it at least doesn't increase the threat. It is possible that both donations to a "charity" that became a commercial AI accelerationist and donations to lobbyists attempting to pause AI altogether were both mistakes, but it seems implausible that they were both good causes)

David Mathers🔸 @ 2025-12-05T19:31 (+12)

I don't think it's clear, absent further argument, that there has to be a 10% chance of full AGI in the relatively near future to justify the currently high valuations of tech stocks. New, more powerful models could be super-valuable without being able to do all human labour. (For example, if they weren't so useful working alone, but they made human workers in most white collar occupations much more productive.) And you haven't actually provided evidence that most experts think there's a 10% chance current paradigm will lead to AGI. Though the latter point is a bit of a nitpick if 24% of experts think it will, since I agree the latter is likely enough to justify EA money/concern. (Maybe the survey had some don't knows though?).

Yarrow Bouchard 🔸 @ 2025-12-06T00:06 (+8)

Thank you for pointing this out, David. The situation here is asymmetric. Consider the analogy of chess. If computers can’t play chess competently, that is strong evidence against imminent AGI. If computers can play chess competently — as IBM’s Deep Blue could in 1996 — that is not strong evidence for imminent AGI. It’s been about 30 years since Deep Blue and we still don’t have anything close to AGI.

AI investment is similar. The market isn’t pricing in AGI. I’ve looked at every analyst report I can find, and whatever other information I can get my hands on about how AI is being valued. The optimists are expecting AI to be a fairly normal, prosaic extension of computers and the Internet, enabling office workers to manipulate spreadsheets more efficiently, making it easier for consumers to shop online, they foresee social media platforms having chatbots that are somehow popular and profitable, LLMs playing some role in education, and chatbots doing customer support — which seems like one of the two areas, along with coding, where generative AI has some practical usefulness and financial value, although this is a fairly incremental step up from the pre-LLM chatbots and decision trees that were already widely used in customer support.

I haven’t seen AGI mentioned as a serious consideration in any of the stuff I’ve seen from the financial world.

Mjreard @ 2025-12-05T22:01 (+2)

I agree there's logical space for something less than AGI making the investments rational, but I think the gap between that and full AGI is pretty small. Peculiarity of my own world model though, so not something to bank on.

My interpretation of the survey responses is selecting "unlikely" when there are also "not sure" and "very unlikely" options suggests substantial probability (i.e. > 10%) on the part of the respondents who say "unlikely," or "don't know." Reasonable uncertainty is all you need to justify work on something so important if-true and the cited survey seems to provide that.

David Mathers🔸 @ 2025-12-05T23:02 (+2)

People vary a lot in how they interpret terms like "unlikely" or "very unlikely" in % terms, so I think >10% is not all that obvious. But I agree that it is evidence they don't think the whole idea is totally stupid, and that a relatively low probability of near-term AGI is still extremely worth worrying about.

Yarrow Bouchard 🔸 @ 2025-12-06T01:13 (+4)

I should link the survey directly here: https://aaai.org/wp-content/uploads/2025/03/AAAI-2025-PresPanel-Report-FINAL.pdf

The relevant question is described on page 66:

The majority of respondents (76%) assert that “scaling up current AI approaches” to yield AGI is “unlikely” or “very unlikely” to succeed, suggesting doubts about whether current machine learning paradigms are sufficient for achieving general intelligence.

I frequently shorthand this to a belief that LLMs won’t scale to AGI, but the question is actually broader and encompasses all current AI approaches.

Also relevant for this discussion: pages 64 and 65 of the report describe some of the fundamental research challenges that currently exist in AI capabilities. I can’t emphasize the importance of this enough. It is easy to think a problem like AGI is closer to being solved than it really is when you haven’t explored the subproblems involved or the long history of AI researchers trying and failing to solve those subproblems.

In my observation, people in EA greatly overestimate progress on AI capabilities. For example, many people seem to believe that autonomous driving is a solved problem, when this isn’t close to being true. Natural language processing has made leaps and bounds over the last seven years, but the progress in computer vision has been quite anemic by comparison. Many fundamental research problems have seen basically no progress, or very little.

I also think many people in EA overestimate the abilities of LLMs, anthropomorphizing the LLM and interpreting its outputs as evidence of deeper cognition, while also making excuses and hand-waving away the mistakes and failures — which, when it’s possible to do so, are often manually fixed using a lot of human labour by annotators.

I think people in EA need to update on:

- Current AI capabilities being significantly less than they thought (e.g. with regard to autonomous driving and LLMs)

- Progress in AI capabilities being significantly less than they thought, especially outside of natural language processing (e.g. computer vision, reinforcement learning) and especially on fundamental research problems

- The number of fundamental research problems and how thorny they are, how much time, effort, and funding has already been spent on trying to solve them, and how little success has been achieved so far

Jason @ 2025-12-08T12:03 (+4)

> 76% of experts saying it's "unlikely" the current paradigm will lead to AGI leaves ample room for a majority thinking there's a 10%+ chance it will . . . .

> . . . . and the field are still mostly against you (at the 10% threshold).

I agree that the "unlikely" statistic leaves ample room for the majority of the field thinking there is a 10%+ chance, but it does not establish that the majority actually thinks that.
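A toy sketch of why the statistic underdetermines the answer, using entirely made-up respondents. The credence values and the cutoff below which someone answers "unlikely" or "very unlikely" are hypothetical assumptions, not survey data; only the 76% figure comes from the survey.

```python
def share(credences, predicate):
    """Fraction of respondents whose credence satisfies `predicate`."""
    return sum(1 for c in credences if predicate(c)) / len(credences)


# Hypothetical: credences below this are reported as "unlikely" or "very unlikely".
UNLIKELY_CUTOFF = 0.3

# Two hypothetical fields of 100 respondents each.
skeptical_field = [0.02] * 76 + [0.6] * 24
uncertain_field = [0.2] * 76 + [0.6] * 24

for name, field in [("skeptical", skeptical_field), ("uncertain", uncertain_field)]:
    unlikely = share(field, lambda c: c < UNLIKELY_CUTOFF)
    at_least_10 = share(field, lambda c: c >= 0.10)
    print(f"{name}: {unlikely:.0%} say unlikely, {at_least_10:.0%} hold 10%+")
```

Both hypothetical fields reproduce the 76% figure, yet the share holding a 10%+ credence is 24% in one and 100% in the other.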

> I would like to bring back more of the pre-ChatGPT disposition where people were more comfortable emphasizing their uncertainty, but standing by the expected value of AI safety work.

I think there are at least two (potentially overlapping) ways one could take the general concern that @Yarrow Bouchard 🔸 is identifying here. One, if accepted, leads to the substantive conclusion that EA individuals, orgs, and funders shouldn't be nearly as focused on AI because the perceived dangers are just too remote. An alternative framing doesn't necessarily lead there. It goes something like: there has been a significant and worrisome decline in the quality of epistemic practices surrounding AI in EA since the advent of ChatGPT. If that framing, but not the first, is accepted, it leads in my view to a different set of recommended actions.

I flag that since I think the relevant considerations for assessing the alternative framing could be significantly different.

Yarrow Bouchard 🔸 @ 2025-12-08T14:20 (+3)

One need not choose between the two because they both point toward the same path: re-examine claims with greater scrutiny. There is no excuse for the egregious flaws in works like "Situational Awareness" and AI 2027. This is not serious scholarship. To the extent the EA community gets fooled by stuff like this, its reasoning process, and its weighing of evidence, will be severely impaired.

If you get rid of all the low-quality work and retrace all the steps of the argument from the beginning, might the EA community end up in basically the same place all over again, with a similar estimation of AGI risk and a similar allocation of resources toward it? Well, sure, it might. But it might not.

If your views are largely informed by falsehoods and ridiculous claims, half-truths and oversimplifications, greedy reductionism and measurements with little to no construct validity or criterion validity, and, in some cases, a lack of awareness of countervailing ideas or the all-too-eager dismissal of inconvenient evidence, then you simply don’t know what your views would end up being if you started all over again with more rigour and higher standards. The only appropriate response is to clear house. Put the ideas and evidence into a crucible and burn away what doesn’t belong. Then, start from the beginning and see what sort of conclusions can actually be justified with what remains.

A large part of the blame lies at the feet of LessWrong and at the feet of all the people in EA who decided, in some important cases quite early on, to mingle the two communities. LessWrong promotes skepticism and suspicion of academia, mainstream/institutional science, traditional forms of critical thinking and scientific skepticism, journalism, and society at large. At the same time, LessWrong promotes reverence and obsequiousness toward its own community, positioning itself as an alternative authority to replace academia, science, traditional critical thought, journalism, and mainstream culture. Not innocently. LessWrong is obsessed with fringe thinking. The community has created multiple groups that Ozy Brennan describes as "cults". Given how small the LessWrong community is, I’d have to guess that the rate at which the community creates cults must be multiple orders of magnitude higher than the base rate for the general population.

LessWrong is also credulous about racist pseudoscience, and, in the words of a former Head of Communications at the Centre for Effective Altruism, is largely "straight-up racist". One of the admins of LessWrong and co-founders of Lightcone Infrastructure once said, in the context of a discussion about the societal myth that gay people are evil or malicious and a danger to children:

I think... finding out (in the 1950s) that someone maintained many secret homosexual relationships for many years is actually a signal the person is fairly devious, and is both willing and capable of behaving in ways that society has strong norms about not doing.

It obviously isn't true about homosexuals once the norm was lifted, but my guess is that it was at the time accurate to make a directional bayesian update that the person had behaved in actually bad and devious ways.

Such statements make "rationalist" a misnomer. (I was able to partially dissuade him of this nonsense by showing him some of the easily accessible evidence he could have looked up for himself, but the community did not seem to particularly value my intervention.)

I don’t know that the epistemic practices of the EA community can be rescued as long as the EA community remains interpenetrated with LessWrong to a major degree. The purpose of LessWrong is not to teach rationality, but to disable one’s critical faculties until one is willing to accept nonsense. Perhaps it is futile to clamour for better-quality scholarship when such a large undercurrent of the EA community is committed to the idea that normal ideas of what constitutes good scholarship are wrong and that the answers to what constitutes actually good scholarship lie with Eliezer Yudkowsky, an amateur philosopher with no relevant qualifications or achievements in any field, who frequently speaks with absolute confidence and is wrong, who experts often find non-credible, who has said he literally sees himself as the smartest person on Earth, and who rarely admits mistakes (despite making many) or issues corrections. If Yudkowsky is your highest and most revered authority, if you follow him in rejecting academia, institutional science, mainstream philosophy, journalism, normal culture, and so on, then I don’t know what could possibly convince you that the untrue things you believe are untrue, since your fundamental epistemology comes down to whether Yudkowsky says something is true or not, and he’s told you to reject all other sources of truth.

To the extent the EA community is under LessWrong’s spell, it will probably remain systemically irrational forever. Only within the portions of the EA community who have broken that spell, or never come under it in the first place, is there the hope for academic standards, mainstream scientific standards, traditional critical thinking, journalistic fact-checking, culturally evolved wisdom, and so on to take hold. It would be like expecting EA to be rational about politics while 30% of the community is under the spell of QAnon, or to be rational about global health while a large part of the community is under the spell of anti-vaccination pseudoscience. It’s just not gonna happen.

But maybe my root cause analysis is wrong and the EA community can course correct without fundamentally divorcing LessWrong. I don’t know. I hope that, whatever is the root cause, whatever it takes to fix it, the EA community’s current low standards for evidence and argumentation pertaining to AGI risk get raised significantly.

I don’t think it’s a brand new problem, by the way. Around 2016, I was periodically arguing with people about AI on the main EA group on Facebook. One of my points of contention was that MIRI’s focus on symbolic AI was a dead-end and that machine learning had empirically produced much better results, and was where the AI field was now focused. (MIRI took a long time before they finally hired their first researcher to focus on machine learning.) I didn’t have any more success convincing people about that back then than I’ve been having lately with my current points of contention.

I agree though that the situation seems to have gotten much worse in recent years, and ChatGPT (and LLMs in general) probably had a lot to do with that.

David Mathers🔸 @ 2025-12-08T15:37 (+16)

I don't think EA's AI focus is a product only of interaction with Less Wrong (not claiming you said otherwise), but I do think people outside the Less Wrong bubble tend to be less confident AGI is imminent, and in that sense less "cautious".

I think EA's AI focus is largely a product of the fact that Nick Bostrom knew Will and Toby when they were founding EA, and was a big influence on their ideas. Of course, to some degree this might be indirect influence from Yudkowsky since he was always interacting with Nick Bostrom, but it's hard to know in what direction the influence flowed here. I was around in Oxford during the embryonic stages of EA, and while I was not involved beyond being a GWWC member, I did have the odd conversation with people who were involved, and my memory is that even then, people were talking about X-risk from AI as a serious contender for the best cause area, as early as at least 2014, and maybe a bit before that. They (EDIT: by "they" here I mean "some people in Oxford, I don't remember who"; I don't know when Will and Toby specifically first interacted with LW folk) were involved in discussion with LW people, but I don't think they got the idea FROM LW. Seems more likely to me they got it from Bostrom and the Future of Humanity Institute, who were just down the corridor.

What is true is that Oxford people have genuinely expressed much more caution about timelines. E.g., in What We Owe the Future, published as late as 2022, Will is still talking about how AGI might be more than 50 years away, but also "it might come soon-within the next fifty or even twenty years." (If you're wondering what evidence he cites, it's the Cotra bioanchors report.) His discussion primarily emphasizes uncertainty about exactly when AGI will arrive, and how we can't be confident it's not close. He cites a figure from an Open Phil report guessing an 8% chance of AGI by 2036*. I know your view is that this is all wildly wrong still, but it's quite different from what many (not all) Less Wrong people say, who tend to regard 20 years as a long timeline. (Maybe Will has updated to shorter timelines since, of course.)

I think there is something of a divide between people who believe strongly in a particular set of LessWrong-derived ideas about the imminence of AGI, and another set of people who are mainly driven by something like "we should take positive EV bets with a small chance of paying off, and doing AI stuff just in case AGI arrives soon". Defending the point about taking positive EV bets with only a small chance of pay-off is what a huge amount of the academic work on Longtermism at the GPI in Oxford was about. (This stuff definitely has been subjected to severe levels of peer-reviewed scrutiny, as it keeps showing up in top philosophy journals with rejection rates of like, 90%.)

*This is more evidence people were prepared to bet big on AI risk long before the idea that AGI is actually imminent became as popular as it is now. I think people just rejected the idea that useful work could only be done when AGI was definitely near, and we had near-AGI models.

Linch @ 2025-12-08T19:21 (+12)

eh, I think the main reason EAs believe AGI stuff is reasonably likely is because this opinion is correct, given the best available evidence[1].

Having a genealogical explanation here is sort of answering the question on the wrong meta-level, like giving a historical explanation for "why do evolutionists believe in genes" or telling a touching story about somebody's pet pig for "why do EAs care more about farmed animal welfare than tree welfare."

Or upon hearing "why does Google use ads instead of subscriptions?" answering with the history of their DoubleClick acquisition. That history is real, but it's downstream of the actual explanation: the economics of internet search heavily favor ad-supported models regardless of the specific path any company took. The genealogy is epiphenomenal.

The historical explanations are thus mildly interesting but they conflate the level of why.

EDIT: man I'm worried my comment will be read as a soldier-mindset thing that only makes sense if you presume the "AGI likely soon" is already correct. Which does not improve on the conversation. Please only upvote it iff a version of you that's neutral on the object-level question would also upvote this comment.

- ^

Which is a different claim from whether it's ultimately correct. Reality is hard.

David Mathers🔸 @ 2025-12-08T19:32 (+3)

Yeah, it's a fair objection that even answering the "why" question like I did presupposes that EAs are wrong, or at least, merely luckily right. (I think this is a matter of degree, and that EAs overrated the imminence of AGI and the risk of takeover on average, but it's still at least reasonable to believe AI safety and governance work can have very high expected value for roughly the reasons EAs do.) But I was responding to Yarrow, who does think that EAs are just totally wrong, so I guess really I was saying that "conditional on a sociological explanation being appropriate, I don't think it's as LW-driven as Yarrow thinks", although LW is undoubtedly important.

Linch @ 2025-12-08T21:01 (+4)

presupposes that EAs are wrong, or at least, merely luckily right

Right, to be clear I'm far from certain that the stereotypical "EA view" is right here.

I guess really I was saying that "conditional on a sociological explanation being appropriate, I don't think it's as LW-driven as Yarrow thinks", although LW is undoubtedly important.

Sure that makes a lot of sense! I was mostly just using your comment to riff on a related concept.

I think reality is often complicated and confusing, and it's hard to separate out contingency vs inevitable stories for why people believe what they believe. But I think the correct view is that EAs' belief on AGI probability and risk (within an order of magnitude or so) is mostly not contingent (as of the year 2025) even if it turns out to be ultimately wrong.

The Google ads example was the best example I could think of to illustrate this. I'm far from certain that Google's decision to use ads was actually the best source of long-term revenue (never mind being morally good lol). But it still seemed like the internet as we understand it meant it was implausible that Google ads was counterfactually due to their specific acquisitions.

Similarly, even if EAs ignored AI before for some reason, and never interacted with LW or Bostrom, it's implausible that, as of 2025, people who are concerned with ambitious, large-scale altruistic impact (and have other epistemic, cultural, and maybe demographic properties characteristic of the movement) would not think of AI as a big deal. AI is just a big thing in the world that's growing fast. Anybody capable of reading graphs can see that.

That said, specific micro-level beliefs (and maybe macro ones) within EA and AI risk might be different without influence from either LW or the Oxford crowd. For example there might be a stronger accelerationist arm. Alternatively, people might be more queasy with the closeness with the major AI companies, and there will be a stronger and more well-funded contingent of folks interested in public messaging on pausing or stopping AI. And in general if the movement didn't "wake up" to AI concerns at all pre-ChatGPT I think we'd be in a more confused spot.

Yarrow Bouchard 🔸 @ 2025-12-08T23:49 (+2)

How many angels can dance on the head of a pin? An infinite number because angels have no spatial extension? Or maybe if we assume angels have a diameter of ~1 nanometre plus ~1 additional nanometre of diameter for clearance for dancing we can come up with a ballpark figure? Or, wait, are angels closer to human-sized? When bugs die do they turn into angels? What about bacteria? Can bacteria dance? Are angels beings who were formerly mortal, or were they "born" angels?[1]

AI is just a big thing in the world that's growing fast. Anybody capable of reading graphs can see that.

Well, some of the graphs are just made-up, like those in "Situational Awareness", and some of the graphs are woefully misinterpreted to be about AGI when they’re clearly not, like the famous METR time horizon graph.[2] I imagine that a non-trivial amount of EA misjudgment around AGI results from a failure to correctly read and interpret graphs.

And, of course, when people like titotal examine the math behind some of these graphs, like those in AI 2027, they are sometimes found to be riddled with major mistakes.

What I said elsewhere about AGI discourse in general is true about graphs in particular: the scientifically defensible claims are generally quite narrow, caveated, and conservative. The claims that are broad, unqualified, and bold are generally not scientifically defensible. People at METR themselves caveat the time horizons graph and note its narrow scope (I cited examples of this elsewhere in the comments on this post). Conversely, graphs that attempt to make a broad, unqualified, bold claim about AGI tend to be complete nonsense.

Out of curiosity, roughly what probability would you assign to there being an AI financial bubble that pops sometime within the next five years or so? If there is an AI bubble and if it popped, how would that affect your beliefs around near-term AGI?

- ^

How is correctness physically instantiated in space and time and how does it physically cause physical events in the world, such as speaking, writing, brain activity, and so on? Is this an important question to ask in this context? Do we need to get into this?

You can take an epistemic practice in EA such as "thinking that Leopold Aschenbrenner's graphs are correct" and ask about the historical origin of that practice without making a judgement about whether the practice is good or bad, right or wrong. You can ask the question in a form like, "How did people in EA come to accept graphs like those in 'Situational Awareness' as evidence?" If you want to frame it positively, you could ask the question as something like, "How did people in EA learn to accept graphs like these as evidence?" If you want to frame it negatively, you could ask, "How did people in EA not learn not to accept graphs like these as evidence?" And of course you can frame it neutrally.

The historical explanation is a separate question from the evaluation of correctness/incorrectness and the two don't conflict with each other. By analogy, you can ask, "How did Laverne come to believe in evolution?" And you could answer, "Because it's the correct view," which would be right, in a sense, if a bit obtuse, or you could answer, "Because she learned about evolution in her biology classes in high school and college", which would also be right, and which would more directly answer the question. So, a historical explanation does not necessarily imply that a view is wrong. Maybe in some contexts it insinuates it, but both kinds of answers can be true.

But this whole diversion has been unnecessary.

- ^

Do you know a source that formally makes the argument that the METR graph is about AGI? I am trying to pin down the series of logical steps that people are using to get from that graph to AGI. I would like to spell out why I think this inference is wrong, but first it would be helpful to see someone spell out the inference they’re making.

Denkenberger🔸 @ 2025-12-20T08:06 (+2)

Yeah, and there are lots of influences. I got into X risk in large part due to Ray Kurzweil's The Age of Spiritual Machines (1999) as it said "My own view is that a planet approaching its pivotal century of computational growth - as the Earth is today - has a better than even chance of making it through. But then I have always been accused of being an optimist."

AïdaLahlou @ 2025-12-07T09:22 (+3)

I think the question is not 'whether there should be EA efforts and attention devoted to AGI' but whether the scale of these efforts is justified - and more importantly, how do we reach that conclusion.

You say no one else is doing effective work on AI and AGI preparedness but what I see in the world suggests the opposite. Given the amount of attention and investment of individuals, states, universities, etc in AI, statistically there's bound to be a lot of excellent people working on it, and that number is set to increase as AI becomes even more mainstream.

Teenagers nowadays have bought in to the idea that 'AI is the future' and go to study it at university, because working in AI is fashionable and well-paid. This is regardless of whether they've had deep exposure to Effective Altruism. If EA wanted this issue to become mainstream, well... it has undoubtedly succeeded. I would tend to agree that at this moment in time, a redistribution of resources and 'hype' towards other issues would be justified and welcome (but again, crucially, we have to agree on a transparent method to decide whether this is the case, as OP I think importantly calls for).

Yarrow Bouchard 🔸 @ 2025-12-06T00:48 (+1)

What percentage chance would you put on an imminent alien invasion and what amount of resources would you say is rational to allocate for defending against it? The astrophysicist Avi Loeb at Harvard is warning that there is a 30-40% chance interstellar alien probes have entered our solar system and pose a threat to human civilization. (This is discussed at length in the Hank Green video I linked and embedded in the post.)

It’s possible we should start investing in R&D now that can defend against advanced autonomous space-based technologies that might be used by a hostile alien intelligence. Even if you think there’s only a 1% chance of this happening, it justifies some investment.

And most of what EAs are working on is determining whether we're in that world

As I see it, this isn’t happening, or just barely. Everything flows from the belief that AGI is imminent, or at least that there’s a very significant, very realistic chance (10%+) that it’s imminent, and whether that’s true or not is barely ever questioned.

Extraordinary claims require extraordinary evidence. Most of the evidence cited by the EA community is akin to pseudoscience — Leopold Aschenbrenner’s "Situational Awareness" fabricates graphs and data; AI 2027 is a complete fabrication and the authors openly admit that, but it’s not foregrounded enough in the presentation such that it’s misleading. Most stuff is just people reasoning philosophically based on hunches. (And in these philosophical discussions, people in EA even invent their own philosophical terms like "epistemics" and "truth-seeking" that have no agreed-upon definition that anyone has written down — and which don’t come from academic philosophy or any other academic field.)

It’s very easy to make science come to whatever conclusion you want when you can make up data or treat personal subjective guesses plucked from thin air as data. Very little EA "research" on AGI would clear the standards for publication in a reputable peer-reviewed journal, and the research that would clear that standard (and has occasionally passed it, in fact) tends to make much more narrow, conservative, caveated claims than the beliefs that people in EA actually hold and act on. The claims that people in EA are using to guide the movement are not scientifically defensible. If they were, they would be publishable.

There is a long history of an anti-scientific undercurrent in the EA community. People in EA are frequently disdainful of scientific expertise. Eliezer Yudkowsky and Nate Soares seem to call for the rejection of the "whole field of science" in their new book, which is a theme Yudkowsky has promoted in his writing for many years.

The overarching theme of my critique is that the EA approach to the near-term AGI question is unscientific and anti-scientific. The treatment of the question of whether it’s possible, realistic, or likely in the first place is unscientific and anti-scientific. It isn’t an easy out to invoke the precautionary principle because the discourse/"research" on what to do to prepare for AGI is also unscientific and anti-scientific. In some cases, it seems incoherent.

If AGI will require new technology and new science, and we don’t yet know what that technology or science is, then it’s highly suspect to claim that we can do something meaningful to prepare for AGI now. Preparation depends on specific assumptions about the unknown science and technology that can’t be predicted in advance. The number of possibilities is far too large to prepare for them all, and most of them we probably can’t even imagine.

Mjreard @ 2025-12-06T01:12 (+15)

Your picture of EA work on AGI preparation is inaccurate to the extent that I don't think you made a serious effort to understand the space you're criticizing. Most of the work looks like METR benchmarking, model card/RSP policy (companies should test new models for dangerous capabilities and propose mitigations/make safety cases), mech interp, compute monitoring/export controls research, and trying to test for undesirable behavior in current models.

Other people do make forecasts that rely on philosophical priors, but those forecasts are extrapolating and responding to the evidence being generated. You're welcome to argue that their priors are wrong or that they're overconfident, but comparing this to preparing for an alien invasion based on Oumuamua is bad faith. We understand the physics of space travel well enough to confidently put a very low prior on alien invasion. One thing basically everyone in the AI debate agrees on is that we do not understand where the limits of progress are as data reflecting continued progress continues to flow.

Richard Y Chappell🔸 @ 2025-12-06T16:22 (+26)

The AI bubble popping would be a strong signal that this [capabilities] optimism has been misplaced.

Are you presupposing that good practical reasoning involves (i) trying to picture the most-likely future, and then (ii) doing what would be best in that event (while ignoring other credible possibilities, no matter their higher stakes)?

It would be interesting to read a post where someone tries to explicitly argue for a general principle of ignoring credible risks in order to slightly improve most-probable outcomes. Seems like such a principle would be pretty disastrous if applied universally (e.g. to aviation safety, nuclear safety, and all kinds of insurance), but maybe there's more to be said? But it's a bit frustrating to read takes where people just seem to presuppose some such anti-precautionary principle in the background.

To be clear: I take the decision-relevant background question here to not be the binary question Is AGI imminent? but rather something more degreed, like Is there a sufficient chance of imminent AGI to warrant precautionary measures? And I don't see how the AI bubble popping would imply that answering 'Yes' to the latter was in any way unreasonable. (A bit like how you can't say an election forecaster did a bad job just because their 40% candidate won rather than the one they gave a 60% chance to. Sometimes seeing the actual outcome seems to make people worse at evaluating others' forecasts.)
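The forecaster analogy can be made concrete with a small Bayesian sketch. Everything here is an illustrative assumption rather than anything from the thread: two hypothetical forecasters, A (who gave the eventual winner 40%) and B (who gave the eventual winner 60%), and an equal prior that either one has the better model.

```python
def posterior_for_a(prior_a: float, likelihood_a: float, likelihood_b: float) -> float:
    """Posterior that forecaster A has the better model after one observed outcome.

    likelihood_a / likelihood_b are the probabilities that A and B each
    assigned to the outcome that actually happened (hypothetical numbers).
    """
    weighted_a = prior_a * likelihood_a
    weighted_b = (1 - prior_a) * likelihood_b
    return weighted_a / (weighted_a + weighted_b)


# A gave the winner 40%, B gave the winner 60%, and we start out undecided.
posterior = posterior_for_a(prior_a=0.5, likelihood_a=0.4, likelihood_b=0.6)
print(f"Credence that A is the better forecaster: {posterior:.2f}")
```

Under these assumptions, a single surprising result only moves credence in forecaster A from 0.5 to 0.4, which is one way of seeing why one outcome is weak evidence about the quality of a probabilistic forecast.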

Some supporters of AI Safety may overestimate the imminence of AGI. It's not clear to me how much of a problem that is? (Many people overestimate risks from climate change. That seems important to correct if it leads them to, e.g., anti-natalism, or to misallocate their resources. But if it just leads them to pollute less, then it doesn't seem so bad, and I'd be inclined to worry more about climate change denialism. Similarly, I think, for AI risk.) There are a lot more people who persist in dismissing AI risk in a way that strikes me as outrageously reckless and unreasonable, and so that seems by far the more important epistemic error to guard against?

That said, I'd like to see more people with conflicting views about AGI imminence arrange public bets on the topic. (Better calibration efforts are welcome. I'm just very dubious of the OP's apparent assumption that losing such a bet ought to trigger deep "soul-searching". It's just not that easy to resolve deep disagreements about what priors / epistemic practices are reasonable.)

Yarrow Bouchard 🔸 @ 2025-12-06T21:35 (+8)

Are you presupposing that good practical reasoning involves (i) trying to picture the most-likely future, and then (ii) doing what would be best in that event (while ignoring other credible possibilities, no matter their higher stakes)?

No, of course not.

I have written about this at length before, on multiple occasions (e.g. here and here, to give just two examples). I don’t expect everyone who reads one of my posts for the first time to know all that context and background — why would they? — but, also, the amount of context and background I have to re-explain every time I make a new post is already high because if I don’t, people will just raise the obvious objections I didn’t already anticipate and respond to in the post.

But, in short: no.

I'm just very dubious of the OP's apparent assumption that losing such a bet ought to trigger deep "soul-searching". It's just not that easy to resolve deep disagreements about what priors / epistemic practices are reasonable.

I agree, but I didn’t say the AI bubble popping should settle the matter, only that I hoped it would motivate people to revisit the topic of near-term AGI with more open-mindedness and curiosity, and much less hostility toward people with dissenting opinions, given that there are already clear, strong objections — and some quite prominently made, as in the case of Toby Ord’s post on RL scaling — to the majority view of the EA community that seem to have mostly escaped serious consideration.

You don’t need an external economic event to see that the made-up graphs in "Situational Awareness" are ridiculous or that AI 2027 could not rationally convince anyone of anything who is not already bought-in to the idea of near-term AGI for other reasons not discussed in AI 2027. And so on. And if the EA community hasn’t noticed these glaring problems, what else hasn’t it noticed?

These are examples that anyone can (hopefully) easily understand with a few minutes of consideration. Anyone can click on one of the "Situational Awareness" graphs and very quickly see that the numbers and lines are just made-up, or that the y-axis has an ill-defined unit of measurement (“effective compute”, which is relative to the tasks/problems compute is used for) or no unit of measurement (just “orders of magnitude”, but orders of magnitude of what?) and also no numbers. Plus other ridiculous features, such as claiming that GPT-4 is an AGI.

With AI 2027, it takes more like 10-20 minutes to see that the whole thing is just based on a few guys’ gut intuitions and nothing else. There are other glaring problems in EA discourse around AGI that take more time to explain, such as objections around benchmark construct validity or criterion validity. Even in cases where errors are clear, straightforward, objective, and relatively quick and simple to explain (see below), people often just ignore it when someone points them out. More complex or subtle errors will probably never be considered, even if they are consequential.

The EA community doesn’t have any analogue of peer review — or it just barely does — where people play the role of rigorously scrutinizing work to catch errors and make sure it meets a certain quality threshold. Some people in the community (probably a minority, but a vocal and aggressive minority) are disdainful of academic science in general and peer review in particular, and don’t think peer review or an analogue of it would actually be helpful. This makes things a little more difficult.

I recently caught two methodological errors in a survey question asked by the Forecasting Research Institute. Pointing them out was an absolutely thankless task and was deeply unpleasant. I got dismissed and downvoted, and if not for titotal’s intervention one of the errors probably never would have gotten fixed. This is very discouraging.

I’m empathetic to the fact that producing research or opinion writing and getting criticized to death also feels deeply unpleasant and thankless, and I’m not entirely sure on the nuances of how to make both sides of the coin feel rewarded rather than punished, but surely there must be a way. I’ve seen it work out well before (and it’s not like this is a new problem no one has dealt with before).

The FRI survey is one example, but one of many. In my observation, people in the EA community are not receptive to the sort of scrutiny that is commonplace in academic contexts. This could be anything from correcting someone on a misunderstanding of the definitions of technical terms used in machine learning to pointing out that Waymo vehicles still have a human in the loop (Waymo calls it "fleet response"). The community pats itself on the back for "loving criticism". I don’t think anybody really loves criticism, or only rarely, and maybe the best we can hope for is to begrudgingly accept criticism. But that involves setting up a social and maybe even institutional process of criticism that currently doesn’t exist in the EA community.

When I say "not receptive", I don’t just mean that people hear the scrutiny and just disagree — that’s not inherently problematic, and could be what being receptive to scrutiny looks like — I mean that, for example, they downvote posts/comments and engage in personal insults or accusations (e.g. explicit accusations of "bad faith", of which there is one in the comments on this very post), or other hostile behaviour that discourages the scrutiny. Only my masochism allows me to continue posting and commenting on the EA Forum. I honestly don’t know if I have the stomach to do this long-term. It's probably a bad idea to try.

The Unjournal seems like it could be a really promising project in the area of scrutiny and sober second thought. I love the idea of commissioning outside experts to review EA research. I think for organizations with the money to pay for this, this should be the default.

Yarrow Bouchard 🔸 @ 2025-12-07T20:31 (+6)

I’ll say just a little bit more on the topic of the precautionary principle for now. I have a complex multi-part argument on this, which will take some explaining that I won’t try to do here. I have covered a lot of this in some previous posts and comments. The main three points I’d make in relation to the precautionary principle and AGI risk are:

- Near-term AGI is highly unlikely, much less than a 0.05% chance in the next decade

- We don’t have enough knowledge of how AGI will be built to usefully prepare now

- As knowledge of how to build AGI is gained, investment into preparing for AGI becomes vastly more useful, such that the benefits of investing resources into preparation at higher levels of knowledge totally overwhelm the benefits of investing resources at lower levels of knowledge

Linch @ 2025-12-08T08:30 (+5)

- Near-term AGI is highly unlikely, much less than a 0.05% chance in the next decade.

Is this something you're willing to bet on?

Yarrow Bouchard 🔸 @ 2025-12-08T08:38 (+6)

In principle, of course, but how? There are various practical obstacles such as:

- Are such bets legal?

- How do you compel people to pay up?

- Why would someone on the other side of the bet want to take it?

- I don’t have spare money to be throwing at Internet stunts where there’s a decent chance that, e.g. someone will just abscond with my money and I’ll have no recourse (or at least nothing cost-effective)

If it’s a bet that takes a form where if AGI isn’t invented by January 1, 2036, people have to pay me a bunch of money (and vice versa), of course I’ll accept such bets gladly in large sums.

I would also be willing to take bets of that form for good intermediate proxies for AGI, which would take a bit of effort to figure out, but that seems doable. The harder part is figuring out how to actually structure the bet and ensure payment (if this is even legal in the first place).

From my perspective, it’s free money, and I’ll gladly take free money (at least from someone wealthy enough to have money to spare — I would feel bad taking it from someone who isn’t financially secure). But even though similar bets have been made before, people still don’t have good solutions to the practical obstacles.

I wouldn’t want to accept an arrangement that would be financially irrational (or illegal, or not legally enforceable), though, and that would amount to essentially burning money to prove a point. That would be silly, I don’t have that kind of money to burn.

Jason @ 2025-12-08T15:22 (+4)

Also, if I were on the low probability end of a bet, I'd be more worried about the risk of measurement or adjudicator error where measuring the outcome isn't entirely clear cut. Maybe a ruleset could be devised that is so objective and so well captures whether AGI exists that this concern isn't applicable. But if there's an adjudication/measurement error risk of (say) 2 percent and the error is equally likely on either side, it's much more salient to someone betting on (say) under 1 percent odds.
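A rough sketch of the arithmetic behind this worry, under one possible reading of "equally likely on either side": the adjudicator flips the true outcome with the same probability whichever way it actually went. The function name and the specific numbers (0.5% true odds, 2% flip rate) are illustrative assumptions.

```python
def resolved_yes_probability(true_p: float, flip_rate: float) -> float:
    """Chance the bet is adjudicated as "yes", given a symmetric flip rate.

    A "yes" ruling happens either when the event truly occurs and is judged
    correctly, or when it does not occur and is judged incorrectly.
    """
    return true_p * (1 - flip_rate) + (1 - true_p) * flip_rate


# Compare a long-odds bettor (0.5%) with an even-odds bettor (50%).
for true_p in (0.005, 0.5):
    p_yes = resolved_yes_probability(true_p, flip_rate=0.02)
    print(f"true p = {true_p:.3f} -> adjudicated 'yes' with p = {p_yes:.4f}")
```

With these numbers, the long-odds bettor faces roughly a 2.5% chance of a "yes" ruling, about five times their stated probability, while the even-odds bettor's exposure is unchanged at 50%.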

Jason @ 2025-12-07T13:16 (+4)

Some supporters of AI Safety may overestimate the imminence of AGI. It's not clear to me how much of a problem that is?

It seems plausible that there could be significant adverse effects on AI Safety itself. There's been an increasing awareness of the importance of policy solutions, whose theory of impact requires support outside the AI Safety community. I think there's a risk that AI Safety is becoming linked in the minds of third parties with a belief in AGI imminence in a way that will seriously if not irrevocably damage the former's credibility in the event of a bubble / crash.

One might think that publicly embracing imminence is worth the risk, of course. For example, policymakers are less likely to endorse strong action for anything that is expected to have consequences many decades in the future. But being perceived as crying wolf if a bubble pops is likely to have some consequences.

AïdaLahlou @ 2025-12-07T09:09 (+13)

1) Regardless of who is right about when AGI might be around (and bear in mind that we still have no proper definition for this), OP is right to call for more peer-reviewed scrutiny from people who are outsiders to both EA and AI.

This is just healthy, and regardless of whether this peer review reaches the same or different conclusions, NOT doing it automatically provokes legitimate fears that the EA movement is biased because so many of its members have personal (and financial) stakes in AI.

See this point of view by Shazeda Ahmed (https://overthinkpodcast.com/episode-101-transcript). She's an information scholar who has looked at AI and its links with EA, and one of the critics of the lack of a counter-narrative.

I, for one, will tend to be skeptical of conclusions reached by a small pool of similar (demographically, economically, but also in the way they approach an issue) people as I will feel like there was a missed opportunity for true debate and different perspectives.

I take the point that these are technical discussions and that this makes it difficult to involve the general public in this debate, but not doing so creates the appearance (and often, more worryingly, the reality) of bias.

This can harm the EA movement as a whole (from my perspective it already does).

I'd love to see a more vocal and organised opposition that is empowered, respected, and funded to genuinely test assumptions.

2) Studying and devoting resources to preparing the world for AI technology doesn't seem like a bad idea given:

Low probability × massive stakes still justifies large resource allocation.

But, as OP seems to suggest, it becomes an issue when that focus is so prevalent that other issues that are just as important / likely are neglected because of it. It seems that EA's focus on rationality and 'counterfactuality' means that they should encourage people to work in fields that are truly neglected.

But can we really say that AI is still neglected given the massive outpourings of both private and public money into the sector? It is now very fashionable to work in AI, and a widespread belief is that doing so warrants a comfortable salary. Can we say the same thing about, say, the threat of nuclear annihilation, or biosecurity risk, or climate adaptation?

3) In response to the argument that 'even a false alarm would still produce valuable governance infrastructure': Yes, but at what cost? I don't see much discussion on whether all those resources would be better spent elsewhere.

David Mathers🔸 @ 2025-12-08T20:32 (+5)

Working on AI isn't the same as doing EA work on AI to reduce X-risk. Most people working in AI are just trying to make the AI more capable and reliable. There probably is a case for saying that "more reliable" is actually EA X-risk work in disguise, even if unintentionally, but it's definitely not obvious this is true.

Denkenberger🔸 @ 2025-12-19T06:35 (+4)

I agree, though I think the large reduction in EA funding for non-AI GCR work is not optimal (but I'm biased with my ALLFED association).

Yarrow Bouchard 🔸 @ 2025-12-19T06:37 (+2)

How much reduction in funding for non-AI global catastrophic risks has there been…?

Denkenberger🔸 @ 2025-12-19T07:09 (+6)

I'm not sure exactly, but ALLFED and GCRI have had to shrink, and ORCG, Good Ancestors, Global Shield, EA Hotel, Institute for Law & AI (name change from Legal Priorities Project), etc. have had to pivot to approximately all AI work. SFF is now almost all AI.

Yarrow Bouchard 🔸 @ 2025-12-19T07:23 (+2)

That’s deeply disturbing.

Vasco Grilo🔸 @ 2025-12-08T14:17 (+10)

Thanks for the good post, Yarrow. I strongly upvoted it. I remain open to bets against short AI timelines, or what they supposedly imply, up to 10 k$. I mostly think about AI as normal technology.

Yarrow Bouchard 🔸 @ 2025-12-08T14:35 (+4)

Thanks, Vasco.

"AI as normal technology" is a catchy phrase, and could be a useful one, but I was so confused and surprised when I dug in deeper to what the "AI as a normal technology" view actually is, as described by the people who coined the term.

I think "normal technology" is a misnomer, because they seem to think some form of transformative AI or AGI will be created sometime over the next several decades, and in the meantime AI will have radical, disruptive economic effects.

They should come up with some other term for their view like "transformative AI slow takeoff" because "normal technology" just seems inaccurate.

Vasco Grilo🔸 @ 2025-12-08T16:09 (+2)

Fair! What they mean is closer to "AI as a more normal technology than many predict". Somewhat relatedly, I liked the post Common Ground between AI 2027 & AI as Normal Technology.

Henry Stanley 🔸 @ 2025-12-06T12:14 (+4)

I might be missing the point, but I'm not sure I see the parallels with FTX.

With FTX, EA orgs and the movement more generally relied on the huge amount of funding that was coming down the pipe from FTX Foundation and SBF. When all that money suddenly vanished, a lot of orgs and orgs-to-be were left in the lurch, and the whole thing caused a huge amount of reputational damage.

With the AI bubble popping... I guess some money that would have been donated by e.g. Anthropic early employees disappears? But it's not clear that that money has been 'earmarked' in the same way the FTX money was; it's much more speculative and I don't think there are orgs relying on receiving it.

OpenPhil presumably will continue to exist, although it might have less money to disburse if a lot of it is tied up in Meta stock (though I don't know that it is). Life will go on. If anything, slowing down AI timelines will probably be a good thing.

I guess I don't see how EA's future success is contingent on AI being a bubble or not. If it turns out to be a bubble, maybe that's good. If it turns out not to be a bubble, we sure as hell will have wanted to be on the vanguard of figuring out what a post-AGI world looks like and how to make it as good for humanity as possible.

Yarrow Bouchard 🔸 @ 2025-12-06T21:44 (+1)

This is directly answered in the post. Edit: Can you explain why you don’t find what is said about this in the post satisfactory?

Henry Stanley 🔸 @ 2025-12-07T13:23 (+2)

You do address the FTX comparison (by pointing out that it won't make funding dry up), that's fair. My bad.

But I do think you're making an accusation of some epistemic impropriety that seems very different from FTX - getting FTX wrong (by not predicting its collapse) was a catastrophe and I don't think it's the same for AI timelines. Am I missing the point?

Yarrow Bouchard 🔸 @ 2025-12-07T14:37 (+4)

The point of the FTX comparison is that, in the wake of the FTX collapse, many people in EA were eager to reflect on the collapse and try to see if there were any lessons for EA. In the wake of the AI bubble popping, people in EA could either choose to reflect in a similar way, or they could choose not to. The two situations are analogous insofar as they are both financial collapses and both could lead to soul-searching. They are disanalogous insofar as the AI bubble popping won’t affect EA funding and won’t associate EA in the public’s mind with financial crimes or a moral scandal.

It’s possible in the wake of the AI bubble popping, nobody in EA will try to learn anything. I fear that possibility. The comparisons I made to Ray Kurzweil and Elon Musk show that it is entirely possible to avoid learning anything, even when you ought to. So, EA could go multiple different ways with this, and I’m just saying what I hope will happen is the sort of reflection that happened post-FTX.

If the AI bubble popping wouldn’t convince you that EA’s focus on near-term AGI has been a mistake — or at least convince you to start seriously reflecting on whether it has been or not — what evidence would convince you?

David T @ 2025-12-09T23:00 (+11)

I think it's also disanalogous in the sense that the EA community's belief in imminent AGI isn't predicated on the commercial success of various VC-funded companies in the way that the EA community's belief in the inherent goodness and amazing epistemics of its community did kind of assume that half its money wasn't coming from an EA-leadership endorsed criminal who rationalized his gambling of other people's money in EA terms...

The AI bubble popping (which many EAs actually want to happen) is somewhat orthogonal to the imminent AGI hypothesis;[1] the internet carried on growing after a bunch of overpromisers who misspent their capital fell by the wayside.[2] I expect that (whilst not converging on superintelligence) the same will happen with chatbots and diffusion models, and there will be plenty of scope for models to be better fit to benchmarks or for researchers to talk bots into creepier responses over the coming years.

The Singularity not happening by 2027 might be a bit of a blow for people that attached great weight to that timeline, but a lot are cautious about doing that or have already given themselves probabilistic getouts. I don't think it's going to happen in 2027 or ever, but if I thought differently I'm not sure 2027 actually being the year some companies failed to convince sovereign wealth funds they were close enough to AGI to deserve a trillion would or even should have that much impact.

I do agree with the wider point that it would be nice if EAs realized that many of their own donation preferences might be shaped at least as much by personal interests and vulnerable to rhetorical tricks as normies'; but I'm not sure that was the main takeaway from FTX.