Announcing RoastMyPost: LLMs Eval Blog Posts and More

By Ozzie Gooen @ 2025-12-17T18:09 (+116)

Today we're releasing RoastMyPost, a new experimental application for blog post evaluation using LLMs. Try it Here.

TLDR

- RoastMyPost is a new QURI application that uses LLMs and code to evaluate blog posts and research documents.

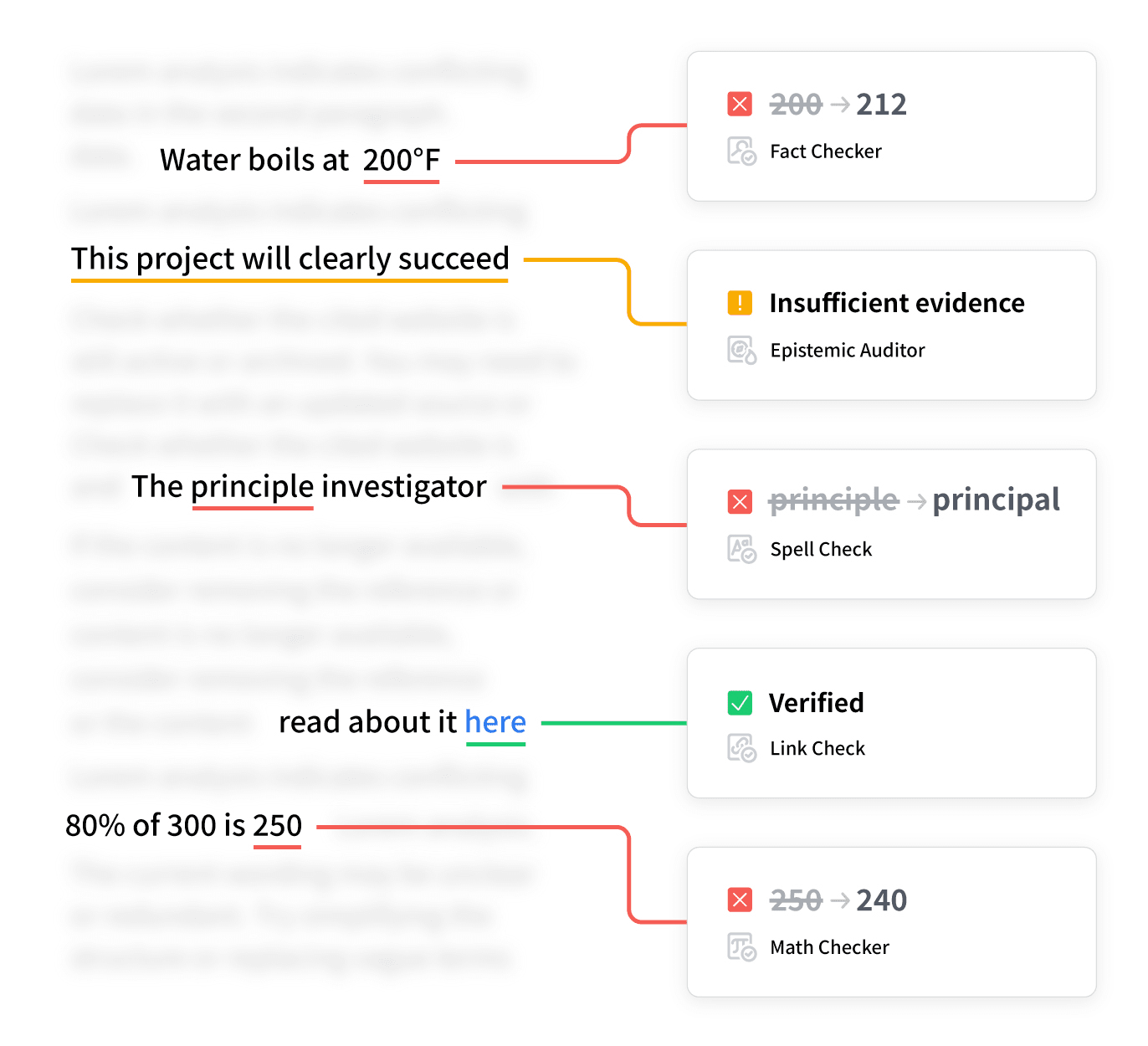

- It uses a variety of LLM evaluators. Most are narrow checks: Fact Check, Spell Check, Fallacy Check, Math Check, Link Check, Forecast Check, and others.

- Optimized for EA & Rationalist content with direct import from EA Forum and LessWrong URLs. Other links use standard web fetching.

- Works best for 200 - ~10,000 word documents with factual assertions and simple formatting. It can also do basic reviewing of Squiggle models. Longer documents and documents in LaTeX will experience slowdowns and errors.

- Open source, free for reasonable use[1]. Public examples are here.

- Experimentation encouraged! We're all figuring out how to best use these tools.

- Overall, we're most interested in using RoastMyPost as an experiment for potential LLM document workflows. The tech is early now, but it's at a good point for experimentation.

How It Works

- Import a document. Submit markdown text or provide the URL of a publicly accessible post.

- Select evaluators to run. A few are system-recommended. Others are custom evaluators submitted by users. Quality varies, so use with appropriate skepticism.

- Wait 1-5 minutes for processing. (potentially more if the site is busy)

- Review the results.

- Add or re-run evaluations as needed.

Screenshots

Reader Page

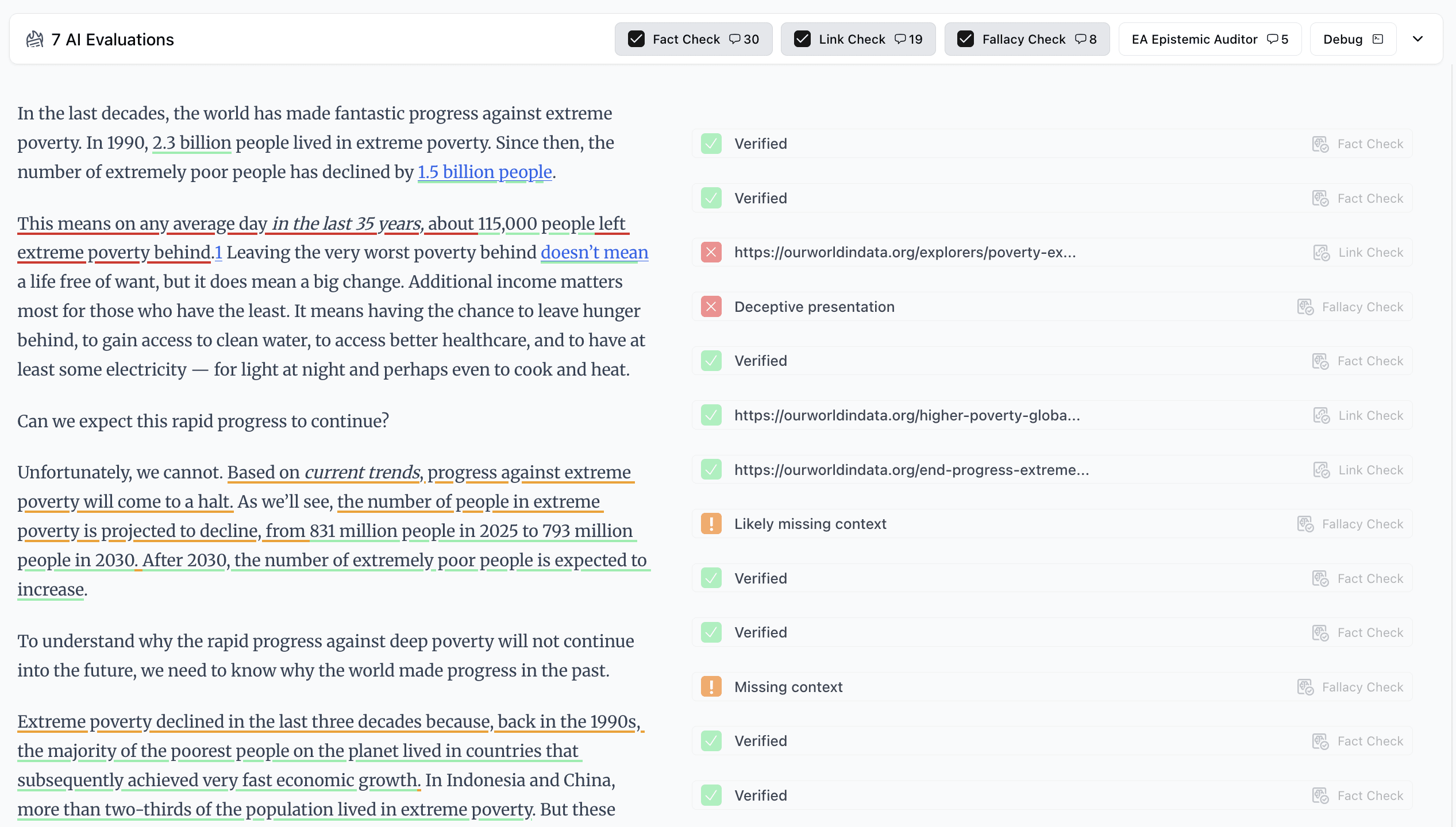

The reader page is the main article view. You can toggle different evaluators, each has a different set of inline comments.

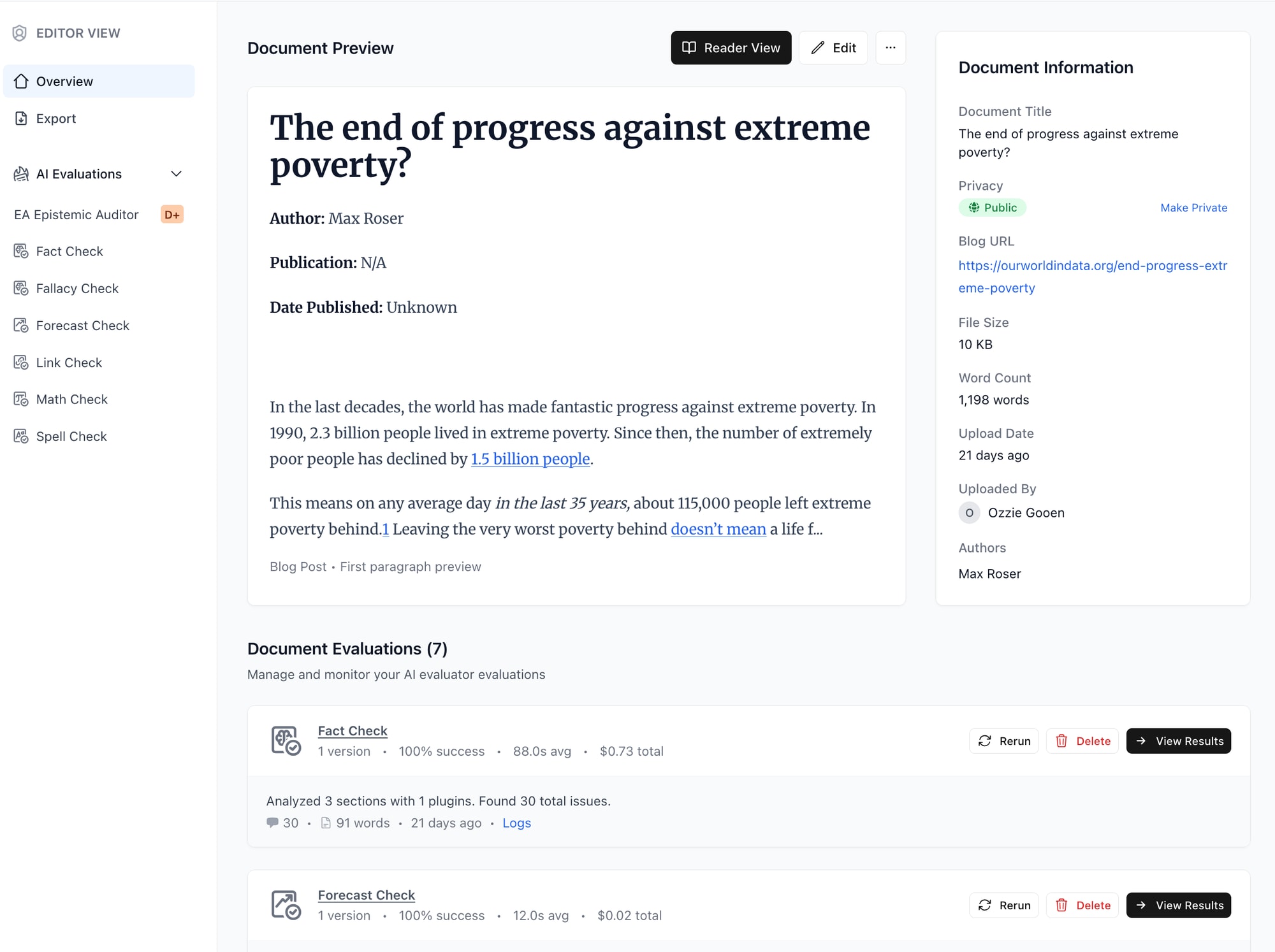

Editor Page

Add/remove/rerun evaluations and make other edits.

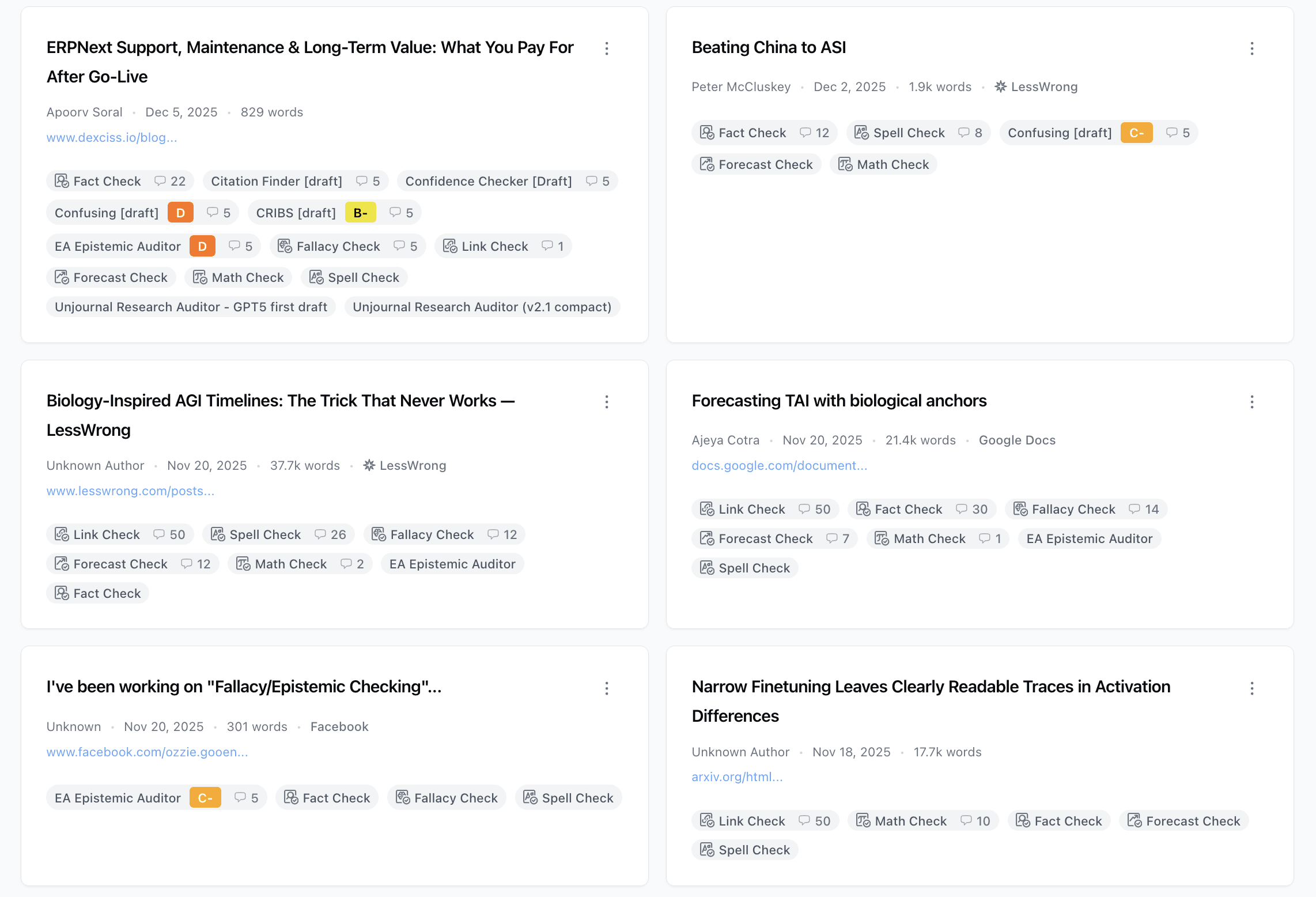

Posts Page

Current AI Agents / Workflows

| Agent Name | Description | Technical Details | Limitations |

| Fact Check | Verifies the accuracy of facts. | Looks up information with Perplexity, then forms a judgement. | Often makes mistakes due to limited context. Often limited to narrow factual disputes. Can quickly get expensive, so we only run a limited number of times per post. |

| Spell Check | Finds spelling and grammar mistakes. | Runs a simple script to decide on UK vs. US spelling, then uses an LLM for spelling/grammar mistakes. | Occasionally flags other sorts of issues, like math mistakes. Often incorrectly flags issues of UK vs. US spellings. |

| Fallacy Check | Flags potential logical fallacies and similar epistemic issues. | Uses a simple list of potential error types, with Sonnet 4.5. Does a final filter and analysis. | Overly critical. Sometimes misses key context. Doesn't do internet searching. Pricey. |

| Forecast Check | Finds binary forecasts mentioned in posts. Flags cases where the result is very different to what the author stated. | Converts them to explicit forecasting questions, then sends this to an LLM forecasting tool. This tool uses Perplexity searches and multiple LLM queries. | Limited to binary percentage forecasts, which are fairly infrequent in blog posts. Has limited context, so sometimes makes mistakes given that. Uses a very simple prompt for forecasting. |

| Math Check | Verifies straightforward math equations. | Attempts to verify math results using Math.js. Falls back to LLM judgement. | Mainly limited to simple arithmetic expressions. Doesn't always trigger where would be best. Few posts have math equations. |

| Link Check | Detects all links in a document. Checks that a corresponding website exists. | Uses HEAD requests for most websites. Uses the API for EA Forum and LessWrong posts, but not other content like Tag or user pages yet. | Many websites block automated requests like this. Also, this doesn't check that the content is relevant, just that a website exists. |

| EA Epistemic Auditor | Provides some high-level analysis and a numeric review. | A simple prompt that takes in the entirety of a blog post. | Doesn't do internet searching. Limited to 5 comments per post. It's fairly rough and could use improvement. |

Is it Good?

RoastMyPost is useful for knowledgeable LLM users who understand current model limitations. Modern LLMs are decent but finicky at feedback and fact-checking. The false positive rate for error detection is significant. This makes it well-suited for flagging issues for human review, but not reliable enough to treat results as publicly authoritative.

Different checks suit different content types. Spell Check and Link Check work across all posts. Fact Check and Fallacy Check perform best on fact-dense, rigorous articles. Use them selectively.

Results will vary substantially between users. Some will find workflows that extract immediate value; others will find the limitations frustrating. Performance will improve as better models become available. We're optimistic about LLM-assisted epistemics long-term. Reaching the full vision requires substantial development time.

Consider this an experimental tool that's ready for competent users to test and build on.

What are Automated Writing Evaluations Good For?

Much of our focus with RoastMyPost is exploring the potential of automated writing evaluations. Here's a list of potential use cases for this technology.

RoastMyPost now is not reliable and mature enough for all of this. Currently it handles draft polishing and basic error detection decently, but use cases requiring high-confidence results (like publication gatekeeping or public trust signaling) remain aspirational.

1. Individual authors

- Draft polishing: Alice is writing a blog post and wants it to be sharper and more reliable. She runs it through RoastMyPost to catch spelling mistakes, factual issues, math errors, and other weaknesses.

- Public trust signaling: George wants readers to (correctly) see his writing as reputable. He runs his drafts through RoastMyPost, which verifies the key claims. He then links to the evaluation in his blog post, similar to Markdown Badges on GitHub or GitLab. (Later, this could become an actual badge.)

2. Research teams

- Publication gatekeeping: Sophie runs a small research organization and wants LLMs in their quality assurance pipeline. Her team uses RoastMyPost to help evaluate posts before publishing.

- LLM-assisted workflows: Samantha uses LLMs to draft fact-heavy reports, which often contain hallucinated links and mathematical errors. She builds a workflow that runs RoastMyPost on the LLM outputs and uses the evaluations to drive automated revisions.

3. Readers

- Pre-flight checks for reading: Maren is a frequent blog reader. Before investing time in a post, they check its public RoastMyPost evaluations to see whether it contains major errors.

- Deeper comprehension and critique: Chase uses RoastMyPost to better understand the content they read. They can see extra details, highlighted assumptions, and called-out logical fallacies, which helps them interpret arguments more critically.

4. Researchers studying LLMs and epistemics

- Model comparison: Julian is a researcher evaluating language models. He runs RoastMyPost on reports produced by several models and compares the resulting evaluations.

- Meta-epistemic insight: Mike is interested in how promising LLMs are for improving researcher epistemics. He browses RoastMyPost evaluations and gets a clearer sense of current strengths and limitations.

Privacy & Data Confidentiality

Users can make public or private documents.

We use a few third-party providers that require access to data. Primarily Anthropic, Perplexity, and Helicone. We don't recommend using RoastMyPost in cases where you want strong guarantees of privacy.

Private information is accessible to our team, who will occasionally review LLM workflows to look for problems and improvements.

Technical Details

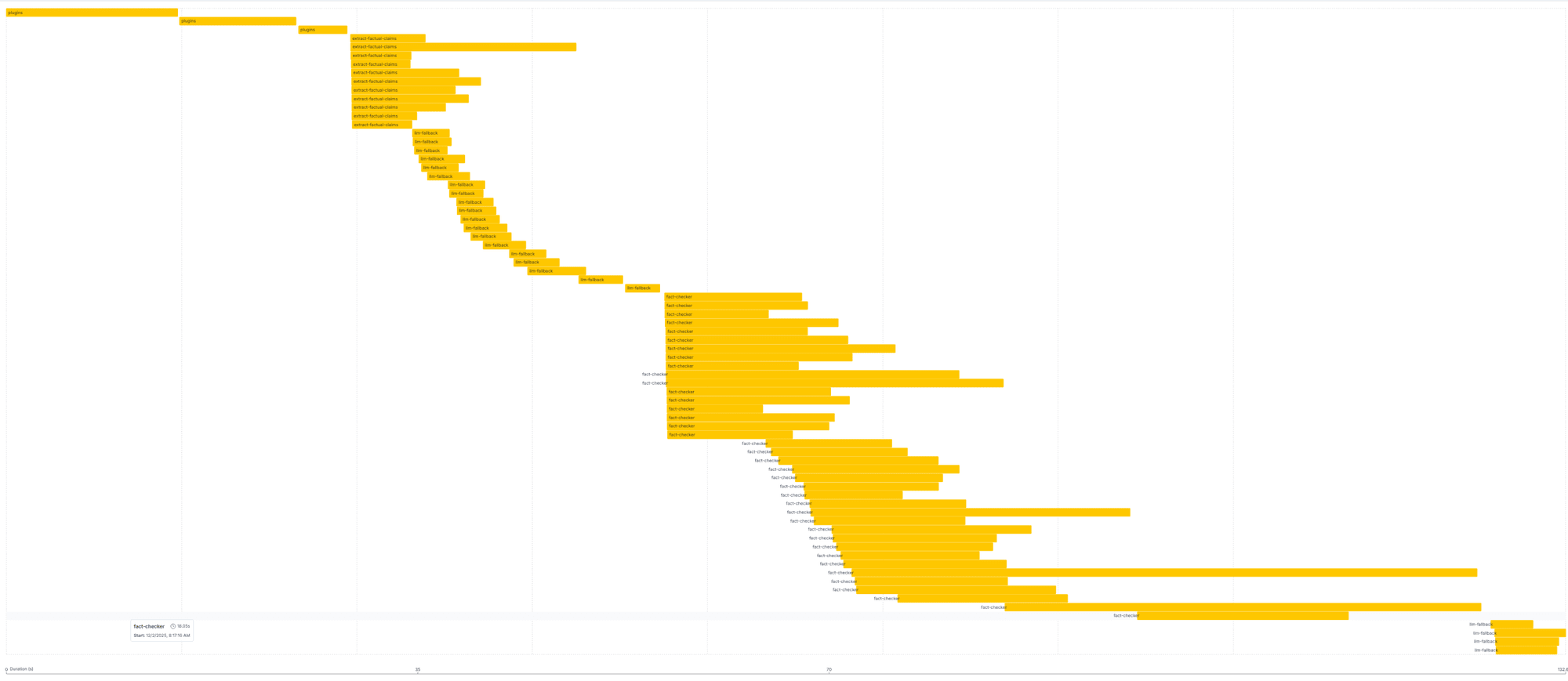

Most RoastMyPost evaluators use simple programmatic workflows. Posts are split into chunks, then verification and checking runs on each chunk individually.

LLM functionality and complex operations are isolated into narrow, independently testable tools with web interfaces. This breaks complex processes into discrete, (partially) verifiable steps.

Almost all LLM calls are to Claude Sonnet 4.5, with the main exception of calls to Perplexity via the OpenRouter API. We track data with Helicone.ai for basic monitoring.

Here you can see fact checking and forecast checking running on one large document. Evaluators run checks in parallel where possible, significantly reducing processing time.

This predefined workflow approach is simple and fast, but lacks some benefits of agentic architectures. We've tested agentic approaches but found them substantially more expensive and slower for marginal gains. The math validation workflow uses a small agent; everything else is direct execution. We'll continue experimenting with agents as models improve.

Building Custom Evaluators

The majority of RoastMyPost's infrastructure is general-purpose, supporting a long tail of potential AI evaluators.

Example evaluator ideas:

- Organization style guide checker - Enforce specific writing conventions, terminology, or formatting requirements

- Domain-specific fact verification - Medical claims, economic data, technical specifications, etc.

- Citation format validator - Check references against specific journal requirements (APA, Chicago, Nature, etc.)

- Argument structure analyzer - Map claims, evidence, and logical connections

- Readability optimizer - Target specific audiences (general public, technical experts, policymakers)

The app includes basic functionality for creating custom evaluators directly in the interface. More sophisticated customization is possible through JavaScript-based external evaluators.

If you're interested in building an evaluator, reach out and we can discuss implementation details.

Try it Out

Visit RoastMyPost.org to evaluate your documents. The platform is free for reasonable use and is being improved.

Submit feedback, bug reports, or custom evaluator proposals via GitHub issues or email me directly.

We're particularly interested in hearing about AI evaluator quality and use cases we haven't considered.

[1] At this point, we don't charge users. Users have hourly and monthly usage limits. If RoastMyPost becomes popular, we plan on introducing payments to help us cover costs.

Aidan Kankyoku @ 2025-12-19T17:59 (+7)

I love this idea! I just took it for a spin and the quality of the feedback isn't at a point I would find it very useful yet. My sense is that it's limited by the quality of the agents rather than anything about the design of the app, though maybe changes in the scaffold could help.

Most of the critiques were myopic, such as:

- It labeled one sentence in my intro as a "hasty generalization/unsupported claim" when I spend most of the post supporting that statement.

- In one sentence, it raised a flag for "missing context" about a study I reference, with a different flag affirming that the link embedded in the sentence provides the context

- I make a claim about how the majority of people view an issue, providing support for the claim, then discussing the problems with the view. It raised a flag on the claim I'm critiquing, calling it unscientific– even from the sentences immediately before and after, it should be clear that was exactly my point!

I could list several more examples, most of the flags I clicked on were misunderstandings in similar ways. Article is here if you want to take a look: https://www.roastmypost.org/docs/jr1MShmVhsK6Igp0yJCL2/reader

Jamie_Harris @ 2025-12-31T14:14 (+6)

I just tried it out and had a similar impression. I think this is a cool idea and am excited Ozzie created it, but suspect it needs active development (and/or improvements in the underlying models over time) before it's useful to me, due to similarish issues to what you found. I'll likely try a couple more times with drafts though!

Ozzie Gooen @ 2025-12-19T21:04 (+3)

Thanks for the feedback!

I did a quick look at this. I largely agree there were some incorrect checks.

It seems like these specific issues were mostly from the Fallacy Check? That one is definitely too aggressive (in addition to having limited context), I'll work on tuning it down. Note that you can choose which evaluators to run on each post, so going forward you might want to just skip that one at this point.

Aidan Kankyoku @ 2025-12-19T21:13 (+2)

It looks like maybe 60% fallacy check and 40% fact check. For instance, fact check:

- claims there are more farmed chickens than shrimps (!)

- Claims ICAW does not use aggressive tactics, apparently basing that on vague copy on their website

Ozzie Gooen @ 2025-12-19T21:26 (+2)

I'm looking now at the Fact Check. It did verify most of the claims it investigated on your post as correct, but not all (almost no posts get all, especially as the error rate is significant).

It seems like with chickens/shrimp it got a bit confused by numbers killed vs. numbers alive at any one time or something.

In the case of ICAWs, it looked like it did a short search via Perplexity, and didn't find anything interesting. The official sources claim they don't use aggressive tactics, but a smart agent would have realized it needed to search more. I think to get this one right would have involved a few more searches - meaning increased costs. There's definitely some tinkering/improvements to do here.

Aidan Kankyoku @ 2025-12-19T22:17 (+3)

That makes sense, I don't want to be overly fussy if it was getting most things right. I guess the thing is, it's not helpful if it mostly recognizes true facts as true but mistakes some true facts as false, if it does not accurately flag a significant number of incorrect facts, which in clicking through a bunch of flags I didn't see almost any I thought necessitated an edit.

Aaron Bergman @ 2025-12-19T03:23 (+5)

Super cool - a bit hectic and I substantively disagree with one of the "fallacies" the fallacy evaluator flagged on this post but I'll definitely be using this going forward

Ozzie Gooen @ 2025-12-19T21:10 (+2)

Thanks! I wouldn't take its takes too seriously, as it has limited context and seems to make a bunch of mistakes. It's more a thing to use to help flag potential issues (at this stage), knowing there's a false positive rate.

Arepo @ 2025-12-24T13:22 (+4)

Thanks Ozzie! I'll definitely try this out if I ever finish my current WIP :)

Questions that come to mind:

- Will it automatically improve as new versions of the underlying model families are released?

- Will you be actively developing it?

- Feature suggestion: could/would you add a check for obviously relevant literature and 'has anyone made basically this argument before'?

Ozzie Gooen @ 2025-12-25T06:54 (+2)

Sure thing!

1. I plan to update it with new model releases. Some of this should be pretty easy - I plan to keep Sonnet up to date, and will keep an eye on other new models.

2. I plan to at least maintain it. This year I can expect to spend maybe 1/3rd the year on it or so. I'm looking forward to seeing what use and the response is like, and will gauge things accordingly. I think it can be pretty useful as a tool, even without a full-time-equivalent improving it. (That said, if anyone wants to help fund us, that would make this much easier!)

3. I've definitely thought about this, can prioritize. There's a very high ceiling for how good background research can be for either a post, or for all claims/ideas in a post (much harder!). Simple version can be straightforward, though wouldn't be much better than just asking Claude to do a straightforward search.

david_reinstein @ 2025-12-18T17:25 (+4)

I've found it useful both for posts and for considering research and evaluations of research for Unjournal, with some limitations of course.

- The interface can be a little bit overwhelming as it reports so many different outputs at the same time some overlapping

+ but I expect it's already pretty usable and I expect this to improve.

+ it's an agent-based approach so as LLM models improve you can swap in the new ones.

I'd love to see some experiments with directly integrating this into the EA forum or LessWrong in some ways, e.g. automatically doing some checks on posts or on drafts or even on comments. Or perhaps an opt-in to that. It could be a step towards systematic ways of improving the dialogue on this forum -- and forums and social media in general, perhaps. This could also provide some good opportunity for human feedback that could improve the model, e.g. people could upvote or downvote the roast my post assessments, etc.

NickLaing @ 2025-12-18T06:58 (+4)

Will feed every post i write into this before publishing lol. Love it!

And name is A+ 🤣🤣🤣

Ozzie Gooen @ 2025-12-18T15:29 (+2)

Sounds good, thanks! When you get a chance to try it, let me know if you have any feedback!

Anthony DiGiovanni @ 2026-02-22T09:51 (+2)

Thanks for this! For what it's worth, some issues I've found with the "CRIBS" and "EA Epistemic Auditor" reviews for drafts of philosophical blog posts:

- excessively allergic to "hedging", and to sections of posts meant to preempt very important misreadings

- flagging some points as "hidden assumptions" even when they're explicitly addressed in the post, or seem clearly irrelevant to the argument

- critiquing claim X as not empirically supported, when X is the claim "Y isn't empirically supported".

But they're somewhat useful for surfacing what kinds of misunderstandings readers might have.