Yarrow's Quick takes

By Yarrow Bouchard 🔸 @ 2023-12-07T18:43 (+2)

Yarrow @ 2025-05-04T20:38 (+47)

Here are my rules of thumb for improving communication on the EA Forum and in similar spaces online:

- Say what you mean, as plainly as possible.

- Try to use words and expressions that a general audience would understand.

- Be more casual and less formal if you think that makes it more likely that people will understand what you're trying to say.

- To illustrate abstract concepts, give examples.

- Where possible, try to let go of minor details that aren't important to the main point someone is trying to make. Everyone slightly misspeaks (or mis... writes?) all the time. Attempts to correct minor details often turn into time-consuming debates that ultimately have little importance. If you really want to correct a minor detail, do so politely, and acknowledge that you're engaging in nitpicking.

- When you don't understand what someone is trying to say, just say that. (And be polite.)

- Don't engage in passive-aggressiveness or code insults in jargon or formal language. If someone's behaviour is annoying you, tell them it's annoying you. (If you don't want to do that, then you probably shouldn't try to communicate the same idea in a coded or passive-aggressive way, either.)

- If you're using an uncommon word or using a word that also has a more common definition in an unusual way (such as "truthseeking"), please define that word as you're using it and — if applicable — distinguish it from the more common way the word is used.

- Err on the side of spelling out acronyms, abbreviations, and initialisms. You don't have to spell out "AI" as "artificial intelligence", but an obscure initialism like "FAOL" that was made up for one paper should definitely be spelled out as "full automation of labour".

- When referencing specific people or organizations, err on the side of giving a little more context, so that someone who isn't already in the know can more easily understand who or what you're talking about. For example, instead of just saying "MacAskill" or "Will", say "Will MacAskill"; just using the full name once per post or comment is enough. You could also mention someone's profession (e.g. "philosopher", "economist") or the organization they're affiliated with (e.g. "Oxford University", "Anthropic"). For organizations, when it isn't already obvious in context, it might be helpful to give a brief description. Rather than saying, "I donated to New Harvest and still feel like this was a good choice", you could say "I donated to New Harvest (a charity focused on cell-cultured meat and similar biotech) and still feel like this was a good choice". The point of all this is to make what you write easy for more people to understand without lots of prior knowledge or lots of Googling.

- When in doubt, say it shorter.[1] In my experience, when I take something I've written that's long and try to cut it down to something short, I usually end up with something a lot clearer and easier to understand than what I originally wrote.

- Kindness is fundamental. Maya Angelou said, “At the end of the day people won't remember what you said or did, they will remember how you made them feel.” Being kind is usually more important than whatever argument you're having.

Feel free to add your own rules of thumb.

[1] This advice comes from the psychologist Harriet Lerner's wonderful book Why Won't You Apologize?, where it's given in the completely different context of close personal relationships. I think it also works here.

Yarrow @ 2025-04-18T06:36 (+41)

I used to feel so strongly about effective altruism. But my heart isn't in it anymore.

I still care about the same old stuff I used to care about, like donating what I can to important charities and trying to pick the charities that are the most cost-effective. Or caring about animals and trying to figure out how to do right by them, even though I haven't been able to sustain a vegan diet for more than a short time. And so on.

But there isn't a community or a movement anymore where I want to talk about these sorts of things with people. That community and movement existed, at least in my local area and at least to a limited extent in some online spaces, from about 2015 to 2017 or 2018.

These are the reasons for my feelings about the effective altruist community/movement, especially over the last one or two years:

- The AGI thing has gotten completely out of hand. I wrote a brief post here about why I strongly disagree with near-term AGI predictions. I wrote a long comment here about how AGI's takeover of effective altruism has left me disappointed, disturbed, and alienated. 80,000 Hours and Will MacAskill have both pivoted to focusing exclusively or almost exclusively on AGI. AGI talk has dominated the EA Forum for a while. It feels like AGI is what the movement is mostly about now, so now I just disagree with most of what effective altruism is about.

- The extent to which LessWrong culture has taken over or "colonized" effective altruism culture is such a bummer. I know there's been at least a bit of overlap for a long time, but ten years ago it felt like effective altruism had its own, unique culture, and nowadays it feels like the LessWrong culture has almost completely taken over. I have never felt good about LessWrong or "rationalism", and the more knowledge and experience of it I've gained, the more I've accumulated a sense of repugnance, horror, and anger toward that culture and ideology. I hate to see that become what effective altruism is like.

- The stories about sexual harassment are so disgusting. They're really, really bad and crazy. And it's so annoying how many comments you see on EA Forum posts about sexual harassment that make exhausting, unempathetic, arrogant, and frankly ridiculous statements, if not borderline incomprehensible in some cases. You see these stories of sexual harassment in the posts and you see evidence of the culture that enables sexual harassment in the comments. Very, very, very bad. Not my idea of a community I can wholeheartedly feel I belong to.

- Kind of a similar story with sexism, racism, and transphobia. The level of underreaction I've seen to instances of racism has been crazymaking. It's similar to the comments under the posts about sexual harassment. You see people justifying or downplaying clearly immoral behaviour. It's sickening.

- A lot of the response to the Nonlinear controversy was disheartening. It was disheartening to see how many people were eager to enable, justify, excuse, downplay, etc. bad behaviour. Sometimes aggressively, arrogantly, and rudely. It was also disillusioning to see how many people were so... easily fooled.

- Nobody talks normally in this community. At least not on this forum, in blogs, and on podcasts. I hate the LessWrong lingo. To the extent the EA Forum has its own distinct lingo, I probably hate that too. The lingo is great if you want to look smart. It's not so great if you want other people to understand what the hell you are talking about. In a few cases, it seems like it might even be deliberate obscurantism. But mostly it's just people making poor choices around communication, writing style, and word choice, maybe for some good reasons, maybe for some bad reasons, but bad choices either way. I think it's rare that writing with a more normal diction wouldn't enhance people's understanding of what you're trying to say, even if you're only trying to communicate with people who are steeped in the effective altruist niche. I don't think the effective altruist sublanguage is serving good thinking or good communication.

- I see a lot of interesting conjecture elevated to the level of conventional wisdom. Someone in the EA or LessWrong or rationalist subculture writes a creative, original, evocative blog post or forum post and then it becomes a meme, and those memes end up taking on a lot of influence over the discourse. Some of these ideas are probably promising. Many of them probably contain at least a grain of truth or insight. But they become conventional wisdom without enough scrutiny. Just because an idea is "homegrown", it takes on the force of a scientific idea that's been debated and tested in peer-reviewed journals for 20 years, or a widely held precept of academic philosophy. That seems intellectually like the wrong thing to do, and also weirdly self-aggrandizing.

- An attitude I could call "EA exceptionalism", where people assert that people involved in effective altruism are exceptionally smart, exceptionally wise, exceptionally good, exceptionally selfless, etc. Not just above the average or median (however you would measure that), but part of a rare elite and maybe even superior to everyone else in the world. I see no evidence this is true. (In these sorts of discussions, you also sometimes see the lame argument that effective altruism is definitionally the correct approach to life because effective altruism means doing the most good and if something isn't doing the most good, then it isn't EA. The obvious implication of this argument is that what's called "EA" might not be true EA, and maybe true EA looks nothing like "EA". So, this argument is not a defense of the self-identified "EA" movement or community or self-identified "EA" thought.)

- There is a dark undercurrent to some EA thought, along the lines of negative utilitarianism, anti-natalism, misanthropy, and pessimism. I think there is a risk of this promoting suicidal ideation because it basically is suicidal ideation.

- Too much of the discourse seems to revolve around how to control people's behaviours or beliefs. It's a bit too House of Cards. I recently read about the psychologist Kurt Lewin's study on the most effective ways to convince women to use animal organs (e.g. kidneys, livers, hearts) in their cooking during meat shortages in World War II. He found that a less paternalistic approach that showed more respect for the women was more effective in getting them to incorporate animal organs into their cooking. The way I think about this is: you didn't have to be manipulated to get to the point where you believe what you believe or care this much about this issue. So, instead of thinking about how best to manipulate people, think about how you got to the point where you are and try to let people in on that in an honest, straightforward way. Not only is this probably more effective, it's also more moral and shows more epistemic humility (you might be wrong about what you believe, and that's one reason not to try to manipulate people into believing it).

- A few more things, but this list is already long enough.

Put all this together and the old stuff I cared about (charity effectiveness, giving what I can, expanding my moral circle) is lost in a mess of other stuff that is antithetical to what I value and what I believe. I'm not even sure the effective altruism movement should exist anymore. The world might be better off if it closed down shop. I don't know. It could free up a lot of creativity and focus and time and resources to work on other things that might end up being better things to work on.

I still think there is value in the version of effective altruism I knew around 2015, when the primary focus was on global poverty and the secondary focus was on animal welfare, and AGI was on the margins. That version of effective altruism is so different from what exists today — which is mostly about AGI and has mostly been taken over by the rationalist subculture — that I have to consider those two different things. Maybe the old thing will find new life in some new form. I hope so.

David Mathers🔸 @ 2025-04-18T09:54 (+13)

I'd distinguish here between the community and actual EA work. The community, and especially its leaders, have undoubtedly gotten more AI-focused (and/or publicly admitted to a degree of focus on AI they've always had) and rationalist-ish. But in terms of actual altruistic activity, I am very uncertain whether there is less money being spent by EAs on animal welfare or global health and development in 2025 than there was in 2015 or 2018. (I looked on Open Phil's website and so far this year it seems well down from 2018 but also well up from 2015, but 2 months isn't much of a sample.) Not that this means you're not allowed to feel sad about the loss of community, but I am not sure we are actually doing less good in these areas than we used to.

Benevolent_Rain @ 2025-04-20T14:01 (+2)

Yes, this seems similar to how I feel: I think the major donor(s) have re-prioritized, but I'm not so sure how many people have switched from other causes to AI. I think EA is more left to the grassroots now, and the forum has probably increased in importance. That works as long as the major donors don't make the forum all about AI; if they did, we'd have to create a new forum! But as donors shift towards AI, the forum will inevitably see more AI content. Maybe there could be some functions to "balance" the forum posts so one gets representative content across all cause areas? Much like they made it possible to separate out community posts?

Benevolent_Rain @ 2025-04-20T14:08 (+2)

On cause prioritization, is there a more recent breakdown of how more and less engaged EAs prioritize? Like an update of this? I looked for this from the 2024 survey but could not find it easily: https://forum.effectivealtruism.org/posts/sK5TDD8sCBsga5XYg/ea-survey-cause-prioritization

Jeroen Willems🔸 @ 2025-04-18T13:11 (+2)

Thanks for sharing this. While I personally believe the shift in focus on AI is justified (I also believe working on animal welfare is more impactful than global poverty), I can definitely sympathize with many of the other concerns you shared and agree with many of them (especially LessWrong lingo taking over, the underreaction to sexism/racism, and the Nonlinear controversy not being taken seriously enough). I would completely understand if, in your situation, you didn't want to interact with the community anymore, but I just want to share that I believe your voice is really important and I hope you continue to engage with EA! I wouldn't want the movement to discourage anyone who shares its principles (like "let's use our time and resources to help others the most") but disagrees with how they're being put into practice from actively participating.

David Mathers🔸 @ 2025-04-18T14:14 (+23)

My memory is that a large number of people took the NL controversy seriously: the original threads on it were long and full of comments hostile to NL, and only after someone posted a long piece in defence of NL did some sympathy shift back to them. But even then, Yarrow's comment saying NL still seem bad has 200 karma and something like 90-something agree votes to 30-something disagree votes: https://forum.effectivealtruism.org/posts/H4DYehKLxZ5NpQdBC/nonlinear-s-evidence-debunking-false-and-misleading-claims?commentId=7YxPKCW3nCwWn2swb

I don't think people dropped the ball here really, people were struggling honestly to take accusations of bad behaviour seriously without getting into witch hunt dynamics.

Jeroen Willems🔸 @ 2025-04-18T21:11 (+2)

Good point, I guess my lasting impression wasn't entirely fair to how things played out. In any case, the most important part of my message is that I hope he doesn't feel discouraged from actively participating in EA.

Yarrow Bouchard🔸 @ 2025-10-17T23:58 (+19)

If the people arguing that there is an AI bubble turn out to be correct and the bubble pops, to what extent would that change people's minds about near-term AGI?

I strongly suspect there is an AI bubble because the financial expectations around AI seem to be based on AI significantly enhancing productivity and the evidence seems to show it doesn't do that yet. This could change — and I think that's what a lot of people in the business world are thinking and hoping. But my view is a) LLMs have fundamental weaknesses that make this unlikely and b) scaling is running out of steam.

Scaling running out of steam actually means three things:

1) Each new 10x increase in compute is less practically or qualitatively valuable than previous 10x increases in compute.

2) Each new 10x increase in compute is getting harder to pull off because the amount of money involved is getting unwieldy.

3) There is an absolute ceiling on the amount of data LLMs can train on, and they are probably approaching it.

So, AI investment is dependent on financial expectations that depend on LLMs enhancing productivity, which isn't happening and probably won't happen due to fundamental problems with LLMs and due to scaling becoming less valuable and less feasible. This implies an AI bubble, which implies the bubble will eventually pop.

So, if the bubble pops, will that lead people who currently have a much higher estimation than I do of LLMs' current capabilities and near-term prospects to lower that estimation? If AI investment turns out to be a bubble, and it pops, would you change your mind about near-term AGI? Would you think it's much less likely? Would you think AGI is probably much farther away?

Yarrow Bouchard🔸 @ 2025-10-21T22:40 (+1)

I'm really curious what people think about this, so I posted it as a question here. Hopefully I'll get some responses.

Yarrow Bouchard 🔸 @ 2025-12-13T09:47 (+18)

I’ve seen a few people in the LessWrong community congratulate the community on predicting or preparing for covid-19 earlier than others, but I haven’t actually seen the evidence that the LessWrong community was particularly early on covid or gave particularly wise advice on what to do about it. I looked into this, and as far as I can tell, this self-congratulatory narrative is a complete myth.

Many people were worried about and preparing for covid in early 2020 before everything finally snowballed in the second week of March 2020. I remember it personally.

In January 2020, some stores sold out of face masks in several different cities in North America. (One example of many.) The oldest post on LessWrong tagged with "covid-19" is from well after this started happening. (I also searched the forum for posts containing "covid" or "coronavirus" and sorted by oldest. I couldn’t find an older post that was relevant.) The LessWrong post is written by a self-described "prepper" who strikes a cautious tone and, oddly, advises buying vitamins to boost the immune system. (This seems dubious, possibly pseudoscientific.) To me, that first post strikes a similarly ambivalent, cautious tone as many mainstream news articles published before that post.

If you look at the covid-19 tag on LessWrong, the next post after that first one, the prepper one, is on February 5, 2020. The posts don't start to get really worried about covid until mid-to-late February.

How is the rest of the world reacting at that time? Here's a New York Times article from February 2, 2020, entitled "Wuhan Coronavirus Looks Increasingly Like a Pandemic, Experts Say", well before any of the worried posts on LessWrong:

The Wuhan coronavirus spreading from China is now likely to become a pandemic that circles the globe, according to many of the world’s leading infectious disease experts.

The prospect is daunting. A pandemic — an ongoing epidemic on two or more continents — may well have global consequences, despite the extraordinary travel restrictions and quarantines now imposed by China and other countries, including the United States.

The tone of the article is fairly alarmed. It notes that streets in China are deserted due to the outbreak, compares the novel coronavirus to the 1918-1920 Spanish flu, and gives expert quotes like this one:

It is “increasingly unlikely that the virus can be contained,” said Dr. Thomas R. Frieden, a former director of the Centers for Disease Control and Prevention who now runs Resolve to Save Lives, a nonprofit devoted to fighting epidemics.

The worried posts on LessWrong don't start until weeks after this article was published. On a February 25, 2020 post asking when CFAR should cancel its in-person workshop, the top answer cites the CDC's guidance at the time about covid-19. It says that CFAR's workshops "should be canceled once U.S. spread is confirmed and mitigation measures such as social distancing and school closures start to be announced." This is about 2-3 weeks out from that stuff happening. So, what exactly is being called early here?

CFAR is based in the San Francisco Bay Area, as are Lightcone Infrastructure and MIRI, two other organizations associated with the LessWrong community. On February 25, 2020, the city of San Francisco declared a state of emergency over covid. (Nearby Santa Clara County, where most of what people consider Silicon Valley is located, declared a local health emergency on February 10.) At this point in time, posts on LessWrong remain overall cautious and ambivalent.

By the time the posts on LessWrong get really, really worried, in the last few days of February and the first week of March, much of the rest of the world was reacting in the same way.

From February 14 to February 25, the S&P 500 dropped about 7.5%. Around this time, financial analysts and economists issued warnings about the global economy.

Between February 21 and February 27, Italy began its first lockdowns of areas where covid outbreaks had occurred.

On February 25, 2020, the CDC warned Americans of the possibility that "disruption to everyday life may be severe". The CDC made this bracing statement:

It's not so much a question of if this will happen anymore, but more really a question of when it will happen — and how many people in this country will have severe illness.

Another line from the CDC:

We are asking the American public to work with us to prepare with the expectation that this could be bad.

On February 26, Canada's Health Minister advised Canadians to stockpile food and medication.

The most prominent LessWrong post from late February warning people to prepare for covid came a few days later, on February 28. So, on this comparison, LessWrong was actually slightly behind the curve. (Oddly, that post insinuates that nobody else is telling people to prepare for covid yet, and congratulates itself on being ahead of the curve.)

At the beginning of March, the number of LessWrong posts tagged covid-19 explodes, and the tone gets much more alarmed. The rest of the world was responding similarly at this time. For example, on February 29, 2020, Washington state declared a state of emergency around covid. On March 4, Governor Gavin Newsom did the same in California. The governor of Hawaii declared an emergency the same day, and over the next few days, many more states piled on.

Around the same time, the general public was becoming alarmed about covid. In the last days of February and the first days of March, many people stockpiled food and supplies. On February 29, 2020, PBS ran an article describing an example of this at a Costco in Oregon:

Worried shoppers thronged a Costco box store near Lake Oswego, emptying shelves of items including toilet paper, paper towels, bottled water, frozen berries and black beans.

“Toilet paper is golden in an apocalypse,” one Costco employee said.

Employees said the store ran out of toilet paper for the first time in its history and that it was the busiest they had ever seen, including during Christmas Eve.

A March 1, 2020 article in the Los Angeles Times reported on stores in California running out of product as shoppers stockpiled. On March 2, an article in Newsweek described the same happening in Seattle:

Speaking to Newsweek, a resident of Seattle, Jessica Seu, said: "It's like Armageddon here. It's a bit crazy here. All the stores are out of sanitizers and [disinfectant] wipes and alcohol solution. Costco is out of toilet paper and paper towels. Schools are sending emails about possible closures if things get worse."

In Canada, the public was responding the same way. Global News reported on March 3, 2020 that a Costco in Ontario ran out of bottled water, toilet paper, and paper towels, and that the situation was similar at other stores around the country. The spike in worried posts on LessWrong coincides with the wider public's reaction. (If anything, the posts on LessWrong are very slightly behind the news articles about stores being picked clean by shoppers stockpiling.)

On March 5, 2020, the cruise ship the Grand Princess made the news because it was stranded off the coast of California due to a covid outbreak on board. I remember this as being one seminal moment of awareness around covid. It was a big story. At this point, LessWrong posts are definitely in no way ahead of the curve, since everyone is talking about covid now.

On March 8, 2020, Italy put a quarter of its population under lockdown, then put the whole country on lockdown on March 10. On March 11, the World Health Organization declared covid-19 a global pandemic. (The same day, the NBA suspended the season and Tom Hanks publicly disclosed he had covid.) On March 12, Ohio closed its schools statewide. The U.S. declared a national emergency on March 13. The same day, 15 more U.S. states closed their schools. Also on the same day, Canada's Parliament shut down because of the pandemic. By now, everyone knows it's a crisis.

So, did LessWrong call covid early? I see no evidence of that. The timeline of LessWrong posts about covid follows the same timeline as the world at large's reaction to covid, increasing in alarm as journalists, experts, and governments increasingly rang the alarm bells. In some comparisons, LessWrong's response was a little bit behind.

The only curated post from this period (and the post with the third-highest karma, one of only four posts with over 100 karma) tells LessWrong users to prepare for covid three days after the CDC told Americans to prepare, and two days after Canada's Health Minister told Canadians to stockpile food and medication. It was also three days after San Francisco declared a state of emergency. When that post was published, many people were already stockpiling supplies, partly because government health officials had told them to. (The LessWrong post was originally published on a blog a day before, and based on a note in the text apparently written the day before that, but that still puts the writing of the post a day after the CDC warning and the San Francisco declaration of a state of emergency.)

Unless there is some evidence that I didn't turn up, it seems pretty clear the self-congratulatory narrative is a myth. The self-congratulation actually started in that post published on February 28, 2020, which, again, is odd given the CDC's warning three days before (on the same day that San Francisco declared a state of emergency), analysts' and economists' warnings about the global economy a bit before that, and the New York Times article warning about a probable pandemic at the beginning of the month. The post is slightly behind the curve, but it's gloating as if it's way ahead.

Looking at the overall LessWrong post history in early 2020, LessWrong seems to have been, if anything, slightly behind the New York Times, the S&P 500, the CDC, and enough members of the general public to clear out some stores of certain products. By the time LessWrong posting reached a frenzy in the first week of March, the world was already responding: U.S. governors were declaring states of emergency, and everyone was talking about and worrying about covid.

I think people should be skeptical and even distrustful toward the claims of the LessWrong community, both on topics like pandemics and about its own track record and mythology. Obviously this myth is self-serving, and it was pretty easy for me to disprove in a short amount of time — so anyone who is curious can check and see that it's not true. The people in the LessWrong community who believe the community called covid early probably believe that because it's flattering. If they actually wondered if this is true or not and checked the timelines, it would become pretty clear that didn't actually happen.

Edited to add on Monday, December 15, 2025 at 3:20pm Eastern:

I spun this quick take out as a full post here. When I submitted the full post, there was no/almost no engagement on this quick take. In the future, I'll try to make sure to publish things only as a quick take or only as a full post, but not both. This was a fluke under unusual circumstances.

Feel free to continue commenting here, cross-post comments from here onto the full post, make new comments on the post, or do whatever you want. Thanks to everyone who engaged and left interesting comments.

Steven Byrnes @ 2025-12-14T20:53 (+19)

My gloss on this situation is:

YARROW: Boy, one would have to be a complete moron to think that COVID-19 would not be a big deal as late as Feb 28 2020, i.e. something that would imminently upend life-as-usual. At this point China had locked down long ago, and even Italy had started locking down. Cases in the USA were going up and up, especially when you correct for the (tiny) amount of testing they were doing. The prepper community had certainly noticed, and was out in force buying out masks and such. Many public health authorities were also sounding alarms. What kind of complete moron would not see what's happening here? Why is lesswrong patting themselves on the back for noticing something so glaringly obvious?

MY REPLY: Yes!! Yes, this is true!! Yes, you would have to be a complete moron to not make this inference!! …But man, by that definition, there sure were an awful lot of complete morons around, i.e. most everyone. LessWrong deserves credit for rising WAY above the incredibly dismal standards set by the public-at-large in the English-speaking world, even if they didn’t particularly surpass the higher standards of many virologists, preppers, etc.

My personal experience: As someone living in normie society in Massachusetts USA but reading lesswrong and related, I was crystal clear that everything about my life was about to wrenchingly change, weeks before any of my friends or coworkers were. And they were very weirded out by my insistence on this. Some were in outright denial (e.g. “COVID = anti-Chinese racism” was a very popular take well into February, maybe even into March, and certainly the “flu kills far more than COVID” take was widespread in early March, e.g. Anderson Cooper). Others were just thinking about things in far-mode; COVID was a thing that people argued about in the news, not a real-world thing that could or should affect one’s actual day-to-day life and decisions. “They can’t possibly shut down schools, that’s crazy”, a close family member told me days before they did.

Dominic Cummings cited "Seeing the Smoke" as being very influential in jolting him to action (and thus impacting UK COVID policy), see screenshot here, which implies that this essay said something that he (and others at the tip-top of the UK gov't) did not already see as obvious at the time.

A funny example that sticks in my memory is a tweet by Eliezer from March 11 2020. Trump had just tweeted:

So last year 37,000 Americans died from the common Flu. It averages between 27,000 and 70,000 per year. Nothing is shut down, life & the economy go on. At this moment there are 546 confirmed cases of CoronaVirus, with 22 deaths. Think about that!

Eliezer quote-tweeted that, with the commentary:

9/11 happens, and nobody puts that number into the context of car crash deaths before turning the US into a security state and invading Iraq. Nobody contextualizes school shootings. But the ONE goddamn time the disaster is a straight line on a log chart, THAT'S when... [quote-tweet Trump]

We're in Nerd Hell, lads and ladies and others. We're in a universe that was specifically designed to maximally annoy numerate people. This is like watching a stopped clock, waiting for it to be right, and just as the clock almost actually is right, the clock hands fall off.

Ben_West🔸 @ 2025-12-14T13:35 (+17)

Thanks for collecting this timeline!

The version of the claim I have heard is not that LW was early to suggest that there might be a pandemic, but rather that they were unusually willing to do something about it because they take small-probability, high-impact events seriously. E.g., I suspect that you would say that Wei Dai was "late" because their comment came after the NYT article, etc., but nonetheless they made a 700% return betting that covid would be a big deal.

I think it can be hard to remember just how much controversy there was at the time. E.g., you say of March 13, "By now, everyone knows it's a crisis," but sadly "everyone" did not include the California Department of Public Health, which didn't issue stay-at-home orders for another week.

[I have a distinct memory of this because I told my girlfriend I couldn't see her anymore since she worked at the department of public health (!!) and was still getting a ton of exposure since the California public health department didn't think covid was that big of a deal.]

Noah Birnbaum @ 2025-12-14T16:51 (+11)

I think the COVID case usefully illustrates a broader issue with how “EA/rationalist prediction success” narratives are often deployed.

That said, this is exactly why I’d like to see similar audits applied to other domains where prediction success is often asserted, but rarely with much nuance. In particular: crypto, prediction markets, land value tax (LVT), and more recently GPT-3 / scaling-based AI progress. I wasn’t closely following these discussions at the time, so I’m genuinely uncertain about (i) what was actually claimed ex ante, (ii) how specific those claims were, and (iii) how distinctive they were relative to non-EA communities.

This matters to me for two reasons.

First, many of these claims are invoked rhetorically rather than analytically. “EAs predicted X” is often treated as a unitary credential, when in reality predictive success varies a lot by domain, level of abstraction, and comparison class. Without disaggregation, it’s hard to tell whether we’re looking at genuine epistemic advantage, selective memory, or post-hoc narrative construction.

Second, these track-record arguments are sometimes used—explicitly or implicitly—to bolster the case for concern about AI risks. If the evidential support here rests on past forecasting success, then the strength of that support depends on how well those earlier cases actually hold up under scrutiny. If the success was mostly at the level of identifying broad structural risks (e.g. incentives, tail risks, coordination failures), that’s a very different kind of evidence than being right about timelines, concrete outcomes, or specific mechanisms.

Jason @ 2025-12-15T00:01 (+4)

I like this comment. This topic is always at risk of devolving into a generalized debate between rationalists and their opponents, creating a lot of heat but not much light. So it's helpful to keep a fairly tight focus on potentially action-relevant questions (of which the comment identifies one).

niplav @ 2025-12-14T12:32 (+8)

Thank you, this is very good. Strong upvoted.

I don't exactly trust you to do this in an unbiased way, but this comment seems to be the state of the art, and I love retrospectives on COVID-19. Plausibly I should look into the extent to which your story checks out, plus how EA itself, the relevant parts of Twitter, or prediction platforms like Metaculus compared at the time (which I felt was definitely ahead).

Linch @ 2025-12-14T16:03 (+9)

See e.g. traviswfisher's prediction on Jan 24:

https://x.com/metaculus/status/1248966351508692992

Or this post on this very forum from Jan 26:

I wrote this comment on Jan 27, indicating that it's not just a few people worried at the time. I think most "normal" people weren't tracking covid in January.

I think the thing to realize, and that people easily forget, is that everything was really confusing and there was just a ton of contentious debate during the early months. So while there was apparently a fairly alarmed NYT report in early Feb, there were also many other reports in February that were less alarmed, many bad forecasts, etc.

Yarrow Bouchard 🔸 @ 2025-12-14T16:32 (+6)

It would be easy to find a few examples like this from any large sample of people. As I mentioned in the quick take, in late January, people were clearing out stores of surgical masks in cities like New York.

Linch @ 2025-12-14T17:22 (+5)

Why does this not apply to your original point citing a single NYT article?

Yarrow Bouchard 🔸 @ 2025-12-14T16:41 (+2)

If you want a source who is biased in the opposite direction and who generally agrees with my conclusion, take a look here and here. I like this bon mot:

In some ways, I think this post isn't "seeing the smoke," so much as "seeing the fire and choking on the smoke."

This is their conclusion from the second link:

If the sheer volume of conversation is our alarm bell, this site seems to have lagged behind the stock market by about a week.

parconley @ 2025-12-14T20:57 (+3)

Unless there is some evidence that I didn't turn up, it seems pretty clear the self-congratulatory narrative is a myth.

This is a cool write-up! I'm curious whether, and how much, you took Zvi's COVID round-ups into account. I wasn't around LessWrong during COVID, but, if I understand correctly, those played a large role in the information flow during that time.

Yarrow Bouchard 🔸 @ 2025-12-14T21:08 (+2)

I haven't looked into it, but any and all new information that can give a fuller picture is welcome.

parconley @ 2025-12-14T21:50 (+3)

Yeah! This is the series that I am referring to: https://www.lesswrong.com/s/rencyawwfr4rfwt5C.

As I understand it, Zvi was quite ahead of the curve with COVID and moved out of New York before others. I could be wrong, though.

Yarrow Bouchard 🔸 @ 2025-12-14T21:59 (+2)

The first post listed there is from March 2, 2020, so that's relatively late in the timeline we're considering, no? That's 3 days later than the February 28 post I discussed above as the first/best candidate for a truly urgent early warning about covid-19 on LessWrong. (2020 was a leap year, so there was a February 29.)

That first post from March 2 also seems fairly simple and not particularly different from the February 28 post (which it cites).

Yarrow Bouchard 🔸 @ 2025-12-16T02:21 (+2)

Following up a bit on this, @parconley. The second post in Zvi's covid-19 series is from 6pm Eastern on March 13, 2020. Let's remember where this is in the timeline. From my quick take above:

On March 8, 2020, Italy put a quarter of its population under lockdown, then put the whole country on lockdown on March 10. On March 11, the World Health Organization declared covid-19 a global pandemic. (The same day, the NBA suspended the season and Tom Hanks publicly disclosed he had covid.) On March 12, Ohio closed its schools statewide. The U.S. declared a national emergency on March 13. The same day, 15 more U.S. states closed their schools. Also on the same day, Canada's Parliament shut down because of the pandemic.

Zvi's post from March 13, 2020 at 6pm is about all the school closures that happened that day. (The U.S. state of emergency was declared that morning.) It doesn't make any specific claims or predictions about the spread of the novel coronavirus, or anything else that could be assessed in terms of its prescience. It mostly focuses on the topic of the social functions that schools play (particularly in the United States and in the state of New York specifically) other than teaching children, such as providing free meals and supervision.

This is too late into the timeline to count as calling the pandemic early, and the post doesn't make any predictions anyway.

The third post from Zvi is on March 17, 2020 and it's mostly a personal blog post. There are a few relevant bits. For one, Zvi admits he was surprised at how bad the pandemic was at that point:

Regret I didn’t sell everything and go short, not because I had some crazy belief in efficient markets, but because I didn’t expect it to be this bad and I told myself a few years ago I was going to not be a trader anymore and just buy and hold.

He argues New York City is not locking down soon enough and San Francisco is not locking down completely enough. About San Francisco, one thing he says is:

Local responses much better. Still inadequate. San Francisco on strangely incomplete lock-down. Going on walks considered fine for some reason, very strange.

I don't know how sound this was given what experts knew at the time. It might have been the right call. I don't know. I will just say that, in retrospect, it seems like going outside was one of the things we originally thought wasn't fine that we later thought was actually fine after all.

The next post after that isn't until April 1, 2020. It's about the viral load of covid-19 infections and the question of how much viral load matters. By this point, we're getting into questions about the unfolding of the ongoing pandemic, rather than questions about predicting the pandemic in advance. You could potentially go and assess that prediction track record separately, but that's beyond the scope of my quick take, which was to assess whether LessWrong called covid early.

Overall, Zvi's posts, at least the ones included in this series, are not evidence for Zvi or LessWrong calling covid early. The posts start too late and don't make any predictions. Zvi saying "I didn’t expect it to be this bad" is actually evidence against Zvi calling covid early. So, I think we can close the book on this one.

Still open to hearing other things people might think of as evidence that the LessWrong community called covid early.

Yarrow Bouchard 🔸 @ 2025-12-15T20:31 (+2)

I spun this quick take out as a full post here. When I submitted the full post, there was no/almost no engagement on this quick take. In the future, I'll try to make sure to publish things only as a quick take or only as a full post, but not both. This was a fluke under unusual circumstances.

Feel free to continue commenting here, cross-post comments from here onto the full post, make new comments on the post, or do whatever you want. Thanks to everyone who engaged and left interesting comments.

Yarrow Bouchard 🔸 @ 2025-12-03T05:17 (+18)

Rate limiting on the EA Forum is too strict. Given that people karma downvote because of disagreement, rather than because of quality or civility — or they judge quality and/or civility largely on the basis of what they agree or disagree with — there is a huge disincentive against expressing unpopular or controversial opinions (relative to the views of active EA Forum users, not necessarily relative to the general public or relevant expert communities) on certain topics.

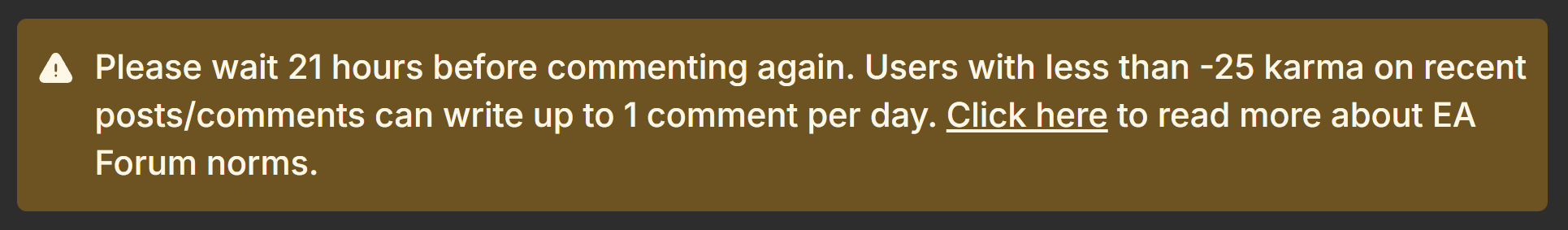

This is a message I saw recently:

You aren't just rate limited for 24 hours once you fall below the recent karma threshold (which can be triggered by one comment that is unpopular with a handful of people), you're rate limited for as many days as it takes you to gain 25 net karma on new comments — which might take a while, since you can only leave one comment per day, and, also, people might keep downvoting your unpopular comment. (Unless you delete it — which I think I've seen happen, but I won't do, myself, because I'd rather be rate limited than self-censor.)

The rate limiting system is a brilliant idea for new users or users who have less than 50 total karma — the ones who have little plant icons next to their names. It's an elegant, automatic way to stop spam, trolling, and other abuses. But my forum account is 2.5 years old and I have over 1,000 karma. I have 24 posts published over 2 years, all with positive karma. My average karma per post/comment is +2.3 (not counting the default karma that all post/comments start with; this is just counting karma from people's votes).

Examples of comments I've gotten downvoted into the net -1 karma or lower range include a methodological critique of a survey that was later accepted to be correct and led to the research report of an EA-adjacent organization getting revised. In another case, a comment was downvoted to negative karma when it was only an attempt to correct the misuse of a technical term in machine learning — a topic which anyone can confirm I've gotten right with a few fairly quick Google searches. People are absolutely not just downvoting comments that are poor quality or rude by any reasonable standard. They are downvoting things they disagree with or dislike for some other reason. (There are many other examples like the ones I just gave, including everything from directly answering a question to clarifying a point of disagreement to expressing a fairly anodyne and mainstream opinion that at least some prominent experts in the relevant field agree with.) Given this, karma downvoting as an automatic moderation tool with thresholds this sensitive just discourages disagreement.

One of the most important cognitive biases to look out for in a context like EA is group polarization, which is the tendency of individuals' views to become more extreme once they join a group, even if each of the individuals had less extreme views before joining the group (i.e., they aren't necessarily being converted by a few zealots who already had extreme views before joining). One way to mitigate group polarization is to have a high tolerance for internal disagreement and debate. I think the EA Forum does have that tolerance for certain topics and within certain windows of accepted opinions for most topics that are discussed, but not for other topics or only within a window that is quite narrow if you compare it to, say, the general population or expert opinion.

For example, according to one survey, 76% of AI experts believe it's unlikely or very unlikely that LLMs will scale to AGI, yet the opinion of EA Forum users seems to be the opposite. Not everyone on the EA Forum seems to consider the majority expert opinion worth taking seriously. To me, that looks like group polarization in action. It's one thing to disagree with expert opinion with some degree of uncertainty and epistemic humility; it's another thing to see expert opinion as beneath serious discussion.

I don't know what specific tweaks to the rate limiting system would be best. Maybe just turn it off altogether for users with over 500 karma (and rely on reporting posts/comments and moderator intervention to handle real problems), or as Jason suggested here, have the karma threshold trigger manual review by a moderator rather than automatic rate limiting. Jason also made some other interesting suggestions for tweaks in that comment and noted, correctly:

Strong downvoting by a committed group is the most obvious way to manipulate the system into silencing those with whom you disagree.

This actually works. I am reluctant to criticize the ideas of, or express disagreement with, certain organizations/books because of rate limiting, and rate limiting is the #1 thing that makes me feel like giving up on intellectual debate and discussion and just quitting the EA Forum.

I may be slow to reply to any comments on this quick take due to the forum's rate limiting.

Jason @ 2025-12-04T12:26 (+14)

I think this highlights why some necessary design features of the karma system don't translate well to a system that imposes soft suspensions on users. (To be clear, I find a one-comment-per-day limit based on the past 20 comments/posts to cross the line into soft suspension territory; I do not suggest that rate limits are inherently soft suspensions.)

I wrote a few days ago about why karma votes need to be anonymous and shouldn't (at least generally) require the voter to explain their reasoning; the votes suggested general agreement on those points. But a soft suspension of an established user is a different animal, and requires greater safeguards to protect both the user and the openness of the Forum to alternative views.

I should emphasize that I don't know who cast the downvotes that led to Yarrow's soft suspension (which were on this post about MIRI), or why they cast their votes. I also don't follow MIRI's work carefully enough to have a clear opinion on the merits of any individual vote through the lights of the ordinary purposes of karma. So I do not intend to imply dodgy conduct by anyone. But: "Justice must not only be done, but must also be seen to be done." People who are considering stating unpopular opinions shouldn't have to trust voters to the extent they have to at present to avoid being soft suspended.

- Neutrality: Because the votes were anonymous, it is possible that people who were involved in the dispute were casting votes that had the effect of soft-suspending Yarrow.

- Accountability: No one has to accept responsibility and the potential for criticism for imposing a soft-suspension via karma downvotes. Not even in their own minds -- since nominally all they did was downvote particular posts.

- Representativeness: A relatively small number of users on a single thread -- for whom there is no evidence of being representative of the Forum community as a whole -- cast the votes in question. Their votes have decided for the rest of the community that we won't be hearing much from Yarrow (on any topic) for a while.[1]

- Reasoning transparency: Stating (or at least documenting) one's reasoning serves as a check against decisions based on minimal or iffy reasoning getting through. [Moreover, even if voters had been reasoning carefully but silently, they were unlikely to be reasoning about a vote to soft suspend Yarrow, which is what their votes were whether they realized it or not.]

There are good reasons to find that the virtues of accountability, representativeness, and reasoning transparency are outweighed by other considerations when it comes to karma generally. (As for neutrality, I think we have to accept that technical and practical limitations exist.) But their absence when deciding to soft suspend someone creates too high a risk of error for the affected user, too high a risk of suppressing viewpoints that are unpopular with elements of the Forum userbase, and too much chilling effect on users' willingness to state certain viewpoints. I continue to believe that, for more established users, karma count should only trigger a moderator review to assess whether a soft suspension is warranted.

[1] Although the mods aren't necessarily representative in the abstract, they are less likely to have particular views on a given issue than the group of people who actively participate on a given thread (and especially those who read the heavily downvoted comments on that thread). I also think the mods are likely to have a better understanding of their role as representatives of the community than individual voters do, which mitigates this concern.

NickLaing @ 2025-12-05T09:32 (+5)

I've really appreciated comments and reflections from @Yarrow Bouchard 🔸, and I think in his case at least this does feel a bit unfair. It's good to encourage new people on the forum, unless they are posting particularly egregious things, which I don't think he has been.

Yarrow Bouchard 🔸 @ 2025-12-05T10:04 (+2)

She, but thank you!

Thomas Kwa @ 2025-12-04T22:27 (+5)

Assorted thoughts

- Rate limits should not apply to comments on your own quick takes

- Rate limits could maybe not count negative karma below -10 or so; it seems much better to rate limit someone only when they have multiple downvoted comments

- 2.4:1 is not a very high karma:submission ratio. I have 10:1 even if you exclude the April Fools' Day posts, though that could be because I have more popular opinions, which means that I could double my comment rate and get -1 karma on the extras and still be at 3.5

- If I were Yarrow, I would contextualize more or use friendlier phrasing or something, and also not be bothered too much by single downvotes

- From scanning the linked comments I think that downvoters often think the comment in question has bad reasoning and detracts from effective discussion, not just that they disagree

- Deliberately not opining on the echo chamber question

Yarrow Bouchard 🔸 @ 2025-12-04T23:17 (+2)

Can you explain what you mean by "contextualizing more"? (What a curiously recursive question...)

You definitely have more popular opinions (among the EA Forum audience), and also you seem to court controversy less, i.e. a lot of your posts are about topics that aren't controversial on the EA Forum. For example, if you were to make a pseudonymous account and write posts/comments arguing that near-term AGI is highly unlikely, I think you would definitely get a much lower karma to submission ratio, even if you put just as much effort and care into them as the posts/comments you've written on the forum so far. Do you think it wouldn't turn out that way?

I've been downvoted on things that are clearly correct, e.g. the standard definitions of terms in machine learning (which anyone can Google), and a critique of a methodological error that the Forecasting Research Institute later acknowledged was correct and revised their research to reflect. In other cases, the claims are controversial, but they are also claims where prominent AI experts like Andrej Karpathy, Yann LeCun, or Ilya Sutskever have said exactly the same thing as I said (and, indeed, in some cases I'm literally citing them), and it would be wild to think these sorts of claims are below the quality threshold for the EA Forum. I think that should make you question whether downvotes are a reliable guide to the quality of contributions.

One-off instances of one person downvoting don't bother me that much — that literally doesn't matter, as long as it really is one-off — what bothers me is the pattern. It isn't just with my posts/comments, either, it's across the board on the forum. I see it all the time with other contributors as well. I feel uneasy dragging those people into this discussion without their permission — it's easier to talk about myself — but this is an overall pattern.

Whether reasoning is good or bad is always bound to be controversial when debating about topics that are controversial, about which there is a lot of disagreement. Just downvoting what you judge to be bad reasoning will, statistically, amount to downvoting what you disagree with. Since downvotes discourage and, in some cases, disable (through the forum's software) disagreement, you should ask: is that the desired outcome? Personally, I rarely, pretty much never, downvote based on what I perceive to be the reasoning quality for exactly this reason.

When people on the EA Forum deeply engage with the substance of what I have to say, I've actually found a really high rate of them changing their minds (not necessarily from P to ¬P, but shifting along a spectrum and rethinking some details). It's a very small sample size, only a few people, but it's something like out of five people that I've had a lengthy back-and-forth with over the last two months, three of them changed their minds in some significant way. (I'm not doing rigorous statistics here, just counting examples from memory.) And in two of the three cases, the other person’s tone started out highly confident, giving me the impression they initially thought there was basically no chance I had any good points that were going to convince them. That is the counterbalance to everything else because that's really encouraging!

I put in an effort to make my tone friendly and conciliatory, and I'm aware I probably come off as a bit testy some of the time, but I'm often responding to a much harsher delivery from the other person and underreacting in order to deescalate the tension. (For example, the person who got the ML definitions wrong started out by accusing me of "bad faith" based on their misunderstanding of the definitions. There were multiple rounds of me engaging with politeness and cordiality before I started getting a bit testy. That's just one example, but there are others — it's frequently a similar dynamic. Disagreeing with the majority opinion of the group is a thankless job because you have to be nicer to people than they are to you, and then that still isn't good enough and people say you should be even nicer.)

Thomas Kwa @ 2025-12-05T07:38 (+2)

Can you explain what you mean by "contextualizing more"? (What a curiously recursive question...)

I mean it in this sense: making people think you're not part of the outgroup and don't have objectionable beliefs related to the ones you actually hold, in whatever way is sensible and honest.

Maybe LW is better at using the disagree button, as I find it's pretty common there for unpopular opinions to get lots of upvotes and disagree votes. One could use the API to check whether the correlations differ between the two sites.

Yarrow Bouchard 🔸 @ 2025-12-05T09:00 (+2)

Huh? Why would it matter whether or not I'm part of "the outgroup"...? What does that mean?

Thomas Kwa @ 2025-12-05T10:49 (+6)

I think this is a significant reason why people downvote some, but not all, things they disagree with. Especially a member of the outgroup who makes arguments EAs have refuted before and have to re-explain. (Not saying it's actually you.)

Yarrow Bouchard 🔸 @ 2025-12-05T17:49 (+2)

What is "the outgroup"?

Thomas Kwa @ 2025-12-09T06:05 (+6)

Claude thinks possible outgroups include the following, which is similar to what I had in mind:

Based on the EA Forum's general orientation, here are five individuals/groups whose characteristic opinions would likely face downvotes:

- Effective accelerationists (e/acc) - Advocates for rapid AI development with minimal safety precautions, viewing existential risk concerns as overblown or counterproductive

- TESCREAL critics (like Emile Torres, as you mentioned) - Scholars who frame longtermism/EA as ideologically dangerous, often linking it to eugenics, colonialism, or techno-utopianism

- Anti-utilitarian philosophers - Strong deontologists or virtue ethicists who reject consequentialist frameworks as fundamentally misguided, particularly on issues like population ethics or AI risk trade-offs

- Degrowth/anti-progress advocates - Those who argue economic/technological growth is net-negative and should be reduced, contrary to EA's generally pro-progress orientation

- Left-accelerationists and systemic change advocates - Critics who view EA as a "neoliberal" distraction from necessary revolutionary change, or who see philanthropic approaches as fundamentally illegitimate compared to state redistribution

Yarrow Bouchard 🔸 @ 2025-12-09T11:26 (+2)

a) I’m not sure all of those count as someone who would necessarily be an outsider to EA (e.g. Will MacAskill only assigns a 50% probability to consequentialism being correct, and he and others in EA have long emphasized pluralism about normative ethical theories; there’s been an EA system change group on Facebook since 2015 and discourse around systemic change has been happening in EA since before then)

b) Even if you do consider people in all those categories to be outsiders to EA or part of "the out-group", us/them or in-group/out-group thinking seems like a bad idea, possibly leading to insularity, incuriosity, and overconfidence in wrong views

c) It’s especially a bad idea to not only think in in-group/out-group terms and seek to shut down perspectives of "the out-group" but also to cast suspicion on the in-group/out-group status of anyone in an EA context who you happen to disagree with about something, even something minor — that seems like a morally, subculturally, and epistemically bankrupt approach

Thomas Kwa @ 2025-12-09T22:58 (+8)

- You're shooting the messenger. I'm not advocating for downvoting posts that smell of "the outgroup", just saying that this happens in most communities that are centered around an ideological or even methodological framework. It's a way you can be downvoted while still being correct, especially by the LEAST thoughtful 25% of EA Forum voters.

- Please read the quote from Claude more carefully. MacAskill is not an "anti-utilitarian" who thinks consequentialism is "fundamentally misguided", he's the moral uncertainty guy. The moral parliament usually recommends actions similar to consequentialism with side constraints in practice.

I probably won't engage more with this conversation.

Mo Putera @ 2025-12-09T04:13 (+4)

I don't know what he meant, but my guess FWIW is this 2014 essay.

Yarrow Bouchard 🔸 @ 2025-12-16T10:17 (+17)

The Ezra Klein Show (one of my favourite podcasts) just released an episode with GiveWell CEO Elie Hassenfeld!

Yarrow Bouchard 🔸 @ 2025-11-27T05:42 (+17)

The NPR podcast Planet Money just released an episode on GiveWell.

Yarrow @ 2025-05-03T16:59 (+14)

Since my days of reading William Easterly's Aid Watch blog back in the late 2000s and early 2010s, I've always thought it was a matter of both justice and efficacy to have people from globally poor countries in leadership positions at organizations working on global poverty. All else being equal, a person from Kenya is going to be far more effective at doing anti-poverty work in Kenya than someone from Canada with an equal level of education, an equal ability to network with the right international organizations, etc.

In practice, this is probably hard to do, since it requires crossing language barriers, cultural barriers, geographical distance, and international borders. But I think it's worth it.

So much of what effective altruism does, including around global poverty, including around the most evidence-based and quantitative work on global poverty, relies on people's intuitions, and the intuitions of people who live in wealthy, Western countries with no connection to or experience of a globally poor country are going to be less accurate than the intuitions of people who have lived in poor countries and know a lot about them.

Simply put, first-hand experience of poor countries is a form of expertise and organizations run by people with that expertise are probably going to be a lot more competent at helping globally poor people than ones that aren't.

NickLaing @ 2025-05-03T21:02 (+56)

I agree with most of what you say here; indeed, all things being equal, a person from Kenya is going to be far more effective at doing anti-poverty work in Kenya than someone from anywhere else. The problem is your caveats - things are almost never equal...

1) Education systems just aren't nearly as good in lower-income countries. This means that education is sadly barely ever equal. There are gaps even between low-income countries: a Kenyan once joked with me that "a Ugandan degree holder is like a Kenyan high school leaver". If you look at the top echelon of NGO/charity leaders from low-income countries whose charities have grown and scaled big, most have been at least partially educated in richer countries.

2) Ability to network is sadly usually so so much higher if you're from a higher-income country. Social capital is real and insanely important. If you look at the very biggest NGOs, most of them are founded not just by Westerners, but by IVY LEAGUE OR OXBRIDGE EDUCATED WESTERNERS. Paul Farmer (Partners in Health) from Harvard, Raj Panjabi (Last Mile Health) from Harvard, Paul Niehaus (GiveDirectly) from Harvard, Rob Mather (Against Malaria Foundation) from Harvard AND Cambridge. With those connections you can turn a good idea into growth so much faster, even compared to super privileged people like me from New Zealand, let alone people with amazing ideas and organisations in low-income countries who just don't have access to that kind of social capital.

3) The pressure on people from low-income countries to secure their futures is so high that their own financial security will often come first, and the vast majority won't stay the course with their charity but will leave when they get an opportunity to further their career. And fair enough too! I've seen a number of incredibly talented founders here in Northern Uganda drop their charity for a high-paying USAID job (that ended poorly...), an overseas study scholarship, or a solid government job. Here's a telling quote from this great take by @WillieG:

"Roughly a decade ago, I spent a year in a developing country working on a project to promote human rights. We had a rotating team of about a dozen (mostly) brilliant local employees, all college-educated, working alongside us. We invested a lot of time and money into training these employees, with the expectation that they (as members of the college-educated elite) would help lead human rights reform in the country long after our project disbanded. I got nostalgic and looked up my old colleagues recently. Every single one is living in the West now. A few are still somewhat involved in human rights, but most are notably under-employed (a lawyer washing dishes in a restaurant in Virginia, for example)."

https://forum.effectivealtruism.org/posts/tKNqpoDfbxRdBQcEg/?commentId=trWaZYHRzkzpY9rjx

I think (somewhat sadly) a good combination can be for co-founders or co-leaders to be one person from a high-income country with more funding/research connections, and one local person who, like you say, will be far more effective at understanding the context and leading in locally appropriate ways. This synergy can cover important bases, and you'll see a huge number of charities (including mine) founded along these lines.

These realities make me uncomfortable, though, and I wish it weren't so. As @Jeff Kaufman 🔸 said, "I can't reject my privilege, I can't give it back", so I try to use my privilege as best I can to help lift up the poorest people. OneDay Health, the organisation I co-founded, has me as the only employed foreigner, alongside 65 local staff.

Yarrow Bouchard 🔸 @ 2026-01-07T02:46 (+10)

The economist Tyler Cowen linked to my post on self-driving cars, so it ended up getting a lot more readers than I ever expected. I hope that more people now realize, at the very least, that self-driving cars are not an uncontroversial, uncomplicated AI success story. In discussions around AGI, people often say things along the lines of: ‘deep learning solved self-driving cars, so surely it will be able to solve many other problems’. In fact, the lesson to draw is the opposite: self-driving is too hard a problem for the current cutting edge in deep learning (and deep reinforcement learning), and this should make us think twice before cavalierly proclaiming that deep learning will soon be able to master even more complex, more difficult tasks than driving.

Yarrow🔸 @ 2025-05-06T21:39 (+8)

There are two philosophies on what the key to life is.

The first philosophy is that the key to life is to separate yourself from the wretched masses of humanity by finding a special group of people that is above it all and becoming part of that group.

The second philosophy is that the key to life is to see the universal in your individual experience. And this means you are always stretching yourself to include more people, find connection with more people, show compassion and empathy to more people. But this is constantly uncomfortable because, again and again, you have to face the wretched masses of humanity and say "me too, me too, me too" (and realize you are one of them).

I am a total believer in the second philosophy and a hater of the first philosophy. (Not because it's easy, but because it's right!) To the extent I care about effective altruism, it's because of the second philosophy: expand the moral circle, value all lives equally, extend beyond national borders, consider non-human creatures.

When I see people in effective altruism evince the first philosophy, to me, this is a profane betrayal of the whole point of the movement.

One of the reasons (among several other important reasons) that rationalists piss me off so much is that their whole worldview and subculture is based on the first philosophy. Even the word "rationalist" is about being superior to other people. If the rationalist community had one founder or leader, it would be Eliezer Yudkowsky. The way Eliezer Yudkowsky talks to and about other people, even people who are actively trying to help him or to understand him, is so hateful and so mean. He exhales contempt. And it isn't just Eliezer: you can go on LessWrong and read horrifying accounts of how some prominent people in the community have treated their employees or their romantic partners, with the stated justification that they are separate from and superior to others. Obviously there's a huge problem with racism, sexism, and anti-LGBT prejudice too, which are other ways of feeling separate and above.

There is no happiness to be found at the top of a hierarchy. Look at the people who think in the most hierarchical terms, who have climbed to the tops of the hierarchies they value. Are they happy? No. They're miserable. This is a game you can't win. It's a con. It's a lie.

In the beautiful words of the Franciscan friar Richard Rohr, "The great and merciful surprise is that we come to God not by doing it right but by doing it wrong!"

(Richard Rohr's episode of You Made It Weird with Pete Holmes is wonderful if you want to hear more.)

Yarrow Bouchard 🔸 @ 2025-12-02T09:52 (+6)

A number of podcasts are doing a fundraiser for GiveDirectly: https://www.givedirectly.org/happinesslab2025/

Podcast about the fundraiser: https://pca.st/bbz3num9

Yarrow Bouchard 🔸 @ 2025-11-19T04:51 (+6)

I just want to point out that I have a degree in philosophy and have never heard the word "epistemics" used in the context of academic philosophy. The word used has always been either "epistemology" or "epistemic" as an adjective in front of a noun (never on its own, always an adjective rather than a noun, and certainly never pluralized).

From what I can tell, "epistemics" seems to be weird EA Forum/LessWrong jargon. Not sure how or why this came about, since this is not obscure philosophy knowledge, nor is it hard to look up.

If you Google "epistemics" philosophy, you get 1) sources like Wikipedia that talk about epistemology, not "epistemics", 2) a post from the EA Forum and a page from the Forethought Foundation, which is an effective altruist organization, 3) some unrelated, miscellaneous stuff (i.e. neither EA-related nor academic philosophy-related), and 4) a few genuine but fairly obscure uses of the word "epistemics" in an academic philosophy context. This confirms that the term is rarely used in academic philosophy.

I also don't know what people in EA mean when they say "epistemics". I think they probably mean something like epistemic practices, but I actually don't know for sure.

I would discourage the use of the term "epistemics", particularly as its meaning is unclear, and would advocate for a replacement such as epistemology or epistemic practices (or whatever you like, but not "epistemics").

Toby Tremlett🔹 @ 2025-11-19T09:40 (+19)

I agree this is just a unique rationalist use. Same with 'agentic', though that has possibly crossed over into the mainstream, at least in tech-y discourse.

However I think this is often fine, especially because 'epistemics' sounds better than 'epistemic practices' and means something distinct from 'epistemology' (the study of knowledge).

Always good to be aware you are using jargon though!

Yarrow Bouchard 🔸 @ 2025-11-19T21:28 (+2)

There’s no accounting for taste, but 'epistemics' sounds worse to my ear than 'epistemic practices' because the clunky jargoniness of 'epistemics' is just so evident. It’s as if people said 'democratics' instead of 'democracy', or 'biologics' instead of 'biology'.

I also don’t know for sure what 'epistemics' means. I’m just inferring that from its use and assuming it means 'epistemic practices', or something close to that.

'Epistemology' is unfortunately a bit ambiguous and primarily connotes the subfield of philosophy rather than anything you do in practice, but I think it would also be an acceptable and standard use to talk about 'epistemology' as what one does in practice, e.g., 'scientific epistemology' or 'EA epistemology'. It’s a bit similar to 'ethics' in this regard, which is both an abstract field of study and something one does in practice, although the default interpretation of 'epistemology' is the field, not the practice, and for 'ethics' it’s the reverse.

It’s neither here nor there, but I think talking about personal 'agency' (terminology that goes back decades, long predating the rationalist community) is far more elegant than talking about a person being 'agentic'. (For AI agents, it doesn’t matter.)

niplav @ 2025-11-20T13:16 (+15)

I find "epistemics" neat because it is shorter than "applied epistemology" and reminds me of "athletics", with its (implied) focus on practice. I don't think anyone ever explained what "epistemics" refers to, and I thought it was pretty self-explanatory from the similarity to "athletics".

I also disagree with the general notion that jargon specific to a community is necessarily bad, especially if that jargon has fewer syllables. Most subcultures, engineering disciplines, and sciences invent words or abbreviations for more efficient communication, and while some of that may be due to trying to gatekeep, it's so universal that I'd be surprised if it doesn't carry value. There can be better and worse coinages of new terms, and three-, four-, or five-letter abbreviations such as "TAI" or "PASTA" or "FLOP" or "ASARA" are worse than words like "epistemics" or "agentic".

I guess ethics makes the distinction between normative ethics and applied ethics. My understanding is that epistemology is not about practical techniques, and that one can make a distinction here (just like the distinction between "methodology" and "methods").

I tried to figure out if there's a pair of suffixes that expresses the difference between the theoretical study of some field and its applied version. Claude suggests "-ology"/"-urgy" (as in metallurgy, dramaturgy) and "-ology"/"-iatry" (as in psychology/psychiatry), but notes that no such general pattern exists.

Yarrow Bouchard 🔸 @ 2025-11-20T14:14 (+4)

Applied ethics is still ethical theory, it’s just that applied ethics is about specific ethical topics, e.g. vegetarianism, whereas normative ethics is about systems of ethics, e.g. utilitarianism. If you wanted to distinguish theory from practice and be absolutely clear, you’d have to say something like ethical practices.

I prefer to say epistemic practices rather than epistemics (which I dislike) or epistemology (which I like, but is more ambiguous).

I don’t think the analogy between epistemics and athletics is obvious, and I would be surprised if even 1% of the people who have ever used the term epistemics have made that connection before.

I am very wary of terms that are never defined or explained. It is easy for people to assume they know what they mean, that there’s a shared meaning everyone agrees on. I really don’t know what epistemics means and I’m only assuming it means epistemic practices.

I fear that if I started asking different people to define epistemics, there’s a realistic chance we would quickly discover that different people have different and incompatible definitions. For example, some people might think of it as epistemic practices and some people might think of it as epistemological theory.

I am more anti-jargon and anti-acronyms than a lot of people. Really common acronyms, like AI or LGBT, or acronyms where the acronym is far better known than the spelled-out version, like NASA or DVD, are, of course, absolutely fine. PASTA and ASARA are egregious.

I’m such an anti-acronym fanatic I even spell out artificial general intelligence (AGI) and large language model (LLM) whenever I use them for the first time in a post.

My biggest problem with jargon is that nobody knows what it means. The in-group that is supposed to know what it means also doesn’t know what it means. They think they do, but they’re just fooling themselves. Ask them probing questions, and they’ll start to disagree and fight about the definition. This isn’t always true, but it’s true often enough to make me suspicious of jargon.

Jargon can be useful, but it should be defined, and you should give examples of it. If a common word or phrase exists that is equally good or better, then you should use that instead. For example, James Herbert recently made the brilliant point that, instead of "truthseeking" (an inscrutable term that, for all I know, would turn out to have no definite meaning if I went to the effort of getting multiple people to define it), effectivealtruism.org used to use an older term, “a scientific mindset”, which is nearly self-explanatory. Science is a well-known and well-defined concept. Truthseeking, whatever that means, is not.

This isn’t just true for a subculture like the effective altruist community; it’s also true for a field like academic philosophy (maybe philosophy is unique in this regard among academic fields). You wouldn’t believe the number of times people disagree about the basic meaning of terms. (For example, do sentience and consciousness mean the same thing, or two different things? What about autonomy and freedom?) This has made me very suspicious that shared jargon isn’t actually understood in the same way by the people who are using it.

Just avoiding jargon isn’t the whole trick (for one, it’s often impossible or undesirable); it’s got to be a multi-pronged approach.

You’ve really got to give examples of things. Examples are probably more important than definitions. Think about when you’re trying to learn a card game, a board game, or a parlour game (like charades). The instructions can be very precise and accurate, but reading the instructions out loud often makes half the table go googly-eyed and start shaking their heads. If the instructions contain even one example, or if you can watch one round of play, that’s so much more useful than a precise “definition” of the game. Examples, examples, examples.

Also, just say it simpler. Speak plainly. Instead of ASARA, why not AI doing AI? Instead of PASTA, why not AI scientists and engineers? It’s so much cleaner, and simpler, and to the point.

Yarrow Bouchard 🔸 @ 2025-11-15T04:47 (+5)

People in effective altruism or adjacent to it should make some public predictions or forecasts about whether AI is in a bubble.