Forecasting Newsletter: December 2020

By NunoSempere @ 2021-01-01T16:07 (+26)

Highlights

- Nigh unbeatable forecaster gives 85% chance that the newly identified COVID-19 strain is >30% more transmissible.

- Prediction markets and betting platforms have mostly already resolved the election in favor of Biden. However, new markets have been created about whether Trump will still be president in February. Those who believe he won't can still earn a circa 10% return.

- Metaculus announces their AI Progress tournament, with $50,000 in rewards.

Index

- Prediction Markets & Forecasting Platforms

- US Presidential Election Betting

- In the News

- Negative Examples

- Hard to Categorize

- Long Content

- Yearly Housekeeping

Sign up here or browse past newsletters here.

Prediction Markets & Forecasting Platforms

Metaculus organized the AI Progress tournament, covered by a Forbes contributor here; rewards are hefty ($50,000 in total). Questions for the first round, which focuses on the state of AI exactly six months into the future, can be found here. In the discussion page for the first round, some commenters point out that the questions so far aren't that informative or intellectually stimulating. Metaculus has also partnered with The Economist for a series of events in 2021, and was mentioned in this article (sadly paywalled). They are also hiring.

@alexrjl, a moderator on the platform, has gathered a list of forecasts about Effective Altruism organisations which are currently on Metaculus. This includes a series introducing ACE (Animal Charity Evaluators) to using forecasting as a tool to inform their strategy, which Misha Yagudin and I came up with after some back-and-forth with ACE.

Augur partnered with the cryptocurrency/protocol $COVER/CoverProtocol to provide protection from losses in case Augur got hacked. In effect, traders could also bet for or against the proposition that a given Augur market will be hacked, and they could do this in a separate protocol. Traders could then use these bets to provide or acquire insurance (source, secondary source).

In an ironic twist of fate, soon after commencing the partnership, CoverProtocol was hacked (details, secondary source). Even though the money was later returned, it seems that $COVER is being delisted from major cryptocurrency trading exchanges.

I added catnip.exchange to this list of prediction markets, which terraform created. More suggestions are welcome, and can be made by leaving a comment in the linked document.

During Christmas, I programmed an interface to view PolyMarket markets and trades, using their GraphQL API. PolyMarket is a speculative crypto prediction market, which I trust because they successfully resolved the first round of US presidential election markets without absconding with the money. PolyMarket’s frontpage has an annoying UI bug: it sometimes doesn't show the 2% liquidity provider fee, and my interface solves that.

Karen Hagar, with the collaboration of Scott Eastman, has started two forecasting-related nonprofit organizations:

- AZUL Foresight is devoted to forecasting and red team analysis, and is planning to have a geopolitical analysis column.

- LogicCurve is devoted to forecasting education, training and international outreach.

Both Karen and Scott are Superforecasters™ and “friends of the newsletter”; we previously worked together on EpidemicForecasting predicting the spread of COVID-19 in developing nations. They are eager to get started on forecasting, and are looking for clients. I’m curious to see what comes of it, particularly because forecasting can be combined with almost any interesting and important problem.

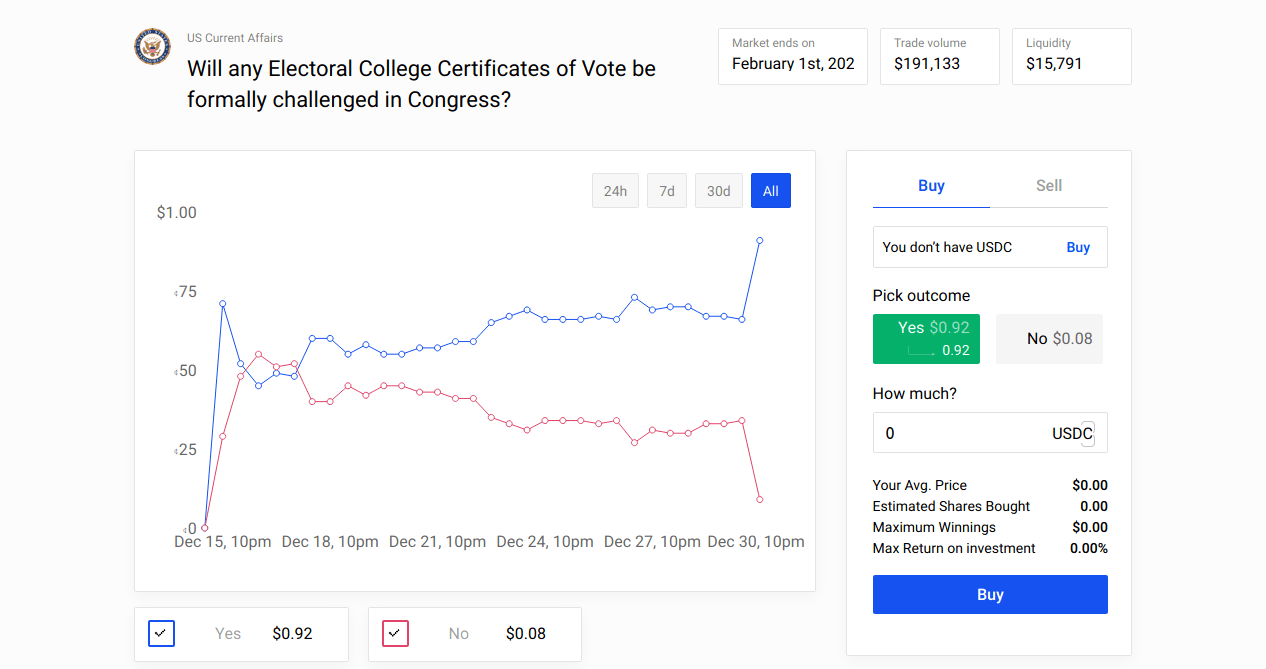

US Presidential Election Betting

The primary story for prediction markets this month was that they generally resolved in favor of Biden as the winner of the US presidential election early. This followed the terms and conditions of the markets: they were to be resolved according to whomever Fox, CNN, AP, and other major American news outlets called as the winner. However, the resolution was also seen as problematic by those who believe that Trump still has a chance. Prediction markets reacted by opening new questions asking whether Trump will still be president by February 2021. For example, here is one on FTX, and here is a similar one about Biden on PolyMarket.

As of late December one can still get, for example, a circa 1.1x return on PolyMarket by betting on Biden, if he wins, or a circa 15x return on FTX by betting on Trump, if Trump wins. FTX has Trump at ca. 6%, whereas PolyMarket has Biden at ca. 88%, and arbitrage hasn't leveled them yet (but note that their resolution criteria are different). However, the process of betting on one's preferred candidate depends on one's jurisdiction. As far as I understand (and I give ~50% to one of these being wrong or sub-optimal):

- Risk-averse American living in America: Use PredictIt.

- European, Russian, American living abroad, risk-loving American: Use FTX/PolyMarket.

- British: Use Smarkets/PolyMarket/PaddyPower.

Other platforms that I haven't looked much into are catnip.exchange, Augur, and Omen. For some of these platforms, one needs to acquire USDC, a cryptocurrency pegged to the dollar. For this, I've been using crypto.com, but it's possible that Coinbase or other exchanges offer better rates. If you're interested in making a bet, you should do so before the 6th of January, one of the last checkpoints in the American election certification process. Note that getting your funds into a market might take a couple of days.
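As a rough check on the returns quoted above: a binary share bought at price q, which pays out 1 if the event happens, returns 1/q per unit staked. The sketch below is a simplification. It assumes that payout structure, ignores fees, spreads and slippage, and treats the two markets as if they resolved identically, which they don't. The function name is only illustrative.

```python
def payout_multiple(price: float) -> float:
    """Gross payout per unit staked on a binary share bought at `price`.

    Assumes the share pays 1 if the event happens and 0 otherwise,
    and ignores fees and slippage (a simplification).
    """
    if not 0.0 < price < 1.0:
        raise ValueError("price must be strictly between 0 and 1")
    return 1.0 / price


# Prices quoted in the text for late December 2020.
biden_polymarket = 0.88
trump_ftx = 0.06

print(f"Biden on PolyMarket: {payout_multiple(biden_polymarket):.2f}x")
print(f"Trump on FTX: {payout_multiple(trump_ftx):.2f}x")

# Cost of holding one share of each side. A total below 1 is the gap
# that arbitrage would close if the two markets resolved identically.
combined_cost = biden_polymarket + trump_ftx
print(f"Combined cost of both sides: {combined_cost:.2f}")
```

The multiples land in the same ballpark as the circa figures in the text. Any difference would plausibly come from fees, rounding, or price movements.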

As for the object-level commentary, here is a piece by Vox, here one by the New York Times, and here one by The Hill. With regards to the case for Trump staying in the White House, here is a Twitter thread collecting information from a vocal member of the PolyMarket Discord Server. A selection of resources from that thread is:

- This site aims to collect all instances of purported election fraud and manipulation in the US.

- This thread elaborates on the role which Trump supporters are hoping Vice President Pence will take during the 6th of January joint session of the US Congress.

- An ELI5 on how Trump could win.

I'm giving more space to the views I disagree with, because I'm betting some money that Biden will, in fact, be inaugurated president, though nothing I can't afford to lose. I'm also aware that in matters of politics, it's particularly easy to confuse a 30% for a 3% chance, so I wouldn’t recommend full Kelly betting.
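To make the Kelly remark concrete, here is a minimal sketch of the standard Kelly formula for a binary share, f = (p*b - (1 - p)) / b, where b is the net profit per unit staked. The probabilities below are hypothetical, chosen only to mirror the "30% vs 3%" worry. They are not my actual estimates, and the function name is illustrative.

```python
def kelly_fraction(p_win: float, price: float) -> float:
    """Kelly-optimal share of bankroll to stake on a binary share.

    p_win: your own probability that the event happens.
    price: cost of a share paying 1 if it does (0 < price < 1).
    Returns 0 when the bet has no positive edge.
    """
    if not 0.0 <= p_win <= 1.0:
        raise ValueError("p_win must be between 0 and 1")
    if not 0.0 < price < 1.0:
        raise ValueError("price must be strictly between 0 and 1")
    net_odds = (1.0 - price) / price  # profit per unit staked on a win
    fraction = (p_win * net_odds - (1.0 - p_win)) / net_odds
    return max(0.0, fraction)


market_price = 0.88  # Biden share price quoted in the text

# Hypothetical beliefs: 97% if the other side has a 3% chance,
# 70% if that 3% was really a 30%.
confident = kelly_fraction(0.97, market_price)
mistaken = kelly_fraction(0.70, market_price)

print(f"Full Kelly at 97%: {confident:.2f} of bankroll")
print(f"Half Kelly at 97%: {0.5 * confident:.2f} of bankroll")
print(f"Full Kelly at 70%: {mistaken:.2f} of bankroll")
```

With these illustrative inputs, full Kelly stakes a large share of the bankroll at 97%, but recommends no bet at all at 70%. That sensitivity is the reason to bet a fraction of Kelly when one's probability might be badly off.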

As an interesting tidbit, most big bets on PolyMarket’s election markets are against Trump. For instance, the largest bet placed against Trump amounts to $1,480,000, whereas the largest bet placed on his success is $622,223.

In the News

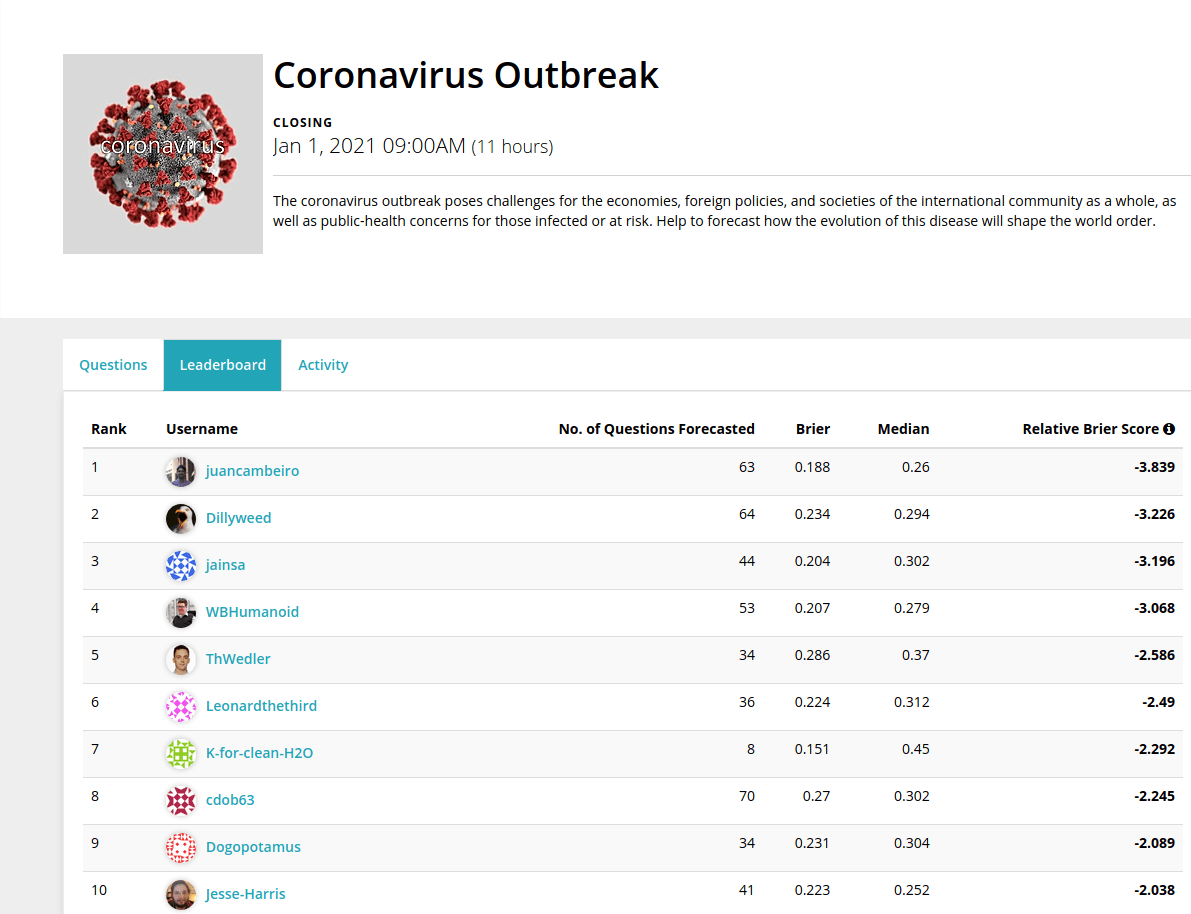

A new variant of COVID-19 has been identified (New York Times coverage here). The broader Effective Altruism and rationality communities are giving high probabilities to the possibility that this new variant is significantly more contagious. See here, here, here, and the last section here. Juan Cambeiro, an expert COVID-19 forecaster who has consistently outperformed competitors and experts across Metaculus and various Good Judgment platforms, gives an 85% chance that this specific new strain is >30% more transmissible. Because of Cambeiro’s past forecasting prowess, subject-matter expertise and nigh unbeatable track record, I suggest adopting his probabilities as your own, and acting accordingly. Note that the Metaculus questions he is forecasting are clearly, precisely, narrowly and tightly defined, so there isn’t room for doubt in that regard.

Budget forecasting in the US under COVID-19:

- Kentucky decides to go with a forecast which is very conservative and doesn't incorporate all available information. This is an interesting example of a legitimate use of forecasting which doesn't involve maximizing accuracy. The forecast will be used to craft the budget, so choosing a more pessimistic forecast might make sense if one is aiming for increased robustness. Or, in other words, the forecast isn't aiming to predict the expected revenue, but rather the lower bound of an 80% confidence interval.

- Louisiana chooses to delay their projections until early next year.

- Colorado finds that they have a $3.75 billion surplus on a $32.5 billion budget after budget cuts earlier in the year.
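The Kentucky item distinguishes between forecasting expected revenue and forecasting the lower bound of an 80% interval. As a minimal sketch of that difference, assume the forecast error is normally distributed. That is an assumption made here for illustration, and the figures are hypothetical, not Kentucky's.

```python
from statistics import NormalDist

# Hypothetical revenue forecast in billions of dollars (not real figures).
expected_revenue = 12.0
forecast_std_dev = 0.8

# Assumption: forecast uncertainty is roughly normal.
revenue = NormalDist(mu=expected_revenue, sigma=forecast_std_dev)

# A central 80% interval runs from the 10th to the 90th percentile.
lower_bound = revenue.inv_cdf(0.10)
upper_bound = revenue.inv_cdf(0.90)

print(f"Expected revenue: {expected_revenue:.2f}")
print(f"80% interval: {lower_bound:.2f} to {upper_bound:.2f}")
print(f"Budgeting to the lower bound leaves a cushion of "
      f"{expected_revenue - lower_bound:.2f}")
```

Under these assumptions, a budget built on the lower bound would be expected to be exceeded by actual revenue about nine times out of ten.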

The Washington Post editorializes: Maverick astrophysicist calls for unusually intense solar cycle, straying from consensus view (original source). On this topic, see also the New Solar Cycle 25 Question Series on Metaculus.

Our method predicts that SC25 [the upcoming sunspot cycle] could be among the strongest sunspot cycles ever observed, depending on when the upcoming termination happens, and it is highly likely that it will certainly be stronger than present SC24 (sunspot number of 116) and most likely stronger than the previous SC23 (sunspot number of 180). This is in stark contrast to the consensus of the SC25PP, sunspot number maximum between 95 and 130, i.e. similar to that of SC24.

An opinion piece by The Wall Street Journal talks about measures taken by the US Geological Survey to make climate forecasts less political (unpaywalled archive link).

The approach includes evaluating the full range of projected climate outcomes, making available the data used in developing forecasts, describing the level of uncertainty in the findings, and periodically assessing past expectations against actual performance to provide guidance on future projections.

Moving forward, this logical approach will be used by the USGS and the Interior Department for all climate-related analysis and research—a significant advancement in the government’s use and presentation of climate science.

These requirements may seem like common sense, but there has been wide latitude in how climate assessments have been used in the past. This new approach will improve scientific efficacy and provide a higher degree of confidence for policy makers responding to potential future climate change conditions because a full range of plausible outcomes will be considered.

Science should never be political. We shouldn’t treat the most extreme forecasts as an inevitable future apocalypse. The full array of forecasts of climate models should be considered. That’s what the USGS will do in managing access of natural resources and conserving our natural heritage for the American people.

Australian weather forecasters are incorporating climate change comments into their coverage (archive link).

As the year comes to a close, various news media are taking stock of past predictions, and making new predictions for 2021. These aren’t numerical predictions, and as such are difficult to score. Some examples:

- Politico: The Worst Predictions of 2020

- New Statesman: In January, I made ten predictions for 2020 – how did they turn out?

- CryptoBriefing.com: Crypto Predictions for 2020: Who Got It Right?

- New York Times: Clueless About 2020, Wall Street Forecasters Are at It Again for 2021

- The Wall Street Journal: Here’s a Market Forecast: 2021 Will Be Hard to Predict

- Financial Times: Forecasting the world in 2021.

- Forbes: What Will The Stock Market Return In 2021?

- Bloomberg: Ignore All 2021 Market Predictions - Except This One

- Washington Post: Five (somewhat) upbeat predictions for 2021

- News.Bitcoin.com: Zero to $318,000: Proponents and Detractors Give a Variety of Bitcoin Price Predictions for 2021

Negative Examples

Trump predicted that the US stock market would crash if Biden won. Though it still could, the forecast is not looking good. Here is CNN making that point.

Betting markets predicted no-deal after the failed Brexit summit. Though they did see a bump, the prediction market quoted went up briefly afterwards.

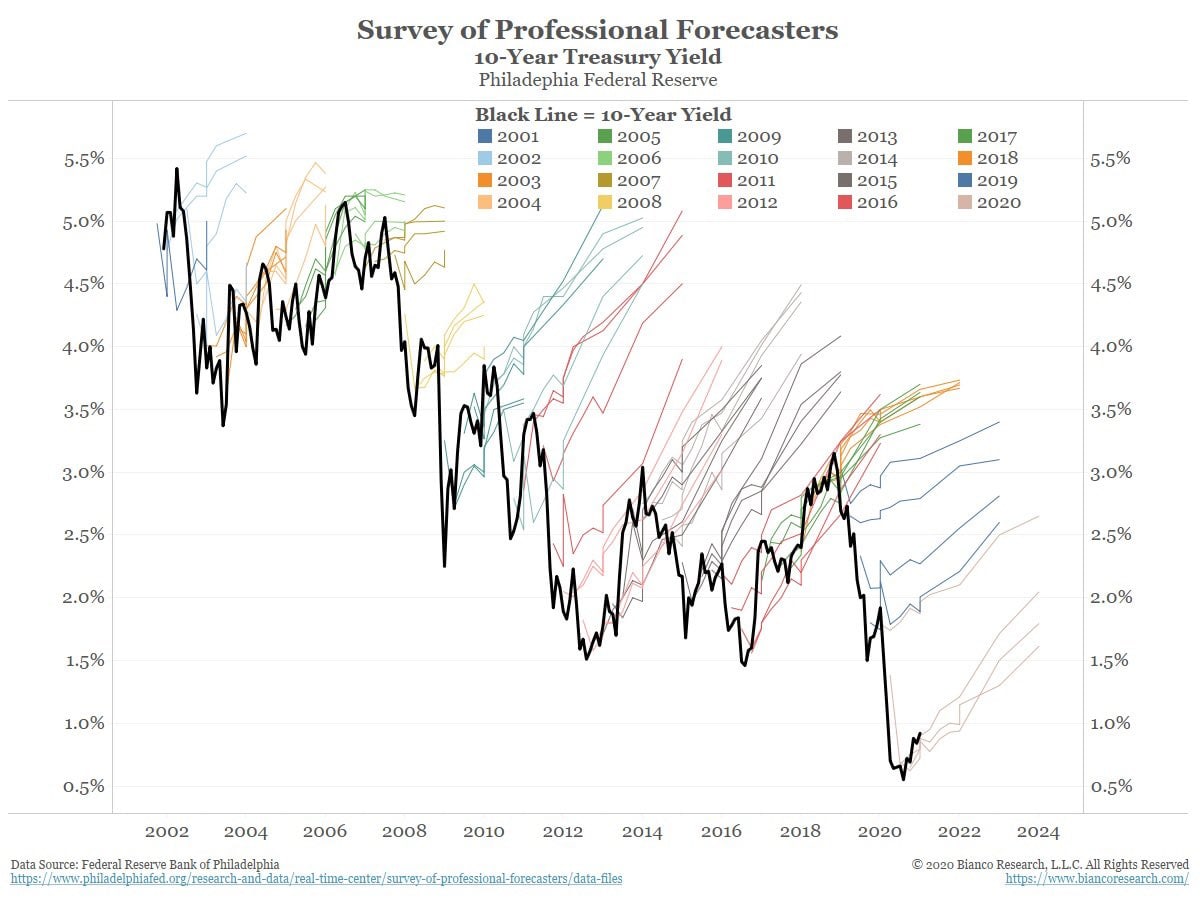

Twitter user @kjhealy visualizes forecasts from the Survey of Professional Forecasters:

Hard to Categorize

Twitch adds prediction functionalities (h/t @Pongo).

The US's National Oceanic and Atmospheric Administration (NOAA) is organizing a contest to forecast movements in the Earth's magnetic field, with prizes totaling $30,000.

The efficient transfer of energy from solar wind into the Earth’s magnetic field causes geomagnetic storms. The resulting variations in the magnetic field increase errors in magnetic navigation. The disturbance-storm-time index, or Dst, is a measure of the severity of the geomagnetic storm.

In this challenge, your task is to develop models for forecasting Dst that push the boundary of predictive performance, under operationally viable constraints, using the real-time solar-wind (RTSW) data feeds from NOAA’s DSCOVR and NASA’s ACE satellites. Improved models can provide more advanced warning of geomagnetic storms and reduce errors in magnetic navigation systems.

The US Congress adopts a plan to consolidate weather catastrophe forecasting. Previously, different agencies had been in charge of predicting weather phenomena.

A new flood forecasting platform has been implemented in Guyana. As is becoming usual for these kinds of projects, I am unable to evaluate the extent to which this platform will be useful.

Despite Dominica and Guyana’s agriculture sectors being the primary industries, the sector has constantly been affected by disasters. Recurring hurricanes, floods and droughts represent real threat to development and food security at the national level. It also increases the vulnerability of local communities and puts small farmers, live stock holders, and aggro-processors, who are primarily women, at risk.

The SlateStarCodex subreddit talks about a paper by Andrew Gelman, which discusses flaws with "pure Bayesianism".

Bayesian updating only works if the "true model" is in the space of models you're updating over. This is never the case in practice. And, in fact Bayesian updating can lead you to becoming ever more convinced of a given model that is clearly false.
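A toy illustration of the point in the quote, not taken from the paper: suppose a coin lands heads half the time, but the only models under consideration say 30% or 80%. The two candidate models and the alternating data below are assumptions chosen for the example.

```python
import math

# Two candidate models for the probability of heads; neither is the truth.
candidate_models = [0.3, 0.8]
log_posterior = {p: math.log(0.5) for p in candidate_models}  # uniform prior

# Deterministic data with a true heads frequency of 0.5: H, T, H, T, ...
observations = [1, 0] * 50

# Bayesian updating: add each observation's log-likelihood under each model.
for heads in observations:
    for p in candidate_models:
        log_posterior[p] += math.log(p if heads else 1.0 - p)

# Normalise in log space to avoid numerical underflow.
max_log = max(log_posterior.values())
weights = {p: math.exp(lp - max_log) for p, lp in log_posterior.items()}
total = sum(weights.values())
posterior = {p: w / total for p, w in weights.items()}

for p, prob in posterior.items():
    print(f"P(heads probability = {p}) = {prob:.6f}")
```

The posterior ends up concentrated almost entirely on the 30% model, even though it is clearly false. Updating picks the least bad model in the set and grows ever more confident in it.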

DartThrowingSpiderMonkey (@alexrjl) presents the fourth video in his Introduction to Forecasting Series. This time it’s about making Guesstimate models for questions for which a base rate is nonexistent or hard to find.

xkcd has a list of comparisons to help visualize different probabilities.

Volcano forecasting models might help New Zealand tourists who want to visit risky places.

Long Content

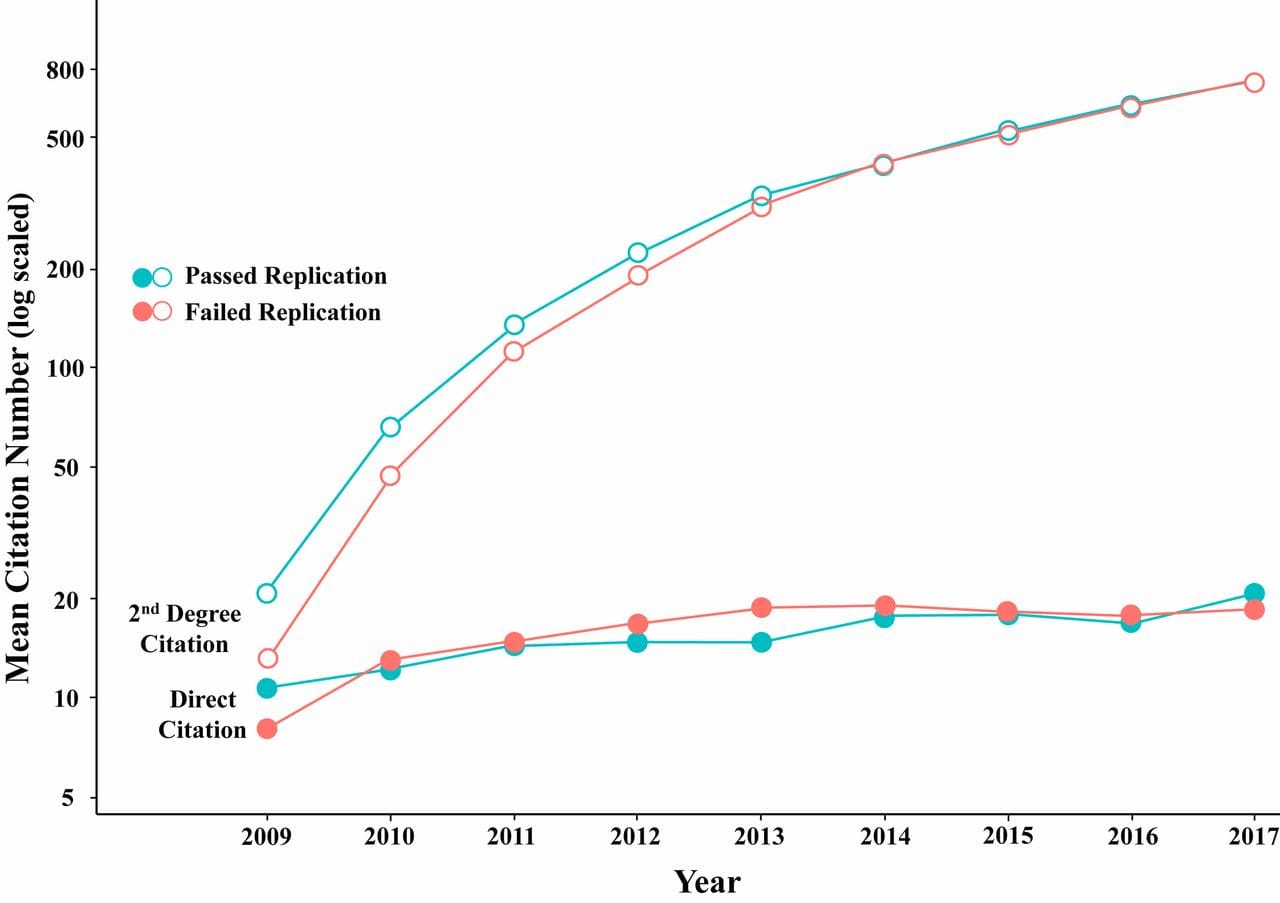

Paper: Estimating the deep replicability of scientific findings using human and artificial intelligence (secondary source).

The authors of the paper train a machine learning model to predict replicability of research results, using a relatively meager sample of 96 papers. The papers are taken from the original Reproducibility Project: Psychology, and the model has been validated on various other datasets. While the model does only slightly better than human prediction markets, it’s important to note that a machine learning system, once set up, would be much faster. Interestingly, the authors "did not detect statistical evidence of model bias regarding authorship prestige, sex of authors, discipline, journal, specific words, or subjective probabilities/persuasive language". Otherwise, their setup is relatively simple: a pre-processing step which translates words to vectors using Word2vec, followed by a random forest combined with bagging.

A nice tidbit from the paper is that past citation count isn’t very predictive of future replication:

Article: Forecasting the next COVID-19.

Implementing better measures and institutions to predict pandemics is probably a good idea. However, I'd expect the next catastrophic event on the scale of COVID-19 to not be a pandemic.

Princeton disease ecologist C. Jessica Metcalf and Harvard physician and epidemiologist Michael Mina say that predicting disease could become as commonplace as predicting the weather. The Global Immunological Observatory, like a weather center forecasting a tornado or hurricane, would alert the world, earlier than ever before, to dangerous emerging pathogens like SARS-CoV-2.

A GIO [Global Immunological Observatory] would require an unprecedented level of collaboration between scientists and doctors, governments and citizens across the planet. And it would require blood.

Until recently, most blood-serum tests detected antibodies for a single pathogen at a time. But recent breakthroughs have expanded that capability enormously. One example, a method developed at Harvard Medical School in 2015 called VirScan, can detect over 1,000 pathogens, including all of the more than 200 known viruses to infect humans, from a single drop of blood.

Uncertainty Toolbox is "a python toolbox for predictive uncertainty quantification, calibration, metrics, and visualization", available on GitHub. The toolbox, as per the accompanying paper, was created in order to better calibrate machine learning models. Previous similar projects in this area are: Ergo and Python Prediction Scorer.

The Alpha Pundits Challenge was a proposal by the Good Judgment Project to take predictions by pundits, convert their verbal expressions of uncertainty into probabilities, and compare those probabilities to predictions made by superforecasters. Tetlock received some unrestricted funding from Open Philanthropy back in 2016, and the grant mentioned the proposal. However, since there isn’t more publicly available information about the project, we can guess that it was probably abandoned.

Whenever alpha-pundits balk at making testable claims (like an 80% chance of -2% or worse global deflation in 2016), GJP's ideologically balanced panels of intelligent readers will make good-faith inferences about what the pundits meant. Using all the textual clues available, what is the most plausible interpretation of “serious possibility” of global deflation? GJP will then publish the readers’ estimates and of course invite alpha-pundits to make any corrections if they feel misinterpreted.

GJP Superforecasters will also make predictions on the same issues. And the match will have begun—indeed it has already begun.

For instance, former Treasury Secretary Larry Summers recently published an important essay on global secular stagnation in the Washington Post which included a series of embedded forecasts, such as this prediction about inflation and central bank policies: "The risks tilt heavily toward inflation rates below official targets." It is a catchy verbal salvo, but just what it means is open to interpretation.

Our panel assigned a range of 70–99% to that forecast, centering on 85%. When asked that same question, the Superforecasters give a probability of 72%. These precise forecasts can now be evaluated against reality.

What we propose is new, even revolutionary, and could with proper support evolve into a systemic check on hyperbolic assertions made by opinion makers in the public sphere. It is rigorous, empirical, repeatable, and backed by the widely-recognized success of the Good Judgment Project based at the University of Pennsylvania.
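The proposal says that the panel's 85% and the Superforecasters' 72% "can now be evaluated against reality". One common way to do that is the Brier score, the squared difference between the forecast and the outcome, where lower is better. The sketch below is illustrative only: the proposal does not say which scoring rule it would use, and both outcomes are shown because the resolution isn't given here.

```python
def brier_score(probability: float, outcome: int) -> float:
    """Squared error between a probability and a 0/1 outcome (lower is better)."""
    if outcome not in (0, 1):
        raise ValueError("outcome must be 0 or 1")
    return (probability - outcome) ** 2


# Probabilities quoted in the proposal for the Summers forecast.
forecasts = {"reader panel (pundit)": 0.85, "Superforecasters": 0.72}

# Hypothetical resolutions: 1 if the prediction came true, 0 if not.
for outcome in (1, 0):
    print(f"If the outcome is {outcome}:")
    for name, probability in forecasts.items():
        print(f"  {name}: {brier_score(probability, outcome):.4f}")
```

The more confident forecast scores better if the event happens and worse if it doesn't, so a single question says little. A comparison like the one proposed would need scores averaged over many questions.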

Yearly Housekeeping

I'm trying to improve this newsletter’s content and find feedback really valuable. If you could take 2 minutes to fill out this form and share your thoughts, that would go a long way.

I've moved the newsletter from Mailchimp to forecasting.substack.com, where I’ve added an optional paid subscription. Because I conceive of this newsletter as a public good, I’m not planning on offering restricted content, so the main benefit to paid subscribers would be the personal satisfaction of funding a public good.

Note to the future: all links are added automatically to the Internet Archive. In case of link rot, go here and input the dead link.

Disconfirmed expectancy is a psychological term for what is commonly known as a failed prophecy. According to the American social psychologist Leon Festinger's theory of cognitive dissonance, disconfirmed expectancies create a state of psychological discomfort because the outcome contradicts expectancy. Upon recognizing the falsification of an expected event an individual will experience the competing cognitions, "I believe [X]," and, "I observed [Y]." The individual must either discard the now disconfirmed belief or justify why it has not actually been disconfirmed. As such, disconfirmed expectancy and the factors surrounding the individual's consequent actions have been studied in various settings.

Source: Disconfirmed expectancy, Wikipedia

rajlego @ 2021-01-02T22:48 (+1)

Is there a way to subscribe to this newsletter to see new posts?

NunoSempere @ 2021-01-03T09:28 (+2)

Yes, there is a substack, which allows you to subscribe by email (and has better formatting): forecasting.substack.com