MATS Summer 2023 Retrospective

By utilistrutil @ 2023-12-02T00:12 (+28)

Co-Authors: @Rocket, @Juan Gil, @Christian Smith, @McKennaFitzgerald, @LauraVaughan, @Ryan Kidd

The ML Alignment & Theory Scholars program (MATS, formerly SERI MATS) is an education and research mentorship program for emerging AI safety researchers. This summer, we held the fourth iteration of the MATS program, in which 60 scholars received mentorship from 15 research mentors. In this post, we explain the elements of the program, lay out some of the thinking behind them, and evaluate our impact.

Summary

Key details about the Summer 2023 Program:

- Educational attainment of MATS scholars:

- 30% of scholars are students.

- 88% have at least a Bachelor's degree.

- 10% are in a Master’s program.

- 10% are in a PhD program.

- 13% have a PhD.

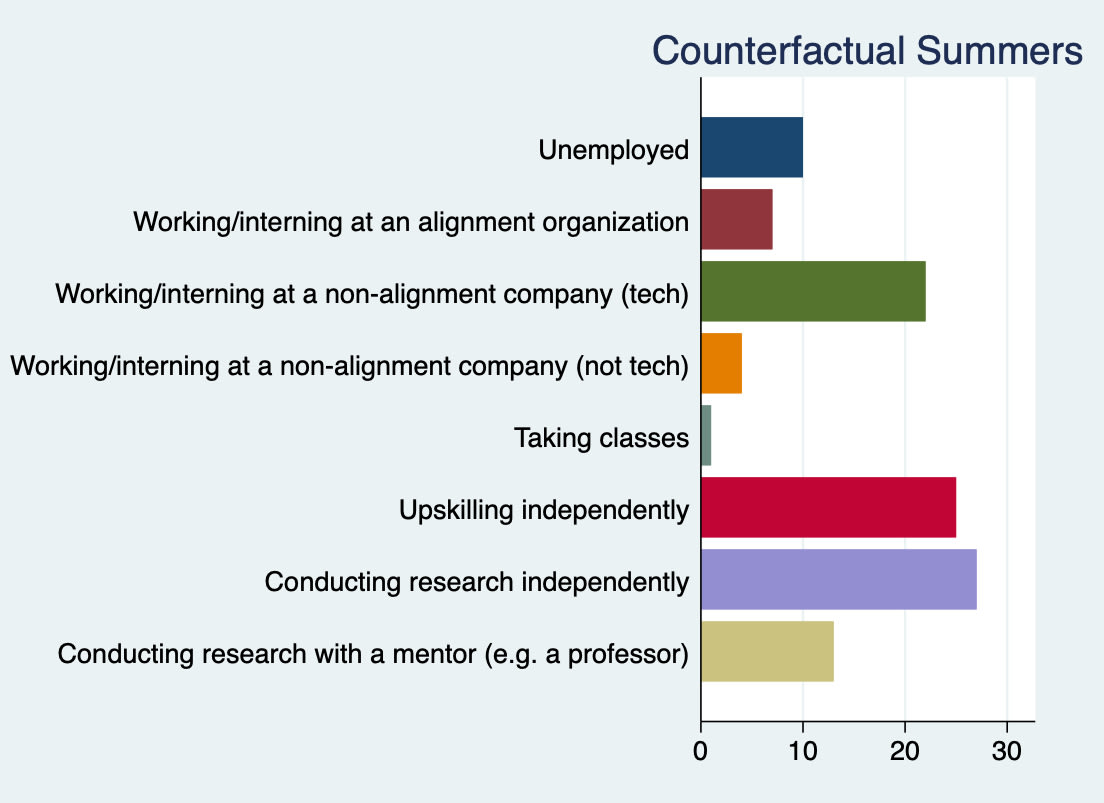

- If not for MATS, scholars might have worked at a tech company (41%), upskilled independently (46%), or conducted research independently over the summer (50%). (Note: this was a multiple-choice response.)

Key takeaways from our impact evaluation:

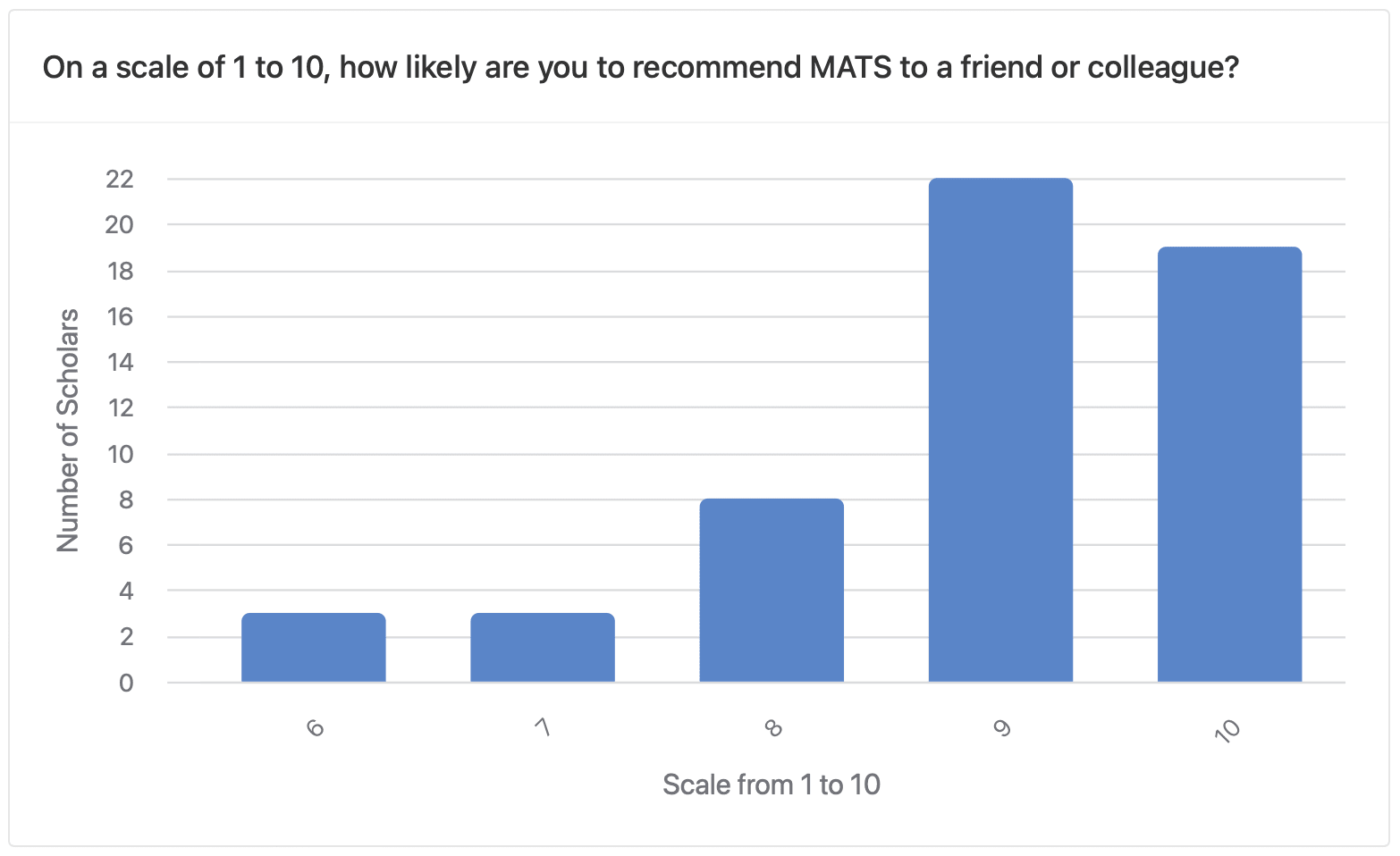

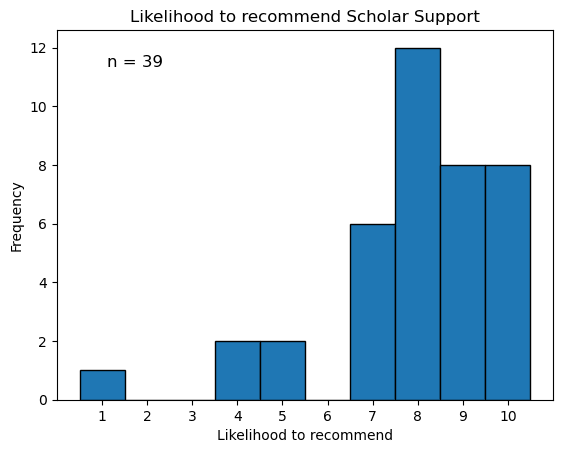

- MATS scholars are highly likely to recommend MATS to a friend or colleague. Average likelihood: 8.9/10.

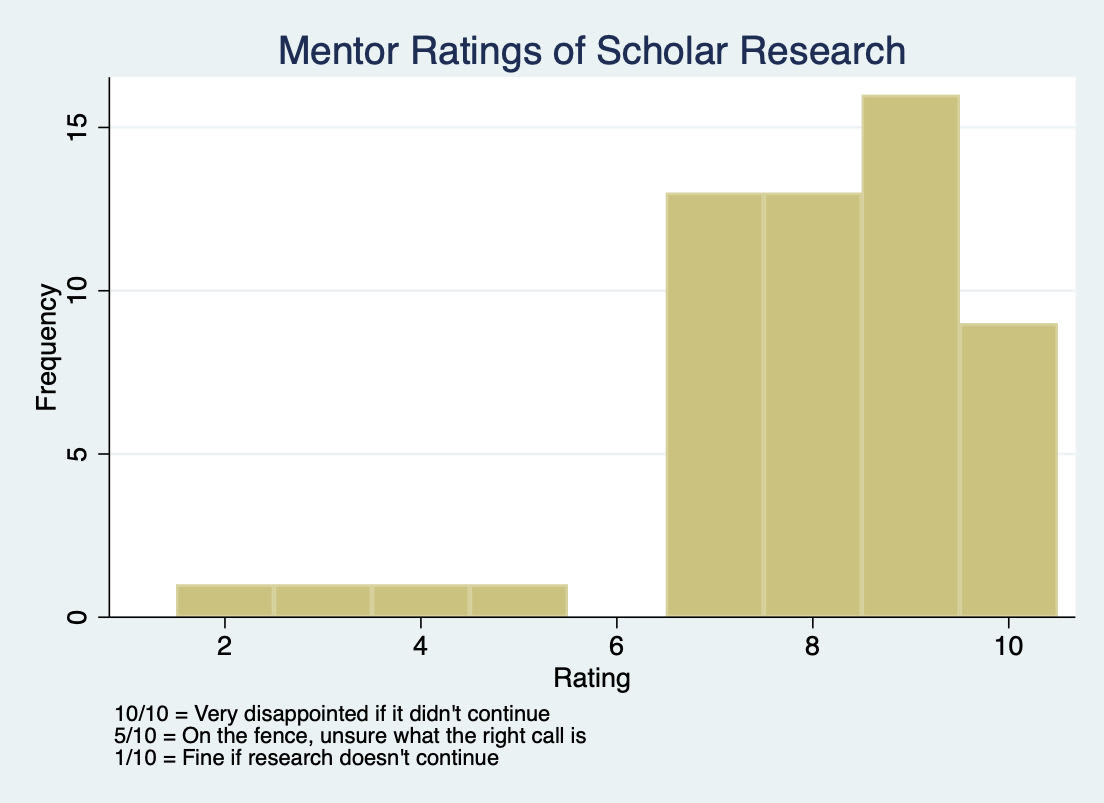

- Mentors rated their enthusiasm for their scholars to continue with their research at 7/10 or greater for 94% of scholars.

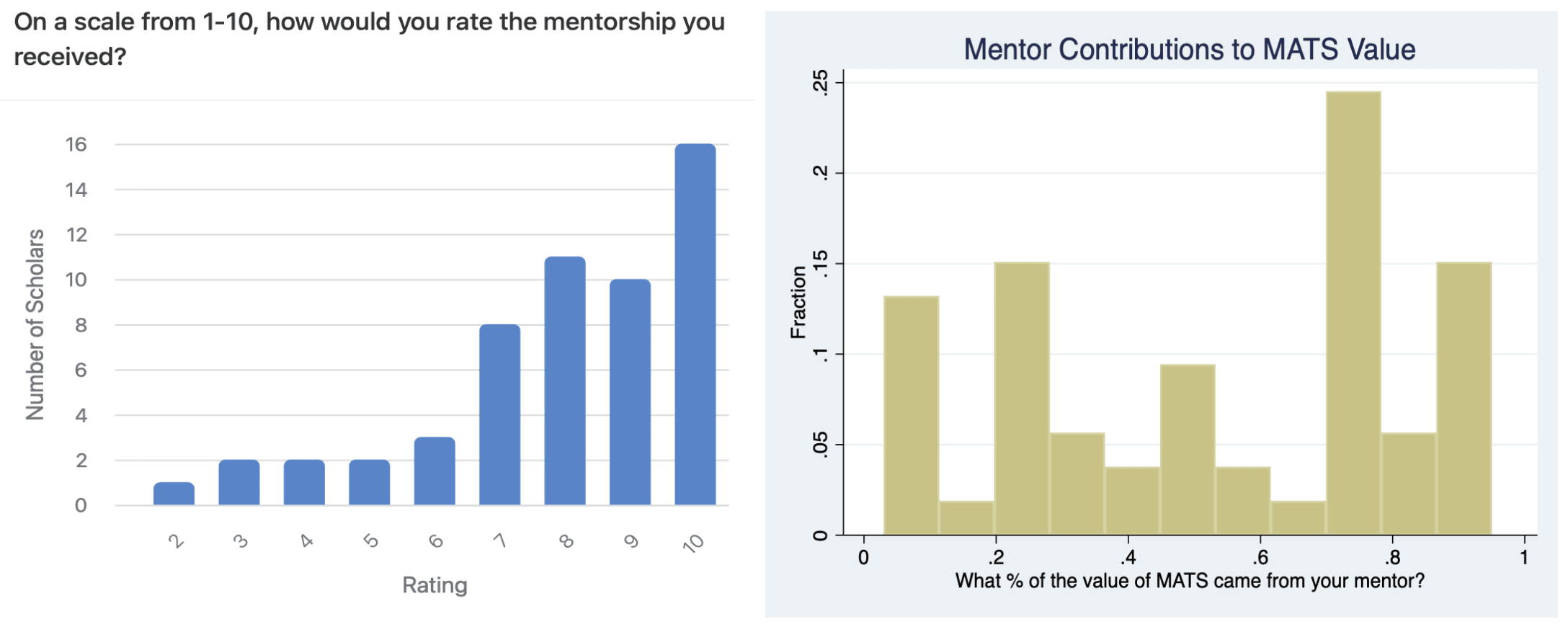

- MATS scholars rate their mentors highly. Average rating: 8.0/10.

- 61% of scholars report that at least half the value of MATS came from their mentor.

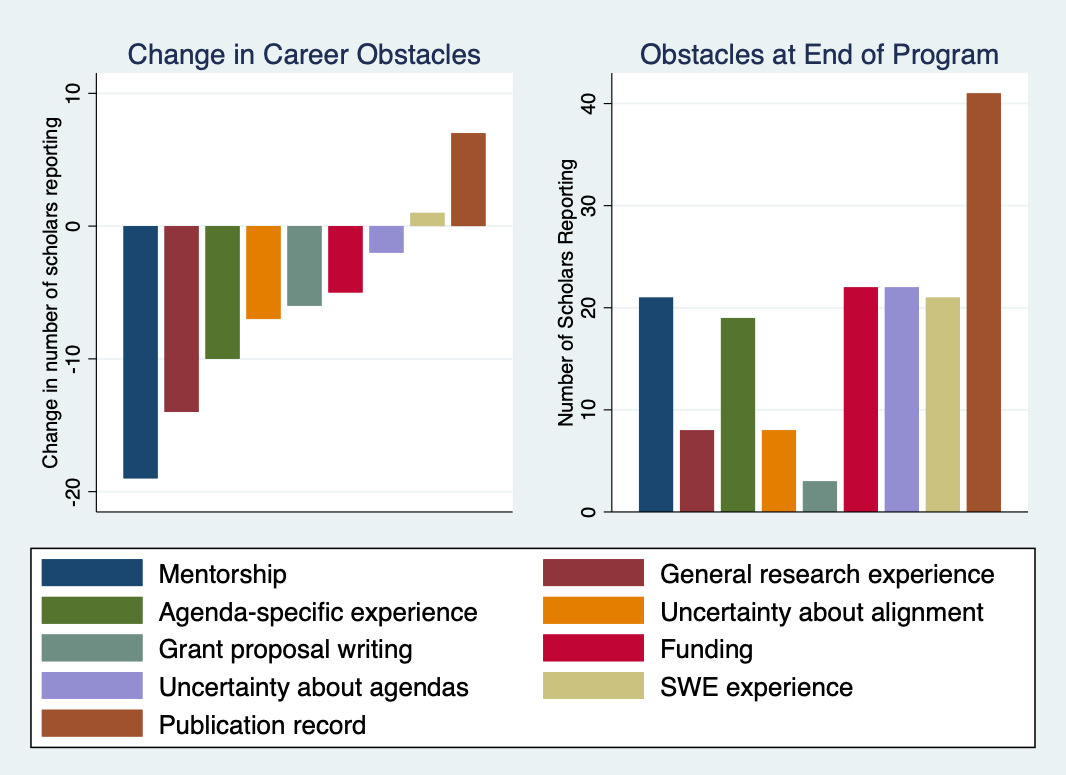

- After MATS, scholars reported facing fewer obstacles to a successful alignment career than they did at the start of the program.

- Most scholars (75%) still reported their publication record as an obstacle to a successful alignment career at the conclusion of the program.

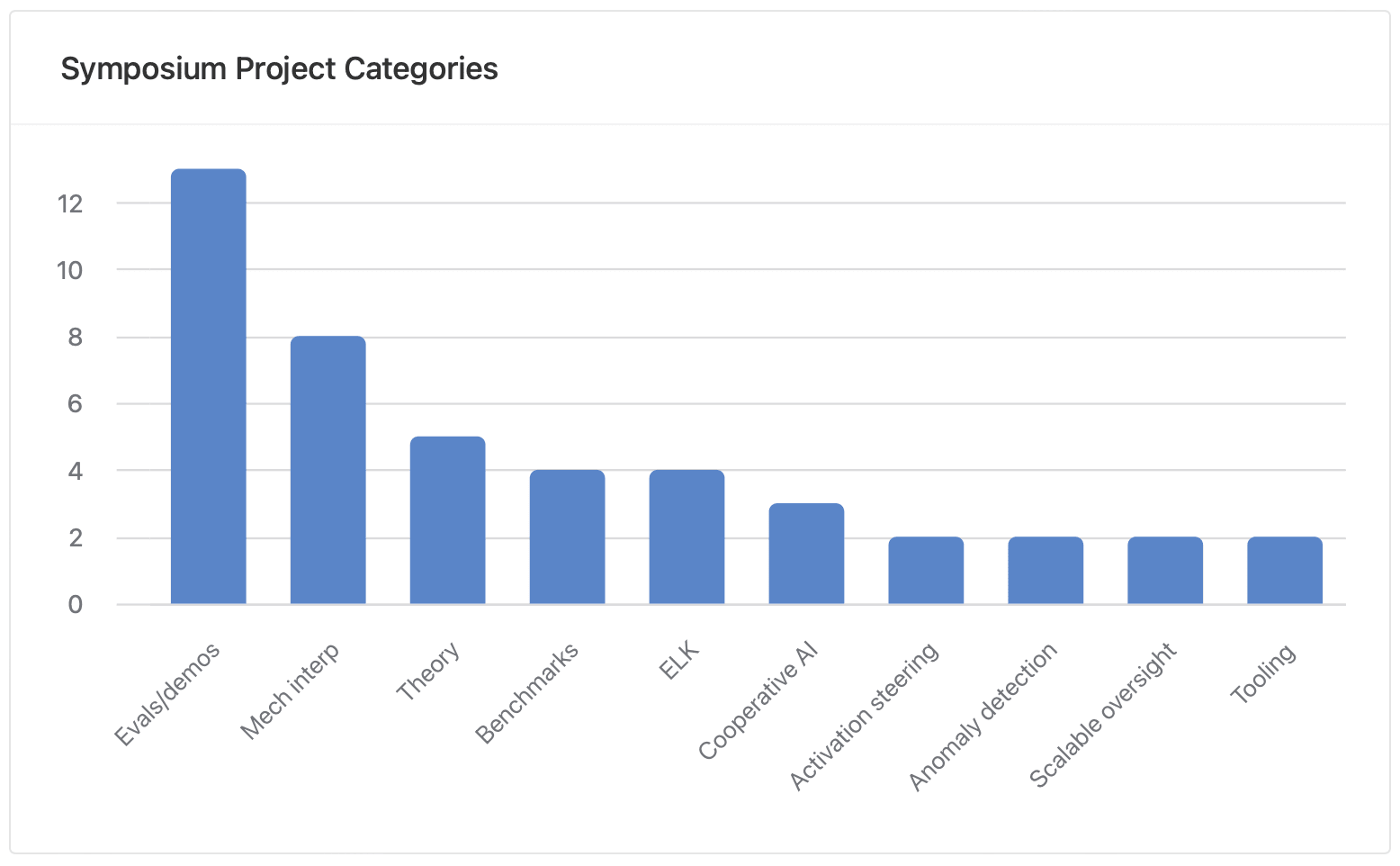

- ⅓ of final projects involved evals/demos and ⅕ involved mechanistic interpretability, representing a large proportion of the cohort’s research interests.

- Scholars self-reported improvements to their research ability on average:

- Slight increases to the breadth of their AI safety knowledge (+1.75 on 10-point scale over the program).

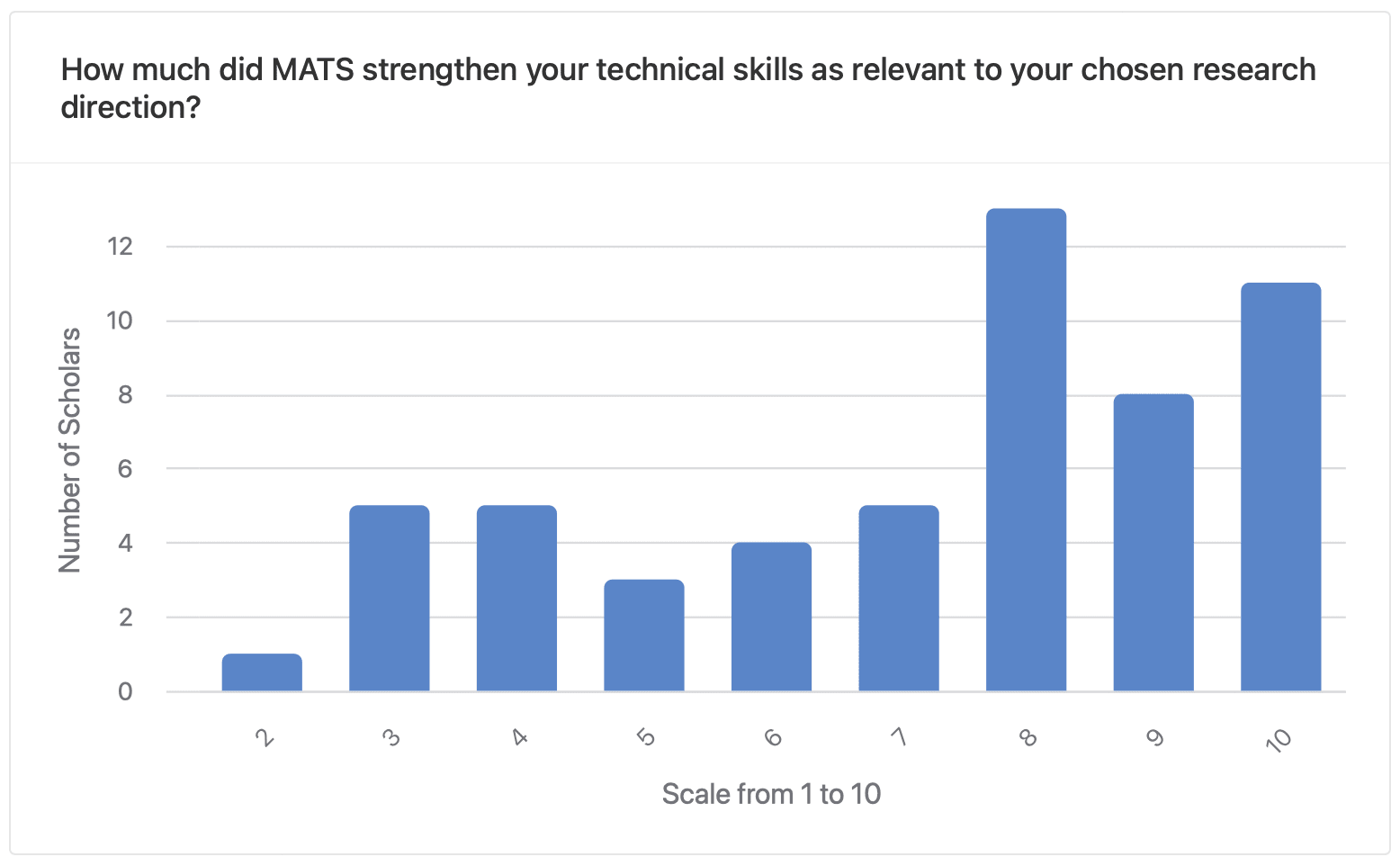

- Moderate strengthening of technical skills compared to counterfactual summer (7.2/10, where 10/10 is "significant improvement compared to counterfactual summer").

- Moderate improvements to ability to independently iterate on research direction (7.0/10, where 10/10 is "significant improvement") and ability to develop a theory of change for their research (5.9/10, where 10/10 is "substantially developed").

- The typical scholar reported making 4.5 professional connections (std. dev. = 6.2) and meeting 5 potential research collaborators on average (std. dev. = 6.8).

- MATS scholars are likely to recommend Scholar Support, our research/productivity coaching service. Average response: 7.9/10.

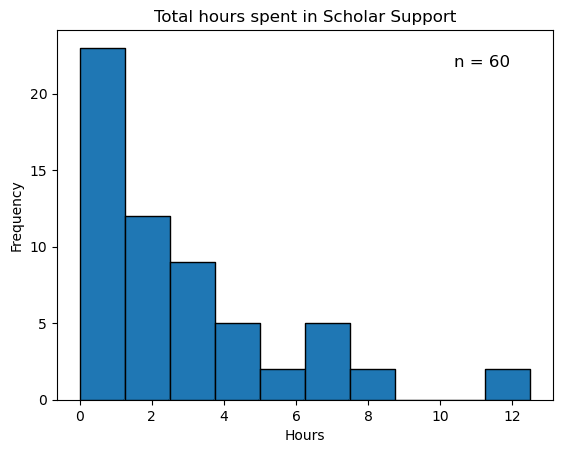

- 49 of the 60 scholars in the Research Phase met with a Scholar Support Specialist at least once.

- The average scholar who met with Scholar Support at least once spent 3.4 hours meeting with Scholar Support throughout the program.

- The average and median scholar report that they value the Scholar Support they received at $3705 and $750, respectively.

- The average scholar reports gaining 22 productive hours over the summer due to Scholar Support.

Key changes we plan to make to MATS for the Winter 2023-24 cohort:

- Filtering better during the application process;

- Pivoting Scholar Support to additionally focus on research management;

- Providing additional forms of support to scholars, particularly technical support and professional development.

Note that it is too early to evaluate any career benefits that MATS provided the most recent cohort; a comprehensive post assessing career outcomes for MATS alumni 6-12 months after their program experience is forthcoming.

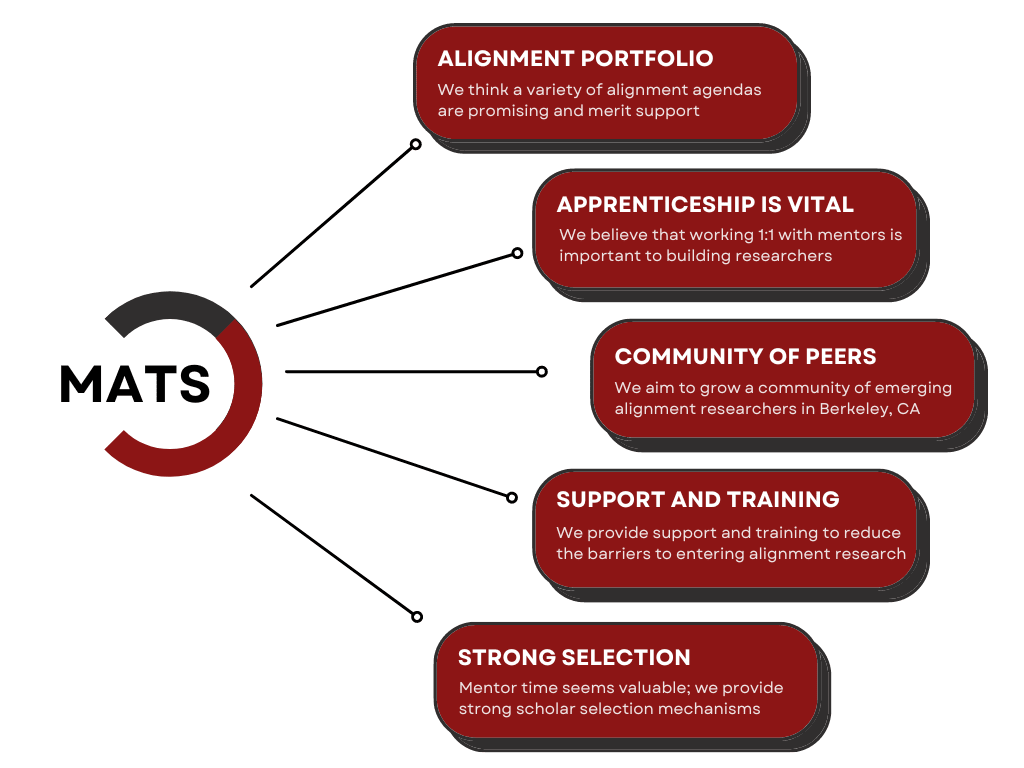

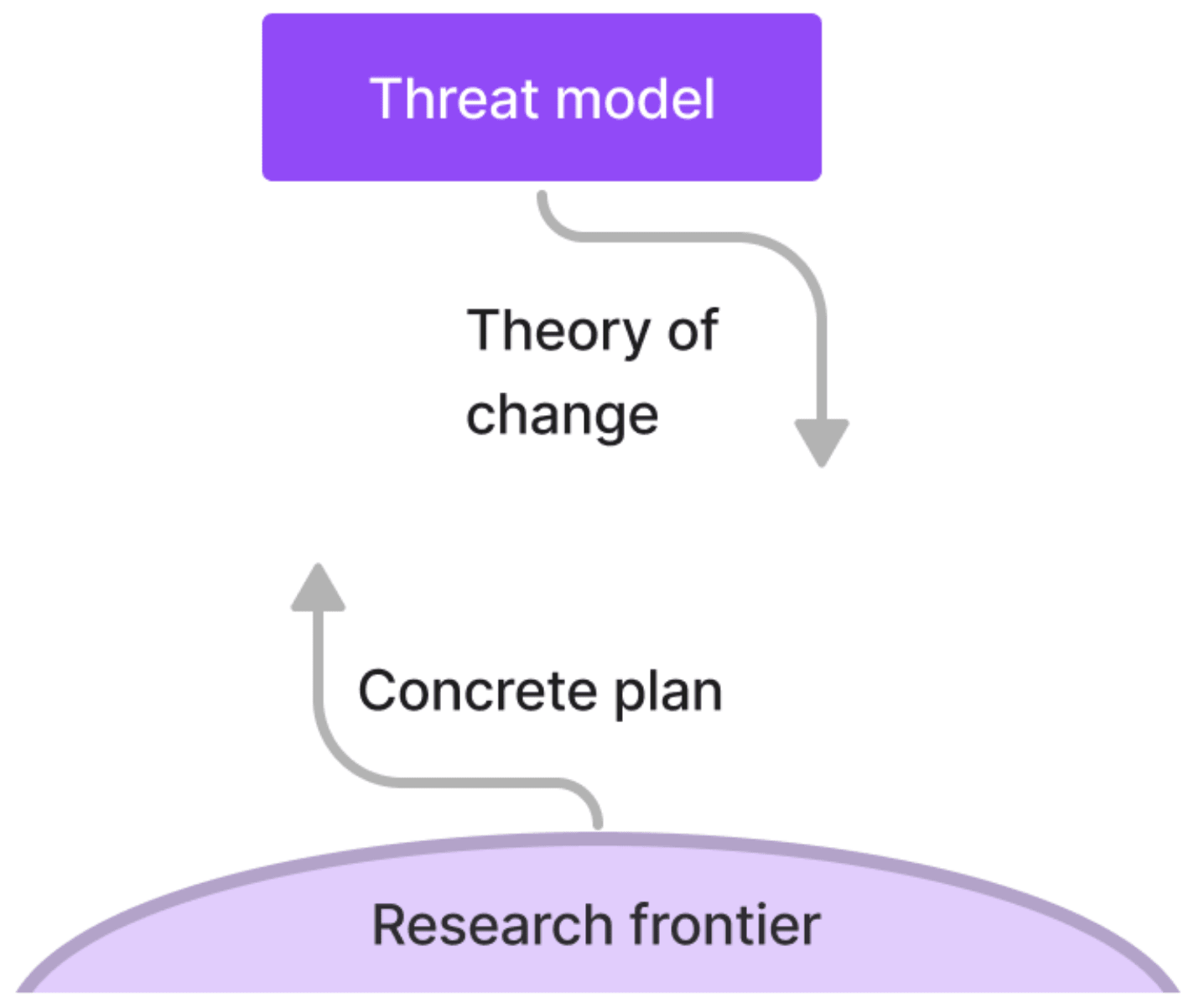

Theory of Change

MATS helps expand the talent pipeline for AI safety research by equipping scholars to work on AI safety at existing organizations, found new organizations, or pursue independent research. To this end, MATS provides funding, housing, training, office spaces, research assistance, networking opportunities, and logistical support to scholars, reducing the barriers for senior researchers to take on mentees. By mentoring MATS scholars, these senior researchers also benefit from research assistance and improve their mentorship skills, preparing them to supervise future research more effectively. Read more about our theory of change here. Further articles expanding our theory of change are forthcoming.

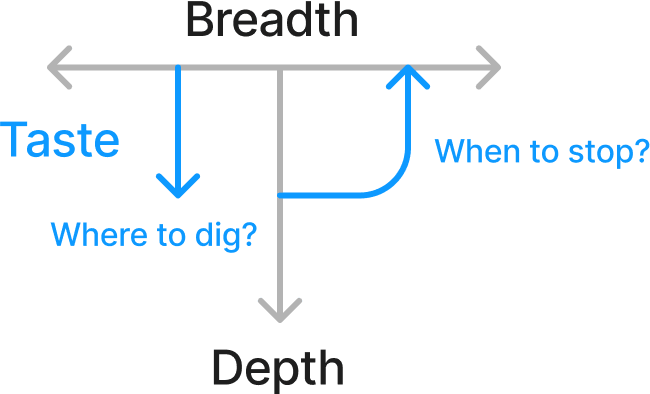

MATS aims to select and develop research scholars primarily along three dimensions:

- Depth: Thorough understanding of a specialist field of AI safety research and sufficient technical ability (e.g., ML, CS, math) to pursue novel research in this field.

- Breadth: Broad familiarity with AI safety organizations and research agendas, a large “toolbox” of useful theorems and knowledge from diverse subfields, and the ability to conduct literature reviews.

- Taste: Good judgment about research direction and strategy, including what first steps to take, what assumptions to question, what novel hypotheses to consider, and when to cease pursuing a line of research.

Overview of MATS Summer 2023 Program

Schedule Overview

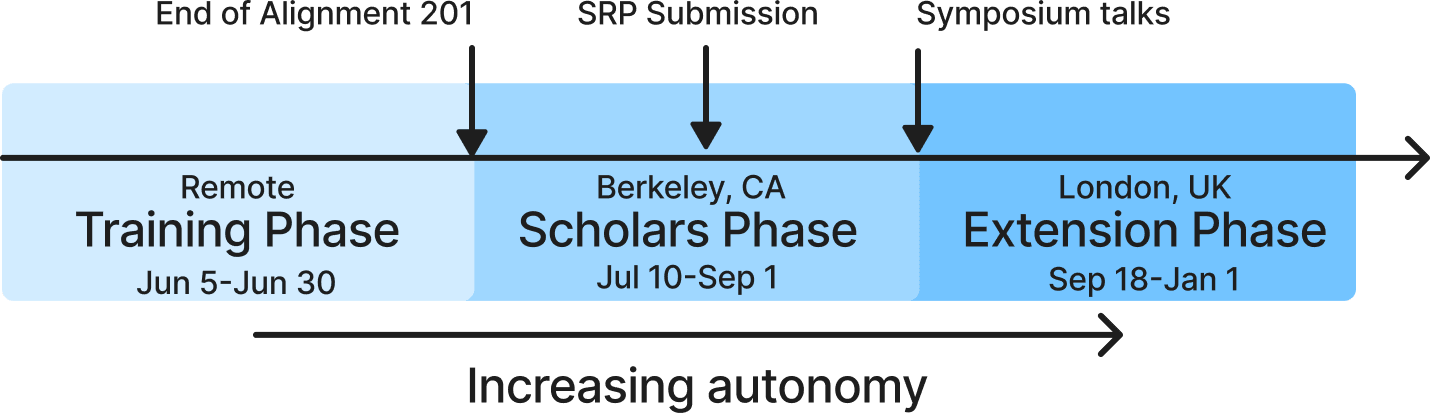

The MATS program in summer 2023 was composed of three phases.

- Training Phase: Scholars accepted into the remote Training Phase completed Alignment 201 with a discussion group and studied other educational materials assigned by their mentors.

- Research Phase: During the Berkeley Research Phase, scholars conducted research, submitted a written “Scholar Research Plan” (SRP), conducted projects, networked with the Bay Area AI safety community, connected with and worked with collaborators, and presented their findings to an audience of peers and professional researchers.

- Extension Phase: Many scholars applied to the Extension Phase in London and Berkeley, where they are continuing to pursue their research with MATS support.

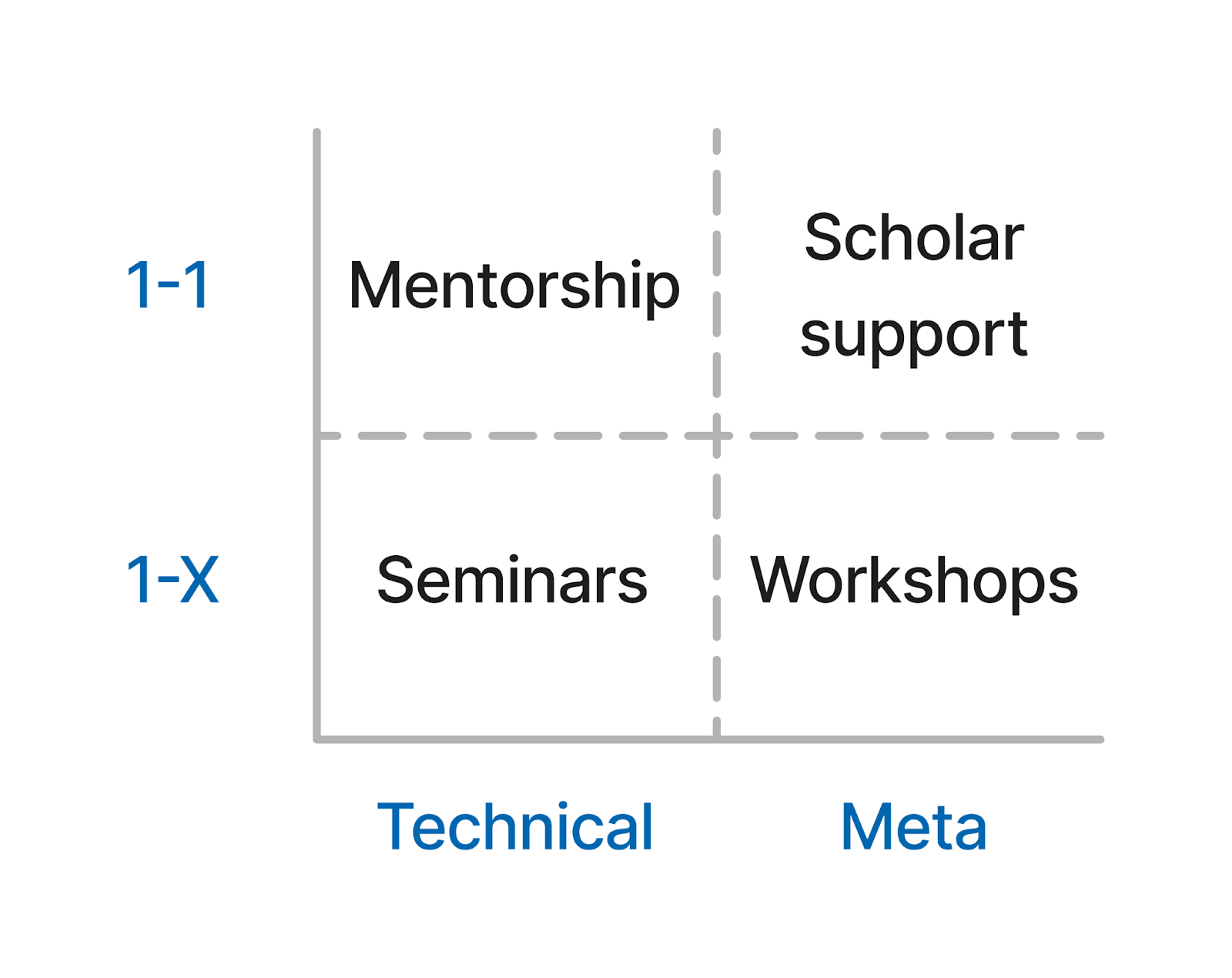

Program Elements

While mentorship continues to be the core of MATS, we choose additional program elements to cover other forms of learning and upskilling. We categorize these program elements according to whether the upskilling/support focus is technical or meta-level, and according to whether the format is 1-on-1 or 1-on-many.

Scholar Support

The Scholar Support team at MATS aims to improve scholar outcomes during the MATS Research and Extension Phases via ongoing, tailored support that complements mentorship.

Why Scholar Support?

Identifying forms of support not covered by mentors has the potential to be high-leverage in increasing the overall impact of the program. Based on observations and conversations with scholars in previous iterations of MATS, we believe that scholar support adds significant additional value for the following reasons:

- Mentors are time-constrained. Mentors often have only enough time (e.g. ~1-2 hours per week) to talk with scholars about technical components of their research. Scholar Support can enable more efficient mentor interactions by helping scholars clarify what forms of mentor support are most valuable for them, and then by providing other forms of support that mentors don’t get to with limited time.

- Scholars are sometimes disincentivized from seeking support from mentors. Because mentors evaluate their mentees for progression within MATS (and possibly to external grantmakers), scholars can feel disincentivized from revealing problems that they are experiencing. To address this, Scholar Support airgaps their support from evaluators, including mentors.

- Mentors may not be best situated to provide forms of support less specialized to technical research, like career coaching or productivity advice. In contrast, Scholar Support can hire coaches with proficiencies in these areas.

Examples of Scholar Support focus areas

Scholar Support helps different scholars in different ways, but the following non-exhaustive list is representative of what is typically discussed in 1-1 Scholar Support meetings:

- Research project development and management: help scoping out possible research directions, deciding between research directions, and finishing research projects.

- Increasing productivity: finding ways for scholars to (sustainably) spend more time per day conducting research or increasing the quality of their research time.

- Career coaching: scoping out different career options, identifying key considerations to clarify for making these decisions, and increasing the chance of career success (e.g., through networking and skill development).

- Improving communication with mentors and collaborators.

Workshops

We held nine workshops on the following topics:

- Crux decomposition;

- Theories of change for research;

- Milestone: Scholar Research Plans;

- Preventing and managing burnout;

- Grant applications + budgets;

- Exploring base models with Loom;

- Research collaborator speed meetings;

- Milestone: Scholar Symposium Talks;

- Career planning and offboarding.

Additionally, Scholar Support staff hosted several open office hour sessions for workshopping scholars’ writing and presentations.

Networking Events

In addition to the collaborator speed meeting workshop, we offered several networking events:

- Dinner with members of FAR Labs;

- Dinner with members of Constellation;

- Career Fair featuring 12 organizations and independent researchers;

- Three parties attended by many members of the Bay Area AI safety community.

Seminars

We hosted 23 seminars with guest speakers: Buck Shlegeris, Rick Korzekwa, Tom Davidson, Tamsin Leake, Victoria Krakovna, Daniel Paleka, Jeffrey Ladish, Adam Gleave, Steven Byrnes, Daniel Filan, Tom Everitt, William Saunders, Lennart Heim, Usman Anwar, Neel Nanda, Dylan Hadfield-Menell, Ryan Greenblatt and Evan Hubinger, Nora Ammann, Anthony DiGiovanni, Marius Hobbhahn, Paul Colognese and Arun Jose, Ajeya Cotra.

Community Health

Scholars had access to a Community Manager to discuss community health concerns, such as conflicts with another scholar or their mentor, and to help with emotional support and referrals to mental health resources. We believe that mental health problems like imposter syndrome and undiagnosed conditions like ADHD can be barriers to breaking into AI safety, and so it is important for programs like MATS to provide support in these areas. We aim to equip scholars with the skills and resources to stay motivated and work sustainably after they leave MATS.

We believe that interpersonal conflicts, mental health concerns, and a lack of connection can inhibit research productivity by contributing to a negative work environment, detracting from the cognitive resources that scholars can allocate toward research, and precluding fruitful research collaborations. Even scholars who do not seek out community health support benefit from the existence of this safety net (see Evaluating Program Elements below). As with Scholar Support, it is essential that programs like MATS allow air-gapping of evaluation and Community Health to incentivize scholars to seek help when they need it.

The Community Manager also organized social events to facilitate connections within the cohort, including four outings for scholars from underrepresented backgrounds.

Mentor Selection

In selecting mentors for MATS, we aim to principally support mentors with a high chance of reducing catastrophic risk from AI through their research, while also taking a “portfolio approach” to risk management. We aim to support a diversity of “research bets” that might provide some benefit to AI safety even if some constituent research agendas prove unviable, as well as offering “exploration value” that might aid potential paradigm shifts.

Our mentor selection process asks potential mentors to submit an expression of interest form, which is then reviewed by the MATS Executive. When deciding which mentors to support, we chiefly considered:

- How much might this research agenda marginally reduce catastrophic risk from AI?

- How much “exploration value” would come from supporting this research agenda?

- How much research and mentoring experience does this individual have?

- What would this individual’s counterfactual be if they were not supported by MATS?

- How does supporting this individual and their scholars shape the development of ideas and talent in the broader AI safety ecosystem?

As MATS was funding-constrained in Summer 2023, we had a financial constraint on the number of scholars in the program. Consequently, scholar “slots” were allocated to mentors (up to their self-expressed caps) based on the MATS Executive’s sense of marginal value on a per-mentor basis. For example, it might be worth supporting an experienced mentor with a self-expressed cap of four scholars with four scholar slots before supporting a less experienced mentor with one scholar slot, regardless of their cap. Conversely, supporting a new mentor with one scholar might be of higher marginal value than supporting an experienced mentor with their eighth scholar.

Of the 15 mentors in the summer 2023 program, 10 were returning from the winter 2022-23 program: Alex Turner, Dan Hendrycks, Evan Hubinger, John Wentworth, Lee Sharkey, Jesse Clifton, Neel Nanda, Owain Evans, Victoria Krakovna, and Vivek Hebbar. We welcomed 5 new mentors for the summer: Ethan Perez, Janus, Nicholas Kees Dupuis, Jeffrey Ladish, and Claudia Shi.

These mentors represented a dozen research agendas:

- Aligning language models

- Agent foundations

- Consequentialist cognition and deep constraints

- Cyborgism

- Deceptive AI

- Evaluating dangerous capabilities

- Interdisciplinary AI safety

- Mechanistic interpretability

- Multipolar AI safety

- Powerseeking in language models

- Shard theory

- Understanding AI hacking

Scholar Selection

Our initial application process for scholars was highly competitive. Of 461 applicants, 69 were accepted for the Training Phase (acceptance rate ≈ 15%). There was significant heterogeneity in acceptance rates across mentors (between 0% and 31%). Mentors generally chose to screen applicants with rigorous questions and tasks.[1]

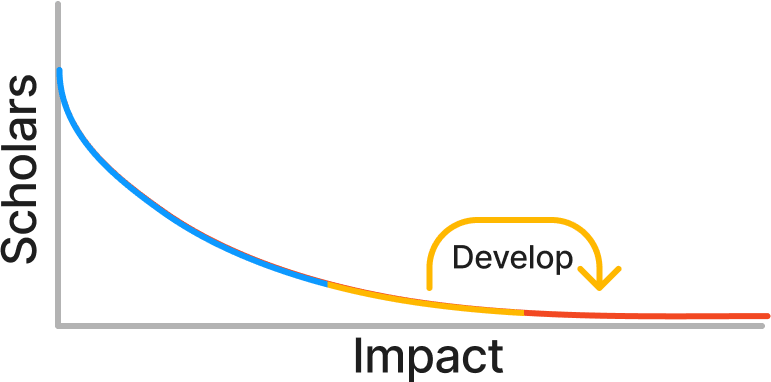

Why employ such a difficult application process? First, we believe that the distribution of expected impact from prospective scholars is long-tailed, such that most of MATS’ expected impact can be attributed to developing talent among the most promising scholars.

Second, we are principally bottlenecked on mentor time, so we cannot accept every good candidate. For these two reasons, it is imperative that our application process achieves resolution in the talent tail. From our Theory of Change:

We believe that our limiting constraint is mentor time. This means we wish to have strong filtering mechanisms (e.g. candidate selection questions) to ensure that each applicant is suitable for each mentor. We’d rather risk rejecting a strong participant than admitting a weak participant.

Indeed, one mentor did not receive any applications that met their standards, so they did not accept any scholars.

Our rigorous application process reduces noise in our assessments of applicant quality, even at the cost of discouraging some potentially promising candidates from applying. Additional filters reduced the number of scholars admitted to the Research Phase from the Training Phase (for two mentors), and then again before the Extension Phase.

A total of 60 scholars participated in the Research Phase: 50 in-person and 10 remote.

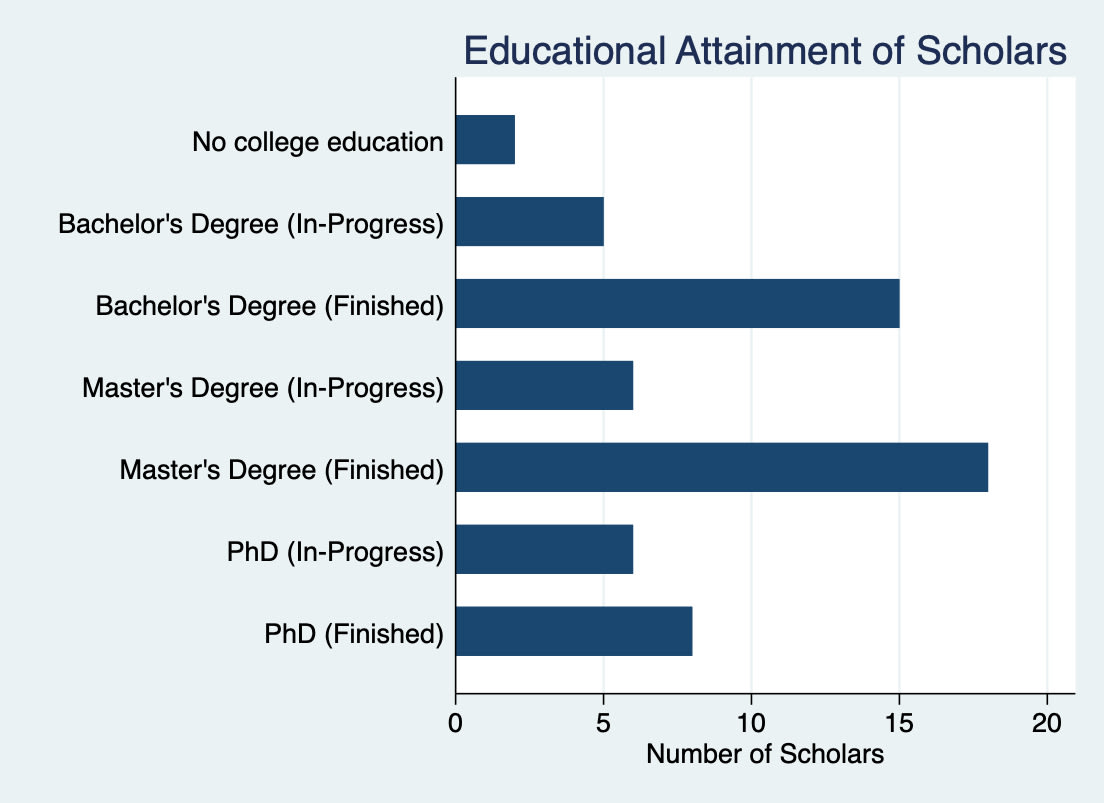

Educational attainment of scholars

The cohort included a variety of educational and employment backgrounds. 37% of scholars had at most a bachelor’s degree or below, 40% had at most a master’s degree or were in a master’s program, 23% had a PhD or were in a PhD program. About a third of scholars were students.

Note that, because we asked for scholars’ highest level of educational attainment, the categories in this graph are mutually exclusive. Overall, we were impressed by the high level of academic and engineering talent entering AI safety, but remain committed to increasing outreach to experienced researchers and engineers.

Counterfactual summers

According to their self-reports, if these scholars had not participated in MATS, their counterfactual summers would have likely involved working at a tech company, upskilling independently, or conducting research independently.

Of the 13 scholars who indicated they would likely have conducted mentored research if they had not participated in MATS, ten were students, and six were pursuing a graduate degree. Respondents elaborated on their alternative summer plans, some mentioning research mentorship options outside of the field of AI safety or skilling-up with less structure and less mentorship:

- “I would be working on my PhD thesis (pure mathematics, probably unrelated to alignment).”

- “I'd be very unlikely to be working on such an impactful project or be on such a steep L&D curve without MATS.”

- “I would have done my internship with the same mentor, but only remotely. So I would be doing the same thing but in a much worse environment. Not sure I would be very productive, or have achieved anything.”

- “I'd probably be applying, upskilling, working with friends. Overall I'd work a lot less.”

- “I would be supporting ML capabilities research in a non-lead role.”

A total of 17 scholars filled out an optional survey that asked them to compare the value of their counterfactual summers to the value they gained from MATS. Nine said their time would have been spent “somewhat less usefully” without MATS and eight said their time would have been spent “much less usefully.”

Training Phase (June 5 - June 30)

A total of 69 scholars entered the Training Phase. Over four sessions with a designated TA, scholars completed AI Safety Fundamentals’ Alignment 201 Course. These discussion groups gave scholars the broad familiarity necessary to engage with the research of their fellow scholars, talks by visiting speakers, and the work of different organizations in the AI safety ecosystem. However, around 25% of scholars did not attend any of these discussion groups, and only around 15% went to all four discussion group meetings. This is likely because many mentors assigned their own training curricula (e.g., reading lists) in the mentor’s subject area, and some explicitly told scholars to deprioritize Alignment 201.

Some mentors chose in advance to have an additional round of filtering after the Training Phase, so only 60 scholars continued to the Research Phase.[2]

Research Phase (July 10 - Sep 1)

In the Research Phase, scholars conducted AI safety research with the guidance of their mentors. Most scholars worked in-person in an office adjacent to Constellation in Berkeley, California, which allowed easy transit for mentors and seminar speakers based out of Constellation.

Mentorship styles

Mentors took different approaches to setting research direction and steering projects. According to a scholar survey, around ¼ of scholars had “total creative control” over their research direction, around ¼ picked from a list or were assigned a project, and the remaining ½ were somewhere in between.

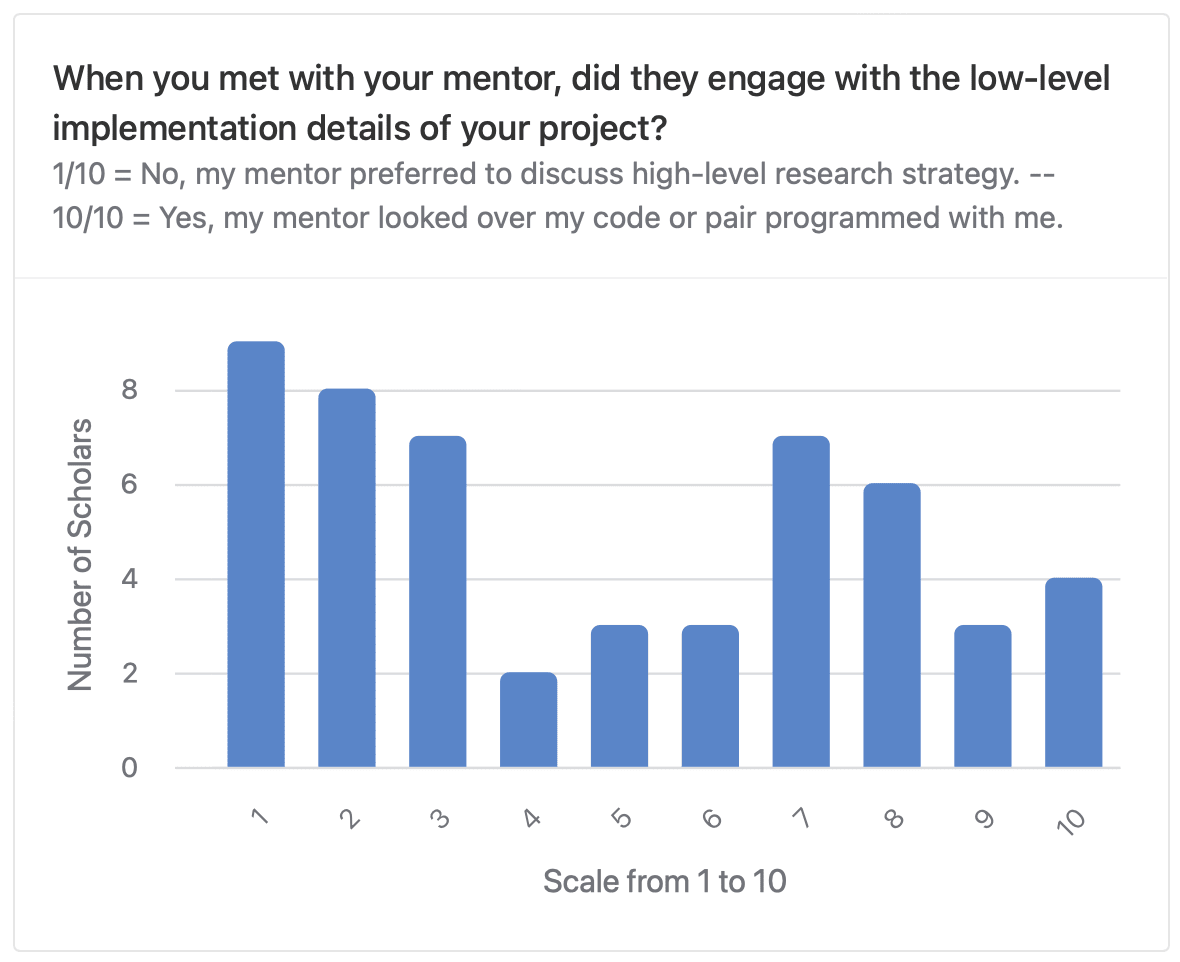

Mentorship styles also varied in terms of engagement with low-level implementation details of projects. Based on a survey of scholars, there was a roughly bimodal distribution of mentors, with around half of scholars reporting that their mentor only discussed high-level details of research projects, and the other half of scholars indicating that their mentor engaged more with the low-level implementation details of research.

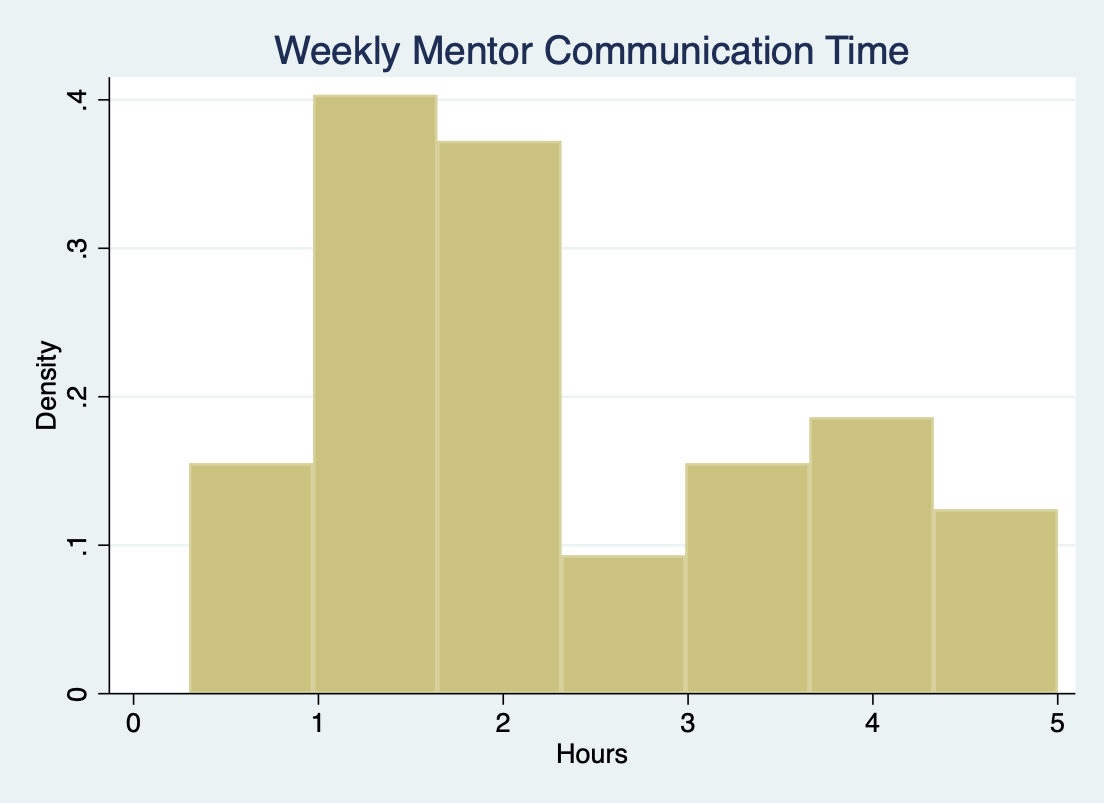

We also note a bimodal distribution of mentors based on time spent communicating with scholars per week (however, these two metrics aren’t correlated by mentor).

Some scholars from different streams collaborated on projects. Occasionally, scholars switched streams to work with a mentor who better fit their interests and needs.

Scholar Support

Our Scholar Support team consisted of five research/productivity coaches who offered assistance on research strategy, career planning, interpersonal communication, and research up-skilling (see Program Elements above).

- A total of 49 scholars booked meetings with Scholar Support during the Research Phase, 35 of whom used Scholar Support multiple times.

- The average scholar who met with Scholar Support at least once spent 3.4 hours meeting with Scholar Support throughout the program.

- Scholars spent a cumulative 160 hours in a total of 197 Scholar Support meetings.

- Ten scholars accounted for half of all Scholar Support hours.

28 out of the 49 scholars who utilized Scholar Support provided written feedback. These scholars reported finding the following particularly helpful:

- Career planning and advice (28%);

- Task prioritization (25%);

- General problem solving (14%);

- Being held accountable (10%);

- Procrastination modeling tools (10%);

- Rubberducking (7%);

- Overcoming procrastination (7%)

- Improving communication skills with mentors and peers (7%);

- Helpful line of questioning (7%).

Research Milestones

Halfway through the Research Phase, every scholar was required to submit a Scholar Research Plan (SRP). In their SRP, scholars were asked to outline the AI threat model or risk factor motivating their research, a theory of change to address that threat model, and a SMART plan for a project to enact that theory of change. One member of the MATS team and seven alumni graded the SRPs as per the provided rubric to help inform Extension Phase acceptance decisions (see below) and offer scholars constructive feedback.

We had three primary reasons for requiring Scholar Research Plans:

- Developing scholars’ ability to contribute to goal-oriented research strategy;

- Evaluating scholars for acceptance to the Extension Phase;

- Making it easier for scholars to write a subsequent grant proposal.

Many scholars chose to apply to the Long-Term Future Fund (LTFF) for post-MATS grant support using components of their SRP in their grant application. Thomas Larsen, an LTFF grantmaker, held a workshop in which he responded to questions from MATS scholars about the LTFF’s decision process and funding constraints.

The Research Phase concluded with a day-long Symposium, during which scholars delivered 10-minute talks on their research projects to their peers and members of the Berkeley AI safety community.

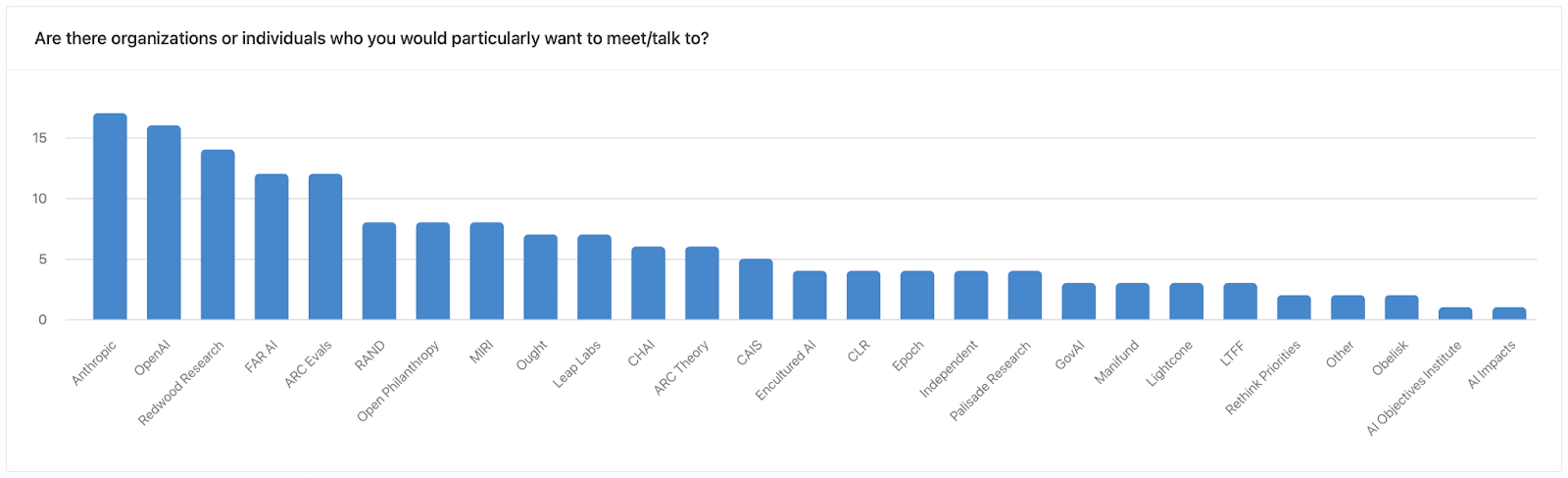

Workshops, Seminars, Networking Events

We hosted 23 seminars with guest speakers, nine workshops to develop scholars’ research abilities, and weekly networking and social events. We also held weekly lightning talk sessions, where many scholars delivered informal, five-minute research presentations. Prior to our career fair, which featured 12 organizations and independent researchers, 24 scholars filled out a survey about which organizations they would most like to see at the event. Their responses provide a snapshot of the current career interests of aspiring AI safety researchers.

Note that some organizations working on AI safety are not represented here (e.g., Google DeepMind, Apollo Research, GovAI) as we were not aware of any representatives in Berkeley at the time.

Community Health

Around 15% of scholars were responsible for the majority of meetings with the Community Manager, and around half of scholars met with the Community Manager just once.

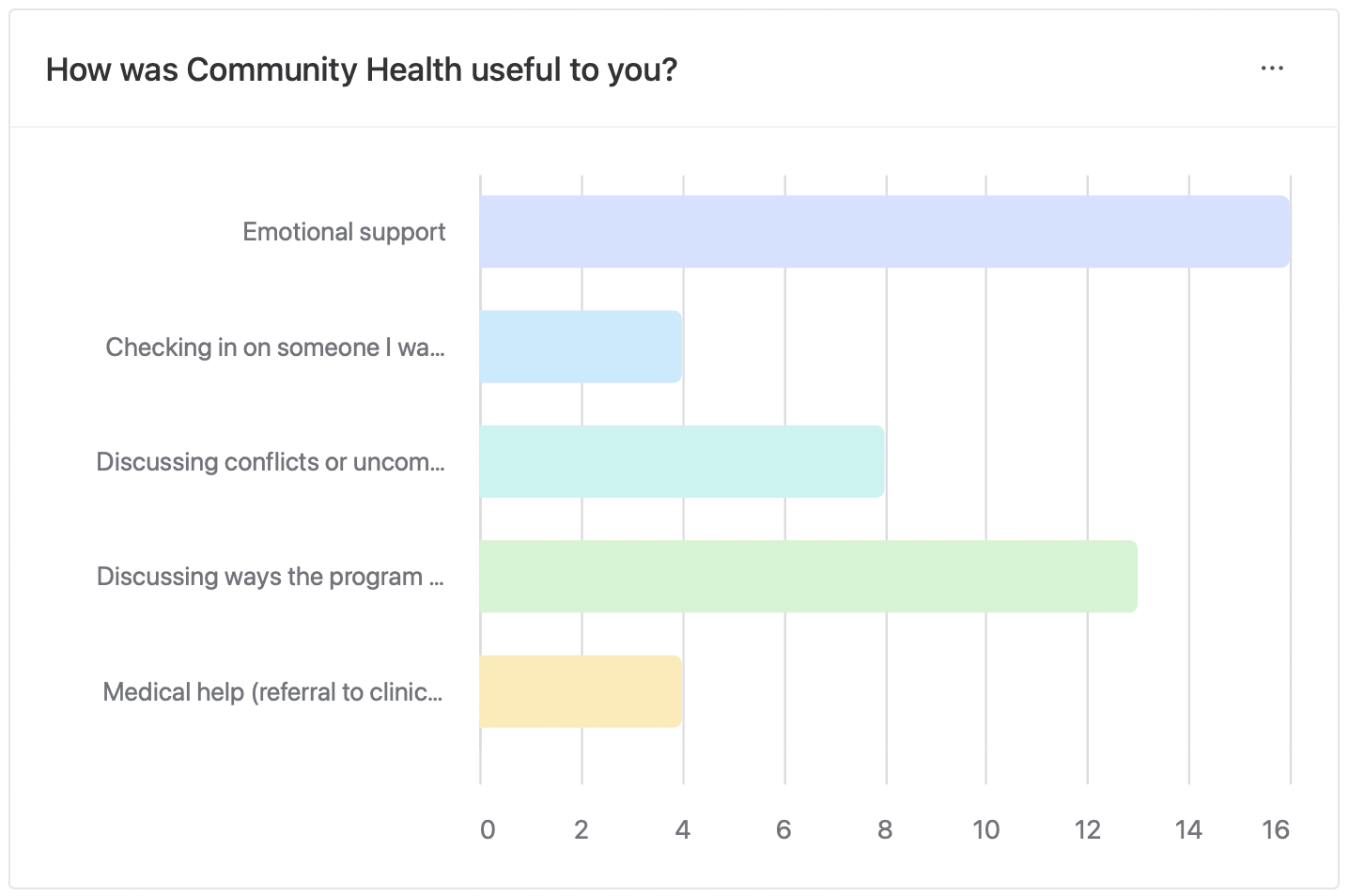

Scholars reported how the Community Manager helped in terms of:

- Emotional support;

- Checking in on someone I was worried about;

- Discussing conflicts or uncomfortable interactions;

- Discussing ways the program could be better for me;

- Medical help (referral to clinic, help with illness/injury).

Extension Phase (Sep 18 - Jan 1)

After the Research Phase, some scholars applied to the Extension Phase. The Extension Phase is aimed at supporting scholars to pursue their research projects with increasing autonomy from their mentors and from the structure that MATS provided in the Research Phase.

The MATS Executive Team accepted scholars into the Extension Phase primarily based on their mentor endorsements, SRP grades, and whether they had secured independent funding for their research. Funding from an external organization, typically the LTFF or Open Philanthropy, was an important input to this process because it provided external evaluation of the quality of a scholar’s research. Of the 33 scholars who applied, 25 were accepted into the Extension Phase (acceptance rate: 76%).

A total of 16 scholars are participating in the four-month Extension Phase at the London Initiative for Safe AI (LISA), two are continuing in Berkeley from FAR Labs, and seven are working remotely. We also provided an option for scholars to work out of Fixed Point in Prague.

During the extension, we expect scholars to formalize their research projects into what are usually their first publications and begin to plan their future career direction. To support them in this, we continue to offer day-to-day productivity support (e.g., daily standups, Scholar Support debugging, 1-1 career coaching), and we put on a mixture of seminars, workshops, and networking events, especially for the scholars based in the LISA office. This is all with the aim of preparing scholars to transition out of the supportive environment of the MATS program, by frequently reminding them to further develop their longer term plans, and to make new connections that help them advance those plans. All the while, we expect that scholars receive continued support from their mentors, albeit in most cases with decreasing frequency.

Evaluation of MATS Summer 2023

In this section, we outline how scholars rated different elements of the program and how we evaluated our impact.

Evaluating Program Elements

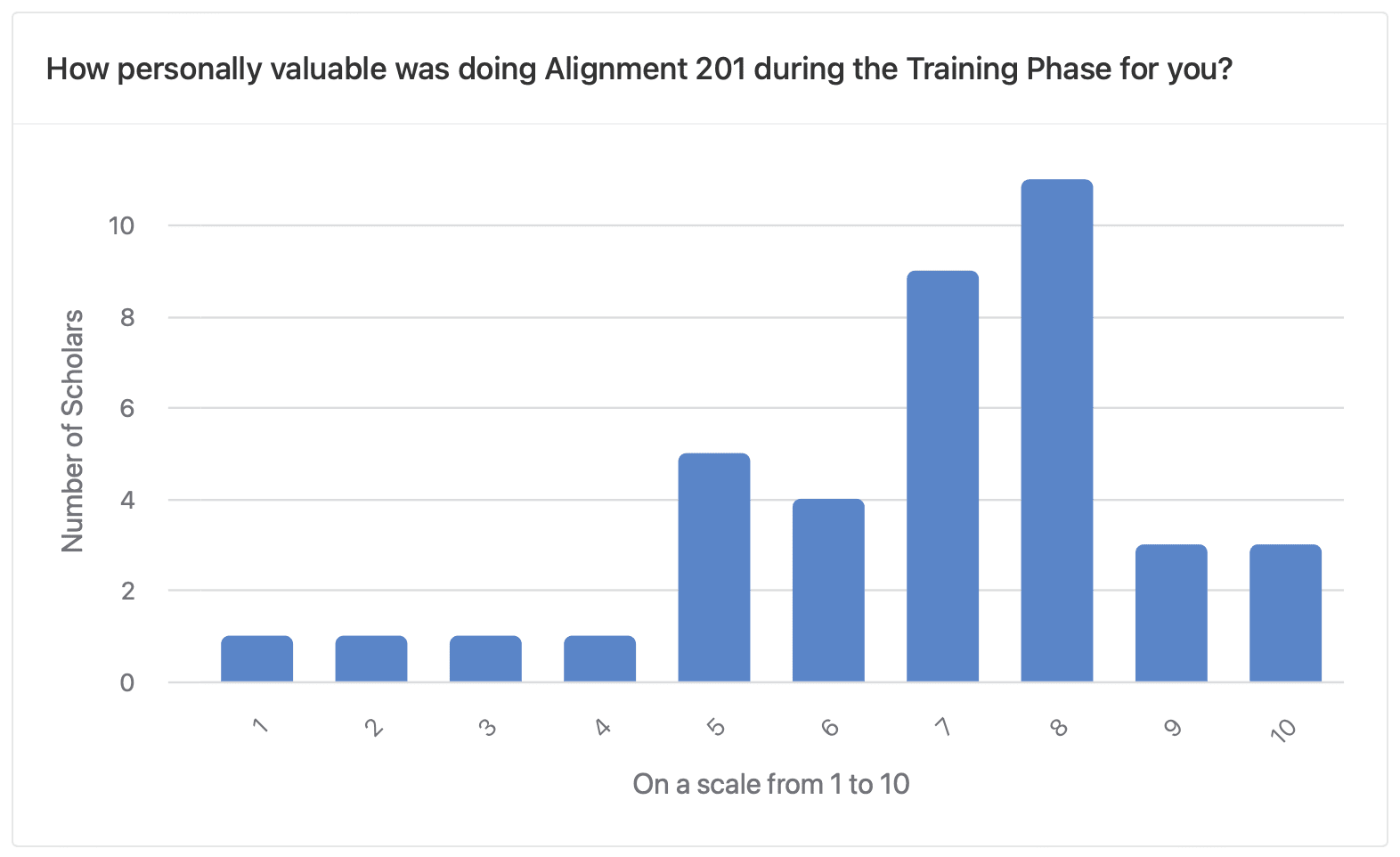

Alignment 201

Scholars reported a wide distribution of value from completing the Alignment 201 Course. The average rating was 6.8/10 and the Net Promoter Score was -18.

In written feedback, multiple scholars attributed much of the course’s value to the experience and insight of their facilitators. Scholars entered the course with different backgrounds and learning preferences: one respondent expressed their group’s conversations were less advanced than they would have liked; another mentioned a desire for a slower-paced course. One respondent appreciated the articles their facilitator would suggest; another preferred academic papers and formal essays. One scholar mentioned struggling to balance the course against the competing demands of their mentor.

Overall Program

Overall, scholars rated the Research Phase quite highly when answering the question, “On a scale of 1 to 10, how likely are you to recommend MATS to a friend or colleague?”[3] The average response was 8.9/10, and no one reported below 6/10. The Net Promoter Score was 69.

Value of Mentorship

Overall, mentors were rated highly, with an average rating of 8.0/10 and a Net Promoter Score of 29. 61% of scholars reported that at least half the value of MATS came from their mentor.

Given the negative-skew distribution of scholar ratings of mentors, it may be surprising that scholar-rated mentor contributions to MATS value are so varied. The difference is explained by the high weight that some scholars assigned to other sources of value; namely, access to the cohort and the wider Berkeley research community.

Scholars elaborated on the ways their mentors benefited them:

- 90% — “I think >90% effect of [MATS] passes through getting [mentor] time and talking with him about my research, noticing slight misconceptions about various things.”

- 70% — “[My mentor] gave me a lot of time, and did a good job of imparting a lot of his thought processes for thinking about research. He introduced me to several people I had valuable meetings with. I think I would have worked on far less promising stuff if I had not been guided by [my mentor’s] sense of research taste.”

- 65% — “From advanced linear algebra to general research direction and infohazard concerns, [our mentor] has been amazing for our research.”

- 50% — “[My mentor] spent a lot of time discussing our research with us and gave great advice on direction. He unblocked us in various ways, such as getting access to more models or to lots of compute budget. He connected us with lots of great people, some of whom became collaborators. And he was a very inspiring mentor to work with.”

- 40% — “From advanced linear algebra to general research direction and infohazard concerns, [my mentor] has been amazing for our research.”

- 20% — “The mentor introduced us (the team) to a few people in alignment, watched over the project (but mostly listened, and didn't intervene), and also reviewed our paper and organised an external review.”

- 10% — “Coming up with interesting ideas/objections I would not have come up with.”

Notably, many of these scholars attributed a low proportion of the value of MATS to their mentors but still reviewed their mentors quite positively.

After controlling for scholars’ ratings of their mentors, attributing more of the value of MATS to mentorship was associated with:

- reporting that they would have counterfactually worked at an alignment organization over the summer if not for MATS;

- reporting that publication record was a career obstacle at the beginning of the program, but not at the end of the program.

This suggests that scholars who were already connected somewhat with the alignment field, such as through a previous internship or training program, derived less value from the connection to the Berkeley community and their peers compared to direct mentorship. It also suggests that mentors were especially valuable for scholars who succeeded at publishing their research throughout the program.

Attributing less of the value of MATS to mentorship was associated with:

- reporting improved ability to develop theories of change;

- reporting more productive hours worked due to Scholar Support coaching.

This suggests that Scholar Support and other program elements intended to promote theory-of-change thinking were two important non-mentor sources of value for scholars.

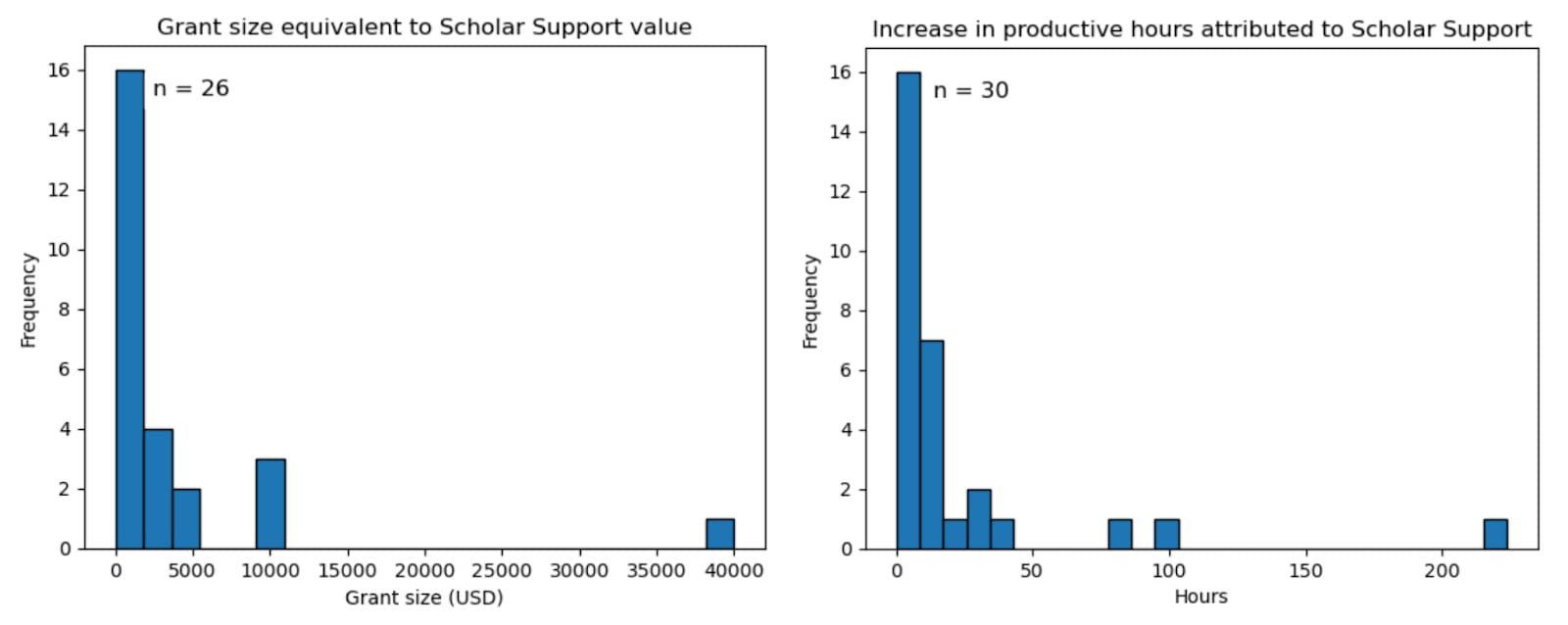

Value of Scholar Support

Overall rating

We asked scholars who reported attending at least one Scholar Support session, “On a scale of 1 to 10, how likely are you to recommend Scholar Support to future scholars?” The average response was 7.9/10 and the Net Promotor Score was 28.

Comparison with mentorship support as baseline

Because Scholar Support staff were hired for their proficiency in coaching and supporting scholars in a variety of ways, we checked for this by asking scholars, “How much of Scholar Support value could have been provided by your mentor, if they had time?” The responses were:

- Little to none of the value - 35%

- Some of the value - 50%

- Nearly all of the value - 15%

We also asked scholars whether they thought mentors would give them more support time if they asked; 55% said "probably not" or "definitely not." These two responses support our theory for the value of Scholar Support, which is that:

- Specialized coaches can provide better support than many mentors in some relevant domains;

- Mentors are time-constrained.

Grant equivalent valuation

We asked scholars who reported attending at least one Scholar Support session, “Assuming you got a grant instead of receiving 1-1 scholar support meetings during MATS, how much would you have needed to receive in order to be indifferent between the grant and coaching?”[4] The average and median responses were $3705 and $750, respectively. The scholar who responded $40,000 confirmed this figure was not a typo, citing support with “prioritization, project selection, navigation of alignment and general AI landscape, career advice, support during difficulties, individually tailored advice for specific meetings and projects.”

Productive hours gained

We asked scholars who reported attending at least one Scholar Support session, “How many more productive hours in the last 8 weeks would you estimate you had because of your Scholar Support meetings?” The average and median responses were 22 hours and 8 hours, respectively. The sum of responses was 658 productive hours gained.

Workshops & Seminars

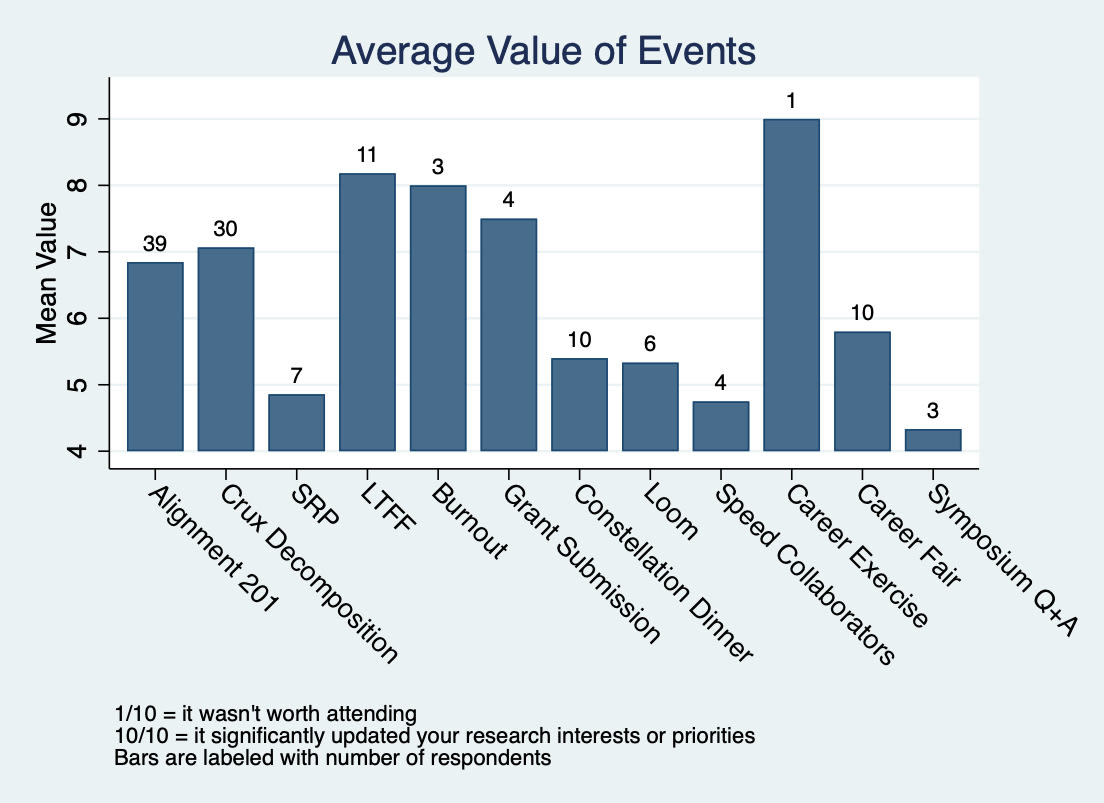

Scholars reported the value of attending workshops and networking events. Note that some events had very few respondents because we collected this feedback through optional surveys. 10/10 was a high bar on our scale, representing a significant update to a scholar’s plans.

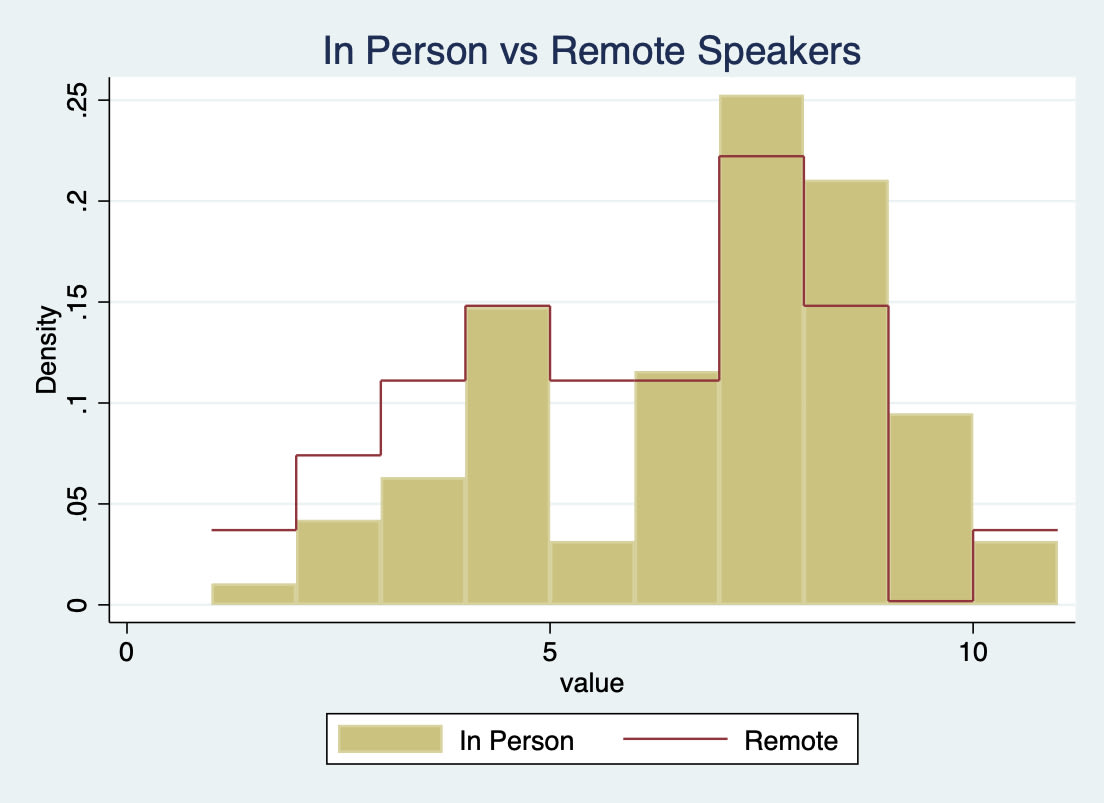

Scholars rated external speaker events according to the same 10-point scale. Of the 23 seminars, 11 were in-person, 11 were remote, and 1 was hybrid. In-person seminars were rated 0.9 points higher than remote seminars (p = 0.0088).

All else equal, we would choose an in-person seminar over a remote seminar, but analysis of the value of these formats is confounded by which researchers happen to live in the Bay Area and how scholars self-select into attending talks. A couple scholars gave seminars of their own, which were well-rated by their peers, even compared to seminars delivered by experienced researchers.

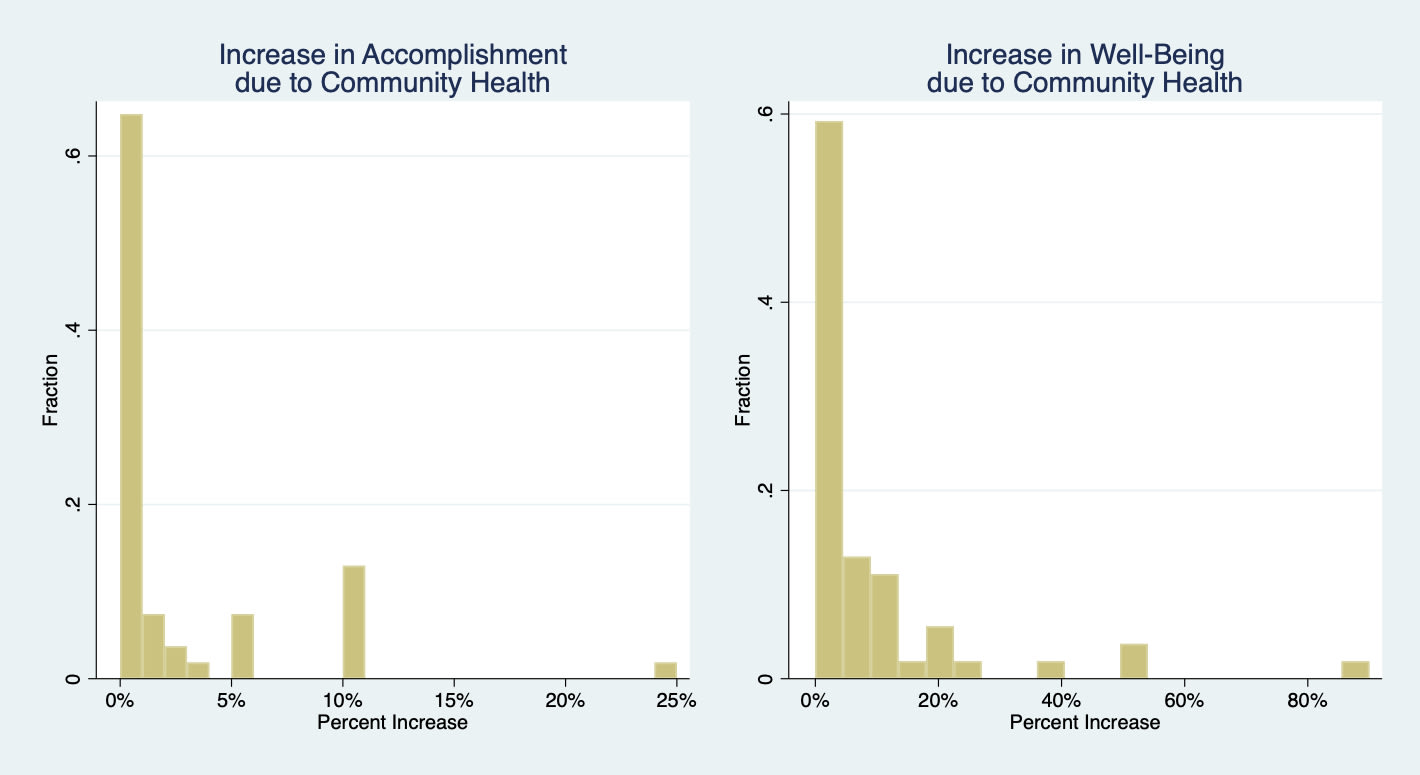

Community Health

Though many scholars did not use Community Health support, a minority of scholars benefited substantially. To investigate these benefits, we asked scholars two of the same questions we used to evaluate Scholar Support, along with a question about their well-being.

Median hours: 0 hours

Sum of hours: 202 hours

Median mental-health increase: 2%

These graphs exclude a scholar who reported a 1000% increase in mental health due to Community Health support. Scholars offered more detail:

- “It helped me feel less anxious about interpersonal issues.”

- “Happier, more sustainable, more focused, less guilt.”

- “There were two occasions in the programme where I got kind of freaked out about something; in both cases [the Community Manager] was available to chat about it, very kind and supportive, and helped make things much better for me.”

- “Generally creating a supportive environment when you are away from home and potentially at a career defining moment in your life is extremely important to me.”

Some scholars mentioned that they did not request community health support, but they were glad this safety net was in place if they needed it:

- “It was good to know I had someone to go to if something happened.”

- “I didn't really interact with Community Health, but I think there are counterfactual worlds where I would've wanted to.”

- “I didn't use it much but glad it was there.”

- “I didn’t make much use of community health, but I appreciate its existence in the case I might have needed it.”

Evaluating Outcomes

Research Milestones

Scholar Research Plan

The Scholar Research Plan was the first of the Research Phase’s two evaluation milestones. The SRPs were graded according to this rubric. The average grade was 74/100. Scholars tended to lose the most points on the threat model and theory of change sections of their SRPs; they showed stronger performance in the section laying out their research plans.

Importantly, some scholars neglected the SRP because they did not intend to apply for the Extension Phase or because they already had funding secured and did not anticipate the SRP playing a significant role in their Extension Phase decision. Some mentors encouraged their scholars to deprioritize the SRP in favor of working on their research.

Symposium talk

A symposium talk was the second Research Phase milestone. Projects were distributed across these categories as follows. Note that not every scholar’s work was represented at the Symposium and some projects fell under multiple categories.

Symposium talks received peer review on three dimensions: “What,” (50 points); “Why,” (30 points); and “Style,” (20 points). The full rubric is available here. The average grade was 86/100.

Research ability

Using the “depth, breadth, taste” framework explained above, we evaluated to what extent scholars increased their research skills along these dimensions during the program.

Depth of research skill

Because we didn’t ask a pre-program survey question about depth of research skill, we asked scholars at the end of the program to directly rate how much they strengthened their technical skills within their chosen research direction. A rating of 1/10 represented “no improvement compared to my counterfactual summer;” and a rating of 10/10 represented “significant improvement compared to my counterfactual summer.” The average rating was 7.2/10.

Respondents elaborated on the improvements to their technical skills:

- “I got from very rudimentary ML skills to reasonable researcher skills.”

- “Learned an array of strategies and techniques for finding out specific things in mech interp. Also learned a better idea of what questions to ask, standards of proof, etc.”

- “Running experiments more quickly, identifying and removing bottlenecks.”

- “I improved my skills a lot at MATS, and it was particularly valuable to be doing real research, because it meant that I didn't have to try to guess what skills are important for research, I was just doing research, and the skills needed for that are the ones that I improved on. So MATS was much better targeted, and also more intense and motivating, than my summer would have been.”

Breadth of knowledge

To assess breadth of knowledge, we asked scholars at the beginning and end of the Research Phase to rate their understanding of the research agendas of major alignment organizations on a scale from 1 to 10, where 10/10 indicated “For any major alignment org, you could talk to one of their researchers and pass their intellectual Turing Test (i.e., that researcher would rate you as fluent with their organization's agenda).”

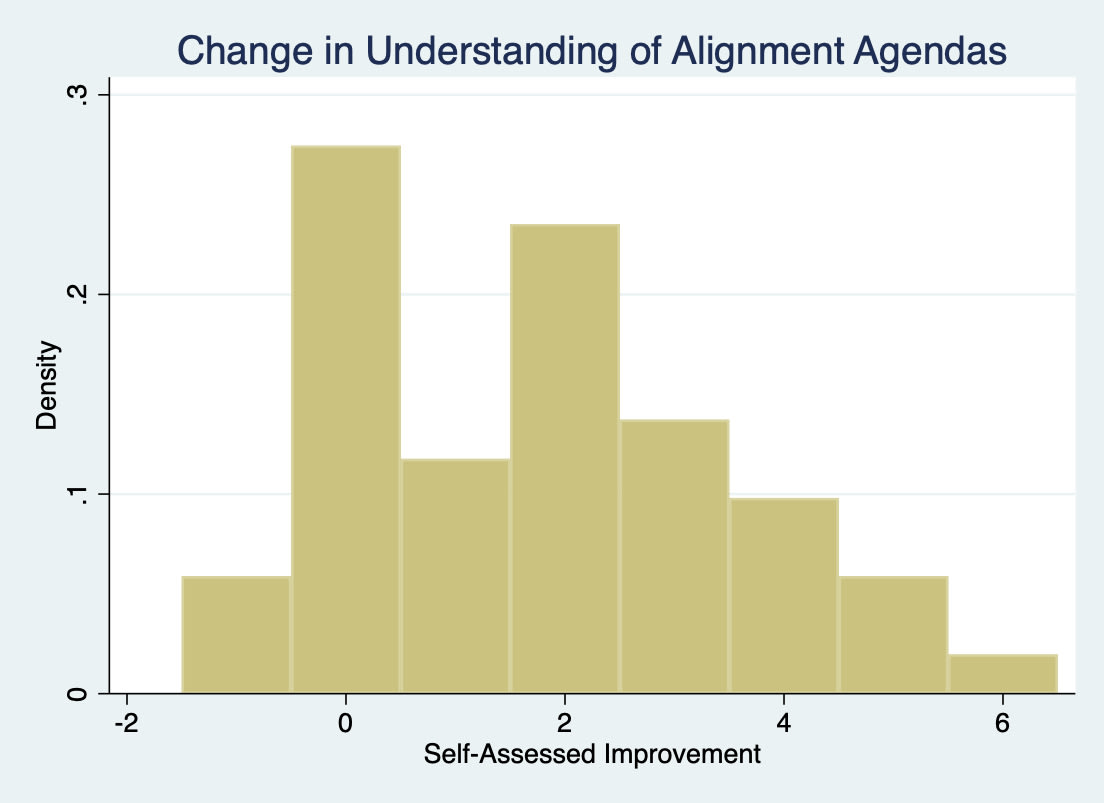

We subtracted scholar’s initial self-reported scoring from their post-program self-reported scoring to obtain the following distribution:

On average, scholars self-reported understanding of major alignment agendas increased by 1.75 on this 10 point scale. Two respondents reported a one-point decrease in their ratings, which could be explained by some inconsistency in scholars’ self-assessments (they could not see their earlier responses when they answered at the end of the Research Phase) or by scholars realizing their previous understanding was not as comprehensive as they had thought.

Research taste

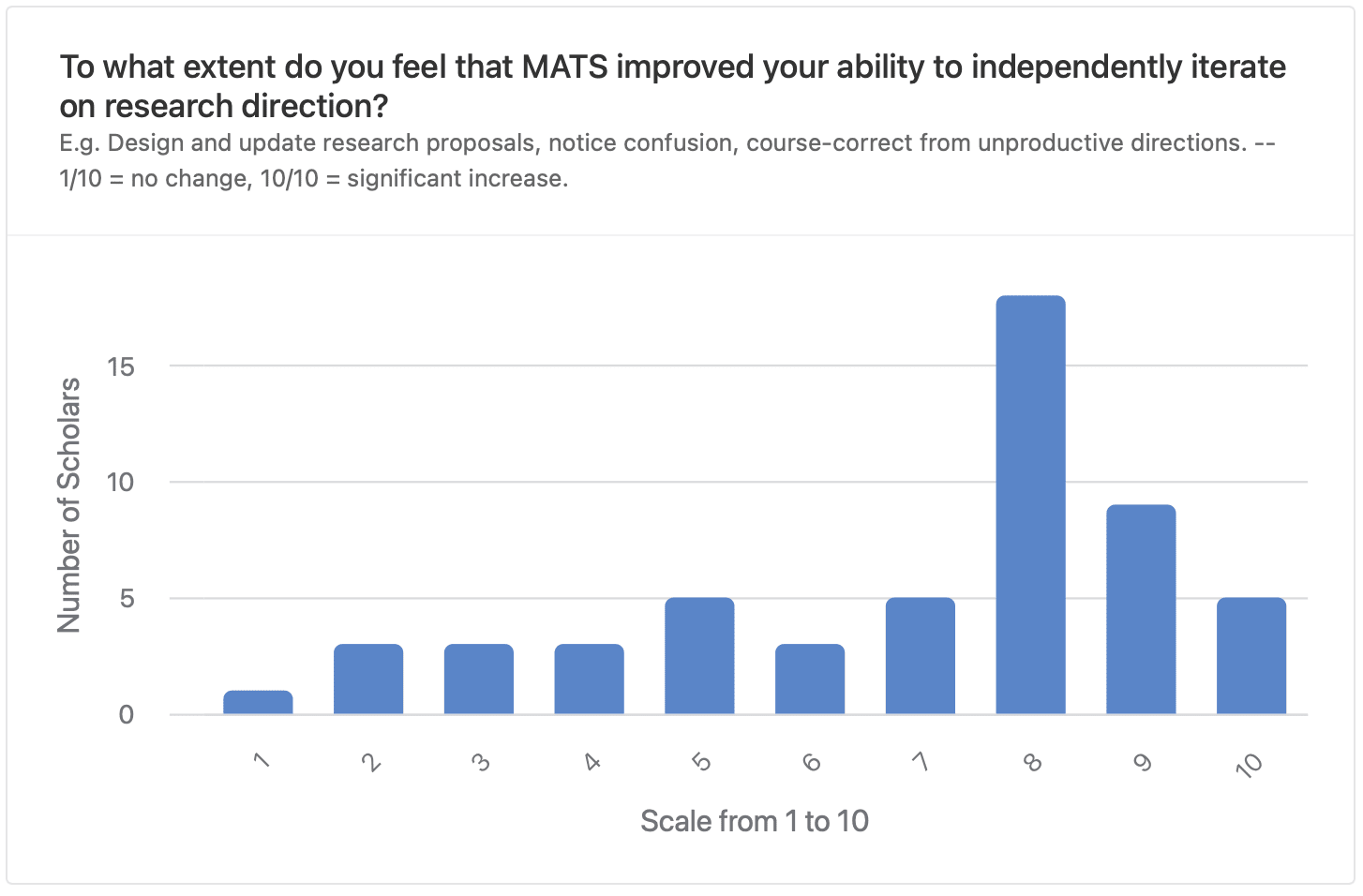

We decomposed the “taste” dimension of research ability into two post-program survey questions. The first question asked “To what extent do you feel that MATS improved your ability to independently iterate on research direction?”, where 1/10 indicated no change and 10/10 indicated significant increase. The average response was 6.9/10.

Respondents provided qualitative information on this topic:

- “Mostly I got the boost to self-confidence about stating research hypotheses. Before, I kind of assumed that everything that can be questioned/asked about a paper has already been done, or deemed uninteresting - I now believe more strongly that there is still a lot of low-hanging fruit in safety research. (One example: understanding the theory behind RLHF better).”

- “The scholars spent all day talking to each other about their research. This added quick feedback loops, i.e. ‘that approach will/won't succeed’, ‘you should read this as well’, ‘that seems low/high impact.’ Big improvement to research iteration, perhaps by a factor of 3.”

- “Better at red-teaming and being skeptical of my own models & hypotheses, and finding the most efficient way to disprove them.”

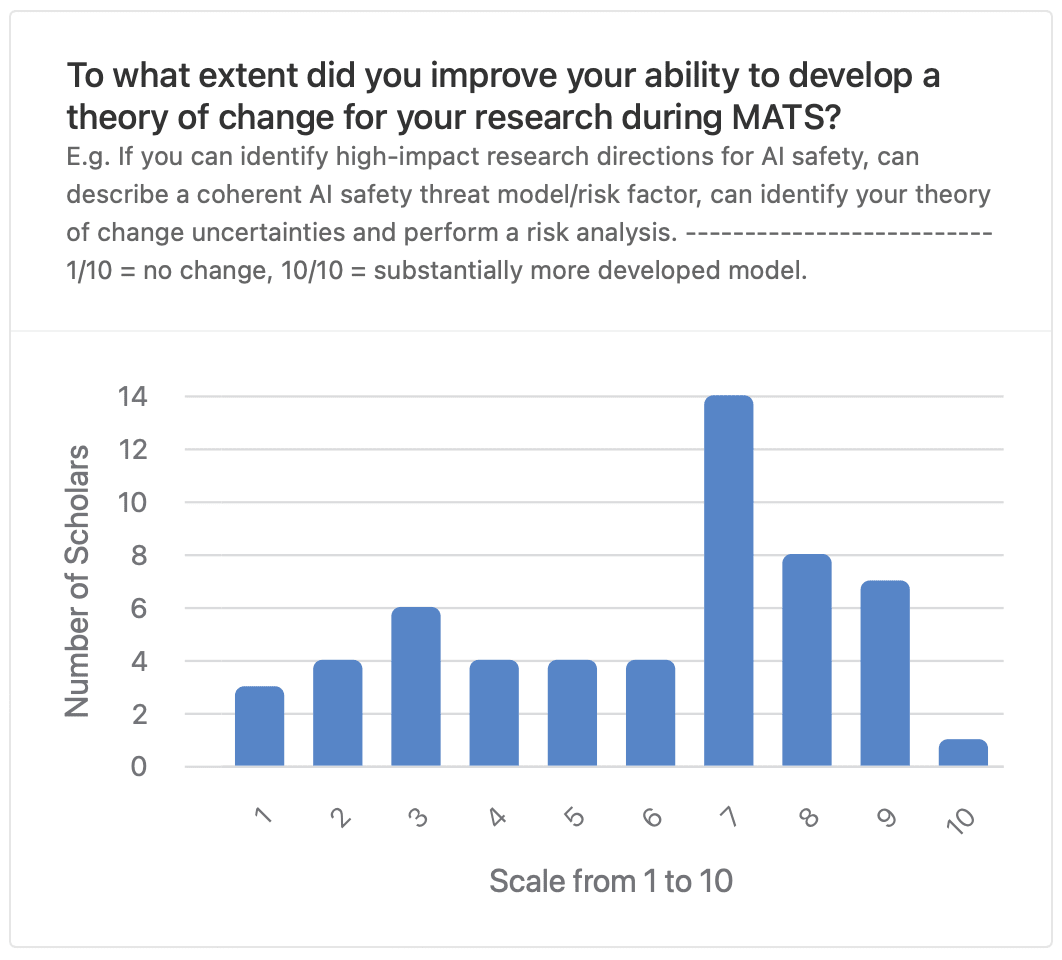

The second question covering research taste asked, “To what extent did you improve your ability to develop a theory of change for your research during MATS?”, where 1/10 represented no change and 10/10 represented possessing a substantially more developed model. The average response was 5.9/10.

Scholars elaborated on their experiences with theories of change:

- “I think MATS helped me a lot with having much more detailed models of how things within AI will go, realizing considerations that I haven't previously considered, being more able to realize when my models were not sufficiently granular, and just knowing more relevant facts and details.”

- “More critical about thinking if what I'm doing fixes anything.”

- “I think I already was thinking about this a lot before, but MATS gave me an opportunity to practice more and I think I've become more realistic about assessing agendas (rather than previously maybe thinking of too grand agendas off the bat).”

Mentor endorsement of research

In a survey, we asked mentors, “Taking [depth/breadth/taste ratings] into account, how strongly do you support the scholar's research continuing?” The average response was 8.1/10 and the Net Promoter Score was 38.

- Depth: When asked "How strong are the scholar's technical skills as relevant to their chosen research direction?" mentors rated scholars 7.0/10 on average, where:

- 10/10 = World class;

- 5/10 = Average for a researcher in this domain;

- 1/10 = Complete novice.

- Breadth: When asked "How would you rate the scholar’s understanding of the agendas of major alignment organizations?" mentors rated scholars 6.7/10 on average, where:

- 10/10 = For any major alignment org, they could talk to one of their researchers and pass their intellectual Turing Test (i.e., that researcher would rate them as fluent with their organization's agenda);

- 1/10 = Complete novice.

- Taste: When asked "How well can the scholar independently iterate on research direction (e.g. design and update research proposals, notice confusion, course-correct from unproductive directions)?" mentors rated scholars 7.1/10 on average, where:

- 10/10 = Could be a research team lead;

- 7/10 = Can typically identify and pursue fruitful research directions independently;

- 5/10 = Can identify when stuck, but not necessarily identify a better direction;

- 1/10 = Needs significant guidance.

- Motivation: When asked "To what extent does the scholar seem "value aligned", i.e. strongly motivated by achieving good AI outcomes?" mentors rated scholars 8.9/10 on average, where:

- 10/10 = Improving AI-related outcomes is their primary motivation by far;

- 5/10 = Motivated in part, but would potentially switch focus entirely if it became too personally inconvenient;

- 1/10 = coincidentally working in AI alignment for other reasons.

Importantly, judgements about scholars’ research are not consistent across mentors because each mentor has their own standards and priorities, and not every mentor responded to the survey.

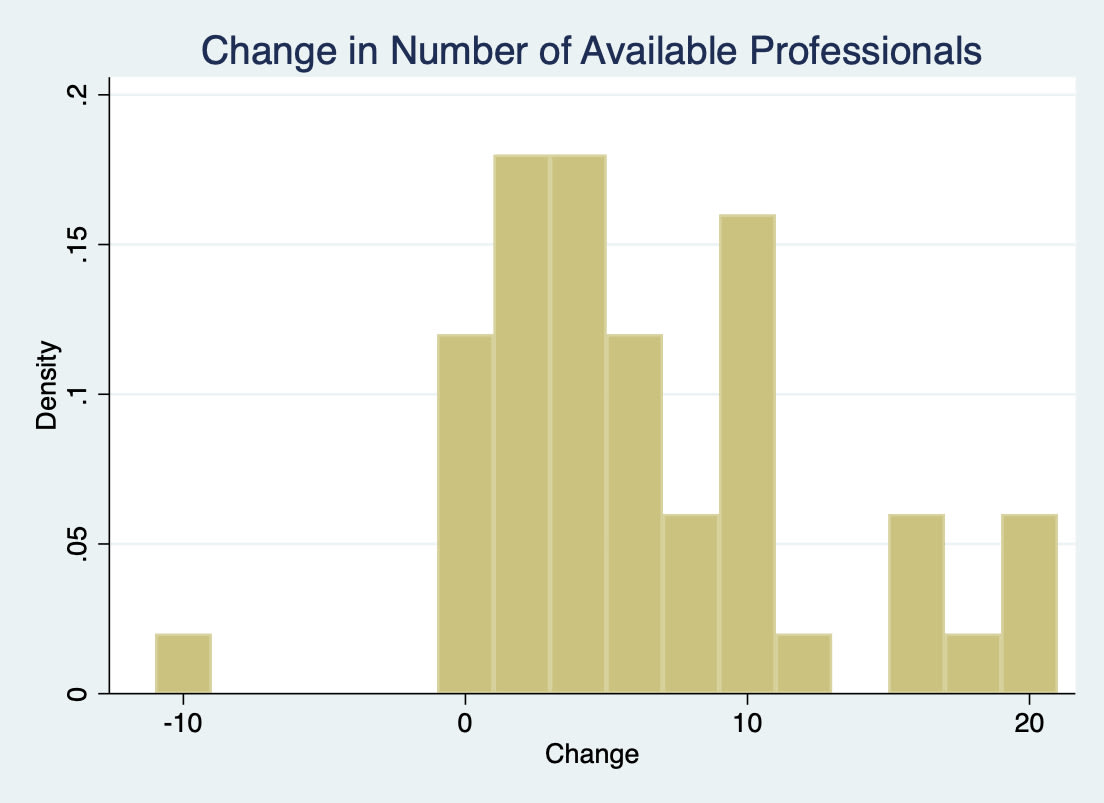

Networking

We measured the impact of networking opportunities in terms of change in professional connections and potential collaborators.[5] At the beginning and end of the Research Phase, scholars reported the “number of professionals in the alignment community you would feel comfortable reaching out to for professional advice or support.”[6] We took the difference in these responses to obtain the following distribution:

The median difference in responses was +4.5. The respondent whose two responses imply a -10 change in the number of professionals they can reach out to first responded “30” and then “20”, so we expect this number is a result of calibration variance.

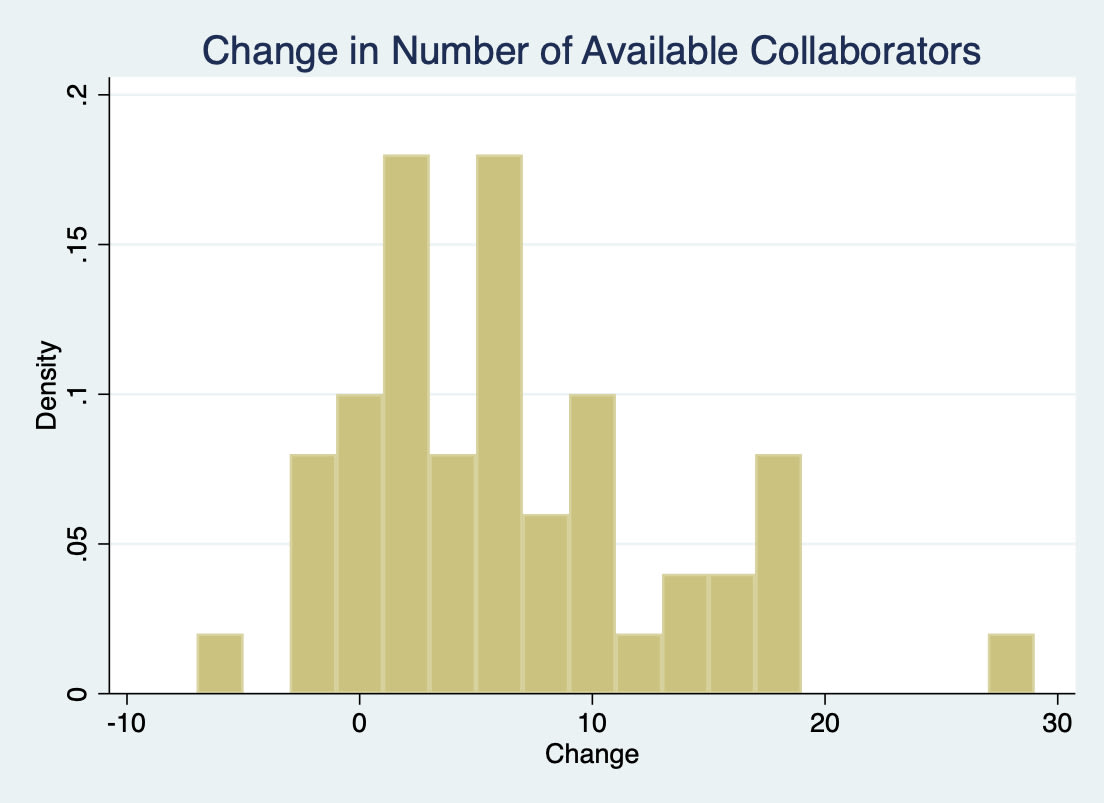

We asked a similar question about potential collaborators: “How many people do you feel you can reach out to about collaborating on an AI alignment research project?”[7] We took the difference in responses to obtain the following distribution:

The average difference in responses was +6.0. Some scholars reporting a decrease is most likely explained by some day-to-day variance in people’s judgments as scholars didn’t have access to their previous responses.

Career Obstacles

At the beginning and end of the Research Phase, we queried scholars about “the obstacles between [them] and a successful AI alignment research career.” When comparing the responses from the beginning and end of the Research Phase, we see that for most possible obstacles, fewer scholars report it as a problem. Publication record and software engineering experience were exceptions: more scholars indicated facing these obstacles by the end of the program than the beginning. These results likely reflect scholars learning about obstacles they had previously underestimated, new obstacles becoming more salient as scholars worked through earlier ones, or scholars pivoting to career paths with different obstacles. Publication record was the primary obstacle between scholars and successful alignment careers by the end of the Research Phase.

We also asked specifically about scholar confidence in writing grant proposals at the beginning and end of the program. 70% of scholars indicated feeling unconfident or very unconfident about writing grant proposals at the start of the program, but 80% of scholars indicated feeling confident or very confident about writing grant proposals at the end of the program.

Lessons and Changes for Future Programs

While we haven’t yet finalized or implemented some of the changes we plan to make for our next cohort, here are the main lessons we learned from the Summer 2023 Program.

1. Filtering better during the application stage

We expect that some scholars in the research phase are unlikely to perform alignment research in their careers that is sufficiently impactful to warrant the costs of mentoring them, and we think that we could have elicited enough information during the application stage to identify those scholars. This suggests that there is room for MATS to filter harder in initial applications or in subsequent evaluation checkpoints. Higher resolution in the upper tail of the talent distribution would allow us to select more promising applicants, or at least fewer unpromising applicants, allowing some mentors to allocate greater attention to each of their scholars.

One component of a better filter for some streams is increased screening for software engineering skill. This can be a costly evaluation process for mentors to run themselves, so for the Winter 2023-24 Program we contracted a SWE evaluation organization to screen applicants for empirical ML research streams.

For the Winter 2023-24 Program, we also decided to better communicate different mentor styles to applicants by adding a “Personal fit” section to mentor descriptions on our website. As some mentors are much more hands-off than others, we want to make it more likely that applicants can self-select out of streams for which they have a poor personal fit.

2. Providing more technical support

One form of assistance that was not explicitly covered by the Scholar Support team and only sometimes covered by mentors was technical support, including debugging code, teaching new tools like the TransformerLens library, and answering technical ML questions. In future cohorts, we may provide office hours or similar for scholars who are bottlenecked by technical problems. One possibility is to improve our infrastructure for scholars to help each other with technical problems.

3. More proactively supporting professional development beyond mentorship

Scholar Support currently helps scholars resolve a variety of bottlenecks, including challenges with productivity, prioritization, research direction, communication, mental health, etc. While this has been valuable for many scholars, it more strongly targets short-term bottlenecks that can be resolved in ~1 hour of coaching per week, and there are some skill improvements (e.g., practice reading research papers or improving programming ability) that require a greater time commitment.

In future cohorts, we may explore coaching structures or peer accountability structures that encourage scholars to spend more time on professional development beyond object-level research. This could look like paper reading groups, more regular and high-commitment workshops, and coworking sessions on topics like theory of change, research workflows, etc.

4. Offering research management help to mentors

In addition to further supporting professional development, Scholar Support is also planning to help most mentors manage scholar research for the Winter 2023-24 cohort. We (the Scholar Support team) find it plausible that a strict confidentiality policy between Scholar Support and mentors leaves too much value on the table as:

- Mentors would benefit from the shared information about scholar status;

- Scholars would benefit from their mentors’ goals being understood by the Scholar Support team, especially as the mentors’ research directions change throughout the program.

In offering research management help to mentors, Scholar Support will take a more direct role in understanding research blockers, project directions, and other trends in scholar research, and summarizing that information to mentors with scholar consent.

5. Reducing the number of seminars

We think 23 seminars was too many; the breadth we offered scholars was not worth the low average attendance we observed. In the winter, we will schedule 12-16 seminars instead, with weekly opportunities for shorter lightning talks. We will also encourage more scholars to deliver seminars (as longform talks, rather than confined to the lightning talk format), since scholar-delivered seminars were highly rated over the summer.

6. Improving communication with scholars during the program

In part due to the number of seminars, workshops, and social events, we think that the signal-to-noise ratio of our announcements may have been too low. On at least one occasion, a sizable fraction of the scholars didn’t know about an imminent deadline. In the future, we’ll more cleanly distinguish the most important announcements and make those announcements sooner.

7. Developing more robust internal systems

As the MATS team scales, we’re improving several internal systems. These include our data collection pipelines for impact evaluation, our project management systems, and investing in team members through greater professional development.

8. Frontloading social events

Some scholars reported unmet demand for socializing among the cohort. In the Winter, we intend to frontload social events to facilitate connections early in the program and hold more events near the office in the hope of reducing the activation energy required for scholars to initiate gatherings later in the program.

Acknowledgements

This report was produced by the ML Alignment & Theory Scholars Program. Rocket Drew and Juan Gil were the primary contributors to this report, Ryan Kidd scoped, managed, and edited the project with the support of Christian Smith, and Laura Vaughan and McKenna Fitzgerald contributed to data collection and analysis for the Scholar Support report section. Thanks also to Carson Jones, Henry Sleight, and Ozzie Gooen for their contributions to the project. We also thank Open Philanthropy and the Survival and Flourishing Fund Speculation Grantors, without whose donations we would have been unable to run the Summer 2023 Program or retain team members essential to this report.

To learn more about MATS, please visit our website. We are currently accepting donations for our Winter 2023-24 Program and beyond!

- ^

Examples of selection questions that some mentors included in their application:

- Spend 2-4 hours using language models to develop a dataset of question-answer pairs on which models trained by RLHF will likely give friendly but incorrect responses. Then record yourself for 2-4 hours evaluating models on your dataset and plotting scaling laws. Bonus points if your dataset shows inverse scaling.

- I would like you to spend ~10 hours (for fairness, max 20) trying to make research progress on a small open problem in mechanistic interpretability, and show me what progress you’ve made. Note that I do not expect you to solve the problem, to have a background in mech interp, get impressive results, or really to feel that you know what you’re doing.

- Is “Bayesian expected utility maximizer” a “True Name” for a generally powerful intelligence? Why/why not?

- ^

The trainees who did not progress were concentrated among two mentors, who informed their trainees about their prospects for getting into the Research Phase prior to the Training Phase. The primary determinant of these mentors’ decisions was scholars’ performance in a research sprint at the end of the Training Phase. Knowing their odds, the trainees in these streams elected to participate, in part due to the high value of a guaranteed month working with their mentor. Jay Bailey, a trainee from Winter 2022-23, attests,

“Neel [Nanda]'s training process in mechanistic interpretability was a great way for me to test my fit in the field and collaborate with a lot of smart people. Neel's stream is demanding, and he expects it to be an environment that doesn't work for everyone, but is very clear that there's no shame in this. While I didn't end up getting selected for the in-person phase, going through the process helped me understand whether I wanted to pursue mechanistic interpretability in the long term, and firm up my plans around how best to contribute to alignment going forward.”

- ^

This question is commonly used to calculate a “net promoter score” (NPS) which is standard in many industries. Based on our respondents, the NPS for MATS is +69.

- ^

This survey question and the following one come from Lynette Bye’s 2020 review of her coaching impact.

- ^

The following histograms exclude a scholar who increased from 0 to 999 available professionals and potential collaborators, explaining, “at the start I basically felt like I could message / call up no-one and now I feel like I can message / call up anyone quite literally.”

- ^

The question elaborated, “If you want a more specific scoping: how many professionals would you feel able to contact for 30 minutes of career advice? Factors that influence this include what professionals you know and whether you have sufficient connection that they'll help you out. A rough estimate is fine, and this question isn't just about people you met in MATS!”

- ^

The question elaborated, “Imagine you had some research project idea within your alignment field of interest. How many people that you know could plausibly be collaborators? A rough estimate is fine, and this question isn't just about people you met in MATS!”

NickLaing @ 2023-12-02T09:15 (+4)

Thanks that's a thorough report.

Quick note "postmortem" kind of sounds like something bad has happened which had triggered the report egg want the case, perhaps "review" or "roundup" or similar might be a bit more positive a word to use.

Ryan Kidd @ 2023-12-07T20:57 (+3)

Cheers, Nick! We decided to change the title to "retrospective" based on this and some LessWrong comments.

SummaryBot @ 2023-12-04T14:12 (+3)

Executive summary: The ML Alignment & Theory Scholars (MATS) program supported 60 AI safety scholars with mentorship, training, housing, infrastructure, and funding. Scholars improved technical ability, research taste, and knowledge breadth, and reported many positive connections with peers and researchers. Scholars and mentors form part of a talent pipeline for AI safety.

Key points:

- 60 scholars studied AI safety for 3 months with 15 mentors. Scholars rated mentors highly (8/10) and are likely to recommend MATS (8.9/10).

- Scholars improved technical research skills (self-rated 7.2/10 vs counterfactual summer), knowledge breadth (+1.75/10), research taste (5.9-6.9/10), and made 10 professional connections on average.

- Scholars faced fewer career obstacles after MATS, but lack of publications remained an issue. Mentors strongly endorsed 94% of scholars to continue research.

- Scholars valued community, seminars and Scholar Support coaching in addition to mentorship. Scholar Support meetings were valued at $750-$3700 in grant equivalent.

- MATS will improve applicant screening, support technical skills and research management, and reduce seminars for the next cohort.

This comment was auto-generated by the EA Forum Team. Feel free to point out issues with this summary by replying to the comment, and contact us if you have feedback.