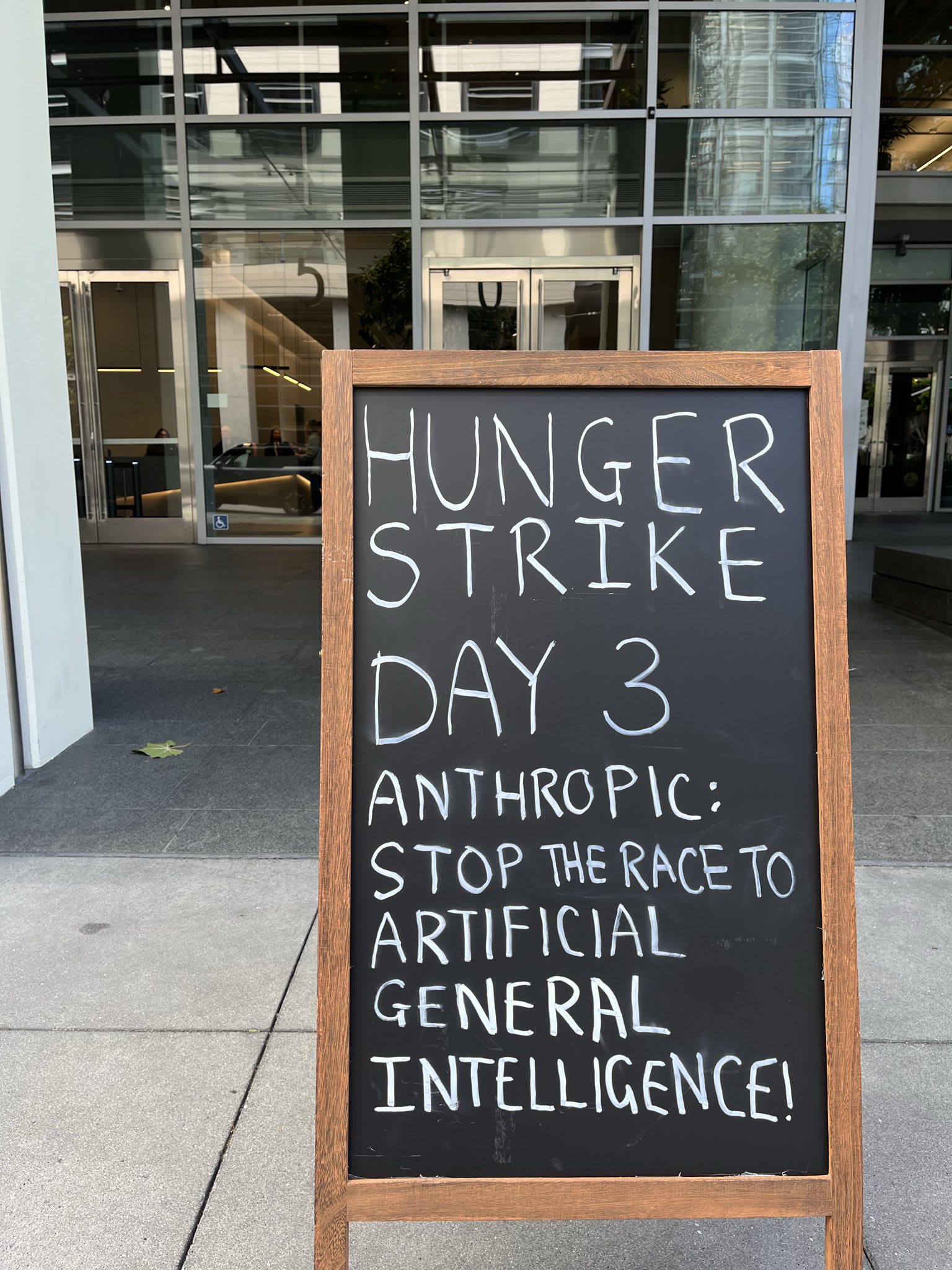

Hunger strike in front of Anthropic by one guy concerned about AI risk

By Remmelt @ 2025-09-05T04:00 (+19)

The text is below. To follow along, you can check out Guido Reichstadter’s profile.

Hi, my name's Guido Reichstadter, and I'm on hunger strike outside the offices of the AI company Anthropic right now because we are in an emergency.

Anthropic and other AI companies are racing to create ever more powerful AI systems. These AIs are being used to inflict serious harm on our society today and threaten to inflict increasingly greater damage tomorrow. Experts are warning us that this race to ever more powerful artificial general intelligence puts our lives and well-being at risk, as well as the lives and well-being of our loved ones. They are warning us that the creation of extremely powerful AI threatens to destroy life on Earth. Let us take these warnings seriously. The AI companies' race is rapidly driving us to a point of no return. This race must stop now, and it is the responsibility of all of us to make sure that it does.

I am calling on Anthropic's management, directors and employees to immediately stop their reckless actions which are harming our society and to work to remediate the harm that has already been caused. I am calling on them to do everything in their power to stop the race to ever more powerful general artificial intelligence which threatens to cause catastrophic harm, and to fulfill their responsibility to ensure that our society is made aware of the urgent and extreme danger that the AI race puts us in.

Likewise, I'm calling on everyone who understands the risk and harm that the AI companies' actions subject us to, to speak the truth with courage. We are in an emergency. Let us act as if this emergency is real.

MichaelDickens @ 2025-09-05T16:51 (+53)

I'm undecided on whether things like hunger strikes are useful but I just want to comment to say that I think a lot of EAs are way too quick to conclude that they're not useful. I don't think we have strong (or even moderate) reason to believe that they're not useful.

[Ninjaedit] When I reviewed the evidence on large-scale nonviolent protests, I concluded that they're probably effective (~90% credence). But I've seen a lot of people claim that those sorts of protests are ineffective (or even harmful) in spite of the evidence in their favor. I think hunger strikes are sufficiently different from the sorts of protests I reviewed that the evidence might not generalize, so I'm very uncertain about the effectiveness of hunger strikes. But what does generalize, I think, is that many EAs' intuitions on protest effectiveness are miscalibrated.

MichaelDickens @ 2025-09-07T00:38 (+3)

This comment currently has 7 agree-votes and 0 disagree-votes, which makes me think the median EA's intuitions on protest effectiveness aren't as pessimistic as I thought.

(Perhaps people who are critical of a strategy are more likely to comment on it, which creates a skewed perception when reading comments?)

huw @ 2025-09-05T09:52 (+18)

I don’t have a good heuristic for distinguishing between a hunger strike as legitimate protest and delusional self-harm, but this feels more toward the latter.

I think we can game this out, though. The purpose of a hunger strike as protest is to (a) generate public attention (it’s an extreme, costly signal, which can cause other people to update their beliefs) and (b) coerce an actor into doing something to prevent the nominal cause of your strike.

In this case, we can clearly rule out (b). But I also think that (a) is highly unlikely: AI safety isn’t an issue on the verge of public salience, and Anthropic aren’t even the most famous company neglecting it. In almost all cases, this will merely garner attention among a group of people who are already amenable to their message within EA and rationalism. Cynically, we might even recognise this as having a third, hidden purpose, which is to demonstrate fealty to others in the AI safety radicalism movement.

I would suggest that as the benefits of such a protest are minimal, we should turn to the harms. Not only is this person harming themself, if this gets attention within their ingroups, they’re encouraging others to do the same for the same attention. As such, I’ve strongly downvoted this post and reported it to the moderation team. I strongly believe we should not be making this behaviour visible to others.

Remmelt @ 2025-09-05T12:56 (+27)

My reaction here was: 'Good, someone shows they care enough about this issue that they're willing to give a costly signal to others that this needs to be taken seriously' (i.e. your point a).

I do personally think many people in EA and rationalist circles (particularly those concerned about AI risk) can act more proactively to try and prevent harmful AI developments (in non-violent ways).

It's fair, though, to raise the concern that Guido's hunger strike could set an example for others to take actions that are harmful to themselves. If you have any example of this happening before, I'd like to learn from it (besides the Ziz stuff).

To be clear, Guido is a seasoned activist (previously in the climate movement, Roe v. Wade, etc.). I'd expect him to end the hunger strike before he really gets into health issues. He's also not part of the safety community, but rather the broader Stop AI movement.

At the same time, I appreciate you sharing your opinion here, and I am more just open to having a conversation about it.

Matrice Jacobine @ 2025-09-05T22:26 (+1)

This is a very big "besides"!

Remmelt @ 2025-09-06T01:37 (+2)

Yeah, it's a case of people being manipulated into harmful actions. I'm saying 'besides' because it feels like a different category of social situation than seeing someone take some public action online and deciding for yourself to take action too.

Matrice Jacobine @ 2025-09-06T15:13 (+1)

One of the killings was, as far as we know, purely mimetic and (allegedly) made by someone (@Maximilian Snyder) who never even interacted online with Ziz, so I don't think it's an invalid example to bring up actually.

titotal @ 2025-09-05T13:31 (+18)

I feel that similar reasoning could have been applied to historically successful protest movements in their early stages. The civil rights movement didn't start with the march on Washington, it started small and got bigger, and the participants risked their health in their protests. More recently I think the climate activist movement has achieved an immense amount of good through their tactics.

I don't actually believe that AI x-risk is a serious problem at the moment, so I don't support this particular protest. However I want to protect the principle of protesting being good: I want people who think there is a serious danger to be willing to actively protest that danger, not wait around passively for the media to give them permission to do so.

huw @ 2025-09-05T13:50 (+2)

I think we can cleave a reasonable boundary around hunger strikes specifically. They work well when the person you’re striking against has a duty of care over you so is forced to address your protest (ex. Guantanamo Bay), or if you’ll garner significant attention (ex. Gandhi). These, I think, reasonably outweigh the harms to the subjects and people they might inspire.

Risking other forms of harm in a protest has a different character. This is because those harms are often inflicted by the subject of the protest, which fairly reliably causes backlash or exposes some inconvenient aspect of the oppressor (ex. Civil rights). This risk is significant, but IMHO can be justified by its likelihood to further the aims of the protest.

(I guess it’s possible to believe that it’s morally good to face harms when protesting even if you’re absolutely certain you will achieve nothing, because you believe that self-sacrifice for your protest is good in and of itself. But I suspect in your example the only option for protest was to face harm, whereas this person has extensive non-harmful avenues available to them)

MichaelDickens @ 2025-09-05T16:44 (+6)

See Michaël Trazzi's comment on LW: a third (fourth?) purpose is to get Anthropic employees to pay attention to AI risk.

Tom Bibby @ 2025-09-06T10:20 (+3)

Why do you think a lower level of salience makes an action like this less useful?

huw @ 2025-09-06T11:02 (+2)

Costly signals like hunger strikes are only likely to persuade public opinion if the public actually hears about them, which is only going to happen if the issue already has some level of public salience. (Whereas protests are better for building public salience, because they’re better suited for mass turnout).

I then also argued that a costly signal like this is unlikely to persuade people at Anthropic, who are already unusually familiar with the debate around AI safety or pausing AI and don’t hold a duty of care over the protestor. It’s at this point that the harms (including inspiring other hunger strikers) overwhelm the benefits.

MellowGrenade @ 2025-09-05T12:35 (+6)

I think he’s in front of the wrong building! Surely he should be striking in front of OpenAI? The people who head up Anthropic used to work on OpenAI’s AI safety team, and they left because AI safety wasn’t given serious priority there. From that, I would assume that Anthropic at least has a better sense of AI safety and prioritises it more than OpenAI does. That being said, I don’t really trust any tech company in the Valley, because they all prioritise scale and shareholders above anything else, including ethical practices.

Ben_West🔸 @ 2025-09-08T01:39 (+4)

"Let us act as if this emergency is real."

Banger

MellowGrenade @ 2025-09-05T12:40 (+1)

Also, I’m not sure how this situation applies to your website, as what he is doing probably doesn’t fall under effective altruism? I don’t believe for a moment that he’s actually going to change anything with his hunger strike, and he will probably give up before it gets serious. History suggests that the people most likely to hold out until the end of a hunger strike are political prisoners, such as Bobby Sands in Ireland; most people who start end up giving in.