A proposed hierarchy of longtermist concepts

By Arepo @ 2022-10-30T16:26 (+38)

The previous post explained why I felt unable to use existing longtermist terminology to build a longtermist expected value model. In this post I introduce the terminology that I do intend to use for the rest of the sequence.

I’ve tried to follow the terminology mini-manifesto from that post, but the specific terms here are much less important than the relationships between them being usefully defined. This makes them a lot easier to formally model, and hopefully easier to talk clearly about. So a) please don’t feel like you need to memorise anything here, and b) feel free to offer better suggestions - so long as they have the same relationships.

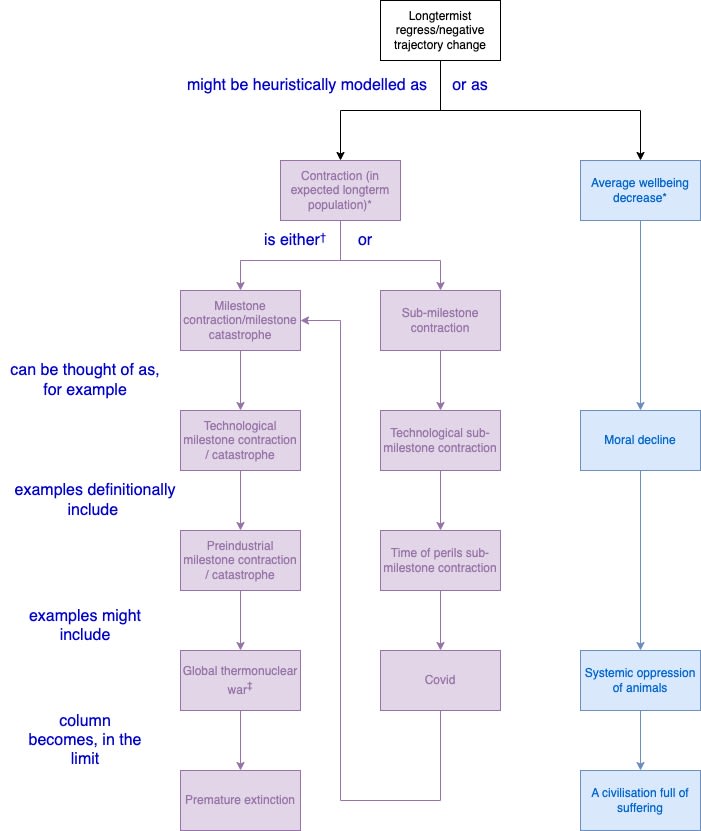

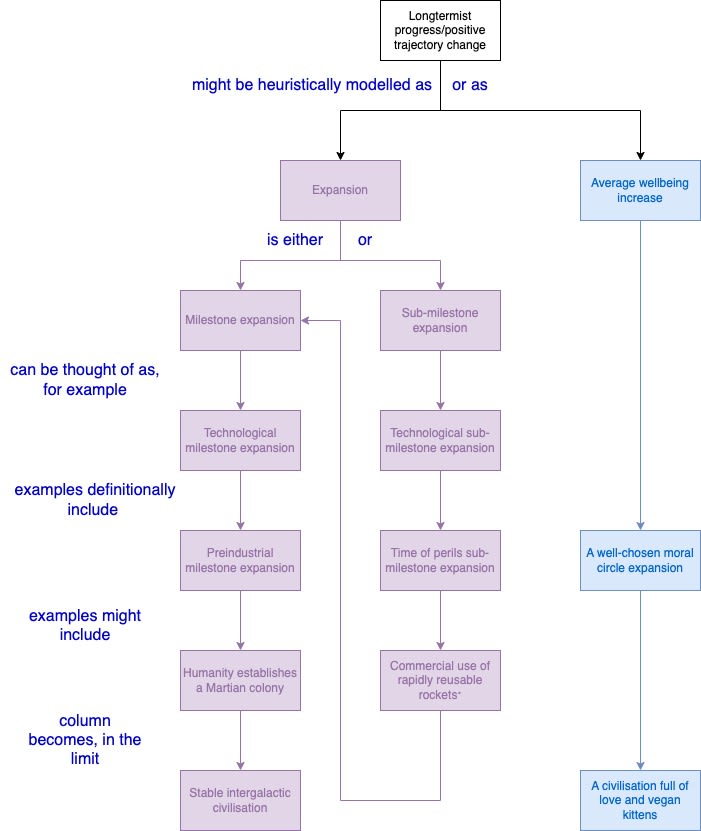

I include hierarchical graphs showing the relationships under the definitions, so feel free to skip down to them if that seems easier.

Core concept

These simply correspond to changes to the measure we care about: expected utility.

Longtermist progress/regress: the top-level concept - a noteworthy change to expected value in the long-term future. Covid and other historical pandemics would qualify to the extent you think they changed the expected value of the future, as would economic turmoil, failing states etc. This potential inclusiveness is meant to be a feature, rather than a bug. It leaves open the possibility that longtermists could get high expected value from focusing on relatively likely, relatively ‘minor’ events - which I don’t see any a priori reason to rule out.

Longtermist progress/regress maps very closely onto the concept of trajectory change, but is slightly more general in that it needn’t describe ‘a persistent change to total value at every point in the long-term future’ (emphasis mine); large spikes on the graph might increase total value by the same amount, for example.[1] In practice, the terms can probably be used interchangeably to taste.
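To illustrate the spike point with made-up numbers, here is a minimal Python sketch. It assumes value is tracked per discrete time step and that total value is simply the sum over steps (a discrete stand-in for the area under the graph); the horizon and the sizes of both changes are arbitrary, hypothetical choices.

```python
# Toy comparison (hypothetical numbers): a persistent uplift and a one-off
# spike can add the same total value over a fixed horizon.

HORIZON = 100  # number of discrete time steps in the toy long-term future


def total_value(trajectory):
    """Total value as the sum of value over all time steps (discrete 'area')."""
    return sum(trajectory)


baseline = [1.0] * HORIZON

# Persistent change: value is higher by 0.5 at every time step.
persistent = [v + 0.5 for v in baseline]

# Spike: value is higher by 50 at a single time step and unchanged elsewhere.
spike = list(baseline)
spike[10] += 50.0

gain_persistent = total_value(persistent) - total_value(baseline)
gain_spike = total_value(spike) - total_value(baseline)
print(gain_persistent, gain_spike)  # 50.0 50.0
```

Only the persistent version matches the ‘at every point’ wording of trajectory change, but both count equally as longtermist progress.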

Heuristical concepts

[Edit: I've updated this section since one of the commenters pointed out an error in the original post: expected utility is not simply a product of <expected number of people> * <average utility per person> unless you expect the two to be independent, which is a stronger assumption than I want to make in proposing this hierarchy. Considering a change in one while the other is held constant, or moves in the same direction (i.e. is correlated rather than anticorrelated), still seems like a useful heuristic for many purposes, and like a substantially more accurate proxy for longtermist regress than existential risk is. But it means the two concepts below belong among the heuristics rather than, as I originally had them, among the core concepts.]

Expansion/contraction (one of the two subtypes of longtermist progress/regress): any event that noticeably changes our expectation of the number of people.[2] It might be helpful to think of that number as the area under a graph on which the x and y axes respectively represent time and total number of people. This is the class of events which I’m modelling.
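To make the ‘area’ picture concrete, here is a minimal Python sketch under loudly hypothetical assumptions: two made-up scenarios, each with a population trajectory, and an event that shifts probability from one to the other. The expected number of people is the probability-weighted area under each curve, and the event counts as a contraction if it lowers that number (the ‘noticeably’ threshold is not modelled).

```python
# Sketch: "expected number of people" as the probability-weighted area under
# population-over-time curves. Scenarios, times and numbers are hypothetical.


def area(times, values):
    """Trapezoidal area under a piecewise-linear curve."""
    return sum(
        (t1 - t0) * (v0 + v1) / 2
        for t0, t1, v0, v1 in zip(times, times[1:], values, values[1:])
    )


TIMES = [0, 1, 2, 3, 4]    # arbitrary time units
GROWTH = [1, 2, 4, 8, 16]  # population in a flourishing scenario
SETBACK = [1, 2, 1, 1, 1]  # population in a scenario with a lasting setback


def expected_people(scenarios):
    """scenarios: list of (probability, population trajectory over TIMES)."""
    return sum(p * area(TIMES, pop) for p, pop in scenarios)


# A hypothetical event that shifts 10% probability from GROWTH to SETBACK.
before = expected_people([(0.9, GROWTH), (0.1, SETBACK)])
after = expected_people([(0.8, GROWTH), (0.2, SETBACK)])
print("contraction" if after < before else "expansion")  # contraction
```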

Average wellbeing increase/decrease: any event that noticeably changes average wellbeing per person. We could think of this number as the area under a graph on which the x and y axes represent time and average wellbeing per person.

Thus we can imagine longtermist progress as describing the area under a third graph whose y axis, total utility over time, tracks the product of the other two graphs' y values. [Edit: this only holds if you assume they're independent. I still think it's useful to think of changes to average wellbeing as an important class of events that deserves some deep analysis, and that is worth conceptually separating from expansion/contraction - though one might equally validly posit some specific relationship between them that lets you consider longtermist progress directly.]
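A minimal Python sketch of why independence matters, under a simplifying assumption of my own: each possible future is collapsed into a single (number of people, average wellbeing) pair, and the distributions below are hypothetical. When the joint distribution is built as the product of independent marginals, expected utility equals the product of the two expectations; with correlated outcomes it generally does not.

```python
from itertools import product

# Hypothetical, independent distributions as lists of (probability, value).
POPULATION = [(0.5, 2.0), (0.5, 6.0)]   # long-run number of people
WELLBEING = [(0.25, 1.0), (0.75, 3.0)]  # average wellbeing per person


def expectation(dist):
    """Probability-weighted mean of a discrete distribution."""
    return sum(p * x for p, x in dist)


# Under independence, joint probabilities are products of the marginals.
joint = [(pn * pw, n, w) for (pn, n), (pw, w) in product(POPULATION, WELLBEING)]

expected_utility = sum(p * n * w for p, n, w in joint)
product_of_expectations = expectation(POPULATION) * expectation(WELLBEING)
print(expected_utility, product_of_expectations)  # 10.0 10.0
```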

The model in this sequence focuses explicitly on expansions and contractions.

From here we have a hierarchy of sub-concepts each with both a negative and positive version. I will define only the negative versions for brevity, but generally the positive version of each concept is just that concept with the opposite sign. I include a chart of the latter below the chart of the negatives, to make us all feel better.

These are ways of thinking I found useful for building the model in the next post.

Milestone: any recognisable achievement which seems essential to pass on the way to generating astronomical numbers of people.[3] Thus:

Milestone contraction (one of the two exclusive subclasses of contraction): any event that noticeably regresses us past any stage which we identify as a key milestone. I think it’s fair to refer to these as unambiguously catastrophic, so we can also call these ‘milestone catastrophes’, slightly more intuitively albeit with slightly less terminological consistency.

Sub-milestone contraction (the other subtype of contraction): any event that noticeably regresses our technological, political or other progress without passing any stage which we identify as a key milestone.

Technological milestone/sub-milestone contraction (a lens through which to view milestone/sub-milestone contractions[4]): any event that noticeably slows down or regresses beneficial technological progress (so we might reduce, say, nuclear weaponry and not consider it a ‘technological contraction’).

We can prefix milestone contractions with the thresholds they pass into, thus what MacAskill calls a civilisational catastrophe - ‘an event in which society loses the ability to create most industrial and postindustrial technology’ - we could call a preindustrial technological milestone contraction/catastrophe, trading some naturalness for internal consistency.

We can similarly prefix sub-milestone contractions with the milestone they’ve advanced from (in other words, the state they’re in). The 'time of perils' is an important milestone I identify in the next post, hence we could have a 'time of perils sub-milestone contraction'.
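The naming rules above can be sketched as a small Python function. This is a hypothetical illustration, not the model from the next post: the ordered list of stages is an assumption (only ‘preindustrial’ and ‘time of perils’ come from this post), and the function assumes the event has already been judged to noticeably regress progress.

```python
# Hypothetical ordered stages of progress. Only "preindustrial" and
# "time of perils" come from the post; "industrial" and "interplanetary"
# are placeholders for illustration.
STAGES = ["preindustrial", "industrial", "time of perils", "interplanetary"]


def name_contraction(stage_before, stage_after, lens=None):
    """Name a contraction by the stage it leaves us in.

    Assumes the event has already been judged to noticeably regress progress.
    Falling back past a milestone gives a milestone contraction, prefixed with
    the stage passed into; otherwise it is a sub-milestone contraction,
    prefixed with the stage we are still in.
    """
    i, j = STAGES.index(stage_before), STAGES.index(stage_after)
    if j > i:
        raise ValueError("not a contraction: we advanced past a milestone")
    kind = "milestone" if j < i else "sub-milestone"
    # In both cases the prefix is the stage we end up in.
    parts = (stage_after, lens, kind, "contraction")
    return " ".join(part for part in parts if part)


print(name_contraction("time of perils", "preindustrial", "technological"))
# preindustrial technological milestone contraction
print(name_contraction("time of perils", "time of perils"))
# time of perils sub-milestone contraction
```

The optional `lens` argument (also hypothetical) reflects that ‘technological’ is one way of viewing a contraction rather than a separate branch.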

Premature extinction: the extinction of sentient human descendants significantly before the heat death of the universe.

Putting that all together, we can see it has a simple hierarchy, with the branches corresponding to the two main heuristics coloured [edit: I've tweaked this graph from the original, per the comment, now highlighting the second level down as heuristics rather than core concepts]:

* Holding the other same-level concern approximately equal to or less than it would have been without the change

† There are plenty of other ways one might usefully partition this, but this follows the model I’ll use in the next post, which itself attempts to be as consistent as possible with key concepts in existing discussions.

‡ This could also be ‘ascent of a technologically stagnant global totalitarian government’. A stable totalitarian government that developed the technology to spread around the universe but oppressed its citizens everywhere would be an example of average wellbeing decrease and technological milestone expansion. Depending on exactly how oppressive it was, it could either be much worse or much better than premature extinction.

And here’s the cheerier version of the same hierarchy:

* Alternatively, some marker of differential technological progress, such as the Treaty on the Prohibition of Nuclear Weapons. Or it could arguably just be any year of what we’ve come to think of as normal economic growth.

In the next post I’ll get back to our regularly scheduled gloom, and present a model for thinking probabilistically about the risks of premature extinction given technological milestone catastrophes of various severities.

[1]

It also explicitly combines the multiple graphs Beckstead mentioned in his description of trajectory change, quoted on the forum tag page, into a single utility function. The forum description of trajectory change seems to assume that the concept implicitly does so, given that it mentions ‘total value’, but I’m unsure whether Beckstead intended this.

[2]

Strictly speaking we might prefer to talk more abstractly about ‘value-generating entities’, but for most purposes ‘people’ seems a lot clearer, and good enough. For the purpose of this sequence, you can think of the latter as shorthand for the former.

[3]

It needn’t be locally positive. For example, the development of advanced weaponry, which takes us into the ‘time of perils’, seems both bad in its own right and almost impossible to circumvent on the way to developing interplanetary technology. Interplanetary travel requires vastly more energy and matter to build and operate than, e.g., biotech. Even in the unlikely absence of biotech, nukes etc., the energy needed to move enough mass to an offworld colony for it to become self-sustaining is far more than the energy required for the same rockets to divert an asteroid, which NASA have just successfully done, and which could be used to do huge damage to civilisation.

Cf also Ord’s discussion in The Precipice of how asteroid deflection technology likely increases the risk of asteroids hitting us.

[4]

By a ‘lens’ I mean something like ‘a way of looking at the whole sample space from the perspective of a certain metric’. So, for example, it seems clear both that populating the Virgo supercluster requires a very high level of technology and that losing all of our technology more or less implies we’ve gone extinct (though perhaps not vice versa, since an AI that wiped us out would seem like advanced technology; then again, if one defined technology in terms of the capacity for sentient agents to achieve goals, then with no sentient agents we could describe technology as either 0 or undefined).

We might similarly describe other longtermist-relevant contractions through an alternative lens, such as a political milestone contraction. Such lenses would represent different ways of thinking about the same sample space, not different sample spaces.

Emrik @ 2022-10-30T21:45 (+13)

"Cf also Ord’s discussion in The Precipice of how asteroid deflection technology likely increases the risk of asteroids hitting us."

I'm sorry, but I have to ask. What on Earth could this argument possibly be? I was thinking anthropic or some nonsense, but I can't see anything there either.

Btw, I really like how you capture this topic. Especially "milestone contraction". But... please don't bonk me, but some of the others seem a little nit-picky? I may just not be so deep into these questions that the precision becomes necessary for me.

Lorenzo Buonanno @ 2022-10-30T21:55 (+18)

I think the main argument is that it's dual use, it would enable malevolent actors to cause asteroids to hit Earth (or accidents)

Emrik @ 2022-10-30T22:04 (+7)

This is very interesting and surprising, thank you.

Arepo @ 2022-10-30T22:04 (+2)

Yup.

Jobst Heitzig (vodle.it) @ 2022-11-03T13:08 (+7)

You write

expected utility, and its main factors, expected number of people, and expected value per person

but that is only true if number of people and value per person are stochastically independent, which they probably aren't, right?

Jobst Heitzig (vodle.it) @ 2022-11-03T13:31 (+13)

Related to that:

Your figure says

Longtermist regress IS EITHER Contraction OR Average wellbeing decrease,

but consider a certain baseline trajectory A on which

- longterm population = 3 gazillion person life years for sure

- average wellbeing = 3 utils per person per life year for sure,

so that their expected product equals 9 gazillion utils, and an uncertain alternative trajectory B on which

- if nature's coin lands heads, longterm population = 7 gazillion person life years but average wellbeing = 1 util per person per life year

- if nature's coin lands tails, longterm population = 1 gazillion person life years but average wellbeing = 7 utils per person per life year,

so that their expected product equals (7 x 1 + 1 x 7) / 2 = 7 gazillion utils.

Then an event that changes the trajectory from A to B is a longtermist regress since it reduces the expected utility.

But it is NEITHER a contraction NOR an average wellbeing decrease. In fact, it is BOTH an Expansion, since the expected longterm population increases from 3 to 4 gazillion person life years, AND an average wellbeing increase, since that increases from 3 to 4 utils per person per life year.
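A quick check of the arithmetic in the example above, as a Python sketch using exact fractions. Each trajectory is a list of (probability, population, average wellbeing) outcomes, with the units from the comment; the function names are my own. Trajectory B raises both expected population and expected average wellbeing (their product would be 16), yet lowers expected utility from 9 to 7 because the two are anticorrelated.

```python
from fractions import Fraction

# Each trajectory: list of (probability,
#   population in gazillion person life years,
#   average wellbeing in utils per person per life year).
A = [(Fraction(1), 3, 3)]
B = [(Fraction(1, 2), 7, 1), (Fraction(1, 2), 1, 7)]


def expected_utility(traj):
    return sum(p * n * w for p, n, w in traj)


def expected_population(traj):
    return sum(p * n for p, n, _ in traj)


def expected_wellbeing(traj):
    return sum(p * w for p, _, w in traj)


for name, traj in (("A", A), ("B", B)):
    print(name, expected_utility(traj), expected_population(traj),
          expected_wellbeing(traj))
# A 9 3 3
# B 7 4 4
```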

Arepo @ 2022-11-04T10:26 (+3)

Ah, good point. In which case I don't think there's any clean way to dissolve expected utility into simple factors without making strong assumptions. Does that sound right?

Thinking about changes to expected population holding average wellbeing constant, and about changes to expected average wellbeing holding population constant, still seem like useful approaches (the former being what I'm doing in the rest of the series), but that would make them heuristics as well - albeit higher-fidelity ones than 'existential risk' vs 'not existential risk'.

Jobst Heitzig (vodle.it) @ 2022-11-04T12:33 (+1)

I think you are right, and the distinction still makes sense, but only as a theoretical device to disentangle things in thought experiments, maybe less in practice, unless one can argue that the correlations are weak.

Charlie_Guthmann @ 2022-10-31T03:42 (+3)

Anyone want to try and steelman why we should use traditional x-risk terminology instead of these terminologies?

This is an important post and should have more traction. I strongly believe the community should start using your proposed terminology.

Ardenlk @ 2022-11-05T11:55 (+3)

One reason might be that this framework seems to bake totalist utilitarianism into longtermism (by considering expansion/contraction and average wellbeing increase/decrease as the two types of longtermist progress/regress), whereas longtermism is compatible with many ethical theories?

Arepo @ 2022-11-07T10:22 (+2)

It's phrased in broadly utilitarian terms (though 'wellbeing' is a broad enough concept to potentially encompass concerns that go well beyond normal utilitarian axiologies), but you could easily rephrase it using the same structure to encompass any set of concerns that would be consistent with longtermism, which is still basically consequentialist.

I think the only thing you'd need to change to have the generality of longtermism is to call 'average wellbeing increase/decrease' something more general like 'average value increase/decrease' - which I would have liked to do, but I couldn't think of a phrase succinct enough to fit on the diagram that didn't sound confusingly like it meant 'increase/decrease to the average person's values'.

Charlie_Guthmann @ 2022-10-31T05:17 (+2)

Also, this isn't really the point of your post, but human extinction does not necessarily imply contraction, and it can also change wellbeing per person.

This is because aliens might exist, and intelligent life could also re-evolve on our planet. Thus there are counterfactual rates of existence and wellbeing per person that are non-zero. E.g. there might be an alien race in our lightcone that is going to perfectly tile the universe, using every unit of resources and converting those resources at the maximal rate into utility. If that were the case, human extinction would in expectation be both an expansion and an increase in wellbeing per person.

Additionally if the average wellbeing per person < 0 (if you believe such a thing is possible), then contraction is positive EV not negative. This is a generalization of the idea that if human well being per person < alien well being per person, human extinction raises well being per person.
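A minimal Python sketch of the sign point, assuming (as a simplification) that total value is population times average wellbeing and that a contraction leaves average wellbeing unchanged; the numbers are hypothetical. The same halving of population loses value when average wellbeing is positive and gains value when it is negative.

```python
# Hypothetical simplification: total value = population * average wellbeing,
# with average wellbeing unchanged by the contraction.
def total_value(population, avg_wellbeing):
    return population * avg_wellbeing


change = {}
for w in (2.0, -2.0):
    # A contraction that halves the population from 10 to 5 units.
    change[w] = total_value(5.0, w) - total_value(10.0, w)

print(change)  # {2.0: -10.0, -2.0: 10.0}
```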

Arepo @ 2022-10-31T11:37 (+3)

These are both reasonable points, and I'm just going to invoke the 'out of scope' get-out-of-jail-free card on them both ;)

You have to form a view on whether our descendants will tend to be more, equally or less 'good' than you'd expect from other alien species, which would imply that extinction is very bad, somewhat bad or perhaps even very good, respectively.

Obviously you need some opinion on how likely aliens with advanced technology are to emerge elsewhere. Sandberg, Drexler and Ord have written a [paper](https://arxiv.org/abs/1806.02404) suggesting ways in which we could be - and remain - the only intelligent life in the local universe. But that perhaps helps explain the Fermi paradox without giving much clarity on how likely life is to emerge in future.