A personal take on why you should work at Forethought (maybe)

By Lizka @ 2025-10-14T08:59 (+64)

Basic facts:

- Forethought is hiring; apply for a research role by 2 November.

- The two open positions are for (i) “Senior Research Fellows” — people who can lead their own research directions, and (ii) “Research Fellows” — people who aren’t ready to lead an agenda yet, but who could work with others and develop their worldviews and research taste. Forethought is also open to hiring “visiting fellows” who would join for a 3-12-month stint.

- And you can refer people to get a bounty of up to £10,000.

In the rest of this post, I sketch out more of my personal take on this area, how Forethought fits in, and why you might or might not want to do this kind of work at Forethought.

Others at Forethought might disagree with various parts of what I say (and might fight me or add other info in the comments). Max reviewed a first draft and agreed that, at least at that point, I wasn’t misrepresenting the org, but that’s it for input from Forethought folks — I wrote this because I dislike posts written in an ~institutional voice and thought it could be helpful to show a more personal version of “what it’s like to work here.”[1]

A background worldview

Here’s roughly how I see our current situation:

- Increasingly advanced AI systems will be changing everything around us, very quickly

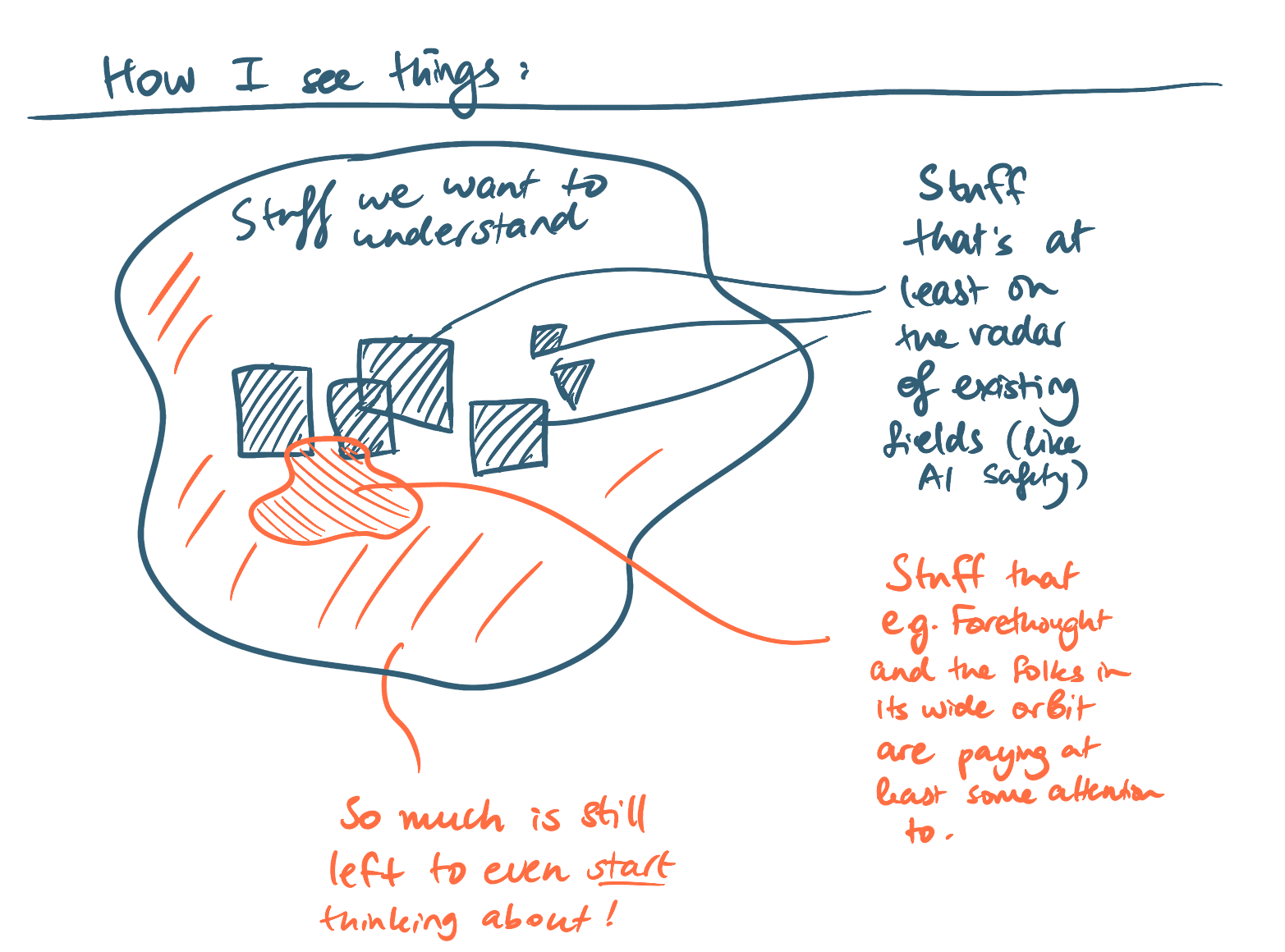

- We’re missing large chunks of the picture (the dynamics and challenges we can reason well about cover only a small fraction of the overall space)

- ...and approaching this situation deliberately instead of flying blind could be incredibly important

Forethought’s mission is to help us “navigate the transition to a world with superintelligent AI systems.” The idea is to focus in particular on the questions/issues that get less attention from other people who think that advanced AI could be a big deal. So far this has involved publishing on topics like:

- AI-enabled coups

- Aiming for flourishing — not “mere” survival

- Different types of intelligence explosion

- AI tools for existential security

- Preparing for the Intelligence Explosion

(If you want to see more, here’s the full “research” page, although a lot of my favorite public stuff from my colleagues is scattered in other places,[2] e.g. the podcast Fin runs, posts like this one from Rose, Tom’s responses on LW & Twitter, or Will’s recent post on EA. And the same goes for my own content; there's more on the EA Forum/LW & Twitter.)

So in my view Forethought is helping to fill a very important gap in the space.[3] But Forethought is pretty tiny,[4] and collectively my sense is we’re nowhere near on track for understanding things to a degree that would make me happy. I think we don’t even have a bunch of the relevant questions/issues/dynamics on our radar at this point.

(Things that give me this sense include: often running into pretty fundamental but not well-articulated disagreements with "state-of-the-art" research in this space or with others at Forethought; often feeling like everyone is rolling with some assumption or perspective that doesn’t seem justified; or feeling like some proposal rests on a mishmash of conceptual models that don’t actually fit together.)

So I would love to see more people get involved in this space, one way or another.[5] And for some of those people, I think Forethought could be one of the best places to make progress on these questions.[6]

Why do this kind of work at Forethought? (Why not?)

I joined (proto-)Forethought about a year ago. This section outlines my takes on why someone interested in this area might or might not want to join Forethought; if you want the “official” version, you should go to the main job listing, and there’s also some relevant stuff on the more generic “Careers” page.

Doing this kind of research alone — or without being surrounded by other people thinking seriously about related topics — seems really hard for most people. Being able to develop (butterfly) ideas in discussion with others, quickly get high-context feedback on your drafts or input on possible research directions,[7] and spend time with people you respect (from whom you can learn various skills[8]) helps a lot. It’s also valuable to have a space in which your thinking is protected from various distortion/distraction forces, like the pressure to signal allegiance to your in-group (or to distance yourself from perspectives that are “too weird”), the pull of timely, bikeshed-y topics[9] (or the urge to focus on topics that will get you lots of karma), the need to satisfy your stakeholders or to get funding or to immediately demonstrate measurable results, and so on. It’s also easier to stay motivated when surrounded by people who have context on your work.

And joining a team can create a critical mass around your area. Other people start visiting your org (and engaging with the ideas, sharing their own views or expertise, etc.). It’s easier for people to remember at all that this area exists. Etc.

I think the above is basically the point of Forethought-the-institution.

Many of the same properties have, I think, helped me develop as a researcher. For instance, I’ve learned a lot by collaborating with Owen, talking to people in Forethought’s orbit, and getting feedback in seminars. The standards of seriousness[10] set by the people around me have helped train me out of shallow or overly timid engagement with these ideas. And the mix of opportunity-to-upskill, having a large surface area / many opportunities to encounter a bunch of different people, and protection from various gravitational forces (and in particular the pull to build on top of AI strategy worldviews that I don’t fully buy or understand) has helped me form better and more independent models.[11]

(I also think Forethought could improve on various fronts here. See more on that a few paragraphs down.)

Working at Forethought has some other benefits:

- One important thing for me is having a good manager (and reasonable systems)

…particularly for prompting me to focus on what I endorse, troubleshooting blockers, providing forcing functions that help me turn messy thoughts and diagrams into reasonably presentable docs, and advice/help with things that feel almost silly, like getting to a better sleep schedule.[12]

Being managed by Max / working at Forethought also gives me a sense that I have a mandate[13] — feeling like someone expects me to help us better understand this stuff — which can make it easier for me to get around an impostory feeling that I’m not “the kind of person” who should be thinking about questions this thorny or stakes-y.

- A final mundane-seeming advantage of working at Forethought that I nevertheless want to mention is that things just work; the operations side of things is really smooth and I basically don't have to think about it. There’s no bureaucracy to navigate, problems I flag just get fixed if they’re important, our infrastructure is set up sensibly from my POV, etc.

(The job listing shares more info on stuff like salary, the office[14], the support you’d get on things like making sure what you write actually reaches relevant people or turns into “action”, etc.)

Still, Forethought is not the right place for everyone, and there are things I’d tell e.g. a friend to make sure they’re ok with before they decide to join. These might include:

- Spending some nontrivial attention & time on various asks from the rest of the Forethought team

- For instance, giving feedback on early drafts, weighing in on various org-wide strategic decisions, etc.

- Relatedly, if you think Forethought’s work is overall bad, then working here would probably be frustrating.

- Less than total freedom in project choice, how you spend your time, etc.

Forethought has a pretty wide scope, and project selection tends to be fairly independent, but it’s probably not the right place for totally blue-sky research.[15]

- On my end, basically all the projects I work on at Forethought are things I chose to do without any “top-down” influence (but with input / feedback of various kinds from the team and others). This has changed somewhat over time; when I first joined, I spent more time working on project ideas suggested by others — which I think was a reasonably strong default (IIRC I also thought this was the right approach at that time, but these things reinforce each other and it feels sort of hard to untangle).

- Today I still feel like I’m naturally pulled somewhat more towards projects that I think others at Forethought would approve of (whether or not that’s true, and possibly entirely because my brain is following some social-approval gradients or the like), even when I’m not sure I wholly endorse that. I occasionally actively push against this pull, with e.g. Max’s help. (Overall I think total independence would be worse for me & my work, but do want to get better at paying attention to my independent taste and fuzzy intuitions.)

And there are some other institutional “asks” Forethought makes of staff; we have weekly team meetings and seminars, we’ve sometimes had “themes” — periods of a few weeks or so where staff were encouraged to e.g. do more quick-publishing or connecting with external visitors,[16] etc.

- Culture stuff:

People at Forethought disagree with each other a decent amount (and the vibe is that this is encouraged), regardless of seniority or similar — if that’d be stressful for you, then that’s probably a nontrivial factor to consider.[17]

- OTOH we’re not on the extreme end of “nothing-held-back disagreeableness”, I think, so if you want that, it’s also probably not the right place.

- People are really quite into philosophy.

I'll also list some things that I personally wish were different:

- The set of worldviews & perspectives represented on the team feels too narrow to me. (If I understand correctly, expanding this is one of the goals for this hiring round. Still, I’m maybe on the more extreme end, at Forethought, of wanting this to widen.)

I'd like us to get better at smoothly working on joint projects and giving each other feedback (e.g. at the right time, of the right kind).[18]

- I personally think we are too frequently in a mentality on the “look for solvable problems and solve them” end of “macrostrategy”, where instead I think a mode more purely oriented towards “I’m confused, I want to better understand what the hell will happen or is happening here and how it works” would often be better.

I also have personally felt more bouts of unproductive perfectionism, or caught myself trying to do Research™[19] in ways that have felt distracting. I think this was partly because others on the team had the same tendencies/pitfalls and those were somewhat getting reinforced for me, although some of this is probably better explained by something like “starting to do research (in a weird/new area) is generally hard”.

Who do I think would/wouldn’t be a good fit for this?

I’ve pasted in the official "fit" descriptions below (mostly because I don’t really trust people to just go read them on the job listing).

The main things I want to emphasize or add are:

- You really don’t have to have official credentials or any particular kind of background

- E.g. my background is pretty weird

- (And I'd be actively excited to have very different kinds of skill/experience profiles on the team)

- Strong natural curiosity about AI & this whole area seems important, and it probably helps to be fairly oriented towards trying to help society do a better job managing its problems

- Relatedly, as I discussed earlier, a lot of this space is really underbaked (“pre-paradigmatic”), which I think makes some skills unusually valuable:

Being able to notice and hold onto your confusion (without feeling totally paralyzed by it)[20]

- Willingness to try on new conceptual models (or worldviews), improve them, ditch them if/when it turns out they’re not helpful or actively distracting — and stuff like distillation or being able to translate insights from one frame/worldview to another

- The specific pairing of fairly high openness (being willing to entertain pretty weird ideas) and a healthy amount of skepticism / a strong research taste

- Something like “flexibility” — being willing to change your mind, switch projects, maybe dip between different levels of abstraction, consider earlier work from a variety of fields, etc.

And, as promised, here’s the official description of the roles:

Senior research fellows will lead their own research projects and set their direction, typically in areas that are poorly understood and pre-paradigmatic. They might also lead a small team of researchers working on these topics.

You could be a good fit for this role if:

Research fellows are in the process of developing their own independent views and research directions, since they might be earlier-career or switching domains. Initially, [r]esearch fellows will generally collaborate with senior research fellows, to produce research on important topics of mutual interest. We expect research fellows to form their own view on topics that they work on, and to spend 20-50% of their time thinking and exploring their own research directions. We expect some research fellows to (potentially rapidly) develop their own research agenda and begin to set their own direction, while others may continue to play a more collaborative role within the team. Both are very valuable.

You could be a good fit for this role if:

More basic info

The location, salary, benefits, etc., are included in the job listing (see also the careers page). If you have any questions, you could comment below and I’ll try to pull in relevant people (or they might just respond), or you might want to reach out directly.

There’s also the referral bonus: Forethought is offering a £10,000 referral bonus for “counterfactual recommendations for successful Senior Research Fellow hires” (or £5,000 for Research Fellows). Here’s the form.

In any case:

The application looks pretty short. Consider following the classic advice and just applying.

A final note

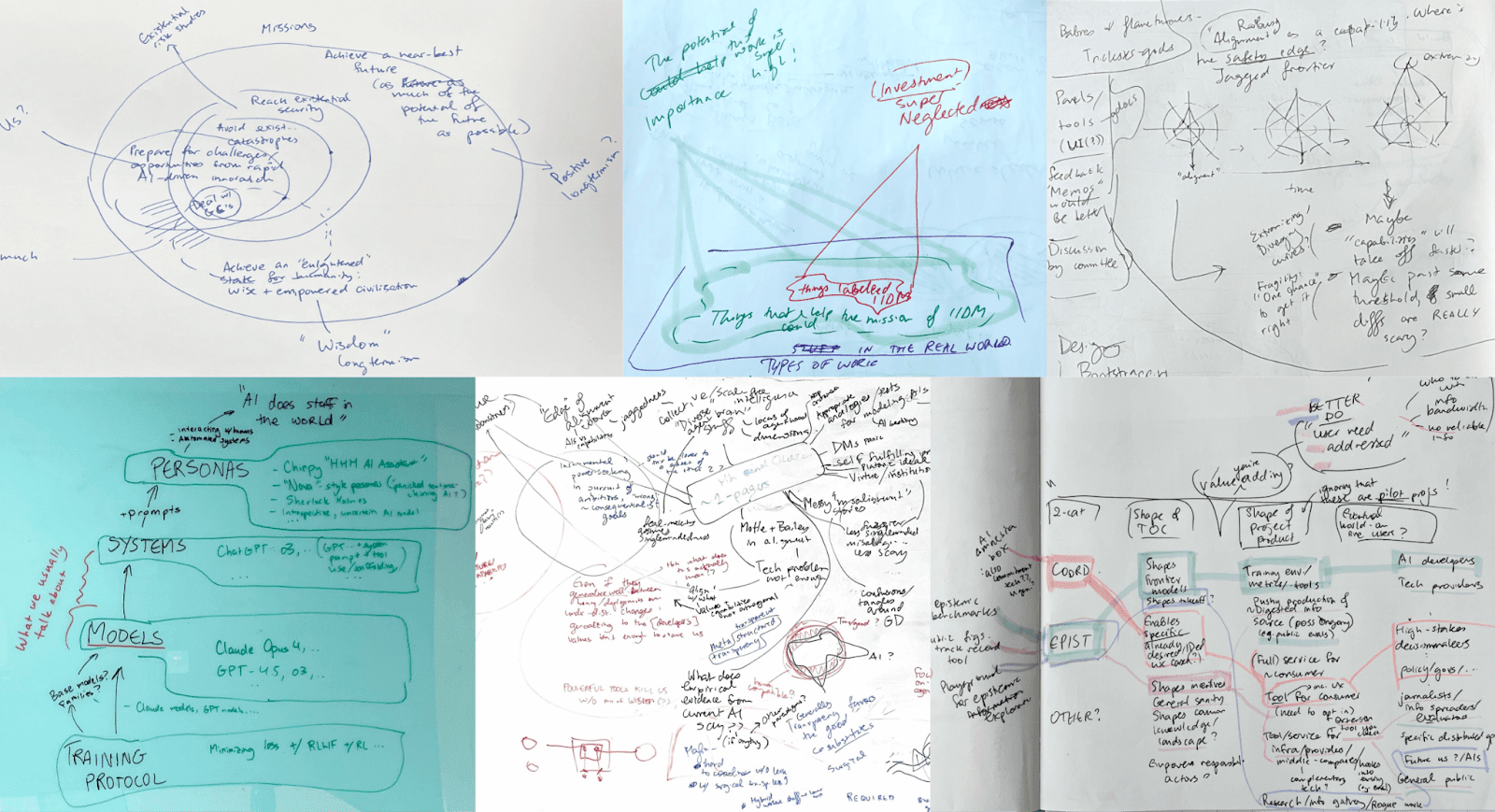

One last thing I want to say here — which you can take as a source of bias and/or as evidence about Forethought being a nice place (at least for people like me) — is that I just really enjoy spending time with the people I work with. I love the random social chats about stuff like early modern money-lending schemes[21] or cat-naming or the jaggedness of child language learning. People have been very supportive when personal life stuff got difficult for me, or when I’ve felt especially impostory. Some of my meetings happen during walks along a river, and at other times there’s home-made cake. And we have fun with whiteboards:

[1]

More specifics on the context here: Max asked me to help him draft a note about Forethought’s open roles for the EA Forum/LessWrong; I said it’d be idiosyncratic & would include critical stuff if I did that; he encouraged me to go for it and left some comments on the first partial draft (and confirmed what I was saying made sense); I wrote the next draft without input from Max or others at Forethought; I made some edits after comments from Owen (I hijacked some of our coworking time); and here we are.

[2]

I’ve mentioned that I think more of this (often more informal) content should go on our website (or at least the Substack); I think others at Forethought disagree with me, although we haven’t really invested in resolving this question.

[3]

(which is one of the main reasons I work here!)

[4]

Forethought has 9 people, of whom 6 are researchers. If I had to come up with some estimate of how many people overall (not just at Forethought) are devoting a significant amount of attention to this kind of work, I might go with a number like 50. Of course, because so much of this work is interdisciplinary and preparadigmatic, there’s no shared language/context and I’m quite likely to be missing people (and per my own post, it’s pretty easy to get a skewed sense of how neglected some area is). (OTOH I also think the disjointedness of the field hurts our ability to collectively understand this space.)

Overall, I don’t feel reassured that the rest of the world “has it covered”. And at least in the broader community around EA / existential risk, I’m pretty confident that few people are devoting any real attention to this area.

[5]

While I’m at it: you might also be interested in applying for one of the open roles at ACS Research (topics listed are Gradual Disempowerment, AI/LLM psychology/sociology, and agent foundations).

[6]

I wanted to quickly list some examples somewhere, and this seemed like a fine place to do that. So here are sketches of some threads that come to mind:

(I’m not trying to be exhaustive — basically just trying to quickly share a sample — and this is obviously filtered through my interests & taste [a])

-- How can we get our civilization to be in a good position by the time various critical choices are being made? Should we be working towards some kind of collective deliberation process, and what would that look like? Are there stable waypoint-worlds that we'd be excited to work towards? [Assorted references: paretotopia, long reflection stuff, various things on AI for epistemics & coordination, e.g. here]

-- What might ~distributed (or otherwise not-classical-agent-shaped) powerful AI systems look like? How does this map on to / interact with the rest of the threat/strategic landscape? (Or: which kinds of systems should we expect to see by default?) [See e.g. writing on hierarchical/scale-free agency]

-- Is it in fact the case that states will stop protecting their citizens’ interests as (or if) automation means they’re no longer “incentivized” to invest in a happy etc. labor force? (And what can/should we do if so?) [Related]

-- How should we plan for / think about worlds with digital minds that might deserve moral consideration? When might we need to make certain key decisions? Will we be able to find sources of signal about what is good for the relevant systems that we trust are connected to the thing that matters? (And also stuff like: what might this issue do to our political landscape?) [See e.g. this, this, and this]

-- How would AI-enabled coups actually play out? (What about things that look less like coups?) [ref]

-- Which specific “pre-ASI” technologies might be a big deal, in which worlds, how, ...?

-- More on how coordination tech (e.g. structured transparency stuff, credible commitments) could go really wrong, which technologies might be especially risky here, etc.

-- What might it look like for institutions/systems/entities that are vastly more powerful than us to actually interact with us in healthy (virtuous?) ways?

-- If AI systems get deeply integrated into the market etc., what would that actually look like, how would that play out? [E.g. more stuff like frictionless bargaining, or how things could go unevenly, or cascading risk stuff, perhaps.]

[a] If I try to channel others at Forethought, other things probably become more salient. E.g. how acausal trade stuff might matter, more on modeling dynamics related to automation or the intelligence explosion, exploring how we might in fact try to speed up good automation of moral philosophy, more on governance of space resources, more on safeguarding some core of liberal democracy, etc.

[7]

Sometimes there might be too many comments, I suppose:

[8]

One important dynamic here is picking up “tacit” skills, like underlying thinking/reasoning patterns. As a quick example, I’ve occasionally found myself copying a mental/collaborative move (often subconsciously) that I’d appreciated before.

[9]

Part of my model here is that my brain is often following the “easiest” salient paths, and that reasoning about stuff like radically new kinds of technology, what the situation might be like for animals in totally transformed worlds, what-the-hell-is-agency-and-do-we-care, etc. is hard. So if I don’t immerse myself in an environment in which those kinds of questions are default, my focus will slip away towards simpler or familiar topics.

[10]

Or maybe “realness”? (Tbh I’ll take any opportunity to link this post)

[11]

As an aside: I’d really like to see more people try to form their own ~worldviews, particularly trying to make them more coherent/holistic. Because the space is extremely raw and developing pretty quickly (so basically no one has the time or conceptual tools to fit all the parts together on their own), I think large chunks of the work here rest on the same shaky foundations, which I want to see tested / corrected / supported with others. I also think this is good for actually noticing the gaps.

[12]

[13]

a call to adventure? (another post I love linking to)

[14]

which tbh might be underemphasized there; the opportunity to work in person from a nice office is a game-changer for some

[15]

In the past I’ve been overly concerned about what might not be in scope. Because hesitation around this felt distracting to me, I’ve got an agreement with Max right now that I’ll just focus on whatever seems important and he can flag things to me if he notices that I’m going too far out / account for this retrospectively. (So far I haven't hit that limit.)

[16]

(This was called “Explorethought”. There’s an abundance of puns at Forethought; do with that information what you will.)

[17]

I like this aspect a lot, fwiw. (Especially since it doesn’t end up feeling that people are out to “win” arguments or put down others’ views.)

(Once in a while I get reminders of how weird Forethought’s culture can seem from the outside, e.g. when I remember that many (most?) people would hesitate to say they strongly disagree with a more senior researcher's doc. Meanwhile I’ve been leaving comments like “This breakdown feels pretty fake to me...” or “I think this whole proposal could only really work in some extreme/paradigmatic scenarios, and in those worlds it feels like other parts of the setup wouldn’t hold...”)

[18]

As one example, near the start of my tenure at Forethought, I ended up spending a while on a project that I now think was pretty misguided (an ITN BOTEC comparing this area of work with technical AI safety), I think partly because I hadn’t properly synced up with someone I was working with.

(Although the experience itself may have been useful for me, and it’s one of the things that fed into this post on pitfalls in ITN BOTECs.)

[19]

If you’re interested, see some notes on this here.

[20]

Related themes here, IIRC: Fixation and denial (Meaningness)

[21]

Although I failed to explain it to some other people the other day, so I need some rescuing here

MaxDalton @ 2025-10-15T09:32 (+18)

Thanks for writing this up Lizka! I agree with most of it, including most of the ways I'd like us to be different.

Flagging some disagreements:

- I feel like "smooth working together on projects" is pretty good on average: I agree with the example you give as a mostly-failed collab, though I think it was ultimately OK in that it resulted in your ITN post. But I think that there have been a lot of productive co-authored papers (I think most of our pieces are co-authored, and most of those collabs have gone well.)

- I also feel like "give each other lots of feedback" is generally pretty good: weekly pretty detailed feedback between managers, weekly commenting on work-in-progress drafts, 360 feedback every retreat. I think we should add in a monthly 360 though, I've been meaning to do that for a while. In fact, I'd maybe emphasize that if people don't want a fair amount of feedback, it might not be a great fit.

+1 to your other criticisms though, and I hope that this hiring round can help us bring in even more perspectives!

Other things I'd emphasize:

Impact focus: We have a culture of discussing research prioritization, and we all care about our work having impact. I think this is contestable and could be bad for some people: if you think you do your best work in a purely curiosity-driven way, Forethought might not be ideal.

Also, Stefan Torges recently joined to help make sure that research papers lead to action, e.g. by engaging with AI companies to make sure they introduce mitigations, and developing policy ideas. I hope that this makes it easier for researchers to focus on research, while also making sure that our research leads to some real difference. (To be clear, we're early-days figuring this out, and we might not be able to find a way to get this to work.)

+1 to just really enjoying working with this set of people, too!

Also, Fin and Mia just put out a podcast about Mia's take on what it's like to work at Forethought, in case people want another personal perspective!

Mia Taylor @ 2025-10-15T19:00 (+14)

[Cross-posted from LW]

I joined Forethought about six weeks ago as a research fellow. Here's a list of some stuff I like about Forethought and some stuff that I don't like about Forethought. Note that I wrote this before reading OP closely.

Things I like:

- Forethought gives me significant independence to develop my own views and strongly encourages me to prioritize work that genuinely excites me intellectually and which I (inside view) think is very important.

- A lot of work is very social. For example, a common form of work is for two people to stand in front of a whiteboard and try to make sense of a confusing issue together.

- Everyone is really kind while also being unafraid to point out disagreements directly.

- We have great feedback culture. Max Dalton (manager of most people at Forethought, including me) proactively seeks out feedback from us and seems to take it seriously. He also gives me weekly, systematic feedback about stuff he thinks I did well that week and how he thinks I can improve. I expect this to be great for my growth.

Things I don't like:

- We're doing weird, speculative, philosophy-flavored work. It's plausibly important and high-leverage (which is why I’m doing it), but sometimes it feels like we're just writing in Google Docs with unclear prospects of getting anywhere (I do think that leadership is aware of this and trying to address it). I used to do empirical research, where I got more immediate feedback from reality on some aspects of the quality of my work, and sometimes I miss that.

- I worry we're a bit of an echo chamber—a set of people with pretty weird and highly correlated views of how the future will go. (I’d be excited for people who disagree with the Forethought consensus to apply!)

- I'm the only full-time Forethought person working from the Bay, which is a bit lonely. So far I've been co-located with co-workers for most of my time here (because I traveled to Oxford and some of them traveled to the Bay), but I don't expect that to continue long-term. If you don’t want to move to Oxford, that’s something to consider.

cb @ 2025-10-14T14:28 (+11)

(Meta: I really liked this more personal, idiosyncratic kind of "apply here" post!)

Could you say a bit more about the "set of worldviews & perspectives" represented on Forethought, and in which ways you'd like it to be broader?

Lizka @ 2025-10-14T23:07 (+9)

Quick sketch of what I mean (and again I think others at Forethought may disagree with me):

- I think most of the work that gets done at Forethought builds primarily on top of conceptual models that are at least in significant part ~deferring to a fairly narrow cluster of AI worldviews/paradigms (maybe roughly in the direction of what Joe Carlsmith/Buck/Ryan have written about)

- (To be clear, I think this probably doesn't cover everyone, and even when it does, there's also work that does this more/less, and some explicit poking at these worldviews, etc.)

- So in general I think I'd prefer the AI worldviews/deferral to be less ~correlated. On the deferral point — I'm a bit worried that the resulting aggregate models don't hold together well sometimes, e.g. because it's hard to realize you're not actually on board with some further-in-the-background assumptions being made for the conceptual models you want to pull in.

- And I'd also just like to see more work based on other worldviews, even just ones that complicate the paradigmatic scenarios / try to unpack the abstractions to see if some of the stuff that's been simplified away is blinding us to important complications (or possibilities).[1]

- I think people at Forethought often have some underlying ~welfarist frames & intuitions — and maybe more generally a tendency to model a bunch of things via something like utility functions — as opposed to thinking via frames more towards the virtue/rights/ethical-relationships/patterns/... direction (or in terms of e.g. complex systems)

- We're not doing e.g. formal empirical experiments, I think (although that could also be fine given this area of work, just listing as it pops into my head)

- There's some fuzzy pattern in the core ways in which most(?) people at Forethought seem to naturally think, or in how they prefer to have research discussions, IMO? I notice that the types of conversations (and collaborations) I have with some other people go fairly differently, and this leads me to different places.

- To roughly gesture at this, in my experience Forethought tends broadly more towards a mode like "read/think -> write a reasonably coherent doc -> get comments from various people -> write new docs / sometimes discuss in Slack or at whiteboards (but often with a particular topic in mind...)", I think? (Vs things like trying to map more stuff out/think through really fuzzy thoughts in active collaboration, having some long topical-but-not-too-structured conversations that end up somewhere unexpected, "let's try to make a bunch of predictions in a row to rapidly iterate on our models," etc.)

- (But this probably varies a decent amount between people, and might be context-dependent, etc.)

- IIRC many people have more expertise on stuff like analytic philosophy, maybe something like econ-style modeling, and the EA flavor of generalist research than e.g. ML/physics/cognitive science/whatever, or maybe hands-on policy/industry/tech work, or e.g. history/culture... And there are various similarities in people's cultural/social backgrounds. (I don't actually remember everyone's backgrounds, though, and think it's easy to overindex on this / weigh it too heavily. But I'd be surprised if that doesn't affect things somewhat.)

I also want to caveat that:

- (i) I'm not trying to be exhaustive here, just listing what's salient, and

- (ii) in general it'll be harder for me to name things that also strongly describe me (although I'm trying, to some degree), especially as I'm just quickly listing stuff and not thinking too hard.

(And thanks for the nice meta note!)

[1]

I've been struggling to articulate this well, but I've recently been feeling like, for instance, proposals on making deals with "early [potential] schemers" implicitly(?) rely on a bunch of assumptions about the anatomy of AI entities we'd get at relevant stages.

More generally I've been feeling pretty iffy about using game-theoretic reasoning about "AIs" (as in "they'll be incentivized to..." or similar) because I sort of expect it to fail in ways that are somewhat similar to what one gets if one tries to do this with states or large bureaucracies or something -- iirc the fourth paper here discussed this kind of thing, although in general there's a lot of content on this. Similar stuff on e.g. reasoning about the "goals" etc. of AI entities at different points in time without clarifying a bunch of background assumptions (related, iirc).

MaxDalton @ 2025-10-15T09:17 (+8)

Thanks for writing the post and this comment, Lizka!

~deferring to a fairly narrow cluster of AI worldviews/paradigms (maybe roughly in the direction of what Joe Carlsmith/Buck/Ryan have written about)

I agree that most of Forethought (apart from you!) have views that are somewhat similar to Joe/Buck/Ryan's, but I think that's mostly not via deferral?

+1 to wanting people who can explore other perspectives, like Gradual Disempowerment, coalitional agency, AI personas, etc. And the stuff that you've been exploring!

I also agree that there's some default more welfarist / consequentialist frame, though I think often we don't actually endorse this on reflection. Also agree that there's some shared thinking styles, though I think there's a bit more diversity in training (we have people who majored in history, CS, have done empirical ML work, etc).

Also maybe general note, that on many of the axes you're describing you are adding some of the diversity that you want, so Forethought-as-a-whole is a bit more diverse on these axes than Forethought-minus-Lizka.

OscarD🔸 @ 2025-10-15T00:31 (+6)

I think I agree maybe ~80%. My main reservation (although quite possibly we agree here) is that if Forethought hired e.g. the 'AI as a normal technology' people, or anyone with equivalently different baseline assumptions and ways of thinking to most of Forethought, I think that would be pretty frustrating and unproductive. (That said, I think bringing people like that in for a week or so might be great, to drill down into cruxes and download each other's world models more.) I think there is something great about having lots of foundational things in common with the people you work closely with.

But I agree that having more people who share some basic prerequisites of thinking ASI is possible, likely to come this century, being somewhat longtermist and cosmopolitan and altruistic, etc, but disagree a lot on particular topics like AI timelines and threat models and research approaches and so forth can be pretty useful.

Lizka @ 2025-10-15T03:49 (+6)

Yeah, I guess I don't want to say that it'd be better if the team had people who are (already) strongly attached to various specific perspectives (like the "AI as a normal technology" worldview --- maybe especially that one?[1]). And I agree that having shared foundations is useful / constantly relitigating foundational issues would be frustrating. I also really do think the points I listed under "who I think would be a good fit" — willingness to try on and ditch conceptual models, high openness without losing track of taste, & flexibility — matter, and probably clash somewhat with central examples of "person attached to a specific perspective."

= rambly comment, written quickly, sorry! =

But in my opinion we should not just all (always) be going off of some central AI-safety-style worldviews. And I think that some of the divergence I would like to see more of could go pretty deep - e.g. possibly somewhere in the grey area between what you listed as "basic prerequisites" and "particular topics like AI timelines...". (As one example, I think accepting terminology or the way people in this space normally talk about stuff like "alignment" or "an AI" might basically bake in a bunch of assumptions that I would like Forethought's work to not always rely on.)

One way to get closer to that might be to just defer less or more carefully, maybe. And another is to have a team that includes people who better understand rarer-in-this-space perspectives, which diverge earlier on (or people who are by default inclined to thinking about this stuff in ways that are different from others' defaults), as this could help us start noticing assumptions we didn't even realize we were making, translate between frames, etc.

So maybe my view is that I'd like (1) more ~independent worldview formation/exploration to be going on, and (2) the (soft) deferral that is happening (because some deferral feels basically inevitable) to be less overlapping.

(I expect we don't really disagree, but still hope this helps to clarify things. And also, people at Forethought might still disagree with me.)

- ^

In particular:

If this perspective involves a strong belief that AI will not change the world much, then IMO that's just one of the (few?) things that are ~fully out of scope for Forethought. I.e. my guess is that projects with that as a foundational assumption wouldn't really make much sense to do here. (Although IMO even if, say, I believed that this conclusion was likely right, I might nevertheless be a good fit for Forethought if I were willing to view my work as a bet on the worlds in which AI is transformative.)

But I don't really remember what the "AI as normal.." position is, and could imagine that it's somewhat different — e.g. more in the direction of "automation is the wrong frame for understanding the most likely scenarios" / something like this. In that case my take would be that someone exploring this at Forethought could make sense (haven't thought about this one much), and generally being willing to consider this perspective at least seems good, but I'd still be less excited about people who'd come with the explicit goal of pursuing that worldview & no intention of updating or whatever.

--

(Obviously if the "AI will not be a big deal" view is correct, I'd want us to be able to come to that conclusion -- and change Forethought's mission or something. So I wouldn't e.g. avoid interacting with this view or its proponents, and agree that e.g. inviting people with this POV as visitors could be great.)

William_MacAskill @ 2025-10-16T18:26 (+4)

If this perspective involves a strong belief that AI will not change the world much, then IMO that's just one of the (few?) things that are ~fully out of scope for Forethought

I disagree with this. There would need to be some other reason for why they should work at Forethought rather than elsewhere, but there are plausible answers to that — e.g. they work on space governance, or they want to write up why they think AI won't change the world much and engage with the counterarguments.

Tax Geek @ 2025-10-16T10:40 (+2)

On the "AI as normal technology" perspective - I don't think it involves a strong belief that AI won't change the world much. The authors restate their thesis in a later post:

There is a long causal chain between AI capability increases and societal impact. Benefits and risks are realized when AI is deployed, not when it is developed. This gives us (individuals, organizations, institutions, policymakers) many points of leverage for shaping those impacts. So we don’t have to fret as much about the speed of capability development; our efforts should focus more on the deployment stage both from the perspective of realizing AI’s benefits and responding to risks. All this is not just true of today’s AI, but even in the face of hypothetical developments such as self-improvement in AI capabilities. Many of the limits to the power of AI systems are (and should be) external to those systems, so that they cannot be overcome simply by having AI go off and improve its own technical design.

The idea of focusing more on the deployment stage seems pretty consistent with Will MacAskill's latest forum post about making the transition to a post-AGI society go well. There are other aspects of the "AI as normal technology" worldview that I expect will conflict more with Forethought's, but I'm not sure that conflict would necessarily be frustrating and unproductive - as you say, it might depend on the person's characteristics like openness and willingness to update, etc.

OscarD🔸 @ 2025-10-15T18:34 (+2)

Nice, yes I think we roughly agree! (Though maybe you are nobler than me in terms of finding a broader range of views provocatively plausible and productive to engage with.)

William_MacAskill @ 2025-10-16T18:23 (+5)

I can't speak to the "AI as a normal technology" people in particular, but a shortlist I created of people I'd be very excited about includes someone who just doesn't buy at all that AI will drive an intelligence explosion or explosive growth.

I think there are lots of types of people where it wouldn't be a great fit, though. E.g. continental philosophers; at least some of the "sociotechnical" AI folks; more mainstream academics who are focused on academic publishing. And if you're just focused on AI alignment, probably you'll get more at a different org than you would at Forethought.

More generally, I'm particularly keen on situations where V(X, Forethought team) is much greater than V(X) + V(Forethought team), either because there are synergies between X and the team, or because X is currently unable to do the most valuable work they could in any of the other jobs they could be in.

rosehadshar @ 2025-10-15T12:38 (+10)

Thanks Lizka!

Some misc personal reflections:

- Working at Forethought has been my favourite job ever, by a decent margin

- I spent a couple of years doing AI governance research independently/collaborating with others in an ad hoc way before joining Forethought. I think the quality of my work has been way higher since joining (because I've been working on more important questions than I was able to make headway on solo), and it's also been just a huge win in terms of productivity and attention (the costs of tracking my time, hustling for new projects, managing competing projects, etc. were pretty huge for me and made it really hard to do proper thinking)

One minor addition from me on why/not to work at Forethought: I think the people working at Forethought care pretty seriously about things going well, and are really trying to make a contribution.

I think this is both a really special strength, and something that has pitfalls:

- It's a privilege to work with people who care in this way, and it cuts a lot of the crap that you'd get in organisations that were more oriented towards short term outcomes, status, etc

- On the other hand, I sometimes worry about Forethought leaning a bit too heavily on EA-style 'do what's most impactful' vibes. I think this can kill curiosity, and also easily degrades into trying to try/people trying to meet their own psychological needs to make an impact instead of really staring the reality we seem to be living in in the face.

- Other people at Forethought think that we're not leaning into this enough though: most work on AI futures stuff is low quality and won't matter at all, and it's very easy to fill all your time with interesting and pointless stuff. I agree on those failure modes, but disagree about where the right place on the spectrum is.

And then a few notes on the sorts of people I'd be really excited to have apply:

- People who are thinking for themselves and building their own models of what's going on. I think this is rare and sorely needed. Some particular sub-groups I want to call out:

- Really smart independent thinkers who want to work on AI macrostrategy stuff but haven't yet had a lot of surface area with the topic or done a lot of research. I think Forethought could be a great place for someone to soak up a lot of the existing thinking on these topics, en route to developing their own agenda.

- Researchers with deep world models on the AI stuff, who think that Forethought is kind of wrong/a lot less good than it could be. The high-level aspiration for Forethought is something like, get the world to sensibly navigate the transition to superintelligence. We are currently 6 researchers, with fairly correlated views: of course we are totally failing to achieve this aspiration right now. But it's a good aspiration, and to the extent that someone has views on how to better address it, I'd love for them to apply.

- If I got to choose one type of researcher to hire, it would be this one.

- My hope would be that for many people in this category, Forethought would be able to 'get out of the way': give the person free rein, not entangle them in organisational stuff where they don't want that, and engage with them intellectually to the extent that it's mutually productive.

- I agree with Lizka that people who think Forethought sucks probably won't want to apply/get hired/enjoy working at Forethought.

- People who are working on this stuff already, but hamstrung by not having [a salary/colleagues/an institutional home/enough freedom for research at their current place of work/a manager to support them/etc]. I'd hope that Forethought could be a big win for people in this position, and allow them to unlock a bunch more of their potential.

William_MacAskill @ 2025-10-16T18:13 (+8)

Thanks for writing this, Lizka!

Some misc comments from me:

- I have the worry that people will see Forethought as "the Will MacAskill org", at least to some extent, and therefore think you've got to share my worldview to join. So I want to discourage that impression! There's lots of healthy disagreement within the team, and we try to actively encourage disagreement. (Salient examples include disagreement around: AI takeover risk; whether the better futures perspective is totally off-base or not; moral realism / antirealism; how much and what work can get punted until a later date; AI moratoria / pauses; whether deals with AIs make sense; rights for AIs; gradual disempowerment).

- I think from the outside it's probably not transparent just how involved some research affiliates or other collaborators are, in particular Toby Ord, Owen Cotton-Barratt, and Lukas Finnveden.

- I'd in particular be really excited for people who are deep in the empirical nitty-gritty — think AI2027 and the deepest criticisms of that; or gwern; or Carl Shulman; or Vaclav Smil. This is something I wish I had more skill and practice in, and I think it's generally a bit of a gap in the team.

- While at Forethought, I've been happier in my work than I have in any other job. That's a mix of: getting a lot of freedom to just focus on making intellectual progress rather than various forms of jumping through hoops; the (importance)*(intrinsic interestingness) of the subject matter; the quality of the team; the balance of work ethic and compassion among people — it really feels like everyone has each other's back; and things just working and generally being low-drama.

finm @ 2025-10-15T17:46 (+8)

Thanks for writing this! Some personal thoughts:

- I have a fair amount of research latitude, and I'm working at an org with a broad and flexible remit to try to identify and work on the most important questions. This makes the Hamming question — what are the most important questions in your field and why aren't you working on them — hard to avoid! This is uncomfortable, because if you don't feel like you're doing useful work, you're out of excuses. But it's also very motivating.

- There is an 'agenda' in the sense that there's a list of questions and directions with some consensus that someone at Forethought should work on them. But there's a palpable sense that the bottleneck to progress isn't just more researchers to shlep on with writing up ideas, so much as more people with crisp, opinionated takes about what's important, who can defend their views in good faith.

- One possible drawback is that Forethought is not a place where you learn well-scoped skills or knowledge by default, because as a researcher you are not being trained for a particular career track (like junior → senior SWE) or taught a course (like doing a PhD). But there is support and time for self-directed learning, and I've learned a lot of tacit knowledge about how to do this kind of research especially from the more senior researchers.

- I would personally appreciate people applying with research or industry expertise in fields like law, law and economics, physics, polsci, and ML itself. You should not hold off on applying because you don't feel like you belong to the LessWrong/EA/AI safety sphere, and I'm worried that Forethought culture becomes too insular in that respect (currently it's not much of a concern).

- If you're considering applying, I recorded a podcast with Mia Taylor, who recently joined as a researcher!

Tom_Davidson @ 2025-10-16T17:45 (+4)

I also work at Forethought!

I agree with a lot of this, but wanted to flag that I would be very excited for ppl doing blue skies research to apply and want Forethought to be a place that's good for that. We want to work on high impact research and understand that sometimes means doing things where it's unclear up front if it will bear fruit.

SummaryBot @ 2025-10-14T15:54 (+2)

Executive summary: A candid, personal case for (maybe) applying to Forethought’s research roles by 1 November: the org’s mission is to navigate the transition to superintelligent AI by tackling underexplored, pre-paradigmatic questions; it offers a supportive, high-disagreement, philosophy-friendly environment with solid ops and management, but it’s small, not a fit for fully blue-sky independence, and the author notes cultural/worldview gaps they want to broaden (tone: invitational and self-aware rather than hard-sell; personal reflection).

Key points:

- Roles and incentives: Forethought is hiring Senior Research Fellows (lead your own agenda) and Research Fellows (develop views while collaborating), plus possible 3–12 month visiting fellows; referral bonus up to £10k (Senior) / £5k (Research), and the application is short with a Nov 1 deadline.

- Why Forethought: Its niche is neglected, concept-forming work on AI macrostrategy (e.g., AI-enabled coups, pathways to flourishing, intelligence explosion dynamics, existential security tools), aiming to surface questions others miss and build clearer conceptual models.

- Research environment: Benefits include close collaboration, fast high-context feedback, protection from distortive incentives (status games, quick-karma topics), strong operations, and hands-on management that helps convert messy ideas into publishable work and sustain motivation.

- Tradeoffs and culture: Expect some institutional asks (feedback on drafts, org decisions), not total freedom in topic choice, and a culture of open disagreement and heavy philosophy; author thinks the team’s worldview range is too narrow and collaboration/feedback timing could improve.

- Who might fit: No specific credentials required; prized traits are curiosity about AI, comfort with pre-paradigmatic confusion, willingness to try/ditch frames, skepticism plus openness, flexibility across abstraction levels, and clear written communication.

- Epistemic stance: This is a personal take, not an institutional pitch; the author enjoys the team and day-to-day atmosphere, acknowledges biases, and frames Forethought as a strong option for some—but not all—researchers interested in shaping society’s path through rapid AI progress.

This comment was auto-generated by the EA Forum Team. Feel free to point out issues with this summary by replying to the comment, and contact us if you have feedback.

shubhi @ 2025-10-30T11:26 (+1)

Hi Forethought team!

I have a question, would be great if you could answer.

From my understanding, Forethought focuses on researching how to navigate and mitigate potential threats that AI may pose in the future. I am curious to know: does Forethought also work on AI models directly (such as training or evaluating the models, or on models' security aspects) when necessary, to understand more deeply what risks AI can pose and how they can be solved?