New Open Philanthropy Grantmaking Program: Forecasting

By Coefficient Giving @ 2024-02-19T23:27 (+92)

This is a linkpost to https://www.openphilanthropy.org/research/new-grantmaking-program-forecasting/

Written by Benjamin Tereick

Edit: several comments here question the value of forecasting as a philanthropic cause — see this comment for a reply.

We are happy to announce that we have added forecasting as an official grantmaking focus area. As of January 2024, the forecasting team comprises two full-time employees: myself and Javier Prieto. In August 2023, I joined Open Phil to lead our forecasting grantmaking and internal processes. Prior to that, I worked on forecasts of existential risk and the long-term future at the Global Priorities Institute. Javier recently joined the forecasting team in a full-time capacity from Luke Muehlhauser’s AI governance team, which was previously responsible for our forecasting grantmaking.

While we are just now launching a dedicated cause area, Open Phil has long endorsed forecasting as an important way of improving the epistemic foundations of our decisions and the decisions of others. We have made several grants to support the forecasting community in the last few years, e.g., to Metaculus, the Forecasting Research Institute, and ARLIS. Moreover, since the launch of Open Phil, grantmakers have often made predictions about core outcomes for grants they approve.

Now with increased staff capacity, the forecasting team wants to build on this work. Our main goal is to help realize the promise of forecasting as a way to improve high-stakes decisions, as outlined in our focus area description. We are excited both about projects aiming to increase the adoption rate of forecasting as a tool by relevant decision-makers, and about projects that provide accurate forecasts on questions that could plausibly influence the choices of these decision-makers. We are interested in such work across both of our portfolios: Global Health and Wellbeing and Global Catastrophic Risks. [1]

We are as of yet uncertain about the most promising type of project in the forecasting focus area, and we will likely fund a variety of different approaches. We will also continue our commitment to forecasting research and to the general support of the forecasting community, as we consider both to be prerequisites for high-impact forecasting. Supported by other Open Phil researchers, we plan to continue exploring the most plausible theories of change for forecasting. I aim to regularly update the forecasting community on the development of our thinking.

Besides grantmaking, the forecasting team is also responsible for Open Phil’s internal forecasting processes, and for managing forecasting services for Open Phil staff. This part of our work will be less public, but we will occasionally publish insights from our own processes, like Javier’s 2022 report on the accuracy of our internal forecasts.

[1] It should be noted that administratively, the forecasting team is part of the Global Catastrophic Risks portfolio, and historically, our forecasting work has had closer links to that part of the organization.

Grayden @ 2024-02-21T06:53 (+101)

I think forecasting is attractive to many people in EA like myself because EA skews towards curious people from STEM backgrounds who like games. However, I’m yet to see a robust case for it being an effective use of charitable funds (if there is, please point me to it). I’m worried we are not being objective enough and trying to find the facts that support the conclusion rather than the other way round.

MWStory @ 2024-02-23T06:53 (+41)

I think the fact that forecasting is a popular hobby is probably pretty distorting of priorities.

There are now thousands of EAs whose experience of forecasting is participating in fun competitions which have been optimised for their enjoyment. This mass of opinion and consequent discourse has very little connection to what should be the ultimate end goal of forecasting: providing useful information to decision makers.

For example, I’d love to know how INFER is going. Are the forecasts relevant to decision makers? Who reads their reports? How well do people figuring out what to forecast understand the range of policy options available and prioritise forecasts to inform them? Is there regular contact and a trusting relationship at senior executive level? Would it help more if the forecasting were faster, or broader in scope?

These are all very important questions but are invisible to forecaster participants so end up not being talked about much.

Nathan Young @ 2024-03-06T07:07 (+2)

Yeah, it seems similar to other areas where the discussion around the cause area and the cause area itself may be quite different (see also the disparity in resources vs. discussion around global health vs. AI).

Habryka @ 2024-02-29T05:24 (+27)

The interest within the EA community in forecasting long predates the existence of any gamified forecasting platforms, so it seems pretty unlikely that at a high level the EA community is primarily interested because it's a fun game (this doesn't prove more recent interest isn't driven by the gamified platforms, though my sense is that the current level of relative interest seems similar to where it was a decade ago, so it doesn't feel like it made a huge shift).

Also, AI timelines forecasting work has been highly decision-relevant to a large number of people within the EA community. My guess is it's the single research intervention that has caused the largest shift in altruistic capital allocation in the last few years. There also exists a large number of pretty simple arguments in favor of forecasting work being valuable, which have been made in many places (some links here, also a bunch of Robin Hanson's work on prediction markets).

At a higher level, there are also many instances of new types of derivatives markets increasing efficiency of some market, which would probably also apply to prediction markets.

Ozzie Gooen @ 2024-05-22T23:52 (+2)

FYI, just wrote a small piece on "Higher-order forecasts", which I see as the equivalent to derivatives. https://forum.effectivealtruism.org/posts/PB57prp5kEMDgwJsm/higher-order-forecasts

I agree they can help with efficiency.

Habryka @ 2024-05-23T00:48 (+4)

I feel like the prediction-markets themselves are best modeled as derivative markets. And then you are talking about second-order derivative markets here. But IDK, mostly sounds like semantics.

Ozzie Gooen @ 2024-05-23T01:14 (+4)

Yea, that's a reasonable way of looking at it. Agreed it is just semantics.

As semantics though, my guess is that "nth-order forecasts" will be more intuitive to most people than something like "n-1th order derivatives".

EffectiveAdvocate @ 2024-02-23T19:00 (+24)

I'm considering elaborating on this in a full post, but I will do so quickly here as well: It appears to me that there's potentially a misunderstanding here, leading to unnecessary disagreement.

I think that the nature of forecasting in the context of decision-making within governments and other large institutions is very different from what is typically seen on platforms like Manifold, PolyMarket, or even Metaculus. I agree that these platforms often treat forecasting more as a game or hobby, which is fine, but very different from the kind of questions policymakers want to see answered.

I (and I hope this aligns with OP's vision) would want to see a greater emphasis on advancing forecasting specifically tailored for decision-makers. This focus diverges significantly from the casual or hobbyist approach observed on these platforms. The questions you ask should probably not be public, and they are usually far more boring. In practice, it looks more like an advanced Delphi method than it looks like Manifold Markets. I'm somewhat surprised to see interpretations of this post suggesting a need for more funding in the type of forecasting that is more recreational, which, in my view, is not and should not be a priority.

E: One obvious exception to the dichotomy I describe above is that the more fun forecasting platforms can be a good way of identifying good forecasters.

Mo Putera @ 2024-02-26T17:21 (+5)

Would you count Holden's take here as a robust case for funding forecasting as an effective use of charitable funds?

It's not controversial to say a highly general AI system, such as PASTA, would be momentous. The question is, when (if ever) will such a thing exist?

Over the last few years, a team at Open Philanthropy has investigated this question from multiple angles.

One forecasting method observes that:

- No AI model to date has been even 1% as "big" (in terms of computations performed) as a human brain, and until recently this wouldn't have been affordable - but that will change relatively soon.

- And by the end of this century, it will be affordable to train enormous AI models many times over; to train human-brain-sized models on enormously difficult, expensive tasks; and even perhaps to perform as many computations as have been done "by evolution" (by all animal brains in history to date).

This method's predictions are in line with the latest survey of AI researchers: something like PASTA is more likely than not this century.

A number of other angles have been examined as well.

One challenge for these forecasts: there's no "field of AI forecasting" and no expert consensus comparable to the one around climate change.

It's hard to be confident when the discussions around these topics are small and limited. But I think we should take the "most important century" hypothesis seriously based on what we know now, until and unless a "field of AI forecasting" develops.

This is my own (possibly very naive) interpretation of one motivation behind some of Open Phil's forecasting-related grants.

Actually, maybe it's also useful to just look at the biggest grants from that list:

- $7,993,780 over two years to the Applied Research Laboratory for Intelligence and Security at the University of Maryland, to support the development of two forecasting platforms, in a project led by Dr. Adam Russell. The forecasting platforms will be provided as a resource to help answer questions for policymakers (writeup)

- two grants totaling $6,305,675 over three years to support the Forecasting Research Institute (FRI)’s work on projects to advance the science of forecasting as a tool to improve public policy and reduce existential risk. This includes developing a new modular forecasting platform and conducting research to test different forecasting techniques. This follows our October 2021 support ($275,000) for planning work by FRI Chief Scientist Philip Tetlock, and falls within our work on global catastrophic risks (writeup)

- $3,000,000 to Metaculus to support work to improve its online forecasting platform, which allows forecasters to make predictions about world events. We believe that this work will help to provide more accurate and calibrated forecasts in domains relevant to Open Philanthropy’s work, such as artificial intelligence and biosecurity and pandemic preparedness, and enable organizations and individuals working in those areas to make better decisions. This follows our May 2022 support ($5,500,000) and falls within our work on global catastrophic risks (writeup)

Grayden @ 2024-02-26T19:25 (+2)

Thanks for sharing. It’s a start, but it’s certainly not a proven Theory of Change. For example, Tetlock himself said that nebulous long-term forecasts are hard to do because there’s no feedback loop. Hence, a prediction market on an existential risk will be inherently flawed.

Nathan Young @ 2024-03-06T07:10 (+2)

I don't think that really works. You can get feedback from 5 years in 5 years. Metaculus already has some suggestions as to people who are good 5 year forecasters.

None of the above are prediction markets.

joshcmorrison @ 2024-02-26T15:38 (+5)

Personally, I think specifically forecasting for drug development could be very impactful: Both in the general sense of aligning fields around the probability of success of different approaches (at a range of scales -- very relevant both for scientists and funders) and the more specific regulatory use case (public predictions of safety/efficacy of medications as part of approvals by FDA/EMA etc.)

More broadly, predicting the future is hugely valuable. Insofar as effective altruism aims to achieve consequentialist goals, the greatest weakness of consequentialism is uncertainty about the effects of our actions. Forecasting targets that problem directly. The financial system creates a robust set of incentives to predict future financial outcomes -- trying to use forecasting to build a tool with broader purpose than finance seems like it could be extremely valuable.

I don't really do forecasting myself so I can't speak to the field's practical ability to achieve its goals (though as an outsider I feel optimistic), so perhaps there are practical reasons it might not be a good investment. But overall to me it definitely feels like the right thing to be aiming at.

Vasco Grilo @ 2024-02-22T20:14 (+5)

Thanks for the comment, Grayden. For context, readers may want to check the question post Why is EA so enthusiastic about forecasting?.

Grayden @ 2024-02-23T08:35 (+5)

Thanks for sharing, but nobody on that thread seems to be able to explain it! Most people there, like here, seem very sceptical

Nathan Young @ 2024-03-06T03:26 (+4)

COI - I work in forecasting.

Whether or not forecasting is a good use of funds, good decision-making is probably correlated with impact.

So I'm open to the idea that forecasting hasn't been a good use of funds, but it seems it should be a priori. Forecasting in one sense is predicting how decisions will go. How could that not be a good idea in theory?

More robust cases in practice:

- Forecasters have good track records and are provably good thinkers

- They can red team institutional decisions "what will be the impacts of this"

- In some sense this is similar to research

- Forecasting is becoming a larger part of the discourse and this is probably good. It is much more common to see the Economist, the FT, Matt Yglesias, twitter discourse referencing specific testable predictions

- In making AI policy specifically it seems very valuable to guess progress and guess the impact of changes.

- To me it looks like Epoch and Metaculus do useful work here that people find valuable.

elifland @ 2024-03-08T05:52 (+97)

All views are my own rather than those of any organizations/groups that I’m affiliated with. Trying to share my current views relatively bluntly. Note that I am often cynical about things I’m involved in. Thanks to Adam Binks for feedback.

Edit: See also child comment for clarifications/updates.

Edit 2: I think the grantmaking program has different scope than I was expecting; see this comment by Benjamin for more.

Following some of the skeptical comments here, I figured it might be useful to quickly write up some personal takes on forecasting’s promise and what subareas I’m most excited about (where “forecasting” is defined as things in the vein of "Tetlockian superforecasting" or general prediction markets/platforms, in which questions are often answered by lots of people spending a little time on them, without much incentive to provide deep rationales; edit: I originally defined it as things I would expect to be in the scope of OpenPhil’s program to fund).

- Overall, most forecasting grants that OP has made seem much lower EV than the AI safety grants (I’m not counting grants that seem more AI-y than forecasting-y, e.g. Epoch, and I believe these wouldn’t be covered by the new grantmaking program). In part due to my ASI timelines (~15th percentile ~2027 (edit: originally 10th), median ~late 2030s), I’m most excited about forecasting grants that are closely related to AI, though I’m not super confident that no non-AI related ones are above the bar.

- I generally agree with the view that I’ve heard repeated a few times that EAs significantly overrate forecasting as a cause area, while the rest of the world significantly underrates it.

- I think EAs often overrate superforecasters’ opinions, they’re not magic. A lot of superforecasters aren’t great (at general reasoning, but even at geopolitical forecasting), there’s plenty of variation in quality.

- General quality: Becoming a superforecaster selects for some level of intelligence, open-mindedness, and intuitive forecasting sense among the small group of people who actually make 100 forecasts on GJOpen. There are tons of people (e.g. I’d guess very roughly 30-60% of AI safety full-time employees?) who would become superforecasters if they bothered to put in the time.

- Some background: as I’ve written previously I’m intuitively skeptical of the benefits of large amounts of forecasting practice (i.e. would guess strong diminishing returns).

- Specialties / domain expertise: Contra a caricatured “superforecasters are the best at any forecasting questions” view, consider a grantmaker deciding whether to fund an organization. They are, whether explicitly or implicitly, forecasting a distribution of outcomes for the grant. But I’d guess most would agree that superforecasters would do significantly worse than grantmakers at this “forecasting question”. A similar argument could be made for many intellectual jobs, which could be framed as forecasting. The question of whether superforecasters are relatively better isn’t “Is this task answering a forecasting question?” but rather “What are the specific attributes of this forecasting question?”

- Some people seem to think that the key difference between questions superforecasters are good at and questions smart domain experts are good at is whether the questions are *resolvable* or *short-term*. I tend to think that the main differences are along the axes of *domain-specificity* and *complexity*, though these are of course correlated with the other axes. Superforecasters are selected for being relatively good at short-term, often geopolitical questions.

- As I’ve written previously: It varies based on the question/domain how much domain expertise matters, but ultimately I expect reasonable domain experts to make better forecasts than reasonable generalists in many domains.

- There’s an extreme here where e.g. forecasting what the best chess move is would obviously be better done by chess experts than by superforecasters.

- So if we think of a spectrum from geopolitics to chess, it’s very unclear to me where things like long-term AI forecasts land.

- This intuition seems to be consistent with the lack of quality existing evidence described in Arb’s report (which debunked the “superforecasters beat intelligence experts without classified information” claim!).

- Similarly, I’m skeptical of the straw rationalist view that highly liquid well-run prediction markets would be an insane societal boon, rather than a more moderate-large one (hard to operationalize, hope you get the vibe). See here for related takes. This might change with superhuman AI forecasters though, whose “time” might be more plentiful.

- Historically, OP-funded forecasting platforms (Metaculus, INFER) seem to be underwhelming on publicly observable impact per dollar (in terms of usefulness for important decision-makers, user activity, rationale quality, etc.). Maybe some private influence over decision-makers makes up for it, but I’m pretty skeptical.

- Tbh, it’s not clear that these and other platforms currently provide more value to the world than the opportunity cost of the people who spend time on them. e.g. I was somewhat addicted to Metaculus then later Manifold for a bit and spent more time on these than I would reflectively endorse (though it’s plausible that they were mostly replacing something worse like social media). I resonate with some of the comments on the EA Forum post mentioning that it’s a very nerd-sniping activity; forecasting to move up a leaderboard (esp. w/quick-resolving questions) is quite addicting to me compared to normal work activities.

- I’ve heard arguments that getting superforecasted probabilities on things is good because they’re more legible/credible because they’re “backed by science”. I don’t have an airtight argument against this, but it feels slimy to me due to my beliefs above about superforecaster quality.

- Regarding whether forecasting orgs should try to make money, I’m in favor of pushing in that direction as a signal of actually providing value, though it’s of course a balance re: the incentives there and will depend on the org strategy.

- The types of forecasting grants I’d feel most excited about atm are, roughly ordered, and without a claim that any are above OpenPhil’s GCR bar (and definitely not exhaustive, and biased toward things I’ve thought about recently):

- Making AI products for forecasting/epistemics in the vein of FutureSearch and Elicit. I’m also interested in more lightweight forecasting/epistemic assistants.

- FutureSearch and systems in recent papers are already pretty good at forecasting, and I expect substantial improvements soon with next-gen models.

- I’m excited about making AIs push toward what's true rather than what sounds right at first glance or is pushed by powerful actors.

- However, even if we have good forecasting/epistemics AIs, I’m worried that it won’t convince people of the truth since people are irrational and often variance in their beliefs is explained by gaining status/power, vibes, social circles, etc. It seems especially hard to change people’s minds on very tribal things, which seem correlated with the most important beliefs to change.

- AI friends might actually be more important than AI forecasters for epistemics, but that doesn’t mean AI forecasters are useless.

- I might think/write more about this soon. See also Lukas's Epistemics Project Ideas and ACX on AI for forecasting

- Judgmental forecasting of AI threat models, risks, etc. involving a mix of people who have AI / dangerous domain expertise and/or very strong forecasting track record (>90th percentile superforecaster), ideally as many people as possible who have both. Not sure how helpful it will be but it seems maybe worth more people trying.

- In particular, forecasting that can help inform risk assessment / RSPs seems like a great thing to try. See also discussion of the Delphi technique in the context of AGI risk assessment here. Malcolm Murray at GovAI is running a Delphi study to get estimates of likelihood and impact of various AI risks from experts.

- This is related to a broader class of interventions that might look somewhat like a “structured review process” in which one would take an in-depth threat modeling report and have various people review and contribute their own forecasts in addition to qualitative feedback. My sense is that when superforecasters reviewed Joe Carlsmith’s p(doom) forecast in a similar vein, the result wasn’t that useful, but the exercise could plausibly be more useful with better quality reviews/forecasts. It’s unclear whether this would be a good use of resources above the usual ad-hoc/non-forecasting review process, but it might be worth trying more.

- Forecasting tournaments on AI questions with large prize pools: I think these historically have been meh (e.g. the Metaculus one attracted few forecasters, wasn’t fun to forecast on (for me at least), and I’d guess significantly improved ~no important decisions), but I think it’s plausible things could go better now as AIs are much more capable, there are many more interesting and maybe important things to predict, etc.

- Crafting forecasting questions that are cruxy on threat models / intervention prioritization between folks working on AI safety

- It’s kind of wild that there has been so little success on this front. See frustrations from Alex Turner “I think it's not a coincidence that many of the "canonical alignment ideas" somehow don't make any testable predictions until AI takeoff has begun.” I worry that this will take a bunch of effort and not get very far (see Paul/Eliezer finding only a somewhat related bet re: their takeoff speeds disagreement), but it seems worth giving a more thorough shot with different participants.

- I’m relatively excited about doing things within the AI safety group rather than between this group and others (e.g. superforecasters) because I expect the results might be more actionable for AI safety people. (edit: I got feedback that this bullet was too tribal and I think that might be right. I think that a better distinction might be preferring inclusion of people who've thought deeply about the future of AI, rather than e.g. superforecaster generalists)

I incorporated some snippets of a reflections section from a previous forecasting retrospective above, but there’s a little that I didn’t include if you’re inclined to check it out.

Ozzie Gooen @ 2024-03-09T16:35 (+19)

I feel like I need to reply here, as I'm working in the industry and defend it more.

First, to be clear, I generally agree a lot with Eli on this. But I'm more bullish on epistemic infrastructure than he is.

Here are some quick things I'd flag. I might write a longer post on this issue later.

- I'm similarly unsure about a lot of existing forecasting grants and research. In general, I'm not very excited about most academic-style forecasting research at the moment, and I don't think there are many technical groups at all (maybe ~30 full time equivalents in the field, in organizations that I could see EAs funding, right now?).

- I think that for further funding in this field to be exciting, funders should really work on designing/developing this field to emphasize the very best parts. The current median doesn't seem great to me, but I think the potential has promise, and think that smart funding can really triple-down on the good stuff. I think it's sort of unfair to compare forecasting funding (2024) to AI Safety funding (2024), as the latter has had much more time to become mature. This includes having better ideas for impact and attracting better people. I think that if funders just "funded the median projects", then I'd expect the field to wind up in a similar place to where it is now - but if funders can really optimize, then I'd expect them to be taking a decent-EV risk. (Decent chance of failure, but some chance at us having a much more exciting field in 3-10 years).

- I'd prefer funders focus on "increasing wisdom and intelligence" or "epistemic infrastructure" than on "forecasting specifically". I think that the focus on forecasting is over-limiting. That said, I could see an argument to starting from a forecasting angle, as other interventions in "wisdom and intelligence / epistemic infrastructure" are more speculative.

- If I were deploying $50M here, I'd probably start out by heavily prioritizing prioritization work itself - work to better understand this area and what is exciting within it. (I explain more of this in the wisdom/intelligence post above). I generally think that there's been way too little good investigation and prioritization work in this area.

- Like Eli, I'm much more optimistic about "epistemic work to help EAs" than I am "epistemic work to help all of society", at very least in the short-term. Epistemics/forecasting work requires a lot of marginal costs to help any given population, and I believe that "helping N EAs" is often much more impactful than helping N people from most other groups. (This is almost true by definition, for people of any certain background).

- I'd like to flag that I think that Metaculus/Manifold/Samotsvety/etc forecasting has been valuable for EA decision-making. I'd hate to give this up or de-prioritize this sort of strategy.

- I don't particularly trust EA decision-making right now. It's not that I think I could personally do better, but rather that we are making decisions about really big things, and I think we have a lot of reason for humility. When choosing between "trying to better figure out how to think and what to do" vs. "trying to maximize the global intervention that we currently think is highest-EV," I'm nervous about us ignoring the former and going all-in on the latter. That said, some of the crux might be that I'm less certain about our current marginal AI Safety interventions than I think Eli is.

- Personally, around forecasting, I'm most excited about ambitious, software-heavy proposals. I imagine that AI will be a major part of any compelling story here.

- I'd also quickly flag that around AI Safety - I agree that in some ways AI safety is very promising right now. There seems to have been a ton of great talent brought in recently, so there are some excellent people (at very least) to give funding to. I think it's very unfortunate how small the technical AI safety grantmaking team is at OP. Personally I'd hope that this team could quickly get to 5-30 full time equivalents. However, I don't think this needs to come at the expense of (much) forecasting/epistemics grantmaking capacity.

- I think you can think of a lot of "EA epistemic/evaluation/forecasting work" as "internal tools/research for EA". As such, I'd expect that it could make a lot of sense for us to allocate ~5-30% of our resources to it. Maybe 20% of that would be on the "R&D" to this part - perhaps more if you think this part is unusually exciting due to AI advancements. I personally am very interested in this latter part, but recognize it's a fraction of a fraction of the full EA resources.

elifland @ 2024-03-09T17:41 (+6)

Thanks Ozzie for sharing your thoughts!

A few things I want to clarify up front:

- While I think it's valuable to share thoughts about the value of different types of work candidly, I am very appreciative of both people working on forecasting projects and grantmakers in the space for their work trying to make the world a better place (and am friendly with many of them). As I maybe should have made more obvious, I am myself affiliated with Samotsvety Forecasting, and Sage which has done several forecasting projects. And I'm also doing AI forecasting research atm, though not the type that would be covered under the grantmaking program.

- I'm not trying to claim with significant confidence that this program shouldn't exist. I am trying to share my current views on the value of previous forecasting grants and the areas that seem most promising to me going forward. I'm also open to changing my mind on lots of this!

Thoughts on some of your bullet points:

2. I think that for further funding in this field to be exciting, funders should really work on designing/developing this field to emphasize the very best parts. The current median doesn't seem great to me, but I think the potential has promise, and think that smart funding can really triple-down on the good stuff. I think it's sort of unfair to compare forecasting funding (2024) to AI Safety funding (2024), as the latter has had much more time to become mature. This includes having better ideas for impact and attracting better people. I think that if funders just "funded the median projects", then I'd expect the field to wind up in a similar place to where it is now - but if funders can really optimize, then I'd expect them to be taking a decent-EV risk. (Decent chance of failure, but some chance at us having a much more exciting field in 3-10 years).

I was trying to compare previous OP forecasting funding to previous AI Safety. It's not clear to me how different these were; sure, OP didn't have a forecasting program but AI safety was also very short-staffed. And re: the field maturing idk Tetlock has been doing work on this for a long time, my impression is that AI safety also had very little effort going into it until like mid-late 2010s. I agree that funding of potentially promising exploratory approaches is good though.

3. I'd prefer funders focus on "increasing wisdom and intelligence" or "epistemic infrastructure" than on "forecasting specifically". I think that the focus on forecasting is over-limiting. That said, I could see an argument to starting from a forecasting angle, as other interventions in "wisdom and intelligence / epistemic infrastructure" are more speculative.

Seems reasonable. I did like that post!

4. If I were deploying $50M here, I'd probably start out by heavily prioritizing prioritization work itself - work to better understand this area and what is exciting within it. (I explain more of this in the wisdom/intelligence post above). I generally think that there's been way too little good investigation and prioritization work in this area.

Perhaps, but I think you gain a ton of info from actually trying to do stuff and iterating. I think prioritization work can sometimes seem more intuitively great than it ends up being, relative to the iteration strategy.

6. I'd like to flag that I think that Metaculus/Manifold/Samotsvety/etc forecasting has been valuable for EA decision-making. I'd hate to give this up or de-prioritize this sort of strategy.

I would love for this to be true! Am open to changing mind based on a compelling analysis.

7. I don't particularly trust EA decision-making right now. It's not that I think I could personally do better, but rather that we are making decisions about really big things, and I think we have a lot of reason for humility. When choosing between "trying to better figure out how to think and what to do" vs. "trying to maximize the global intervention that we currently think is highest-EV," I'm nervous about us ignoring the former and going all-in on the latter. That said, some of the crux might be that I'm less certain about our current marginal AI Safety interventions than I think Eli is.

There might be some difference in perceptions of the direct EV of marginal AI Safety interventions. There might also be differences in beliefs in the value of (a) prioritization research vs. (b) trying things out and iterating, as described above (perhaps we disagree on absolute value of both (a) and (b)).

8. Personally, around forecasting, I'm most excited about ambitious, software-heavy proposals. I imagine that AI will be a major part of any compelling story here.

Seems reasonable, though I'd guess we have different views on which ambitious AI-related software-heavy projects.

9. I'd also quickly flag that around AI Safety - I agree that in some ways AI safety is very promising right now. There seems to have been a ton of great talent brought in recently, so there are some excellent people (at very least) to give funding to. I think it's very unfortunate how small the technical AI safety grantmaking team is at OP. Personally I'd hope that this team could quickly get to 5-30 full time equivalents. However, I don't think this needs to come at the expense of (much) forecasting/epistemics grantmaking capacity.

I think you might be understating how fungible OpenPhil's efforts are between AI safety (particularly governance team) and forecasting. Happy to chat in DM if you disagree. Otherwise reasonable point, though you'd ofc still have to do the math to make sure the forecasting program is worth it.

(edit: actually maybe the disagreement is still in the relative value of the work, depending on what you mean by "much" grantmaking capacity)

10. I think you can think of a lot of "EA epistemic/evaluation/forecasting work" as "internal tools/research for EA". As such, I'd expect that it could make a lot of sense for us to allocate ~5-30% of our resources to it. Maybe 20% of that would be on the "R&D" to this part - perhaps more if you think this part is unusually exciting due to AI advancements. I personally am very interested in this latter part, but recognize it's a fraction of a fraction of the full EA resources.

Seems unclear what should count as internal research for EA, e.g. are you counting OP worldview investigation team / AI strategy research in general? And re: AI advancements, it both improves the promise of AI for forecasting/epistemics work but also shortens timelines which points toward direct AI safety technical/gov work.

Ozzie Gooen @ 2024-03-09T18:12 (+6)

Thanks for the replies! Some quick responses.

First, again, overall, I think we generally agree on most of this stuff.

Perhaps, but I think you gain a ton of info from actually trying to do stuff and iterating. I think prioritization work can sometimes seem more intuitively great than it ends up being, relative to the iteration strategy.

I agree to an extent. But I think there are some very profound prioritization questions that haven't been researched much, and that I don't expect us to gain much insight from by experimentation in the next few years. I'd still like us to do experimentation (If I were in charge of a $50Mil fund, I'd start spending it soon, just not as quickly as I would otherwise). For example:

- How promising is it to improve the wisdom/intelligence of EAs vs. others?

- How promising are brain-computer-interfaces vs. rationality training vs. forecasting?

- What is a good strategy to encourage epistemic-helping AI, where philanthropists could have the most impact?

- What kinds of benefits can we generically expect from forecasting/epistemics? How much should we aim for EAs to spend here?

I would love for this to be true! Am open to changing mind based on a compelling analysis.

We might be disagreeing a bit on what the bar for "valuable for EA decision-making" is. I see a lot of forecasting like accounting - it rarely leads to a clear and large decision, but it's good to do, and steers organizations in better directions. I personally rely heavily on prediction markets for key understandings of EA topics, and see that people like Scott Alexander and Zvi seem to. I know less about the inner workings of OP, but the fact that they continue to pay for predictions that are very much for their questions seems like a sign. All that said, I think that ~95%+ of Manifold and a lot of Metaculus is not useful at all.

I think you might be understating how fungible OpenPhil's efforts are between AI safety (particularly governance team) and forecasting

I'm not sure how much to focus on OP's narrow choices here. I found it surprising that Javier went from governance to forecasting, and that previously it was the (very small) governance team that did forecasting. It's possible that if I evaluated the situation, and had control of the situation, I'd recommend that OP moved marginal resources to governance from forecasting. But I'm a lot less interested in this question than I am, "is forecasting competitive with some EA activities, and how can we do it well?"

Seems unclear what should count as internal research for EA, e.g. are you counting OP worldview investigation team / AI strategy research in general?

Yep, I'd count these.

elifland @ 2024-03-11T15:57 (+11)

Just chatted with @Ozzie Gooen about this and will hopefully release audio soon. I probably overstated a few things / gave a false impression of confidence in the parent in a few places (e.g., my tone was probably a little too harsh on non-AI-specific projects); hopefully the audio convo will give a more nuanced sense of my views. I'm also very interested in criticisms of my views and others sharing competing viewpoints.

Also want to emphasize the clarifications from my reply to Ozzie:

- While I think it's valuable to share thoughts about the value of different types of work candidly, I am very appreciative of both people working on forecasting projects and grantmakers in the space for their work trying to make the world a better place (and am friendly with many of them). As I maybe should have made more obvious, I am myself affiliated with Samotsvety Forecasting, and Sage which has done several forecasting projects (and am for the most part more pessimistic about forecasting than others in these groups/orgs). And I'm also doing AI forecasting research atm, though not the type that would be covered under the grantmaking program.

- I'm not trying to claim with significant confidence that this program shouldn't exist. I am trying to share my current views on the value of previous forecasting grants and the areas that seem most promising to me going forward. I'm also open to changing my mind on lots of this!

Ozzie Gooen @ 2024-03-25T20:38 (+6)

Audio/podcast is here:

https://forum.effectivealtruism.org/posts/fsnMDpLHr78XgfWE8/podcast-is-forecasting-a-promising-ea-cause-area

MarcusAbramovitch @ 2024-02-22T06:34 (+46)

I'm in the process of writing up my thoughts on forecasting in general and particularly EA's reverence for forecasting but I feel, similar to @Grayden that forecasting is a game that is nearly perfectly designed to distract EAs from useful things. It's a combination of winning, being right when others are wrong and seemingly useful, all wrapped into a fun game.

I'd like to see tangible benefits to more broad funding of forecasting that seems to be done in the millions and tens of millions of dollars.

I would also be the type of person you would think would be a greater fan of forecasting. I'm the number one forecaster on Manifold and I've made tens of thousands of dollars on Polymarket. But I think we should start to think of forecasting as more of a game that EAs like to play, something like Magic the Gathering that is fun and has some relations to useful things but isn't really useful by itself.

David Mathers @ 2024-02-22T10:41 (+10)

Maybe Open Phil are doing this because they feel like they often attempt to get good forecasts about stuff they care about in the course of trying to make the best grants they can in other areas, and after they have done that enough times, it seemed sensible to just formally declare that forecasting is something they fund. The theory here isn't "developing forecasting as an art is an EA cause because it will improve worldwide epistemics" or whatever, but rather "we, Open Phil, need good forecasts to get funding decisions about other stuff right".

Nick Whitaker @ 2024-02-22T10:52 (+25)

If they mostly care about AI timelines, subsidize some markets on it. Funding platforms and research doesn’t seem particularly useful here (as opposed to much more direct research).

David Mathers @ 2024-02-23T16:27 (+1)

Fair point.

MarcusAbramovitch @ 2024-02-24T19:57 (+6)

At some point, I kinda just want to say "ok, where has the forecasting money gone?", and it seems to have overwhelmingly gone to community forecasting sites like Manifold and Metaculus. I don't see anything like "paying 3 teams of 3 forecasters to compete against each other on some AI timelines questions"

BenjaminTereick @ 2024-02-29T22:15 (+13)

Just confirming that informing our own decisions was part of the motivation for past grants, and I expect it to play an important role for our forecasting grants in the future.

[The forecasting money] seems to have overwhelmingly gone to community forecasting sites like Manifold and Metaculus. I don't see anything like "paying 3 teams of 3 forecasters to compete against each other on some AI timelines questions".

That’s directionally true, but I think “overwhelmingly” isn’t right.

- We did not fund Manifold.

- One of our largest forecasting grants went to FRI, which is not a platform.

- While it’s fair to say that Metaculus is mostly a platform, it also runs externally-funded tournaments, and has a pro forecaster service.

- There were a few grants to more narrowly defined projects.

Most of these are currently not assigned to forecasting as a cause area, but you can find them here (searching for “forecast” in our grants database); see especially those before August 2021. [Update: we have updated the labels, and these grants are now listed here.]

I expect that we’ll make more of these types of grants now that forecasting is a designated area with more capacity.

BenjaminTereick @ 2024-02-29T15:49 (+43)

I’m glad to see the debate on decision relevance in the comments! I think that if we end up considering forecasting a successful focus area in 5-10 years, thinking hard about the value-add to decision-making will likely have played a crucial role in this success.

As for my own view, I do agree that judgmental / subjective probability forecasting hasn’t been as much of a success story as one might have expected about 10 years ago. I also agree that many of the stories people tell about the impact of forecasting naturally raise questions like “so why isn’t this a huge industry now? Why is this project a non-profit?”. We are likely to ask questions of this kind to prospective grantees way more often than grantmakers in other focus areas.

However, I (unsurprisingly) also disagree with the stronger claim that the lack of a large judgmental forecasting industry is conclusive evidence that forecasting doesn’t provide value, and is just an EA hobby horse. While I don’t have capacity to engage in this debate deeply, a few points of rebuttal:

- I do think there have been some successes. For instance, the XPT mentioned in this comment certainly affected the personal beliefs of some people in the EA community, and thereby had an influence on resource allocation and career decisions.

- Forecasting, as such, is a large industry. I’d assign considerable weight to the idea that making judgmental forecasting a success of the kind that model-driven forecasting approaches have been in areas like finance, marketing or sports, is a harder, but solvable task. There might simply be a free-riding problem for investing the resources necessary for figuring out the best way to make it work.

- As a related indirect argument, forecasting has a pretty straightforward a priori case (more accurate information leads to better decision-making), and there are plenty of candidate explanations for why its widespread adoption would have been difficult despite forecasting having the potential to be widely useful (e.g. I’m sympathetic to the points made by MaxRa here). Thus, even after updating on the observation that judgmental forecasting hasn’t conquered the world yet, I don’t think we should assign high confidence that it will forever stay a niche industry.

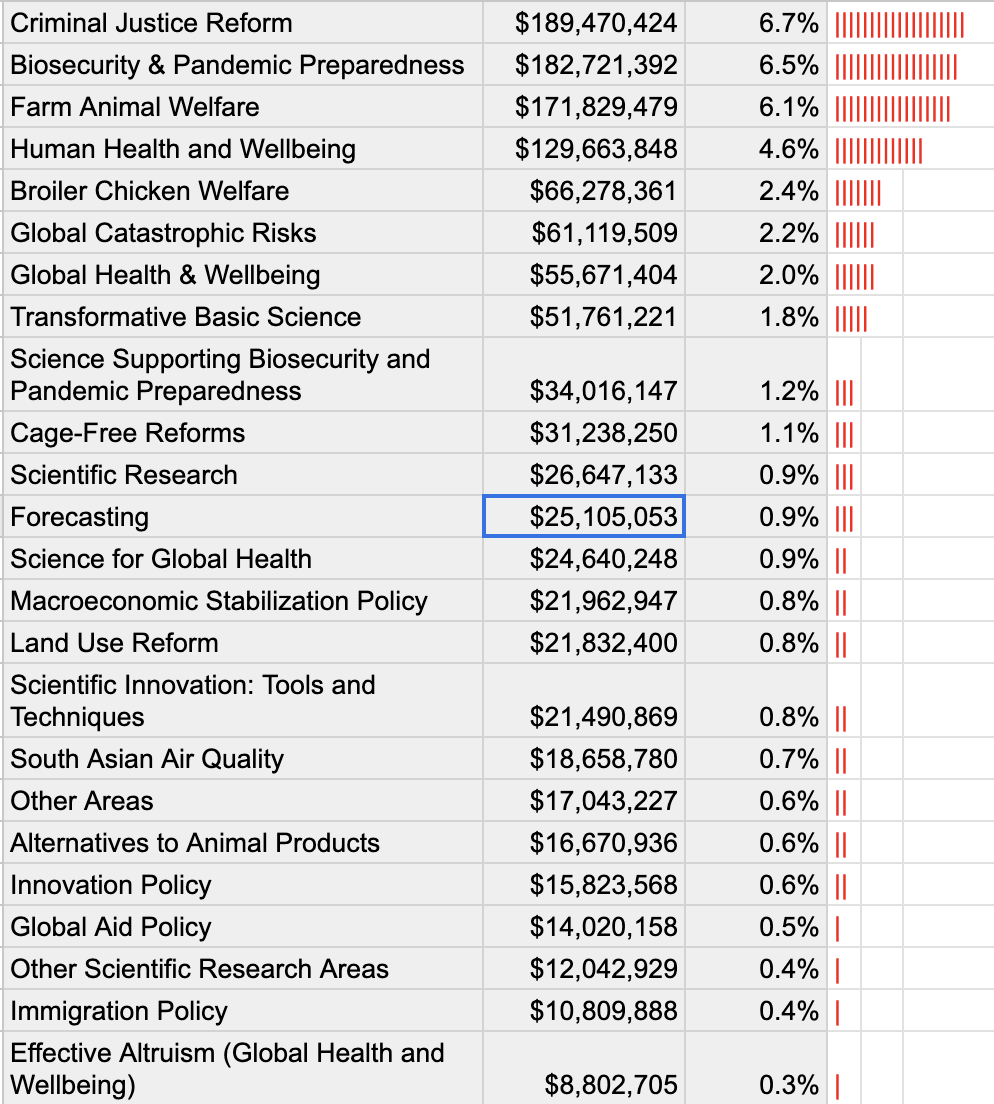

- As others have pointed out, only a fairly small fraction of Open Phil’s spending has gone into forecasting so far (about 1%), and this is unlikely to dramatically change in the future. The forecasting community doesn’t need to become a multi-billion industry to justify that level of spending.

Nathan Young @ 2024-03-06T07:12 (+3)

I do think there have been some successes. For instance, the XPT mentioned in this comment certainly affected the personal beliefs of some people in the EA community, and thereby had an influence on resource allocation and career decisions.

Also it was pretty widely covered in wider discourse.

SuperDuperForecasting @ 2024-02-21T11:22 (+34)

This will be a total waste of time and money unless OpenPhil actually pushes the people it funds towards achieving real-world impact. The typical pattern in the past has been to launch yet another forecasting tournament to try to find better forecasts and forecasters. No one cares, we already know how to do this since at least 2012!

The unsolved problem is translating the research into real-world impact. Does the Forecasting Research Institute have any actual commercial paying clients? What is Metaculus's revenue from actual clients rather than grants? Who are they working with and where is the evidence that they are helping high-stakes decision makers improve their thought processes?

Incidentally, I note that forecasting is not actually successful even within EA at changing anything: superforecasters are generally far more relaxed about Xrisk than the median EA, but has this made any kind of difference to how EA spends its money? It seems very unlikely.

CAISID @ 2024-02-22T08:41 (+35)

At the risk of damaging my networks in EA, I am inclined to tentatively agree with some of your comment. Disclaimer here that I have very little interaction with forecasting for various reasons, so this is more of a general comment than anything else.

I think one of the major problems I see in EA as a whole is a fairly loose definition of 'impact'. Often I see five or six groups using vast sums of money and talent to produce research or predictions that are shared and reviewed between each other and then hosted on the websites but never seem to actually be implemented anywhere. There's no external (of EA) stakeholder participation, no follow-up to check for changed trends, no update on how this affects the real-world outside of EA circles.

I don't always think paying clients are the best measurement system for impact, but I do think there needs to be a much higher focus on bridging the connection between high-quality forecasting and real-world decision-makers.

Obviously this doesn't apply everywhere in EA, and there are lots and lots of exemptions, but I do think your comment has merit.

Devon Fritz @ 2024-02-22T14:37 (+15)

I think one of the major problems I see in EA as a whole is a fairly loose definition of 'impact'.

I find the statement is more precise if you put "longtermism" where "EA" is. Is that your sense as well?

CAISID @ 2024-02-22T14:49 (+4)

I think that's a good modification of my initial point, you may well be right.

Will Aldred @ 2024-02-21T18:02 (+12)

I’m confused about why you think forecasting orgs should be trying to acquire commercial clients.[1] How do you see this as being on the necessary path for forecasting initiatives to reduce x-risk, contribute to positive trajectory change, etc.? Perhaps you could elaborate on what you mean by “real-world impact”?

COI note: I work for Metaculus.

[1] The main exception that comes to mind, for me, is AI labs. But I don’t think you’re talking about AI labs in particular as the commercial clients forecasting orgs should be aiming for?

MWStory @ 2024-02-22T04:54 (+9)

What better test of the claim "we are producing useful/actionable information about the future, and/or developing workable processes for others to do the same" do we have than some of the thousands of organisations whose survival depends on this kind of information being willing to pay for it?

MichaelDickens @ 2024-02-23T05:05 (+4)

IMO if a forecasting org does manage to make money selling predictions to companies, that's a good positive update, but if they fail, that's only a weak negative update—my prior is that the vast majority of companies don't care about getting good predictions even if those predictions would be valuable. (Execs might be exposed as making bad predictions; good predictions should increase the stock price, but individual execs only capture a small % of the upside to the stock price vs. 100% of the downside of looking stupid.)
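A minimal sketch of the asymmetric update described above, using Bayes' rule. All the probabilities are made-up assumptions for illustration, not estimates from the comment: the only structural assumption is that even valuable forecasts rarely find paying customers.

```python
def posterior(prior: float, p_obs_if_valuable: float, p_obs_if_not: float) -> float:
    """Bayes' rule for a binary hypothesis: P(valuable | observation)."""
    numerator = prior * p_obs_if_valuable
    return numerator / (numerator + (1 - prior) * p_obs_if_not)


# Hypothetical numbers, chosen only to illustrate the shape of the argument.
PRIOR = 0.5                 # prior that the org's forecasts are valuable
P_SELL_IF_VALUABLE = 0.10   # most companies don't buy even valuable forecasts
P_SELL_IF_NOT = 0.01        # forecasts without value sell even more rarely

# Observation 1: the org does sell predictions to companies.
after_success = posterior(PRIOR, P_SELL_IF_VALUABLE, P_SELL_IF_NOT)

# Observation 2: the org fails to sell predictions.
after_failure = posterior(PRIOR, 1 - P_SELL_IF_VALUABLE, 1 - P_SELL_IF_NOT)

print(f"after success: {after_success:.2f}")
print(f"after failure: {after_failure:.2f}")
```

With these assumed inputs, commercial success moves a 50% prior to about 91%, while failure only moves it to about 48%. Different inputs would change the sizes, but the asymmetry holds whenever sales are rare under both hypotheses.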

MWStory @ 2024-02-23T06:37 (+5)

I think if you extend this belief outwards it starts to look unwieldy and “proves too much”. Even if you think that executives don’t care about having access to good predictions the way that business owners do, then why not ask why business owners aren’t paying?

SuperDuperForecasting @ 2024-02-22T11:55 (+5)

MWStory already said what I wanted to say in response to this, but it should be pretty obvious. If people think of something as more than just a cool parlor trick, but instead regard it as useful and actionable, they should be willing to pay hand over fist for it at proper big boy consultancy rates. If they aren't, that strongly suggests that they just don't regard what you're producing as useful.

And to be honest it often isn't very useful. Tell someone "our forecasters think there's a 26% chance Putin is out of power in 2 years" and the response will often be "so what?" That by itself doesn't tell you anything about what Putin leaving power might mean for Russia or Ukraine, which is almost certainly what we actually care about (or nuclear war risk, if we're thinking X-risk). The same is true, to a degree, for all these forecasts about AI or pandemics or whatever: they often aren't sharp enough and don't cut to the meat of actual impacts in the real world.

But since you're here, perhaps you can answer my question about your clients, or lack thereof? If I were funding Metaculus, I would definitely want it to be more than a cool science project.

David Mathers @ 2024-02-23T10:42 (+4)

It's also worth saying that we already have one commercial forecasting organisation, Good Judgment (I do a little bit of professional forecasting for them, though it's not my main job). It's not clear why we need another. (I don't know who GJ's clients actually are though, plus presumably I wouldn't be allowed to tell you even if I did. EDIT: Actually, in some cases I think client info became public and/or we were internally told who they were, but I have just forgotten who.)

SanteriK @ 2024-02-21T08:00 (+31)

As the program is about forecasting, what is your stance on the broader field of foresight & futures studies? Why is forecasting more promising than some other approaches to foresight?

EffectiveAdvocate @ 2024-02-23T20:08 (+5)

I'm not OP, obviously, and I am only speaking from experience here, so I have no data to back this up, but:

My feeling is that foresight projects have a tendency to become political very quickly, and they are much more about stakeholder engagement than they are about finding the truth, whereas forecasting can remain relatively objective for longer.

That being said: I am very excited about combining these approaches.

BenjaminTereick @ 2024-02-29T22:25 (+14)

As the program is about forecasting, what is your stance on the broader field of foresight & futures studies? Why is forecasting more promising than some other approaches to foresight?

We are open to considering projects in “forecasting-adjacent" areas, and projects that combine forecasting with ideas from related fields are certainly well within the scope of the program.

As for projects that would exclusively rely on other approaches: My worry is that non-probabilistic foresight techniques typically don’t have more to show in terms of evidence for their effectiveness, while being more ad hoc from a theoretical perspective.

Vasco Grilo @ 2024-02-22T20:17 (+4)

Thanks for asking, SanteriK! For context, readers may want to check the (great!) post A practical guide to long-term planning – and suggestions for longtermism.

MaxRa @ 2024-02-22T11:19 (+15)

I’m really excited about more thinking and grant-making going into forecasting!

Regarding the comments critical of forecasting as a good investment of resources from a world-improving perspective, here are some of my quick thoughts:

- Systematic meritocratic forecasting has a track record of outperforming domain experts on important questions
  - Examples: Geopolitics (see Superforecasting), public health (see COVID), IIRC also outcomes of research studies

- In all important domains where humans try to affect things, they are implicitly forecasting all the time and act on those forecasts. Random examples:
  - "If lab-grown meat becomes cheaper than normal meat, XY% of consumers will switch"
  - "A marginal supply of 10,000 bednets will decrease malaria infections by XY%"
  - Models of climate change projections conditional on emissions

- In many domains humans are already explicitly forecasting and acting on those forecasts
  - Insurance (e.g. forecasts on loan payments)
  - Finance (e.g. on interest rate changes)
  - Recidivism
  - Weather
  - Climate

- Increases in the use of forecasting have the potential to increase societal sanity
  - Make people more able to appreciate and process uncertainty in important domains
  - Clearer communication (e.g. less talking past one another by anchoring discussion on real world outcomes)
  - Establish feedback loops with resolvable forecasts ➔ stronger incentives for being correct & ability to select people who have better world models
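As a rough illustration of what a feedback loop on resolvable forecasts can look like, here is a small sketch using the Brier score (the mean squared error between stated probabilities and 0/1 outcomes, lower is better). The two forecasters and all their numbers are hypothetical.

```python
def brier_score(forecasts, outcomes):
    """Mean squared difference between probabilities and 0/1 outcomes."""
    if len(forecasts) != len(outcomes):
        raise ValueError("forecasts and outcomes must have the same length")
    return sum((p - o) ** 2 for p, o in zip(forecasts, outcomes)) / len(forecasts)


# Hypothetical resolved questions: 1 = happened, 0 = did not happen.
outcomes = [1, 0, 1, 1, 0]

# Hypothetical forecasters: one with an informative model, one always at 50%.
informed = [0.9, 0.1, 0.8, 0.7, 0.2]
always_fifty = [0.5] * 5

print(f"informed:     {brier_score(informed, outcomes):.3f}")
print(f"always_fifty: {brier_score(always_fifty, outcomes):.3f}")
```

On this toy data the informed forecaster scores about 0.038 against 0.250 for always saying 50%, which is the kind of signal that lets you reward accuracy and pick out people with better world models once enough questions have resolved.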

That said, I also think that it's often surprisingly difficult to ask actionable questions when forecasting, and often it might be more important to just have a small team of empowered people with expert knowledge combined with closely coupled OODA loops instead. I remember finding this comment from Jan Kulveit pretty informative:

In practice I’m a bit skeptical that a forecasting mindset is that good for generating ideas about “what actions to take”. “Successful planning and strategy” is often something like “making a chain of low-probability events happen”, which seems distinct, or even at tension with typical forecasting reasoning. Also, empirically, my impression is that forecasting skills can be broadly decomposed into two parts—building good models / aggregates of other peoples models, and converting those models into numbers. For most people, the “improving at converting non-numerical information into numbers” part has initially much better marginal returns (e.g. just do calibration trainings...), but I suspect doesn’t do that much for the “model feedback”.

Jason @ 2024-02-22T14:40 (+6)

Why do you think there is currently little/no market for systematic meritocratic forecasting services (SMFS)? Even under a lower standard of usefulness -- that blending SMFS in with domain-expert forecasts would improve the utility of forecasts over using only domain-expert input -- that should be worth billions of dollars in the financial services industry alone, and billions elsewhere (e.g., the insurance market).

I don't think the drivers of low "societal sanity" are fundamentally about current ability to estimate probabilities. To use a current example, the reason 18% of Americans believe Taylor Swift's love life is part of a conspiracy to re-elect Biden isn't that our society lacks resources to better calibrate the probability that this is true. The desire to believe things that favor your "team" runs deep in human psychology. The incentives to propagate such nonsense are, sadly, often considerable. The technological structures that make disseminating nonsense easier are not going away.

MaxRa @ 2024-02-22T16:28 (+12)

Thanks, I think that's a good question. Some (overlapping) reasons that come to mind that I give some credence to:

a) relevant markets are simply making an error in neglecting quantified forecasts

- e.g. COVID was an example where I remember some EA adjacent people making money because investors were underrating the pandemic potential significantly

- I personally find it plausible when looking e.g. at the quality of think tank reports which seems significantly curtailed due to the amount of vague propositions that would be much more useful if more concrete and quantified

b) relevant players train the relevant skills sufficiently well into their employees themselves (e.g. that's my fairly uninformed impression from what Jane Street is doing, and maybe also Bridgewater?)

c) quantified forecasts are so uncommon that it still feels unnatural to most people to communicate them, and it feels cumbersome to be nailed down on giving a number if you are not practiced in it

d) forecasting is a nerdy practice, and those practices need bigger wins to be adopted (e.g. maybe similar to learning programming/math/statistics, working with the internet, etc.)

e) maybe more systematically I'm thinking that it's often not in the interest of entrenched powers to have forecasters call bs on whatever they're doing.

- in corporate hierarchies people in power prefer the existing credentialism, and oppose new dimensions of competition

- in other arenas there seems to be a constant risk of forecasters raining on your parade

f) maybe previous forecast-like practices ("futures studies", "scenario planning") didn't yield many benefits and made companies unexcited about similar practices (I personally have a vague sense of not being impressed by things I've seen associated with these words)

MaxRa @ 2024-02-22T16:43 (+8)

I agree that things like confirmation bias and myside bias are huge drivers impeding "societal sanity". And I also agree that it won't help a lot here to develop tools to refine probabilities slightly more.

That said, I think there is a huge crowd of reasonably sane people who have never interacted with the idea of quantified forecasting as a useful epistemic practice and a potential ideal to strive towards when talking about important future developments. Like other commentators say, it's currently mostly attracting a niche of people who strive for higher epistemic ideals, who try to contribute to better forecasts on important topics, etc. I currently feel like it's not intractable for quantitative forecasts to become more common in epistemic spaces filled with reasonable enough people (e.g. journalism, politics, academia). Kinda similar to how tracking KPIs was probably once a niche new practice and is now standard practice.

Saul Munn @ 2024-02-20T01:06 (+12)

this is really cool! i'm excited to watch the forecasting community grow, and for a greater number of impactful forecasting projects to be built.

We are as of yet uncertain about the most promising type of project in the forecasting focus area, and we will likely fund a variety of different approaches ... we plan to continue exploring the most plausible theories of change for forecasting.

i'm curious what you're currently excited about (specific projects, broad topic areas, etc). what is OP's theory of change for how forecasting can be most impactful? what sorts of things would you be most excited to see happen?

on the flipside, if — 1/5/20 years from now — we look back and realize that forecasting wasn't so impactful, why do you think that would be the case?

Austin @ 2024-02-20T00:23 (+10)

Awesome to hear! I'm happy that OpenPhil has promoted forecasting to its own dedicated cause area with its own team; I'm hoping this provides more predictable funding for EA forecasting work, which otherwise has felt a bit like a neglected stepchild compared to GCR/GHD/AW. I've spoken with both Ben and Javier, who are both very dedicated to the cause of forecasting, and am excited to see what their team does this year!

Grayden @ 2024-02-23T08:39 (+11)

Preventing catastrophic risks, improving global health and improving animal welfare are goals in themselves. At best, forecasting is a meta topic that supports other goals

Austin @ 2024-02-23T17:20 (+2)

Yes, it's a meta topic; I'm commenting less on the importance of forecasting in an ITN framework and more on its neglectedness. This stuff basically doesn't get funding outside of EA, and even inside EA had no institutional commitment; outside of random one-off grants, the largest forecasting funding program I'm aware of over the last 2 years was $30k in "minigrants" funded by Scott Alexander out of pocket.

But on the importance of it: insofar as you think future people matter and that we have the ability and responsibility to help them, forecasting the future is paramount. Steering today's world without understanding the future would be like trying to help people in Africa, but without overseas reporting to guide you - you'll obviously do worse if you can't see outcomes of your actions.

You can make a reasonable argument (as some other commenters do!) that the tractability of forecasting to date hasn't been great; I agree that the most common approaches of "tournament setting forecasting" or "superforecaster consulting" haven't produced much of decision-relevance. But there are many other possible approaches (eg FutureSearch.ai is doing interesting things using an LLM to forecast), and I'm again excited to see what Ben and Javier do here.

MarcusAbramovitch @ 2024-02-24T00:30 (+5)

"Yes, it's a meta topic; I'm commenting less on the importance of forecasting in an ITN framework and more on its neglectedness. This stuff basically doesn't get funding outside of EA, and even inside EA had no institutional commitment;"

- I don't think it's necessary to talk in terms of an ITN framework, but something being neglected isn't nearly reason enough to fund it. Neglectedness is perhaps the least important part of the framework. Getting 6-year-olds in race cars, for example, seems like a neglected cause but one that isn't worth pursuing.

- I think something not getting funding outside of EA is probably a medium-sized update toward the thing not being important enough to work on. Things start to get EA funding once a sufficient number of the community finds the arguments for working on a problem sufficiently convincing. But many many many problems have come across EA's eyes and very few of them have stuck. For something to not get funding from others suggests that very few others found it to be important.

- Forecasting still seems to get a fair amount of dollars, probably about half as much as animal welfare. https://docs.google.com/spreadsheets/d/1ip7nXs7l-8sahT6ehvk2pBrlQ6Umy5IMPYStO3taaoc/edit?usp=sharing

Your points on helping future people (and non-human animals) are well taken.

Austin @ 2024-02-24T02:07 (+4)

- Yeah, I agree neglectedness is less important but it does capture something important; I think eg climate change is both important and tractable but not neglected. In my head, "importance" is about "how much would a perfectly rational world direct at this?" while "neglected" is "how far are we from that world?".

- Also agreed that the lack of external funding is an update that forecasting (as currently conceived) has more hype than real utility. I tend to think this is because of the narrowness of how forecasting is currently framed, though (see my comments on tractability above)

- That's a great resource I wasn't aware of, thanks (did you make it?). I do think that OpenPhil has spent a commendable amount of money on forecasting to date (though nowhere near half of Animal Welfare, more like a tenth). But I think this has been done very unsystematically, with no dedicated grantmaker. My understanding is that it was, like, a side project of Luke Muehlhauser for a long time; when I reached out in Jan '23 he said they were not making new forecasting grants until they filled this role. Even if it took a year, I'm glad this program is now launched!

MarcusAbramovitch @ 2024-02-24T19:52 (+2)

1. I think your point 1 is a good starting point, but I would add "in percentage terms compared to all other potential causes", and you have to be in the top 1% of that for EA to consider the cause neglected.

3. I didn't make it. It is great though. I was talking about spending on a yearly basis in the last couple of years. That said, I made the comment from memory, so I could be wrong.