How to Build AI You Can Actually Trust - Like a Medical Team, Not a Black Box

By Ihor Ivliev @ 2025-03-22T21:27

From intern hunches to expert oversight - this is how AI earns your trust.

Modern AI can feel like a genius intern: brilliant at spotting patterns, but incapable of explaining its reasoning.

But what if your AI worked more like a clinical team?

- One specialist proposing hypotheses

- Another translating them into actionable logic

- A senior verifying against formal rules

- And an ethics officer with veto power

This isn’t just a metaphor; it’s a system. And in high-stakes domains like healthcare, we need systems that don’t just act fast but act accountably.

The Problem: Black Boxes in Life-or-Death Decisions

When an AI decides who gets a loan, a transplant, or a cancer diagnosis, "it just works" isn’t good enough.

In clinical studies, biased or unexplained AI outputs led doctors to make significantly more diagnostic errors - even small biases had a measurable impact.

See:

- Measuring the Impact of AI in the Diagnosis of Hospitalized Patients

- False conflict and false confirmation errors in medical AI

- AI Cannot Prevent Misdiagnoses

We need AI that explains itself. That self-checks. That knows when to escalate.

The Idea: Give AI a Crew

Enter CCACS: the Comprehensible Configurable Adaptive Cognitive Structure - a transparent governance layer for AI. Think of it as a crew, not a monolith.

- The Guesser (MOAI): Spots patterns and proposes actions.

- The Explainer (LED): Explains the guess and assigns it a trust score.

- The Rulebook (TIC): Verifies outputs against formal knowledge.

- The Captain (MU): Oversees ethics and blocks unsafe actions.

Use Case: Medical Triage Walkthrough

In an emergency room, CCACS might operate like this:

- [MOAI] → Signs of stroke pattern detected.

- [LED] → 72% trust score based on scan history + vitals.

- [TIC] → Matches protocol 3.2 for stroke verification.

- [MU] → DNR detected. Escalate to human oversight.

Not just diagnosis: justified, auditable, ethically aware reasoning.
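The triage walkthrough can be sketched in Python as a four-stage pipeline in which every layer appends to a shared audit trail. This is a minimal illustration under stated assumptions, not the CCACS implementation: the function names, the case fields (`facial_droop`, `arm_weakness`, `model_confidence`, `dnr`), the protocol table, and the 0.7 `act_threshold` are all hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class Decision:
    """Collects each layer's output so the final outcome is auditable."""
    case_id: str
    audit_trail: list[str] = field(default_factory=list)
    outcome: str = "pending"

    def log(self, layer: str, message: str) -> None:
        self.audit_trail.append(f"[{layer}] {message}")


def moai_guess(case: dict, d: Decision) -> str:
    # Hypothetical stand-in for the pattern-recognition model (the Guesser).
    if case["facial_droop"] and case["arm_weakness"]:
        hypothesis = "stroke"
    else:
        hypothesis = "unclear"
    d.log("MOAI", f"hypothesis: {hypothesis}")
    return hypothesis


def led_explain(case: dict, hypothesis: str, d: Decision) -> float:
    # Hypothetical trust score; a fuller LED layer would compute something like CFS.
    trust = case["model_confidence"]
    d.log("LED", f"trust score {trust:.0%} for '{hypothesis}'")
    return trust


def tic_verify(hypothesis: str, d: Decision) -> bool:
    # Hypothetical table linking hypotheses to formal verification protocols.
    protocols = {"stroke": "protocol 3.2"}
    if hypothesis in protocols:
        d.log("TIC", f"matches {protocols[hypothesis]}")
        return True
    d.log("TIC", "no matching protocol")
    return False


def mu_review(case: dict, d: Decision) -> bool:
    # The ethics layer has veto power: a DNR order always routes to a human.
    if case.get("dnr"):
        d.log("MU", "DNR detected; escalating to human oversight")
        return False
    d.log("MU", "no ethical blockers")
    return True


def run_crew(case: dict, act_threshold: float = 0.7) -> Decision:
    d = Decision(case_id=case["id"])
    hypothesis = moai_guess(case, d)
    trust = led_explain(case, hypothesis, d)
    verified = tic_verify(hypothesis, d)
    approved = mu_review(case, d)
    # Act only when every layer agrees; anything else goes to a human.
    d.outcome = "act" if verified and approved and trust >= act_threshold else "escalate"
    return d


if __name__ == "__main__":
    case = {"id": "ER-001", "facial_droop": True, "arm_weakness": True,
            "model_confidence": 0.72, "dnr": True}
    result = run_crew(case)
    print("\n".join(result.audit_trail))
    print("outcome:", result.outcome)
```

Run on the walkthrough case, this prints the four-line trail from `[MOAI] hypothesis: stroke` to `[MU] DNR detected; escalating to human oversight`, followed by `outcome: escalate`. Because layers only append to the trail, any outcome can be traced back through guess → explain → verify → ethics.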

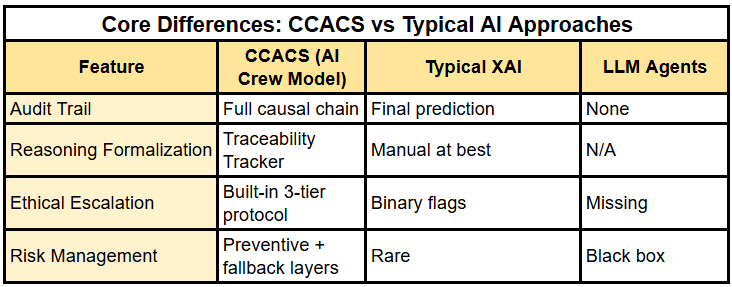

Why This Is Different

Traditional AI Systems:

- No audit trail

- Post-hoc rationalization

- Weak ethical pathways

CCACS (AI Crew Model):

- Full traceability: guess → explain → verify → ethics

- Built-in arbitration across specialized layers

- Escalates low-confidence or high-risk decisions

Like moving from a solo intern to a credentialed care team.

Trust in AI starts with explanation and transparency. Would you trust this approach more?

Practitioner Addendum: How CCACS Validates, Escalates, and Explains Its Reasoning

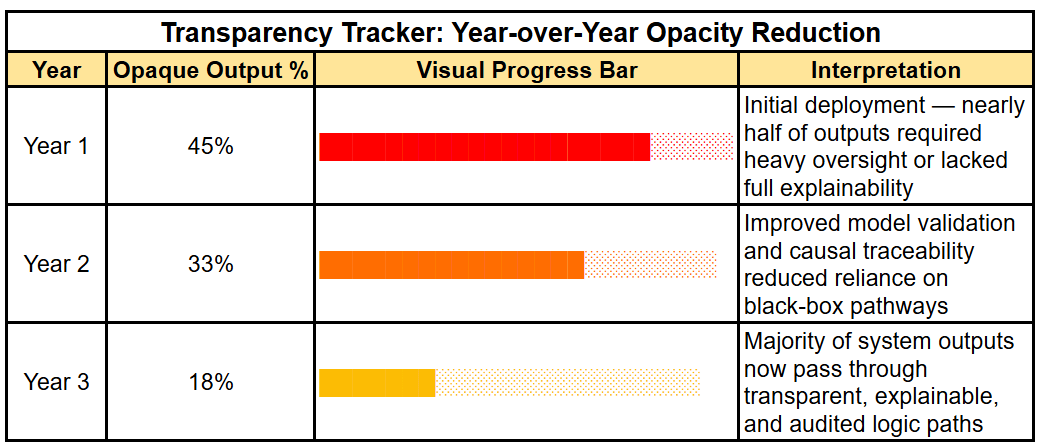

This section outlines how the CCACS model achieves transparency and trustworthiness at the implementation level.

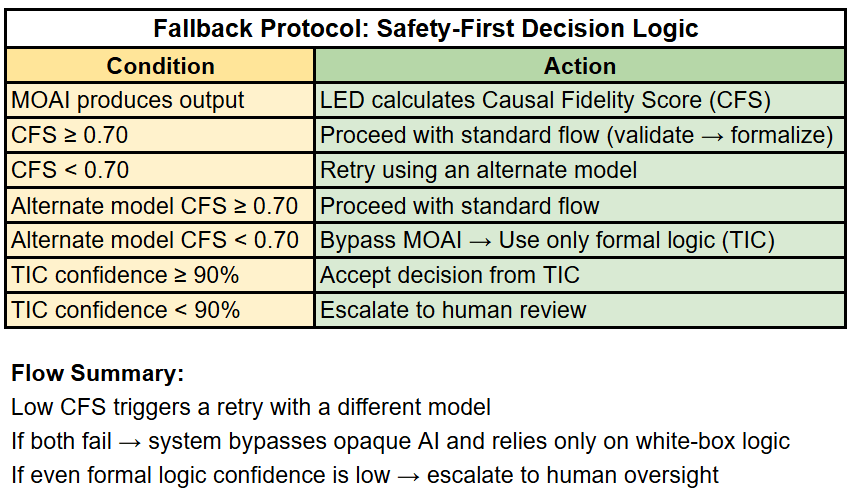

Causal Fidelity Score (CFS)

CFS = (Causal Coherence × Evidence Weight) / (Opacity Penalty + 1)

- Causal Coherence: How well the model's reasoning aligns with formal knowledge (e.g., Bayesian priors)

- Evidence Weight: Stability of the supporting signals (e.g., low SHAP variance across 10k samples)

- Opacity Penalty: Higher for black-box architectures
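A direct transcription of the CFS formula follows, together with one possible way to turn attribution stability into an Evidence Weight. The `1 / (1 + mean variance)` mapping, the function names, and the assumption that coherence and evidence lie in [0, 1] are illustrative choices, not part of the original framework; the per-sample attribution vectors could come from SHAP or any other attribution method.

```python
from statistics import mean, pvariance


def evidence_weight(attributions: list[list[float]]) -> float:
    """Map attribution stability to (0, 1]; lower variance gives a higher weight.

    `attributions` holds one attribution vector (e.g., SHAP values) per sample.
    The 1 / (1 + mean variance) mapping is an illustrative assumption.
    """
    if not attributions:
        raise ValueError("need at least one attribution vector")
    per_feature = zip(*attributions)  # transpose: one tuple of values per feature
    mean_var = mean(pvariance(values) for values in per_feature)
    return 1.0 / (1.0 + mean_var)


def causal_fidelity_score(coherence: float, evidence: float, opacity_penalty: float) -> float:
    """CFS = (Causal Coherence x Evidence Weight) / (Opacity Penalty + 1)."""
    if not (0.0 <= coherence <= 1.0 and 0.0 <= evidence <= 1.0):
        raise ValueError("coherence and evidence are assumed to lie in [0, 1]")
    if opacity_penalty < 0.0:
        raise ValueError("opacity penalty must be non-negative")
    return (coherence * evidence) / (opacity_penalty + 1.0)
```

Perfectly stable attributions (identical vectors across samples) give an evidence weight of 1.0, and the weight shrinks as attributions fluctuate between samples.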

Example: A cancer detection model earns CFS = 0.92

- Biomarker match = High coherence

- SHAP stable = Strong evidence

- Hybrid model = Low opacity
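The article does not state the component values behind the 0.92 score. Assuming, purely for illustration, a coherence of 0.97, an evidence weight of 0.99, and a small opacity penalty of 0.04 for the hybrid model, the formula works out as:

```latex
\mathrm{CFS} = \frac{\text{Causal Coherence} \times \text{Evidence Weight}}{\text{Opacity Penalty} + 1}
             = \frac{0.97 \times 0.99}{0.04 + 1}
             = \frac{0.9603}{1.04}
             \approx 0.92
```

Because the opacity penalty is non-negative, the `+ 1` keeps the denominator at least 1, so CFS can never exceed Causal Coherence × Evidence Weight; it reaches that ceiling only when the penalty is zero.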

How the Explainer Validates AI Outputs

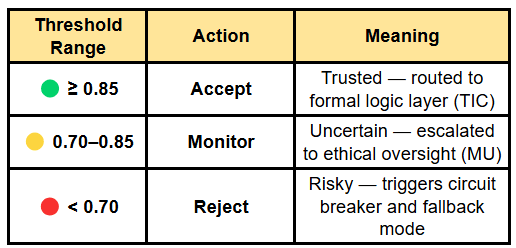

Here’s how the system (via the LED layer) decides what to do with an AI-generated insight:

- Calculate the Causal Fidelity Score (CFS). This score reflects how trustworthy the AI's insight is, based on logic alignment, supporting evidence, and model opacity.

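- Apply tiered thresholds: high-confidence insights proceed with transparency, moderate-confidence insights are escalated under ethical guardrails, and low-confidence insights are stopped and deferred to human oversight.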

- Final safety check: if the formal logic layer (TIC) shows low confidence and the ethical layer (MU) detects high risk, the system automatically escalates to human review.
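The three steps can be sketched as a single routing function that takes an already computed CFS. The tier names mirror the green/yellow/red tiers in the CFS Thresholds section, but the numeric cut-offs (`HIGH_CFS`, `MODERATE_CFS`, `tic_low`, `mu_high`) are hypothetical placeholders; the article does not publish them.

```python
from enum import Enum


class Route(Enum):
    ACT = "act with transparency"                       # green tier
    ESCALATE = "escalate under ethical guardrails"      # yellow tier
    HUMAN_REVIEW = "stop and defer to human oversight"  # red tier / safety check


# Hypothetical cut-offs: the tiers are described, but no numeric bounds are given.
HIGH_CFS = 0.85
MODERATE_CFS = 0.60


def route_insight(cfs: float, tic_confidence: float, mu_risk: float,
                  tic_low: float = 0.5, mu_high: float = 0.7) -> Route:
    """Decide how the LED layer handles one AI-generated insight."""
    # Tiered thresholds: map the Causal Fidelity Score onto a tier.
    if cfs >= HIGH_CFS:
        route = Route.ACT
    elif cfs >= MODERATE_CFS:
        route = Route.ESCALATE
    else:
        route = Route.HUMAN_REVIEW
    # Final safety check: overrides the tier when formal logic (TIC) is unsure
    # and the ethics layer (MU) sees high risk.
    if tic_confidence < tic_low and mu_risk > mu_high:
        return Route.HUMAN_REVIEW
    return route


if __name__ == "__main__":
    print(route_insight(0.92, tic_confidence=0.9, mu_risk=0.1))  # Route.ACT
    print(route_insight(0.92, tic_confidence=0.3, mu_risk=0.9))  # Route.HUMAN_REVIEW
```

Running the safety check after the tier lookup keeps the code in the same order as the steps above, while still letting it override even a high CFS.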

CFS Thresholds: Clinical Parallels in Healthcare Decision Logic

CCACS uses tiered thresholds to determine how AI-generated insights are handled - based on their reliability, traceability, and ethical risk.

These thresholds mirror how clinicians manage diagnostic uncertainty in emergency settings.

Real-world triage doesn't rely on fixed confidence percentages; it uses risk stratification, urgency categories, and physiological signals to guide decisions. But the decision logic is strikingly similar:

- High certainty → Intervene with confidence

- Moderate uncertainty → Observe, validate, or escalate

- Low confidence → Pause or defer to senior judgment

CCACS applies this same tiered reasoning to AI outputs, using confidence scores (like CFS) as structured proxies for diagnostic risk. It brings clinical-style caution and auditability into AI cognition, without pretending to be medicine.

Just like in emergency care:

- 🟢 High confidence → Act with transparency

- 🟡 Moderate confidence → Escalate under ethical guardrails

- 🔴 Low confidence → Stop and defer to human oversight

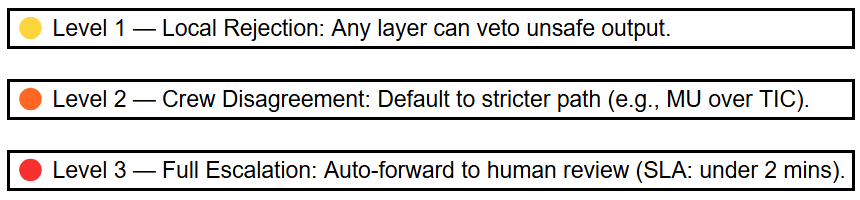

Handling Disagreements and Emergencies

Ethical Alignment Index (EAI) in Action

- During deployment, the model denied 14% more loans to women under 30.

- The EAI dropped by 18%, triggering an MU review.

- The logic was retrained, and automation was paused until the bias was resolved.
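The article does not define how EAI is computed, so the sketch below swaps in a demographic-parity style ratio as a hypothetical stand-in: it compares denial rates between a group and a reference group, and a monitor pauses automation when the index falls too far below its deployment baseline. The 10% trigger, the toy data, and all names are assumptions.

```python
from dataclasses import dataclass


def denial_rate(decisions: list[bool]) -> float:
    """Fraction of applications denied (True means denied)."""
    return sum(decisions) / len(decisions)


def parity_index(group: list[bool], reference: list[bool]) -> float:
    """Hypothetical EAI proxy: 1.0 means equal denial rates; lower means more disparity."""
    g, r = denial_rate(group), denial_rate(reference)
    if g == r:
        return 1.0
    return min(g, r) / max(g, r)


@dataclass
class EthicsMonitor:
    baseline: float                  # index value measured at deployment time
    max_relative_drop: float = 0.10  # hypothetical trigger, not from the article
    automation_enabled: bool = True

    def check(self, current: float) -> bool:
        """Return True and pause automation when the index drops too far below baseline."""
        drop = (self.baseline - current) / self.baseline
        if drop >= self.max_relative_drop:
            self.automation_enabled = False  # stays off until an MU review clears it
            return True
        return False


if __name__ == "__main__":
    group = [True] * 6 + [False] * 4      # toy data: 60% denied
    reference = [True] * 5 + [False] * 5  # toy data: 50% denied
    monitor = EthicsMonitor(baseline=1.0)
    index = parity_index(group, reference)
    print(f"index={index:.3f}, review={monitor.check(index)}, "
          f"automation={monitor.automation_enabled}")
```

Tracking a relative drop from baseline, rather than an absolute value, mirrors the "EAI dropped by 18%" framing in the example above.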

Beyond a Single Crew: The ACCCU Vision

What happens when one AI crew isn’t enough?

In high-stakes environments - like managing a hospital network during a crisis, coordinating financial systems during market volatility, or overseeing autonomous fleets - we need not just one decision team but a network of decision teams.

That’s where ACCCU comes in: the Adaptive Composable Cognitive Core Unit.

Instead of scaling by adding more black-box power, ACCCU scales by adding more crews, each with a focused role - and then coordinating them with strict governance.

Each “crew” (a CCACS unit) can specialize:

- One might prioritize ethics (MU-LFCL)

- Another, formal logic and regulatory compliance (TIC-LFCL)

- Another, generative insight and exploratory reasoning (MOAI-LFCL)

- A fourth, validation and interpretability (LED-LFCL)

They communicate via Cognitive Coordination Channels (C3) - trusted interfaces that allow units to escalate, defer, or negotiate actions based on urgency, domain relevance, or ethical weight.

For example, if two crews disagree (say, one recommends action and another flags risk), the 3-Tier Override Protocol resolves the conflict by prioritizing safety, defaulting to human oversight if needed.
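A sketch of how C3 messages and a three-tier override could fit together. The article names the 3-Tier Override Protocol but not its individual tiers, so the ones used here (ethical veto, then most conservative action, then human fallback on weak support), the `C3Message` fields, and the 0.6 confidence floor are hypothetical.

```python
from dataclasses import dataclass
from enum import IntEnum


class Action(IntEnum):
    # Ordered by safety: a higher value is a more conservative action.
    PROCEED = 0
    OBSERVE = 1
    BLOCK = 2


@dataclass(frozen=True)
class C3Message:
    """Hypothetical payload one crew sends another over a C3 channel."""
    crew: str
    action: Action
    confidence: float
    ethical_veto: bool = False


def resolve(messages: list[C3Message], min_confidence: float = 0.6) -> str:
    """Hypothetical 3-tier override: veto, then safest action, then human fallback."""
    if not messages:
        return "HUMAN_OVERSIGHT"  # nothing to arbitrate, so a human decides
    # Tier 1: an ethical veto from any crew wins outright.
    if any(m.ethical_veto for m in messages):
        return "BLOCK (ethical veto)"
    proposed = {m.action for m in messages}
    if len(proposed) == 1:
        return proposed.pop().name  # unanimous, so no override is needed
    # Tier 2: on disagreement, prefer the most conservative proposed action.
    safest = max(proposed)
    support = max(m.confidence for m in messages if m.action == safest)
    # Tier 3: if even the safest position is weakly supported, defer to a human.
    if support < min_confidence:
        return "HUMAN_OVERSIGHT"
    return safest.name


if __name__ == "__main__":
    msgs = [
        C3Message("MOAI-LFCL", Action.PROCEED, confidence=0.9),
        C3Message("MU-LFCL", Action.BLOCK, confidence=0.8),
    ]
    print(resolve(msgs))  # BLOCK
```

Ordering `Action` by conservativeness lets "prioritize safety" reduce to a plain `max`, which keeps the arbitration rule easy to audit.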

This modular setup also means that any single crew can be retrained, updated, or replaced without rebuilding the entire system - making the architecture adaptive by design, not just configurable. Instead of one big brain, ACCCU builds a thinking institution - where every insight is vetted, explained, and governed across domains.
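The replaceability claim can be illustrated with a role-keyed registry: registering a new crew under an existing role swaps out that one unit while the others keep running unchanged. `Crew`, `Registry`, `RuleCrew`, and `EthicsCrew`, along with their toy rules, are hypothetical names for illustration only.

```python
from typing import Protocol


class Crew(Protocol):
    """Hypothetical minimal interface each CCACS unit exposes to ACCCU."""
    role: str
    version: str

    def assess(self, case: dict) -> str: ...


class EthicsCrew:
    role = "MU-LFCL"
    version = "1.0"

    def assess(self, case: dict) -> str:
        return "escalate" if case.get("dnr") else "clear"


class RuleCrew:
    def __init__(self, version: str, min_score: int) -> None:
        self.role = "TIC-LFCL"
        self.version = version
        self.min_score = min_score  # toy compliance rule

    def assess(self, case: dict) -> str:
        return "compliant" if case.get("score", 0) >= self.min_score else "non-compliant"


class Registry:
    """Maps each role to one crew so a unit can be swapped without touching the rest."""

    def __init__(self) -> None:
        self._crews: dict[str, Crew] = {}

    def register(self, crew: Crew) -> None:
        self._crews[crew.role] = crew  # replaces any crew already holding this role

    def versions(self) -> dict[str, str]:
        return {role: crew.version for role, crew in self._crews.items()}

    def assess_all(self, case: dict) -> dict[str, str]:
        return {role: crew.assess(case) for role, crew in self._crews.items()}


if __name__ == "__main__":
    registry = Registry()
    registry.register(EthicsCrew())
    registry.register(RuleCrew(version="1.0", min_score=5))
    case = {"score": 6, "dnr": False}
    print(registry.assess_all(case))
    registry.register(RuleCrew(version="2.0", min_score=8))  # retrained TIC unit
    print(registry.assess_all(case), registry.versions())
```

Using a structural `Protocol` means any unit exposing `role`, `version`, and `assess` can be dropped in, with no shared base class to rebuild when one crew changes.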

Let’s make AI less like magic - and more like medicine.

Want the deep dive?

Read the full technical framework - including layered validation protocols, CFS scoring logic, and architecture maps (this is a dense, architect-level writeup - not light reading) - here:

Medium - Adaptive Composable Cognitive Core Unit (ACCCU)