AMA: Holden Karnofsky @ EA Global: Reconnect

By Barry Grimes @ 2021-03-12T15:12 (+27)

Update: Here's the video of Holden's session.

Holden Karnofsky will be answering questions submitted by community members during one of the main sessions at EA Global: Reconnect.

Submit your questions here by 11:59 pm PDT on Thursday, March 18, or vote for the questions you most want Holden to answer.

About Holden

Holden Karnofsky sets the strategy and oversees the work of Open Philanthropy. Holden co-founded GiveWell in 2007, and began co-developing Open Philanthropy (initially called GiveWell Labs) in 2011. He has a degree in Social Studies from Harvard University. His writings include Radical Empathy, Excited Altruism, and Some Thoughts on Public Discourse.

BrianTan @ 2021-03-15T14:33 (+35)

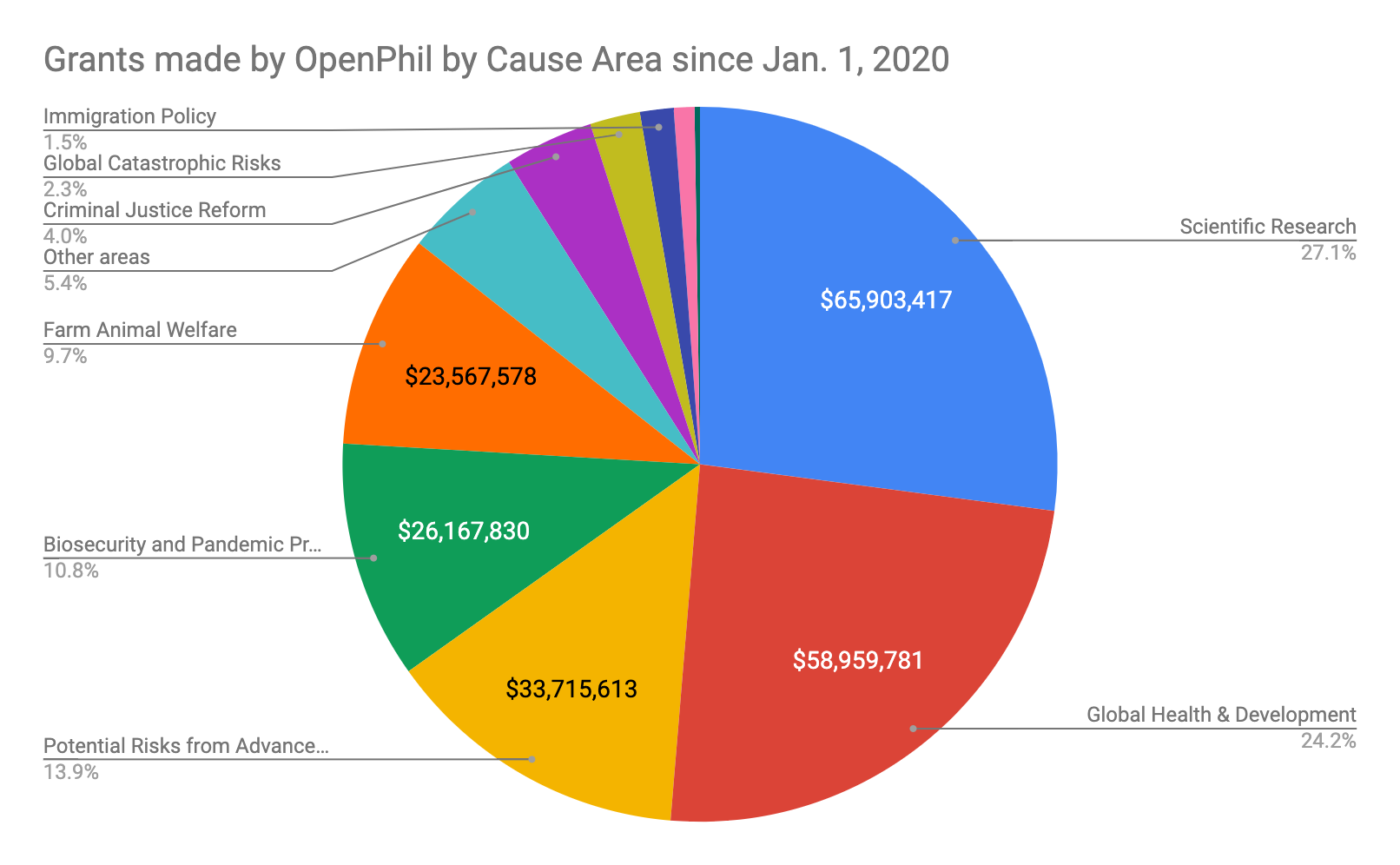

I re-analyzed OpenPhil's grants data just now (link here), and I noticed that across all your grants since Jan. 1, 2020, scientific research is the focus area with the largest amount granted to, at $66M, or 27% of total giving since then, closely beating out global health and development (chart shown below).

OpenPhil also gave an average of $48M per year from 2017-2020 to scientific research. I'm surprised by this - I knew OpenPhil gave some funding to scientific research, but I didn't know it was now the largest cause area OpenPhil grants to.

- Was there something that happened that made OpenPhil decide to grant more in this area?

- Scientific research isn't a cause area heavily associated with EA currently - 80K doesn't really feature scientific research in their content or as a priority path, other than for doing technical AI safety research or biorisk research. Also, EA groups and CEA don't feature scientific research as one of EA's main causes - the focus still tends to be on global health and development, animal welfare, longtermism, and movement building / meta. (I guess scientific research is cross-cutting across these areas, but I still don't see a lot of focus on it). Do you think more EAs should be looking into careers in scientific research? Why or why not?

KarolinaSarek @ 2021-03-16T14:53 (+35)

A follow-up question: What would this chart look like if all the opportunities you want to fund existed? In other words, to what extent does the breakdown of funding shown here capture Open Phil’s views on cause prioritization vs. reflect limiting factors such as the availability of high-quality funding opportunities, and what would it look like if there were no such limiting factors?

Sean_o_h @ 2021-03-16T19:37 (+14)

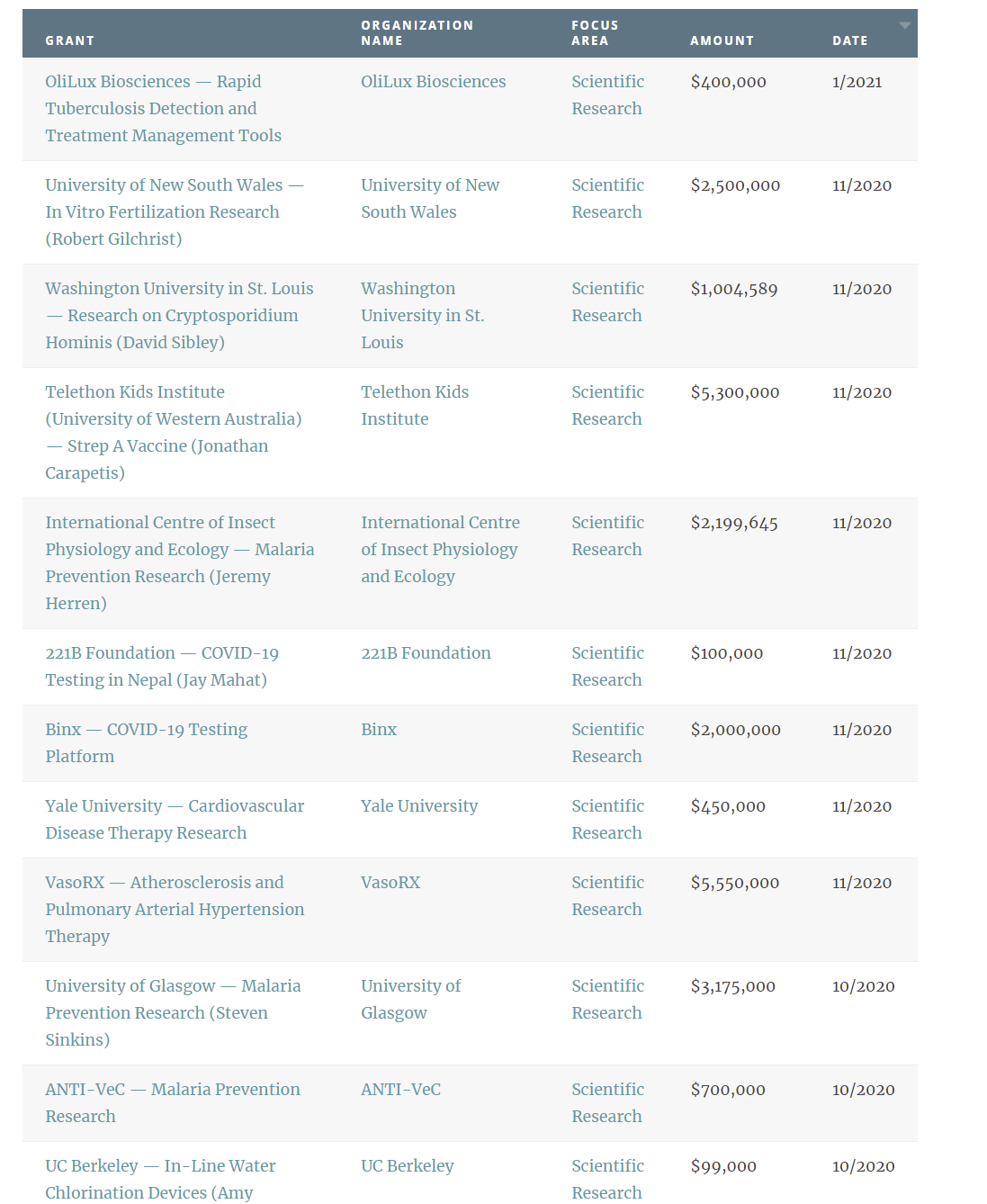

I haven't done a systematic analysis, but at a quick glance I'd note that quite a number of the grants in scientific research seem like their outputs would directly support main EA cause areas such as biorisk and global health - e.g. in the last 1-2 years I see a number on malaria prevention, vaccine development, antivirals, disease diagnostics etc.

jackmalde @ 2021-03-15T18:13 (+10)

By the way, you're right that 80K doesn't feature scientific research as a priority path, but they do have a short section on it in "other longtermist issues".

Also, Holden wrote about it here (although this is almost 6 years old).

BrianTan @ 2021-03-16T00:28 (+2)

Ah right, thanks!

Charles He @ 2021-03-18T17:20 (+3)

Hi Brian,

(Uh, I just interacted with you but this is not related in any sense.)

I think you are interpreting Open Phil's giving to "Scientific research" to mean it is a distinct cause priority, separate from the others.

For example, you say:

... EA groups and CEA don't feature scientific research as one of EA's main causes - the focus still tends to be on global health and development, animal welfare, longtermism, and movement building / meta

To be clear, in this interpretation, someone looking for an altruistic career could go into "scientific research" and make an impact distinct from "Global Health and Development" and other "regular" cause areas.

However, instead, is it possible that "scientific research" mainly just supports Open Philanthropy's various "regular" causes?

For example, a malaria research grant is categorized under "Scientific Research", but for all intents and purposes is in the area of "Global Health and Development".

In this interpretation, funding is categorized under "Scientific Research" as something of an accounting convention, not because it is a distinct cause area.

In support of this interpretation, taking a quick look at the recent grants for "Scientific Research" (on March 18, 2021) shows that most are plausibly in support of "regular" cause areas:

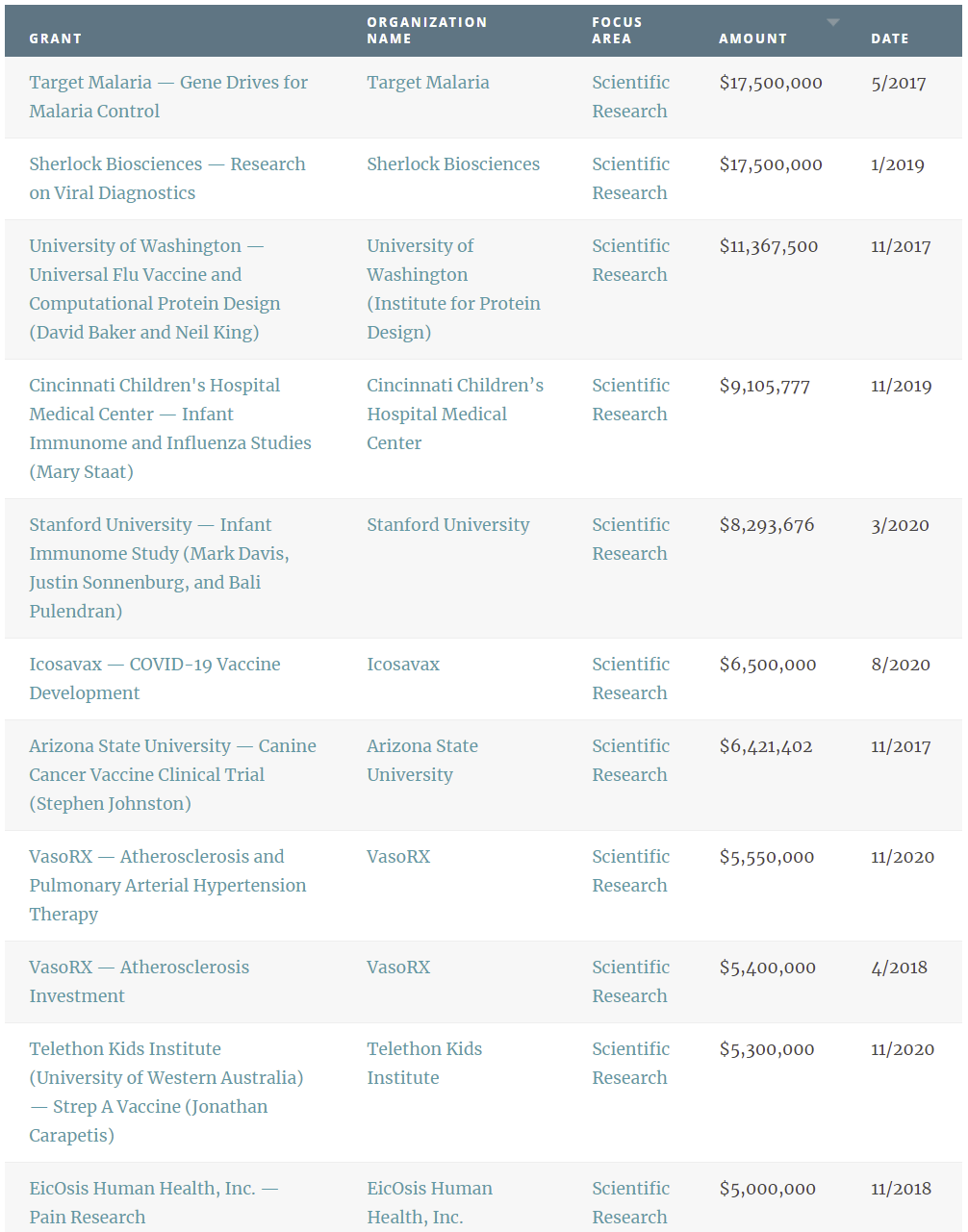

Similarly, when sorted by grant size, the largest grants seem to be in the areas of "Global Health" and "Biosecurity".

Your question does highlight the importance of scientific research in Open Philanthropy.

Somewhat of a digression, but interesting, are some secondary questions:

- Questions related to theories of change, e.g. about institutions, credibility, knowledge, power and politics in R1 academia, and how these could be changed or improved by sustained EA-like funding.

- There is also the presence of COVID-19 related projects. If we wanted to press, maybe unduly, we could express skepticism of these grants. This area is far less neglected and possibly smaller in scale (?): many more people will die from hunger or poor sanitation in Africa, even just indirectly from the effects of COVID-19, than from the virus itself. The reason this skepticism would be undue is that I can see why people on a board donating a large amount of money would feel compelled to act during a global crisis, at a time of great uncertainty.

BrianTan @ 2021-03-19T01:03 (+3)

Hey Charles, yeah Sean_o_h made a similar comment. I now see that a lot of the scientific research grants are still targeted towards global health and development or biosecurity and pandemic preparedness.

Nevertheless, I think my questions still stand - I'd still love to hear how OpenPhil decided to grant more towards scientific research, especially for global health and development. I'm also curious if there are already any "big wins" among these scientific research grants.

I also think it's worth asking him "Do you think more EAs should be looking into careers in scientific research? Why or why not?". I think only a few EA groups have discussion groups about scientific research or improving science, so I guess a related question would be whether he thinks there should be more reading groups / discussion groups on scientific research or improving science, in order to increase the number of EAs interested in scientific research as a career.

Charles He @ 2021-03-19T01:32 (+3)

These seem like great points and of course, your question stands.

I wanted to say that most R1 research is a difficult path for new grads: success is hard to achieve, career capital is low, and, frankly, the "impact" can also be dubious. It is also hard to get started: it typically requires a PhD and one or more post-docs, all poorly paid, in contrast with, say, software engineering.

I wrote the above for other readers, in the spirit of the "Bycatch" article; I don't think you are here to read my opinions.

Thanks for responding thoughtfully and I'm sure you will get an interesting answer from Holden.

KarolinaSarek @ 2021-03-16T14:54 (+29)

In each cause area, what are high-quality funding opportunities/project ideas that Open Phil would like to fund but that don’t currently exist?

KarolinaSarek @ 2021-03-16T14:55 (+23)

What are some of the most common misconceptions EAs have about Open Phil grantmaking?

anonymous_banana @ 2021-03-16T20:08 (+18)

Don’t tell me what you think, tell me what you have in your portfolio

-- Nassim Taleb

What does your personal investment portfolio look like? Are there any unusual steps you've taken due to your study of the future? What aspect of your approach to personal investment do you think readers might be wise to consider?

anonymous_banana @ 2021-03-16T20:08 (+17)

How has serving on the OpenAI board changed your thinking about AI?

anonymous_banana @ 2021-03-16T20:08 (+17)

How do your thoughts on career advice differ from those of 80,000 Hours? If you could offer only generic advice in a paragraph or three, what would you say?

Milan_Griffes @ 2021-03-16T02:07 (+12)

Does this post still basically reflect your feelings about public discourse?

Milan_Griffes @ 2021-03-20T00:25 (+2)

I expanded a bit on this question here.

BrianTan @ 2021-03-15T13:58 (+12)

What have you changed your mind on or updated your beliefs the most on within the last year?

tessa @ 2021-03-14T04:27 (+10)

How has OpenPhil's Biosecurity and Pandemic Preparedness strategy changed in light of how the COVID-19 pandemic has unfolded so far? What biosecurity interventions, technologies or research directions seem more (or less) valuable now than they did a year ago?

AnonymousEAForumAccount @ 2021-03-16T15:31 (+9)

In addition to funding AI work, Open Phil’s longtermist grantmaking includes sizeable grants toward areas like biosecurity and climate change/engineering, while other major longtermist funders (such as the Long Term Future Fund, BERI, and the Survival and Flourishing Fund) have overwhelmingly supported AI with their grantmaking. As an example, I estimate that “for every dollar Open Phil has spent on biosecurity, it’s spent ~$1.50 on AI… but for every dollar LTFF has spent on biosecurity, it’s spent ~$19 on AI.”

Do you agree this distinction exists, and if so, are you concerned by it? Are there longtermist funding opportunities outside of AI that you are particularly excited about?

lynettebye @ 2021-03-19T17:44 (+7)

What’s a skill you have spent deliberate effort in developing that has paid off a lot? Or alternatively, what is a skill you wish you had spent deliberate effort developing much earlier than you did?

eca @ 2021-03-16T14:05 (+7)

To operate in the broad range of cause areas Open Phil does, I imagine you need to regularly seek advice from external advisors. I have the impression that cultivating good sources of advice is a strong suit of both yours and Open Phil's.

I bet you also get approached by less senior folks asking for advice with some frequency.

As advisor and advisee: how can EAs be more effective at seeking and making use of good advice?

Possible subquestions: What common mistakes have you seen early career EAs make when soliciting advice, eg on career trajectory? When do you see advice make the biggest positive difference in someone’s impact? What changes would you make to how the EA community typically conducts these types of advisor/advisee relationships, if any?

Charles He @ 2021-03-16T04:56 (+7)

Question:

What would you personally want to do with 10x the current level of funds available? What would you personally like to do with 200x the current level of funds available?

Some context:

There is sentiment that funding is high and that Open Philanthropy is “not funding constrained”.

I think it is reasonable to question this perspective.

This is because while Open Philanthropy may have access to $15 billion of funds from the main donors, annual potato chip spending in the US may be $7 billion, and bank overdraft fees may be about $11 billion.

This puts into perspective the scale of Open Philanthropy next to the economic activity of just one country.

Many systemic issues may only be addressable at this scale of funding. Also, billionaire wealth seems large and continues to grow.

BrianTan @ 2021-03-15T13:33 (+7)

Do you think the emergence of COVID-19 has increased or decreased our level of existential risk this century, and why?

lynettebye @ 2021-03-19T17:43 (+6)

I hear the vague umbrella term “good judgement” or even more simply “thinking well” thrown around a lot in the EA community. Do you have thoughts on how to cultivate good judgement? Did you do anything - deliberately or otherwise - to develop better judgement?

lynettebye @ 2021-03-19T17:43 (+6)

For you personally, do you think that loving what you do is correlated with or necessary for doing it really well?

Charles He @ 2021-03-16T04:52 (+6)

Consider the premise that the current instantiation of Effective Altruism is defective, and one of the only solutions is some action by Open Philanthropy.

By “defective”, I mean:

A. EA struggles to engage even a base of younger “HYPS” and “FAANG” people, much less millions of altruistic people with free time and resources. Also, EA seems like it should have more acceptance in the “wider non-profit world” than it has.

B. The previous projects funded by or associated with Open Philanthropy and EA often seem to merely "work alongside EA". Some constructs or side effects of EA, such as the current instantiation of Longtermism and “AI Safety”, have negative effects on community development.

Elaborations on A:

- Posts like “Bycatch”, “Mistakes on road” and “Really, really, hard” seem to suggest serious structural issues and underuse of large numbers of valuable and highly engaged people

- Interactions in meetings with senior people in philanthropy indicate low buy-in: for example, in a private, high-trust meeting, a leader mentions skepticism of EA, and when I ask for elaboration, the leader pauses, visibly shifts uncomfortably on the Zoom screen, and begins slowly, “Well, they spend time in rabbit holes…”. While anecdotal, this perhaps also hints that widespread "data" is not available due to reluctance to speak (to be clear, fear of offending institutions associated with large amounts of funding).

Elaboration on B:

Consider Longtermism and AI as either manifestations or intermediate reasons for these issues:

The value of present instantiations of “Longtermism” and “AI” is far more modest than they appear.

This is because they amount to a rephrasing of existing ideas, and their work usually treads inside a specific circle of competence. This means that, no matter how stellar, their activities contribute little to execution on the actual issues.

This is not benign because these activities (unintentionally) allow worldviews to be backed in that encroach upon the culture and execution of EA in other areas and as a whole. It produces “shibboleths” that run into the teeth of EA’s presentation issues. It also takes attention and interest from under-provisioned cause areas that are esoteric and unpopularized.

Aside: This question would benefit from sketches of solutions and sketches of the counterfactual state of EA. But this isn’t workable, as this question is already lengthy, may be contentious, and contains flaws. Another aside: causes are not zero-sum, and it is not clear that the question contains a criticism of Longtermism or AI as a concern; even stronger criticism could be consistent with, say, ten times current funding.

In your role in setting strategy for Open Philanthropy, will you consider the above premise and the three questions below:

- To what degree would you agree with the characterizations above or (maybe unfair to ask) similar criticisms?

- What evidence would cause you to change your answer to the first question (e.g. if you believed EA was defective, what would disprove this in your mind? Or, if you disagreed with the premise, what evidence would be required for you to agree?)

- If there is a structural issue in EA, and in theory Open Philanthropy could intervene to remedy it, is there any reason that would prevent intervention? For example, from an entity/governance perspective or from a practical perspective?

anonymous_banana @ 2021-03-16T20:07 (+5)

What are some of the central feedback loops by which people who are hoping to positively influence the long run future can evaluate their efforts? What are some feedback sources that seem underrated, or at least worth further consideration?

lynettebye @ 2021-03-19T17:43 (+4)

What is your process for deciding your high-level goals? What role does explicit prioritization play? What role does gut-level/curiosity-/intuition-driven prioritization play?

lynettebye @ 2021-03-19T17:42 (+4)

Do you (or did you) ever have doubts about whether you were "good enough" to pursue your career?

(Sorry for posting after the deadline - I haven't been on screens recently due to a migraine and just saw it.)

BrianTan @ 2021-03-15T13:55 (+3)

I think your career story is one of the best examples of not needing a formal background/study to do well or become well-versed in multiple EA causes, since, coming from a hedge fund background, you started and led GiveWell, and now you oversee important work across multiple causes with Open Philanthropy. What do you think helped you significantly to learn and achieve these things? Did you have a mindset or mantras you repeated to yourself, or a learning process you followed?

BrianTan @ 2021-03-15T13:30 (+3)

How likely do you think it is that the amount of funding pledged to be donated to EA causes/projects/orgs will double within the next 5 years, and why?

Here are two alternative phrasings of the question above:

- Do you think it is likely that the EA movement will have one or more billionaires whose total planned EA-aligned giving is as high or higher than Dustin Moskovitz and Cari Tuna’s planned amount?

- How likely do you think it is that the amount of funding that OpenPhil can allocate will double within the next 5 years, and why? Is it a goal of OpenPhil to expand the funding it can grant out?

wmhowell18 @ 2021-03-13T16:49 (+2)

How much work is going into working out how quickly ($/year) OpenPhil should be spending their money? And does OpenPhil offer any advice to Good Ventures on the money that is being invested as it seems like this is a large variable in the total amount of good OpenPhil / Good Ventures will be able to achieve?