Crucial considerations in the field of Wild Animal Welfare (WAW)

By Holly Elmore ⏸️ 🔸 @ 2022-04-10T19:43 (+68)

Cross-posted from my blog, hollyelmore.substack.com

Wild animal welfare (WAW) is:

- A paradigm that sees the wellbeing and experiences of individual animals as the core moral consideration in our interaction with nature

- An interdisciplinary field of study, largely incubated by Effective Altruism (EA)

- An EA cause area hoping to implement interventions

A crucial consideration is a consideration that warrants a major reassessment of a cause area or an intervention.

WAW is clueless or divided on a bevy of foundational and strategic crucial considerations.

The WAW account of nature

There are A LOT of wild animals

- 100 billion to 1 trillion mammals,

- at least 10 trillion fish,

- 100 to 400 billion birds (Brian Tomasik)

- 10 quintillion insects (Rethink Priorities)

- Each year, there are 30 trillion wild-caught shrimp alone! (Rethink Priorities, unpublished work)

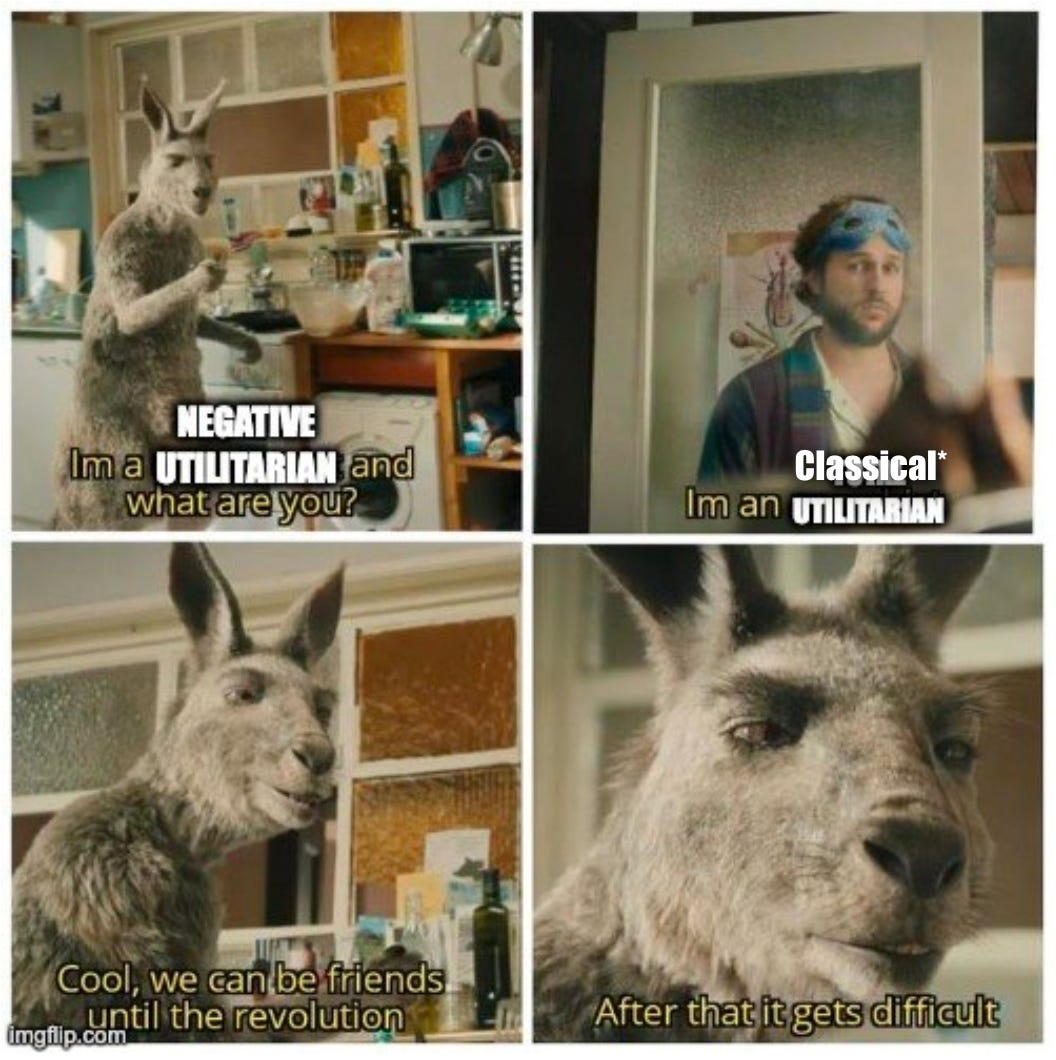

H/T Sami Mubarak via Dank EA Memes

In contrast, there are 24 billion animals alive and being raised for meat at any time
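To make the scale gap concrete, here is a minimal sketch that divides each wild population estimate listed above by the 24 billion farmed-animal figure. The numbers are only the ones quoted in this post; the names `WILD_ESTIMATES`, `ratio_to_farmed`, and `summarize` are hypothetical and exist just for this illustration. The shrimp figure is left out because it is an annual catch, not a standing population.

```python
# Standing-population estimates quoted in this post, as (low, high) pairs.
# A single point estimate or a bare lower bound repeats the same value.
WILD_ESTIMATES = {
    "mammals": (100e9, 1e12),
    "fish": (10e12, 10e12),     # "at least 10 trillion": lower bound only
    "birds": (100e9, 400e9),
    "insects": (10e18, 10e18),
}

# Animals alive and being raised for meat at any time, as quoted in this post.
FARMED_FOR_MEAT = 24e9


def ratio_to_farmed(low, high, farmed=FARMED_FOR_MEAT):
    """Return (low, high) expressed as multiples of the farmed population."""
    return low / farmed, high / farmed


def summarize(estimates=WILD_ESTIMATES):
    """Map each group name to its (low, high) ratio against farmed animals."""
    return {name: ratio_to_farmed(lo, hi) for name, (lo, hi) in estimates.items()}


if __name__ == "__main__":
    for name, (lo, hi) in summarize().items():
        print(f"{name}: {lo:,.1f}x to {hi:,.1f}x the farmed population")
```

Even the low end of the mammal range is several times the farmed population, and the insect estimate exceeds it by hundreds of millions of times.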

WAW: Nature is not already optimized for welfare

- Humans do cause wild animals to suffer (for example, polar bears suffer as their drift ice melts due to anthropogenic climate change), but suffering is more fundamentally a part of many wild animal lives

- There are many natural disasters, weather events, and hardships

- Sometimes animals have positive-sum relationships (like mutualistic symbiosis), but often they are in zero-sum conflict (like predation)

- Nature is an equilibrium of every different lineage maximizing reproductive fitness. Given that they evolved, suffering and happiness presumably exist to serve the goal of maximizing fitness, not maximizing happiness

Therefore, the welfare of wild animals is expected to be lower than it could be, and nature could, in theory, be changed to improve it.

Foundational Crucial Considerations

Should we try to affect WAW at all?

- Is taking responsibility for wild animals supererogatory?

- Do we have the right to intervene in nature?

- Can we intervene competently, as we intend, and in ways that don’t ultimately cause more harm than good?

What constitutes “welfare” for wild animals?

- What animals are sentient?

- What constitutes welfare?

- How much welfare makes life worth living?

- Negative vs. classical utilitarianism

What are acceptable levels of abstraction?

- Species-level generalizations?

- “Worth living” on what time scale?

  - A second?

  - A lifetime?

  - The run of the species?

- How to weigh intense states

- Purely affective welfare or also preference satisfaction?

How much confidence do we need to intervene?

- Should irreversible interventions be considered?

- Is it okay to intervene if the good effects outweigh some negative effects?

- Are we justified in not intervening?

- Status quo bias

- Naturalistic fallacy

Strategic Crucial Considerations

Emphasis on direct or indirect impact?

- Theory of Change: Which effects will dominate in the long run?

- Direct impact or values/moral circle expansion? (Direct impact for instrumental reasons?)

- How to evaluate impact?

Is WAW competitive with other EA cause areas?

- Should we work on WAW if there aren’t direct interventions now that are cost competitive with existing EA interventions?

- How much should EA invest in developing welfare science cause areas vs exploiting existing research?

What is the risk of acting early vs. risk of acting late?

- How long is the ideal WAW timeline?

- How much time do we have before others act?

- How long do we have before AI will take relevant actions?

How will artificial general intelligence (AGI) affect WAW? How should AI affect WAW?

- AGI could be the only way we could implement complex solutions to WAW

- AGI could also have perverse implementations of our values

- WAW value alignment problem:

  - We don’t know/agree on our own values regarding wild animals

  - We don’t know how to communicate our values to an AGI

- How do we hedge against different takeoff scenarios?

Convergence?

H/T Nathan Young via Dank EA Memes

Most views converge… in the short term

- “Field-building” for now

- Alliances with conservationists, veterinarians, poison-free advocates, etc.

- As with every other cause area, those with different suffering:happiness value ratios will want different things

- The WAW value alignment problem is fundamental, and especially troubling because we can get only limited input from the animals themselves.

- How would we know if we got the wrong answer from their perspective?

The long term future of WAW is at stake!

- WAW as a field is still young and highly malleable

- Prevent value lock-in or pick good values to get locked in

- Be transparent about sources of disagreement, separating values from empirical questions from practical questions

Acknowledgments

Thanks to the rest of the WAW team at Rethink Priorities, Will McAuliffe and Kim Cuddington, for help with brainstorming the talk this post was based on, to my practice audience at Rethink Priorities, and to subsequent audiences at University College London and the FTX Fellows office.

I practice post-publication editing and updating.

Denkenberger @ 2022-04-12T00:54 (+4)

Each year, there are 30 trillion wild-caught shrimp alone! (Rethink Priorities)

I'm not seeing the 30 trillion number in that reference - is there a direct link to the analysis? 4000 shrimp caught per person per year seems high.
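The per-person figure can be reproduced with a quick back-of-the-envelope check. This is only a sketch: the 30 trillion figure is the one quoted from the post, while the world population of roughly 7.9 billion is an assumption added here (an approximate 2022 value, not taken from the post), and `shrimp_per_person` is a hypothetical helper name.

```python
# Annual wild-caught shrimp, as quoted from the post.
SHRIMP_CAUGHT_PER_YEAR = 30e12

# Assumption: approximate 2022 world population; this number is not from the post.
WORLD_POPULATION = 7.9e9


def shrimp_per_person(shrimp=SHRIMP_CAUGHT_PER_YEAR, people=WORLD_POPULATION):
    """Return the implied number of shrimp caught per person per year."""
    return shrimp / people


if __name__ == "__main__":
    print(f"about {shrimp_per_person():,.0f} shrimp per person per year")
```

Under that population assumption the result lands a little below 4,000, consistent with the rounded figure in the comment.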

Holly_Elmore @ 2022-04-13T01:33 (+2)

Okay, so it turns out the details of how that number was estimated are still unpublished, and I'll cite them as such along with that meme Peter shared.

Good catch, once again!

Denkenberger @ 2022-04-13T03:26 (+3)

Thanks - good pun!

Holly_Elmore @ 2022-04-13T19:39 (+3)

I almost preemptively disavowed it lol

Linch @ 2022-04-14T01:51 (+2)

I still don't know what pun you guys are talking about.

Holly_Elmore @ 2022-04-14T17:31 (+4)

Good "catch"

Holly_Elmore @ 2022-04-12T16:50 (+2)

Oh shoot, you seem to be right. I must have left a link out. This is the fastest link I could find that makes reference to the Rethink Priorities findings just to give you guys some assurance: https://www.facebook.com/groups/OMfCT/posts/3060710004243897

I'll get a real one!

Charles He @ 2022-04-10T23:07 (+2)

This is an awesome post!

I want to learn more!

How will artificial general intelligence (AGI) affect WAW? How should AI affect WAW?

AGI could be the only way we could implement complex solutions to WAW

How do we hedge against different takeoff scenarios?

I guess one potential premise of this point is that AGI may have enormous perceptual and physical, real-world faculties. These faculties would include a deep understanding of complex natural systems and the ability to edit them, which could be used to reduce wild animal suffering.

Does the below seem like a useful comment?

- I think the root concern behind AI safety is overwhelming AI faculty overpowering human agency and values. Maybe this faculty will involve enormous capability (omniscient, extremely powerful machines that can edit real world ecologies). But AGI or even “ASI” doesn’t need to do this to be dangerous. It seems like it can just overpower/lock-in humans without obtaining these competencies (it doesn't even need to be AGI to be extremely dangerous).

- (I guess this involves topics like "tractability") it's unclear why humans can’t become competent and use a variety of tools, including “AI”, in prosaic ways that are pretty effective. For example, Google ads optimize for clicks pretty well and complex rules are used to fly planes or drones. The extent of these systems actually seems sort of improbable until developed. So it’s possible that relatively simple tools are sufficient to improve WAW, or at least the sophistication is orthogonal to AGI?

If the above is true, then maybe in some deep sense, safety work on AGI/ASI might be disjoint from work on WAW. So the approach to AGI that was focused on WAW might be very different?

I don’t know much about these areas though. I would like to be corrected to learn more.

Holly_Elmore @ 2022-04-11T02:58 (+2)

It seems like it can just overpower/lock-in humans without obtaining these competencies (it doesn't even need to be AGI to be extremely dangerous).

Ideally, I think WAW would consider all the different AI timelines. TAI that just increases our industrial capacity might be enough to seriously threaten wild animals if it makes us even more capable of shaping their lives and we don't have considered values about how to look out for them.

So it’s possible that relatively simple tools are sufficient to improve WAW, or at least the sophistication is orthogonal to AGI?

I agree! Personally, I don't think it's lack of intelligence per se holding us back from complex WAW intervention (by which I mean interventions that have to compensate for ripple effects on the ecosystem or require lots of active monitoring). I think we're more limited by the number of monitoring measurements we can take and our ability to deliver specific, measured intervention at specific places and times. I think we could conceivably gain this ability with hardware upgrades alone and no further improvement in algorithms.

Black Box @ 2022-06-16T05:10 (+1)

I think we're more limited by the number of monitoring measurements we can take and our ability to deliver specific, measured intervention at specific places and times.

This seems a bit surprising to me, as currently we don't even have a good understanding of biology/ecology in general, and of welfare biology in particular. (which means that we need intelligence to solve these)

So, did you mean that engineering capabilities (e.g. the monitoring measurements that you mentioned) are more of a bottleneck to WAW than theoretical understanding (into welfare biology) is? If yes, could you explain the reason?

One plausible reason I can think of: When developing WAW interventions, we could use a SpaceX-style approach, i.e. doing many small-scale experiments, iterating rapidly, and learning from tight feedback loops, in a trial-and-error manner. Is that what you had in mind?