Introduction to Longtermism

By finm @ 2021-01-27T16:01 (+80)

This is a linkpost to https://effectivealtruism.org/articles/longtermism

'Longtermism' is the view that positively influencing the long-term future is a key moral priority of our time.

Three ideas come together to suggest this view. First, future people matter. Our lives surely matter just as much as those lived thousands of years ago — so why shouldn’t the lives of people living thousands of years from now matter equally? Second, the future could be vast. Absent catastrophe, most people who will ever live have not yet been born. Third, our actions may predictably influence how well this long-term future goes. In sum, it may be our responsibility to ensure future generations get to survive and flourish.

Longtermism is not a single, fully worked-out, perspective. Instead, it's a family of views that agree on the importance of safeguarding and improving humanity’s long-term prospects. Neither is it entirely new, of course — it builds on a long history of concern for future generations.

But if the key claims of longtermism are true, this could be a very big deal. Not just in the abstract: there might be ways you could begin working on longtermist causes yourself.

The plan for this post, then, is to explain what longtermism is, why it could be important, and what some possible objections look like.

The case for longtermism

Let's start with definitions. By 'the long-run future', I mean something like the period from now to many thousands of years in the future, or much further still. I'll also associate longtermism with a couple of specific claims: first, that this moment in history could be a time of extraordinary influence over the long-run future; and second, that society currently falls far short of taking that influence seriously.

The most popular argument for longtermism appeals to the importance and size of the effects certain actions can have on the world as reasons for taking those actions. I've broken it down into these headers:

- Future people matter

- Humanity's long-term future could be enormous

- We can do things to significantly and reliably affect the long-term future

- Conclusion: positively influencing the long-term future is a key priority

Future people matter

Imagine burying broken glass in a forest. In one possible future, a child steps on the glass in 5 years' time, and hurts herself. In a different possible future, a child steps on the glass in 500 years' time, and hurts herself just as much. Longtermism begins by appreciating that both possibilities seem equally bad: why stop caring about the effects of our actions just because they take place a long time from now?

The lesson that longtermists draw is that people's lives matter, no matter when in the future they occur. In other words, the intrinsic value of harms or benefits in the far future may matter no less than the value of harms or benefits next year.

Of course, this isn't denying that there are often practical reasons to prefer focusing on the effects of our actions over the nearer-term. For instance, it's typically far easier to know how to influence the near future.

Instead, the point is that future effects don't matter less merely because they will happen a long time from now. In cases where we can bring about good lives now or in the future, we shouldn't think those future lives are less important just because they haven't yet begun.

Consider that something happening far away from us in space isn't less intrinsically bad just because it's far away. Similarly, people who live on the other side of the world aren't somehow worth less just because they're removed from us by a long plane ride. Longtermism draws an analogy between space and time.

From a moral perspective, we shouldn't discount future times

One way you might object to the above is by suggesting some kind of 'discount rate' over time for the intrinsic value of future welfare, and other things that matter. The simplest version of a social discount rate scales down the value of costs and benefits by a fixed proportion every year.

As it happens, very few moral philosophers have defended this idea. In fact, the reason governments employ a social discount rate may be more about electoral politics than ethical reflection. On the whole, voters prefer to see slightly smaller benefits soon compared to slightly larger benefits a long time from now. Plausibly, this gives governments a democratic reason to employ a social discount rate. But the question of whether governments should respond to this public opinion is separate from the question of whether that opinion is right.

Economic analyses sometimes yield surprising conclusions when a social discount rate isn't included, which is sometimes noted as a reason for using one. But longtermists could suggest that this gets things the wrong way around: since a social discount rate doesn't make much independent sense, maybe we should take those surprising recommendations more seriously.

Consider the 'burying glass in the forest' example above. If we discounted welfare by 3% every year, we would have to think that the child stepping on the glass in 500 years' time is around 2 million times less bad — which it clearly isn't. Since discounting is exponential, this shows that even a modest-seeming annual discount rate implies wildly implausible differences in intrinsic value over long enough timescales.
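As a rough check on the arithmetic above, here is a minimal Python sketch. It assumes that "discounting by 3% every year" means dividing a harm's weight by 1.03 for each year of delay; the function name `discount_factor` is made up for illustration.

```python
# Sketch: how much a constant annual discount rate shrinks the weight of a future harm.
# Assumption: discounting at rate r means dividing by (1 + r) for every year of delay.

def discount_factor(rate: float, years: int) -> float:
    """Return the factor by which a harm `years` from now is divided."""
    return (1 + rate) ** years

near = discount_factor(0.03, 5)    # child steps on the glass in 5 years
far = discount_factor(0.03, 500)   # child steps on the glass in 500 years

print(f"Harm in 5 years is divided by {near:.2f}")
print(f"Harm in 500 years is divided by {far:,.0f}")
print(f"The later harm counts {far / near:,.0f} times less than the earlier one")
```

Under that assumption, the harm in 500 years is divided by roughly 2.6 million, and it counts roughly 2.3 million times less than the harm in 5 years, which is in line with the 'around 2 million' figure. Other discounting conventions give somewhat different numbers of the same order of magnitude.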

Consider how a decision-maker from the past would have weighed our interests in applying such a discount rate. Does it sound right that a despotic ruler from the year 1500 could have justified some slow ecological disaster on the grounds that we matter millions of times less than his contemporaries?

Humanity's long-term future could be enormous

Put simply, humanity might last for an incredibly long time. If human history were a novel, we may still be living on its very first page.

Note that 'humanity' doesn't just need to mean 'the species Homo sapiens'. We're interested in how valuable the future could be, and whether we might have some say over that value. Neither of those things depends on the biological species of our descendants.

How long could our future last? To begin with, a typical mammalian species survives for an average of 500,000 years. Since Homo sapiens has been around for about 300,000 years, we might expect to have about 200,000 years left. But that definitely doesn't mean we can't survive for far longer: unlike other mammals, humans are able to anticipate and prevent potential causes of our own extinction.

We might look to the future of the Earth. Absent catastrophe, the Earth is on track to remain habitable for about another billion years (before it gets sterilised by the sun). If humanity survived for just 1% of that time, and similar numbers of people lived per century as in the recent past, then we could expect at least a quadrillion future human lives: 10,000 future people for every person who has ever lived.
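The back-of-the-envelope estimate above can be reproduced in a few lines of Python. This is only a sketch: the billion-year figure and the 1% come from the text, while the figures of 10 billion people per century and 100 billion people ever born are round-number assumptions chosen here because they are consistent with the stated result. They are not given in the post.

```python
# Sketch of the "quadrillion future lives" estimate, using integer arithmetic.
HABITABLE_YEARS = 1_000_000_000        # from the text: about another billion years
SURVIVAL_PERCENT = 1                   # from the text: humanity survives 1% of that time
PEOPLE_PER_CENTURY = 10_000_000_000    # assumption: ~10 billion lives per century
PEOPLE_EVER_LIVED = 100_000_000_000    # assumption: ~100 billion people so far

years_survived = HABITABLE_YEARS * SURVIVAL_PERCENT // 100
centuries = years_survived // 100
future_lives = centuries * PEOPLE_PER_CENTURY
lives_per_past_person = future_lives // PEOPLE_EVER_LIVED

print(f"Centuries survived: {centuries:,}")
print(f"Future lives: {future_lives:,}")
print(f"Future people per person who has ever lived: {lives_per_past_person:,}")
```

With these inputs the estimate comes to 100,000 centuries, a quadrillion (10^15) future lives, and 10,000 future people for each person who has ever lived. Changing either assumed figure scales the result proportionally.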

If we care about the overall scale of the future, duration isn't the only thing that matters. So it's worth taking seriously the possibility that humanity could choose to spread far beyond Earth. We could settle other planetary bodies, or construct vast life-sustaining structures.

If humanity becomes spacefaring, all bets are off for how expansive its future could be. The number of stars in the affectable universe, and the number of years over which their energy will be available, are (of course) astronomical.

It's right to be a bit suspicious of crudely extrapolating from past trends. But what matters is that multiple signs point towards the enormous potential (even likely) size of humanity's future.

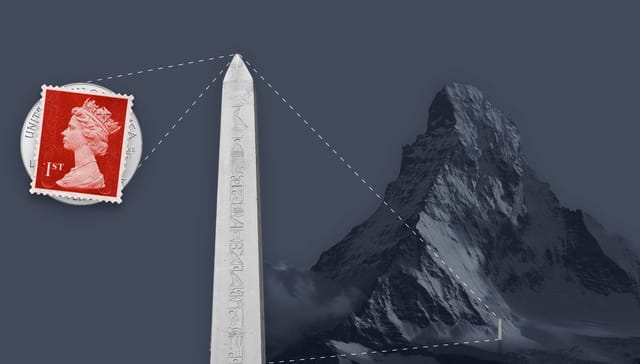

The physicist James Jeans suggested a way of picturing all this. Imagine a postage stamp on a single coin. If the thickness of the coin and stamp combined represents our lifetime as a species, then the thickness of the stamp alone represents the extent of recorded human civilisation. Now imagine placing the coin on top of a 20-metre tall obelisk. If the stamp represents the entire sweep of human civilisation, the obelisk represents the age of the Earth. Now we can consider the future. A 5-metre tree placed atop the obelisk represents the Earth's habitable future. And behind this arrangement, the height of the Matterhorn mountain represents the habitable future of the universe.

If all goes well, the long-term future could be extraordinarily good

Of course, the survival of humanity across these vast timescales is only desirable if the lives of future people are worth living. Fortunately, there are reasons to suspect that the future could be extraordinarily good. Technological progress could continue to eliminate material scarcity and improve living conditions. We have already made staggering progress: the fraction of people living in extreme poverty fell from around 90% in 1820 to less than 10% in 2015. Over the same period, child mortality fell from over 40% to less than 5%, and the share of people living in a democracy increased from less than 1% to most people in the world. Arguably it would be better to be born into a middle-class American family in the 1980s than to be born as the king of France around the year 1700. Yet, looking forward, we might expect opportunities and capabilities available to a person born in the further future which a present-day billionaire can only dream of.

The lives of nonhuman animals matter morally, and their prospects could also improve a great deal. For instance, advances in protein alternatives could come close to eliminating the world's reliance on farming sentient animals on an industrial scale.

Beyond quality of life, you might also care intrinsically about art and beauty, the reach of justice, or the pursuit of truth and knowledge for their own sakes. Along with material abundance and more basic human development, all of these things could flourish and multiply beyond levels they have ever reached before.

Of course, the world has a long way to go before it is free of severe, pressing problems like extreme poverty, disease, animal suffering, and destruction from climate change. Pointing out the scope of positive futures open to us should not mean ignoring today's problems: problems don't get fixed just by assuming someone else will fix them. In fact, fully appreciating how good things might eventually be could be an extra motivation for working on them — it means the pressing problems of our time needn't be perennial: if things go well, solving them now could come close to solving them for good.

The long-term future could be extraordinary, but that’s not guaranteed. It might instead be bad: perhaps characterised by stagnation, an especially stable kind of totalitarian political regime, or ongoing conflict. That is no reason to give up on protecting the long-term future. On the contrary, noticing that the future could be very bad should make the opportunity to improve it seem more significant — preventing future tragedies and hardship is surely just as important as making great futures more likely.

We don’t know exactly what humanity's future will look like. What matters is that the future could be extraordinarily good or inordinately bad, and it is likely vast in scope: home to most people who will ever live, and most of what we find valuable today. We've reached a point where we can say: if we could make it more likely that we do eventually achieve this potential, or if we could otherwise improve the lives of thousands of generations hence, doing so could matter enormously.

We can do things to significantly and reliably affect the long-term future

What makes the enormous potential of our future morally significant is the possibility that we might be able to influence it.

Of course, there are ways to influence the far future in predictable but insignificant ways. For example, you could carve your initials into a stone, or bury your favorite pie recipe in a time capsule. Both actions could reach people hundreds of years in the future — but they aren't likely to matter very much.

You can also influence the far future in significant but unpredictable ways. By crossing the road, you could hold up traffic for some people planning to conceive a child that day, changing which sperm cell fertilises the egg. In doing so, you will have changed the identity of all of that child's descendants, and the children of everyone they meet. In a sense, you can't help but have this kind of influence on the future every day.

However, it's less clear whether there are ways to influence the trajectory of the long-term future both significantly and predictably. And that's the important question.

One answer is climate change. We now know beyond reasonable doubt that human activity disrupts Earth's climate, and that unchecked climate change will have devastating effects. We also know that some of these effects could last a very long time, because carbon dioxide can persist in the Earth's atmosphere for tens of thousands of years. But we also have control over how much damage we cause, such as by redoubling efforts to develop green technology, or building more zero-carbon energy sources. For these reasons, longtermists have strong reasons to be concerned about climate change, and many are actively working on climate issues. Mitigating the effects of climate change is a kind of ‘proof of concept’ for positively influencing the long-run future; but there may be even better examples.

In July of 1945, the first nuclear weapon was detonated at the Trinity Site in New Mexico. For the first time, humanity had built a technology capable of destroying itself. This suggests the possibility of an existential catastrophe — an event which permanently curtails human potential, such as by causing human extinction. To prevent an existential catastrophe would be indescribably significant: it would make the difference between a potentially vast and worthwhile future, and no future at all.

Since the major risks of existential catastrophe come from human inventions, it stands to reason that there are things we can do to control those risks.

First, consider biotechnology. We've seen the damage that COVID-19 caused, and it may soon become possible to engineer pathogens to be far more deadly or transmissible than anything we've seen before. But the same breakneck pace of innovation that could pose such risks also suggests ways of mitigating them, such as by using metagenomic sequencing to detect an emerging pathogen before it goes pandemic.

Second, consider artificial intelligence. Experts in the field such as Stuart Russell are increasingly trying to warn us about the dangers of advanced AI. But just as some people conduct research to improve the capabilities of AI, we might also spend more effort researching how to make sure the arrival of transformative AI doesn't permanently derail humanity's long-run future.

And while few of us are in a place to work on these projects directly, many of us are in a position to support them indirectly, or even to shift our career toward that work.

There might be other ways to improve the long-run future more incrementally, as well as reducing the risks from catastrophes.

You might identify some moments from history where the values that prevailed were highly contingent on events within a narrow window of time, but where those values ended up influencing enormous stretches of history. For instance, the belief system of Confucianism has determined much of Chinese history, but it's one of a few belief systems (including Legalism and Daoism) which could have taken hold before the reigning emperor during the Han dynasty was persuaded to embrace Confucianism. Perhaps some of us could be in a position to help the right values prevail over the coming few centuries, such as values of cosmopolitanism and political liberalism.

This could be the most important century

There might always have been some things our predecessors could have done to improve the long-run future. But some moments in history were more influential than others. From our vantage point in time, we can see how some periods have an especially strong claim to determining the course of history in a lasting way.

So if the present day looked influential in this way, that would strengthen the case for thinking we can significantly and reliably affect the long-term future.

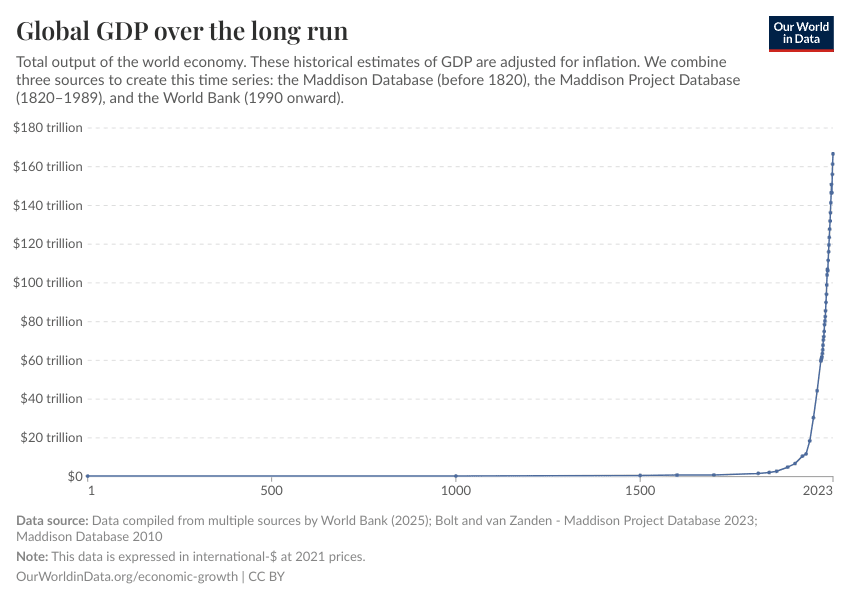

Indeed, this moment in history is far from normal. If you zoom out and look at the century we're living in against the context of human history, this point in time looks totally unprecedented. In particular, we appear to be living during a period of unsustainably rapid economic growth and technological change. And there are some indications that we might soon develop transformatively powerful AI systems — outclassing human intellect in every way that matters, and perhaps helping bootstrap a period of blisteringly fast, self-reinforcing economic growth.

For these reasons, Holden Karnofsky argues that what we do in the next few decades could turn out to be extremely influential over the long-run future. In fact, he goes further: he argues that we could be living in the most important century ever.

Conclusion: positively influencing the long-term future is a key priority

Perhaps it's already obvious, but let's spell out how this argument comes together.

We've seen reasons for thinking that future people matter; that there could be an enormous number of future people; and that there are things we can do now to improve the long-run future and ensure that future people get to live in the first place. It follows that we should take action: that we should do those things to improve the lives of future people, and that we should safeguard against catastrophes which could prevent them from ever existing.

But the thing that makes longtermism seem like a key priority is the vast potential number of future people. When we're thinking about how to do the most good, we should consider the scale of the problem we're trying to address. If two problems are equally difficult to make progress on, but the first affects one hundred people while the second affects one billion, it makes sense to strongly prioritise the second. On this kind of reasoning, projects which could improve the very long-term future look like they should be prioritised very highly, because the lives of so many people hang in the balance. In other words, the stakes look astonishingly high.

Truly taking time to appreciate what we might achieve over the long-term makes this possibility look remarkably significant: our decisions now might be felt, in a significant sense, by trillions of people yet to be born.

That said, perhaps you don't need philosophical arguments about the size of the future to see that longtermist cause areas look like urgent priorities. It's alarming enough to see how little the world is still doing about potentially catastrophic risks from nuclear weapons, engineered pandemics, or powerful and unaligned AI. On those fronts, the cumulative risk between now and even when our own grandchildren grow up looks unacceptably high. Philosophising aside, it looks like we would be crazy not to strive to reduce that level of risk.

Lastly, note that you don't need to believe that the future is likely to be overwhelmingly good to care about actions that improve its trajectory. If anything, you might think that it's more important to prevent the risk that future lives are characterised by suffering, than to improve the lives of future people who are already well-off.

Going Further

If you've read this far, you should now have a good overview of longtermism. From here, you could leave this page to investigate one of the topics that sounded especially interesting to you.

Otherwise, below I've gone into a bit more depth on different framings, definitions, and objections.

Neglectedness

The more neglected a cause is, the less effort and attention is going into it compared to its overall scale and how feasible it could be to make progress on it. Some of the causes that look hugely important by the lights of longtermism also seem highly neglected in this sense. And that might count as a reason for working on them.

For instance, the philosopher Toby Ord estimates that bioweapons, including engineered pandemics, pose about 10 times more existential risk than the combined risk from nuclear war, runaway climate change, asteroids, supervolcanoes, and naturally arising pandemics.[1]

The Biological Weapons Convention is the international body responsible for the continued prohibition of these bioweapons — and its annual budget is less than that of the average McDonald's restaurant.

If actions aimed at improving or safeguarding the long-run future are so important, why expect them to be so neglected?

One major reason is that future people don't have a voice, because they don't presently exist. For instance, women needed political representation in order to begin improving their lives, and they fought for that representation in the first place by using their voices, through protest and writing. But because future people don't exist yet, they are entirely ‘voiceless’. Yet they still deserve moral status: they can still be seriously harmed or helped by political decisions.

A second perspective comes from Peter Singer's concept of an 'expanding moral circle'. Singer describes how human society has come to recognise the interests of progressively widening circles. For much of history, we lived in small groups, and we were reluctant to help someone from a different group. But over time, our sympathies grew to include more and more people. Today, many people act to help complete strangers on the other side of the world, or restrict their diets to avoid harming animals (which would have baffled most of our earliest ancestors). Singer argues that these 'circle-widening' developments should be welcomed. Yet, expanding the moral circle to include nonhuman animals doesn't mark the last possible stage — it could expand to embrace the many billions of people, animals, and other beings yet to be born in the (long-run) future.

A third explanation for the neglectedness of future people has to do with 'externalities'. An externality is a harm or benefit of our actions that affects other people.

If we act out of self-interest, we will ignore externalities and focus on actions that benefit us personally. But this could mean ignoring actions with enormous total benefits or harms, when they mostly affect other people. If a country pollutes, it harms other countries but captures the benefits for itself. Alternatively, if a country commissioned publicly available medical research, the benefits could extend to people all over the world (a "positive externality"). But these benefits are shared, while that one country pays all the costs. Publicly accessible research is one example of a "global public good", where it might never be in the interests of a single country to invest in it, even though the overall benefits far outweigh the total costs. So we should expect countries to underinvest in global public goods as long as they're looking out for their self-interest. Conversely, countries may overinvest in activities that benefit them but impose costs on other countries.

The externalities from actions which stand to influence the long-run future not only extend to people living in other countries, but also to everyone in the future who will be affected, but who is not yet born. As such, the positive externalities from improvements to the long-run future are enormous, and so are the negative externalities from increasing risks to that future. If we think of governments as mostly self-interested actors, we shouldn't be surprised to see them neglect longtermist actions, even if the total benefits stood to hugely outweigh their total costs. Such actions aren't just global public goods, they're intergenerational global public goods.

Other motivations for longtermism

Above, I described a broadly consequentialist argument for longtermism, which emphasises the potential scale of the long-term future. In short: the future holds a vast amount of potential, and there are (plausibly) things we can do now to improve or safeguard that future. Because there could be so many future people, and we should be sensitive to scale when considering what to prioritise, we should really take seriously whether there are things we can do to positively influence the long-run future. Further, we should expect some of the best such opportunities to remain open, because improving the long-run future is a public good, and because future people are disenfranchised. Because of the enormous difference such actions can make, we should treat them as key priorities.

But you don't need to be a strict consequentialist to buy into this kind of argument, as long as you care to some degree about the expected size of the impact of your actions (e.g. how many people they are likely to affect).

That said, there are other motivations for longtermism, and especially for mitigating existential risks.

One alternative framing invokes obligations to tradition and the past. Our forebears made sacrifices in the service of projects they hoped would far outlive them — such as advancing science or opposing authoritarianism. Many things are only worth doing in light of a long future, during which people can enjoy the knowledge, progress, and freedoms you secured for posterity. No cathedrals would have been built if their architects didn't expect them to survive for centuries. As such, to fail to keep humanity on course for a flourishing long-term future might also betray those efforts from the past.

Another framing invokes 'intergenerational justice'. Just as you might think that it's unjust that so many people live in extreme poverty when so many other people enjoy outrageous wealth, you might also think that it would be unfair or unjust that people living in the far future were much worse off than us, or unable to enjoy things we take for granted. On these lines, the 'intergenerational solidarity index' measures "how much different nations provide for the wellbeing of future generations".

Finally, from an astronomical perspective, life looks to be exceptionally rare. We may very well represent the only intelligent life in our galaxy, or even the observable universe. Perhaps this confers on us a kind of 'cosmic significance' — a special responsibility to keep the flame of consciousness alive beyond this precipitous period for our species.

Other definitions and stronger versions

By 'longtermism', I had in mind the idea that we may be able to positively influence the long-run future, and that doing so should be a key moral priority of our time.

Some 'isms' are precise enough to come with a single, undisputed, definition. Others, like feminism or environmentalism, are broad enough to accommodate many (often partially conflicting) definitions. I think longtermism is more like environmentalism in this sense. But it could still be helpful to consider some more precise definitions.

The philosopher William MacAskill suggests the following —

Longtermism is the view that: (i) Those who live at future times matter just as much, morally, as those who live today; (ii) Society currently privileges those who live today above those who will live in the future; and (iii) We should take action to rectify that, and help ensure the long-run future goes well.

This means that longtermism would become false if society ever stopped privileging present lives over future lives.

Other minimal definitions don't depend on changeable facts about society. Hilary Greaves suggests something like —

The view that the (intrinsic) value of an outcome is the same no matter what time it occurs.

If that were true today, it would be true always.

Some versions of longtermism go further than the above, and make some claim about the high relative importance of influencing the long-run future over other ways we might improve the world.

Nick Beckstead's 'On the Overwhelming Importance of Shaping the Far Future' is one of the first serious discussions of longtermism. His thesis:

From a global perspective, what matters most (in expectation) is that we do what is best (in expectation) for the general trajectory along which our descendants develop over the coming millions, billions, and trillions of years.

More recently, Hilary Greaves and Will MacAskill have made a tentative case for what they call 'strong longtermism'.

Axiological strong longtermism: In the most important decision situations facing agents today, (i) Every option that is near-best overall is near-best for the far future; and (ii) Every option that is near-best overall delivers much larger benefits in the far future than in the near future.

Deontic strong longtermism: In the most important decision situations facing agents today, (i) One ought to choose an option that is near-best for the far future; and (ii) One ought to choose an option that delivers much larger benefits in the far future than in the near future.[2]

The idea being expressed is something like this: in cases such as figuring out what to do with your career, or where to spend money to make a positive difference, you'll often have a lot of choices. Perhaps surprisingly, the best options are pretty much always among the (relatively few) options directed at making the long-run future go better. Indeed, the reason they are the best options overall is almost always because they stand to improve the far future by so much.

It's useful to lay out what the strongest versions of some view could look like, but please note that you do not have to agree with 'strong longtermism' in order to agree with longtermism! Similarly, it's perfectly fine to call yourself a feminist without buying into a single, precise, and strong statement of what feminism is supposed to be.

Objections

There are many pressing problems that affect people now, and we have a lot of robust evidence about how best to address them. In many cases, those solutions also turn out to be remarkably cost-effective. Longtermism holds that other actions may be just as good, if not better, because they stand to influence the very long-run future. By their nature, these activities are backed by less robust evidence, and primarily protect the interests of people who don't yet exist. Could these more uncertain, future-focused activities really be the best option for someone who wants to do good?

If the case for longtermism is to succeed, it must face up to a number of objections.

Person-affecting views

'Person-affecting' moral views try to capture the intuition that an act can only be good or bad if it is good or bad for someone. In particular, many people think that while it may be good to make somebody happier, it can't be as good to literally make an extra (equivalently) happy person. Person-affecting views make sense of that intuition: making an extra happy person doesn't benefit that person, because in the case where you didn't make them, they don't exist, so there's no person who could have benefited from being created.

One upshot of person-affecting views is that failing to bring about the vast number of people which the future could contain isn't the kind of tragedy which longtermism makes it out to be, because there's nobody to complain of not having been created in the case where you don't create them — nobody is made worse-off. Failing to bring about those lives would not be morally comparable to ending the lives of an equivalent number of actually existing people; rather, it is an entirely victimless crime.

Person-affecting views have another, subtler, consequence. In comparing the longer-run effects of some options, you will be considering their effects on people who are not yet born. But it's almost certain that the identities of these future people will be different between options. That's because the identity of a person depends on their genetic material, which depends (among other things) on the result of a race between tens of millions of sperm cells. So basically anything you do today is likely to have 'ripple effects' which change the identities of nearly everyone on Earth born after, say, 2050.

Assuming the identity of nearly every future person is different between options, then it cannot be said of almost any future person that one option is better or worse than the other for that person. But again, person-affecting views claim that acts can only be good or bad if they are good or bad for particular people. As a result, they find it harder to explain why some acts are much better than others with respect to the long-run future.

Perhaps this is a weakness of person-affecting views — or perhaps it reflects a real complication with claims about 'positively influencing' the long-run future. To give an alternative to person-affecting views, the longtermist still needs to explain how some futures are better than others, when the outcomes contain totally different people. And it's not entirely straightforward to explain how one outcome can be better, if it's better for nobody in particular!

Risk aversion and recklessness

Many efforts at improving the long-run future are highly uncertain. Yet some longtermists suggest they are still worth trying, because their expected value looks extremely high (the chance of the efforts working may be small, but they would make a huge difference if they did). This is especially relevant for existential risks, where longtermists argue it may be very important to divert efforts towards mitigating those risks, even when many of them may be very unlikely to transpire anyway. When the probabilities involved are small enough, a natural response is to call this 'reckless'[3]: it seems like something may have gone wrong when what we ought to do is determined by outcomes with very low probabilities.
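To make the structure of the expected-value argument concrete, here is a minimal sketch. The probabilities and payoffs are hypothetical numbers chosen only for illustration (they do not come from any estimate in this post), and `expected_value` is just a helper name used here.

```python
def expected_value(probability: float, payoff: float) -> float:
    """Expected value of an option that pays `payoff` with `probability`, and nothing otherwise."""
    return probability * payoff


# Hypothetical, illustrative numbers only.
safe_bet = expected_value(probability=0.9, payoff=1_000)        # reliable, modest benefit
long_shot = expected_value(probability=1e-6, payoff=10**12)     # very unlikely, enormous benefit

# The long shot wins on expected value even though it almost certainly achieves nothing.
print(f"safe bet:  {safe_bet:,.0f}")
print(f"long shot: {long_shot:,.0f}")
print("long shot has higher expected value:", long_shot > safe_bet)
```

The 'recklessness' worry is precisely that the comparison is settled by the one-in-a-million branch: shrinking the probability further while growing the payoff keeps the long shot ahead.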

Demandingness

Some critics point out that if we took strong versions of longtermism seriously, we would end up morally obligated to make sacrifices, and radically reorient our priorities. For instance, we might forgo economic gains from risky technologies in order to build and implement ways of making them safer. On this line of criticism, the sacrifices demanded of us by longtermism are unreasonably big.

There are some difficult hypothetical questions about precisely how much a society should be prepared to sacrifice to ensure its own survival, or look after the interests of future members. But one reasonable response may be to point out that we seem to be very far away from having to make significant near-term sacrifices. As the philosopher Toby Ord points out, "we can state with confidence that humanity spends more on ice cream every year than on ensuring that the technologies we develop do not destroy us". Meeting the 'ice cream bar' for spending wouldn't be objectionably demanding at all, but we're not even there yet.

Epistemic uncertainty: do we even know what to do?

If we were totally confident about the long-run effects of our actions, then the case for longtermism would be far clearer. However, in the real world, we're often close to clueless. And sometimes we're clueless in especially 'complex' ways — when there are reasons to expect our actions to have systematic effects on the long-run future, but it's very hard to know whether those effects are likely to be good or bad.

Perhaps it's so hard to predict long-run effects that the expected value of our options actually isn't much determined by the long-run future at all.

Relatedly, perhaps we can sometimes be confident that it's just far too difficult to reliably influence the long-run future in a positive way, making it better to focus on present-day impact.

Alexander Berger, co-CEO of Open Philanthropy, makes the point another way:

"When I think about the recommended interventions or practices for longtermists, I feel like they either quickly become pretty anodyne, or it’s quite hard to make the case that they are robustly good".

One way of categorising longtermist interventions is to distinguish between 'broad' and 'targeted' approaches. Broad approaches focus on improving the world in ways that seem robustly good for the long-run future, such as reducing the likelihood of a conflict between great powers. Targeted approaches zoom in on more specific points of influence, such as by focusing on technical research to make sure the transition to transformative artificial intelligence goes well. On one hand, Berger is saying that you don't especially need the entire longtermist worldview to see that 'broad' interventions are sensible things to do. On the other hand, the more targeted interventions often look like they're focusing on fairly speculative scenarios — we might need to be correct on a long list of philosophical and empirical guesses for such interventions to end up being important.

Longtermists themselves are especially concerned with this kind of worry. How might they respond?

- You could say that because longtermism is a claim about what's best to do on the margin, it only requires that we can identify some cases where the long-run effects of our actions are predictably good — there's no assumption about how predictable long-run effects are in general.

- Alternatively, you could concede that we are currently very uncertain about how concretely to influence the long-run future, but this doesn't mean giving up. Instead, it could mean turning our efforts towards becoming less uncertain, by doing empirical and theoretical research.

Political risks

Some people worry that advocating for “seriousness about the enormous potential stakes” of the long-term future could go wrong if not communicated carefully. In particular, this "high stakes" worldview could cause us to care less than we should about immediate pressing problems, like people living in severe poverty today. Alternatively, others might use the ideas of longtermism to justify curtailing political freedoms.[4]

The liberal philosopher Isaiah Berlin pithily summarises this kind of argument:

To make mankind just and happy and creative and harmonious forever - what could be too high a price to pay for that? To make such an omelette, there is surely no limit to the number of eggs that should be broken.

One response is to note that much discussion within longtermism is focused on reducing the likelihood of great power conflict, improving institutional decision-making, and spreading good (liberal) political norms: in other words, securing an open society for our descendants.

But perhaps it would be too arrogant to entirely ignore the worry about longtermist ideas being used to justify political harms in the future, if those ideas end up getting twisted or misunderstood. We know that even very noble aspirations can eventually transform into terrible consequences in the hands of normal, fallible people. If that worry were legitimate, it would be very important to handle the idea of longtermism with care.

Conclusion

Arthur C. Clarke once wrote:

“Two possibilities exist: either we are alone in the Universe or we are not. Both are equally terrifying”.

Similarly, one of two things will happen over the long-run future: either humanity flourishes far into the future, or it does not.

These are both very big possibilities to comprehend. We rarely do it, but taking them both seriously makes clear the importance of trying to safeguard and improve humanity's long-run future today. Our point in history suggests this project may be a key priority of our time — and there is much more to learn.

Further Reading

- Longtermism.com (includes an FAQ and a reading list)

- Will MacAskill | What we owe the future (40-minute presentation)

- Against the Social Discount Rate — Tyler Cowen and Derek Parfit

- On the Overwhelming Importance of Shaping the Far Future — Nick Beckstead

- The Case for Strong Longtermism — Will MacAskill and Hilary Greaves

- Reasons and Persons — Derek Parfit

- Future generations and their moral significance — 80,000 Hours

- 'Longtermism' (EA Forum post) — Will MacAskill

- Orienting towards the long-term future — Joseph Carlsmith (talk and Q&A)

- The Epistemic Challenge to Longtermism — Christian J. Tarsney

- Formalising the "Washing Out Hypothesis" — Duncan Webb

- Alexander Berger on the case for working on global health and wellbeing instead of longtermist cause areas

[1] Toby Ord, The Precipice (2020), p. 167, Table 6.1.

[2] Roughly speaking, 'axiology' has to do with what's best to do, and 'deontology' has to do with what you should do. That's why Greaves and MacAskill give two versions of strong longtermism: it could be the case that actions which stand to influence the very long-run future are best in terms of their expected outcomes, but not the case that we should always do them (because you might think this is unreasonably demanding).

[3] For more on this, see this working paper from Beckstead and Thomas (2021), 'A paradox for tiny possibilities and enormous values'.

[4] The philosopher Nick Bostrom describes a relevant scenario in his 2019 paper 'The Vulnerable World Hypothesis'.

AGB @ 2021-01-30T12:55 (+8)

Thank you for this! This is not the kind of post that I expect to generate much discussion, since it's relatively uncontroversial in this venue, but is the kind of thing I expect to point people to in future.

I want to particularly draw attention to a pair of related quotes partway through your piece:

I've tried explaining the case for longtermism in a way that is relatively free of jargon. I've argued for a fairly minimal version — that we may be able to influence the long-run future, and that aiming to achieve this is extremely good and important. The more precise versions of longtermism from the proper philosophical literature tend to go further than this, making some claim about the high relative importance of influencing the long-run future over other kinds of morally relevant activities.

***

Please note that you do not have to buy into strong longtermism in order to buy into longtermism!

Davidmanheim @ 2021-02-04T14:09 (+7)

This is great. I'd note, regarding your discussion of risk aversion and recklessness, that our (very) recent paper disputes (and I would claim refutes) the claim that you can justify putting infinities in the calculation.

See the forum post on the topic here: https://forum.effectivealtruism.org/posts/m65rH3D3pfJzsBMfW/the-upper-limit-of-value

weeatquince @ 2021-02-07T11:38 (+2)

Great introduction. Strongly upvoted. It is really good to see stuff written up clearly. Well done!!!

To add some points on discounting. This is not to disagree with you but to add some nuance onto a topic it is useful for people to understand. Governments (or at least the UK government) apply discount rates for three reasons:

- Firstly, pure time discounting of roughly 0.5%, as people want things now rather than in the future. This is what you seem to be talking about here when you talk about discounting. Interestingly, this is not done for electoral politics (the discount rate is not a big political issue) but because the literature on the topic has numbers ranging from 0% to 1%, so the government (which listens to experts) goes for 0.5%.

- Secondly, catastrophic risk discounting of 1% to account for the fact that a major catastrophic risk could make a project's value worthless, eg earthquakes could destroy the new hospital, social unrest could ruin a social program's success, etc.

- Thirdly, wealth discounting of 2%, to account for the fact that the future will be richer so transferring wealth from now to the future has a cost. This does not apply to harms such as loss of life.

Ultimately it is only the first of these that longtermists and philosophers tend to disagree with. The others may still be valid to longtermists.

For example, if you were to estimate there is a background basically unavoidable existential risk rate of 0.1% (as the UK government Stern Review discount rate suggests) then basically all the value of your actions (99.5%) is eroded after 5000 years and arguably it could be not worth thinking beyond that timeframe. There are good counter-considerations to this, not trying to start a debate here, just explain how folk outside the longtermist community apply discounting and how it might reasonably apply to longtermists' decisions.
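The arithmetic behind that example can be checked with a short sketch. It assumes the 0.1% figure is an annual rate that stays constant and compounds year on year; the function names are just illustrative helpers.

```python
import math


def surviving_fraction(annual_risk: float, years: float) -> float:
    """Fraction of value left after `years`, if each year carries a constant `annual_risk` of total loss."""
    return (1.0 - annual_risk) ** years


def years_until_fraction(annual_risk: float, fraction: float) -> float:
    """Number of years after which only `fraction` of the value is left."""
    return math.log(fraction) / math.log(1.0 - annual_risk)


# Assumption: 0.1% per year, held constant for 5000 years.
remaining = surviving_fraction(annual_risk=0.001, years=5000)
print(f"remaining after 5000 years: {remaining:.2%}")
print(f"eroded after 5000 years:    {1 - remaining:.2%}")
print(f"years until 0.5% is left:   {years_until_fraction(0.001, 0.005):.0f}")
```

Under these assumptions a little over 99% of the value is eroded after 5000 years, which is in the same ballpark as the figure quoted above.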

jackmalde @ 2021-02-07T19:48 (+3)

For example, if you were to estimate there is a background basically unavoidable existential risk rate of 0.1% (as the UK government Stern Review discount rate suggests) then basically all the value of your actions (99.5%) is eroded after 5000 years and arguably it could be not worth thinking beyond that timeframe.

Worth bearing in mind that uncertainty over the discount rate leads to you applying a discount rate that declines over time (Weitzman 1998). This avoids conclusions that say we can completely ignore the far future.

weeatquince @ 2021-02-08T01:46 (+2)

Yes that is true and a good point. I think I would expect a very small non-zero discount rate to be reasonable, although still not sure what relevance this has to longtermism arguments.

jackmalde @ 2021-02-08T08:26 (+2)

Appendix A in The Precipice by Toby Ord has a good discussion on discounting and its implications for reducing existential risk. Firstly he says discounting on the basis of humanity being richer in the future is irrelevant because we are talking about actually having some sort of future, which isn't a monetary benefit that is subject to diminishing marginal utility. Note that this argument may apply to a wide range of longtermist interventions (including non-x-risk ones).

Ord also sets the rate of pure time preference equal to zero for the regular reasons.

That leaves him with just discounting for the catastrophe rate which he says is reasonable. However, he also says that it is quite possible that we might be able to reduce catastrophic risk over time. This leads us to be uncertain about the catastrophe rate in the future meaning, as mentioned, that we should have a declining discount rate over time and that we should actually discount the longterm future as if we were in the safest world among those we find plausible. Therefore longtermism manages to go through, provided we have all the other requirements (future is vast in expectation, there are tractable ways to influence the long-run future etc.).

Ord also points out that reducing x-risk will reduce the discount rate (through reducing the catastrophe rate) which can then lead to increasing returns on longtermist work.

I guess the summary of all this is that discounting doesn't seem to invalidate longtermism or even strong longtermism, although discounting for the catastrophe rate is relevant and does reduce its bite at least to some extent.

weeatquince @ 2021-02-08T10:30 (+2)

Thank you Jack very useful. Thank you for the reading suggestion too. Some more thoughts from me

"Discounting for the catastrophe rate" should also include discounting for sudden positive windfalls or other successes that make current actions less useful. Eg if we find out that the universe is populated by benevolent intelligent non-human life anyway, or if a future unexpected invention suddenly solves societal problems, etc.

There should also be an internal project discount rate (not mentioned in my original comment). So the general discount rate (discussed above) applies after you have discounted the project you are currently working on for the chance that the project itself becomes of no value – capturing internal project risks or windfalls, as opposed to catastrophic risk or windfalls.

I am not sure I get the point about "discount the longterm future as if we were in the safest world among those we find plausible".

I don’t think any of this (on its own) invalidates the case for longtermism but I do expect it to be relevant to thinking through how longtermists make decisions.

jackmalde @ 2021-02-08T19:36 (+1)

I am not sure I get the point about "discount the longterm future as if we were in the safest world among those we find plausible".

I think this is just what is known as Weitzman discounting. From Greaves' paper Discounting for Public Policy:

In a seminal article, Weitzman (1998) claimed that the correct results [when uncertain about the discount rate] are given by using an effective discount factor for any given time t that is the probability-weighted average of the various possible values for the true discount factor R(t): Reff(t) = E[R(t)]. From this premise, it is easy to deduce, given the exponential relationship between discount rates and discount factors, that if the various possible true discount rates are constant, the effective discount rate declines over time, tending to its lowest possible value in the limit t → ∞.

This video attempts to explain this in an excel spreadsheet.
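For readers who prefer code to a spreadsheet, here is a minimal sketch of the same idea. The two candidate rates and their 50/50 weights are hypothetical numbers chosen for illustration, and `effective_discount_rate` is an illustrative helper that reports the average effective rate between now and time t under continuous discounting.

```python
import math


def effective_discount_rate(rates, probabilities, t):
    """Constant rate that would reproduce the expected discount factor E[R(t)] at time t."""
    expected_factor = sum(p * math.exp(-r * t) for r, p in zip(rates, probabilities))
    return -math.log(expected_factor) / t


# Hypothetical uncertainty: the true constant rate is either 0.1% or 1% per year.
rates = [0.001, 0.01]
probabilities = [0.5, 0.5]

for t in (1, 100, 1000, 10000):
    rate = effective_discount_rate(rates, probabilities, t)
    print(f"t = {t:>5} years: effective rate = {rate:.4%}")
```

At short horizons the effective rate sits near the average of the two candidates. At long horizons the high-rate scenario contributes almost nothing to the expected discount factor, so the effective rate falls towards the lowest candidate, which is the sense in which we discount "as if we were in the safest world among those we find plausible".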

weeatquince @ 2021-02-08T20:28 (+2)

Makes sense. Thanks Jack.

weeatquince @ 2021-02-07T11:51 (+2)

To add a more opinionated less factual point, as someone who researches and advises policymakers on how to think and make long-term decisions, I tend to be somewhat disappointed by the extent to which the longtermist community lacks discussions and understanding of how long-term decision making is done in practice. I guess, if worded strongly, this could be worded as an additional community-level objection to longtermism along the lines of:

Objection: The longtermist idea makes quite strong somewhat counterintuitive claims about how to do good but the longtermist community has not yet demonstrated appropriately strong intellectual rigour (other than in the field of philosophy) about these claims and what they mean in practice. Individuals should therefore be sceptical of the claims of longtermists about how to do good.

If worded more politely the objection would basically be that the ideas of longtermism are very new and somewhat untested and may still change significantly so we should be super cautious about adopting the conclusions of longtermists for a while longer.

finm @ 2021-02-08T08:22 (+1)

Thanks so much for both these comments! I definitely missed some important detail there.

jackmalde @ 2021-02-07T19:58 (+1)

Objection: The longtermist idea makes quite strong somewhat counterintuitive claims about how to do good but the longtermist community has not yet demonstrated appropriately strong intellectual rigour (other than in the field of philosophy) about these claims and what they mean in practice. Individuals should therefore be sceptical of the claims of longtermists about how to do good.

Do you think there are any counterexamples to this? For example certain actions to reduce x-risk?

weeatquince @ 2021-02-08T01:33 (+2)

I guess some of the 'AI will be transformative and therefore deserves attention' arguments are some of the oldest and most generally accepted within this space.

For various reasons I think the arguments for focusing on x-risk are much stronger than other longtermist arguments, but how best to do this, what x-risks to focus on, etc, is all still new and somewhat uncertain.