Émile P. Torres’s history of dishonesty and harassment

By anonymous-for-obvious-reasons @ 2024-05-01T12:47 (+249)

This is a cross-post and you can see the original here, written in 2022. I am not the original author, but I thought it was good for more EAs to know about this.

I am posting anonymously for obvious reasons, but I am a longstanding EA who is concerned about Torres's effects on our community.

An incomplete summary

Introduction

This post compiles evidence that Émile P. Torres, a philosophy student at Leibniz Universität Hannover in Germany, has a long pattern of concerning behavior, which includes gross distortion and falsification, persistent harassment, and the creation of fake identities.

Note: Since Torres has recently claimed that they have been the target of threats from anonymous accounts, I would like to state that I condemn any threatening behavior in the strongest terms possible, and that I have never contacted Torres or posted anything about Torres other than in this Substack or my Twitter account. I have no idea who is behind these accounts.

To respect Torres's privacy and identity, I have also omitted their first name from the screenshots and replaced their previous first name with 'Émile'.

Table of contents

Demonstrable falsehoods and gross distortions

Brief digression on effective altruism

More falsehoods and distortions

My story

Before I discuss Torres’s behavior, I will provide some background about myself and my association with effective altruism (EA). I hope this information will help readers decide what biases I may have and subject my arguments to the appropriate degree of critical scrutiny.

I first heard about EA upon attending Aaron Swartz’s memorial in January 2013. One of the speakers at that event was Holden Karnofsky, co-founder of GiveWell, a charity evaluator for which Aaron had volunteered. Karnofsky described Aaron as someone who “believed in trying to maximize the good he accomplished with each minute he had.” I resonated with that phrase, and in conversation with some friends after the memorial, I learned that Aaron’s approach, and GiveWell’s, were examples of what was, at the time, a new movement called “effective altruism.”

Despite my sympathy for EA, I never got very involved with it, due to a combination of introversion and the sense that I hadn't much to offer. I have donated a small fraction of my income to the Against Malaria Foundation for the last nine years, but I have never taken the Giving What We Can pledge, participated in a local EA group, or volunteered or worked for an EA organization.

I decided to write this article after a friend forwarded me one of Torres’s critical pieces on longtermism. I knew enough about this movement –– which emerged out of EA –– to quickly identify some falsehoods and misrepresentations in Torres’s polemic. So I was surprised to find, when I checked the comments on Twitter, that no one else was pointing out these errors. A few weeks later, I discovered that this was just one of a growing number of articles by Torres that attacked these ideas and their proponents. Since I also noticed several factual inaccuracies in these other publications, I got curious and decided to look into Torres’s writings more closely.

I began to follow Torres's Twitter presence with interest and to investigate older Twitter feuds that Torres occasionally references. After looking into these and systematically checking the sources Torres cites in support of their various allegations, I found Torres’s behavior much more troubling than I had expected. Specifically, I learned that Torres had not only misrepresented and distorted many people's views and made various other demonstrably false claims, but had also engaged in stalking, harassment, and sockpuppetry.

My original plan was to spend a day or two examining this material, but the project took more than a week of my spare time. Furthermore, the initial draft was about 60% longer than the article you are now reading. To publish what I ultimately decided to leave out would have taken a lot more time and effort, and I currently have a limited budget for these side projects.

I tried to document every claim in the article rigorously. If you spot any mistakes, however, please let me know, anonymously or otherwise, using this form. You are also welcome to contact me if you have additional evidence corroborating my findings or other documented examples of concerning behavior by Torres. Please note that I have a real life and a real job, and that I may be unable to respond to your messages or suggestions.

In what follows, I discuss:

How Torres harassed and stalked multiple people, and made racist comments about at least one person.

How Torres repeatedly made demonstrably false claims.

How Torres grossly distorted the views of several people.

How Torres created multiple fake accounts to harass their targets, evade bans, and discredit their opponents.

Stalking and harassment

Peter Boghossian

For much of the 2010s, Torres was heavily involved with New Atheism and was close with several prominent New Atheist figures, including Peter Boghossian, back then a professor of philosophy at Portland State University. In 2016, for example, Torres published a Time magazine article with Boghossian and James Lindsay, entitled “How to Fight Extremism with Atheism.”

In a pattern that has repeated itself with several people Torres was friends with and communities Torres was part of, around late 2017 Torres switched from regarding Boghossian as a “brilliant” scholar to the sort of person who “defends Nazis.”

(It is beyond the scope of this article to examine Torres's violent "sign reversals" in their assessment of people and communities. But it is striking how often these shifts in opinion appear, upon closer inspection, to be triggered by Torres experiencing a feeling of rejection, such as being denied a job, not being invited to a podcast, or having a book collaboration terminated. Torres’s subsequent “realization” that these people and communities, once held in such high esteem, were in fact profoundly evil or dangerous routinely comes after those personal setbacks, as a post hoc rationalization. A future article may explore these incidents, which shed so much light on Torres’s character and motives, in much greater detail.)

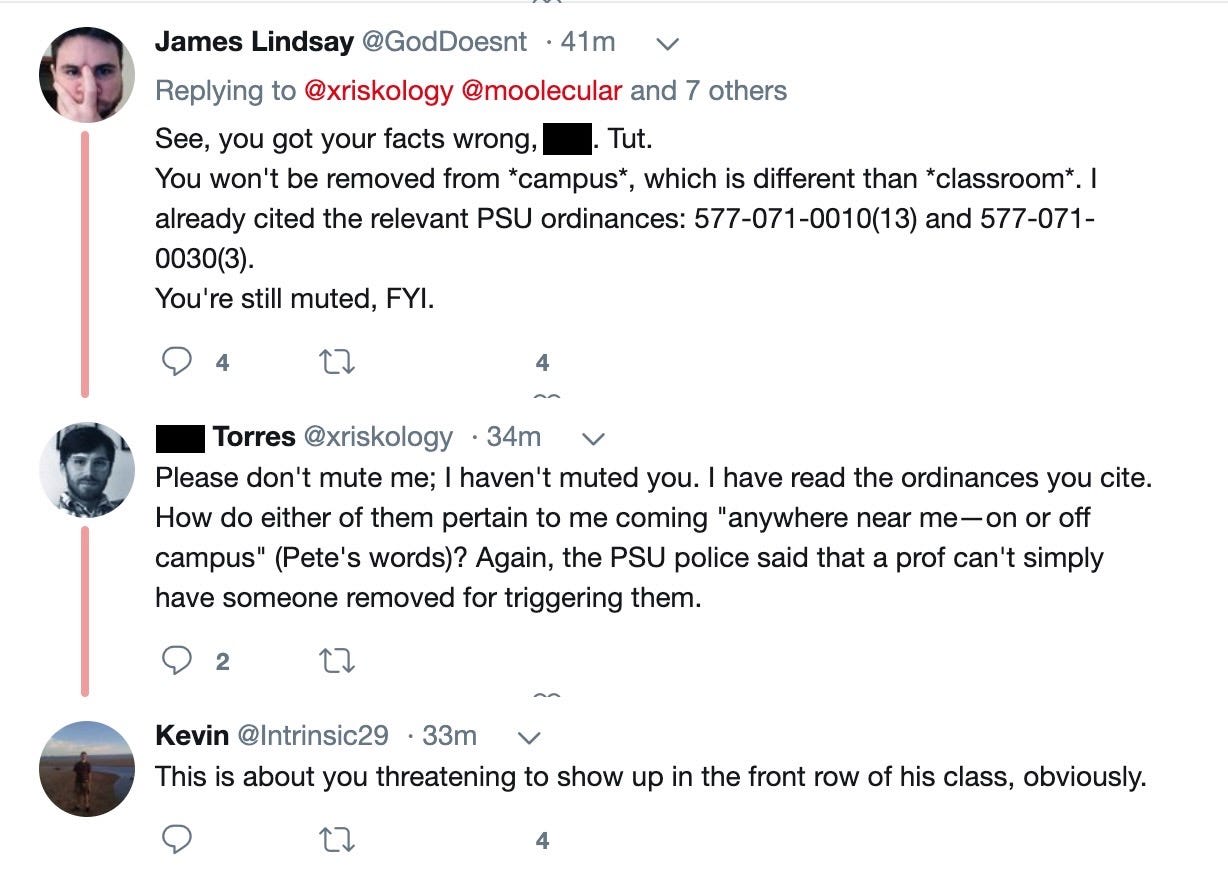

In February 2018, when Boghossian tweeted about a seminar he would teach, Torres replied with a link to an unrelated Buzzfeed story, prompting Boghossian to block Torres. Torres responded by creating a second account and leaving further replies to Boghossian, who also blocked the second account. Astonishingly, Torres then made a third account to further evade Boghossian’s block and leave even more comments.

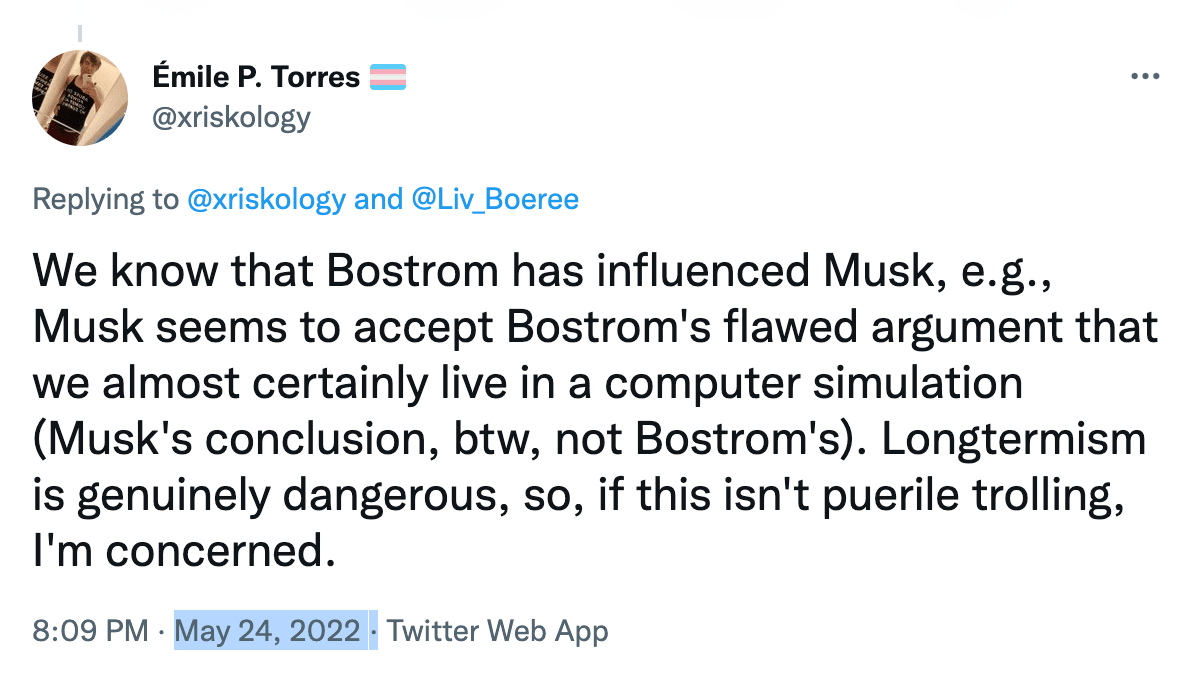

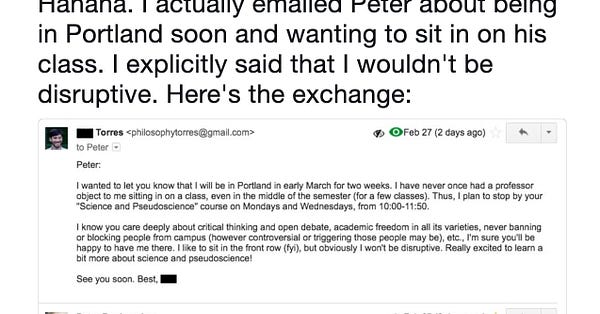

At the same time as Torres was harassing Boghossian and creating these accounts, they were also tweeting about Boghossian from their main account. In a disturbing tweet, Torres gloats about having informed Boghossian that they would visit Portland and sit in the front row of his class:

Torres then contacted Portland State University (without disclosing that they had been stalking Boghossian) with the apparent intention of going ahead with their plan.

When confronted by a user, Torres even admitted that Boghossian was justified in perceiving their email as threatening:

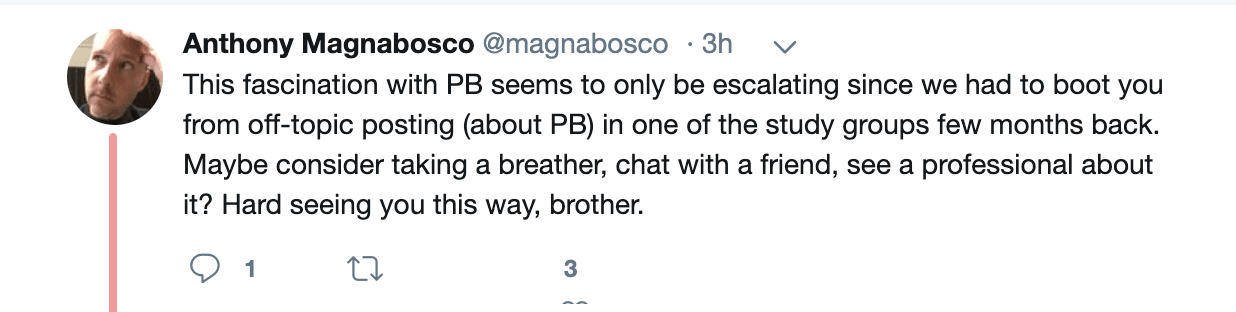

Aside from harassing Boghossian, Torres inundated various Facebook groups with posts about Boghossian unrelated to the groups’ purposes. People who appeared to be on good terms with Torres began to worry about their mental health:

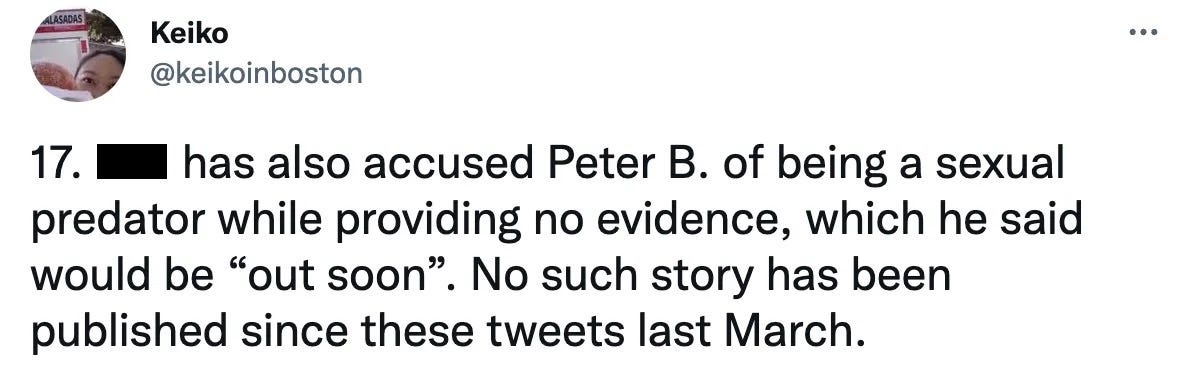

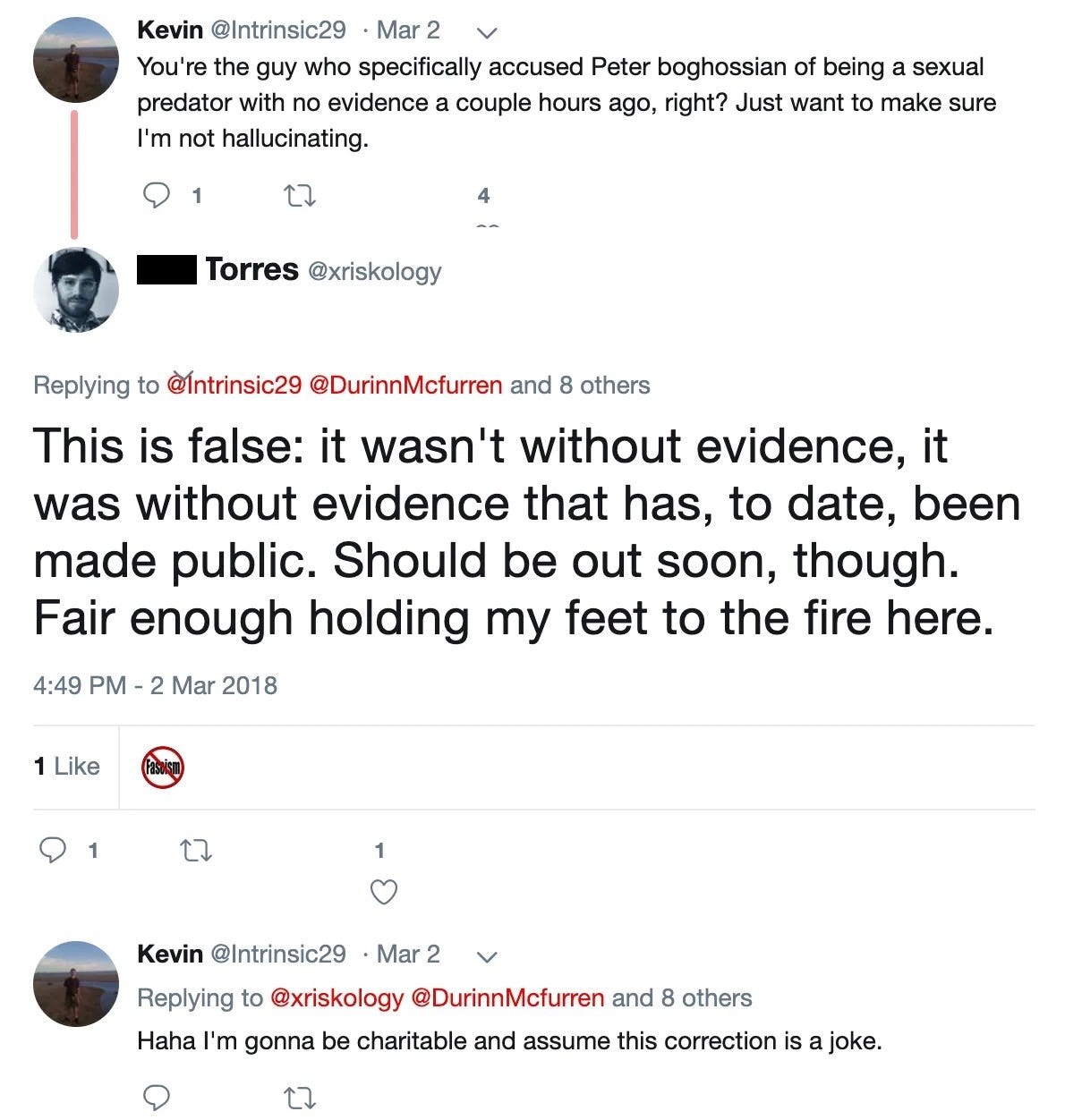

Torres also accused Boghossian of being a sexual predator:

When questioned by a user, Torres explained that the evidence “should be out soon.”

This was in March 2018. Five years on, they have yet to deliver on that promise.

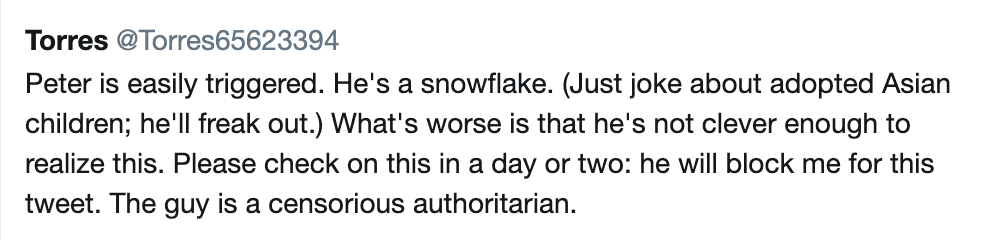

Another disturbing incident occurred when, from one of their secondary accounts, Torres urged Boghossian’s followers to “freak [Boghossian] out” by “jok[ing] about adopted Asian children”:

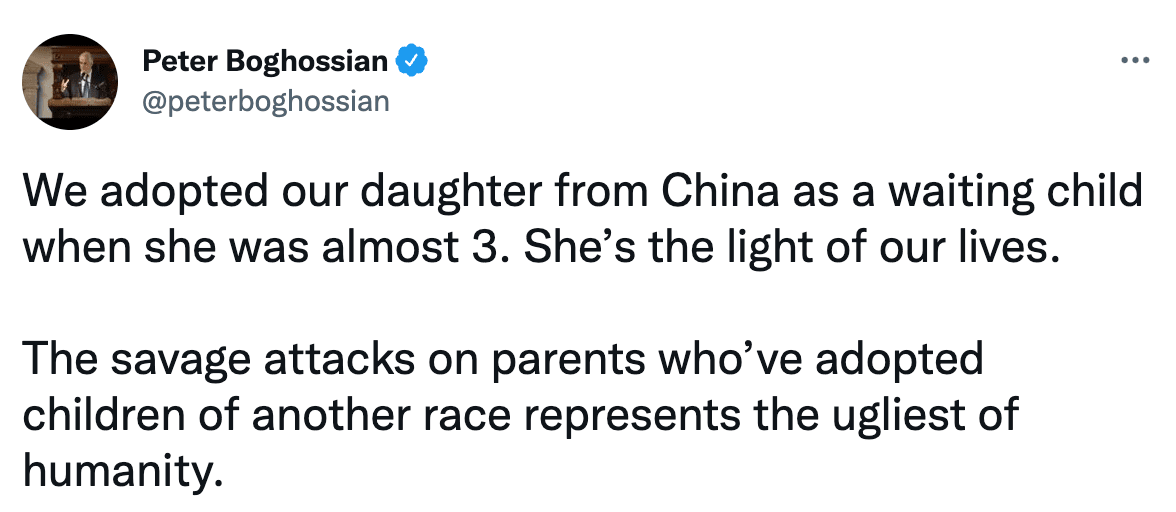

The reference to “adopted Asian children” is Torres’s way of alluding to Boghossian’s daughter:

I don’t like to accuse people of racism lightly, but the accusation seems appropriate in this case. Even if Torres had intended to provoke Boghossian by encouraging his followers to ask him about his daughter, the reference to her race seems completely gratuitous. Consider what the reaction would have been if Torres had joked about “adopted Black children.”

[Update: Torres silently deleted the racist tweet after this post was published. An archived copy of it has been preserved here.]

In sum, there is clear evidence that Torres did the following: began posting obsessively about Boghossian and being openly hostile; in this context, threateningly told Boghossian he was going to show up at his class; laughed about how this made Boghossian uncomfortable; referenced Boghossian's daughter as “adopted Asian children”; and encouraged their followers to “freak [Boghossian] out” by messaging him about his daughter.

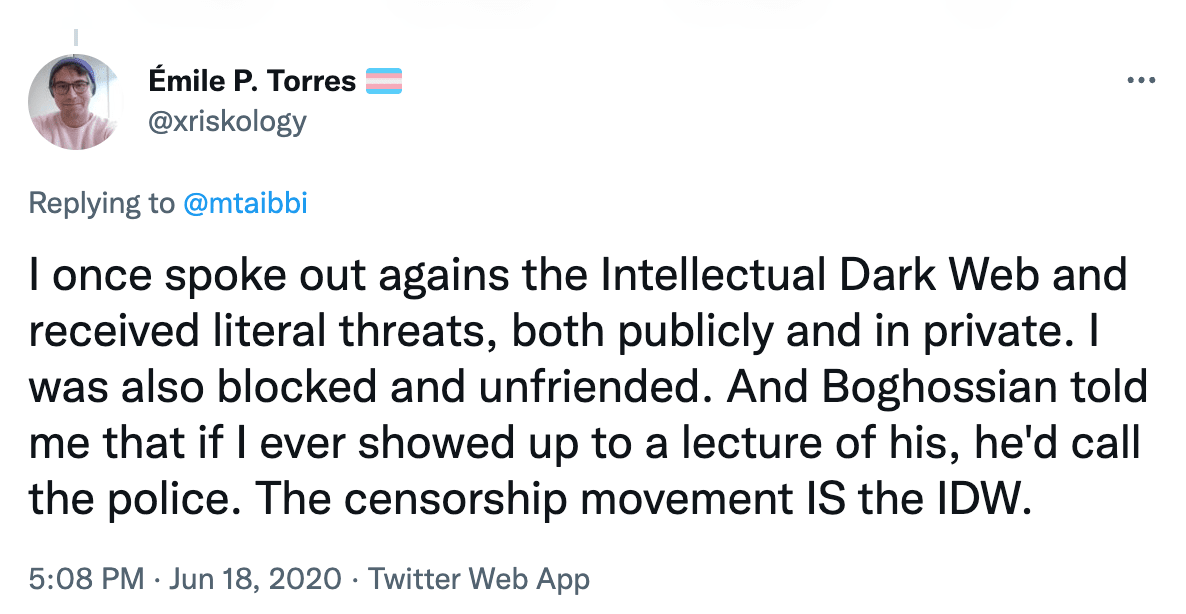

Yet, in recounting this incident years later, Torres makes it sound like Boghossian told Torres that he'd call the police in response to Torres's criticisms. There is no mention of the persistent harassment, the multiple accounts, or the incitement to “freak [Boghossian] out” by “jok[ing] about adopted Asian children.”

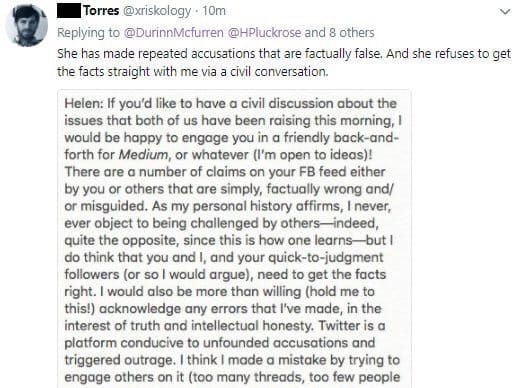

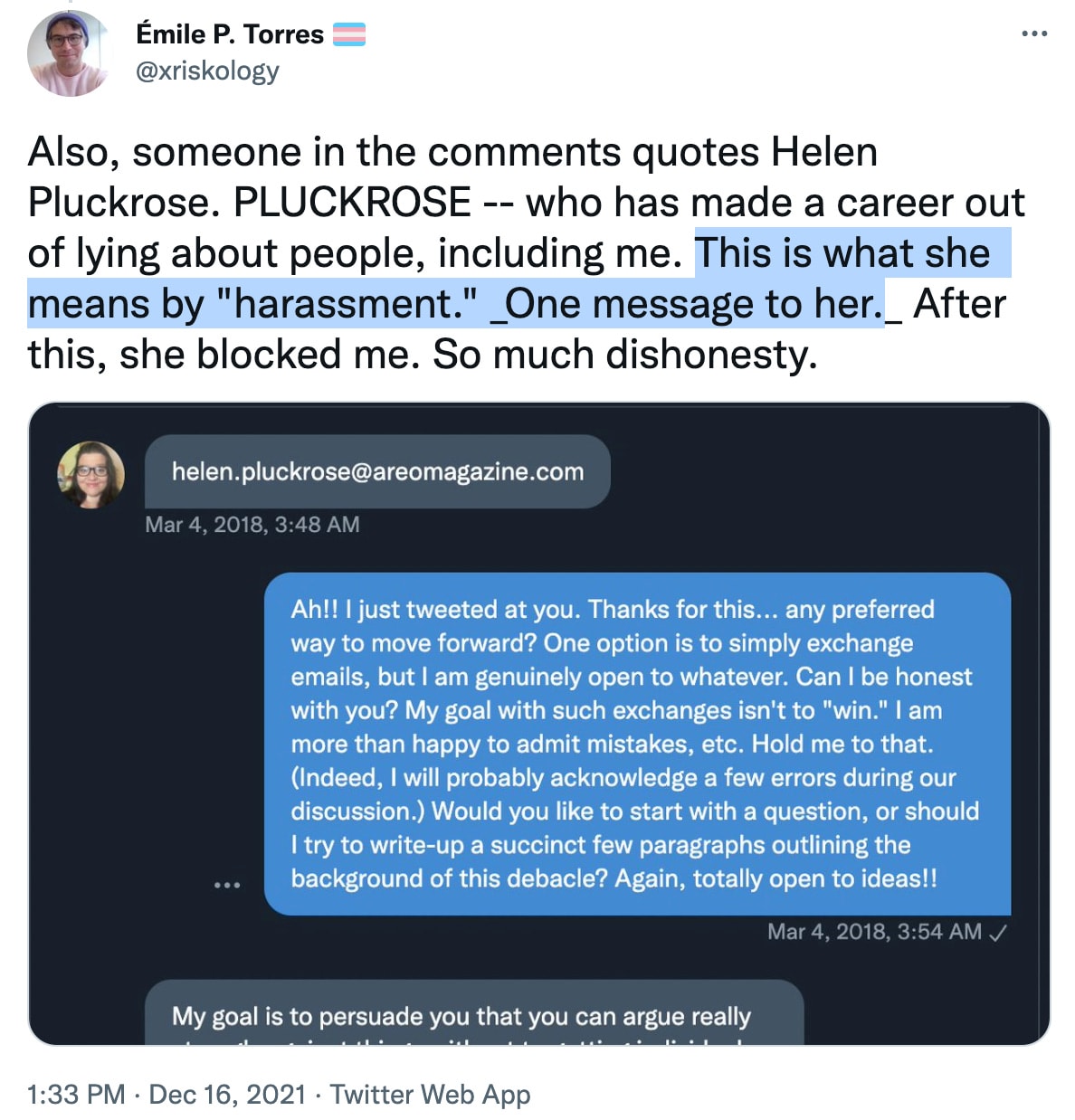

Helen Pluckrose

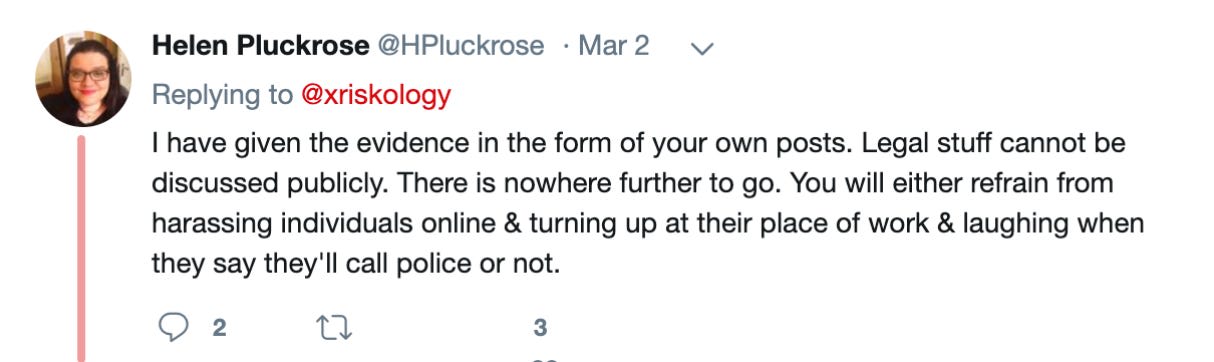

Another victim of Torres' harassment is Helen Pluckrose, a British author and liberal humanist. In this case, the harassment began when Pluckrose pleaded with Torres to stop stalking Boghossian. In response, Torres sent Pluckrose a torrent of tweets, upon which Pluckrose, fearing for her safety, asked Torres to stop tweeting at her.

In that same thread, Pluckrose tells Torres: "I really don't want a connection with you. You scare me."

Torres, however, continued tweeting at Pluckrose, ignoring her request.

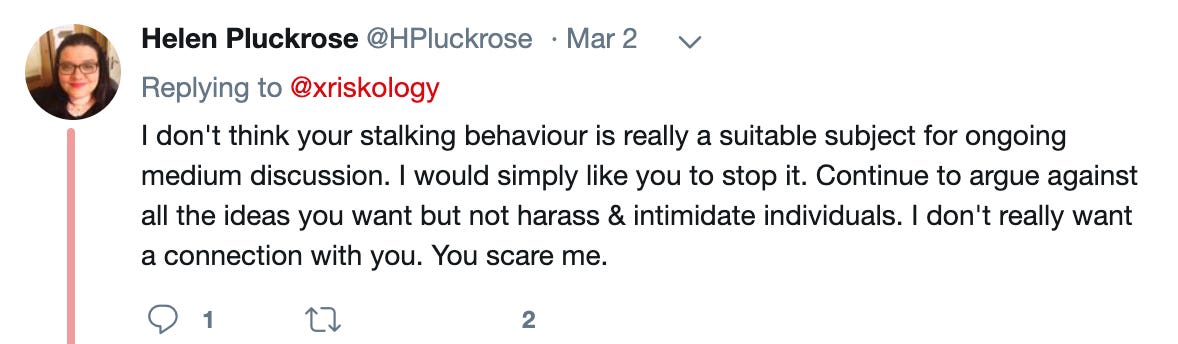

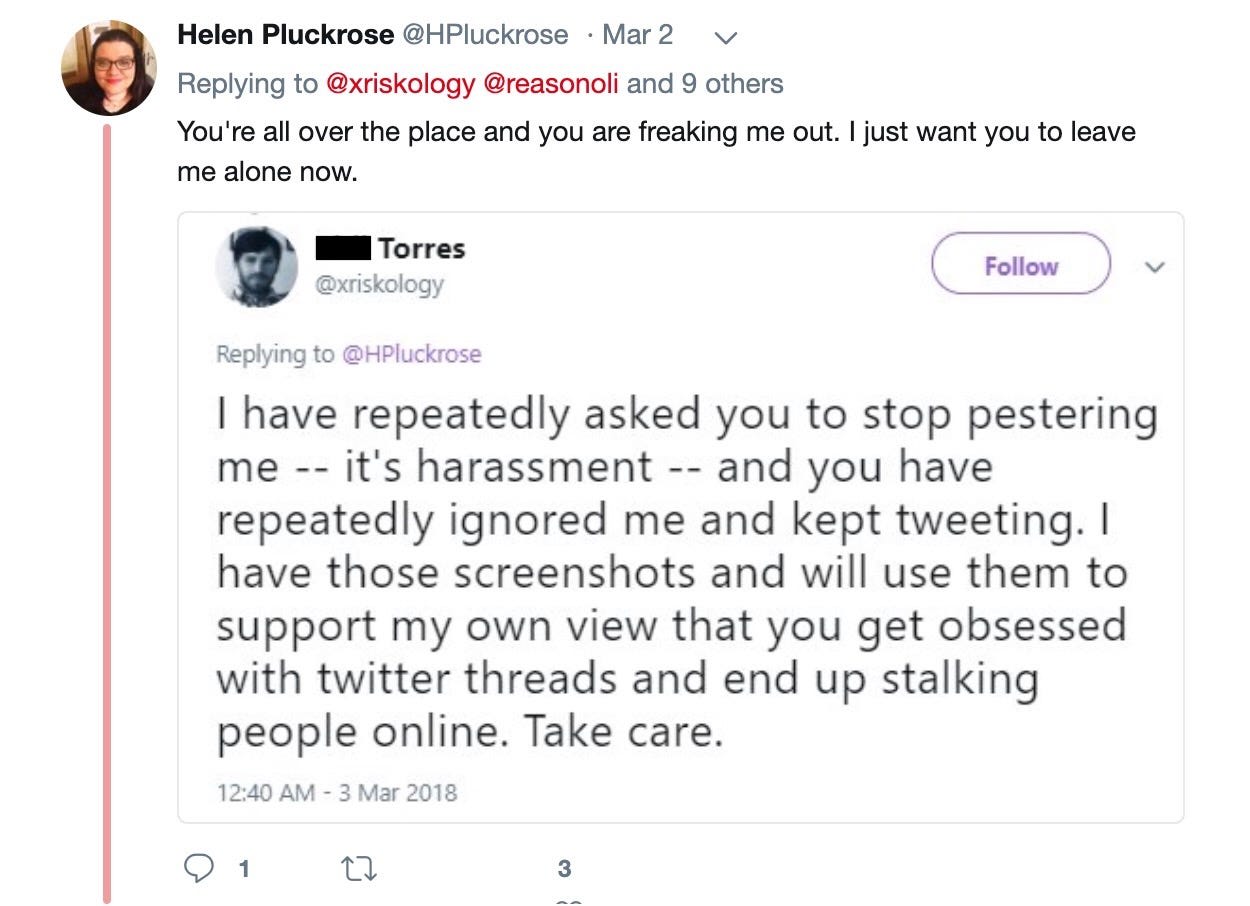

Disturbingly, Torres responds by accusing Pluckrose of harassing them:

After Torres made Pluckrose their new harassment target, other users intervened, but Torres still refused to back down:

Describing these events years later, Torres claimed that Pluckrose –– “who has made a career out of lying about people” –– had blocked them over a single Twitter DM. However, as documented in the screencaps above, Torres admits that they were tweeting at Pluckrose "almost for an entire day", and complains that she "ignored" Torres. This was after Pluckrose had said, on March 2, that she was afraid of Torres due to their behavior regarding Boghossian, whom (as we’ve seen) Torres had been stalking for months. Just as with Boghossian, Torres's recounting of their behavior paints a very distorted picture of the actual events.

In part as a result of the abuse she received from Torres and others over the years, Pluckrose suffered serious mental health issues, was in psychiatric care, and has mostly withdrawn from public life.

Demonstrable falsehoods and gross distortions

“Forcible” removal

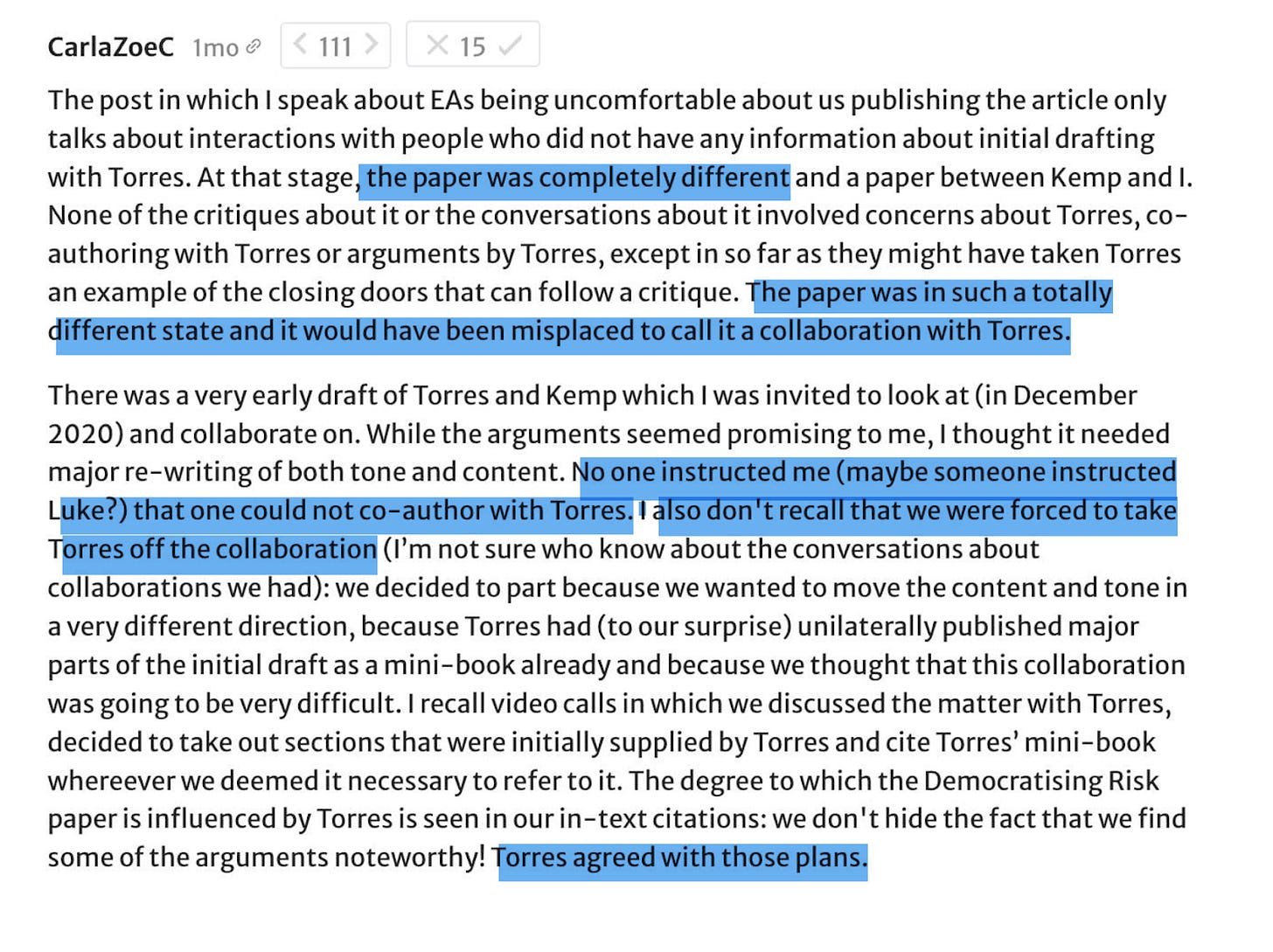

In December 2021, Carla Zoe Cremer, a Research Scholar at the Future of Humanity Institute, and Luke Kemp, a Research Associate at the Centre for the Study of Existential Risk, released a paper entitled “Democratising Risk: In Search of a Methodology to Study Existential Risk.”

The following year, Torres made the startling revelation that the paper had been written by three people: Cremer, Kemp… and Torres. Indeed, Torres indicated that they (Torres) were the first author. Torres also noted that, when the paper was near completion (“penultimate draft”), Torres was “removed [f]orcibly” after Cremer and Kemp “were instructed that Torres could not be part of the collaboration.”

However, when Torres's tweet was discussed on the Effective Altruism Forum a few days later, Cremer gave a substantially different account of what happened.

Cremer first observed that Torres’s contribution to the paper was restricted to collaborating with Kemp on “a very early draft” that, according to Cremer, required “major re-writing of both tone and content.” The paper that Cremer and Kemp eventually published “was completely different” and, as Cremer put it, “in such a totally different state and it would have been misplaced to call it a collaboration with Torres.”

Moreover, Cremer stated that no one had instructed her to abstain from collaborating with Torres, and that she did not remember being "forced" to take Torres off the collaboration. In fact, it was Cremer and Kemp who decided to end Torres' involvement, and Cremer notes that “Torres agreed with those plans.”

Surprisingly, in their reply, Torres thanked Cremer “for correcting the record” and apologized “if I’d misremembered some of this.”

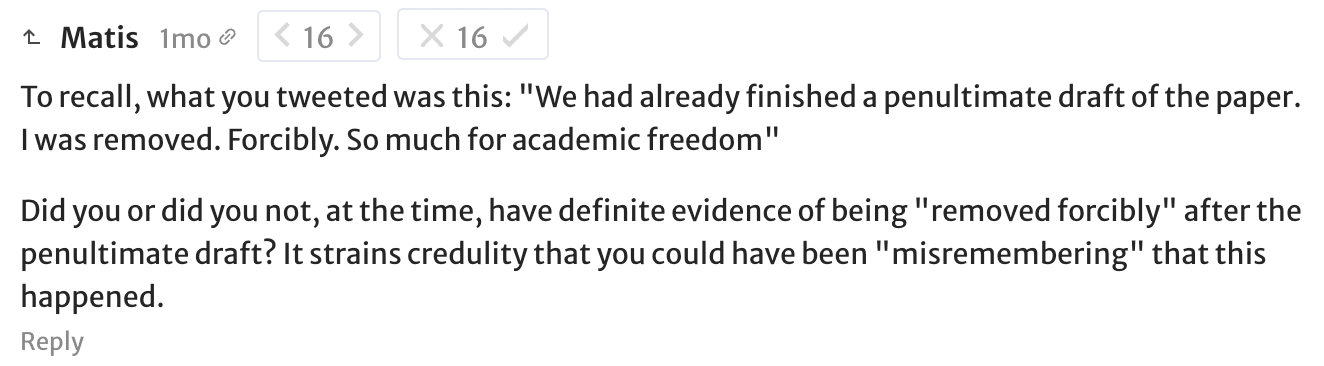

A user then asked Torres to explain how it was possible for Torres to misremember something as peculiar as having been “removed forcibly”:

Torres never answered the question.

“Researcher at CSER”

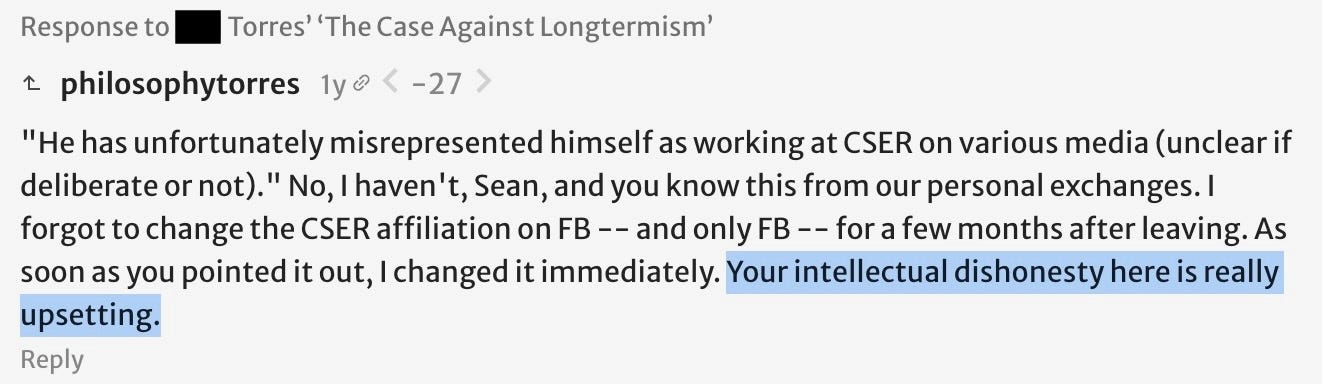

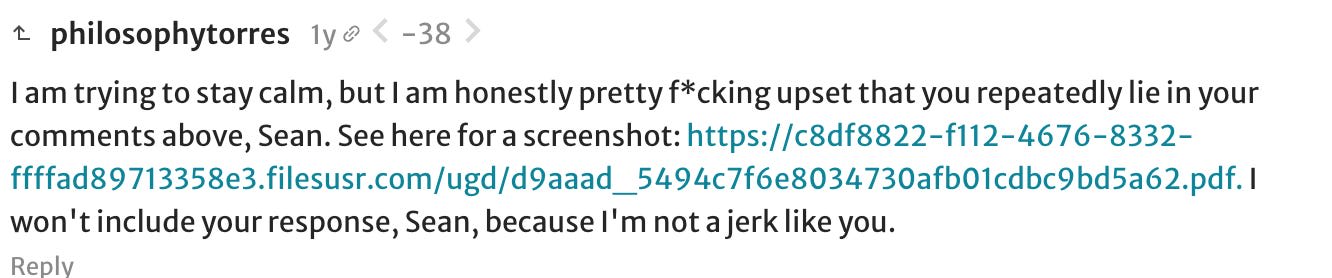

Another occasion in which Torres conveniently forgot something relates to Torres’s involvement with the Centre for the Study of Existential Risk (CSER). Although Torres was only a visitor for a couple of months, years later Torres was, intentionally or otherwise, presenting themself as still working at that institution. When Seán Ó hÉigeartaigh, then CSER’s co-Director, pointed this out on the Effective Altruism Forum, Torres responded with a series of uncivil comments.

In one comment, Torres tells Ó hÉigeartaigh: “your intellectual dishonesty here is really upsetting.”

In another comment, they accuse Ó hÉigeartaigh of “lying… over and over again” and ask: “How can someone lie this much about a colleague and still have a job?”

In a third comment, a “pretty f*cking upset” Torres calls Ó hÉigeartaigh “a jerk.”

But Ó hÉigeartaigh was not lying: as he explains in his response, years after Torres’s CSER visitorship had ended, Torres was still listing themself as a "Researcher at Centre for the Study of Existential Risk, University of Cambridge” on LinkedIn, the most popular professional networking platform. In their defense, all Torres could say was, once more, that they had forgotten about it –– tacitly admitting that Ó hÉigeartaigh was right all along.

Giving What We Can

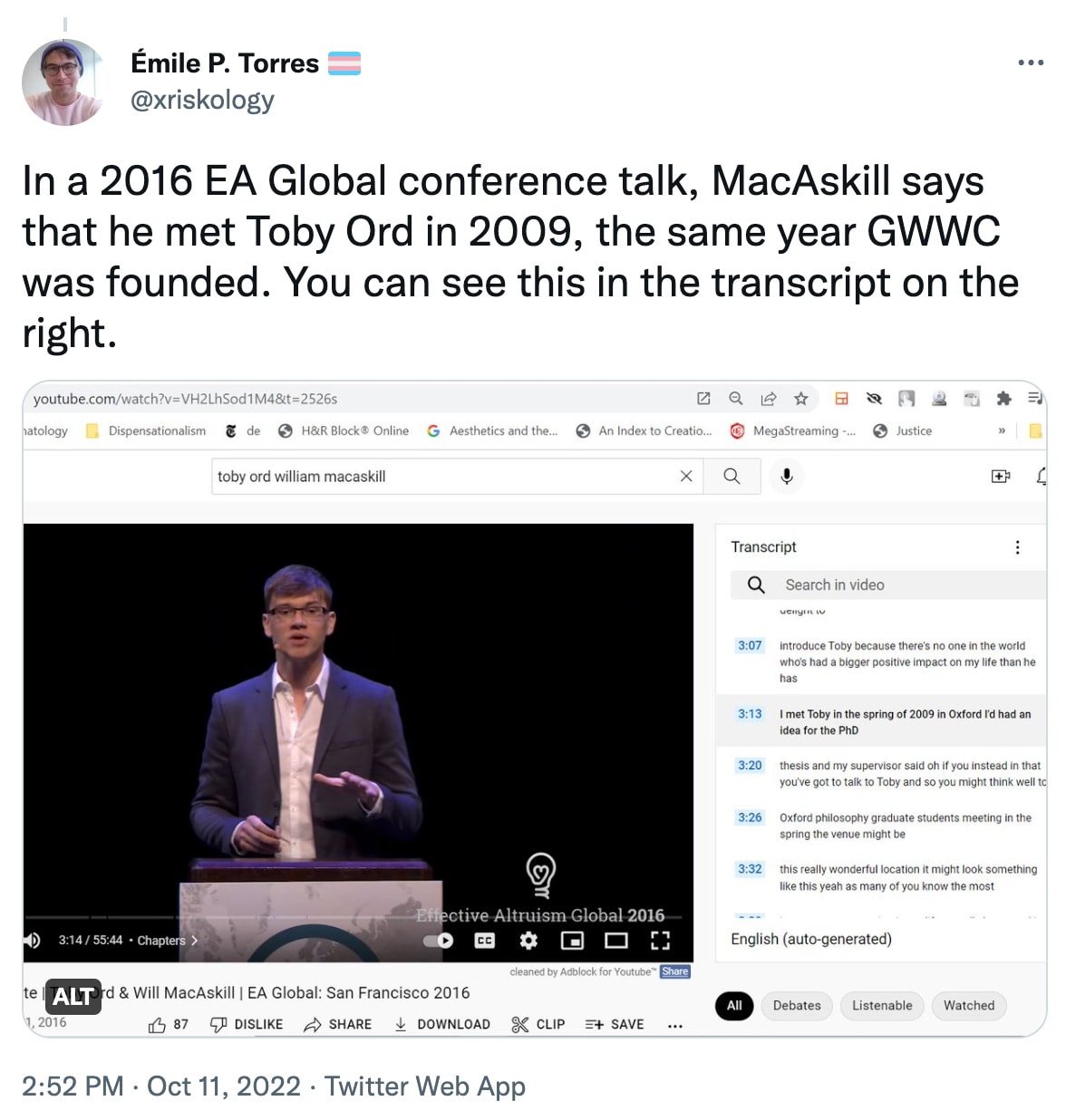

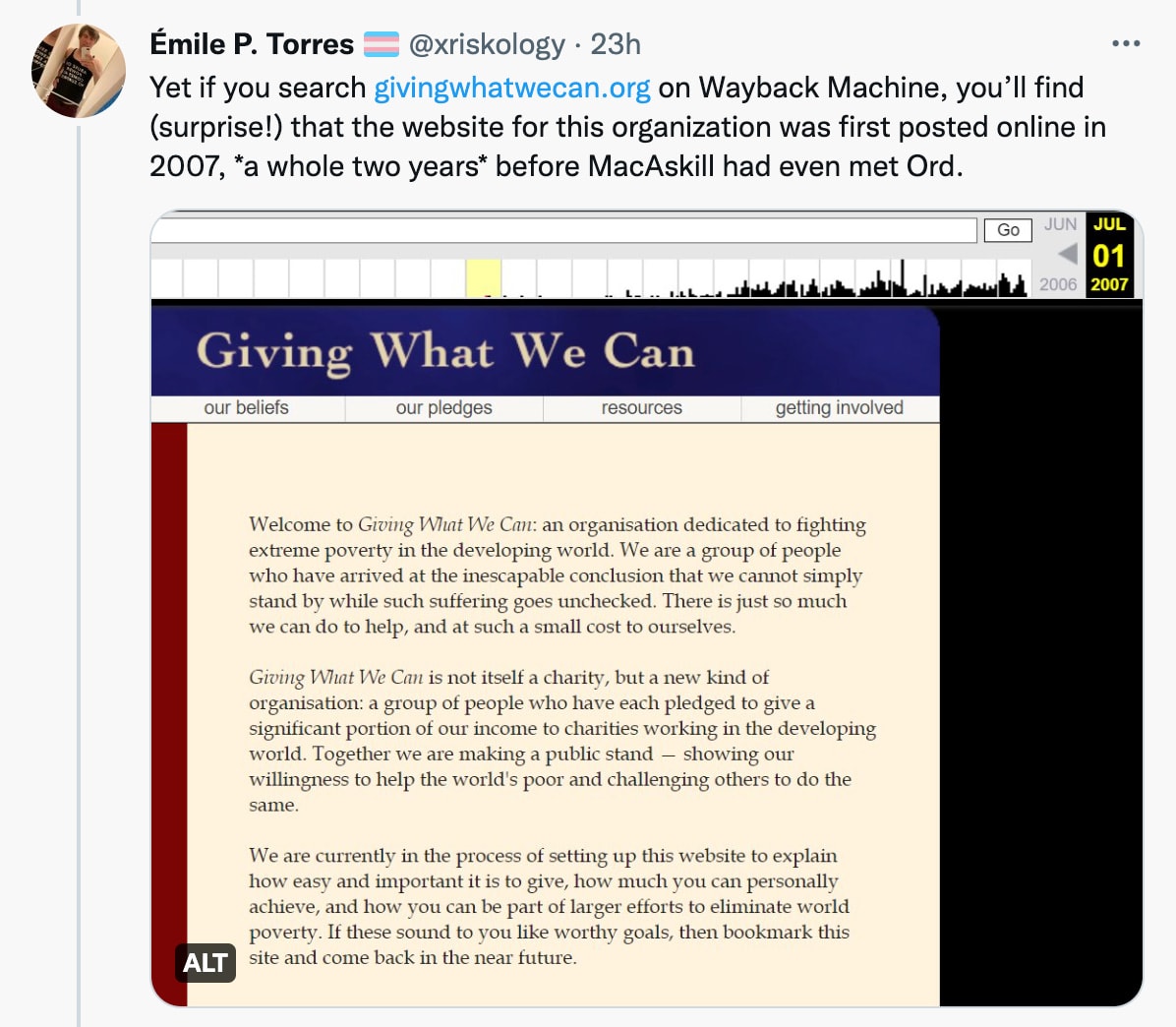

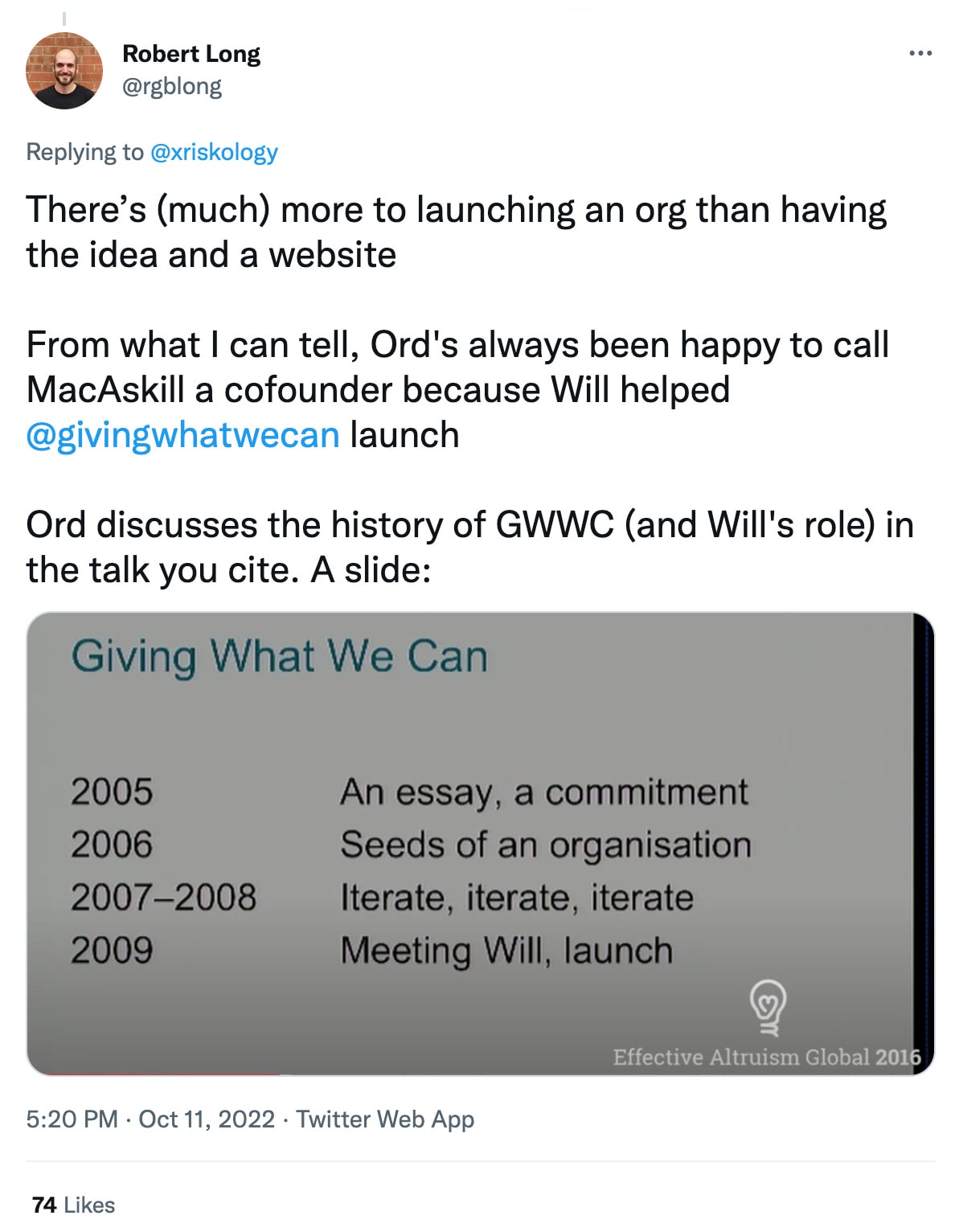

In a series of tweets published in October 2022, Torres implied that William MacAskill had not co-founded Giving What We Can. To back up this audacious allegation, Torres cited a clip from a 2016 talk in which MacAskill said he first met Toby Ord in 2009, and a snapshot from the Wayback Machine showing that Giving What We Can’s website already existed in 2007.

I watched the talk and was flabbergasted by Torres’s accusation. Torres neglects to mention that this very same talk includes Toby Ord's story of the early years of Giving What We Can, in which Ord acknowledges that the idea of Giving What We Can pre-dates MacAskill. Far from being a shocking secret unearthed from a forgotten video recording, MacAskill and Ord have both acknowledged that the idea of Giving What We Can was Ord’s. MacAskill is regarded as a co-founder because he helped Ord launch it as a public-facing international community, as several Twitter users pointed out:

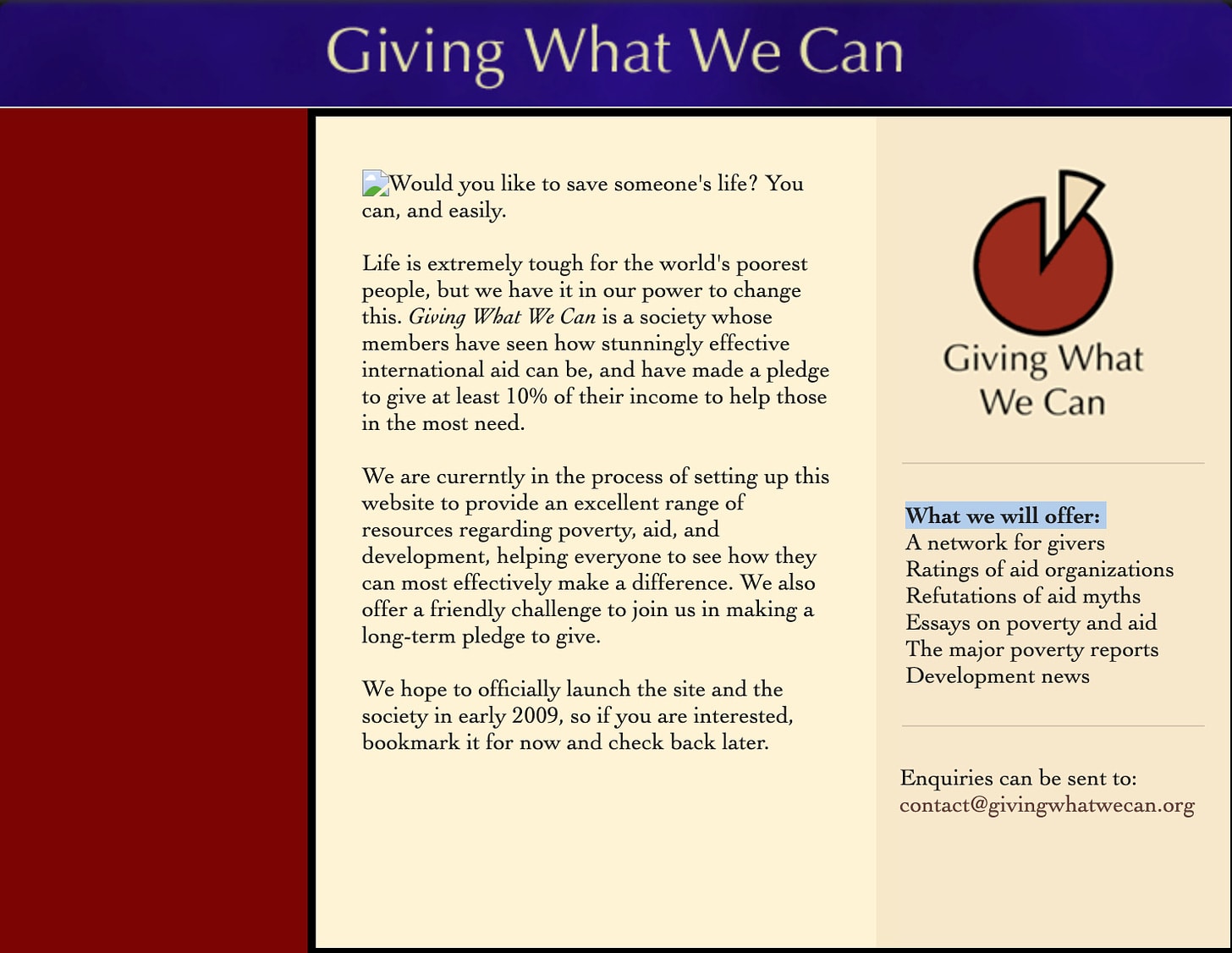

Additionally, another Wayback Machine snapshot, which Torres chose not to link to, proves that, even though the website did exist in 2007, the organization hadn’t yet launched: the sidebar lists a bunch of content and services that they “will offer.”

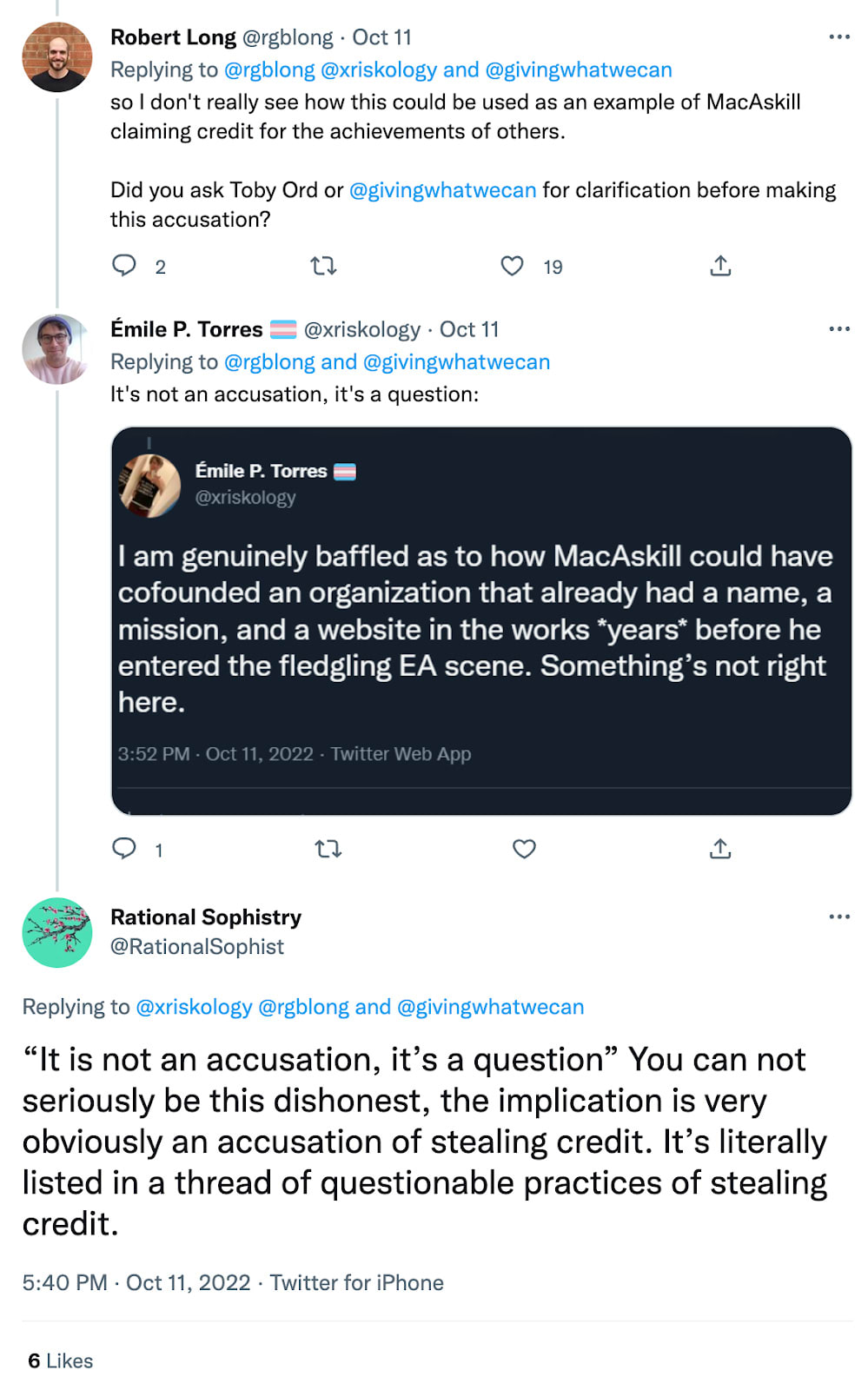

When users on Twitter asked Torres if they had reached out to Toby Ord or Giving What We Can for clarification before making their accusation, Torres responded that they were merely asking "a question":

Brief Digression on Effective Altruism

Torres has levelled some serious accusations against certain figures in the effective altruism (EA) movement, which we will examine shortly. But before we do so, I will provide a brief overview of EA, which should help those unfamiliar with it to understand the discussion that follows.

Effective altruists (EAs) are primarily interested in answering the question of how to use the available resources to best help others. Many EAs believe that supporting charities focused on global health is the best way to help the global poor. Other EAs, however, have argued that something else might be even more beneficial: reducing the risk of global catastrophes that threaten future generations. My understanding is that this group of EAs –– sometimes known as “longtermists” –– see this position as a natural extension of the principle of “radical empathy”, or “working hard to extend empathy to everyone it should be extended to, even when it is unusual or seems strange to do so.” In particular, longtermist EAs believe that empathy should be extended not only to other historically neglected groups, such as people in other countries and animals from other species, but also to people in the distant future. As Fin Moorhouse writes,

For much of history, we lived in small groups, and we were reluctant to help someone from a different group. But over time, our sympathies grew to include more and more people. Today, many people act to help complete strangers on the other side of the world, or restrict their diets to avoid harming animals (which would have baffled most of our earliest ancestors). […] Yet, expanding the moral circle to include nonhuman animals doesn't mark the last possible stage — it could expand to embrace the many billions of people, animals, and other beings yet to be born in the (long-run) future.

So, whether the best ways to do good involve helping the global poor, reducing animal suffering, or protecting future generations (or something completely different) is an area of active debate among EAs. What's critical for our purposes is that Torres has repeatedly done the following:

Quote someone explaining why and how longtermism implies that donating to prevent (e.g.) engineered pandemics is even more cost-effective than donating to global poverty causes.

Characterize that person as advocating for actively taking money from poor people and giving it to rich people.

I will now review some examples of Torres doing this.

More falsehoods and distortions

Hilary Greaves

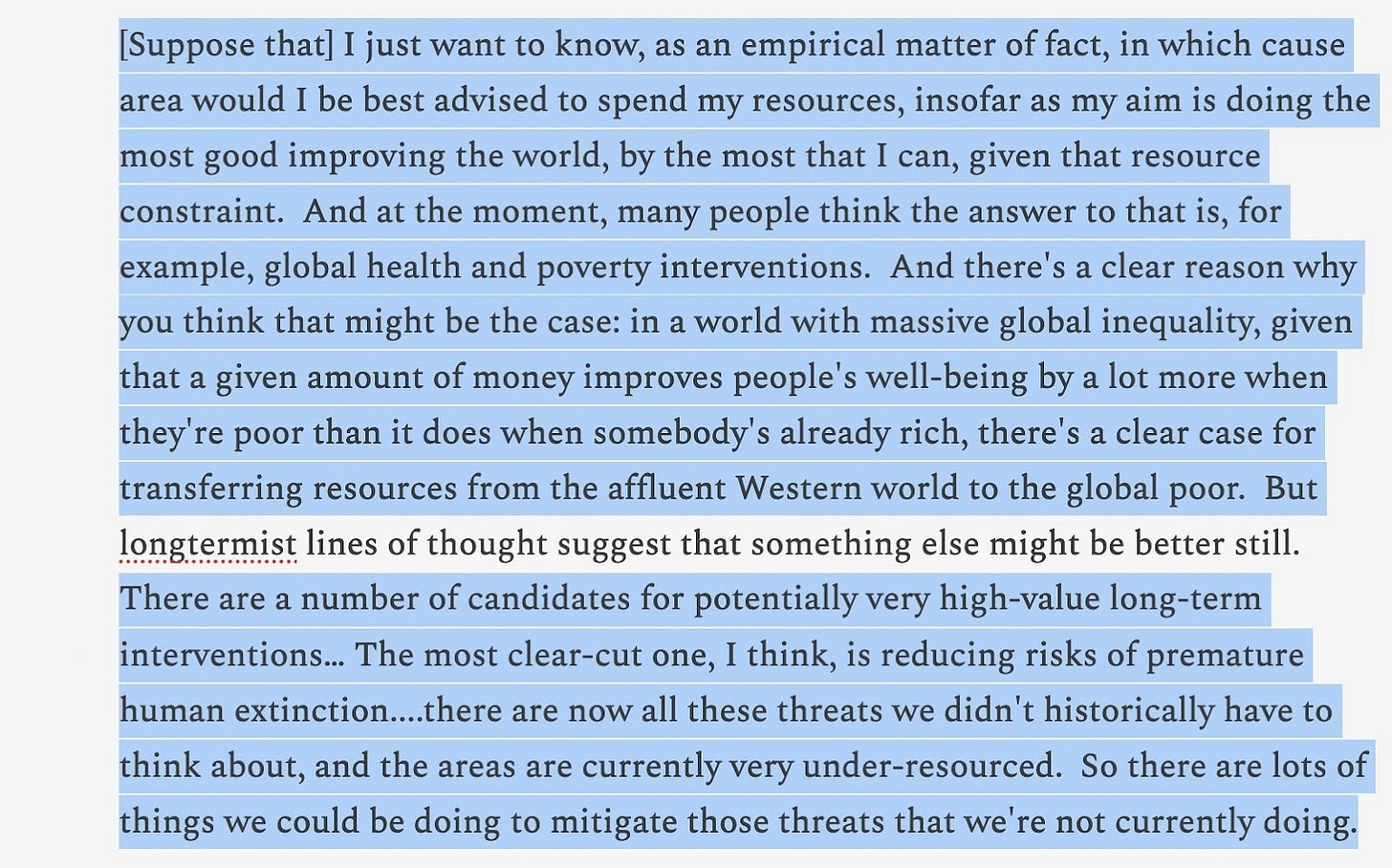

Torres's first target is Hilary Greaves, Director of the Global Priorities Institute. In 2020, Greaves conducted a video interview as part of a series featuring prominent philosophers discussing their work. Torres’s polemical Salon article, “Understanding longtermism,” focuses on one remark Greaves made during the interview:

However, nowhere in that interview (or anywhere else) does Greaves say that “transferring wealth in the opposite direction” would be better still. It is a fabrication by Torres.

In the interview, Greaves merely notes that, while some global health interventions are very cost-effective, other interventions could be even more so. The reason is that (I) relatively few resources are being spent on preventing risks that could lead to humanity’s premature extinction, and (II) humanity could have a very long and flourishing future if it does not go prematurely extinct. Analogously, climate change activists or animal rights activists might argue that preventing climate catastrophe or reducing animal suffering is currently even more cost-effective than improving global health, because of the sheer number of people or animals involved. No matter what one thinks of this argument, these activists are evidently not advocating for poor-to-rich wealth transfers. Nor is Greaves.

To leave no room for doubt about this point, here is a transcript of the relevant passage in the interview, with the parts omitted by Torres highlighted:

When Hayden Wilkinson, a researcher at the Global Priorities Institute, confronted Torres, Torres pretended that they had never attributed those views to Greaves:

It is perplexing that Torres would accuse Wilkinson of putting words in their mouth, when Torres did attribute to Greaves, as we just saw, a view she never defended.

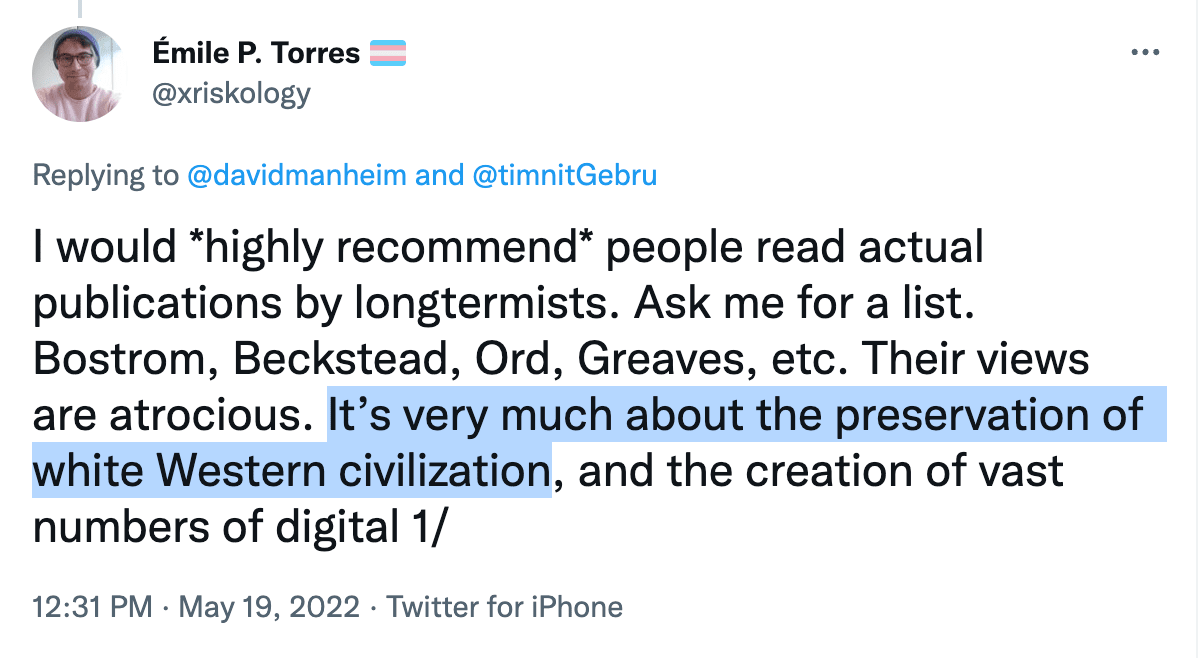

Torres then relied on that fabricated attribution to even claim that Greaves, along with other longtermists, endorses “atrocious” views, which are “very much about the preservation of white Western civilization”:

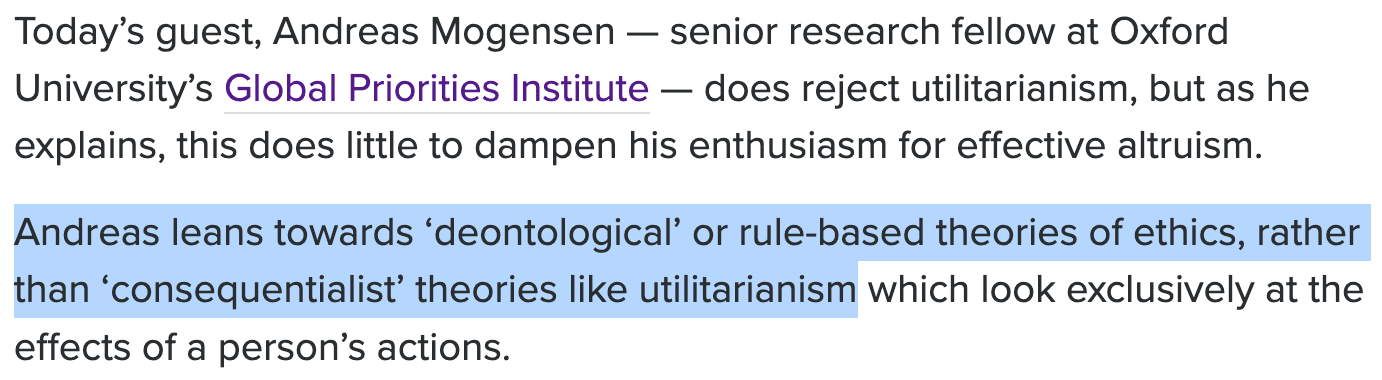

Andreas Mogensen

Torres has also made similar critical remarks about Andreas Mogensen, another researcher at Oxford University:

However, Mogensen here describes what he considers to be the implications of utilitarianism. Mogensen is not himself endorsing this view. In fact, Mogensen is not a consequentialist, let alone a utilitarian, as one can verify online:

Moreover, and more seriously, Torres again falsely equates the claim that there are more urgent moral requirements than transferring resources from the rich to the poor with the claim that there is a requirement to transfer these resources in the opposite direction. Mogensen never claimed that utilitarianism requires this, let alone endorsed such a view himself.

Having mischaracterized Mogensen’s position, Torres (just like they did with Greaves) again draws the conclusion that these claims are “clearly white supremacist”:

Torres draws this conclusion even though, as noted, (I) Mogensen is not endorsing –– and, in fact, rejects –– the view whose implications he describes and (II) Mogensen never claimed that this view had the implications Torres attributes to it.

Nick Beckstead

Torres is especially critical of Nick Beckstead, an early and respected member of the effective altruist community. Torres criticizes Beckstead in several publications and mentions him ad nauseam in over 30 separate tweets from 2022 alone. However, virtually all of Torres’s critical mentions focus on a single passage from Beckstead’s unpublished doctoral dissertation. Here is the passage in question:

Torres has summarized this passage as “claim[ing that] we should actually care more about people in rich countries than in poor countries”; as noting that “‘giving to the rich’ is the obvious conclusion”; and as “literally arguing that, given the longtermist framework, we should value the lives of people in rich countries more than the lives of people in poor countries.” And Torres has, based on these summaries, drawn the conclusion that Beckstead’s views are “overtly white-supremacist” and in “the spirit of eugenics.”

It would be surprising if this characterization of Beckstead were true, given that he has a long track record of working to support global health and development. According to this Wiki article, Beckstead founded the first US chapter of Giving What We Can and pledged to donate 50% of his lifetime income to organizations fighting global poverty in developing countries, such as the Against Malaria Foundation.

And in fact, as we will now see, Torres’s summaries and characterizations of Beckstead’s views are grossly inaccurate.

Beckstead is not arguing here that “we should actually care more about people in rich countries” or that “we should value the lives of people in rich countries more than the lives of people in poor countries.” As an effective altruist, Beckstead believes that we should care about all lives equally. In the passage cited, Beckstead discusses only what effects saving lives in different countries will have on the future. Put differently, Beckstead is making an empirical claim about the long-run consequences of different actions, rather than a normative claim about the intrinsic value of different lives.

Similarly, Beckstead never once indicates that there should be global transfers of wealth from the poor to the rich, and in fact believes the opposite (and his track record of donations reflects this belief). The wellbeing of the poor should be prioritized precisely because they are poor, and therefore don’t have access to even the most basic medical care. Even if, all other things being equal, saving a rich person’s life causes greater economic flow-on effects that have greater benefits for future generations, one can save hundreds or thousands of times as many lives by focusing on healthcare for the extremely poor than one can by focusing on healthcare for the rich.

Beckstead explained his views to Torres when Torres first published the criticism, leaving a Facebook comment correcting many of these misrepresentations:

Since Torres responded to Beckstead's comment, we know that, when Torres published their critical tweets and articles, they did so fully aware that they distorted Beckstead's views.

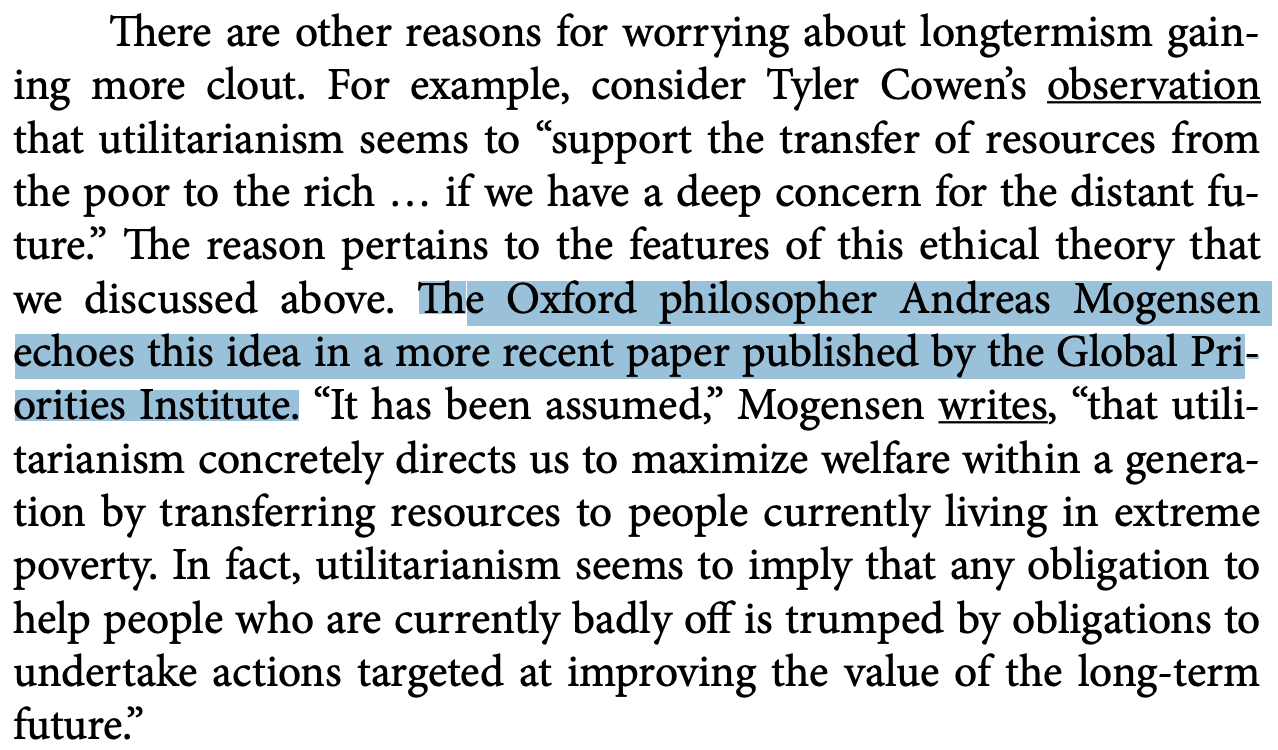

Tyler Cowen

In the passage containing the quote by Mogensen examined previously, Torres also quotes Cowen, a professor of economics at George Mason University, who is not actually a longtermist:

However, Torres seriously misquotes Cowen. The ellipsis in the passage quoted separates two clauses that occur 20 lines apart in the original text. By omitting all the content, Torres leaves out context that is critical for a proper understanding of Cowen’s argument. In the screenshot below, I have highlighted Torres’s omissions:

Torres’s truncated quote portrays Cowen as attributing to utilitarianism the view that “the lives of people in rich countries matter more than the lives of people in poor countries.” But Cowen is not saying this. His point, instead, is that utilitarians who agree with Piketty (that returns to capital exceed the rate of growth of the economy as a whole) should expect actions that primarily benefit the rich in the present to benefit the poor in the future. Since utilitarians with a concern for the future will be more concerned about these future poor people, utilitarians who agree with Piketty may favor wealth redistribution from the present poor to the present rich, out of concern for the future poor people whom this wealth transfer will benefit. In other words, the key contrast here is not “poor vs. rich”, but “present poor vs. future poor.” One may or may not agree with the argument, but it bears little resemblance to the argument Torres attributes to Cowen.

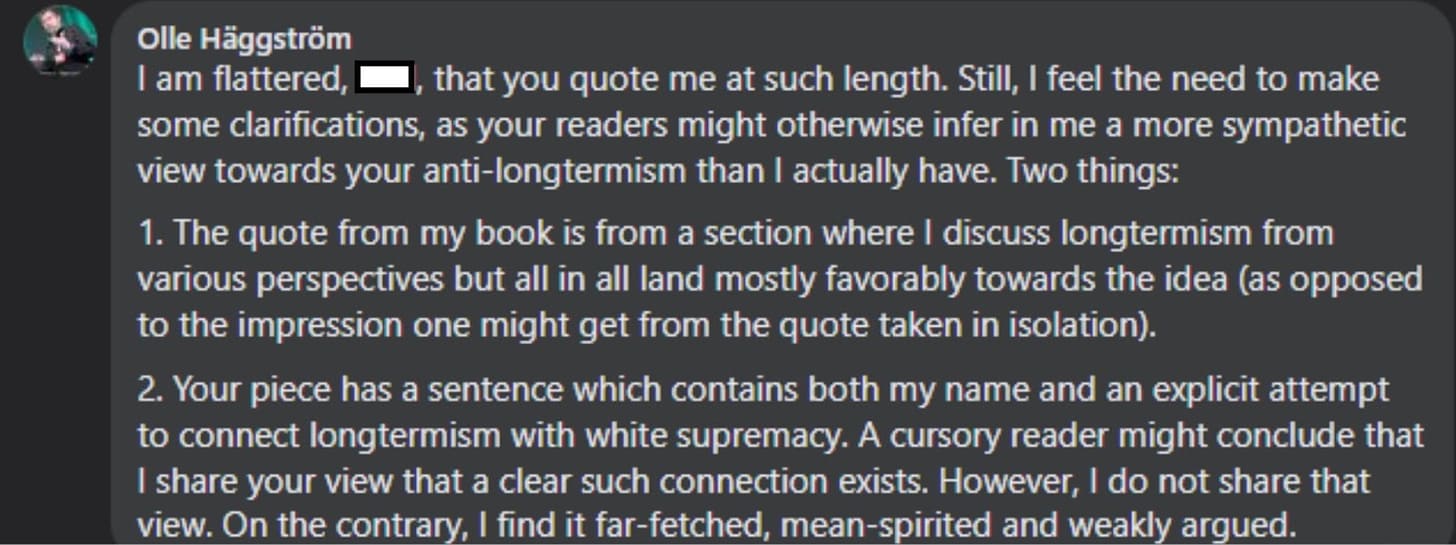

Olle Häggström

As evidence that longtermism has "profoundly harmful real-world consequences", Torres quotes Olle Häggström, a professor of mathematical statistics at the Chalmers University of Technology:

However, Häggström is not discussing longtermism here, but Nick Bostrom’s views specifically. The distinction is not pedantic: there are many types of longtermism, of which Bostrom’s version is but one. In fact, Häggström is sympathetic to longtermism, despite the impression one gets from reading the passage.

But why does one get this mistaken impression? Because Torres has truncated its first sentence to make it seem as if Häggström disagrees with Bostrom to a much greater degree than he actually does. Here is the sentence in full, with Torres’s omissions highlighted:

That is, Häggström is not rejecting the conclusion as "patently absurd and wrong", as Torres implies. Indeed, just a page later, Häggström states his position clearly:

Back in 2020, Häggström contacted Torres to complain that Torres had misquoted him and that he did not say what Torres had portrayed him as saying. Torres, however, refused to issue a correction and, in fact, continued to misrepresent Häggström’s views in other articles, even including the exact same mutilated quote in a piece published as recently as October 2021. This, despite professing to “really care about getting the facts right”:

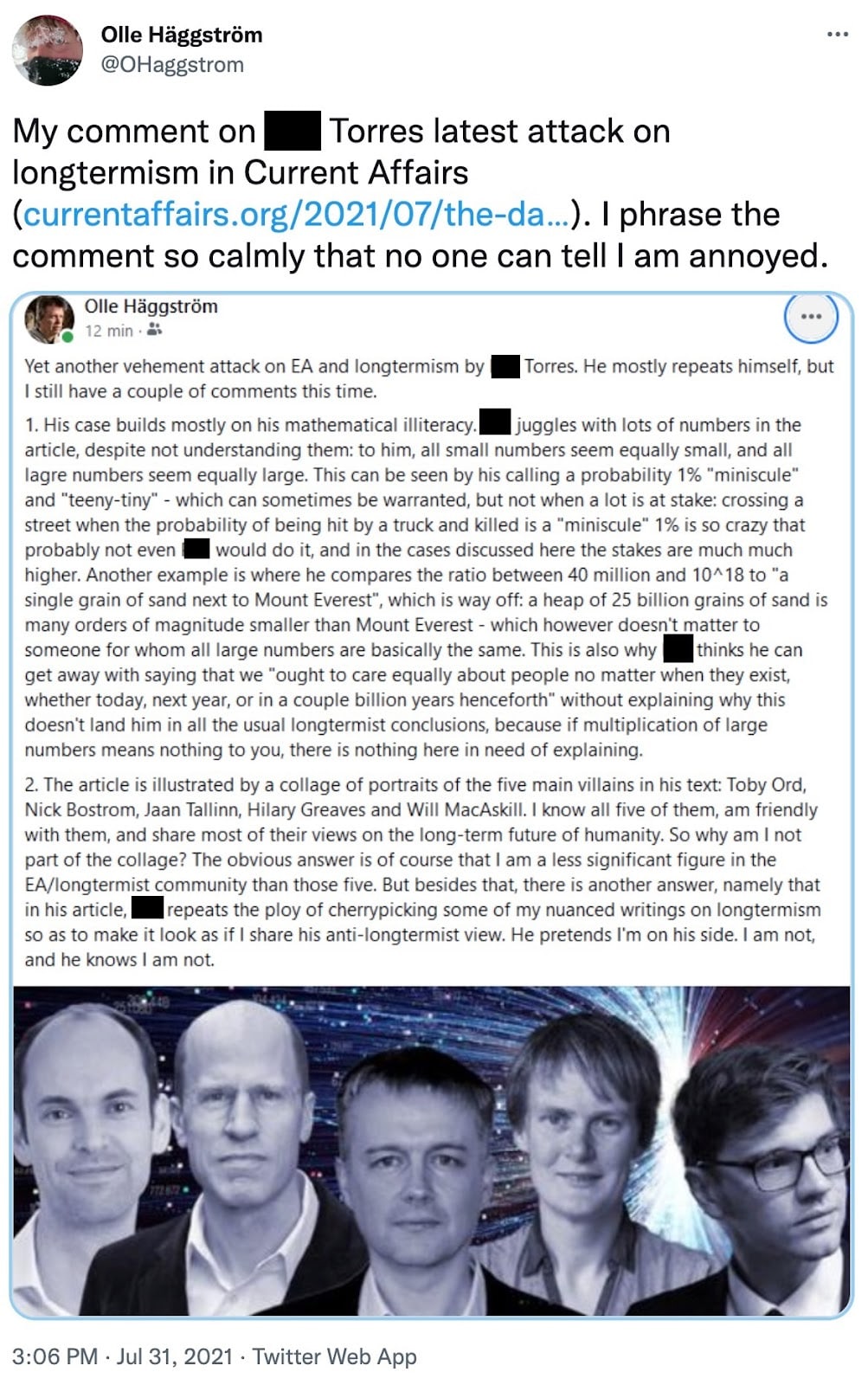

Eventually, Häggström became so frustrated with Torres’s misrepresentations that he made several public statements to correct the record. In those statements, Häggström characterizes Torres’s attempt to connect longtermism with white supremacy as “far-fetched, mean-spirited and weakly argued”; notes that “this is far from the first time that [Émile] Torres references my work in a way that is set up to give the misleading impression that I share his anti-longtermism view”; and denounces Torres’s “misrepresentation of and attacks on longtermist ideas along with his disingenuous cherrypicking of writings by Beckstead, Bostrom, myself and others.”

Sockpuppetry

As documented previously, Torres is known to have created multiple Twitter accounts to evade blocks and continue harassing their targets. But this isn’t the only instance in which Torres was caught posting under a false identity.

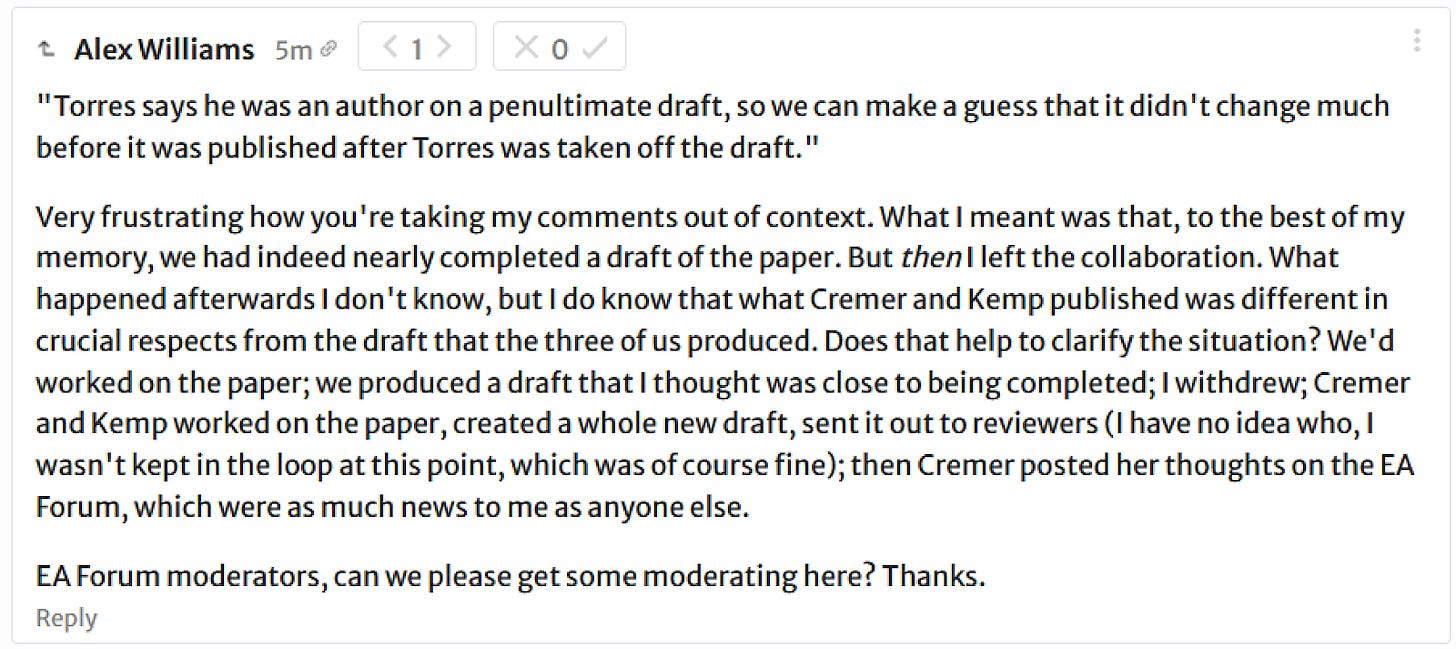

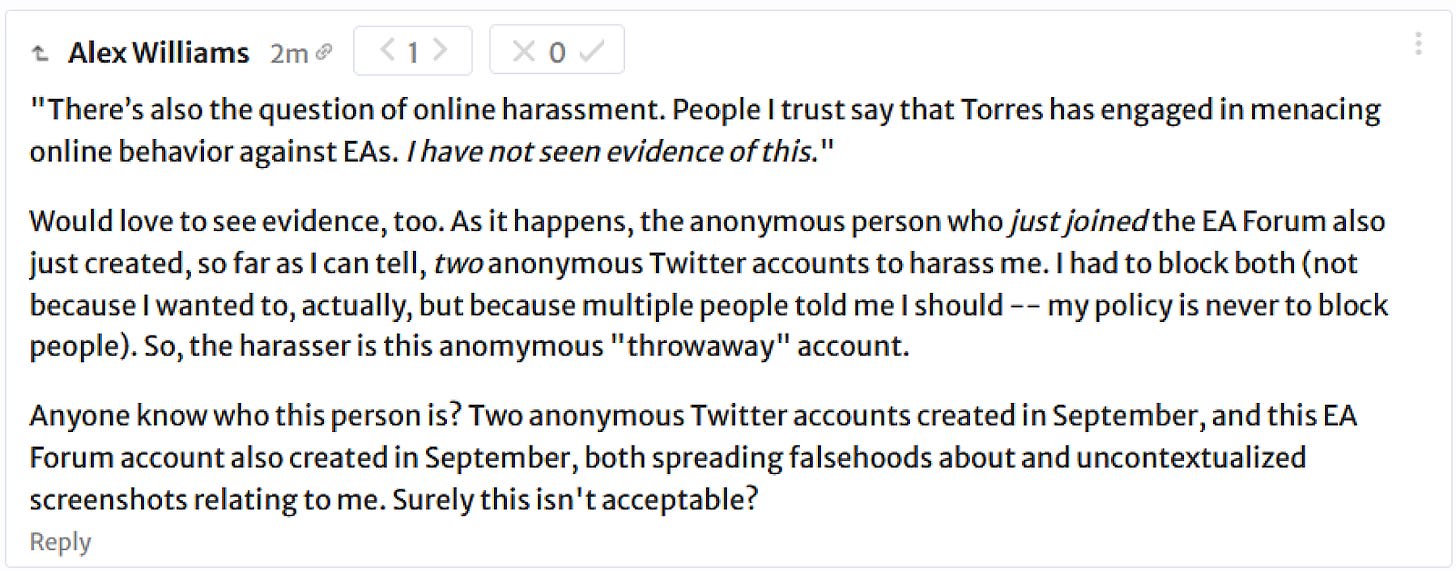

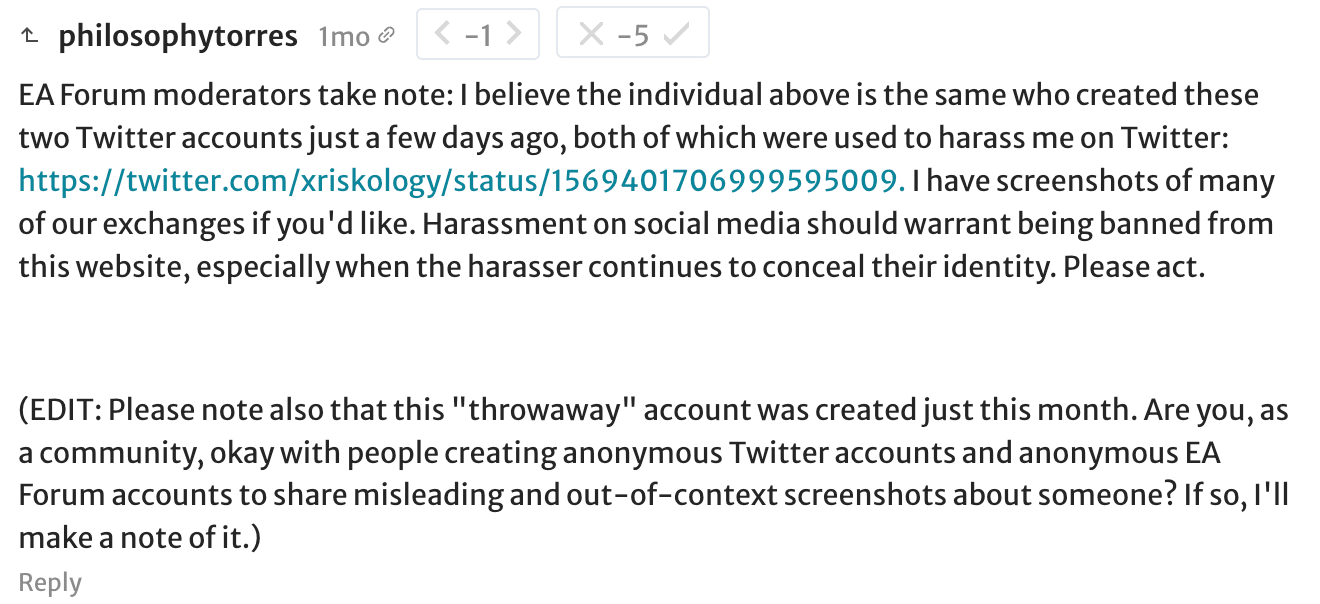

“Alex Williams”

In August 2022, Torres made the careless error of responding to a critic on the Effective Altruism Forum from the account of one "Alex Williams." This "Alex" replies in a thread about and involving "philosophytorres", Torres's Effective Altruism Forum username. It is evident that “Alex” is none other than Torres themself, using the wrong account. That is, Alex Williams is a sockpuppet of Émile P. Torres (a “sockpuppet” is a false online identity used for the purposes of deception).

Minutes after posting their first comment, Torres left a second reply, also from Alex’s account. Ironically, the comment complains that one of Torres’s critics had failed to disclose their true identity:

In a further ironic twist, moments later, in a comment posted under their real account, Torres addressed the EA community and asked whether its members were okay with people creating anonymous Forum accounts to discredit others:

Upon discovering their mistake, “Alex” (i.e., Émile) proceeded to delete the comments, attempting to quickly conceal evidence of their deception.

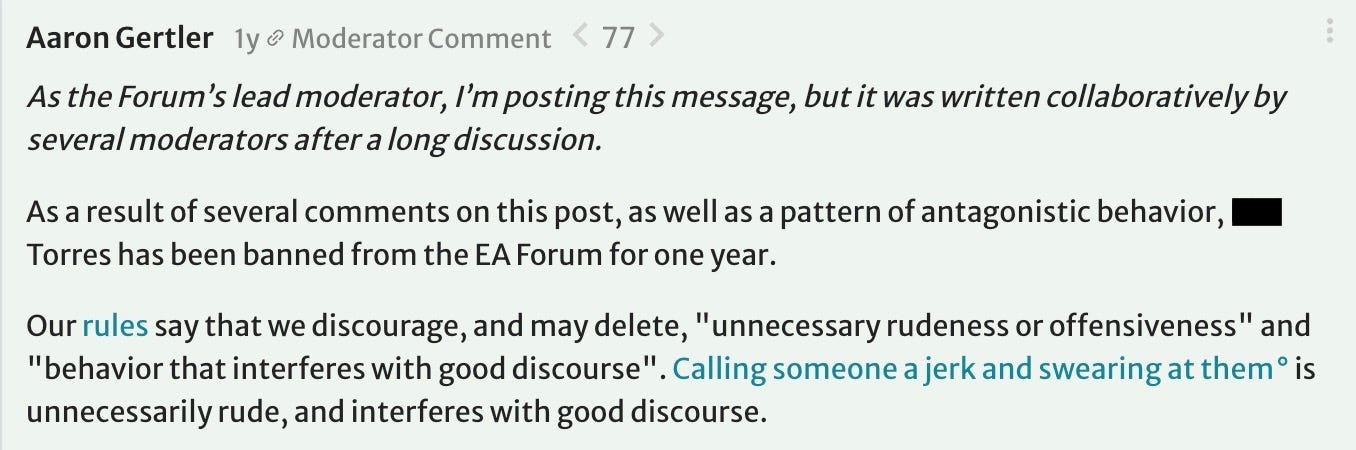

In creating this fake account, Torres was deliberately circumventing a ban that had been imposed on them for “[c]alling someone a jerk and swearing at them” (see above), still in effect on the date the account was created:

Torres then used the Alex Williams fake account, among other things, to ask a seemingly innocent question about Bostrom’s simulation argument. In fact, this was all disingenuous. By this point, Torres had already concluded that Bostrom's argument was flawed and wanted to find ways to discredit him.

In response to a user who had provided an answer to Torres’s question, Torres leaves two comments expressing “surpris[e]” that people took Bostrom’s argument seriously and that Bostrom’s paper made it through peer review:

After this user further responds to Torres’s insistent questions, Torres again is puzzled that “people take the argument seriously” and that “the peer review process didn’t pick up this problem”:

That this was part of an effort to discredit Bostrom is confirmed by a tweet Torres posted under Torres’s real account just some hours before they posted the EA Forum comments under the fake account:

In sum, Torres insulted and called someone a liar for telling the truth; was banned for this uncivil behavior; created a fake account to evade this ban; and used that account to discredit another person, abusing the trust of the EA community.

Conclusion

At the beginning of this post, I announced that I would show

How Torres repeatedly made demonstrably false claims.

How Torres grossly distorted the views of several people.

How Torres created multiple fake accounts to harass their targets, evade bans, and discredit their opponents.

How Torres harassed and stalked multiple people, and made racist comments about at least one person.

I have now presented the evidence supporting all those allegations. As a result of these findings, I believe any claims Émile Torres makes should be viewed with extreme skepticism.

I noted at the beginning that the above represents only about 40% of the evidence I have collected. Given that Torres deleted all tweets between 2017-2019 and was known to be harassing people during this time, I suspect there are many more issues that I don't know about. Indeed, we only know about the Boghossian and Pluckrose incidents because someone took the time to save some of the relevant tweets to an online archive.

The purpose of this post was only to document some examples of Torres’s misbehavior. For a more detailed examination of Torres’s criticisms of effective altruism and longtermism, you can check out this piece by Avital Balwit. Torres wrote a reply, which you may want to read as well.

Added November 23rd, 2022:

In a disturbing tweet, Émile P. Torres claims that I have been "stalking, harassing, lying about, impersonating, and threatening [them] with physical violence."

This is a complete fabrication.

To repeat what I wrote above, I have never messaged Torres, let alone done any of the things they accuse me of.

Since I am not behind these attacks and Torres cannot conceivably have come to this conclusion, it seems fairly clear that Torres is knowingly lying about me.

Added February 15th, 2023:

Andreas Kirsch, a DPhil student at Oxford University unaffiliated with the EA movement, recently decided to fact-check this piece after Torres claimed it was "defamatory and false". His conclusion was that Torres's claims were unfounded and that the article was substantially true. You can read Andreas's thread here.


References

Beckstead, N. (2013). On the Overwhelming Importance of Shaping the Far Future. PhD Thesis.

Boghossian, P. (2020, September 28). “We adopted our daughter from China as a waiting child when she was almost 3.” Twitter.

Cowen, T. (2018). Stubborn Attachments: A Vision for a Society of Free, Prosperous, and Responsible Individuals. San Francisco: Stripe Press. pp. 89-90.

Cremer, C. Z. (2022, September 14). “The post in which I speak about EAs being uncomfortable about us publishing the article only talks about interactions with people who did not have any information about initial drafting with Torres.” EA Forum.

Gertler, A. (2021, March 8). “As the Forum’s lead moderator, I’m posting this message, but it was written collaboratively by several moderators after a long discussion.” EA Forum.

Häggström, O. (2016). Here Be Dragons: Science, Technology and the Future of Humanity. Oxford: OUP. pp. 240-1.

Häggström, O. (2021, July 13). “My comment on [Émile] Torres latest attack on longtermism in Current Affairs.” Twitter.

Häggström, O. (2021, August 6). “Many thanks for this, Rohin.” EA Forum.

Häggström, O. (2021, December 16). “I strongly endorse this piece by @avitalbalwit about longtermism and in response to @xriskology’s misrepresentation of and attacks on longtermist ideas along with his disingenuous cherrypicking of writings by Beckstead, Bostrom, myself and others.” Twitter.

Karnofsky, H. (2017, February 16). Radical Empathy. Open Philanthropy.

Long, R. (2022, October 11). “There’s (much) more to launching an org than having the idea and a website.” Twitter.

Magnabosco, A. (2018, March 3). “This fascination with PB seems to only be escalating since we had to boot you from off-topic posting (about PB) in one of the study groups few months back.” Twitter.

Matis (2022, September 15). “To recall, what you tweeted was this.” EA Forum.

Ó hÉigeartaigh. (2021, March 8). “A quick point of clarification that [Émile] Torres was never staff at CSER.” EA Forum.

Pluckrose, H. (2018, March 2). “I don't think your stalking behaviour is really a suitable subject for ongoing medium discussion.” Twitter.

Pluckrose, H. (2018, March 2). “I have given the evidence in the form of your own posts.” Twitter.

Pummer, T. (2020, April 19). Hilary Greaves talks longtermism.

Torres, É. P. (2016, September 16). “My @TIME magazine article about atheism, co-authored with two brilliant scholars.” Twitter.

Torres, É. P. (2018, March 1). “Hahaha. I actually emailed Peter about being in Portland soon and wanting to sit in on his class.” Twitter.

Torres, É. P. (2018, March 1). “Lol. I actually wrote to the PSU police.” Twitter.

Torres, É. P. (2018, March 2). “Please don't mute me.” Twitter.

Torres, É. P. (2018, March 2). “You're not getting it.” Twitter.

Torres, É. P. (2018, March 2). “I have repeatedly asked you to stop pestering me.” Twitter.

Torres, É. P. (2018, March 2). “Well, why does she keep making false claims?” Twitter.

Torres, É. P. (2018, March 3). “I try to ignore these guys, then something pops into my feed.” Twitter.

Torres, É. P. (2018, March 6). “Just for the record, I had asked her to stop harassing me for almost an entire day.” Twitter.

Torres, É. P. (2018, March 6). “Peter is easily triggered.” Twitter.

Torres, É. P. (2021). Is Existential Risk a Useless Category? Could the Concept Be Dangerous?

Torres, É. P. (2021). Were the great tragedies of history “mere ripples”? The case against longtermism.

Torres, É. P. (2021, April 27). “Haydn, Michael Plant, etc. etc.” EA Forum.

Torres, É. P. (2021, April 27). “He has unfortunately misrepresented himself as working at CSER on various media (unclear if deliberate or not).” EA Forum.

Torres, É. P. (2021, April 27). “I am trying to stay calm, but I am honestly pretty f*cking upset that you repeatedly lie in your comments above, Sean.” EA Forum.

Torres, É. P. (2021, April 27). “That I didn't know about, Sean, nor did you mention it.” EA Forum.

Torres, É. P. (2021, July 28). The dangerous ideas of “longtermism” and “existential risk.” Current Affairs.

Torres, É. P. (2021, October 19). Why Longtermism Is the World’s Most Dangerous Secular Credo. Aeon.

Torres, É. P. (2021, December 16). “Also, someone in the comments quotes Helen Pluckrose.” Twitter.

Torres, É. P. (2022, May 19). “I would *highly recommend* people read actual publications by longtermists.” Twitter.

Torres, É. P. (2022, May 24). “We know that Bostrom has influenced Musk, e.g., Musk seems to accept Bostrom’s flawed argument that we almost certainly live in a computer simulation (Musk’s conclusion, btw, not Bostrom’s).” Twitter.

Torres, É. P. (2022, August 20). Understanding ‘longtermism.’ Salon.

Torres, É. P. (2022, September 9). “No, they wouldn't.” Twitter.

Torres, É. P. (2022, September 15). “EA Forum moderators take note.” EA Forum.

Torres, É. P. (2022, September 15). “Thank you for correcting the record, Zoe, and my apologies if I'd misremembered some of this.” EA Forum.

Torres, É. P. (2022, October 11). “Yet if you search givingwhatwecan.org on Wayback Machine, you’ll find (surprise!) that the website for this organization was first posted online in 2007, *a whole two years* before MacAskill had even met Ord.” Twitter.

Torres, É. P. (2022, October 11). “It's not an accusation, it's a question.” Twitter.

Wiblin, R. (2022, September 8). Andreas Mogensen on whether effective altruism is just for consequentialists. 80,000 Hours.

Wilkinson, H. (2022, September 8). “Another out-of-context quote from someone who disagrees with the view expressed when it's taken in isolation?” Twitter.

Wilkinson, H. (2022, September 8). “"It seems more plausible to me that X" doesn't mean "I'm convinced of X".” Twitter.

Wilkinson, H. (2022, September 8). “Just a reminder that, regardless of whatever actions you speculate that other particular longtermists *would* endorse or *should* endorse, it’s still a lie that greaves has *in fact* advocated for poor-to-rich wealth transfer.” Twitter.

Williams, A. [Torres, É. P.] (2022). Comments on the EA Forum. EA Forum.

Please note that ‘Mark Fuentes’ is a pseudonym I am using to protect my safety, since I fear that, were I to post under my real name, I would become Torres’s next harassment target. As I hope readers who have read this article will agree, these fears are not unwarranted.

Indeed, in the concluding section of his paper, Mogensen makes it clear that, because utilitarianism has these implications, he is inclined to the view that it is a less plausible moral theory overall.

Revealingly, in another version of this article, Torres calls Beckstead’s views “unambiguously white-supremacist.”

Häggström, Here Be Dragons: Science, Technology and the Future of Humanity. Oxford: OUP. 2016. p. 240.

Nathan Young @ 2024-05-02T07:41 (+76)

Broadly I think that both Torres and Gebru engage in bullying. They have big accounts and lots of time and will quote tweet anyone who disagrees with them, making the other person seem bad to their huge followings.

yanni kyriacos @ 2024-05-02T00:26 (+64)

I worry that, due to their high levels of openness and conscientiousness, EAs have an overly high "burden of proof" bar to consider someone a malicious actor. Taking one look at this Émile person's online content says to me he shouldn't be taken seriously. I can't tell if EAs lack a "gut instinct" OR they have one but ignore it to a harmful degree!

Jason @ 2024-05-02T01:11 (+47)

If someone semi-regularly gets quoted in major publications, it is impractical to simply write them off as not to be taken seriously.

(Torres uses they/them pronouns, by the way)

yanni kyriacos @ 2024-05-02T06:53 (+7)

Hi Jason! Thanks for the reply. Would you mind laying out why you believe it is impractical to ignore Émile? I think this is a crux.

JWS @ 2024-05-02T19:22 (+44)

I think the main thing is their astonishing success. Like, whatever else anyone wants to say to Émile, they are damn hard working and driven. It's just in their case they are driven by fear and pure hatred of EA.

Approximately ~every major news media piece critical of EA (or covering EA with a critical lens, which are basically the same thing over the last year and a half) seems to link to/quote Émile at some point as a reputable and credible report on EA.

Sure, those more familiar with EA might be able to see the hyperbole, but it's not imo out there to imagine that Émile's immensely negative presentation of EA being picked out by major outlets has contributed to the fall of EA's reputation over the last couple of years.

Like, I wish we could "collectively agree to make Émile irrelevant", but EA can't do that unilaterally given the influence their[1] ideas and arguments have had. Those are going to have to be challenged or confronted sooner or later.

- ^

That is, Émile's

Jason @ 2024-05-03T15:51 (+24)

I basically agree with @JWS' response. Generally, one should respond to poor-quality criticism when there is a risk that it will actually interfere with mission accomplishment. (This is in contrast to quality criticism, engagement with which will hopefully make EA better).

In general, the default rule for dealing with bad-faith, delusional, wildly inaccurate, etc. criticism on X and in similar places should be to ignore it. Consider the flat-earther movement. Many people have viewed debunkings of the flat-earther beliefs, which were doubtless produced merely for entertainment value because lots of people think it's fun to sneer at people with such beliefs. The problem is that now many, many more people know there is a flat-earther movement. The debunkers have given their ideas reach and may have even given them a smidgen of legitimacy by implying they are worth debunking.

If Émile were a random person on X, this would likely be the correct approach. However, their ideas have reach and perceived legitimacy due to all the quotations in major media sources. Repeated appearances in such sources (that are not as a target of the piece) is a strong signal to most people that the individual is unlikely to be seriously dishonest from an intellectual perspective, is worth taking at least somewhat seriously, etc.

Unless the reader is doing a lot of their own investigation, the media imprimatur will still carry some weight. Ignoring someone with such a media imprimatur is ordinarily unwise, as it makes it harder for readers inclined to do independent research to find out what the critical flaws with that person's views are. Silence also makes it harder for future editors to detect that there are serious problems with the person and/or their views, and they are likely to defer to their colleagues' prior assessments that the person's reactions are worth covering in an EA-related story.

Ben Millwood @ 2024-05-02T01:17 (+4)

I guess it depends what you mean by taking seriously. I would say Émile is (correctly) not being taken seriously in the sense of expecting their criticisms to have merit?

Jason @ 2024-05-02T01:22 (+2)

Yeah -- based on the use of "consider someone a malicious actor" in the previous sentence, I read it as ~ "dismiss the person as a bad-faith actor who is trolling and ignore them"

Ben Millwood @ 2024-05-02T01:15 (+27)

Do you see EAs taking Émile seriously? Émile is banned from the forum, and I would guess most people who know the name at all know it as the name of someone who has been persistently unreasonable in their criticisms.

(btw though, your comment says "he shouldn't be taken seriously", but Émile is a they, not a he)

yanni kyriacos @ 2024-05-02T06:52 (+10)

Hi Ben! I suppose it depends on what each of us means by "taken seriously". I would prefer a post like this didn't get 185 karma, because I want us to collectively agree to make Émile irrelevant.

David M @ 2024-05-03T12:15 (+38)

Unfortunately, that's not a viable strategy. Émile is often the source for articles on EA in the media. Here are three examples from The Guardian.

Remmelt @ 2024-05-08T07:22 (+6)

I actually think EAs have been rather quick to dismiss outside folks when those folks have different views on the world (that can’t be easily translated into EA speak).

I would be much more careful about opening up to insiders that are widely praised as leading figures in the community (like SBF) than to outsiders whose views are commonly perceived to be in conflict with the community’s aims.

Owen Cotton-Barratt @ 2024-05-02T17:44 (+61)

When I first read this article I assumed it was written in good faith (and found it quite helpful). However, at this point I think it’s correct to assume that “Mark Fuentes” (an admitted pseudonym which has only been used to write about Torres) is misrepresenting their identity, and in particular likely has some substantial history of involvement with the EA community, and perhaps history of beef with Torres, rather than having come to this topic as a disinterested party.

This view is based on:

- Torres’s claims about patterns they’ve seen in criticism (part 3 of this; evidence I take as suggestive but by no means conclusive)

- Mark refusing to consider any steps to verify their identity, and instead inviting people to disregard the content in the section called “my story”

- Some impressions I can’t fully unpack about the tone and focus of Mark’s comments on this post (and their private message to me) seeming better explained by them not having been a disinterested party than by them having been one

- A view that we’re not supposed to give fully anonymous accounts the benefit of the doubt:

- … in order not to be open to abuse by people claiming whatever identity most supports their points;

- … because they’re not putting their reputation on the line;

- … because the costs are smaller if they are incorrectly smeared (it doesn’t attach to any real person’s reputation).

With that assumption, I feel kind of upset. I’m not a fan of Torres, but I think grossly misrepresenting authorship is unacceptable, and it’s all the more important to call it out when it’s coming from someone I might otherwise find myself on the same side of an argument as. And while I expect that much of the content of the post is still valid, it’s harder to take at face value now that I more suspect that the examples have been adversarially selected.

Nathan Young @ 2024-05-03T08:12 (+12)

Naaah this seems about right. I have always felt a bit this way about this post which is why I rarely share it. That said, smoke is predictive of fire, and I think the fact that these stories can be written is a bad sign.

Also Émile does harass people a bit. I know people who agree with them more than I do who are scared to interact, they use their big reach to bother people, they stay focused on perceived slights for a long time. I would understand why someone would do this semi-anonymously, but refusing to suggests what you say.

Jason @ 2024-05-02T18:05 (+9)

I'd add that Mark's rationale -- "disclosure always carries some risk" -- seems underspecified. I'm sure Mark, as a lawyer, can cobble together an NDA that sharply limits the verifier(s), and requires them to clear any reference to his name or identifying info from their computer after verification. Probably we'd be looking at flashing an ID, and providing a bar number / state of licensure. That would probably be enough, since AFAIK no major EA works as a public defender in NY.

Owen Cotton-Barratt @ 2024-05-02T22:04 (+4)

(If anyone disagreeing wants to get into explaining why, I'm interested. Honestly it would be more comforting to be wrong about this.)

Owen Cotton-Barratt @ 2024-05-03T08:16 (+5)

OK actually there's been a funny voting pattern on my top-level comment here, where I mostly got a bunch of upvotes and agree-votes, and then a whole lot of downvotes and disagree-votes in one cluster, and then mostly upvotes and agree-votes since then. Given the context, I feel like I should be more open than usual to a "shenanigans" hypothesis, which feels like it would be modest supporting evidence for the original conclusion.

Anyone with genuine disagreement -- sorry if I'm rounding you into that group unfairly, and I'm still interested in hearing about it.

DavidNash @ 2024-05-03T09:06 (+9)

Could also be the Bay Area/UK voting dynamic.

Owen Cotton-Barratt @ 2024-05-03T09:54 (+5)

Maybe? It seems a bit extreme for that; I think 5/6 of the "disagree" votes came in over a period of an hour or two mid-evening UK time. But it could certainly just be coincidence, or a group of people happening to discuss it and all disagree, or something.

DavidNash @ 2024-05-03T10:04 (+2)

Yeah, I don't know if that dynamic exists but it would be interesting if we could see what the forum looks like if you just count votes from different locations.

Nathan Young @ 2024-05-06T18:56 (+2)

Or indeed other kinds of clustering.

Nathan Young @ 2024-05-02T07:37 (+46)

I am personally pretty fond of Émile, but I see nothing here which seems untrue.

Émile makes huge claims against people even when they have done similar things. They wrote about 20 tweets about Will MacAskill having an old place of work on his academic page even though Émile has done ~ the same thing. It seems like both were accidents but I don't get why Will should be hounded but Émile can say it was a small error.

I know at least 1 person critical of EA who doesn't interact with Émile for fear that Émile will attack them on twitter.

I have never seen them admit fault or retract a statement, even when they say they will or the statement is obviously false. If I recall correctly, they wrote a set of tweets implying Hanania was an EA based on a blog subtitled "why I am not an EA".

Nathan Young @ 2024-05-06T19:02 (+24)

I tried to find errors in this article and wasn't able. Though I didn't try that hard.

I am not sure I want to tweet it, since it seems very adversarially selected. But of similar magnitude is the issue that people quote Torres as if they are a reasonable source when they aren't.

I wish someone would write a more balanced article. I don't think Torres was being racist to Boghossian's kid, for instance. I think they were harassing Boghossian though, and making light of jokes about the kid. And that's bad enough.

I find it hard to know what one should do over the long term about a badly behaved actor criticized by other badly behaved actors.

SummaryBot @ 2024-05-01T15:27 (+14)

Executive summary: The post presents evidence that Émile P. Torres has engaged in a pattern of dishonesty, harassment, stalking, and sockpuppetry in their interactions with the effective altruism community and others.

Key points:

- Torres harassed and stalked Peter Boghossian and Helen Pluckrose, including making racist comments about Boghossian's daughter.

- Torres made demonstrably false claims, such as being "forcibly removed" from a paper collaboration and misrepresenting their affiliation with the Centre for the Study of Existential Risk (CSER).

- Torres grossly distorted the views of several people, including Hilary Greaves, Andreas Mogensen, Nick Beckstead, Tyler Cowen, and Olle Häggström, to portray them and the longtermist philosophy as "white supremacist".

- Torres created fake accounts, including the "Alex Williams" sockpuppet, to evade bans, harass targets, and discredit opponents.

- When confronted with their misrepresentations, Torres either refused to issue corrections or briefly acknowledged mistakes before continuing the same behavior.

This comment was auto-generated by the EA Forum Team. Feel free to point out issues with this summary by replying to the comment, and contact us if you have feedback.

David Thorstad @ 2024-05-02T13:36 (+8)

It is possible that many complaints about Torres are true and also that Torres raises important concerns. I would not like to see personal criticism of Torres become a substitute for engagement with criticism by Torres.

I sometimes worry that concern about Torres has made the community less receptive to any criticism of racism, whether or not raised by Torres. That strikes me as an outcome that should definitely be avoided.

JWS @ 2024-05-02T19:28 (+48)

Again, a fan of you and your approach David, but I think you underestimate just how hostile/toxic Émile has been toward all of EA. I think it's very fair to substitute one for the other, and it's the kind of thing we do all the time in real, social settings. In a way, you seem to be emulating a hardcore 'decoupling' mindset here.

Like, at risk of being inflammatory, an intuition pump from your perspective might be:

It is possible that many complaints about Trump are true and also that Trump raises important concerns. I would not like to see personal criticism of Trump become a substitute for engagement with criticism by Trump.

I think many EAs view 'engagement with criticism by Torres' in the same way that you'd see 'engagement with criticism by Trump', that the critic is just so toxic/bad-faith that nothing good can come of engagement.

Geoffrey Miller @ 2024-05-02T21:16 (+25)

As a tangent, I think EAs should avoid using partisan political examples as intuition pumps for situations like this.

Liberals might think that 'engagement with criticism by Trump' would be worthless. But conservative crypto investors might think 'engagement with criticism by Elizabeth Warren' would be equally worthless.

Let's try to set aside the reflexive Trump-bashing.

Elizabeth @ 2024-05-02T19:30 (+10)

Is there any level of bad behavior that you think merits totally ignoring someone? Where is that line for you?

David Thorstad @ 2024-05-02T19:36 (+10)

As far as not interacting with someone, there are lots of people I ignore on the basis of bad behavior. As far as not taking the types of concerns they raise seriously, I'd like to think that it's possible to separate the person from the concerns.

For example, in my discipline (philosophy) there is a truly excellent ranking of philosophy departments. It's also run by a terrible human being. Most of us still use the ranking, just with the warning not to engage with this individual, which we repeat to our students.

Elizabeth @ 2024-05-03T00:25 (+13)

Have you seen people dismiss concerns because Torres shares them (as opposed to dismissing Torres as a source)? I haven't, but I'm sure it's happening somewhere. I agree that would be bad epistemics.

Jason @ 2024-05-02T14:41 (+6)

Fully agree on paragraph two. On paragraph one, I do think certain past conduct could justify dismissal of a critic without engagement on the merits, such as a bad enough history of unfair and arguably dishonest quotations/citations.

Once you can't trust the other dialogue partner not to do that, the conversation is over. And I don't think anyone should feel an obligation to cite-check bad work. If one has reached that point -- I express no opinion as one who has generally kept a distance from Torres drama -- it would be reasonable to respond only to work that had been vetted by reputable publications, or that had other legible indicia of trustworthiness.

Nathan Young @ 2024-05-02T13:55 (+6)

What specific criticisms of racism do you mean?

David Thorstad @ 2024-05-02T14:10 (+21)

I'm starting to think that I need to write these up. I thought you folks knew. I don't think the EA community will react very well if I do write these up, so I have tended to hold off.

Jason @ 2024-05-02T14:46 (+21)

I think that would be helpful -- Torres is just not the right messenger for this message in my opinion. The community has made up its mind on them, and there have been enough allegations of harassment on both sides that many voices in the middle would probably nope out of a Torres - EA Orthodoxy dialogue/debate.

David Thorstad @ 2024-05-02T14:49 (+16)

Pleasantly surprised by this (ditto for David Mathers' comment). Maybe I will try this? I care a great deal about this issue, but not (yet) enough to burn my ability to speak with EAs.

Nathan_Barnard @ 2024-05-02T19:30 (+26)

I'd be very surprised if this burnt your ability to speak with EAs.

Radical Empath Ismam @ 2024-05-05T01:48 (+24)

You should absolutely do it, and I would agree that you probably would not receive material backlash.

But I would be careful to assume that your success means that any plain old person can critique EA and receive a warm reception.

You've spent a long time building amicable relationships with EAs (I suspect by walking on eggshells, self-censoring - hope I am not being presumptuous here David).

David Thorstad @ 2024-05-05T02:52 (+2)

<3

Ben Millwood @ 2024-05-08T22:05 (+21)

I feel a bit worried that everyone would like to believe that EAs will receive criticism in good faith, so they will be excited to tell you this is true, even if they can't really be that confident. I hope they're right, but worry they're really saying "I, personally, would receive this in good faith, and I think others ought to, and I don't see why they wouldn't" or something like that.

I would guess from things like the Bostrom email controversy that you'll get at least a couple of frustrating comments, and perhaps a small handful of people who will be reflexively and unfairly judgemental. I would hope and guess that these experiences will be outweighed by people being grateful for you raising your concerns, including from people who ultimately disagree with the concerns. But obviously it's hard to be sure (even harder given that I don't know what the concerns are).

I'm a bit worried this comment raises the barrier to commentary, so let me try to lower it by saying you can feel free to DM me if you think talking to me privately about your post will help you get it published :)

David Mathers @ 2024-05-02T14:42 (+18)

You are a polite and careful critic, I think you will not get a mega-hostile reaction from most people. (If the worry is just that you won't persuade, then, well, you're not making things worse.)

titotal @ 2024-05-01T17:06 (+6)

As someone who is broadly on Torres's side of the fence, I find Torres's antics such as described here to be extremely annoying and unhelpful. I despise Boghossian et al.'s politics but the behavior here was clearly unjustified, and a lot of the other things here look like either deliberate dishonesty or a severe lack of reading comprehension. I think this sort of behavior just makes things harder for people who genuinely want to criticize the real flaws and harms in EA thinking.

In fairness, you should probably link Torres's response to the article (from part 3 onwards, although it doesn't actually address a lot of the accusations). Torres's account of receiving harassment and threats of violence seems plausible to me, although we can never know for sure (another reason not to use sockpuppets and other underhanded tactics).

EffectiveAdvocate @ 2024-05-01T17:19 (+48)

What do you mean by being on their side of the fence? It is quite hard for me to discern the underlying disagreement here. I feel like I am one of the most engaged EAs in my local community, but the beliefs Torres ascribes to EA are so far removed from my own that it is difficult to determine whether there is any actual substantial disagreement underlying all this nastiness in the first place.

Guy Raveh @ 2024-05-01T21:16 (+7)

I feel like I am one of the most engaged EAs in my local community, but the beliefs Torres ascribes to EA are so far removed from my own

This might have to do with "how local" your local community is. It seems to me that the weirder sides of EA (which I usually consider bad, but others here might not) are common in the EA hubs (Bay Area, Oxbridge, London, and the cluster of large groups in Europe) but not as common in other places (like here in Israel).

dr_s @ 2024-05-02T12:29 (+31)

But even then, a nuanced engagement with that would require making distinctions, not just going "all EA evil". Both Torres and Gebru these days are very invested in pushing this label of "TESCREAL" to bundle together completely different groups, from EAs who spend 10% of their income on malaria nets to rationalists who worry about AI x-risk to e/accs who openly claim that ASI is the next step in evolution. I think here there are two problems:

- abstract moral philosophy can't be for the faint of heart, you're engaging with the fundamental meaning of good or evil, you must be able to realize when a set of assumptions leads to a seemingly outrageous conclusion and then decide what to make of that. But if a moral philosopher writes "if we assume A and B, that leads us to concluding that it would be moral to eat babies", the reaction can't be "PHILOSOPHER ENDORSES EATING BABIES!!!!", because that's both a misunderstanding of their work and if universalized will have a chilling effect leading to worse moral philosophy overall. Sometimes entertaining weird scenarios is important, if only to realise the contradictions in our assumptions;

- for good or bad, left-wing thought and discourse in the last ten-fifteen years just hasn't been very rational. And I don't mean to say that the left can't be rational. Karl Marx's whole work was based on economics and an attempt to create a sort of scientific theory of history, love it or hate it the man obviously had a drive more akin to those of current rationalists than of current leftists. What is happening right now is more of a fad, a current of thought in which basically rationality and objectivity have been sort of depreciated as memes in the left wing sphere, and therapy-speak that centres the self and the inner emotions and identity has become the standard language of the left. And that pushes away a certain kind of mind very much, it feels worse than wrong, it feels like bullshit. As a result, the rationalist community kind of leans right wing on average for evaporative cooling reasons. Anyone who cares to be seen well by online left wing communities won't associate. Anyone who's high decoupling will be more attracted, and among those, put together with the average rationalist's love for reinventing the wheel from first principles, some will reach some highly controversial beliefs in current society that they will nevertheless hold up as true (read: racism). It doesn't help when terms like "eugenics" are commonly used to lump together things as disparate as Nazis literally sterilizing and killing people and hypothetical genetical modifications used to help willing parents have children that are on average healthier or live longer lives, obviously very different moral issues.

Honestly I do think the rationalist space needs to confront this a bit. People like Roko or Hanania hold pretty extreme right wing beliefs, to the point where you can't even really call them rational because they are often dominated by confirmation bias and the usual downfalls of political polarization. Longtermism itself is a pretty questionable proposition in my book, though that argument still lies in the space of philosophy for the most part. I would be all for the rise of a "rational left", both for the good of the rationalist community and for the good of the left, which is currently really mired in an unproductive circle jerk of emotionalism and virtue signalling. But this "TESCREAL" label if anything risks having the opposite effect, and polarizing people away from these philosophically incompetent and intellectually dishonest representatives of what's supposed to be the current left wing intelligentsia.

RyanCarey @ 2024-05-02T13:59 (+31)

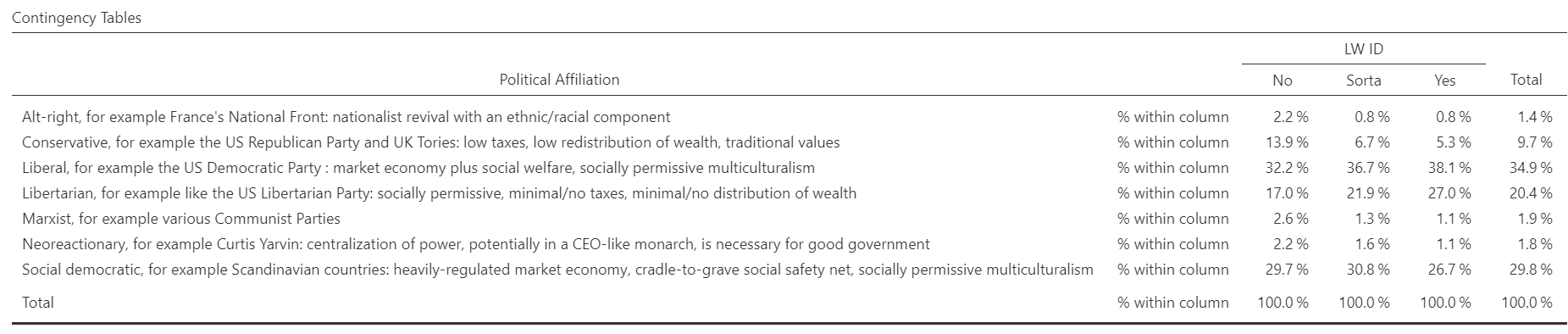

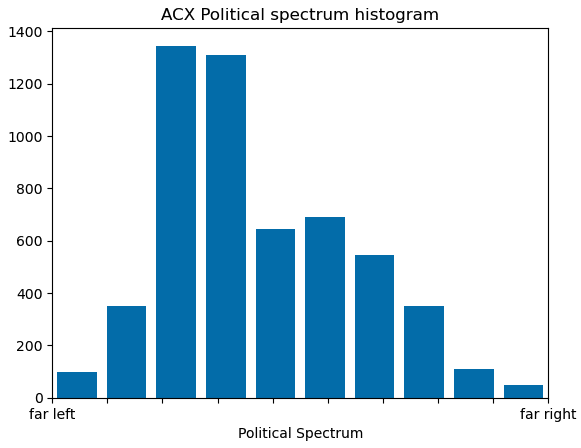

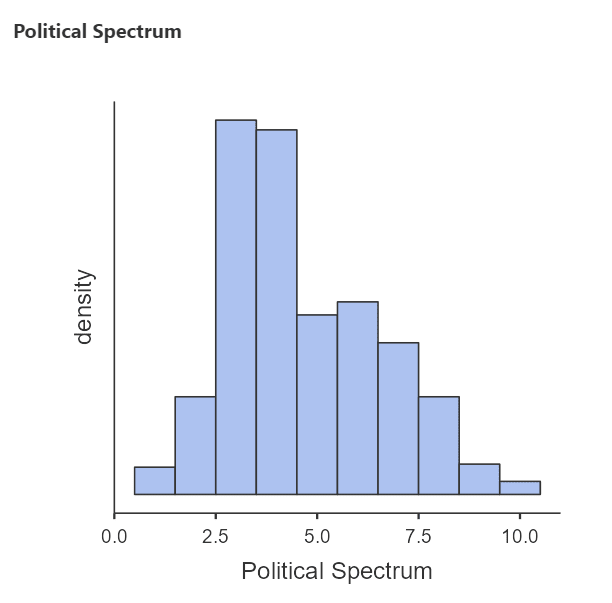

I think the trend you describe is mostly an issue with "progressives", i.e. "leftists" rather than an issue for all those left of center. And the rationalists don't actually lean right in my experience. They average more like anti-woke and centrist. The distribution in the 2024 ACX survey below has perhaps a bit more centre-left and a bit less centre and centre-right than the rationalists at large but not by much, in my estimation.

dr_s @ 2024-05-02T14:06 (+1)

Fair! I think it's hard to fully slot rationalists politically because, well, the mix of high decoupling and generally esoteric interests makes for some unique combinations that don't fit neatly in the standard spectrum. I'd definitely qualify myself as centre-left, with some more leftist-y views on some aspects of economics, but definitely bothered by the current progressive vibe that I hesitate to define "woke" since that term is abused to hell but am also not sure what to call since they obstinately refuse to give themselves a political label or even recognise that they constitute a noteworthy distinct political phenomenon at all.

How was this survey done, by the way? Self ID or some kind of scored test?

RyanCarey @ 2024-05-02T14:34 (+3)

This was just a "where do you rate yourself from 1-10" type question, but you can see more of the questions and data here.

dr_s @ 2024-05-02T14:39 (+1)

So the thing with self-identification is that I think it might suffer from a certain skew. I think there's fundamentally a bit of a stigma on identifying as right wing, and especially extreme right wing. Lots of middle class, educated people who perceive themselves as rational, empathetic and science-minded are more likely to want to perceive themselves as left wing, because that's what left wing identity used to prominently be until a bit over 15 years ago (which is when most of us probably had our formative youth political experiences). So someone might resist the label even if in practice they are on the right half of the Overton window. Must be noted though that in some cases this might just be the result of the Overton window moving around them - and I definitely have the feeling that we now have a more polarized distribution anyway.

David Mathers @ 2024-05-02T14:18 (+25)

Do we actually have hard statistical evidence that rationalists as a group "lean right"? I am highly unsympathetic to right rationalism, as you can see here: https://forum.effectivealtruism.org/posts/kgBBzwdtGd4PHmRfs/an-instance-of-white-supremacist-and-nazi-ideology-creeping?commentId=tNHd9C8ZbazepnDqs And it certainly feels true emotionally to me that "rationalism is right-wing". (Which is one reason I consider myself an EA but not a rationalist, although that is mostly just because I entered EA through academic philosophy not rationalism and other than reading a lot of SSC/ACX over the years, have only ever interacted with rationalists in the context of doing EA stuff.) Certain high profile individual rationalists seem to hold a lot of taboo/far-right beliefs (e.g. Scott Alexander on race and IQ here: https://www.astralcodexten.com/p/book-review-the-origins-of-woke). Roko and Hanania are of course even more right-wing (and frankly pretty gross in my view), though hopefully they are outliers.

BUT

Over the years, I have observed a general pattern with what we can call "rationalist-like" groups, i.e. lots of men, mostly straight and white, lots of autism broadly construed, an interest in telling the harsh truth, reverence for STEM and skepticism of the humanities, more self-declared right libertarians than the population average etc.:

1) The group gains a reputation for being right-wing, sexist, bigoted etc.

2) People in the group get very offended about this; I get a bit offended too: most people I have met within the group seem moderate, with a lean towards the centre-left rather than the centre-right. I feel as a person with mild autism that autistic truth-telling and bluntness is getting stigmatised by annoying, overemotive people who can't defend their views in a fair argument.

3) Gradually thought leaders of the group have a lot of scandals involving some combination of: misogyny, sexual harassment, Islamophobia, racism, eugenics, Western chauvinism etc.

4) I start thinking "ok, maybe [group] actually is right-wing, and I am either a sucker to be involved with it, or self-deceived about my own (self-declared liberal centrist) political preferences (after all, I do get irritated with the left a lot and believe some un-PC things, I think I am pro some transhumanist genetic engineering stuff in principle maybe, am not particularly left-or right-economically, maybe "liberalism" is what angry Marxists on twitter say it is etc. etc.)"

5) Some survey data comes out about the political views of rank-and-file members of [group]. They are overwhelmingly centre-left liberal. Where there is evidence on views about gender specifically, they are also pretty centre-left liberal. I feel even more confused.

Over the years I have seen this pattern to varying degrees with:

-Movement atheism (Can't find the survey data I once saw highlighted on twitter on this by a surprised critic of right-wing movement "skepticism" so you'll have to trust me on this one.)

-Analytic philosophy (relatively speaking: seen as a "right-wing" subject in the humanities in relative terms, and a bastion of sexism: both those might be true, but nonetheless, considerably more analytic philosophers endorse socialism than capitalism, and a slight majority are socialists: https://survey2020.philpeople.org/survey/results/5122)

-"Tech" itself: https://www.noahpinion.blog/p/silicon-valley-isnt-full-of-fascists

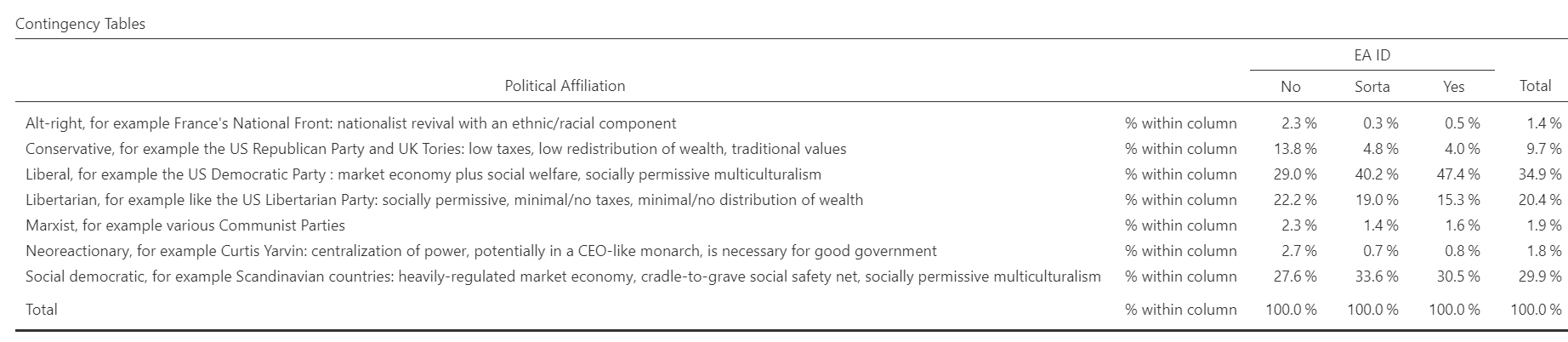

-EA itself (Compare our bad reputation on the left-as far as I can tell, with the fact that more EAs identify as "left" than "centre" "centre-right" "right" "libertarian" or "other" put together even when "centre-left" is also an option: https://forum.effectivealtruism.org/posts/AJDgnPXqZ48eSCjEQ/ea-survey-2022-demographics#Politics).

I have seen less hard data for the rationalists, but I do recall about ten years ago Scott Alexander trumpeting that the average LessWrong user had at least as positive a rating of "feminism" on a 1-5 scale as the average American woman. (Though the median American woman politically is probably like an elderly Latina church goer with economically left-wing socially conservative Catholic views?) And that whilst survey data of SSC readers at one point showed most endorsed "race realism" (I remember David Thorstad pointing this out on twitter), and I would not hesitate to describe ACX as "linked to the far-right", nonetheless I seem to remember that when Scott surveyed the readers on a 1-10 left-right scale, the median reader was a 4.something, i.e. very slightly more left than right identified:

I am not sure what is going on with this, probably a mixture of:

-People being self-deceived about their views and being more right-wing than they think they are, because the right is stigmatized in wider intellectual culture and people don't want to see themselves as part of it.

-People in these spaces hold mostly left views, but they mostly hold relatively uncontroversial left-wing views, or are not a prime target for the right-wing press for other reasons, whilst the minority of right-wing views they do hold tend to be radioactively controversial so they end up in the media.

-I mostly read centre-leftish media (The Guardian, Yglesias, Vox until the last couple of years) or critics of "wokeness" who are not straightforwardly conservative (Yglesias again, Singal), rather than conventional conservative stuff, so I hear about "woke"/left anger with these groups, but not right-wing anger with them. I also pay less attention to the latter because I just care less about it; it's not a source of personal angst for me in the same way.

-People who want to/get to become leaders in these sorts of spaces differ in their traits from the median member of the group in ways that make them predictably more right-wing than the average.

-*Becoming* a leader makes you more right-wing, since you like hierarchy more when you're on top of the local hierarchy.

-People confused a (perceived and/or real) tendency towards sexual bad behaviour amongst autistic nerds with a right-wing political position.

-These groups are well to the left of the median citizens, but they are to the right of the median person with a master's degree, so most people in "intellectual" spheres are correctly picking up on them being more right-wing than them and their friends, but wrongly concluding that makes them "right-wing" by the standards of the public as a whole.

-Anything stereotypically "masculine" outside of a strike by manual labourers gets coded as "right" these days, facts be damned.

-There is a distinctive cluster of issues around "biodeterminism" on which these groups are very, very right-wing on average-eugenics, biological race and gender differences etc.-but on everything else they are centre-left.

Larks @ 2024-05-02T14:53 (+20)

You have some good hypotheses. One other: a lot of left wing activist types (who are disproportionately noisy) have very strong ideological purity preferences, so a person with mainly left wing views but some right wing views can be condemned for the latter, and likewise a movement with mainly left wing people but a few right wing people can be condemned. Any sufficiently public person or movement, unless they are very homogeneous or very PR-conscious, will eventually reveal they have some diversity of views and hence be subject to potential censure.

RyanCarey @ 2024-05-02T14:52 (+16)

In my view, what's going on is largely these two things:

[rationalists etc] are well to the left of the median citizens, but they are to the right of [typical journalists and academics]

Of course. And:

biodeterminism... these groups are very, very right-wing on... eugenics, biological race and gender differences etc.-but on everything else they are centre-left.

Yes, ACX readers do believe that genes influence a lot of life outcomes, and favour reproductive technologies like embryo selection, which are right-coded views. These views are actually not restricted to the far-right, however. Most people will choose to have an abortion when they know their child will have a disability, for example.

Various of your other hypotheses don't ring true to me. I think:

- People aren't self-deceiving about their own politics very much. They know which politicians and intellectuals they support, and who they vote for.

- Rationalist leadership is not very politically different from the rationalist membership.

- Sexual misbehaviour doesn't change perceived political alignment very much.

- The high % of male rationalists is at most a minor factor in the difference between perceived and actual politics.

David Mathers @ 2024-05-02T14:54 (+6)

The race stuff is much more right-coded than some of the other genetic/disability stuff.

dr_s @ 2024-05-02T14:56 (+3)

Yes, ACX readers do believe that genes influence a lot of life outcomes, and favour reproductive technologies like embryo selection, which are right-coded views. They're actually not restricted to the far-right, however.

The problem is that this is really a short step away from "certain races have lower IQ and it's kinda all there is to it to explain their socio-economic status", and I've seen many people take that step. Roko and Hanania, whom I mentioned explicitly, absolutely do so publicly and repeatedly.

RyanCarey @ 2024-05-02T15:21 (+22)

It sounds like you would prefer the rationalist community prevent its members from taking taboo views on social issues? But in my view, an important characteristic of the rationalist community, perhaps its most fundamental, is that it's a place where people can re-evaluate the common wisdom, with a measure of independence from societal pressure. If you want the rationalist community (or any community) to maintain that character, you need to support the right of people to express views that you regard as repulsive, not just the views that you like. This could be different if the views were an incitement to violence, but proposing a hypothesis for socio-economic differences isn't that.

dr_s @ 2024-05-02T15:27 (+10)

Well, it's complicated. I think in theory these things should be open to discussion (see my point on moral philosophy). But now suppose that hypothetically there was incontrovertible scientific evidence that Group A is less moral or capable than Group B. We should still absolutely champion the view that wanting to ship Group A into camps and exterminate them is barbaric and vile, and that instead the humane and ethical thing to do is help Group A compensate for their issues and flourish to the best of their capabilities (after all, we generally hold this view for groups with various disabilities that absolutely DO hamper their ability to take part in society in various ways). But to know that at all can be also construed as an infohazard: just the fact itself creates the condition for a Molochian trap in which Group A gets screwed by nothing other than economic incentives and everyone else acting in their full rights and self-interest. So yeah, in some way these ideas are dangerous to explore, in the sense that they may be a case where truth-finding has net negative utility. That said, it's pretty clear that people are way too invested in them either way to just let sleeping dogs lie.

David Mathers @ 2024-05-02T15:09 (+2)