Why I'm excited about AI safety talent development initiatives

By JulianHazell @ 2025-08-28T18:12 (+61)

This is a linkpost to https://thirdthing.ai/p/ai-safety-funders-love-this-one-secret

Just a couple of weeks ago, I hit my two-year mark at Open Philanthropy. I’ve learned a lot over this period, but I’ve been especially surprised by how great the ROI is on AI safety talent development programs after digging into the numbers.

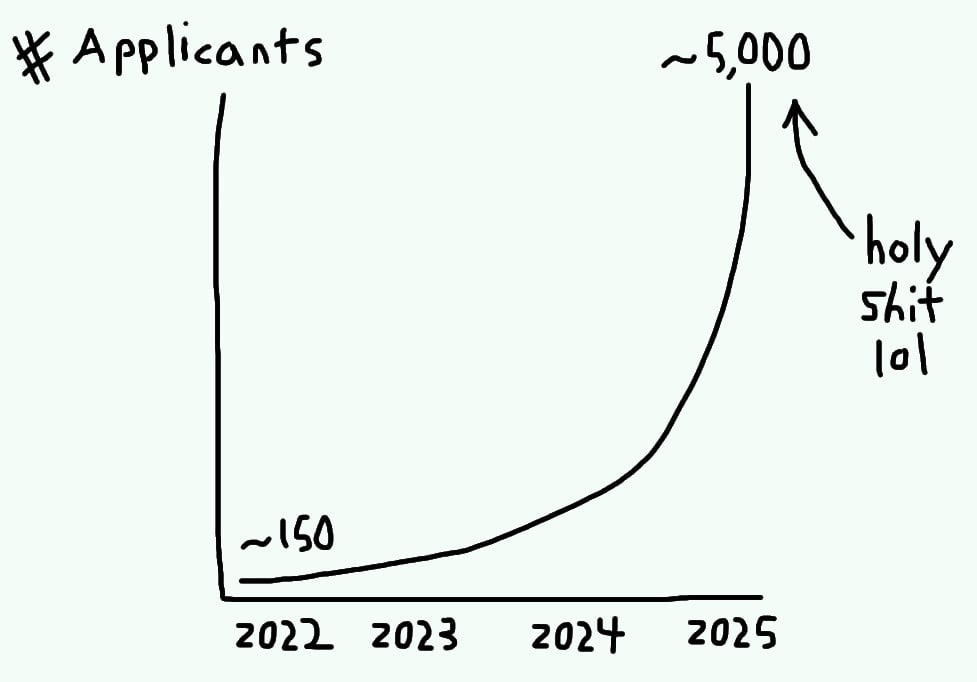

Consider this graph:

I’ve heard of at least four examples in the last year or so of this dynamic playing out at AI safety organizations that run fellowship programs. This number of applications isn’t the norm per se, but it happens often enough that we literally have an emoji in our Slack called :fellowship-growth: that we use to celebrate these cases.

So yeah, now that I’ve seen how the sausage is made, I’m officially talent development pilled.

A brief case for talent development

Talent gaps are still very much a thing. Key institutions ruthlessly compete to hire from a small pool of qualified + high-context + productive people who can work on hard AI safety challenges. I’m regularly asked for leads on such folks, and I often share fewer names than would be ideal.

But at the same time, a large number of smart people are also trying to break into the world of AI safety, sometimes with little success (at least initially).

Here’s how to reconcile this discrepancy.

A lot of smart, talented, ambitious people are keen to get involved, but can’t immediately contribute because they lack training, domain-specific knowledge, connections, and context. Organizations, on the other hand, want people who can pretty much kick ass right away.

This is a tricky equilibrium, but talent development programs can help. Even a single 3-month fellowship can provide some people with surprisingly huge career boosts (I should know, I largely credit my start in this field to one such program).

We're still operating under massive uncertainty. Back in 2020 and 2021, Luke Muehlhauser, who leads our work on AI governance and policy, wrote a few blog posts about AI governance priorities and challenges. At that time, one of the key bottlenecks he identified was a lack of strategic clarity on which intermediate goals should be pursued.

We have relatively more strategic clarity than in 2021, but let's be honest: AI safety still involves navigating a wildly uncertain future. Given this, investing in talent remains one of the most robust bets we can make — we still don’t have enough strategic clarity for this not to be the case.

There's a multiplier effect. People who benefit from these programs often go on to train and mentor others. It's a positive feedback loop — today's fellowship participant becomes tomorrow's program director.

The cost-effectiveness is often excellent. A well-designed, well-executed fellowship program can significantly alter someone's career trajectory for a relatively modest cost. It just isn’t that expensive to pay a fellow’s salary for three months. When you compare that to other interventions, the bang for your buck can be quite remarkable.

What to do about this

For donors: If you're looking to give to the AI safety space, talent development programs seem to deliver pretty great returns. If you're eager to fund programs like this (to the tune of $250k or more) and want to chat about specific opportunities, shoot me an email. If you’re looking to give less than that, I’d recommend donating to Horizon.

For entrepreneurs/people looking for their next thing: If you're between projects and have experience in the AI safety world, you should seriously consider starting up a new talent development organization (or starting a program at an existing organization). Some of the most successful programs started as scrappy experiments by one or two people who saw a gap and decided to fill it.

Here are a few examples of initiatives that are already happening in some form or another, but might serve as a good source of inspiration:

- A research fellowship program for mid-career technologists (e.g., a PhD and/or 5+ years of experience) looking to transition into AI policy, featuring placements at think tanks and other research institutions.

- A seminar program or series of workshops for economists, focused on explosive growth from AI R&D.

- A program for experienced cybersecurity professionals interested in learning more about/transitioning into AI security.

If running a program like this appeals to you, apply to our RFP, or speak to people running existing organizations to see if any of them could use help starting something up.

For people trying to break into the world of AI safety: I remember exactly what it was like to be where you are. It was hard and emotionally draining — breaking into such a competitive field requires real grit and determination. But keep applying, and keep pushing forward!

MichaelDickens @ 2025-08-29T04:10 (+22)

What do you think about what I wrote about Horizon Institute here? The info I could find about the org made me skeptical of its effectiveness, but I may have had misconceptions worth correcting; I assume you know more than I do.

tlevin @ 2025-08-29T19:25 (+17)

(Speaking for myself as someone who has also recommended donating to Horizon, not Julian or OP)

I basically think the public outputs of the fellows are not a good proxy for the effectiveness of the program (or basically any talent program). The main impact of talent programs, including Horizon, seems better measured by where participants wind up shortly after the program (on which Horizon seems objectively strong), plus a subjective assessment of how good the participants are. There just isn't a lot of shareable data/info on the latter, so I can't do much better than just saying "I've spent some time on this (rather than taking for granted that they're good) and I think they're good on average." (I acknowledge that this is not an especially epistemically satisfying answer.)

MichaelDickens @ 2025-08-29T20:17 (+6)

Sounds reasonable. My concern is less that the fellows aren't talented—I'm confident that they're talented; or that Horizon isn't good at placing fellows into important positions—it seems to have a good track record of doing that. My concern is more that the fellows might not use their positions to reduce x-risk. The public outputs of fellows are more relevant to that concern, I think.

Holly Elmore ⏸️ 🔸 @ 2025-08-29T03:58 (+5)

What are you developing this talent for? I basically think Horizon is good, but recruiting for Anthropic is bad. So it wouldn’t surprise me if this ROI is actually negative.

MichaelDickens @ 2025-08-29T04:13 (+6)

I think this is a legitimate concern.

Data on MATS alumni show that 80% are currently working on AI safety and 10% on capabilities. 10% is still too high but an 80/10 ratio still seems like positive ROI.

(It's not entirely clear to me because 27% are working at AI companies and I'm nervous about people doing AI safety work at AI companies, although my guess is still that it's net positive.)

JulianHazell @ 2025-08-29T12:19 (+2)

People who participate in talent development programs can go on to work in a variety of roles outside of the government and AI companies.

Peter Drotos 🔸 @ 2025-09-08T18:38 (+2)

“But keep applying, and keep pushing forward!”

Is it also a good use of the 5000 applicants’ time? Could there be clear bars someone should meet to have a non-negligible chance? Shouldn’t many focus on upskilling outside the bubble instead?

keivn @ 2025-09-12T18:04 (+1)

“There's a multiplier effect. People who benefit from these programs often go on to train and mentor others.”

^ issue is that this remains researcher and engineer centric

it sounds like what is needed are people who are skilled in navigating ambiguity, especially in a frontier context like this (unlikely to be your avg researcher or engineer)

outside of the box thinking in this domain requires outside of the box talent