CEA: still doing CEA things

By Ben_West🔸, Angelina Li, Oscar Howie @ 2023-07-14T22:07 (+65)

This is a linkpost for our new and improved public dashboard, masquerading as a mini midyear update

It’s been a turbulent few months, but amidst losing an Executive Director, gaining an Interim Managing Director, and searching for a CEO[1], CEA has done lots of cool stuff so far in 2023.

The headline numbers[2]

- 4,336 conference attendees (2,695 EA Global, 1,641 EAGx)

- 133,041 hours of engagement on the Forum, including 60,507 hours of engagement with non-Community posts (60% of total engagement on posts)

- 26 university groups and 33 organizers in the University Group Accelerator Program (UGAP)

- 622 participants in Virtual Programs[3]

There’s much more, including historical data and a wider range of metrics, in the dashboard!

Updates

The work of our Community Health & Special Projects and Communications teams lends itself less easily to stat-stuffing, but you can read recent updates from both:

- Community Health & Special Projects: Updates and Contacting Us

- How CEA’s communications team is thinking about EA communications at the moment

What else is new?

Our staff, like many others in the community (and beyond), have spent more time this year thinking about how we should respond to the rapidly evolving AI landscape. We expect more of the community’s attention and resources to be directed toward AI safety at the margin, and are asking ourselves how best to balance this with principles-first EA community building.

Any major changes to our strategy will have to wait until our new CEO is in place, but we have been looking for opportunities to improve our situational awareness and experiment with new products, including:

- Exploring and potentially organizing a large conference focussed on existential risk and/or AI safety

- Learning more about and potentially supporting some AI safety groups

- Supporting AI safety communications efforts

These projects are not yet ready to be announcements or commitments, but we thought it worth sharing at a high level as a guide to the direction of our thinking. If they intersect with your projects or plans, please let us know and we’ll be happy to discuss more.

It’s worth reiterating that our priorities haven’t changed since we wrote about our work in 2022: helping people who have heard about EA to deeply understand the ideas, and to find opportunities for making an impact in important fields. We continue to think that top-of-funnel growth is likely already at or above healthy levels, so rather than aiming to increase the rate any further, we want to make that growth go well.

You can read more about our strategy here, including how we make some of the key decisions we are responsible for, and a list of things we are not focusing on. And it remains the case that we do not think of ourselves as having or wanting control over the EA community. We believe that a wide range of ideas and approaches are consistent with the core principles underpinning EA, and encourage others to identify and experiment with filling gaps left by our work.

Impact stories

And finally, it wouldn’t be a CEA update without a few #impact-stories:[4]

Online

- Training for Good posted about their EU Tech Policy Fellowship on the EA Forum. 12 of the 100+ applications they received came from the Forum, and 6 of these 12 applicants made it onto the program, which had 17 slots in total.

Community Health & Special Projects

- Following the TIME article about sexual misconduct, people have raised a higher-than-usual number of concerns from the past that they had noticed or experienced in the community but hadn't raised at the time. In many of these cases we’ve been able to act to reduce risk in the community, such as warning people about inappropriate behavior and removing people from CEA spaces when their past behavior has caused harm.

Communications

- The team’s work on AI safety communications has led to numerous examples of positive coverage in the mainstream media.

Events

- An attendee at the Summit on Existential Security fundraised more than 20% of their organisation’s budget through a connection they made at the event.

- An attendee at EAG Bay Area consequently changed their plans, applying to and being accepted by the Charity Entrepreneurship Incubation Program.

- EAGxIndia helped accelerate a project focused on AI safety movement-building in the region, which has now had funding approved.

Groups

- A co-team lead for a new-in-2023 EAGx conference credited Virtual Programs and their interactions with their program facilitator as the main reason they were involved in organising the conference and in EA more generally.

- A University Groups retreat led to one attendee working with an EA professional on her Honors program, and her interning with CEA.

- EA Sweden, a group supported by our Post-uni Groups team via the Community Building Grants program, founded The Mimir Institute for Long Term Futures Studies.

[1] We’re now looking for a “CEO” rather than an “ED”, but the role scope remains unchanged.

[2] As of the end of Q2, 2023.

[3] Counting up until the May 2023 virtual programs cohort.

[4] We use impact stories (and our internal #impact-stories channel) to illustrate some of the ways we’ve helped people increase their impact by providing high-quality discussion spaces to consider their ideas, values and options for and about making impact, and connecting them to advisors, experts and employers.

AnonymousEAForumAccount @ 2023-07-18T00:17 (+25)

I want to start off by saying how great it is that CEA is publishing this dashboard. Previously, I’ve been very critical about the fact that the community didn’t have access to such data, so I want to express my appreciation to Angelina and everyone else who made this possible. My interpretation of the data includes some critical observations, but I don’t want that to overshadow the overall point that this dashboard represents a huge improvement in CEA’s transparency.

My TLDR take on the data is that Events seem to be going well, the Forum metrics seem decent but not great, Groups metrics look somewhat worrisome (if you have an expectation that these programs should be growing), and the newsletter and effectivealtruism.org metrics look bad. Thoughts on metrics for specific programs, and some general observations, below.

Events

FWIW, I don’t find the “number of connections made” metric informative. Asking people at the end of a conference how many people they’d hypothetically feel comfortable asking for a favor seems akin to asking kids at the end of summer camp how many friends they made that they plan to stay in touch with; if you asked even a month later you’d probably get a much lower number. The connections metric probably provides a useful comparison across years or events, I just don’t think the unit or metric is particularly meaningful. Whereas if you waited a year and asked people how many favors they’ve asked from people they met at an event, that would provide some useful information.

That said, I like that CEA is not solely relying on the connections metric. The “willingness to recommend” metric seems a lot better, and the scores look pretty good. I found it interesting that the scores for EAG and EAGx look pretty similar.

Online (forum)

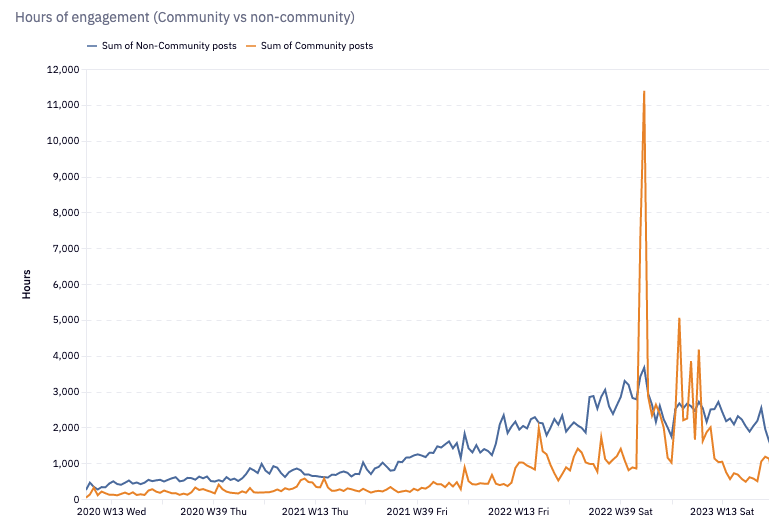

It doesn’t seem great that after a couple of years of steady growth, hours of engagement on the forum seem to have spiked from FTX (and to a lesser extent WWOTF), then fallen to roughly April 2022 levels. Views by forum users follow the same pattern, as does the number of posts with >2 upvotes.

Monthly users seem to have spiked a lot around WWOTF (September 2022 users are >50% higher than March 2022 users), and are now dropping, but haven’t reverted as much as the other metrics. Not totally sure what to make of that. It would be interesting to see how new users acquired in mid-2022 have behaved subsequently.

Online (effectivealtruism.org)

It seems pretty bad that traffic to the homepage and intro pages grew only very modestly from early 2017 to early 2022 (CEA has acknowledged mistakenly failing to prioritize this site over that period). WWOTF, and then FTX, both seem to have led to enormous increases in traffic relative to that baseline, and homepage traffic remains significantly elevated (though it is falling rapidly).

IMO it is very bad that WWOTF doesn’t seem to have driven any traffic to the intro page, and that intro page traffic is at its lowest level since the data starts in April 2017 and has been falling steadily since FTX. Is CEA doing anything to address this?

Going forward, it would be great if the dashboard included some kind of engagement metric(s) such as average time on site in addition to showing the number of visitors.

Online (newsletter)

Subscriber numbers grew dramatically from 2016-2018 (perhaps boosted by some ad campaigns during the period of fastest growth?), then there were essentially no net additions of subscribers in 2019-2020. We then saw very modest growth in 2021 and 2022, followed by a decline in subscribers year to date in 2023. So 2019, 2020, and 2023 all seem problematic, and from the end of 2018 to today the subscriber count has only grown about 15% (total, not annually) despite huge tailwinds (e.g. much more spent on community building and groups, big investments in promoting WWOTF, etc.). And the 2023 YTD decline seems particularly bad. Do we have any insight into what’s going on? There are obviously people unsubscribing (have they given reasons why?); are we also seeing a drop in people signing up?

Going forward, it would be great if the dashboard included some kind of engagement metric(s) in addition to showing the number of subscribers.

Groups (UGAP)

I was somewhat surprised there wasn’t any growth between spring 2022 and spring 2023, as I would have expected a new program to grow pretty rapidly (like we saw between fall 2021 and fall 2022). Does CEA expect this program to grow in the future? Are there any specific goals for number of groups/participants?

Groups (Virtual)

The data’s kind of noisy, but it looks like the number of participants has been flat or declining since the data set starts. Any idea why that’s the case? I would have expected pretty strong growth. The number of participants completing all or most of the sessions also seems to be dropping, which seems like a bad trend.

The exit scores for the virtual programs have been very consistent for the last ~2 years. But the level of those scores (~80/100) doesn’t seem great. If I’m understanding the scale correctly, participants are grading the program at about a B-/C+ type level. Does CEA feel like it understands the reason for these mediocre scores and has a good sense of how to improve them?

General observations

- When metrics are based on survey data, it would be very helpful to provide a sample size and any information that would help us think about response biases (e.g. are the virtual group exit scores from all participants? Just those who completed all the content? What percentage of EAG/x attendees completed a survey?)

- It would be helpful to have a way to export the dashboard data into a csv file.

- I’d be much more interested in reading CEA’s interpretation of the dashboard data vs. “impact stories.” I do think anecdata like impact stories are useful, but I view the more comprehensive data as a lot more important to discuss. I’d also like to see that discussion contextualize the dashboard data (e.g. placing the OP’s headline numbers in historical context as they are almost impossible to interpret on a standalone basis).

- My general expectation is that most growth metrics (e.g. number of forum users) should be improving over time due to large increases in community building funding and capacity. For quality metrics (e.g. willingness to recommend EAG) I think/hope there would be some upward bias over time, but much less so than the growth metrics. I’d be curious whether CEA agrees with these baseline expectations.

jessica_mccurdy @ 2023-07-20T14:34 (+12)

Hi! Just responding on the groups team side :)

This is a good observation. As we mentioned in this retrospective from last Fall, we decided to restrict UGAP to only new university groups to keep the program focused. In the past, we had more leeway and admitted some university groups that had been around longer. I think we have hit a ~ plateau on the number of new groups we expect to pop up each semester (around 20-40) so I don't expect this program to keep growing.

We piloted a new program for organizers from existing groups in the winter alongside the most recent round of UGAP. However, since this was a fairly scrappy pilot we didn't include it on the dashboard. We are now running the full version of the program and have accepted >60 organizers to participate. This may scale even more in the future but we are more focused on improving quality than quantity at this time. It is plausible that we combine this program and UGAP into a single program with separate tracks but we are still exploring.

We are also experimenting with some higher-touch support for top groups which is less scalable (such as our organizer summit). This type of support also lends itself less well to dashboards but we are hoping to produce some shareable data in the future.

AnonymousEAForumAccount @ 2023-07-21T16:06 (+2)

Thanks for providing that background Jessica, very helpful. It'd be great to see metrics for the OSP included in the dashboard at some point.

It might also make sense to have the dashboard provide links to additional information about the different programs (e.g. the blog posts you link to) so that users can contextualize the dashboard data.

ronsterlingmd @ 2023-08-07T06:37 (+1)

I have been an "unofficial" golden rule guy all my life (do they have an org or certification?). I have been following EA for many years and have done my part in my profession over the years to think in terms of more than monetizing, aggregating, streamlining, making bucks. As a healthcare provider in the US until my recent retirement, I provided thousands of hours of pro bono or sliding-scale work to meet the real needs of the clients I assisted; as you may know, US healthcare has been manipulated into aggressive money-making schemes.

Intro over. I found that without a good domain name or service mark, my work on the web with providing up to date mental health care information did not get much attention. When I dove in using the domain name DearShrink.com, attention to and distribution of reliable information became much more effective.

I am wondering if CEA has thought about a service mark or motto that would be easier to understand, identify with, get attached to, and remember, such as BeKindAndProsper.com (.org, .net)?

If so, please advise me as to how I could move these domain names on to the future they did not get a chance to have with me (I was a little optimistic...). I sure don't want to sell those domain names to some manipulative merch to make themselves appear good.

Let me know

Ron Sterling MD (retired)

Author, writer, neuroscientist

Ben_West @ 2023-09-29T17:20 (+2)

Thanks for the suggestion! I think CEA is unlikely to change our domain, but if you have some you would like to go to a good use, you could consider putting them up on ea.domains.

ronsterlingmd @ 2023-09-30T08:17 (+4)

Absolutely, thank you! I keep looking for a way to move several humanistic domain names on to new owners and users, since I did not get to use them as I wished. Now that I am getting close to 80 earth years, I figure I should get these potentially useful domain names into the right hands. I have not found the right place for that so far. So, your suggestion is awesome.

I wasn't really suggesting a change in the EA branding or marks. I was hoping I could find just what you led me to. I will post there soon, after I introduce myself a bit more. I am trying to support the Stop Starlink Satellites movement and have a simple website that hopefully can be found by coincidence if anyone searches for "how do I stop my service?" or things like that: www.stopstarlink.com

OllieBase @ 2023-07-18T10:19 (+12)

Thanks for your comment and feedback. I work on the events team, so just responding to a few things:

> Asking people at the end of a conference how many people they’d hypothetically feel comfortable asking for a favor seems akin to asking kids at the end of summer camp how many friends they made that they plan to stay in touch with; if you asked even a month later you’d probably get a much lower number

We do ask attendees about the number of new connections they made at an event a few months later and FYI it's (surprisingly, for the reasons you describe) roughly the same. LTR dips slightly lower a few months after the event, but not by much.

I agree it's an imperfect metric though, and we're exploring whether different survey questions can give us better info. As you note, we also supplement this with the LTR score as well as qualitative analysis of written answers and user interviews. You can look at some more thorough analyses in my recent sequence; I'd love more feedback there.

> I found it interesting that the scores for EAG and EAGX look pretty similar.

Just to note that I don't think LTR closely tracks the overall value of the event either. We find that events that reach a lot of new people often (but not always) get high LTRs, so a high LTR score at an EAGx event could be due to first-timers feeling excited/grateful to attend their first event. I think that is a meaningfully good thing, but it is perhaps worth mentioning.

> What percentage of EAG/x attendees completed a survey?

It's usually 20 - 50% of attendees in the surveys referenced by the data you see. Thanks for the suggestion of including no. of respondents.

AnonymousEAForumAccount @ 2023-07-20T02:33 (+2)

Thanks for sharing the survey response rate, that’s helpful info. I’ve shared some other thoughts on specific metrics via a comment on your other post.

Angelina Li @ 2023-07-18T20:51 (+8)

Thanks, I’m glad you like the dashboard and are finding it a productive way to engage with our work! That's great feedback for us :) As a quick aside, we actually launched this in late December during our end-of-year updates; this version just includes some fresh data + a few new metrics.

I’m not sure if all our program owners will have the capacity to address all of your program-specific thoughts, but I wanted to provide some high level context on the metrics we included for now.

When selecting metrics to present, we had to make trade-offs in terms of:

- How decision guiding a particular metric is internally

- How much signal we think a metric is tracking on our goals for a particular project

- How legible this is to an external audience

- How useful this is as an additional community-known data point to further outside research

  - E.g. Metrics like “number of EAG attendees” and “number of monthly active Forum users” seem potentially valuable as a benchmark for the size of various EA-oriented products, but aren’t necessarily the core KPIs we are trying to improve for a given project or time period – for one thing, the number of monthly active Forum users only tracks logged-in users!

- The quality of the underlying data

  - E.g. We’ve tried to include as much historical data as we could to make it easier to understand how CEA has changed over time, but our program data from many years ago is harder for current staff to verify and I expect is generally less reliable.

- Whether something is tracking confidential information

- Time constraints

  - Updating this dashboard was meant as a ~2 week sprint for me, and imposes a bunch of coordination costs on program owners to align on the data + presentation details.

So as a general note, while we generally try to present metrics that meet many of the above criteria, not all of these are numbers we are trying to blindly make go up over time. My colleague Ollie’s comment gives an example of how the metrics presented here (e.g. # total connections, LTR) are contextualized and supplemented with other evidence when we evaluate how our programs are performing.

Also thanks for your feature requests / really detailed feedback. This is a WIP project not (yet) intended to be comprehensive, so we’ll keep a note of these suggestions for if and when we make the next round of updates!

AnonymousEAForumAccount @ 2023-07-20T02:27 (+3)

Thank you for this thoughtful response. It is helpful to see this list of tradeoffs you’re balancing in considering which metrics to present on the dashboard, and the metrics you’ve chosen seem reasonable. Might be worth adding your list to the “additional notes” section at the top of the dashboard (I found your comment more informative than the current “additional notes” FWIW).

While I understand some metrics might not be a good fit for the dashboard if they rely on confidential information or aren’t legible to external audiences, I would love to see CEA provide a description of what your most important metrics are for each of the major program areas even if you can’t share actual data for these metrics. I think that would provide valuable transparency into CEA’s thinking about what is valuable, and might also help other organizations think about which metrics they should use.

John Salter @ 2023-07-15T13:00 (+16)

What did the conferences cost to run?

AnonymousAccount @ 2023-07-15T15:40 (+14)

Thank you for the transparency!

At $2M/year for this forum, it seems that CEA is willing to pay $7.50 for the average hour of engagement and $40/month for the average monthly active user.
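For readers who want to check the arithmetic, here is a rough sketch of how figures like these can be derived. It uses the commenter's $2M/year budget figure and the post's headline engagement number (133,041 hours as of the end of Q2 2023). Doubling the half-year hours to get an annual total is an assumption made here for illustration, and the function names are hypothetical; none of this is CEA's own accounting.

```python
# Inputs taken from the thread; everything else is an illustrative assumption.
ANNUAL_FORUM_BUDGET_USD = 2_000_000   # commenter's budget figure, not verified
H1_2023_ENGAGEMENT_HOURS = 133_041    # headline number, as of end of Q2 2023


def cost_per_engagement_hour(annual_budget_usd: float, half_year_hours: float) -> float:
    """Cost per hour of engagement, assuming the second half-year matches the first."""
    annual_hours = half_year_hours * 2  # assumption: H2 engagement equals H1
    return annual_budget_usd / annual_hours


def implied_monthly_active_users(annual_budget_usd: float, cost_per_user_month_usd: float) -> float:
    """Number of monthly active users implied by a given cost per user per month."""
    monthly_budget = annual_budget_usd / 12
    return monthly_budget / cost_per_user_month_usd


hourly_cost = cost_per_engagement_hour(ANNUAL_FORUM_BUDGET_USD, H1_2023_ENGAGEMENT_HOURS)
implied_users = implied_monthly_active_users(ANNUAL_FORUM_BUDGET_USD, 40)

print(f"Cost per engagement hour: ${hourly_cost:.2f}")
print(f"Implied monthly active users at $40/user/month: {implied_users:.0f}")
```

Under these assumptions the hourly figure comes out at roughly $7.50, and $40 per user per month corresponds to a little over 4,000 monthly active users. Both results are only as good as the budget figure and the annualization step.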

I found this much higher than I expected!

I understand that most of the value of spending on the forum is not in current results but in future returns, and in having a centralized place to "steer" the community. But this just drove home to me how counter-intuitive the cost-effectiveness numbers for AI-safety are compared to other cause areas.

E.g. I expect Dominion to have cost 10-100x less per hour of engagement, and the amount of investment on this forum to not make sense for animal welfare concerns.

I was really, really happy to see transparency on the team now focusing more on AI-safety, after months/years of rumors and the list of things we are not focusing on still including "Cause-specific work (such as ... AI safety)". I think it's a great change in terms of transparency and greatly appreciate you sharing this.

Linch @ 2023-07-15T23:19 (+24)

> But this just drove home to me how counter-intuitive the cost-effectiveness numbers for AI-safety are compared to other cause areas.
>
> E.g. I expect Dominion to have cost 10-100x less per hour of engagement, and the amount of investment on this forum to not make sense for animal welfare concerns.

Your first sentence might well be true. But the comparison with the Dominion documentary doesn't seem very relevant. If I consider the primary uses of the forum for animal welfare folks, I expect them to be some combination of a) sharing and critiquing animal-welfare related research and strategy, b) fundraising, and c) hiring.[1]

So the comparison with an animal welfare-related documentary for mass consumption is less apt, and perhaps you should compare with an hour of engagement for animal-welfare related journals (for the research angle) or fundraisers with fairly wealthy[2] animal welfare-interested people.

[1] Looking at the animal welfare posts on the forum sorted by relevance, I think my summary is roughly true. Feel free to correct me!

[2] Though I suppose normal fundraisers are with very wealthy people, and not just fairly wealthy ones? But I imagine EAF onlookers to have a long tail, wealth-wise.

Ben_West @ 2023-07-16T18:32 (+13)

Thanks for the comment!

- I understand your intuition behind dividing the hours by expenses, but I don't think those numbers are correct; this comment has a bit more detail.

- I also agree with Linch that Dominion is probably not the right comparison; a conference like CARE might be a better reference class of "highly dedicated people seriously engaging with complex animal welfare topics"

- That being said, I think you have an underlying point which is underappreciated: we sometimes talk about "cause prioritization" as though one cause would be universally better than another, but it's entirely consistent to be willing to pay more for someone to read a forum post about AI safety than about animal welfare, while simultaneously being willing to pay more for an animal welfare documentary than an AI safety one. I think it is, in fact, true that many people I talk to would pay more for an hour of someone reading about AI safety than an hour of reading about animal welfare, and that is a relevant fact for figuring out how we should prioritize our work.

- (This argument also should make us aware of bias in the users: people who use the EA Forum are disproportionately interested in causes where the EA Forum is useful. My guess, though I'm not sure, is that the EA Forum is more oriented towards existential risk than EA overall is, for this reason. Thanks to AGB for pointing this out to me.)

Jason @ 2023-07-15T18:10 (+7)

Given the recent increase in Forum expenditures, it'd probably be better to compute the hourly rate using some measure that incorporates historical cost, if one were available. We wouldn't expect to see much of the effect of the recent budget expansion (much of which went to hiring developers) reflected in the 2023 level of engagement.

AnonymousAccount @ 2023-07-15T18:27 (+2)

I fully agree. If I understand correctly, the extra hires were in June 2022, and these numbers are from January 2023. I think the main point still stands though.

MHR @ 2023-07-21T19:41 (+12)

Due to FTX's collapse and EA orgs' successes at growing the number of people interested in EA careers, it appears that EA causes are trending back toward being funding constrained rather than talent constrained. However, the metrics being tracked on the dashboard seem more relevant to addressing talent and engagement bottlenecks than they do to addressing funding bottlenecks. Do you have thoughts about how CEA can help attract more funding to EA causes (either via existing core projects or via new initiatives), and about what metrics would make sense to track for those efforts?

Oscar Howie @ 2023-07-26T08:16 (+12)

Thanks for raising this. I agree that the dial has shifted somewhat toward funding constraints for non-mysterious reasons, and I’d note that this is true across different cause areas. CEA is funding constrained too! Our Interim MD has framed this as the start of EA’s Third Wave.

I’ve been leading internally on how we might unconstrain CEA itself, and (in its very early stages) thinking about how we might coordinate fundraising activities between EA meta (~movement building) orgs. But I want to be explicit that there are a couple nearby things CEA is not currently doing and hasn’t been in recent history:

- Fundraising for non-CEA projects

- Grantmaking to non-CEA projects

- Similarly, EA Funds was spun out of CEA in 2020

- The bulk of our current grantmaking is to the groups and EAGx conferences we directly support and their organizers

The dashboard is accurately capturing the fact that we have not (yet) made any big strategic changes to our programs intended to address funding bottlenecks. But it does disguise the fact that we’ve done some things within existing projects, including launching an effective giving subforum (later shut down) and supporting an Effective Giving Summit. I’m confident that there are more things we could do to move the needle here, although I’m less confident that they would be more impactful than our existing programs, even in the new funding environment.

There is important work to be done figuring out the costs and benefits of a more money-oriented approach, but I’d caution that it’s unlikely CEA makes any major changes to our current strategy until we appoint a new CEO. Worth noting, though, that we’re open to pretty major changes as part of that process.

pseudonym @ 2023-07-16T02:04 (+11)

The dashboard is neat!

Some questions about the AI safety related updates.

There are risks to associating AI safety work with the EA brand, as well as risks to non-AI portions of EA if the "Centre for Effective Altruism" moves towards more AI work. On risks to AI safety:

- What's CEA's comparative advantage in communicating AI safety efforts? I'd love to see some specific examples of positive coverage in the mainstream media that was due to CEA's efforts.

- What are CEA's plans in making sure the EA brand does not negatively affect AI safety efforts, either in community building, engaging policy makers, engaging the public, or risking exacerbating further politicization between AI safety and ethics folk?

- Which stakeholders in the AI safety space have you spoken to or have already planned to speak to about CEA's potential shift in strategic direction?

On risks to non-AI portions of EA:

- Which stakeholders outside of the AI safety space have you spoken to or have already planned to speak to about CEA's potential shift in strategic direction?

- If none, how are you modelling the negative impacts non-AI cause areas might suffer as a result, or EA groups who might move away from EA branding as a result when making this decision?

- How much AI safety work will CEA be doing before the team decides that the "Centre for Effective Altruism" is no longer the appropriate brand for its goals, or whether an offshoot/different organization is a better home for more AI safety heavy community building work?

Lastly, the linked quote of "list of things we are not focusing on" currently includes "cause-specific work (such as community building specifically for effective animal advocacy, AI safety, biosecurity, etc.)", which seems to be in tension somewhat with the AI related updates (e.g. AI safety group support). I'd love to see the website updated accordingly once the decision is finalized so this doesn't contribute to more miscommunication in future.

Ben_West @ 2023-07-19T16:54 (+3)

Glad you like the dashboard! Credit for that is due to @Angelina Li.

I think we are unlikely to share names of the people we talk to (mostly because it would be tedious to create a list of everyone and get approval from them, and presumably some of them also wouldn’t want to be named) but part of the motivation behind EA Strategy Fortnight was to discuss ideas about the future of EA (see my post Third Wave Effective Altruism), which heavily overlaps with the questions you asked. This includes:

- Rob (CEA’s Head of Groups) and Jessica (Uni Groups Team Lead) wrote about some trade-offs between EA and AI community building.

- Multiple non-CEA staff also discussed this, e.g. Kuhanj and Ardenlk

- Regarding “negative impacts to non-AI causes”: part of why I was excited about the EA’s success no one cares about post (apart from trolling Shakeel) was to get more discussion about how animal welfare has benefited from being part of EA. Unfortunately, the other animal welfare posts that were solicited for the Fortnight have had delays in publishing (as have some GH&WB posts), but hopefully they will eventually be published.

- My guess is that you commenting about why having these posts public would be useful to you might increase the likelihood that they eventually get published, so I would encourage you to share that, if you indeed do think it would be useful.

- I also personally feel unclear about how useful it is to share our thinking in public; if you had concrete stories of how us doing this in the past made your work more impactful I would be interested to hear them. The two retrospectives make me feel like Strategy Fortnight was nice, but I don't have concrete stories of how it made the world better.

- There were also posts about effective giving, AI welfare, and other potential priorities for EA.

On comms and brand, Shakeel (CEA’s Head of Comms) is out at the moment, but I will prompt him to respond when he returns.

On the list of focus areas: thanks for flagging this! We’ve added a note with a link to this post stating that we are exploring more AI things.

pseudonym @ 2023-08-10T03:57 (+1)

Thanks for the response! Overall I found this comment less helpful than I'd hoped (though I wasn't one of the downvoters). My best guess for why is that most of the answers seem like they should be fairly accessible, but I feel like I didn't get as much substance as I originally expected.[1]

1) On the question "How are you modelling the negative impacts non-AI cause areas might suffer as a result, or EA groups who might move away from EA branding as a result when making this decision?", I thought it was fairly clear I was mainly asking about negative externalities to non-AI parts of the movement. That there are stories of animal welfare benefitting from being part of EA in the past doesn't exclude the possibility that such a shift in CEA's strategic direction is a mistake. To be clear, I'm not claiming that it is a mistake to make such a shift, but I'm interested in how CEA has gone about weighing the pros and cons when considering such a decision.

As an example, see Will giving his thoughts on AI safety VS EA movement building here. If he is correct, and it is the case that AI safety should have a separate movement building infrastructure to EA, would it be a good idea for the "Centre for Effective Altruism" to do more AI safety work, or more "EA qua EA" work? If the former, what kinds of AI safety work would they be best placed to do?

These are the kinds of questions on which I'm curious about CEA's thoughts and would like more visibility. Generally, I'm more interested in CEA's internal views than in a link to an external GHD/animal welfare person's opinion here.

My guess is that you commenting about why having these posts being public would be useful to you might increase the likelihood that they eventually get published, so I would encourage you to share that, if you indeed do think it would be useful.

I also personally feel unclear about how useful it is to share our thinking in public; if you had concrete stories of how us doing this in the past made your work more impactful I would be interested to hear them.

2) If the organization you run is called the "Centre for Effective Altruism", and your mission is "to nurture a community of people who are thinking carefully about the world’s biggest problems and taking impactful action to solve them", then it seems relevant to share your thinking with the community of people you purport to nurture, to help them decide whether this is the right community for them, and to help other people in adjacent spaces decide what other gaps might need to be filled. This is especially so given that one of CEA's previous mistakes was that CEA "stak[ed] a claim on projects that might otherwise have been taken on by other individuals or groups that could have done a better job than we were doing". This is also part of why I asked about CEA's comparative advantage in the AI space.

3) My intention with the questions around stakeholders is not to elicit a list of names, but to learn the extent to which CEA has engaged other stakeholders who are going to be affected by its decisions, and how much coordination has been done in this space.

4) Lastly, also just following up on the Qs you deferred to Shakeel on CEA's comparative advantage, i.e., specific examples of positive coverage in the mainstream media that was due to CEA's efforts, and the two branding related Qs.

Thanks!

- ^

For example, you linked a few posts, but Rob's post discusses why a potential community builder might consider AI community building, while Jess focuses on uni group organizers. Rob states "CEA’s groups team is at an EA organisation, not an AI-safety organisation", and "I think work on the most pressing problems could go better if EAs did not form a super-majority (but still a large enough faction that EA principles are strongly weighted in decision making)", while Jess states she is "fairly confused about the value of EA community building versus cause-specific community building in the landscape that we are now in (especially with increased attention on AI)". All this is to say that I don't get from those posts a compelling case that supports CEA as an organization doing more AI safety work etc.

Larks @ 2023-07-15T17:56 (+10)

133,041 hours of engagement on the Forum, including 60,507 hours of engagement with non-Community posts

I can only imagine how bad this ratio was before the redesign if it's still worse than 1:1; if anything I think this suggests community content should be even further deprioritized.

Ben_West @ 2023-07-16T18:38 (+14)

Thanks for the suggestion! This comment from Lizka contains substantial discussion of community versus noncommunity; for convenience, I will reproduce one of the major graphs here:

You can see that there was a major spike around the FTX collapse, and another around the TIME and Bostrom articles, which might make the "average" unintuitive, since it's heavily determined by tail events.

Angelina Li @ 2023-07-15T20:02 (+5)

Thanks for engaging with this!

To chime in with a quick data note: "hours of engagement on the Forum" also includes time spent not viewing posts (e.g. time spent viewing events, local groups, and other non-post content on the Forum), so comparing those two numbers will give you a slightly misleading picture of the Community v. non-Community engagement breakdown.

The Forum saw a total of 39,965 hours of engagement with Community posts up until the end of Q2 in 2023, so the actual ratio between Community:non-Community post engagement is more like 2:3. I'll edit the top level post to make this clear (thanks for the nudge).
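As a quick sanity check, the ratio can be recomputed from the figures quoted in this thread (133,041 total Forum hours, 60,507 non-Community post hours, 39,965 Community post hours). This is only a sketch of the arithmetic; the variable names are illustrative and are not taken from the dashboard.

```python
from fractions import Fraction

# Hours of engagement through Q2 2023, as quoted in this thread.
total_forum_hours = 133_041      # includes time on non-post content
non_community_hours = 60_507     # engagement with non-Community posts
community_hours = 39_965         # engagement with Community posts

# Engagement with posts only, and the remainder spent on non-post content.
total_post_hours = community_hours + non_community_hours
non_post_hours = total_forum_hours - total_post_hours

# Share of post engagement that went to non-Community posts.
non_community_share = non_community_hours / total_post_hours

# Closest simple fraction (denominator at most 5) to Community:non-Community.
simple_ratio = Fraction(community_hours, non_community_hours).limit_denominator(5)

print(f"Post engagement: {total_post_hours:,} hours")
print(f"Non-post engagement: {non_post_hours:,} hours")
print(f"Non-Community share of post engagement: {non_community_share:.0%}")
print(f"Community:non-Community is roughly {simple_ratio.numerator}:{simple_ratio.denominator}")
```

Comparing 60,507 against the 133,041 total understates the non-Community share, because the total also counts time on events, groups, and other non-post pages.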

I can only imagine how bad this ratio was before the redesign if it's still worse than 1:1

FYI, the dashboard we published has this metric broken out over time, so you don't have to imagine this! :) (I don't work on the Online team, but I built this dashboard.)

Larks @ 2023-07-15T20:59 (+2)

Thanks for this explanation!

Nathan Young @ 2023-07-15T07:03 (+4)

Training for Good posted about their EU Tech Policy Fellowship on the EA Forum. 12/100+ applicants they received came from the Forum, and 6 of these 12 successfully made it on to the program, out of 17 total program slots.

This is wild.

Cillian Crosson @ 2023-07-15T08:44 (+11)

Not sure why you unendorsed this.

I run Training for Good & also found this wild!

Nathan Young @ 2023-07-17T21:28 (+8)

so I misread it. (I thought the course was run by the EU)

why do you think it's wild? surely the forum is a central vector of EA stuff?

Vasco Grilo @ 2024-02-03T08:52 (+3)

Hi,

Thanks for having broken down the dashboard into 3 (events, online and groups).

You have a graph for the "Number of EA Forum posts with 2+ upvotes (per month)". I think it would be better to have graphs for the number of posts per month and their mean karma.

You have a graph for the "Overall EA Newsletter subscriber count (at the start of each month)". Have you considered having one for the number of subscribers of the EA Forum Digest?

Angelina Li @ 2024-02-03T23:30 (+4)

Thanks Vasco!

You have a graph for the "Number of EA Forum posts with 2+ upvotes (per month)". I think it would be better to have graphs for the number of posts per month and their mean karma.

Curious why — what insights do you hope to gain from this / are there specific questions you have about the Forum that you're hoping to answer with this data?

You have a graph for the "Overall EA Newsletter subscriber count (at the start of each month)". Have you considered having one for the number of subscribers of the EA Forum Digest?

Thanks, we've considered this but haven't prioritized so far. I'd be interested to know if there was a particular reason you think this would be useful, or if it's more that generally having this sort of data available to people would be nice? (Both seem like reasonable motivations ofc!)

Vasco Grilo @ 2024-02-04T04:21 (+2)

Thanks for the reply, Angelina!

I mostly thought that data on:

- Karma could be relevant to the question of whether EA Forum content has been declining in quality (or not), and how this relates to quantity (number of posts). Besides the mean karma of the posts published in a given month, it would also be interesting to know the distribution (e.g. 10th and 90th percentile).

- Subscribers to the EA Forum Digest would be relevant as a measure of the growth (or lack thereof) of EA Forum.
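To make the karma suggestion concrete, here is a minimal sketch of a per-month summary (post count, mean karma, and 10th/90th percentiles). The `posts` data and the `monthly_karma_summary` helper are hypothetical and made up for illustration; they do not reflect real Forum data or any Forum API.

```python
from collections import defaultdict
from statistics import mean, quantiles

# Hypothetical (month, karma) pairs; made-up values, not Forum data.
posts = [
    ("2023-05", 3), ("2023-05", 12), ("2023-05", 45), ("2023-05", 7), ("2023-05", 150),
    ("2023-06", 1), ("2023-06", 20), ("2023-06", 9), ("2023-06", 60), ("2023-06", 5),
]

def monthly_karma_summary(posts):
    """Return {month: summary} with post count, mean karma, and p10/p90 karma."""
    by_month = defaultdict(list)
    for month, karma in posts:
        by_month[month].append(karma)

    summary = {}
    for month, karmas in sorted(by_month.items()):
        # quantiles(n=10) returns 9 cut points; the first is p10, the last is p90.
        # This sketch assumes each month has at least two posts.
        deciles = quantiles(karmas, n=10, method="inclusive")
        summary[month] = {
            "posts": len(karmas),
            "mean_karma": mean(karmas),
            "p10": deciles[0],
            "p90": deciles[-1],
        }
    return summary

for month, stats in monthly_karma_summary(posts).items():
    print(month, stats)
```

Reporting percentiles alongside the mean matters here because a single very high-karma post can pull the mean well above what a typical post receives.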

more that generally having this sort of data available to people would be nice

I had this in mind too. I do not know how useful it would be to make the above available to the public, but in general I lean towards thinking that more transparency is good. However, depending on how much effort is required to make that sort of data public, it might not be worth it.

Vasco Grilo @ 2023-11-20T14:11 (+2)

Thanks for the dashboard! It is a great summary of the output of the various programs. Have you considered adding metrics on the costs / resources invested to run the programs? I like that 80,000 Hours has publicly available data on the FTEs spent on their various programs. This is useful because it allows one to assess cost-effectiveness (ratio between outputs and cost).

Angelina Li @ 2023-12-18T19:25 (+4)

Hey Vasco! Sorry for such a late response. I imagine you've already seen the data we have on CEA's 2023 budget (although note that we expect actual expenditures in 2023 to be lower than the budget) — hope that helps.

Thanks for suggesting the idea of adding some sort of cost data to our dashboard, I'll keep it in mind for the future :)

Vasco Grilo @ 2023-12-18T21:57 (+4)

Thanks, Angelina! I had actually not looked into that.

Vasco Grilo @ 2023-07-20T15:19 (+2)

Thanks for the update!

26 university groups and 33 organizers in UGAP

What is UGAP? University Groups And Programs?

Angelina Li @ 2023-07-20T17:11 (+3)

University Group Accelerator Program - I'll update the top level post for clarity too, thanks!

Conor Barnes @ 2023-07-15T02:05 (+1)

I hadn't seen the previous dashboard, but I think the new one is excellent!

Ben_West @ 2023-07-16T18:39 (+8)

Thanks! @Angelina Li deserves the credit :)