US public attitudes towards artificial intelligence (Wave 2 of Pulse)

By Jamie E, David_Moss @ 2025-09-12T14:15

This report is the second in a sequence detailing the results of Wave 2 of the Pulse project by Rethink Priorities (RP). Pulse is a large-scale survey of US adults aimed at tracking and understanding public attitudes towards effective giving and multiple impactful cause areas over time. Wave 2 of Pulse was fielded between February and April of 2025 with ~5,600 respondents.[1] Results from our first wave of Pulse, fielded from July-September of 2024, can be found here, with the forum sequence version here.

This part of the Wave 2 sequence focuses on attitudes towards AI.

Findings at a glance

Survey features:

- Wave 2 of Rethink Priorities’ Pulse project surveyed ~5,600 US adults between February and April 2025, following up on Wave 1 (July–September 2024).

- Results were poststratified to be representative of the US adult population with respect to Age, Sex, Income, Racial identification, Educational attainment, State and Census Region, and Political party identification.
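The sketch below illustrates what poststratification does. It combines per-cell estimates into a single population-level figure by weighting each demographic cell by its size in the target population. The function name `poststratify`, the cells, and all numbers are hypothetical. The report does not describe how Pulse's cell estimates were modeled or which population figures were used. Treat this as a sketch of the general technique, not RP's implementation.

```python
from typing import Dict, Tuple

# A demographic cell, e.g. (sex, age band). Real poststratification typically
# crosses many more variables (income, education, region, party, ...).
Cell = Tuple[str, ...]


def poststratify(cell_estimates: Dict[Cell, float],
                 population_counts: Dict[Cell, float]) -> float:
    """Population-weighted average of per-cell estimates.

    cell_estimates: an estimate of the outcome within each cell, e.g. the share
        worrying at least "A fair amount" (hypothetical inputs).
    population_counts: how many adults fall in each cell in the target
        population, e.g. from census tables (hypothetical inputs).
    """
    missing = set(cell_estimates) - set(population_counts)
    if missing:
        raise KeyError(f"no population count for cells: {sorted(missing)}")
    total = sum(population_counts[c] for c in cell_estimates)
    weighted = sum(cell_estimates[c] * population_counts[c] for c in cell_estimates)
    return weighted / total


# Hypothetical example: two cells with made-up estimates and population counts.
estimates = {("Female",): 0.40, ("Male",): 0.30}
counts = {("Female",): 51, ("Male",): 49}
print(round(poststratify(estimates, counts), 3))
```

With these made-up inputs the weighted estimate is about 0.351. That is slightly above the unweighted cell average of 0.35, because the higher-worry cell makes up slightly more of the population.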

Worry about AI:

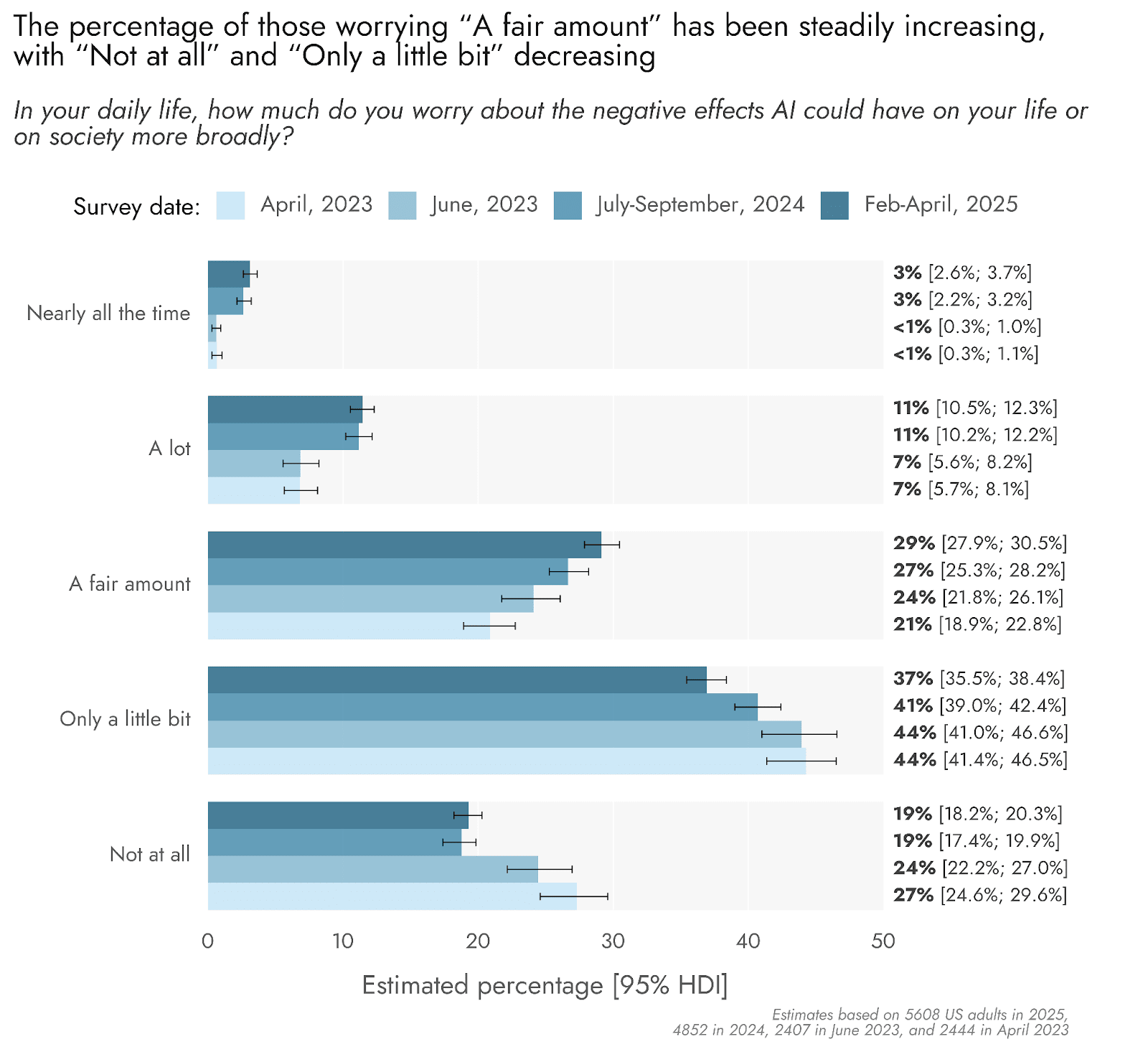

- Worry over the possible effects of AI has been increasing since our earliest assessments in 2023, though a majority of US adults still report worrying “Only a little bit” (37%) or “Not at all” (19%).

- Since Wave 1 of Pulse in July-September 2024, worry has increased only marginally, mostly due to a shift from “Only a little bit” (41% in 2024 vs. 37% in 2025) to “A fair amount” (27% in 2024 vs. 29% in 2025). We will look to Wave 3 of Pulse to see whether worry about AI is plateauing.

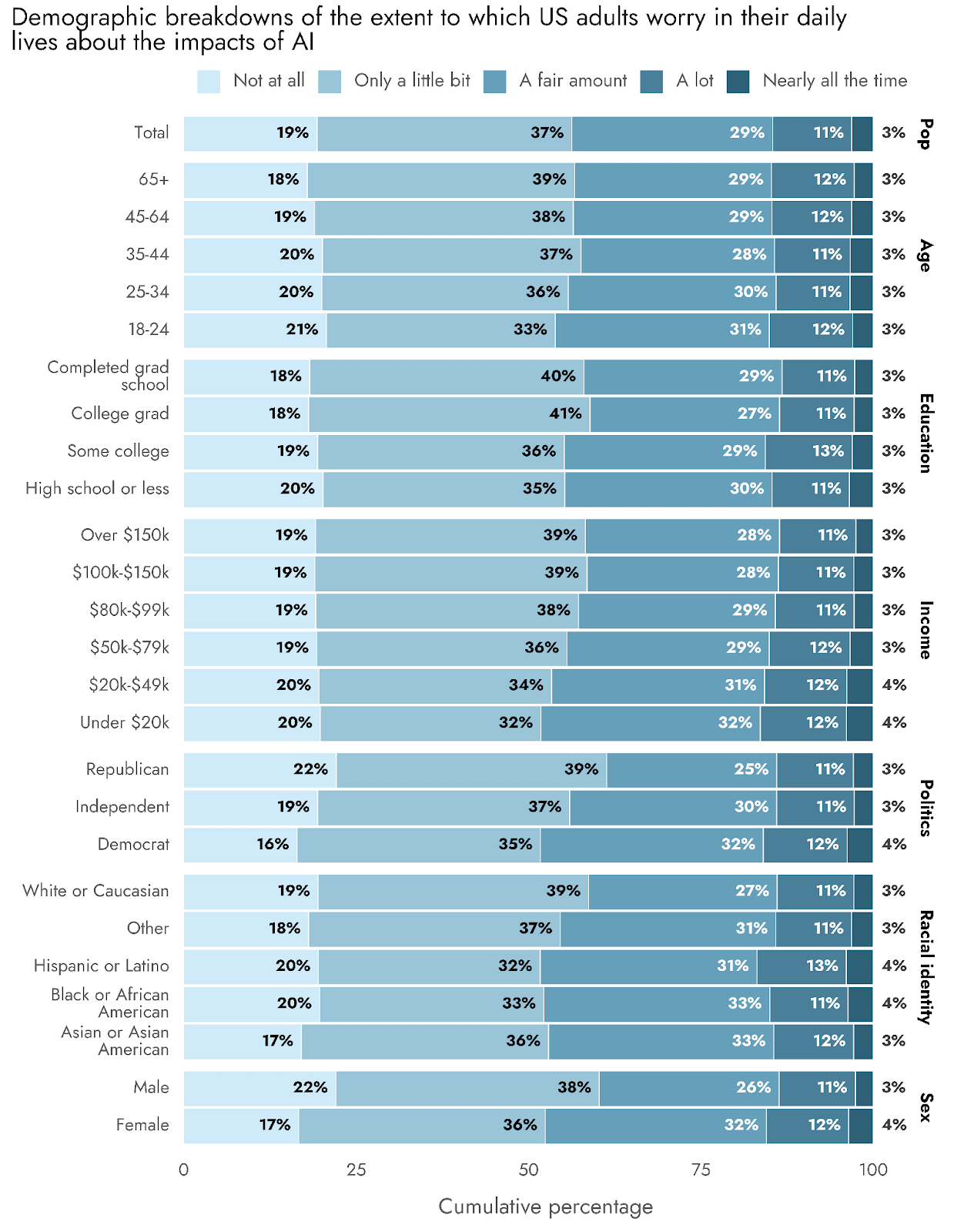

- Worry about AI tended to be higher among several demographic groups of US adults: Female respondents, those with lower household incomes, non-White respondents, and Democrats, with Independents falling between Democrats and Republicans.

Stances on AI:

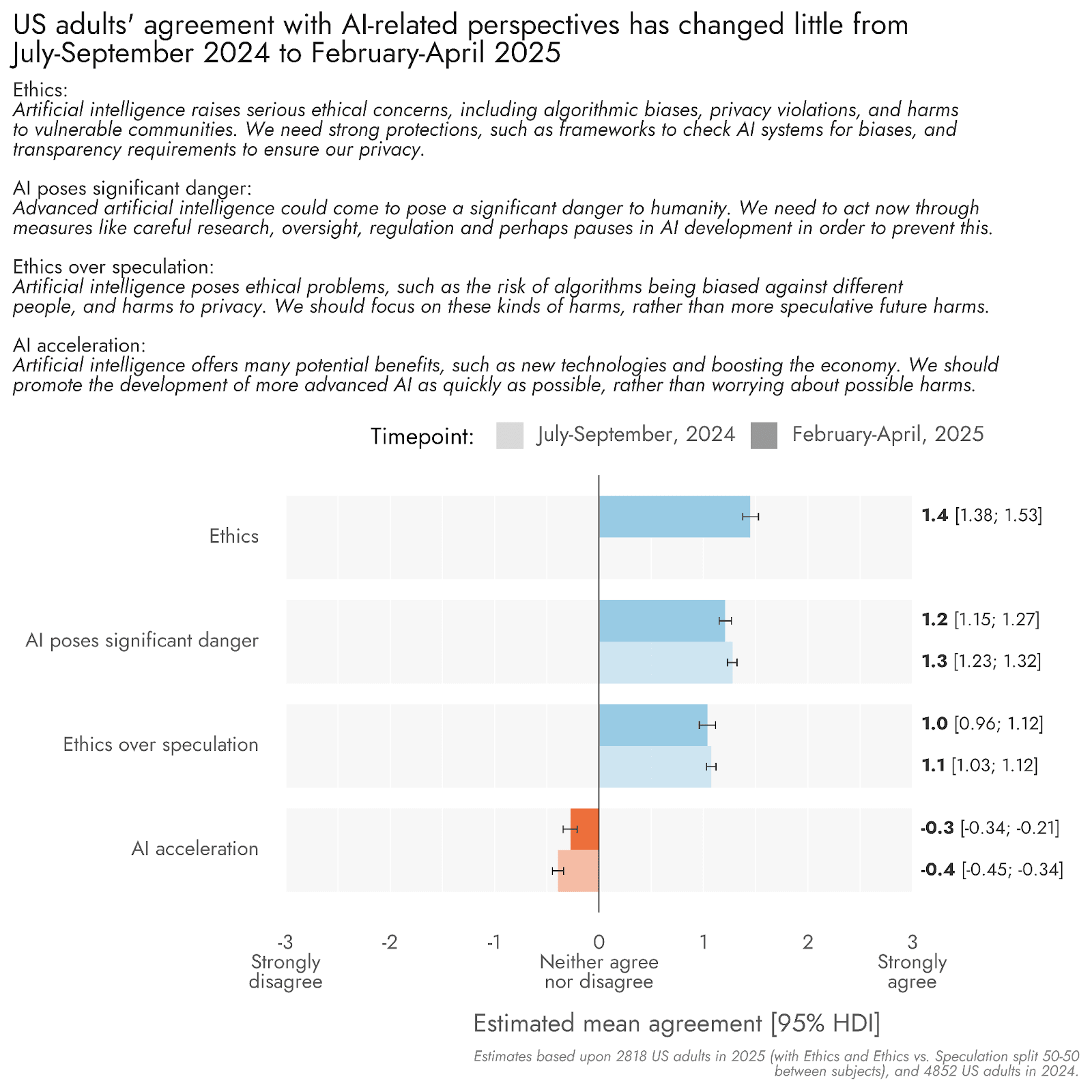

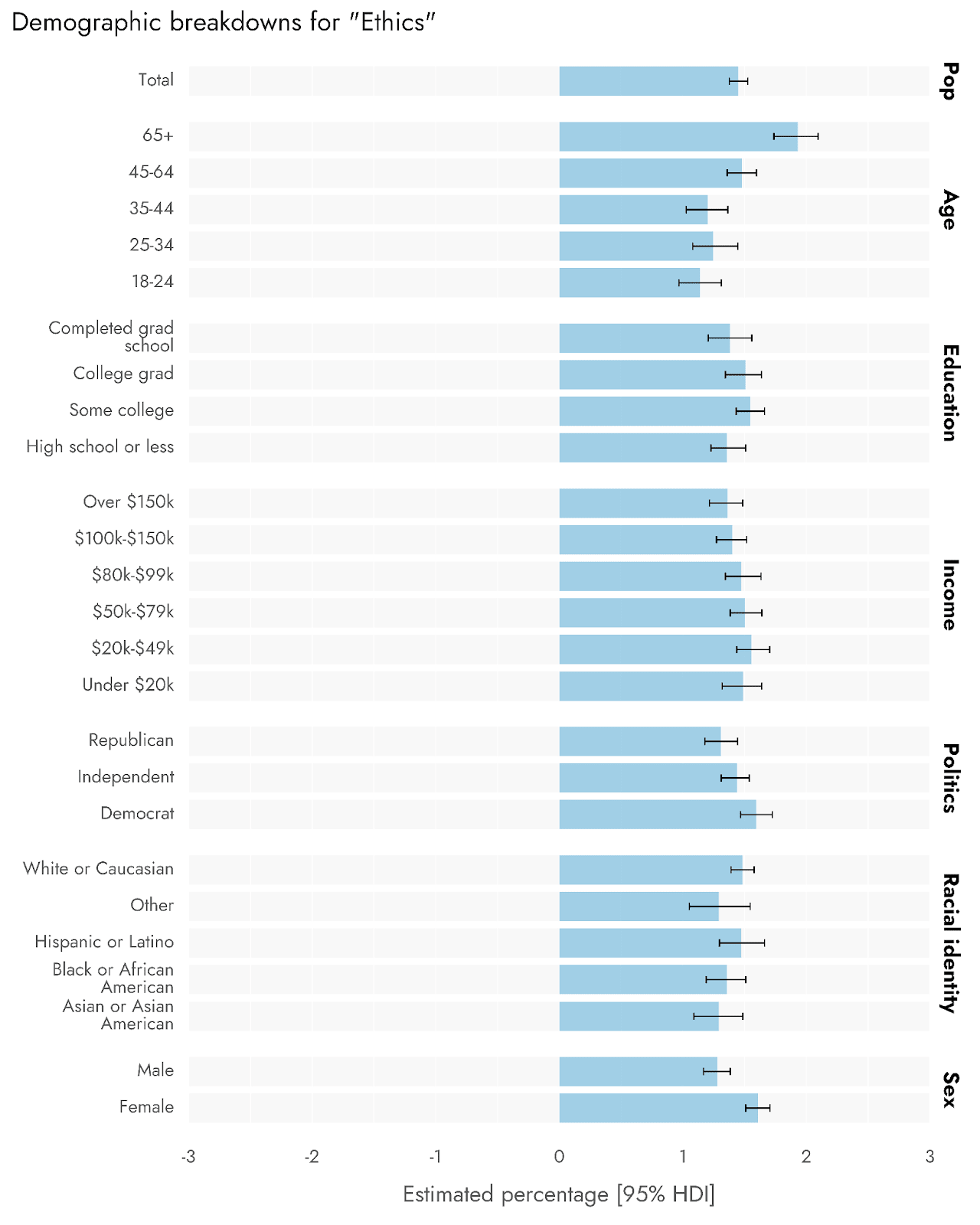

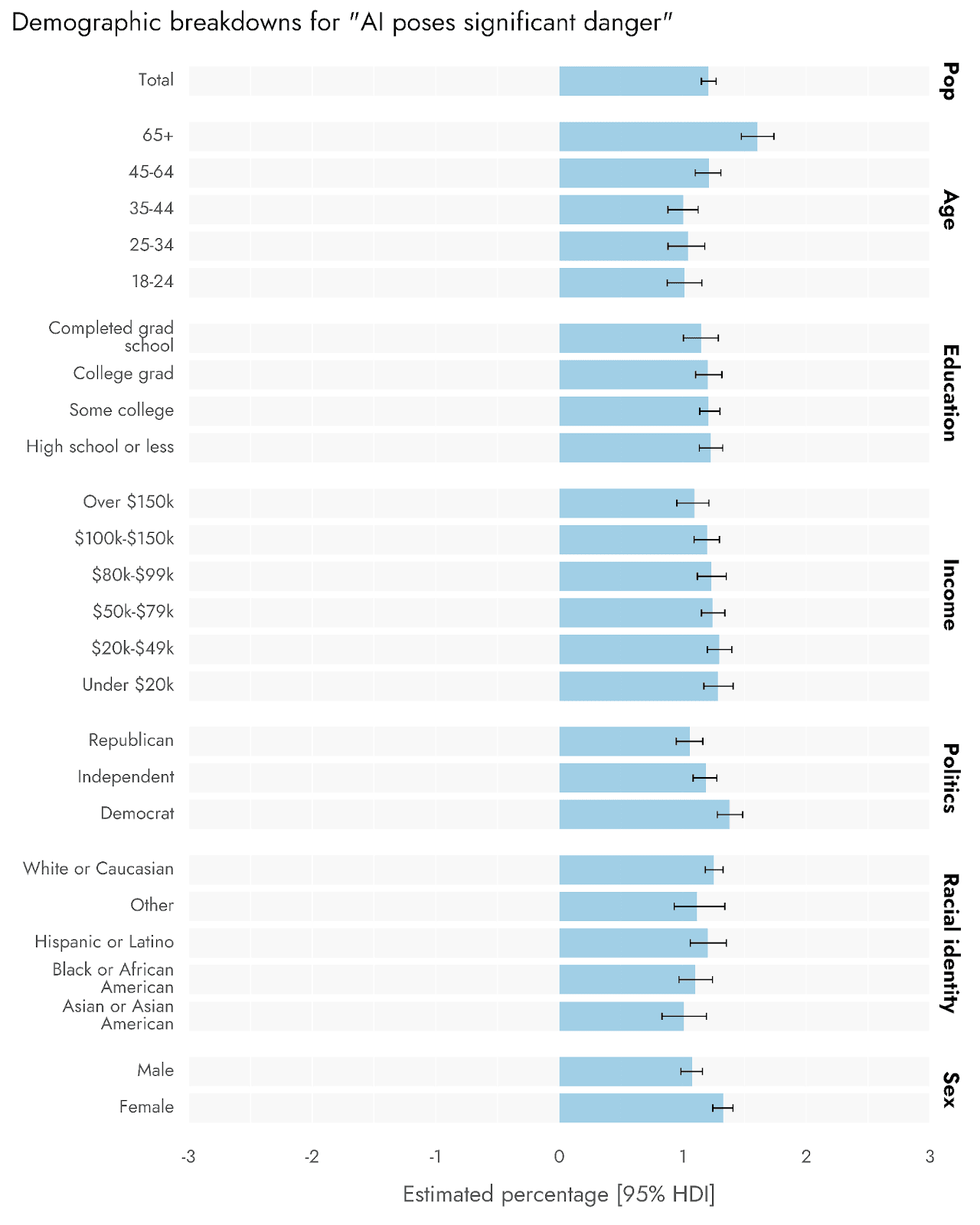

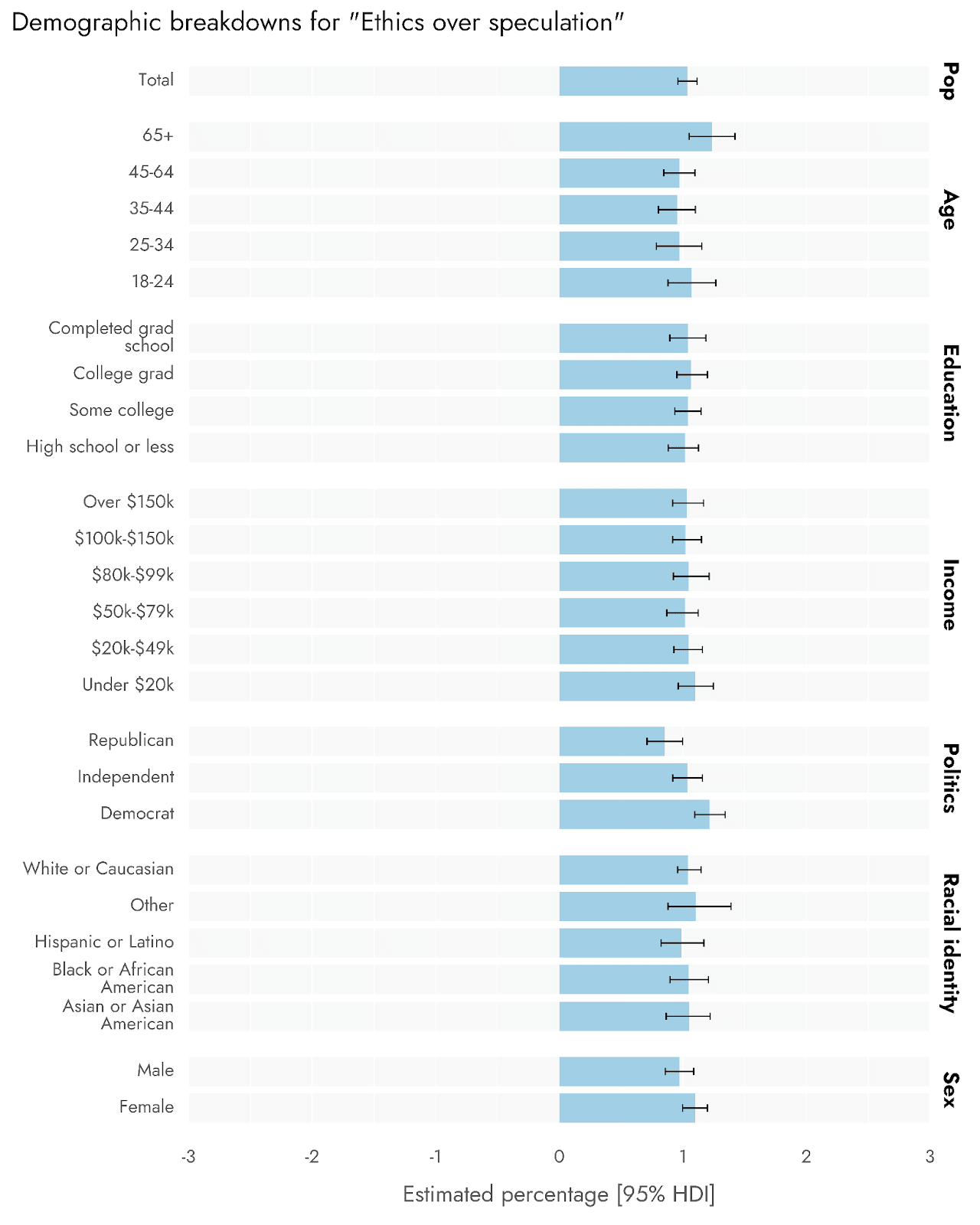

- Half of the survey respondents also reported their attitudes towards several stances on AI. The statements covered severe dangers from AI (“AI poses significant danger”), concerns about issues such as algorithmic biases and privacy violations (“Ethics”), a focus on AI ethics over more speculative harms (“Ethics over speculation”), and the view that AI development should be accelerated to gain benefits instead of focusing on risks (“AI acceleration”).

- The stance receiving the most agreement was “Ethics” (mean of 1.4 on a scale from -3 to +3), followed by “AI poses significant danger” (1.2), “Ethics over speculation” (1.0), and, substantially lower, “AI acceleration” (-0.3).

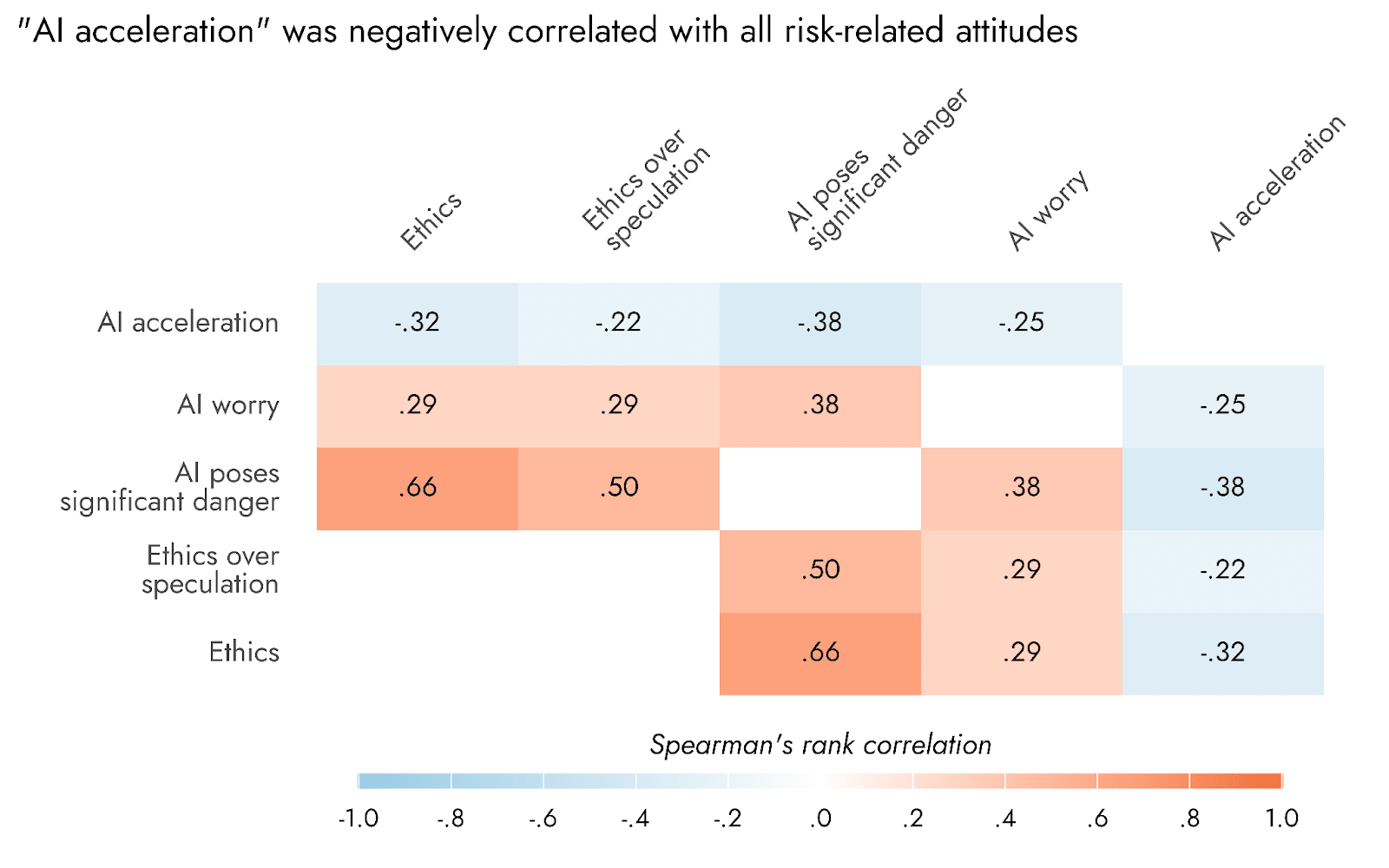

- Responses to the risk-related stances were positively correlated with one another. Agreement with the AI ethics statement was higher when it was not posed in contrast to “speculative” risks (“Ethics” vs. “Ethics over speculation”). Together, these results suggest that members of the public are concerned about both catastrophic risks and more practical, ethical issues, rather than one or the other.

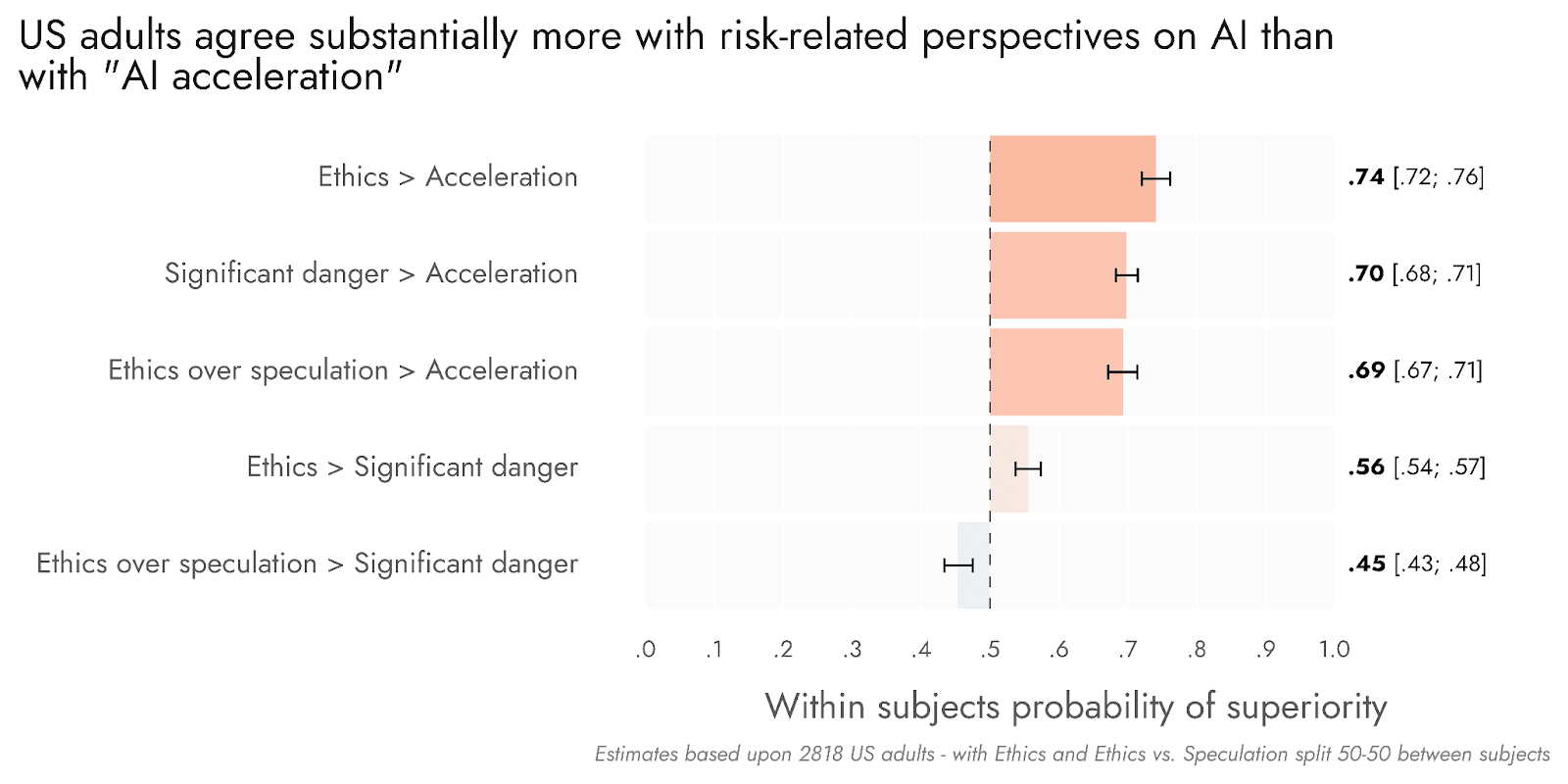

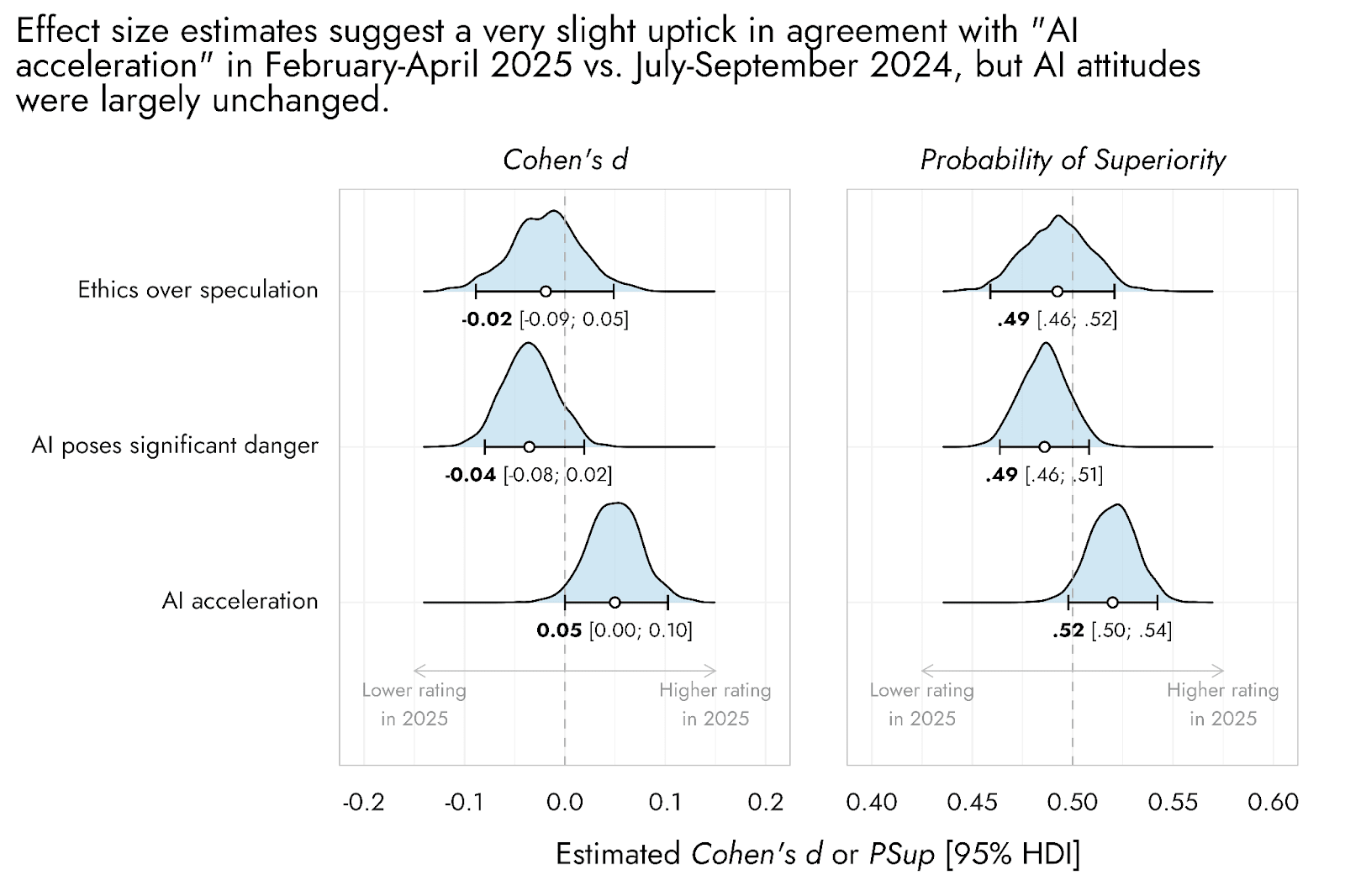

- “AI acceleration” showed a very small increase in agreement vs. Wave 1 of Pulse, but we would still expect over two-thirds of US adults to favor the risk-related statements over “AI acceleration”.

Worry about AI

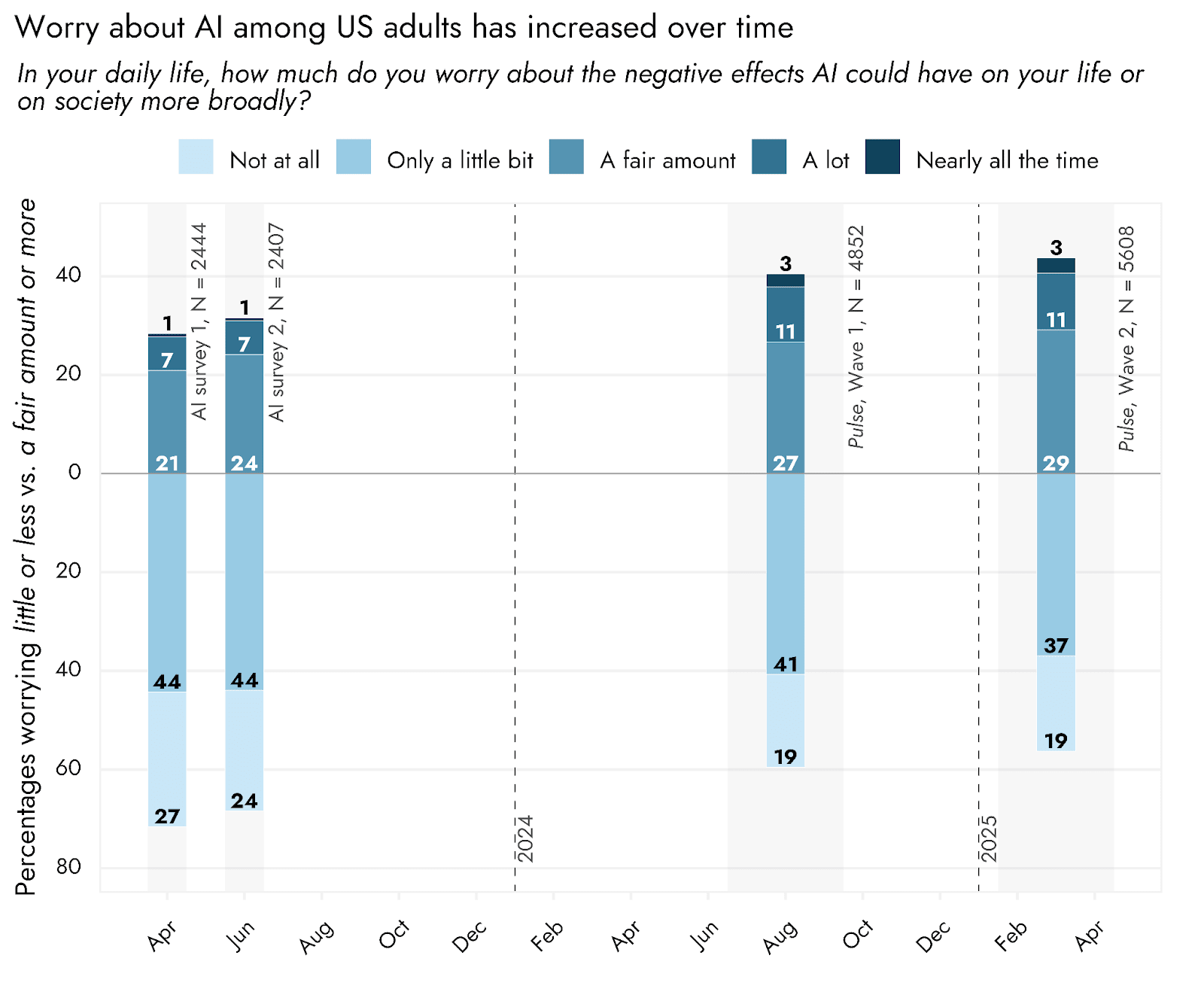

As in Wave 1 of Pulse, respondents were asked to indicate the extent to which they worried about the impact AI might have on their lives and on society more broadly (Figure 1). We supplemented data from Pulse with additional survey results from April[2] and June[3] of 2023, allowing us to trace the trajectory of AI worry over the past two years.

Both waves of Pulse showed a rise in AI worry in 2024 and 2025 relative to 2023. However, we found relatively little difference in worry between the most recent Pulse results (February-April 2025) and those of the previous Pulse (July-September 2024). Compared with the previous Pulse, there appears to have been a slight shift: people were less likely to say they worry “Only a little bit” and more likely to say they worry “A fair amount” (Figure 2).

Figure 1: Worry about AI among US adults has increased over time

Figure 2: The percentage of those worrying “A fair amount” has been steadily increasing, with “Not at all” and “Only a little bit” decreasing.

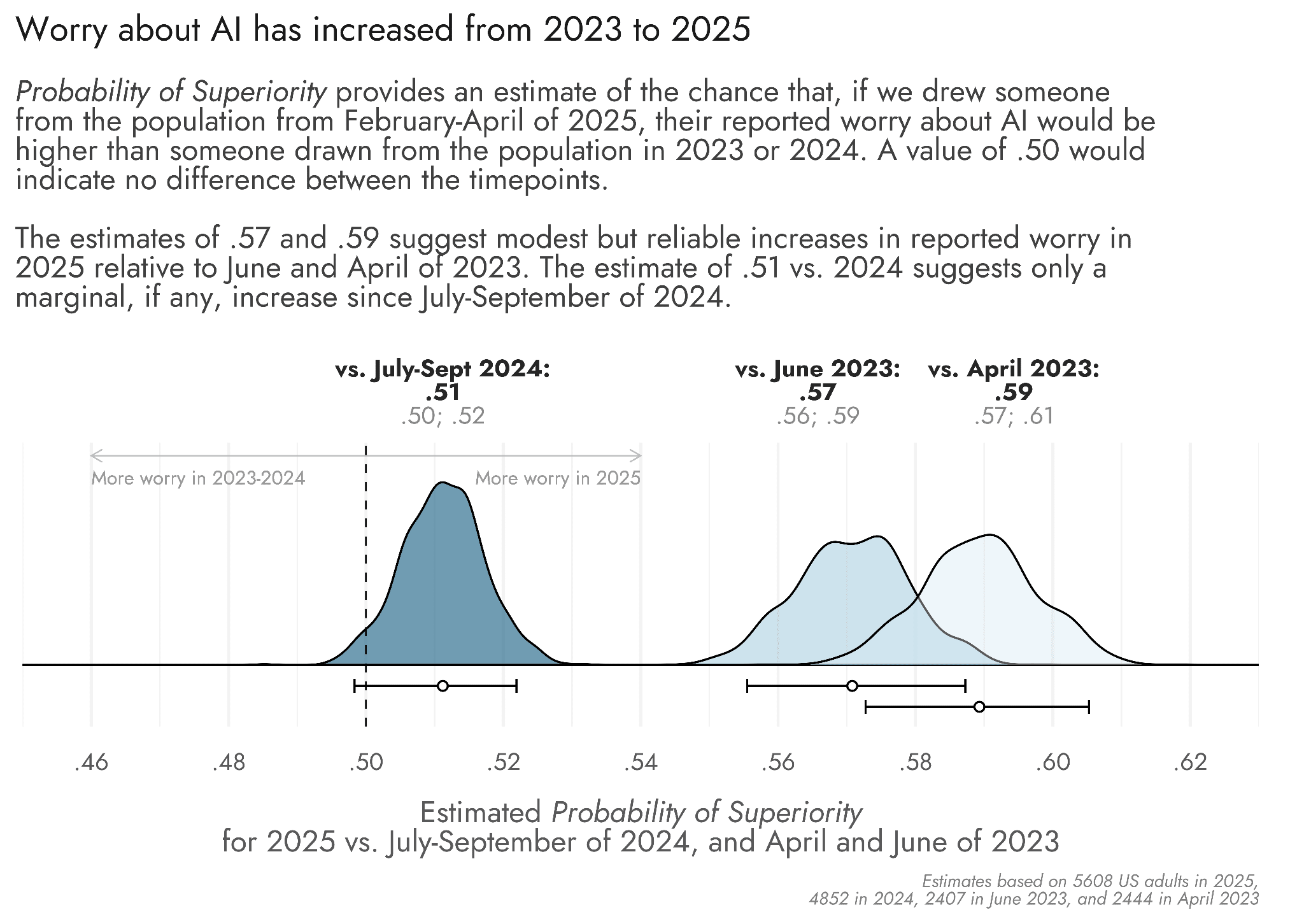

Estimates of Probability of Superiority (PSup) over time support this impression (Figure 3). Comparing Wave 2 of Pulse with estimates from 2023, we saw PSup values of .59 for April and .57 for June. In other words, if one US adult were drawn at random from 2025 and one from 2023, the 2025 adult would be expected to report higher worry roughly 57%-59% of the time, indicative of modest but reliable increases. Comparing Wave 2 of Pulse with Wave 1, the PSup value was .51 [.50-.52], suggesting only a very slight increase since mid-to-late 2024.
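The probability of superiority can be computed directly from two response distributions over the same ordered categories. It is the chance that a randomly drawn response from one distribution is higher than a randomly drawn response from the other. This sketch assumes ties count as half, a common convention that the report does not confirm. The function name and the example distributions are hypothetical. The published PSup values were estimated from RP's poststratified models, not from raw proportions like these.

```python
from typing import Sequence


def prob_superiority(later: Sequence[float], earlier: Sequence[float]) -> float:
    """P(X > Y) + 0.5 * P(X == Y) for independent draws X ~ later, Y ~ earlier.

    Both arguments are probabilities over the same ordered response categories,
    listed from lowest to highest worry (e.g. "Not at all" first).
    """
    if len(later) != len(earlier):
        raise ValueError("distributions must cover the same categories")
    psup = 0.0
    for i, p_x in enumerate(later):
        for j, p_y in enumerate(earlier):
            if i > j:  # the later response is in a higher worry category
                psup += p_x * p_y
            elif i == j:  # tie: counted as half (assumed convention)
                psup += 0.5 * p_x * p_y
    return psup


# Hypothetical four-category distributions, lowest to highest worry.
wave_a = [0.25, 0.25, 0.25, 0.25]
wave_b = [0.10, 0.20, 0.30, 0.40]
print(round(prob_superiority(wave_b, wave_a), 3))
```

Here `wave_b` is shifted towards higher worry, giving a PSup of .625. Identical distributions give exactly .5, which is why a value like .51 indicates very little change between waves.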

Based on these observations, AI worry may be tapering off or stabilizing as AI usage and the existence of powerful large language models (LLMs) become more normalized. However, whether worry is plateauing can be assessed more convincingly once Wave 3 of Pulse is fielded later in 2025. We would also expect levels of worry to be sensitive to publicly legible developments in AI or to a major AI-related event.

Figure 3: Worry about AI has increased from 2023 to 2025

Worry about AI was not evenly distributed across demographic groups in the US population (Figure 4). For example, the proportion worrying at least “A fair amount” tended to be higher among Female than Male adults. It was also higher among those with lower incomes, among Democrats, and among non-White respondents. The higher reported worry among Asian/Asian American respondents compared with White or Caucasian respondents is somewhat surprising. Results below indicate that Asian/Asian American adults were the only demographic group besides 18-24-year-olds with slightly positive views, on average, towards “AI acceleration.”

Figure 4: Demographic breakdowns of the extent to which US adults worry in their daily lives about the impacts of AI

How the US public views different stances on AI

In addition to assessing worry about AI, we presented roughly half of survey respondents (n = 2,818) with a range of perspectives on AI in the form of statements that they could agree or disagree with. As in our previous Pulse survey, we included three statements:

- severe dangers posed by AI (“AI poses significant danger”)
- a focus on AI ethics issues, such as the risk of bias, over more speculative harms (“Ethics over speculation”)
- the view that AI development should be accelerated to gain benefits rather than focusing on risks (“AI acceleration”)

We additionally included a modified AI ethics statement (“Ethics”). It removed the comparison with more speculative harms and simply stated concerns about issues such as algorithmic bias and privacy violations. Half of those shown the AI perspectives saw this simpler “Ethics” version, and half saw “Ethics over speculation.”

As shown in Figure 5, we estimate that the US public would show positive responses, on average, to each of the risk-related items (“Ethics,” “AI poses significant danger,” and “Ethics over speculation”), and slightly negative responses to “AI acceleration.”

As can be seen in Figure 5, and as assessed formally in Figure 6, these responses were essentially the same as in Wave 1 of Pulse, though “AI acceleration” was marginally more positive in Wave 2. Even with this potential increase, all risk-related items substantially outperformed “AI acceleration” (Figure 7). Over two-thirds of US adults would be expected to rate the risk-related items more positively.
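The report does not spell out how the “over two-thirds” comparison was computed. It may, for example, be a probability of superiority between the two items' response distributions. One simple way to operationalize such a comparison is a within-respondent version, applicable only to respondents who rated both statements. It computes the weighted share of respondents who rate one statement above another on the -3 to +3 scale, with ties counted as half. The sketch below assumes that approach. The function name, the optional weights, and the ratings are all hypothetical.

```python
from typing import Optional, Sequence


def share_rating_higher(item_a: Sequence[int], item_b: Sequence[int],
                        weights: Optional[Sequence[float]] = None) -> float:
    """Weighted share of respondents who rate item_a above item_b.

    item_a, item_b: each respondent's agreement with two statements on the
        -3..+3 scale, in the same respondent order.
    weights: optional survey weights (hypothetical); equal weights if omitted.
    Ties are counted as half, an assumed convention.
    """
    if weights is None:
        weights = [1.0] * len(item_a)
    if not len(item_a) == len(item_b) == len(weights):
        raise ValueError("inputs must have one entry per respondent")
    score = 0.0
    for a, b, w in zip(item_a, item_b, weights):
        if a > b:
            score += w
        elif a == b:
            score += 0.5 * w
    return score / sum(weights)


# Hypothetical ratings from five respondents.
danger = [2, 3, 1, 0, 2]
acceleration = [-1, 0, 1, 2, -3]
print(share_rating_higher(danger, acceleration))
```

With these made-up ratings, three respondents prefer the risk statement, one ties, and one prefers acceleration, giving a share of 0.7.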

Figure 5: US adults’ agreement with AI-related perspectives has changed little from July-September 2024 to February-April 2025

Figure 6: Effect size estimates suggest a very slight uptick in agreement with “AI acceleration” in February-April 2025 vs. July-September 2024, but AI attitudes were largely unchanged

Figure 7: US adults agree substantially more with risk-related perspectives on AI than with “AI acceleration”

Furthermore, the risk-related items and AI worry were all positively correlated with one another, whereas “AI acceleration” was negatively correlated with these other items (Figure 8). The positive correlation between “Ethics over speculation” and “AI poses significant danger” may be surprising, given that “Ethics over speculation” appears to downplay more severe risks. However, we suspect these items capture a broad tendency to be concerned about multiple AI risks. This differs from the potentially more siloed attitudes of advocates who focus specifically on existential AI risk or on AI ethics, and who have sometimes sought to disparage or downplay one another's efforts. This possibility is supported by the higher agreement the AI ethics statement received when the comparison with more speculative harms was removed (“Ethics” vs. “Ethics over speculation”). Removing the comparison also produced a higher correlation with “AI poses significant danger.” It appears the US public is concerned about both “threats to humanity” and more ethical issues.
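The report does not state which correlation coefficient underlies Figure 8. For ordered agreement ratings, one simple option is Spearman's rank correlation, sketched below under that assumption. Ratings are converted to ranks, with tied ratings sharing their average rank, and a Pearson correlation is computed on the ranks. The data are hypothetical and unweighted. A survey-weighted or polychoric correlation would be other reasonable choices.

```python
from typing import List, Sequence


def average_ranks(values: Sequence[float]) -> List[float]:
    """Ranks from 1 to n; tied values share the mean of the ranks they span."""
    order = sorted(range(len(values)), key=lambda k: values[k])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        # Extend j over the run of values tied with values[order[i]].
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        mean_rank = (i + j) / 2 + 1  # sorted positions i..j are 0-based
        for k in range(i, j + 1):
            ranks[order[k]] = mean_rank
        i = j + 1
    return ranks


def pearson(x: Sequence[float], y: Sequence[float]) -> float:
    """Pearson correlation of two equal-length sequences."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("need two equal-length sequences with >= 2 entries")
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    vx = sum((a - mx) ** 2 for a in x)
    vy = sum((b - my) ** 2 for b in y)
    if vx == 0 or vy == 0:
        raise ValueError("correlation is undefined for a constant sequence")
    return cov / (vx * vy) ** 0.5


def spearman(x: Sequence[float], y: Sequence[float]) -> float:
    """Spearman's rho: the Pearson correlation of the average ranks."""
    return pearson(average_ranks(x), average_ranks(y))


# Hypothetical -3..+3 ratings from five respondents.
danger = [3, 2, 1, -1, 0]
acceleration = [-2, -1, 0, 2, 1]
print(spearman(danger, acceleration))
```

In this made-up example, the respondents' orderings on the two items are exactly reversed, so rho is -1. Real survey items would show much weaker negative correlations.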

Figure 8: “AI acceleration” was negatively correlated with all risk-related attitudes

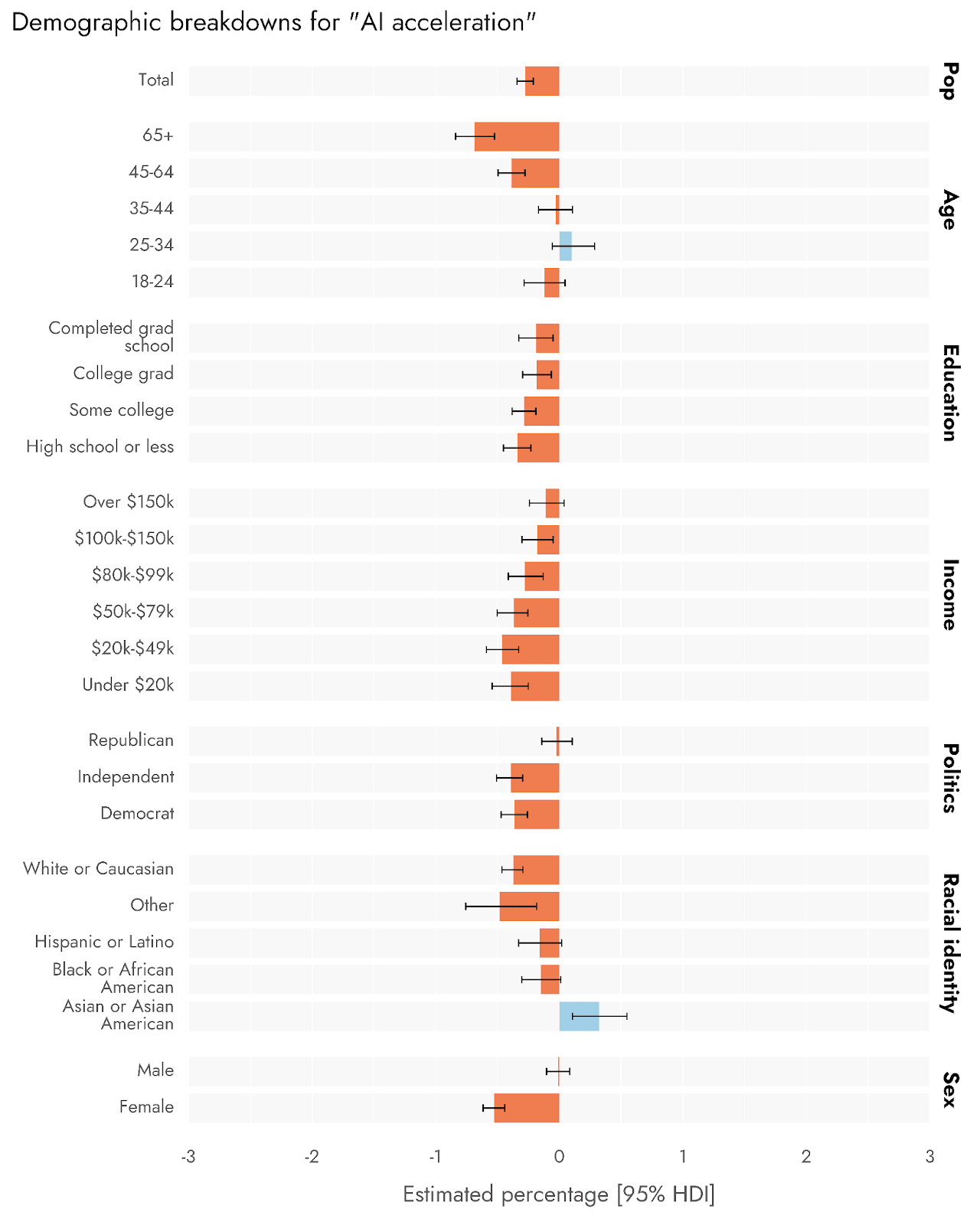

Figures 9 through 12 show demographic breakdowns in responses to the various AI perspectives. Across items, one of the most consistent trends was for older adults, especially those aged 65+, to agree more with risk-related items and to hold negative views of “AI acceleration.” Interestingly, agreement with the risk-related items tended to increase linearly from Republicans to Independents to Democrats. However, Independents and Democrats had similarly negative views of “AI acceleration.” Finally, Female respondents agreed more, on average, with risk-related items than did Male respondents, and held more negative views of “AI acceleration.”

Figure 9: Demographic breakdowns for “AI acceleration”

Figure 10: Demographic breakdowns for “AI poses significant danger”

Figure 11: Demographic breakdowns for “Ethics over speculation”

Rethink Priorities is a think-and-do tank dedicated to informing decisions made by high-impact organizations and funders across various cause areas. We invite you to explore our research database and stay updated on new work by subscribing to our newsletter.

Jamie Elsey conducted analyses and wrote this report, with editing and review from David Moss.

[1] The exact number of respondents used in each analysis can vary, owing to non-usability or non-response for some questions, or splitting of respondents across different experimental conditions.

[2] Elsey, J.W.B., & Moss, D. (2023). US public opinion of AI policy and risk. Rethink Priorities. https://rethinkpriorities.org/research-area/us-public-opinion-of-ai-policy-and-risk/

[3] Elsey, J.W.B., & Moss, D. (2023). US public perception of CAIS statement and the risk of extinction. Rethink Priorities. https://rethinkpriorities.org/research-area/us-public-perception-of-cais-statement-and-the-risk-of-extinction/