Interstellar travel will probably doom the long-term future

By JordanStone @ 2025-06-18T11:34 (+144)

Once we expand to other star systems, we may begin a self-propagating expansion of human civilisation throughout the galaxy. However, there are existential risks potentially capable of destroying a galactic civilisation, like self-replicating machines, strange matter, and vacuum decay. Without an extremely widespread and effective governance system, the eventual creation of a galaxy-ending x-risk seems almost inevitable due to cumulative chances of initiation over time across numerous independent actors. So galactic x-risks may severely limit the total potential value that human civilisation can attain in the long-term future. The requirements for a governance system to prevent galactic x-risks are extremely demanding, and it may need to be in place before interstellar colonisation is initiated.

Introduction

I recently came across a series of posts from nearly a decade ago, starting with a post by George Dvorsky in io9 called “12 Ways Humanity Could Destroy the Entire Solar System”. It’s a fun post discussing stellar engineering disasters, the potential dangers of warp drives and wormholes, and the delicacy of orbital dynamics.

Anders Sandberg responded to the post on his blog and assessed whether these solar system disasters represented a potential Great Filter to explain the Fermi Paradox, which they did not[1]. However, x-risks to solar system-wide civilisations were certainly possible.

Charlie Stross then made a post where he suggested that some of these x-risks could destroy a galactic civilisation too, most notably griefers (von Neumann probes). The fact that it only takes one colony among many to create griefers means that the dispersion and huge population of galactic civilisations[2] may actually be a disadvantage in x-risk mitigation.

In addition to getting through this current period of high x-risk, we should aim to create a civilisation that is able to withstand x-risks for as long as possible so that as much of the universe's potential value[3] as possible can be attained. X-risks that would destroy a spacefaring civilisation are important considerations for forward-thinking planning related to long-term resilience. Our current activities, like developing AGI and expanding into space, may have a large foundational impact on the long-term trajectory of human civilisation.

So I've investigated x-risks with the capacity to destroy a galactic civilisation[4] ("galactic x-risks"), defined here as an event capable of destroying the long-term potential of an arbitrarily large spacefaring civilisation[5]. A galactic x-risk would be a huge moral catastrophe. First, that's a lot of death. Second, once a civilisation has overcome the barriers required to become a galactic civilisation, their long-term potential in expectation is probably much higher than a civilisation on one planet (i.e., a pre-Precipice or pre-Great Filter civilisation). So, the loss of future value may be much greater[6].

Existential Risks to a Galactic Civilisation

I'll start by ruling out the risks that I believe are limited in scope to a one planet civilisation, and then to a small spacefaring civilisation. Don't worry too much about the categories/subheadings here. These threats could be in multiple categories depending on their severity, and, if artificial, their design and deployment strategy[7]. The threats in the "Galactic Existential Risks" section will be the most relevant to the long-term resilience of a galactic civilisation.

I have not classified existential risks into artificial and natural because we’re dealing with arbitrarily advanced technology. So any natural event that we know can occur, could conceivably be induced by arbitrarily advanced technology, or at least the probability of its occurrence could be increased artificially.

Threats Limited to a One Planet Civilisation

The threats we will escape by becoming spacefaring are those that drastically change a planet’s atmospheric conditions without spreading to space. Included in this group are volcanic eruptions[8], geoengineering disasters[9], atmospheric positive feedback loops[10], the release of highly toxic molecules/chemical warfare[11], nuclear war[12], and an asteroid or comet impact[13]. By spreading outside of one’s own atmosphere, other settlements can survive and hopefully safeguard the long-term potential of human civilisation[14].

Threats to a Small Spacefaring Civilisation

Some existential risks can propagate through space and potentially destroy civilisations across multiple star systems. In contrast, threats that are not self-propagating or are limited in range can eventually be overcome by spreading throughout the galaxy. These threats, therefore, only pose existential risks during a relatively brief phase in the expansion of human civilisation in space.

Most threats in this transitionary phase come from stars. Firstly, a civilisation's host star may be the source of x-risks like superflares[15], stellar mass loss events[16], increasing stellar luminosity or volume, or stellar engineering disasters[17]. Alternatively, localised existential risks could come from natural energetic events of other stars[18], like supernova explosions, magnetar flares, pulsar beams, kilonova winds[19], and M-dwarf megaflares. These energetic stellar events would affect multiple star systems, with a range of impacts depending on the type of event and its distance.

There are also all sorts of cosmic phenomena that could be encountered by a civilisation occupying one or multiple solar systems that would likely destroy them. These include (in order of increasing speculativeness) interstellar clouds[20], supernova remnants[21], primordial black holes[22], cosmic strings[23], domain walls[24], and wormholes[25].

Alternatively, any artificial or natural[26] alterations to the orbits of planets could conceivably destroy a spacefaring civilisation around one star by altering UV radiation influx or inadvertently causing planet collisions[27].

Giant artificial structures in space like Dyson spheres, orbital rings, sunshades, Shkadov thrusters, or space particle accelerators could conceivably end a spacefaring civilisation too. Their immense energy demands, gravitational influence, or stored kinetic potential make them prone to catastrophic failure modes, like stellar destabilisation, orbital cascade, or directed energy misfires[28]. Additionally, interstellar weapons like high powered lasers and particle beams may be used to annihilate a civilisation occupying a solar system from very far away[29].

The most devastating localised existential risks are galactic core explosions and quasars[30]. A quasar could generate a galactic superwind from the center of a galaxy, stripping away atmospheres as it expands, sterilising large regions of the inner galaxy.

Galactic Existential Risks

This is a list of threats that could conceivably destroy the long-term potential of a whole galactic civilisation, or plausibly even a civilisation occupying multiple galaxies. Preventing any of these from occurring[31] is essential to the long-term resilience of a galactic civilisation.

Self-replicating Machines

If you’re worried about alien invasions, then von Neumann probes are a great bet. Von Neumann probes are essentially self-replicating machines that can travel through space. If they're advanced enough, they can self-replicate indefinitely, travel at near light speed, detect life throughout the galaxy and systematically eliminate it. It has been suggested that von Neumann probes could destroy a galactic civilisation[32]:

all it takes is one civilization of alien ass-hat griefers who send out just one von Neumann Probe programmed to replicate, build N-D lasers, and zap any planet showing signs of technological civilization, and the result is a galaxy sterile of interplanetary civilizations until the end of the stelliferous era (at which point, stars able to power an N-D laser will presumably become rare).

I think the key here is self-replication. If technologically feasible, self-replicating interstellar lasers are much worse than interstellar lasers. Presumably, to annihilate a galactic civilisation, the creator of the von Neumann probe would also be eliminating themself. But I think that releasing von Neumann probes that kill most civilisations and prevent others from emerging is sufficient to destroy most of the long-term potential of a galactic civilisation[33]. In the words of Charlie Stross, “it only has to happen once, and it f***s everybody".

I'm also including nanotechnology in the self-replicating machines section. There are definitely motivations to decrease the size of a von Neumann probe. If the probes are adaptive, then nanotechnology might be an effective strategy at times. So the same overview above applies to nanotechnology.

Biological machines (i.e., engineered pandemics) are also self-replicating machines, but are probably not an effective strategy for deployment in space. One could argue that weaponised bioengineered pandemics could be a threat to a spacefaring civilisation if they were adaptive[34], engineered for maximum spread and lethality, and deployed artificially across the galaxy[35].

Strange matter

Strange matter has been theorised to exist as a more stable form of matter, and it may be able to turn normal matter into strange matter on contact. Strange matter is hypothesised to form when atoms are compressed beyond a critical density, dissociating the protons and neutrons into quarks, creating quark matter and strange matter. These conditions might be reached during a collision between two neutron stars, and strangelets (small droplets of strange matter) may be released. Alternatively, extremely advanced technology may be used to create strange matter in the long-term future.

If a strangelet hit the Earth, we'd all be converted into strange matter, and strangelets would be created and dispersed. Eventually this process of conversion and spread could destroy a galactic civilisation.

Strange matter is probably a solvable risk for an extremely advanced civilisation. With huge amounts of energy, they may be able to create gravitational waves to act as a repulsor beam. But still, a huge amount of long-term value in the universe may be lost if the strange matter is not contained rapidly.

Vacuum decay

Vacuum decay is a hypothetical scenario in which a more stable vacuum state exists than our current "false vacuum". Calculations indicate that the probability of spontaneous vacuum decay is exceedingly low on cosmological timescales. However, local decay might occur if enough energy is concentrated in a small volume, or the fundamental fields are otherwise manipulated into a configuration that relaxes to the true vacuum rather than to our metastable vacuum. To quote Nick Bostrom[36]:

This would result in an expanding bubble of total destruction that would sweep through the galaxy and beyond at the speed of light, tearing all matter apart as it proceeds.

Developments in the standard model of particle physics (especially up to high energy scales) should eventually tell us whether or not vacuum decay is possible. If it is, then we might not have enough time to create a galactic civilisation anyway.

Subatomic Particle Decay

Very speculative grand unified theories in the 1970s (e.g. SU(5)[37] and SO(10)) implied that subatomic particles can decay[38], meaning all matter is ultimately unstable. For example, the proton may decay via an interaction with a magnetic monopole[39] or via pathways involving virtual black holes and Hawking radiation[40]. Additionally, neutrons may decay[41] via pathways like neutron-antineutron oscillations or by leaking into other dimensions[42]. However, these processes mainly act locally, so they are unlikely to be galaxy-ending scenarios unless they were self-propagating reactions[43] or another fundamental process altered physics (either artificially or naturally over time) to allow the decay to occur across the universe[44].

Time travel

In general relativity, if it's possible to create spacetime-warping structures like cosmic strings and wormholes, then travelling back in time cannot be ruled out. There are some rebuttals, like the chronology protection conjecture, which draws on quantum physics. But, generally, it seems impossible to know whether time travel is actually possible until a theory of quantum gravity that unifies quantum mechanics and general relativity has been developed[45]. If time travel is possible, then (depending on your personal favourite theory of time travel) a galactic civilisation might be destroyed by itself before it is created.

Fundamental Physics Alterations

There are so many different fundamental constants and properties that are highly precise and necessary to the existence of life. With extremely developed physics and arbitrarily advanced technology, if any of them are possible to alter at a large scale, then life in the universe is at risk. This seems extremely unlikely. Though, it has been suggested that fundamental constants may drift over time in accordance with the age of the universe. The fundamental constants include the gravitational constant[46], strong coupling constant[47], Planck’s constant[48], the cosmological constant[49], and the fine structure constant[50]. Other things that would be very bad if they broke down include color confinement[51], quark-lepton unification[52], and the speed of light.

Interactions with Other Universes

If there are other universes or our universe is a sub-universe[53], then everything could end quite abruptly.

According to brane theory, other universes exist in non-visible dimensions, but are able to collide with our universe (brane collision)[54]. One of these collisions might have ignited the Big Bang. If a brane collided with our universe again, it could initiate another Big Bang-like event and reset our universe.

Based on the eternal inflation theory, our universe is one of many bubbles that form through quantum tunnelling within an inflating space. Bubbles expand at near-light speed and may collide with each other, causing observable effects or even catastrophic consequences. A collision with another bubble could even initiate vacuum decay or create domain walls.

Alternatively, our universe could actually exist in the black hole of another universe. Black holes contain spacetime singularities, which break down everything we know about space and time, so space and time may switch roles. From the outside, a black hole is almost infinitely large in time (i.e., it may continue existing almost indefinitely), but from the inside it is infinitely large in space. That means a black hole could contain a whole universe... our universe. This might not actually change much about our understanding of the universe. However, the collapse of a black hole universe into a singularity would probably end our universe in a similar way to the Big Crunch[55]. Alternatively, our host black hole might just evaporate slowly by releasing Hawking radiation. This would normally take around 10^67 years, far longer than the current age of our universe (1.38 × 10^10 years).
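The evaporation timescale quoted above can be sanity-checked with the standard Hawking evaporation formula for a non-rotating, uncharged black hole, t = 5120 π G² M³ / (ħ c⁴). This is only a rough sketch: the choice of a one-solar-mass black hole is my assumption (the post does not say what mass the figure refers to), and the function name is made up for illustration. Under that assumption the result comes out on the order of 10^67 years.

```python
import math

# Physical constants in SI units (CODATA values, rounded).
G = 6.67430e-11           # gravitational constant, m^3 kg^-1 s^-2
HBAR = 1.054571817e-34    # reduced Planck constant, J s
C = 2.99792458e8          # speed of light, m/s
SOLAR_MASS_KG = 1.98847e30
SECONDS_PER_YEAR = 365.25 * 24 * 3600


def evaporation_time_years(mass_kg: float) -> float:
    """Hawking evaporation time of a Schwarzschild black hole, in years.

    Uses t = 5120 * pi * G^2 * M^3 / (hbar * c^4) and ignores any infalling
    matter or radiation, so it is a lower-bound style estimate.
    """
    if mass_kg <= 0:
        raise ValueError("mass must be positive")
    seconds = 5120 * math.pi * G**2 * mass_kg**3 / (HBAR * C**4)
    return seconds / SECONDS_PER_YEAR


if __name__ == "__main__":
    # Assumption: one solar mass, chosen only to compare with the quoted figure.
    t = evaporation_time_years(SOLAR_MASS_KG)
    print(f"Solar-mass black hole: about {t:.1e} years")
    print(f"Ratio to the age of the universe: about {t / 1.38e10:.1e}")
```

Because the time scales with the cube of the mass, a black hole massive enough to contain a universe would presumably take far longer still, which only strengthens the point that slow evaporation is not a near-term concern.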

Finally, (I say in a slow rhythmic voice) if the universe is a simulation and the simulation was turned off, a civilisation at any scale in our universe would be destroyed.

Societal Collapse or Loss of Value

Galactic civilisations may fizzle out due to societal collapse or loss of their moral value. Societal collapse may occur via predator-prey dynamics[56], galactic tyranny, weakness by homogeneity[57], or an interstellar coordination breakdown. A loss of moral value might come about through sentient beings being outcompeted by non-sentient posthumans or artificial intelligence, the creation of s-risks, or a gradual drift of values.

Artificial Superintelligence

Artificial superintelligence with access to resources greater than those of a star system may use all of the above to kill a galactic civilisation.

I think the creation of superintelligent AI may always be an x-risk, even if alignment has been solved previously somewhere else in the universe.

While war has typically favoured defence, destructive capabilities have always been more powerful than defensive or constructive capabilities. While countries have been created over thousands of years, any country on Earth could be destroyed within the next hour by nuclear weapons. So I would assume that, at the limits of technology, destructive capabilities will eventually be so great as to defeat any defences[58], vacuum decay being the prime example.

So even if an aligned superintelligence exists in a galactic civilisation, assuming new civilisations are able to emerge independently of it, the creation of an evil superintelligence somewhere else is probably still an x-risk, as its destructive nature would make it innately more powerful than the benevolent AI. Especially if the evil AI has no interest in self-preservation (e.g., it was created to eliminate astronomical suffering), it could initiate any number of x-risks that there is no defence against, like vacuum decay, but also possibly other forms of interstellar weapons combining von Neumann probes, interstellar lasers and particle beams, strange matter, or likely many more things that I couldn't even conceive of.

One potential solution to prevent an evil superintelligence from emerging is to have an aligned superintelligence that is able to observe all activities across a galaxy (likely requiring independently acting but coordinated superintelligences to mitigate communication delays). This way, the emergence of a new superintelligence could be prevented, along with all the other galactic x-risks on the list. More on this in the last section as there are obvious downsides to address.

Conflict with alien intelligence

There are countless ways an alien civilisation with arbitrarily advanced technology might choose to eliminate a galactic civilisation[59]. Just pick any item on the galactic existential risks list and insert before it "aliens decide to use...", or pick anything in the previous section and insert "aliens decide to use ____ millions of times".

I think if aliens exist, they are more dangerous than superintelligence, firstly, because aliens could create superintelligence. Secondly, we may have no control over their creation or what they might do. They could just initiate vacuum decay right now and we wouldn't even see it coming as it approaches at light speed. More on aliens in the very uplifting section entitled: "If aliens exist, there is no long-term future".

Unknowns

Assuming that humanity right now is nowhere near the limits of technological and scientific knowledge, a post-ASI civilisation could expand the number of galactic x-risks drastically. If you asked a 21st-century PhD student (me) and a superintelligent AI to list all possible galaxy-ending existential risks, what percentage of the AI's total list would the PhD student be able to name? Asking us to list galaxy-ending x-risks today might be like asking an ancient Roman to predict how artificial intelligence could collapse 21st-century civilisation - the concepts simply don’t exist yet. A Roman wouldn't get anywhere near 50%, so I wouldn't be so bold as to claim I could name more than 50% of the galactic x-risks.

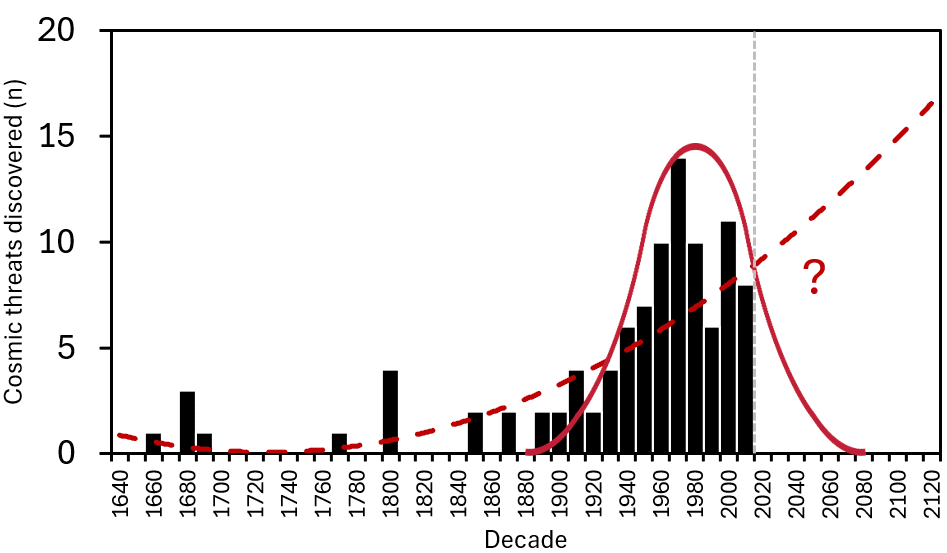

Figuring out how many unknown unkowns there are is really hard. The sample size of galactic x-risks isn't really big enough to say anything confidently about a rate of discovery, especially since I don't know which are actually real. So I've listed 100 cosmic threats[60] (anything arising from space that could destroy Earth as a proxy for galactic existential risks/ dangerous physics discoveries), summed up how many were discovered in each decade, and plotted that on a bar graph[61]:

Aside from this just being super interesting, there are signs we may be reaching diminishing returns - it looks like we might have passed the peak of a bell curve[62]. However, unexpected discovery classes remain possible, and the discovery rate may go up rapidly if there is an intelligence explosion. How high is pure speculation, I think. There is so much that we know we don't know about the universe, so there must be so much that we don't know we don't know. But playing it safe, I'd assume that I've listed less than 50% of the actual number of galactic x-risks, and we should probably act as if we're certain that there are many more galactic x-risks.

What is the probability that galactic x-risks I listed are actually possible?

For an x-risk on the list to actually be a galactic x-risk, it must be real and capable of destroying a galactic civilisation. Here are my best guesses for each of the galactic x-risks[63]. For each, I have given my percentage probability of the threat actually being a thing that could happen in the long-term future (i.e., the laws of physics permit its existence) and of the threat being capable of ending a galactic civilisation if it was initiated. Threats resolving 100% on either get a tick.

| Galactic x-risk | Is it possible? | Would it end a galactic civilisation? |
| --- | --- | --- |
| Self-replicating machines | 100% ✅ | 75% ❌ |
| Strange matter | 20%[64] ❌ | 80% ❌ |
| Vacuum decay | 50%[65] ❌ | 100% ✅ |
| Subatomic particle decay | 10%[64] ❌ | 100% ✅ |
| Time travel | 10%[64] ❌ | 50% ❌ |
| Fundamental Physics Alterations | 10%[64] ❌ | 100% ✅ |
| Interactions with other universes | 10%[64] ❌ | 100% ✅ |
| Societal collapse or loss of value | 10% ❌ | 100% ✅ |
| Artificial superintelligence | 100% ✅ | 80% ❌ |
| Conflict with alien intelligence | 75% ❌ | 90% ❌ |

Reassuringly, none of these have two ticks in my estimation. However, combined, I think this list represents a threat that is extremely likely to be real and capable of ending a galactic civilisation.
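To make the "combined" claim concrete, here is a rough sketch that multiplies the two estimates for each row and then asks how likely it is that at least one threat is both real and galaxy-ending. It leans on a strong assumption that is mine, not the post's: that the rows are statistically independent of each other. They almost certainly are not (for example, several depend on the same unknown physics), so treat the output as an illustration of the arithmetic rather than a forecast. Under that assumption the combined figure comes out at roughly 0.996.

```python
import math

# (P(it is possible), P(it would end a galactic civilisation | initiated)),
# copied from the table of best guesses above.
ESTIMATES = {
    "Self-replicating machines": (1.00, 0.75),
    "Strange matter": (0.20, 0.80),
    "Vacuum decay": (0.50, 1.00),
    "Subatomic particle decay": (0.10, 1.00),
    "Time travel": (0.10, 0.50),
    "Fundamental physics alterations": (0.10, 1.00),
    "Interactions with other universes": (0.10, 1.00),
    "Societal collapse or loss of value": (0.10, 1.00),
    "Artificial superintelligence": (1.00, 0.80),
    "Conflict with alien intelligence": (0.75, 0.90),
}


def joint_probability(p_possible: float, p_ends_civ: float) -> float:
    """Probability that a threat is both possible and galaxy-ending."""
    return p_possible * p_ends_civ


def at_least_one(estimates: dict) -> float:
    """P(at least one threat is real and galaxy-ending).

    ASSUMPTION: rows are treated as independent, which is a simplification.
    """
    p_none = math.prod(1.0 - joint_probability(a, b) for a, b in estimates.values())
    return 1.0 - p_none


if __name__ == "__main__":
    for name, (a, b) in ESTIMATES.items():
        print(f"{name:36s} {joint_probability(a, b):.3f}")
    print(f"At least one (independence assumed): {at_least_one(ESTIMATES):.4f}")
```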

What is the probability that an x-risk will occur?

What are the factors?

There are multiple factors that simultaneously increase and decrease x-risk as a civilisation expands in space[66]:

- Dispersion: As a colony expands into space, it becomes more dispersed, which reduces the probability that a single x-risk will destroy everyone. However, dispersion across interstellar space makes effective governance increasingly challenging due to the huge communication time lags[67].

- Population size: Similar to dispersion, the more sentient beings that exist, the higher the probability that some will survive. But a larger population also raises the probability that one of those individuals or civilisations will cause an x-risk - it only takes one to initiate a galactic x-risk.

- Resource availability: As we expand into space, we are able to take advantage of more and more energy to simultaneously create much greater weapons and defences against x-risks.

Cumulative Chances

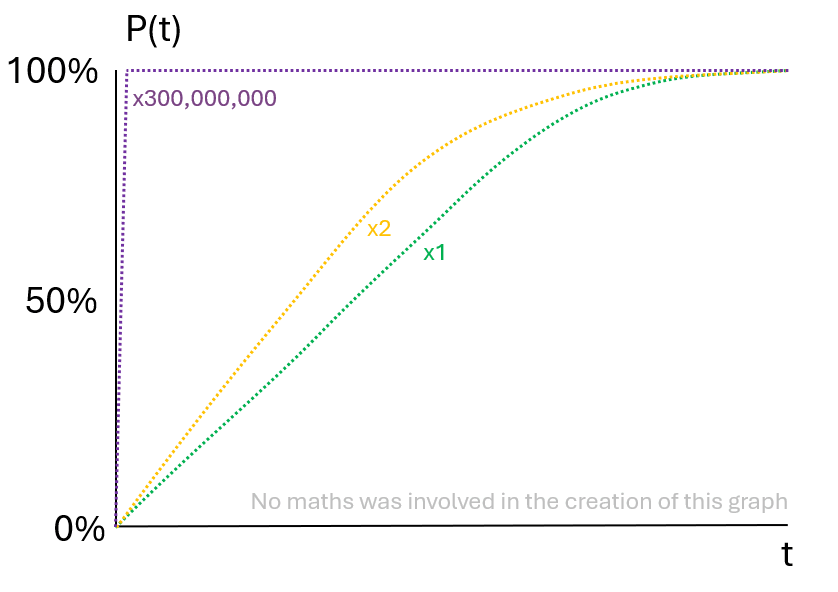

The initiation of a galactic x-risk could be motivated by a desire to end ongoing astronomical suffering across a galaxy, which I think is the most likely scenario. But generally, I think it doesn't really matter what motivations there are, because even very small probabilities of an event occurring can become extremely large given enough time. Assuming that galactic x-risks are possible (i.e., they exist and can destroy a galactic civilisation), cumulative chances over time and space make the probability of a galactic x-risk occurring nearly 100%.

If an event has an n > 0 probability of occurring over a period of time P(t), then the probability of that event having occurred over increasingly long time periods will eventually approach 100% (the green line in the graph). Additionally, the probability of an event occurring increases proportionally to the number of actors who are capable of initiating that event (as indicated by the other two lines in the graph). This graph is adapted from a talk that Toby Ord gave at EAG London 2025 on forecasting over long time periods:

So, for a galactic civilisation[68], even if the probability of a star system inducing a galactic x-risk is very low, the probability that a galactic x-risk will eventually be triggered is effectively 100%. This poses a potentially enormous threat to the total value that human civilisation could gain from the universe.
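The cumulative-chances argument can be sketched in a few lines. The function below computes the probability that at least one of N actors triggers an event at least once over T periods, given a per-actor, per-period probability p, as 1 - (1 - p)^(N·T). The independence of actors and periods, the function name, and all the example numbers are my assumptions for illustration; none of them are estimates from the post or from Toby Ord's talk.

```python
import math


def cumulative_probability(p: float, n_actors: int, n_periods: int) -> float:
    """P(event happens at least once) = 1 - (1 - p)^(n_actors * n_periods).

    ASSUMPTION: every actor in every period is an independent trial with the
    same probability p. Uses log1p/expm1 so that tiny p values keep precision.
    """
    if not 0.0 <= p <= 1.0:
        raise ValueError("p must be between 0 and 1")
    if n_actors < 0 or n_periods < 0:
        raise ValueError("counts must be non-negative")
    trials = n_actors * n_periods
    if trials == 0:
        return 0.0
    if p == 1.0:
        return 1.0
    return -math.expm1(trials * math.log1p(-p))


if __name__ == "__main__":
    # Hypothetical inputs: a one-in-a-billion chance per star system per century.
    p = 1e-9
    for n_actors in (1, 10**6, 10**8):
        for n_centuries in (10**3, 10**6):
            prob = cumulative_probability(p, n_actors, n_centuries)
            print(f"actors={n_actors:>11,} centuries={n_centuries:>9,} -> {prob:.6f}")
```

With a single actor the probability stays tiny over these horizons, but multiplying the number of independent actors pushes it towards 1, which is the shape of the argument in this section.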

If aliens exist, there is no long-term future

I hinted at this in the 'conflict with alien intelligence' section. I have suggested that galactic x-risks are real and could destroy a spacefaring civilisation of arbitrarily large size. I then argued that the probability of one of those risks occurring is extremely high. So if aliens exist at the same time as us (especially under a grabby aliens scenario), then the probability that they will initiate an x-risk that would affect us is also very high, whether intentionally or not. There might already be vacuum decay bubbles headed our way, so human civilisation would end even if we did everything right. In addition to concerns I'm raising about the resilience of our future galactic civilisation, I think aliens have big implications for discussions around big picture cause prioritisation[69].

If alien-induced galactic x-risks have a non-zero probability of occurring, it's plausible that interstellar civilisations facing similar existential risks might develop coordination mechanisms or mutual deterrence strategies over time. And we shouldn’t rule out the possibility that sufficiently advanced alien civilisations could be benevolent or even value-aligned in ways that reduce risk rather than exacerbate it. But the risk is very high if they will emerge and spread independently of us - the cumulative probabilities graph above is multiplied and out of our control.

The Way Forward

From here on I will assume we are the only intelligent life in the universe at the moment. What's our best path?

The governance systems of a galactic civilisation would have to be amazing to prevent some of these galactic x-risks. This problem might not be solved or be given sufficient attention within the next few decades. However, once an interstellar mission is sent (Metaculus predicts 2116 (25%), 2248 (median), >2500 (75%)[70]), a self-perpetuating expansion of humanity throughout the cosmos is plausibly initiated. Taking time for a long reflection before initiating such an expansion seems important for increasing the chances of exporting a viable galactic governance system - one capable of sustaining a flourishing civilisation that can endure and spread across the galaxy for billions of years.

One solution is to abandon galactic colonisation and only expand in the digital space, using the real world exclusively for gathering resources[71]. But if we choose galactic colonisation, then a governance system would need to meet the following requirements in order to prevent galactic x-risks:

- The governance system should be able to spread ahead of (or at least with) human civilisation throughout the affectable universe.

- The governance system should be impossible to overthrow, manipulate, sidestep, or avoid.

- All potential routes to the creation of a galactic x-risk or astronomical suffering should be known by the governance system.

- Any activities pertaining to potential routes to galactic x-risks or astronomical suffering should be observable by the governance system.

- The governance system should hold or have access to the powers to prevent any actor from initiating a galactic x-risk or astronomical suffering.

- The governance system should be able to identify emerging alien civilisations and integrate itself into them or collaborate with them to continue meeting the above requirements.

It's worth noting that the third to fifth requirements basically describe God - all-knowing and all-powerful. So it might be necessary to create a God-like being, or at least a superintelligence, prior to interstellar colonisation. The amount of foresight required to meet these conditions is inconceivable without a superintelligent system that is able to adapt to emerging hazards (or fundamentally unknowable future scenarios like other universes interacting with ours). It seems impossibly hard to predict what any one of hundreds of millions of star systems developing across a galaxy for billions of years might do. The good news is that we are in a very powerful position right now, especially if we are alone in the universe. We could prevent any star system in the future from gaining more power than their governance system. This advantage is lost if humanity spreads to other star systems soon and allows them to culturally drift or remove themselves from a centralised governance system.

Another point to mention from the requirements is that I've included the prevention of astronomical suffering. Inadvertently locking human civilisation in a state of astronomical suffering with no way out is literally the worst possible scenario. There are situations where the initiation of a galactic x-risk may be morally preferable. So, to avoid galactic x-risks, astronomical suffering should necessarily be prevented. There may even be an option to include a back door to allow galactic x-risks if the governance system fails to prevent astronomical suffering.

This 'governance system' I'm describing has very authoritarian vibes. Of course, pairing this with a long reflection may allow us to solve issues around freedom and governance associated with an AI overlord, or find alternative solutions. Or maybe we could become so wise that the probability of us or any of our descendants initiating a galactic x-risk is 0%.

One could argue that superintelligent AI will solve these problems, so we don't need to bother now - it would be best to focus on AI alignment. However, interstellar missions may come before aligned superintelligence. In fact, humanity would likely be able to launch an operation to colonise the entire reachable universe quite easily if we wanted to. So an interstellar mission may have to be actively prevented until we can be certain that its accompanying governance structure would either prevent further cosmic propagation or future galactic x-risks.

However, the point of no return might not be "an interstellar mission is launched". If we launch an interstellar mission in the next couple of decades, it would likely not reach anywhere near light speed and may take decades to reach its destination. So future missions with more advanced technology and faster spacecraft could likely catch up to it, even if they leave many years later. Additionally, the first interstellar settlers probably wouldn't be thinking about moving on to the next star system as soon as possible. Or, at least they probably wouldn't spread as quickly as they possibly could. So, a mission to catch up with them post-transformative AI and invite them to participate in our "governance system" would likely be successful[72]. This pathway has bad vibes though, and other star systems could be defence-dominant in the short-term[73]. In any case, I think a lot more work on investigating current plans and long-term scenarios for interstellar travel now is well justified[74].
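The catch-up claim is simple kinematics, sketched below. If a first ship cruises at speed v1 and a faster ship leaves `delay` years later at speed v2, the second ship draws level when v1·t = v2·(t - delay), i.e. t = v2·delay / (v2 - v1) years after the first launch. The speeds and delay in the example are hypothetical numbers of mine, not figures from any mission plan, and the sketch ignores acceleration and deceleration phases. Times are measured in the Earth frame.

```python
from typing import Optional, Tuple


def catch_up(first_speed_c: float, second_speed_c: float,
             delay_years: float) -> Optional[Tuple[float, float]]:
    """Return (years after first launch, distance in light years) at catch-up.

    Speeds are constant cruise speeds as fractions of light speed, measured in
    the Earth frame. Returns None if the second ship is not faster.
    """
    if not (0 < first_speed_c < 1 and 0 < second_speed_c < 1):
        raise ValueError("speeds must be fractions of c between 0 and 1")
    if delay_years < 0:
        raise ValueError("delay must be non-negative")
    if second_speed_c <= first_speed_c:
        return None  # a slower or equally fast ship never catches up
    t = second_speed_c * delay_years / (second_speed_c - first_speed_c)
    distance_ly = first_speed_c * t  # light years, since speed is in units of c
    return t, distance_ly


if __name__ == "__main__":
    # Hypothetical: first ship at 1% of c, second at 10% of c, 50 years later.
    result = catch_up(0.01, 0.10, 50.0)
    if result is not None:
        years, distance = result
        print(f"Catch-up {years:.1f} years after first launch, "
              f"{distance:.2f} light years from home")
```

In this made-up case the slower ship is overtaken well short of even the nearest star (about 4.2 light years away), which illustrates why an early launch need not be the point of no return.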

Some key takeaways and hot takes to disagree with me on

- Existential risks capable of destroying a galactic civilisation (galactic x-risks) are possible.

- A galactic x-risk will inevitably occur even if the probability of a star system initiating one is extremely low.

- In the long-term future, conflicts between star systems will be offence-dominant.

- If aliens exist, there is no long-term future (there is no galactic civilisation lasting billions of years).

- There are 6 requirements for a governance system to prevent galactic x-risks, and they suggest the creation of God.

- Interstellar travel should be banned until galactic x-risks and galactic governance are solved.

- Galactic x-risks are relevant to big picture cause prioritisation.

- At least some of us should care about all of the above points now, rather than in 30 years or after ASI.
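The second takeaway rests on simple compounding, which can be sketched as follows. This is a toy model, not a forecast: it assumes every star system is an independent trial each century with the same fixed probability, and the values of `p_per_system_century` and `n_systems` are placeholders chosen only to show the shape of the curve.

```python
import math


def p_at_least_one(p_per_system_century, n_systems, n_centuries):
    """Chance that at least one system triggers a galactic x-risk.

    Assumption: trials are independent and identically likely,
    so the answer is 1 - (1 - p) ** (n_systems * n_centuries).
    """
    trials = n_systems * n_centuries
    # log1p/expm1 keep precision when p is tiny and trials is huge.
    return -math.expm1(trials * math.log1p(-p_per_system_century))


# Hypothetical inputs: a one-in-a-trillion chance per system per century,
# across 100 billion settled systems.
for n_centuries in (1, 100, 10_000):
    print(n_centuries, p_at_least_one(1e-12, 1e11, n_centuries))
```

Under these placeholder inputs the chance is about 10% in the first century and climbs above 99.99% within 100 centuries. Correlated behaviour or effective governance would break the independence assumption, which is the point of the governance discussion.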

Edit: Adding some further reading as a lot of these great works are hidden within all the footnotes (or were missed originally):

- Daniel Deudney. 2020. Dark Skies: Space Expansionism, Planetary Geopolitics, and the Ends of Humanity (space expansion increases x-risk)

- Phil Torres. 2018. Space colonization and suffering risks: Reassessing the “maxipok rule”. Futures 100 (2018): 74-85. (galactic colonisation will lower existential security)

- Toby Ord. 2025. Forecasting can get easier over longer timeframes. EAG London 2025. (Toby had clearly thought about my whole post before I wrote it)

- Charlie Stross. 2015. On the Great Filter, existential threats, and griefers. (correlated galactic x-risks: "all it takes is one")

Acknowledgements: Thanks to Jess Riedel, Toby Ord, and Jan Gaida for comments on the draft. I made a lot of changes since their comments and all mistakes remain my own.

- ^

These solar system x-risks were unlikely to be future great filters as they mainly required extremely advanced technology (e.g. stellar engineering), far more advanced than interstellar travel. So, spacefaring civilisations would most likely be interstellar before any of these large-scale sci-fi disasters occurred and so they don’t represent a reliable Great Filter.

- ^

It's a combination of both these factors: a huge population, and a lot of different civilisations that will be independent actors because of the huge space between them. It means that they don't act as one civilisation that could do a bad thing, but as potentially millions of civilisations that could do a bad thing. In the latter scenario, the bad thing has a much higher probability of occurring... it only takes one.

- ^

Whatever that might mean. I imagine wellbeing of sentient beings + number of sentient beings.

- ^

I am aiming to include very speculative or hypothetical threats that might emerge as real risks in the long-term future.

- ^

I don't think there's a good word for a civilisation spanning a huge amount of space. Galactic x-risk has a good ring to it, but most of the threats I'll discuss don't care about how big your civilisation is. "Universal x-risk" isn't as clear and "cosmic x-risk" sounds too much like cosmic threats.

This definition is, of course, based on the definition of x-risk used by Toby Ord in The Precipice.

- ^

Assuming that there isn't a near limit on the amount of time that the cosmos will be conducive to the existence of life.

- ^

Basically anything that can destroy a civilisation occupying a star system is potentially a galactic x-risk if it's used millions of times.

- ^

Large magnitude eruptions may dramatically cool our climate through the release of ash and sulfur, or warm the planet from significant releases of CO2. But other impacts include:

ozone destruction, reduction in rainfall, further biodiversity collapse/environmental damage (already in decline), widespread blockages of maritime trade, destruction of communication and technology, financial losses, and mass shortages of food, water, and energy resources.

All of these impacts combined are certainly a global catastrophic risk, and may be sufficient to cause the collapse of a global civilisation and the loss of its long-term potential.

No volcano in the Solar System is powerful enough to affect the habitability of multiple planets. However, a related but highly speculative scenario might be the detonation of a gas giant planet by the nuclear fusion of deuterium (e.g. of Jupiter). This may be initiated by a black hole impacting the planet or by the deployment of an initiator bomb[75]. The ignition of a gas giant planet may create an explosion so great that a whole solar system would be affected by it. But I'm hard-pressed to see how even this stretch of the imagination could be an existential threat to a whole spacefaring civilisation.

- ^

A geoengineering disaster may be initiated with a desire to cool the atmosphere of a planet, potentially by use of aerosols. It’s clear to see how this could go wrong. The best mitigation for this is to not do it, or make sure that if geoengineering is necessary, it is executed extremely responsibly.

The equivalents of this for a Solar System civilisation and a galactic civilisation are a stellar engineering disaster and a galactic core engineering disaster, respectively. Or maybe a planetary core engineering disaster is the more accurate planetary equivalent. Maybe. Moving on.

- ^

Including the runaway greenhouse effect and runaway refrigerator effect.

An artificially induced runaway greenhouse effect could occur if large-scale activity released vast quantities of greenhouse gases into the atmosphere. As heat becomes trapped more effectively, surface temperatures would escalate, causing oceans to evaporate and the water vapor to amplify the greenhouse effect further. This positive feedback loop could render the planet uninhabitable (Venus’ fate), collapsing ecosystems, infrastructure, and ultimately ending civilization. This is not even remotely a scenario for the present-day climate crisis.

There's also the "runaway refrigerator effect", where a positive feedback loop emerges that drives temperatures down[76]. This effect has been responsible for past ice ages on Earth and Mars.

- ^

The release of large quantities of highly toxic molecules into the atmosphere (e.g., through overenthusiastic chemical warfare) may destroy the long-term potential of human civilisation by eradicating the vast majority of the population. Most space outside of Earth's atmosphere is essentially toxic to humans anyway, so this isn't a big deal for a spacefaring civilisation.

- ^

Apart from the initial destruction, a nuclear war may generate a nuclear winter, reducing the solar influx and generating global sub-freezing temperatures. This is very likely to destroy the long-term potential of a global civilisation.

Nukes do work in space, but a spacefaring civilisation is not as vulnerable to this because firing missiles at every space station, moon, and planet to destroy everyone would be very difficult. A self-sustaining colony capable of preserving the civilisation’s long-term potential is very likely to survive. Other more powerful interstellar weapons are discussed later.

- ^

An asteroid hitting a planet has a proven capability to cause mass extinctions and a large impact could potentially destroy human civilisation.

By expanding to other planets, moons, and space stations, we prevent all of humanity from being wiped out by one impact.

Some extreme scenarios like a strong gravitational wave from a nearby and massive cataclysmic event like a black hole merger (not gonna happen[77]) creating a large scale instability in the asteroid belt and Oort cloud could result in chaos that might destroy a spacefaring civilisation. But in those speculative scenarios, the asteroid impacts are more like an effect than the existential risk itself.

- ^

I don't think this is a strong motivation for rapid space expansion. Almost none of those scenarios make Earth less habitable than Mars. But this will change in the long-term future once space settlements are more established, large, and self-sustaining. To me, patience with space expansion seems like the path that leads to the best existential security in the long-term. We should aim to increase existential security on Earth first before we export our fragile and unsustainable society to other planets where the challenges will be greater.

- ^

Solar flares and coronal mass ejections from stars are fairly common, and they can damage infrastructure like electrical grids and satellites.

Some have suggested that extremely powerful solar flares might also occur, so-called “superflares”. If a superflare were powerful enough, it may be capable of destroying a spacefaring civilisation by altering the surface temperatures of planets or destroying ozone layers. Humans living in space colonies may be more vulnerable to superflares if they exist outside the protection of an atmosphere. An advanced civilisation might easily mitigate the effects of superflares by predicting them in advance and using radiation shielding, or even constructing large protective structures in space.

- ^

A sudden stellar mass loss event would reduce the luminosity of the Sun. These are more common in the later stages of a star's life (and for larger stars). A mass loss event could be very destructive to a spacefaring civilisation if it was taken off guard. A sudden stellar mass loss event may also have other destructive consequences from the ejection of plasma and particles. However, in general, this would be predicted quite easily, is probably not a plausible scenario for the Sun, and the catastrophic consequences may be prevented with solar shields in space, stellar engineering, geoengineering, or planetary orbit alteration. A similar argument also applies to the increasing luminosity of the Sun over billions of years.

- ^

The Sun may also be damaged or altered by a stellar engineering project, such as an attempt to extend the lifetime of a star or extract material, with resulting (presumably) unanticipated and destructive consequences. Additionally, an attempt to turn Jupiter into a star (e.g. to terraform the Galilean moons) may destroy Jupiter and wipe out a spacefaring civilisation. The proposed method for this was to seed Jupiter with a primordial black hole. Sounds safe.

- ^

These events have the capability to affect large regions of space. The effectors include microwave radiation, x-rays, gamma-ray bursts, cosmic radiation and particles, and neutrino showers. Space colonies may be more vulnerable to stellar explosions if they exist outside the protection of an atmosphere.

- ^

(neutron star mergers)

- ^

Interstellar clouds dominated by gas may enrich atmospheres in unusual elements, or interstellar dust clouds could generate meteor showers and destroy satellites and space stations.

Interstellar clouds would usually be a mix of gas and dust.

- ^

Supernova remnants are basically a type of interstellar (plasma) cloud.

Supernova explosions are among the most energetic events in our universe, and they leave behind highly energetic remnants. Particularly dangerous are remnants that contain a pulsar, as these can host pulsar wind nebulae, which are some of the most energetic objects in our universe (though only dangerous at short distances). If a solar system were to pass through a supernova remnant (not gonna happen to us), then chaos would ensue for the civilisation that inhabited it.

- ^

Primordial black holes are a theoretical type of black hole that may have formed shortly after the Big Bang. The universe was very dense then, so black holes were forming everywhere and at a range of masses. So there could still be football-sized black holes lurking about the universe. If one were to hit us at high speed, it could destroy a planet.

While physicists predict that primordial black holes would move very quickly, it's a very different story if the primordial black hole approaches slowly. In that case it could settle in a planet’s core, which it would devour from the inside. Alternatively, if primordial black holes evaporate nearby, their intense burst of radiation during final stages could cause significant damage locally.

So it seems that these primordial black holes could get up to all sorts of mischief that may destroy a civilisation spread across a solar system. Though, primordial black holes remain highly theoretical and their impacts are entirely speculative.

- ^

Cosmic strings are one-dimensional defects or cracks in spacetime that stretch for potentially millions of lightyears. They are thought to have formed from the trapping of energy into strings during early universe phase transitions. They are hypothetical but are predicted from the Big Bang and may be detectable. Simulations show that a string crossing Earth would induce "global oscillations" (like an earthquake (non-catastrophically) ringing the whole planet). Depending on the density of the strings, this could conceivably shatter Earth or the Sun. There are probably a bunch of other lethal scenarios, including but not limited to bursts of high energy particles, intense gravitational waves, and distortions of spacetime.

- ^

The early universe likely underwent multiple phase transitions, during which the fundamental fields of physics changed. Domain walls could have formed when different regions of the universe settled into different vacuum states, creating two-dimensional topological defects at the boundaries between them. But it doesn't seem that they would have remained stable until the present: they would be so deadly that, had they formed during the early universe, they probably would have destroyed it by now. But if they were stable, and we passed through one, a spacefaring civilisation would be at the mercy of whole new fundamental physics, which isn't good for biochemistry.

- ^

Some quantum gravity models allow for the spontaneous temporary formation of microscopic or macroscopic wormholes, which would quickly collapse, potentially into a black hole. If a wormhole were to spontaneously form near or within our Solar System and then collapse abruptly, it might produce extreme gravitational disturbances or tear spacetime locally.

A wormhole could also be created artificially. It seems that there are multiple potential pathways to creating stable wormholes under various assumptions and theories, and its plausibility may be clearer with a theory of everything. Quoting Anders Sandberg on wormholes:

dump one end in the Sun and another elsewhere (a la Stephen Baxter’s Ring), and you might drain the Sun and/or irradiate the Solar System if it is large enough.

- ^

A rogue planet entering our solar system may naturally produce these catastrophic scenarios, throwing orbits into chaos. NASA estimates that there are far more rogue planets in our galaxy than planets orbiting stars. This risk also invites extremely unlikely imaginative scenarios: how does the picture change if a gas giant instead of a rocky planet enters the Solar System? What about a “rogue” black hole (or maybe even a primordial black hole)?

- ^

For this to happen accidentally, there would have to be some extreme oversight. If you have the power to move planets, you can do the maths. Also, moving planets wouldn't be the most subtle military strategy in an interplanetary conflict. So I think the natural scenario (i.e., a rogue planet) is most likely.

- ^

More info: https://gizmodo.com/12-ways-humanity-could-destroy-the-entire-solar-system-1696825692

Their strategic value would probably make them targets in conflict, or their control could be seized by rogue actors or misaligned AI systems, leading to civilisation-wide collapse. So a spacefaring civilisation would need to incorporate very well-thought out safeguards and protections into the design of a giant structure.

- ^

Dispersion throughout a galaxy likely counters this threat. Kurzgesagt made a great video on interstellar war featuring interstellar weapons.

Though, interstellar weapons are the kings of "use it a bunch of times and it's a galactic x-risk". I'm assuming this comes under "self-replicating machines" though. Don't worry too much about the categories.

- ^

Quasars form around black holes like the one at the centre of our galaxy:

The inflow of gas into the black hole releases a tremendous amount of energy, and a quasar is born. The power output of the quasar dwarfs that of the surrounding galaxy and expels gas from the galaxy in what has been termed a galactic superwind

This "superwind" drives all gas away from the inner galaxy. Most galaxies have already gone through a quasar phase as they were common in the early universe.

Another potential threat from the centres of galaxies are cosmic rays from galactic core explosions. Hundreds of thousands of times more damaging than supernova explosions, some theorise that cosmic rays from other galaxies have already caused mass extinctions on Earth. Explosions from the core of the Milky Way likely make the inner galaxy uninhabitable, so large explosions may have catastrophic impacts for a galactic civilisation.

- ^

Or, at least, all of the ones that turn out to actually be real and capable of destroying a galactic civilisation.

- ^

Say we send out a fleet of exponentially self-replicating von Neumann probes to colonize the Galaxy. Assuming they’re programmed very, very poorly, or somebody deliberately creates an evolvable probe, they could mutate over time and transform into something quite malevolent. Eventually, our clever little space-faring devices could come back to haunt us by ripping our Solar System to shreds, or by sucking up resources and pushing valuable life out of existence.

- ^

There is an important question about offence-defence balance here. If von Neumann probes are already spread throughout a galaxy and an alien civilisation emerges, the emerging civilisations can be systematically destroyed before they become a threat.

However, if star systems are defence dominant, then it may not be a threat if one star system among a galactic civilisation releases a von Neumann probe. I hold a minority viewpoint among people I've talked to about this in believing that cosmic warfare would be offence dominant. I cannot conceive of any defence against a self-replicating probe that is able to observe your defences and re-design itself accordingly with arbitrarily advanced weapons like interstellar lasers. I played this game as a kid: there is always a more powerful weapon to overcome your defence. In the plateau of technological innovation and resource availability, offence will always win. Change my mind.

- ^

A galactic civilisation would have a huge biological diversity (or even digitally sentient beings). No single pandemic would be able to infect all of them unless a superintelligent being was repeatedly designing them. In which case, superintelligence is the x-risk, and also it would have more efficient ways to kill everyone than generating plagues.

- ^

I'm definitely leaning into superintelligence for these last two points on nanotech and biological machines. Don't worry too much about the categories - the biggest galactic existential risk is a combination of multiple items on the list.

- ^

Bostrom, Nick. "Existential risks: Analyzing human extinction scenarios and related hazards." Journal of Evolution and Technology 9 (2002).

- ^

SU(5) is disproven because observational evidence indicates that the proton's half life is way longer than the theory predicts. Proton decay was a key prediction of the theory.

- ^

Other particles like photons could also decay into lighter unknown particles in speculative scenarios like Lorentz-violating theories or hidden sectors.

- ^

The existence of proton decay and magnetic monopoles are some of the key predictions of many of the grand unifying theories in the 1970s. The vector boson or the Higgs mesons would have to be extremely massive for the proton to decay, which they aren't, and they can't be changed because they're fundamental parameters. However, there's a theoretical particle called a magnetic monopole that is stable independent of those values. So passing a magnetic monopole through matter allows the extremely massive energy conditions to be sidestepped, and the proton could decay. Recent developments have even argued that this is possible without new physics, just a deeper understanding of existing symmetries. However, as this process requires a monopole to interact with matter directly, it's not going to spread out and destroy all matter in the universe unless the reaction was self-propagating, i.e., the interaction created new monopoles.

- ^

Emerging from a theory of quantum gravity, which reveals new routes to induce proton decay, similarly only acting locally.

- ^

Free neutrons (i.e., neutrons not bound within an atomic nucleus) are already known to decay into protons (and an electron and an antineutrino).

- ^

This emerges from brane theory, where multiple other dimensions exist within our universe and can interact with our own.

- ^

e.g. a magnetic monopole interacting with a proton and causing it to decay produces another magnetic monopole.

- ^

e.g. decay of subatomic particles might follow vacuum decay

- ^

That does not represent a consensus view among physicists.

- ^

The gravitational constant is a fundamental physical constant that quantifies the strength of the gravitational force between objects. It appears in Newton's Law of Universal Gravitation and Einstein's theory of General Relativity. Some theories like Scalar–Tensor Theories, String Theory, Braneworld Models allow the gravitational constant to change.

- ^

- ^

Planck's constant is a fundamental parameter in quantum mechanics: "a photon's energy is equal to its frequency multiplied by the Planck constant, and the wavelength of a matter wave equals the Planck constant divided by the associated particle momentum". A slight increase could lead to atoms being much larger than they are now, potentially affecting the stability of matter and the size of celestial objects.

- ^

This has huge implications for end-of-universe scenarios as it is closely associated with dark energy. If the total mass-energy density of the universe is high enough (i.e., if dark energy weakens), the expansion could eventually slow down and stop. If gravity overcomes dark energy, the universe would begin to contract. Eventually, the universe would shrink into a singularity - a state of infinite density and temperature, similar to the conditions at the moment of the Big Bang.

- ^

This constant governs the strength of electromagnetic interactions. Life as we know it might not be possible under even modest changes in the fine structure constant, as protein folding, DNA stability, and biochemical reactions depend on electromagnetic interactions.

- ^

Color confinement confines quarks inside protons and neutrons. If this broke down, quarks could roam free or hadrons might disintegrate.

- ^

In some Grand Unifying Theories, quarks and leptons are two sides of the same field. This could allow for quark-to-lepton transitions (e.g. a neutron turning into an antineutrino). Not good.

- ^

Referring to the simulation theory and black hole universe theory (explained below)

- ^

Long & accurate explanation here.

- ^

Kurzgesagt is the elite source on this:

"This collapse of the black hole universe into a singularity looks like one of the scenarios for the end of our universe: The Big Crunch, where long after the Big Bang the whole universe collapses into a singularity again. But if there is a Big Crunch, there might be a Big Bounce – like a rubber ball that you’ve squeezed too much and that suddenly rebounds, space might expand again." https://sites.google.com/view/sources-black-hole-universe/

https://www.youtube.com/watch?v=71eUes30gwc&t=3s&ab_channel=Kurzgesagt%E2%80%93InaNutshell

- ^

Robin Hanson explained this in his blog: https://www.overcomingbias.com/p/beware-general-visible-near-preyhtml

"So, bottom line, the future great filter scenario that most concerns me is one where our solar-system-bound descendants have killed most of nature, can’t yet colonize other stars, are general predators and prey of each other, and have fallen into a short-term-predatory-focus equilibrium where predators can easily see and travel to most all prey. Yes there are about a hundred billion comets way out there circling the sun, but even that seems a small enough number for predators to careful map and track all of them."

- ^

Maybe some kind of thing has ultimate moral value and is optimised for across the galaxy e.g. a simulation of digital sentience in bliss. This means that the society no longer has heterogeneity that would protect it against different types of threats. So the society may be very weak to one particular thing, like an advanced computer virus-type threat or an evil superintelligence.

- ^

I have a lot of uncertainty about this. I'd love to hear another take. It's stuff like vacuum decay that concerns me. Vacuum decay is utterly impossible to defend against, unless you could separate your star system from the rest of the universe or move into another dimension. Similar thing with strange matter: sure, you might be able to produce gravitational waves to protect against it, but then you might have to deal with strange matter AND incoming gravitational waves. With effectively infinite resources and arbitrarily advanced technology, offence always wins in the end.

I'm particularly unsure about whether I'm right about this statement: Even if a superintelligence exists in your solar system, the creation of a superintelligence optimised for total destruction somewhere else in the galaxy will inevitably lead to your destruction.

- ^

How bad this would be for the long term potential of sentience is very dependent on the aliens that destroy us. It’s possible that the aliens might be intelligent but not sentient, so if they replaced us that would be a disaster. The aliens could also be tyrannous, or have little respect for other sentient beings, and thus their conquering of our galaxy represents an s-risk. Much ambiguity.

- ^

Yeah this took me more than just one afternoon. I really missed the mark on this "exhaustive list of cosmic threats" in 2023, which lists a pathetic 19 threats.

- ^

The data for the bar graph can be accessed in this spreadsheet: https://docs.google.com/spreadsheets/d/1DoSyDlwsuH2GXd_xI4kCxfKlnaNOtk1WvemZdQhVsAA/edit?usp=sharing

Email jordan.stone@spacegeneration.org if you can't access it. FYI there are like a million caveats and conditions with each thing on the list, so if it's confusing, I'm very communicative over email or on the forum and happy to answer questions if you're confused or interested.

- ^

If someone did this in 1980, things would look a lot more concerning!

- ^

There is so much uncertainty here that these can only really be guesses. I'm happy to update them if other points or new research come to light. Maybe I'll re-write the post in 2040.

- ^

All speculative physics stuff is set at a 10% probability of being real as a geometric mean to reflect my uncertainty. If I were being conservative, the probability would be more like 1%.

- ^

- ^

Some factors like dispersion may initially decrease x-risk, but over time increase it. See Toby Ord's comment.

- ^

Potentially avoided by wormholes or quantum coupling

- ^

Again, assuming that a superintelligence has not accompanied the expansion of human civilization to prevent any galactic x-risks from occurring.

- ^

i.e., neartermist vs longtermist.

- ^

Some good arguments for why this might take a really long time. We need to send probes first:

We wouldn't send humans (the question specifies humans):

- ^

Without a strong presence in the real world, this would leave the civilisation vulnerable to the emergence of alien life elsewhere in the universe though, which is probably an inevitability given enough time.

- ^

Once we have this governance system, we probably need to spread throughout the affectable universe as fast as possible to prevent independent alien civilisations from emerging, or gain the ability to collaborate with them on preventing galactic x-risks.

- ^

Up until we become a type II civilisation maybe, then I think things become very offence dominant.

- ^

The seminal work on the subject is this paper: Armstrong, Stuart, and Anders Sandberg. "Eternity in six hours: Intergalactic spreading of intelligent life and sharpening the Fermi paradox." Acta Astronautica 89 (2013): 1-13.

- ^

Thanks to Alexey Turchin for pointing me towards this potential risk.

- ^

Wikipedia: "Mars and Earth during the Cryogenian period may have experienced the opposite of a runaway greenhouse effect: a runaway refrigerator effect. Through this effect, a runaway feedback process may have removed much carbon dioxide and water vapor from the atmosphere and cooled the planet. Water condenses on the surface, leading to carbon dioxide dissolving and chemically binding to minerals. This reduced the greenhouse effect, lowering the temperature and causing more water to condense. The result was lower temperatures, with water being frozen as subsurface permafrost, leaving only a thin atmosphere. In addition, ice and snow are far more reflective than open water, with an albedo of 50-70% and 85% respectively. This means that as a planet's temperature decreases and more of its water freezes, its ability to absorb light is reduced, which in turn makes it even colder, creating a positive feedback loop. This effect, combined with the decrease in heat-retaining clouds and vapor, becomes runaway once snow and ice coverage reach a certain threshold (within 30 degrees of the equator), plunging the planet into a stable snowball state."

- ^

1 in 2.8 quadrillion chance of a binary black hole approaching the Solar System in the next 100 years. The internet is a big place: https://physics.stackexchange.com/questions/464372/what-is-the-closest-distance-for-an-undiscovered-black-hole#:~:text=It%20is%20also%20absurdly%20unlikely,parsecs%2C%20or%2016.3%20light%20years.

- ^

Toby_Ord @ 2025-06-18T13:44 (+35)

This is a very interesting post. Here's how it fits into my thinking about existential risk and time and space.

We already know about several related risk effects over space and time:

- If different locations in space can serve as backups, such that humanity fails only if all of them fail simultaneously, then the number of these only needs to grow logarithmically before there is a non-zero chance of indefinite survival.

- However, this does not solve existential risk, as it only helps with uncorrelated risks such as asteroid impacts. Some risks are correlated between all locations in a planetary system or all stars in a galaxy (often because an event in one causes the downfall of all others) and having multiple settlements doesn't help with those.

- Also, to reach indefinite survival, we need to reduce per-century existential risk by some constant fraction each century (quite possibly requiring a deliberate and permanent prioritisation of this by humanity)
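The third point above can be illustrated with a small numerical sketch. It assumes per-century risk shrinks geometrically, by a constant factor each century. The starting risk of 1% and the shrink factor of 0.9 are arbitrary illustrative choices, and `survival_probability` is a hypothetical helper, not part of any published model.

```python
def survival_probability(initial_risk, shrink_factor, n_centuries):
    """Probability of surviving n_centuries when the risk in century t
    is initial_risk * shrink_factor ** t (t = 0, 1, 2, ...).

    Assumption: centuries are independent trials.
    """
    survive = 1.0
    risk = initial_risk
    for _ in range(n_centuries):
        survive *= 1.0 - risk
        risk *= shrink_factor
    return survive


# Risk held at 1% per century forever: survival decays towards zero.
constant = survival_probability(0.01, 1.0, 10_000)
# Risk cut by 10% every century: survival levels off above 90%.
shrinking = survival_probability(0.01, 0.9, 10_000)
print(constant, shrinking)
```

In the shrinking case the total risk ever incurred is bounded by the geometric series 0.01 / (1 - 0.9) = 0.1, so the survival probability stays above 90% however long the horizon. With constant risk, no such bound exists and survival over 10,000 centuries is effectively zero.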

You pointed out a fourth related issue. When it comes to the correlated risks, settling more and more star systems doesn't just not help with these — it creates more and more opportunities for these to happen. If there were 100 billion settled systems then at least for the risks that can’t be defended against (such as vacuum collapse) a galactic-scale civilisation would undergo 100 billion centuries worth of this risk per century. So as well as an existential-risk-reducing effect for space settlement, there is also a systematic risk-increasing effect. (And this is a more robust and analysable argument than those about space wars.)

I’ve long felt that humanity would want to bind all its settlements to a common constitution, ruling out certain things such as hostile actions towards each other, preparing advanced weaponry that could be used for this purpose, or attempts to seize new territory in inappropriate ways. That might help sufficiently for some of the coordination problems, but I hadn’t noticed that even if each location is coordinated and aligned, if we settle 100 billion worlds, a certain part of the accident risk gets multiplied by more than a billion-fold, and this creates a fundamental tension between the benefits of settling more places and the risks of doing so. I feel like getting per-century risk down to 1% in a few centuries might not be that hard, but if we need to get it down to 0.00000000001%, it is less clear that is possible (though at least it is only the *objective probability* that needs to get so low — it’s OK if your confidence you are right isn’t as strong as 99.999999999%).
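The figures in this paragraph can be checked with a short calculation. The sketch below assumes the galaxy-wide risk is approximated by the sum of the per-system risks (a union bound, so slightly conservative), and `per_system_budget` is an illustrative name rather than an established term.

```python
from fractions import Fraction


def per_system_budget(total_risk, n_systems):
    """Per-system, per-century risk that keeps the summed risk
    across all systems at or below total_risk.

    Assumption: total risk <= n_systems * per-system risk (union bound).
    """
    return total_risk / n_systems


# A 1% per-century target spread over 100 billion settled systems.
budget = per_system_budget(Fraction(1, 100), 100_000_000_000)
print(float(budget))        # as a probability
print(float(budget * 100))  # as a percentage
```

Dividing 1% by 100 billion gives a probability of 1e-13 per system per century, which is the 0.00000000001% quoted above when written as a percentage.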

One style of answer is to require that almost no settlements are capable of these galaxy-wide existential risks. e.g.

- they have no people, but contribute to our goals in some other way (such as pure happiness or robotic energy-harvesting for some later project)

- or they have people flourishing in relatively low-tech utopian states

- or they have people flourishing inside virtual worlds maintained by machines, where the people have no way of affecting the outside world

- or we find all the possible correlated risks in advance and build defense-in-depth guardrails around all of them.

Alternatively, if each settlement is capable of imposing such risks, then you could think of each star-system-century as playing the same role as a century in my earlier model. i.e. instead of needing to exponentially decrease per-period risk over time, we need to exponentially decrease it per additional star system as well. But this is a huge challenge if we are thinking of adding billions of places within a small number of centuries. Alternatively, one could think of it as requiring that we divide the acceptable level of per-period risk by the number of settlements. In the worst case, one might not be able to gain any EV by settling other star systems relative to just staying on one, as the risk-downsides outweigh the benefits. (But the kinds of limited settlements listed above should still be possible.)

There is an interesting question about whether raw population has the same effect as extra settled star systems. I’m inclined to think it doesn’t, via a model whereby well-governed star systems aren’t just as weak as their most irresponsible or unlucky citizen, even if the galaxy is as weak as its most irresponsible or unlucky star system. e.g. that doubling the population of a star system doesn’t double the chance it triggers a vacuum collapse, but doubling the number of independently governed star systems might (as a single system might decide not to prioritise risk avoidance).

Toby_Ord @ 2025-06-18T13:48 (+16)

Here is a nice simple model of the trade-off between redundancy and correlated risk. Assume that each time period, each planet has an independent and constant chance of destroying civilisation on its own planet and an independent and constant chance of destroying civilisation on all planets. Furthermore, assume that unless all planets fail in the same time period, they can be restored from those that survive.

e.g. assume the planetary destruction rate is 10% per century and the galaxy destruction rate is 1 in 1 million per century. Then with one planet the existential risk for human civilisation is ~10% per century. With two planets it is about 1% per century, and reaches a minimum at about 6 planets, where there is only a 1 in a million chance you lose all planets simultaneously from planetary risk, but now ~6 in a million chance of one of them destroying everything. In this case, beyond 6 planets, the total risk starts rising as the amount of redundancy they add is smaller than the amount of new risk they create, and by the time you have 1 million planets, the existential risk rate for human civilisation per century is about 63%.

This is an overly simple model and I've used arbitrary parameters, but it shows it is quite easy for risk to first reduce and then increase as more planets are settled, with a risk-optimal level of settlement in between.
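A minimal sketch of this model, using the same arbitrary parameters (10% planetary risk and 1 in 1 million galactic risk per century). The function name and the way the two failure modes are combined are my own reading of the description above.

```python
import math

def total_risk(n_planets, local_risk=0.10, galactic_risk=1e-6):
    """Per-century existential risk for a civilisation spread over n planets.

    Civilisation ends if every planet destroys itself in the same century,
    or if any single planet triggers a galaxy-wide catastrophe.
    """
    all_fail_locally = local_risk ** n_planets
    # 1 - (1 - g)^n, computed stably for small g and large n.
    someone_ends_everything = -math.expm1(n_planets * math.log1p(-galactic_risk))
    return 1 - (1 - all_fail_locally) * (1 - someone_ends_everything)

for n in (1, 2, 6, 1_000_000):
    print(f"{n:>9} planets: {total_risk(n):.6%} per century")

# Search a small range for the risk-minimising number of planets.
best = min(range(1, 21), key=total_risk)
print("risk-optimal number of planets:", best)
```

Changing `local_risk` or `galactic_risk` moves the optimum, but the overall shape (risk falling at first, then rising) persists whenever the galactic risk is nonzero.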

Toby Tremlett🔹 @ 2025-06-26T11:25 (+20)

I think this post is underrated (karma-wise) and I'm curating it. I love how thorough this is, and the focus on under-theorised problems. I don't think there are many other places like this where we could have a serious conversation about these risks.

I'd like to see more critical engagement on the key takeaways (helpfully listed at the end). As a start, here's a poll for the key claim Jordan identifies in the title:

Lukas Finnveden @ 2025-06-29T04:56 (+10)

I think it will probably not doom the long-term future.

This is partly because I'm pretty optimistic that, if interstellar colonization would predictably doom the long-term future, then people would figure out solutions to that. (E.g. having AI monitors travel with people and force them not to do stuff, as Buck mentions in the comments.) Importantly, I think interstellar colonization is difficult/slow enough that we'll probably first get very smart AIs with plenty of time to figure out good solutions. (If we solve alignment.)

But I also think it's less likely that things would go badly even without coordination. Going through the items in the list:

| Galactic x-risk | Is it possible? | Would it end Galactic civ? | Lukas' take |
| --- | --- | --- | --- |
| Self-replicating machines | 100% ✅ | 75% ❌ | I doubt this would end galactic civ. The quote in that section is about killing low-tech civs before they've gotten high-tech. A high-tech civ could probably monitor for and destroy offensive tech built by self-replicators before it got bad enough that it could destroy the civ. |
| Strange matter | 20%[64] ❌ | 80% ❌ | I don't know much about this. |
| Vacuum decay | 50%[65] ❌ | 100% ✅ | "50%" in the survey was about vacuum decay being possible in principle, not about it being possible to technologically induce (at the limit of technology). The survey reported significantly lower probability that it's possible to induce. This might still be a big deal though! |
| Subatomic particle decay | 10%[64] ❌ | 100% ✅ | I don't know much about this. |
| Time travel | 10%[64] ❌ | 50% ❌ | I don't know much about this, but intuitively 50% seems high. |
| Fundamental Physics Alterations | 10%[64] ❌ | 100% ✅ | I don't know much about this. |
| Interactions with other universes | 10%[64] ❌ | 100% ✅ | I don't know much about this. |
| Societal collapse or loss of value | 10% ❌ | 100% ✅ | This seems like an incredibly broad category. I'm quite concerned about something in this general vicinity, but it doesn't seem to share the property of the other things in the list where "if it's started anywhere, then it spreads and destroys everything everywhere". Or at least you'd have to narrow the category a lot before you got there. |
| Artificial superintelligence | 100% ✅ | 80% ❌ | The argument given in this subsection is that technology might be offense-dominant. But my best guess is that it's defense-dominant. |
| Conflict with alien intelligence | 75% ❌ | 90% ❌ | The argument given in this subsection is that technology might be offense-dominant. But my best guess is that it's defense-dominant. |

Expanding on the question about whether space warfare is offense-dominant or defense-dominant: One argument I've heard for defense-dominance is that, in order to destroy very distant stuff, you need to concentrate a lot of energy into a very tiny amount of space. (E.g. very narrowly focused lasers, or fast-moving rocks flung precisely.) But then you can defeat that by jiggling around the stuff that you want to protect in unpredictable ways, so that people can't aim their highly-concentrated energy from far away and have it hit correctly.

Now that's just one argument, so I'm not very confident. But I'm at <50% on offense-dominance.

(A lot of the other items on the list could also be stories for how you get offense-dominance, where I'm especially concerned about vacuum decay. But it would be double-counting to put those both in their own categories and to count them as valid attacks from superintelligence/aliens.)

JordanStone @ 2025-07-05T16:03 (+3)

Thanks for the in-depth comment. I agree with most of it.

if interstellar colonization would predictably doom the long-term future, then people would figure out solutions to that.

Agreed, I hope this is the case. I think there are some futures where we send lots of ships out to interstellar space for some reason, or act too hastily (maybe a scenario where transformative AI speeds up technological development, but not so much our wisdom). Just one mission (or set of missions) capable of self-propagating to other star systems almost inevitably leads to galactic civilisation in the end, and we'd have to catch up to it to ensure existential security, which would become challenging if they create von Neumann probes.

"50%" in the survey was about vacuum decay being possible in principle, not about it being possible to technologically induce (at the limit of technology). The survey reported significantly lower probability that it's possible to induce. This might still be a big deal though!

Yeah, this is my personal estimate based on that survey and its responses. I was particularly convinced by one respondent who put 100% probability on it being possible to induce (conditional on the vacuum being metastable), as anything that's permitted by the laws of physics is possible to induce with arbitrarily advanced technology (so, 50% based on the chance that the vacuum is metastable).

Lukas Finnveden @ 2025-07-06T03:25 (+5)

anything that's permitted by the laws of physics is possible to induce with arbitrarily advanced technology

Hm, this doesn't seem right to me. For example, I think we could coherently talk about and make predictions about what would happen if there was a black hole with a mass of 10^100 kg. But my best guess is that we can't construct such a black hole even at technological maturity, because even the observable universe only has 10^53 kg in it.

Similarly, we can coherently talk about and make predictions about what would happen if certain kinds of lower-energy states existed. (Such as predicting that they'd be meta-stable and spread throughout the universe.) But that doesn't necessarily mean that we can move the universe to such a state.

Buck @ 2025-06-27T15:13 (+6)

Interstellar travel will probably doom the long-term future

Seems false, probably people will just sort out some strategy for enforcing laws (e.g. having AI monitors travel with people and force them not to do stuff).

Jelle Donders @ 2025-06-28T15:41 (+5)

Interstellar travel will probably doom the long-term future

Some quick thoughts: By the time we've colonized numerous planets and cumulative galactic x-risks are starting to seriously add up, I expect there to be von Neumann probes traveling at a significant fraction of the speed of light (c) in many directions. Causality moves at c, so if we have probes moving away from each other at nearly 2c, that suggests extinction risk could be permanently reduced to zero. In such a scenario most value of our future lightcone could still be extinguished, but not all.

A very long-term consideration is that as the expansion of the universe accelerates so does the number of causally isolated islands. For example, in 100-150 billion years the Local Group will be causally isolated from the rest of the universe, protecting it from galactic x-risks happening elsewhere.

I guess this trades off with your 6th conclusion (Interstellar travel should be banned until galactic x-risks and galactic governance are solved). Getting governance right before we can build von Neumann probes at >0.5c is obviously great, but once we can build them it's a lot less clear if waiting is good or bad.

Thinking out loud, if any of this seems off lmk!

Dan_Keys @ 2025-06-29T01:18 (+7)

Causality moves at c, so if we have probes moving away from each other at nearly 2c, that suggests extinction risk could be permanently reduced to zero.

This isn't right. Near-speed-of-light movement in opposite directions doesn't add up to above speed of light relative movement. e.g., Two probes each moving away from a common starting point at 0.7c have a speed relative to each other of about 0.94c, not 1.4c, so they stay in each other's lightcone.

(That's standard special relativity. I asked o3 how that changes with cosmic expansion and it claims that, given our current understanding of cosmic expansion, they will leave each other's lightcone after about 20 billion years.)
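The special-relativistic part of this correction can be checked with the standard velocity-addition formula, (u + v) / (1 + uv/c²). The sketch below works in units of c and ignores cosmic expansion entirely; the function name is illustrative.

```python
def relative_speed(u, v):
    """Speed of one probe as measured by the other, in units of c, when the
    two leave a common origin in opposite directions at speeds u and v
    (both given as fractions of c)."""
    return (u + v) / (1 + u * v)

print(f"0.7c and 0.7c     -> {relative_speed(0.7, 0.7):.4f}c")
print(f"0.999c and 0.999c -> {relative_speed(0.999, 0.999):.7f}c")
```

However close to c each probe travels, the result stays below c, so in flat spacetime the probes never leave each other's lightcone.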

Jelle Donders @ 2025-07-01T12:49 (+2)

Right, so even with near-c von Neumann probes in all directions, vacuum collapse or some other galactic x-risk moving at c would only allow civilization to survive as a thin spherical shell of space on a perpetually migrating wave front around the extinction zone that would quickly eat up the center of the colonized volume.

Such a civilization could still contain many planets and stars if they can get a decent head start before a galactic x-risk occurs + travel at near c without getting slowed down much by having to make stops to produce and accelerate more von Neumann probes. Yeah, that's a lot of if's.

The 20 billion year estimate seems accurate, so cosmic expansion only protects against galactic x-risks on very long timescales. And without very robust governance, it's doubtful we would get to that point.

JordanStone @ 2025-06-28T22:03 (+2)

Awesome speculations. We're faced with such huge uncertainty and huge stakes. I can try and make a conclusion based on scenarios and probabilities, but I think the simplest argument for not spreading throughout the universe is that we have no idea what we're doing.

This might even apply to spreading throughout the Solar System too. If I'm recalling correctly, Daniel Deudney argued that a self-sustaining colony on Mars is the point of no return for space expansion as it would culturally diverge from Earth and their actions would be out of our control.

JordanStone @ 2025-06-26T20:50 (+3)

Interstellar travel will probably doom the long-term future

By "probably" in the title, apparently I mean just over 50% chance ;)

Mo Putera @ 2025-07-01T11:32 (+2)

I'm admittedly confused by this. I suppose when you wrote

... none of these have two ticks in my estimation. However, combined, I think this list represents a threat that is extremely likely to be real and capable of ending a galactic civilisation.

you meant that, combined, they nudge your needle 10%?

Dan_Keys @ 2025-06-29T01:24 (+2)

I'm unsure how to interpret "will probably doom". 2 possible readings: