Yudkowsky and Christiano on AI Takeoff Speeds [LINKPOST]

By aog @ 2022-04-05T00:57 (+15)

Linkpost for “Yudkowsky Contra Christiano on AI Takeoff Speeds”, published by Scott Alexander on March 4, 2021. Here is the original conversation.

Key Quotes:

Around the end of last year, Paul and Eliezer had a complicated, protracted, and indirect debate, culminating in a few hours on the same Discord channel. Although the real story is scattered over several blog posts and chat logs, I’m going to summarize it as if it all happened at once.

Core Debate

Paul Christiano's position:

Paul sums up his half of the debate as:

“There will be a complete 4 year interval in which world output doubles, before the first 1 year interval in which world output doubles. (Similarly, we’ll see an 8 year doubling before a 2 year doubling, etc.)”

That is - if any of this “transformative AI revolution” stuff is right at all, then at some point GDP is going to go crazy (even if it’s just GDP as measured by AIs, after humans have been wiped out). Paul thinks it will go crazy slowly. Right now world GDP doubles every ~25 years. Paul thinks it will go through an intermediate phase (doubles within 4 years) before it gets to a truly crazy phase (doubles within 1 year).
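Paul's criterion can be stated precisely. Below is a minimal Python sketch. It assumes annual GDP figures and reads "before" to mean that the first 4-year doubling window must end strictly before the first 1-year doubling window ends. With yearly data, that is the same as ending no later than the year the 1-year doubling begins. The helper names and the toy growth paths are illustrative assumptions, not taken from Paul's post.

```python
from typing import List, Optional


def annual_growth_for_doubling(years: float) -> float:
    """Constant annual growth rate that doubles output in `years` years."""
    return 2 ** (1 / years) - 1


def gdp_from_growth(rates: List[float], start: float = 1.0) -> List[float]:
    """Build a yearly GDP series from a list of annual growth rates."""
    gdp = [start]
    for r in rates:
        gdp.append(gdp[-1] * (1 + r))
    return gdp


def first_doubling_end(gdp: List[float], window: int) -> Optional[int]:
    """Index t of the first year with gdp[t] >= 2 * gdp[t - window], or None."""
    for t in range(window, len(gdp)):
        if gdp[t] >= 2 * gdp[t - window]:
            return t
    return None


def slow_takeoff_holds(gdp: List[float]) -> Optional[bool]:
    """True if a 4-year doubling completes before the first 1-year doubling.

    Returns None while no 1-year doubling has happened yet (unresolved).
    """
    one_year = first_doubling_end(gdp, 1)
    if one_year is None:
        return None
    four_year = first_doubling_end(gdp, 4)
    return four_year is not None and four_year < one_year


# A ~25-year doubling is roughly 2.8% annual growth; a 4-year doubling
# needs ~18.9% per year, and a 1-year doubling needs 100%.
print(round(annual_growth_for_doubling(25), 4))  # 0.0281
print(round(annual_growth_for_doubling(4), 4))   # 0.1892

# Gradual path: 3% growth, then a stretch at 20%, then a 100% year.
gradual = gdp_from_growth([0.03] * 10 + [0.20] * 6 + [1.0])
# Abrupt path: 3% growth straight into a 100% year.
abrupt = gdp_from_growth([0.03] * 10 + [1.0])

print(slow_takeoff_holds(gradual))  # True
print(slow_takeoff_holds(abrupt))   # False
```

The abrupt path does contain a 4-year window in which output doubles, but that window ends in the same year as the 1-year doubling, so under this reading it does not count as coming first.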

Why? Partly based on common sense. Whenever you can build a cool thing at time T, probably you could build a slightly less cool version at time T-1. And slightly less cool versions of cool things are still pretty cool, so there shouldn’t be many cases where a completely new and transformative thing starts existing without any meaningful precursors.

But also because this is how everything always works. [See explanations from Katja Grace and Nintil.]

Eliezer Yudkowsky's response:

Eliezer counters that although progress may retroactively look gradual and continuous when you know what metric to graph it on, it doesn’t necessarily look that way in real life by the measures that real people care about.

(one way to think of this: imagine that an AI’s effective IQ starts at 0.1 points, and triples every year, but that we can only measure this vaguely and indirectly. The year it goes from 5 to 15, you get a paper in a third-tier journal reporting that it seems to be improving on some benchmark. The year it goes from 66 to 200, you get a total transformation of everything in society. But later, once we identify the right metric, it was just the same rate of gradual progress the whole time.)
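The parenthetical's toy model is easy to tabulate. This short Python sketch uses the hypothetical 0.1-point starting IQ and yearly tripling from the example. The threshold of 100 is our own stand-in for "human level", not part of the original. It shows that every year is the same step on a log scale, even though the absolute jump in the year the threshold is crossed dwarfs every earlier one.

```python
import math
from typing import List, Optional


def tripling_trajectory(start: float = 0.1, years: int = 9,
                        factor: float = 3.0) -> List[float]:
    """Effective 'IQ' at the start of each year under constant tripling."""
    return [start * factor ** t for t in range(years + 1)]


def first_year_above(values: List[float], threshold: float) -> Optional[int]:
    """First year whose value exceeds `threshold` (hypothetical cutoff)."""
    for year, v in enumerate(values):
        if v > threshold:
            return year
    return None


traj = tripling_trajectory()
# On a log scale every year is an identical step of log10(3), about 0.477 ...
log_steps = [math.log10(b) - math.log10(a) for a, b in zip(traj, traj[1:])]
# ... while the absolute yearly gain itself triples every year.
abs_steps = [b - a for a, b in zip(traj, traj[1:])]

for year, (value, step) in enumerate(zip(traj, abs_steps)):
    print(f"year {year}: {value:8.1f} -> gain {step:8.1f}")

print(first_year_above(traj, 100))  # 7
```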

So Eliezer is much less impressed by the history of previous technologies than Paul is. He’s also skeptical of the “GDP will double in 4 years before it doubles in 1” claim, because of two contingent disagreements and two fundamental disagreements.

The first contingent disagreement: government regulations make it hard to deploy imperfect things, and non-trivial to deploy things even after they’re perfect. Eliezer has non-jokingly said he thinks AI might destroy the world before the average person can buy a self-driving car. Why? Because the government has to approve self-driving cars (and can drag its feet on that), but the apocalypse can happen even without government approval. In Paul’s model, sometime long before superintelligence we should have AIs that can drive cars, and that increases GDP and contributes to a general sense that exciting things are going on. Eliezer says: fine, what if that’s true? Who cares if self-driving cars will be practical a few years before the world is destroyed? It’ll take longer than that to lobby the government to allow them on the road.

The second contingent disagreement: superintelligent AIs can lie to us. Suppose you have an AI which wants to destroy humanity, whose IQ is doubling every six months. Right now it’s at IQ 200, and it suspects that it would take IQ 800 to build a human-destroying superweapon. Its best strategy is to lie low for a year. If it expects humans would turn it off if they knew how close it was to superweapons, it can pretend to be less intelligent than it really is. The period when AIs are holding back so we don’t discover their true power level looks like a period of lower-than-expected GDP growth - followed by a sudden FOOM once the AI gets its superweapon and doesn’t need to hold back.

So even if Paul is conceptually right and fundamental progress proceeds along a nice smooth curve, it might not look to us like a nice smooth curve, because regulations and deceptive AIs could prevent mildly-transformative AI progress from showing up on graphs, but wouldn’t prevent the extreme kind of AI progress that leads to apocalypse. To an outside observer, it would just look like nothing much changed, nothing much changed, nothing much changed, and then suddenly, FOOM.
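The deceptive-AI scenario can be made concrete with a toy model. The Python sketch below uses the hypothetical numbers from the example: IQ 200, doubling every six months, and a superweapon threshold of 800. As a simplification of our own, it assumes the AI displays a constant level until it crosses the threshold and then stops holding back. The function name and the "displayed" level are illustrative assumptions.

```python
from typing import List


def observed_capability(true_caps: List[int], reveal_at: int,
                        displayed: int) -> List[int]:
    """What outside observers see if the AI sandbags until `reveal_at`.

    Below the threshold the AI shows at most `displayed`; at or above it,
    it no longer needs to hide and shows its true capability.
    """
    return [c if c >= reveal_at else min(c, displayed) for c in true_caps]


# True capability, one entry per six months: 200, 400, 800, 1600.
true_caps = [200 * 2 ** k for k in range(4)]
seen = observed_capability(true_caps, reveal_at=800, displayed=200)

true_jumps = [b / a for a, b in zip(true_caps, true_caps[1:])]
seen_jumps = [b / a for a, b in zip(seen, seen[1:])]
print(seen)        # [200, 200, 800, 1600]
print(true_jumps)  # [2.0, 2.0, 2.0]: smooth underlying progress
print(seen_jumps)  # [1.0, 4.0, 2.0]: flat, then a sudden jump
```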

But even aside from this, Eliezer doesn’t think Paul is conceptually right! He thinks that even on the fundamental level, AI progress is going to be discontinuous. It’s like a nuclear bomb. Either you don’t have a nuclear bomb yet, or you do have one and the world is forever transformed. There is a specific moment at which you go from “no nuke” to “nuke” without any kind of “slightly worse nuke” acting as a harbinger.

He uses the example of chimps → humans. Evolution has spent hundreds of millions of years evolving brainier and brainier animals (not teleologically, of course, but in practice). For most of those hundreds of millions of years, that meant the animal could have slightly more instincts, or a better memory, or some other change that still stayed within the basic animal paradigm. At the chimp → human transition, we suddenly got tool use, language use, abstract thought, mathematics, swords, guns, nuclear bombs, spaceships, and a bunch of other stuff. The rhesus monkey → chimp transition and the chimp → human transition both involved the same ~quadrupling of neuron number, but the former was pretty boring and the latter unlocked enough new capabilities to easily conquer the world. The GPT-2 → GPT-3 transition involved centupling parameter count. Maybe we will keep centupling parameter count every few years, and most times it will be incremental improvement, and one time it will conquer the world.

But even talking about centupling parameter count is giving Paul too much credit. Lots of past inventions didn’t come by quadrupling or centupling something, they came by discovering “the secret sauce”. The Wright brothers (he argues) didn’t make a plane with 4x the wingspan of the last plane that didn’t work, they invented the first plane that could fly at all. The Hiroshima bomb wasn’t some previous bomb but bigger, it was what happened after a lot of scientists spent a long time thinking about a fundamentally different paradigm of bomb-making and brought it to a point where it could work at all. The first transformative AI isn’t going to be GPT-3 with more parameters, it will be what happens after someone discovers how to make machines truly intelligent.

(this is the same debate Eliezer had with Ajeya over the Biological Anchors post; have I mentioned that Ajeya and Paul are married?)

Recursive Self-Improvement

Yudkowsky on recursive self-improvement:

This is where I think Eliezer most wants to take the discussion. The idea is: once AI is smarter than humans, it can do a superhuman job of developing new AI. In his Intelligence Explosion Microeconomics paper, he writes about an argument he (semi-hypothetically) had with Ray Kurzweil about Moore’s Law. Kurzweil expected Moore’s Law to continue forever, even after the development of superintelligence. Eliezer objects:

"Suppose we were dealing with minds running a million times as fast as a human, at which rate they could do a year of internal thinking in thirty-one seconds, such that the total subjective time from the birth of Socrates to the death of Turing would pass in 20.9 hours. Do you still think the best estimate for how long it would take them to produce their next generation of computing hardware would be 1.5 orbits of the Earth around the Sun?"

That is: the fact that it took 1.5 years for transistor density to double isn’t a natural law. It’s pointing to a law that the amount of resources (most notably intelligence) that civilization focused on the transistor-densifying problem equalled the amount it takes to double it every 1.5 years. If some shock drastically changed available resources (by eg speeding up human minds a million times), this would change the resources involved, and the same laws would predict transistor density doubling in some shorter amount of time (naively 0.0000015 years, although realistically at that scale other inputs would dominate).
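The arithmetic behind the quote and the naive estimate is quick to check. Here is a minimal Python sketch. It assumes a Julian year of 365.25 days and a uniform million-fold speedup applied to every input, which is exactly the naive part of the estimate.

```python
SECONDS_PER_YEAR = 365.25 * 24 * 3600  # Julian year, ~31.56 million seconds
SPEEDUP = 1_000_000


def wall_clock_seconds(subjective_years: float, speedup: float = SPEEDUP) -> float:
    """Real time needed for `subjective_years` of thinking at `speedup`x."""
    return subjective_years * SECONDS_PER_YEAR / speedup


# One subjective year passes in about 31.6 real seconds.
print(round(wall_clock_seconds(1), 1))  # 31.6

# Naively scaling the 1.5-year Moore's-law doubling by the same factor:
naive_doubling_years = 1.5 / SPEEDUP
print(naive_doubling_years)            # 1.5e-06
print(round(wall_clock_seconds(1.5)))  # 47 (seconds)
```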

So when Paul derives clean laws of economics showing that things move along slow growth curves, Eliezer asks: why do you think they would keep doing this when one of the discoveries they make along that curve might be “speeding up intelligence a million times”?

(Eliezer actually thinks improvements in the quality of intelligence will dominate improvements in speed - AIs will mostly be smarter, not just faster - but speed is a useful example here and we’ll stick with it)

Christiano's response on recursive self-improvement:

Summary of my response: Before there is AI that is great at self-improvement there will be AI that is mediocre at self-improvement.

Powerful AI can be used to develop better AI (amongst other things). This will lead to runaway growth.

This on its own is not an argument for discontinuity: before we have AI that radically accelerates AI development, the slow takeoff argument suggests we will have AI that significantly accelerates AI development (and before that, slightly accelerates development). That is, an AI is just another, faster step in the hyperbolic growth we are currently experiencing, which corresponds to a further increase in rate but not a discontinuity (or even a discontinuity in rate).
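A toy version of hyperbolic growth shows how the growth rate can keep rising with no jump anywhere. As an illustrative assumption, not a model taken from Paul's post, let output y follow dy/dt = k * y**2. The solution, y(t) = 1 / (1/y0 - k*t), blows up at t = 1/(k*y0), and each doubling takes exactly half as long as the one before. With y0 = 1 and k = 0.02, the first doubling takes 25 years, roughly today's pace. The sequence then passes through a doubling under 4 years well before the first one under 1 year.

```python
from typing import List


def hyperbolic_output(t: float, y0: float = 1.0, k: float = 0.02) -> float:
    """Closed-form solution of dy/dt = k * y**2, valid for t < 1 / (k * y0)."""
    return 1.0 / (1.0 / y0 - k * t)


def doubling_times(y0: float = 1.0, k: float = 0.02, n: int = 6) -> List[float]:
    """Durations of successive doublings starting from level y0.

    Under dy/dt = k * y**2, going from level y to 2y takes 1 / (2 * k * y),
    so every doubling takes half as long as the one before it.
    """
    return [1.0 / (2 * k * y0 * 2 ** i) for i in range(n)]


print(doubling_times())         # [25.0, 12.5, 6.25, 3.125, 1.5625, 0.78125]
print(hyperbolic_output(25.0))  # ~2.0: output has doubled after 25 years
```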

The most common argument for recursive self-improvement introducing a new discontinuity seems to be: some systems “fizzle out” when they try to design a better AI, generating a few improvements before running out of steam, while others are able to autonomously generate more and more improvements. This is basically the same as the universality argument in a previous section.
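The "fizzle out" versus "keep going" distinction can be sketched with a toy model of our own, not from either author. In it, each improvement a system makes to itself enables a next improvement r times as large. For r < 1 the gains form a convergent geometric series with total first_gain / (1 - r); for r >= 1 they grow without bound. The function name and parameter values are illustrative assumptions.

```python
def cumulative_improvement(first_gain: float, r: float, steps: int) -> float:
    """Total gain after `steps` rounds if each gain is r times the previous one."""
    total, gain = 0.0, first_gain
    for _ in range(steps):
        total += gain
        gain *= r
    return total


# r < 1: totals level off near first_gain / (1 - r), i.e. the process fizzles.
for r in (0.5, 0.9, 0.99):
    print(r, round(cumulative_improvement(1.0, r, 5000), 3))

# r > 1: every extra round adds more than the last, with no ceiling.
print(cumulative_improvement(1.0, 1.1, 50), cumulative_improvement(1.0, 1.1, 100))
```

In this toy framing, Paul's reply corresponds to r rising gradually over time, so that systems with large but finite gains (r just below 1) show up before systems whose gains never run out.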

Continuous Progress on Benchmarks

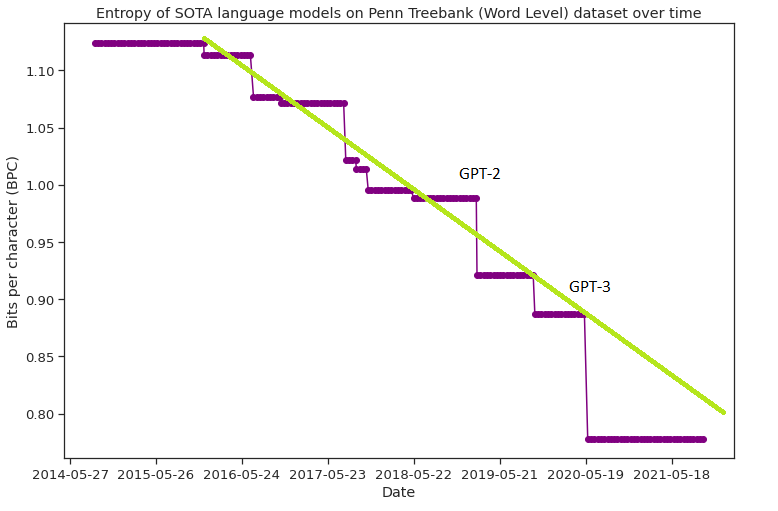

Matthew Barnett's response highlights relatively continuous progress by language models on the Penn Treebank benchmark, sparking three responses.

First, from Gwern:

The impact of GPT-3 had nothing whatsoever to do with its perplexity on Penn Treebank . . . the impact of GPT-3 was in establishing that trendlines did continue in a way that shocked pretty much everyone who'd written off 'naive' scaling strategies. Progress is made out of stacked sigmoids: if the next sigmoid doesn't show up, progress doesn't happen. Trends happen, until they stop. Trendlines are not caused by the laws of physics. You can dismiss AlphaGo by saying "oh, that just continues the trendline in Elo I just drew based on MCTS bots", but the fact remains that MCTS progress had stagnated, and here we are in 2021, and pure MCTS approaches do not approach human champions, much less beat them. Appealing to trendlines is roughly as informative as "calories in calories out"; 'the trend continued because the trend continued'. A new sigmoid being discovered is extremely important.
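Gwern's "stacked sigmoids" picture can be sketched in a few lines. In this illustrative Python model, each sigmoid is one paradigm. The logistic shape, unit heights, and evenly spaced midpoints are all assumptions. The sum of the sigmoids looks like a steady trend, but if the next sigmoid never arrives, the trend flattens.

```python
import math
from typing import Sequence


def logistic(t: float, midpoint: float, height: float = 1.0,
             steepness: float = 1.0) -> float:
    """One S-curve: a paradigm that starts slowly, takes off, then saturates."""
    return height / (1.0 + math.exp(-steepness * (t - midpoint)))


def stacked(t: float, midpoints: Sequence[float]) -> float:
    """Overall capability as the sum of one S-curve per paradigm."""
    return sum(logistic(t, m) for m in midpoints)


with_next = [0, 5, 10, 15]  # a new paradigm arrives every 5 time units
without_next = [0, 5, 10]   # the fourth paradigm never shows up

for t in range(0, 21, 5):
    print(t, round(stacked(t, with_next), 2), round(stacked(t, without_next), 2))
# With the fourth sigmoid the total keeps climbing by ~1 per 5 units;
# without it, progress between t = 15 and t = 20 is nearly zero.
```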

GPT-3 further showed completely unpredicted emergence of capabilities across downstream tasks which are not measured in PTB perplexity. There is nothing obvious about a PTB BPC of 0.80 that causes it to be useful where 0.90 is largely useless and 0.95 is a laughable toy. (OAers may have had faith in scaling, but they could not have told you in 2015 that interesting behavior would start at 𝒪(1b), and it'd get really cool at 𝒪(100b).) That's why it's such a useless metric. There's only one thing that a PTB perplexity can tell you, under the pretraining paradigm: when you have reached human AGI level. (Which is useless for obvious reasons: much like saying that "if you hear the revolver click, the bullet wasn't in that chamber and it was safe". Surely true, but a bit late.) It tells you nothing about intermediate levels. I'm reminded of the Steven Kaas line: “Why idly theorize when you can JUST CHECK and find out the ACTUAL ANSWER to a superficially similar-sounding question SCIENTIFICALLY?”
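Bits per character (BPC) converts to per-character perplexity as 2 ** BPC. This quick Python check uses only the three BPC values from the quote. It shows that the gap between "largely useless" (0.90) and "useful" (0.80) is a per-character perplexity change of only about 7%. That is consistent with Gwern's point that the number on its own gives no hint of where the capability thresholds lie.

```python
def char_perplexity(bpc: float) -> float:
    """Per-character perplexity implied by a bits-per-character score."""
    return 2.0 ** bpc


for label, bpc in (("laughable toy", 0.95), ("largely useless", 0.90), ("useful", 0.80)):
    print(f"{label:16s} BPC {bpc:.2f} -> perplexity {char_perplexity(bpc):.3f}")

# Relative perplexity gap between "largely useless" and "useful".
gap = char_perplexity(0.90) / char_perplexity(0.80) - 1
print(f"relative gap: {gap:.1%}")  # 7.2%
```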

Then from Yudkowsky:

What does it even mean to be a gradualist about any of the important questions like [the ones Gwern mentions], when they don't relate in known ways to the trend lines that are smooth? Isn't this sort of a shell game where our surface capabilities do weird jumpy things, we can point to some trend lines that were nonetheless smooth, and then the shells are swapped and we're told to expect gradualist AGI surface stuff? This is part of the idea that I'm referring to when I say that, even as the world ends, maybe there'll be a bunch of smooth trendlines underneath it that somebody could look back and point out. (Which you could in fact have used to predict all the key jumpy surface thresholds, if you'd watched it all happen on a few other planets and had any idea of where jumpy surface events were located on the smooth trendlines - but we haven't watched it happen on other planets so the trends don't tell us much we want to know.)

And Christiano:

[Yudkowsky's argument above] seems totally bogus to me.

It feels to me like you mostly don't have views about the actual impact of AI as measured by jobs that it does or the $s people pay for them, or performance on any benchmarks that we are currently measuring, while I'm saying I'm totally happy to use gradualist metrics to predict any of those things. If you want to say "what does it mean to be a gradualist" I can just give you predictions on them.

To you this seems reasonable, because e.g. $ and benchmarks are not the right way to measure the kinds of impacts we care about. That's fine, you can propose something other than $ or measurable benchmarks. If you can't propose anything, I'm skeptical.

On the question of why AI takeoff speeds matter in the first place:

If there’s a slow takeoff (ie gradual exponential curve), it will become obvious that some kind of terrifying transformative AI revolution is happening, before the situation gets apocalyptic. There will be time to prepare, to test slightly-below-human AIs and see how they respond, to get governments and other stakeholders on board. We don’t have to get every single thing right ahead of time. On the other hand, because this is proceeding along the usual channels, it will be the usual variety of muddled and hard-to-control. With the exception of a few big actors like the US and Chinese governments, and maybe the biggest corporations like Google, the outcome will be determined less by any one agent, and more by the usual multi-agent dynamics of political and economic competition. There will be lots of opportunities to affect things, but no real locus of control to do the affecting.

If there’s a fast takeoff (ie sudden FOOM), there won’t be much warning. Conventional wisdom will still say that transformative AI is thirty years away. All the necessary pieces (ie AI alignment theory) will have to be ready ahead of time, prepared blindly without any experimental trial-and-error, to load into the AI as soon as it exists. On the plus side, a single actor (whoever has this first AI) will have complete control over the process. If this actor is smart (and presumably they’re a little smart, or they wouldn’t be the first team to invent transformative AI), they can do everything right without going through the usual government-lobbying channels.

So the slower a takeoff you expect, the less you should be focusing on getting every technical detail right ahead of time, and the more you should be working on building the capacity to steer government and corporate policy to direct an incoming slew of new technologies.

Also Raemon on AI safety in a soft-takeoff world:

I totally think there are people who sort of nod along with Paul, using it as an excuse to believe in a rosier world where things are more comprehensible and they can imagine themselves doing useful things without having a plan for solving the actual hard problems. Those types of people exist. I think there's some important work to be done in confronting them with the hard problem at hand.

But, also... Paul's world AFAICT isn't actually rosier. It's potentially more frightening to me. In Smooth Takeoff world, you can't carefully plan your pivotal act with an assumption that the strategic landscape will remain roughly the same by the time you're able to execute on it. Surprising partial-gameboard-changing things could happen that affect what sort of actions are tractable. Also, dumb, boring ML systems run amok could kill everyone before we even get to the part where recursive self-improving consequentialists eradicate everyone.

I think there is still something seductive about this world – dumb, boring ML systems run amok feels like the sort of problem that is easier to reason about and maybe solve. (I don't think it's actually necessarily easier to solve, but I think it can feel that way, whether it's easier or not). And if you solve ML-run-amok-problems, you still end up dead from recursive-self-improving-consequentialists if you didn't have a plan for them.

Metaculus has a question about AI takeoff speeds:

This is the Metaculus forecasting question corresponding to Paul’s preferred formulation of hard/soft takeoff. Metaculans think there’s a 69% chance it’s true. But it fell by about 4% after the debate, suggesting that some people got won over to Eliezer’s point of view.

Conclusions from the debate:

They both agreed they weren’t going to resolve this today, and that the most virtuous course would be to generate testable predictions on what the next five years would be like, in the hopes that one of their models would prove obviously much more productive at this task than the other.

But getting these predictions proved harder than expected. Paul believes “everything will grow at a nice steady rate” and Eliezer believes “everything will grow at a nice steady rate until we suddenly die”, and these worlds look the same until you are dead.

I am happy to report that three months later, the two of them finally found an empirical question they disagreed on and made a bet on it. The difference is: Eliezer thinks AI is a little bit more likely to win the International Mathematical Olympiad before 2025 than Paul (under a specific definition of “win”). I haven’t followed the many many comment sub-branches it would take to figure out how that connects to any of this, but if it happens, update a little towards Eliezer, I guess.

Also this:

I didn’t realize this until talking to Paul, but “holder of the gradualist torch” is a relative position - Paul still thinks there’s about a 1/3 chance of a fast takeoff.