I'll start us off with the standard argument against donation splitting. We'll start with the (important!) assumption that you are trying to maximize[1] the amount of good you can do with your money. We'll also take for the moment that you are a small donor giving <$100k/year.

There is some charity that can use your first dollar to do the most good. The basic question this line of argument asks is: is there some amount of money within your donation budget that will cause the marginal effectiveness of a dollar to that charity to fall below that of the second-best charity?

For example, you could imagine that Acme Charity has a program that has only a $50k funding gap. After that, donations to Acme Charity would go towards another program.

The standard argument against donation splitting, which seems right to me, is that the answer to that question is "probably not."

[1]: Most definitions of effective altruism have language about maximizing ("as much as possible"). I personally do make some fuzzies-based donations, but do not count them towards my Giving What We Can Pledge.

Here's the donation splitting policy that I might argue for: instead of "donate to the charity that looks best to you", I'd argue for "donate to charities in the proportion that, if all like-minded EAs donated their money in that proportion, the outcome would be best".

Here's the basic shape of my argument: suppose there are 1000 EAs, each of whom will donate $1000. Suppose further there are two charities, A and B, and that the EAs are in agreement that (1) both A and B are high-quality charities; (2) A is better than B on the current margin; but (3) A will hit diminishing returns after a few hundred thousand dollars, such that the optimal allocation of the total $1M is $700k to A and $300k to B. What policy should each EA use to decide how to allocate their donation? It seems like the two sensible policies are:

- Donate $700 to A and $300 to B (donation splitting); or

- Don't donate all at the same time. Instead, over the course of giving season, keep careful track of how much A and B have received, and donate to whichever one is best on the margin. (In practice this will mean that the first few hundred thousand dollars go to A, and then A and B will each be receiving donations in some ratio such that they remain equally good on the margin.)

But if you don't have running counters of how much has been donated to A and B, the first policy is easier to implement. And both policies are better than the outcome where every EA reasons that A is better on the margin and all $1M goes to A.
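To make the comparison concrete, here is a minimal sketch of the toy example. The marginal-value curves are my own hypothetical choice, not something from the argument itself: A returns 2.0 units of good per dollar up to $300k and then declines linearly, while B returns a flat 1.0 per dollar. I picked them only so that the optimum lands at $700k to A and $300k to B. The function names (`value_a`, `sequential_greedy`, and so on) are likewise just illustrative.

```python
N_DONORS = 1000
GIFT = 1000  # dollars per donor
BUDGET = N_DONORS * GIFT

# Hypothetical curves, chosen so the optimum is $700k to A / $300k to B.
def marginal_a(x):
    """Good per extra dollar to A after it has received x dollars."""
    return 2.0 if x <= 300_000 else 2.0 - 2.5e-6 * (x - 300_000)

def marginal_b(x):
    """Good per extra dollar to B (assumed constant)."""
    return 1.0

def value_a(x):
    """Total good from x dollars to A (the integral of marginal_a)."""
    if x <= 300_000:
        return 2.0 * x
    extra = x - 300_000
    return 600_000 + 2.0 * extra - 1.25e-6 * extra ** 2

def value_b(x):
    """Total good from x dollars to B (the integral of marginal_b)."""
    return 1.0 * x

def total(to_a):
    """Total good when to_a dollars go to A and the rest of the budget to B."""
    return value_a(to_a) + value_b(BUDGET - to_a)

def sequential_greedy():
    """Policy 2: donors give one at a time to whichever charity is better on the margin."""
    to_a = to_b = 0
    for _ in range(N_DONORS):
        if marginal_a(to_a) > marginal_b(to_b):
            to_a += GIFT
        else:
            to_b += GIFT
    return to_a

all_to_a = total(BUDGET)              # everyone reasons "A is best" and gives to A
split_70_30 = total(700 * N_DONORS)   # policy 1: each donor gives $700 to A, $300 to B
greedy = total(sequential_greedy())   # policy 2: running counters
best_to_a = max(range(0, BUDGET + 1, GIFT), key=total)

print(f"all to A:      {all_to_a:,.0f}")
print(f"70/30 split:   {split_70_30:,.0f}")
print(f"greedy:        {greedy:,.0f}")
print(f"optimum to A:  ${best_to_a:,}")
```

Under these made-up curves, the split and the running-counter policy end up at essentially the same allocation, and both beat sending everything to A. How big the gap is depends entirely on the shape of the curves, which is the part nobody actually knows.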

Now, of course EAs are not a monolith and they have different views about which charities are good. But I observe that in practice, EAs' judgments are really correlated. Like I think it's pretty realistic to have a situation in which a large fraction of EAs agree that some charity A is the best in a cause area, with B a close second. (Is this true for AMF and Malaria Consortium, in some order?) And in such a situation, I'd rather that EAs have a policy that causes some fraction to be allocated to B, than a policy that causes all the money to be allocated to A.

Note that how this policy plays out in practice really does depend on how correlated your judgments are to those of other EAs. If I'm wrong and EAs' judgments are not very correlated, then donating all your budget to the charity that looks best to you seems like a good policy.

I like this position — I'm already not sure how much I disagree. Some objections that might be more devil's advocate-y or might be real objections:

- I agree correlation is important. I'm not sure how to define it and, once defined, whether it will be correlated enough in practice.

- Roughly speaking, what decision theory / unit of analysis are we using here? It seems like your opening statement assumes we can set the norm for all EA. Whereas I'm thinking more about what I'd recommend to an individual who asked for my opinion. I want to avoid unilaterally doing something that only pays off when everyone does it, unless I really think that everyone will do it.

Cool, yeah, I agree that "how much correlation" and "which decision theory" are important uncertainties/cruxes. Maybe to spell this out more, I think my argument requires several assumptions:

1. EAs' donations are quite correlated: the ways that different individual EA donors make decisions are correlated enough that, without communication/coordination between different donors, you'd end up with a large chunk of EAs donating to a small set of orgs, maybe so much so that an individual EA would look at those donations and be like "it would be better if the donations were more diffuse".

2. Large unit of analysis: we are discussing a policy recommendation for EA donors writ large, rather than how an individual donor should behave. Or, we are discussing what an individual donor should do, but in an evidential decision theory mindset where if the donor follows a given policy, that's evidence that other donors will as well. Or something like that. I generally find this confusing to think about.

3. Lack of successful communication/coordination: even supposing that EAs' donations are very correlated, if there were enough communication bandwidth -- e.g. if there were running donation counters on every org's webpage and all the EA donors paid attention to those counters -- then this correlation wouldn't pose a big problem. But in practice we don't have such good coordination.

4. Small EA donors collectively hit diminishing returns: e.g. if all EAs agreed that the best org were Charity A, then if all the EAs' donations went to Charity A then Charity A would be oversaturated (i.e. it would be better if the EAs were to donate more to the next best charity on the margin).

I'm happy to concede assumption 3. It seems possible that assumption 4 isn't true in some cases, but it's gotta be true for at least some cause areas, and I'm happy to drop it for now. Maybe a commenter could provide a useful analysis.

Points 1&2 both seem like important cruxes.

I think we should first deal with a conceptual question about what type of correlation we're interested in. Are we interested in a question of cross-cause splitting? Are we asking about correlation among all EAs? Or among donors who donate to a particular cause?

Ways JP would investigate the correlation question:

First approach: Do a research project where one asks GiveWell, Giving What We Can, etc about the correlation among donations they have visibility into.

Second approach: I think of, like, 5 friends who donate to the same cause, and we ask them where they're donating this year and where they donated last year. Maybe we use the CEA "where are you donating" post, or the "where are you donating" thread, which we could actually do within the course of this dialogue.

I think the relevant question of correlation is, like, is it enough to collectively hit diminishing returns? So for example, if there were zero of any kind of correlation, then the answer is clearly no, and if all EA donors were perfectly correlated, then it would depend on Assumption 4 above.

The truth is somewhere in the middle. To simplify, we can talk about correlation between cause areas (this means something like "lots of EAs decide to donate to AI safety, enough to collectively hit diminishing returns within the cause area, and they wish more of them had donated to farm animal welfare instead") and correlation within a cause area ("lots of EAs decide to donate to the Humane League, enough to collectively hit diminishing returns, and they wish more of them had donated to the Good Food Institute instead").

My guess is that correlation within a cause area is a bigger deal. Would you agree?

I agree that within a cause area seems like the natural unit of analysis. Across causes, I'm much more suspicious of donation splitting, basically for the reason that I think assumption 4 fails.

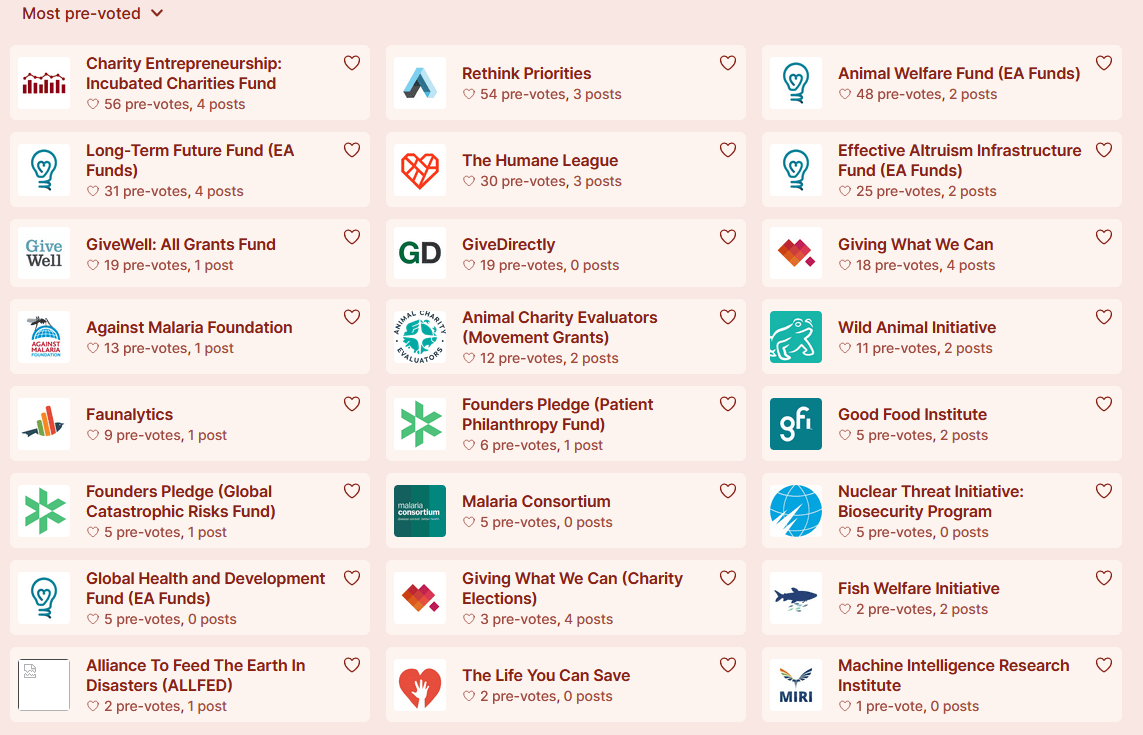

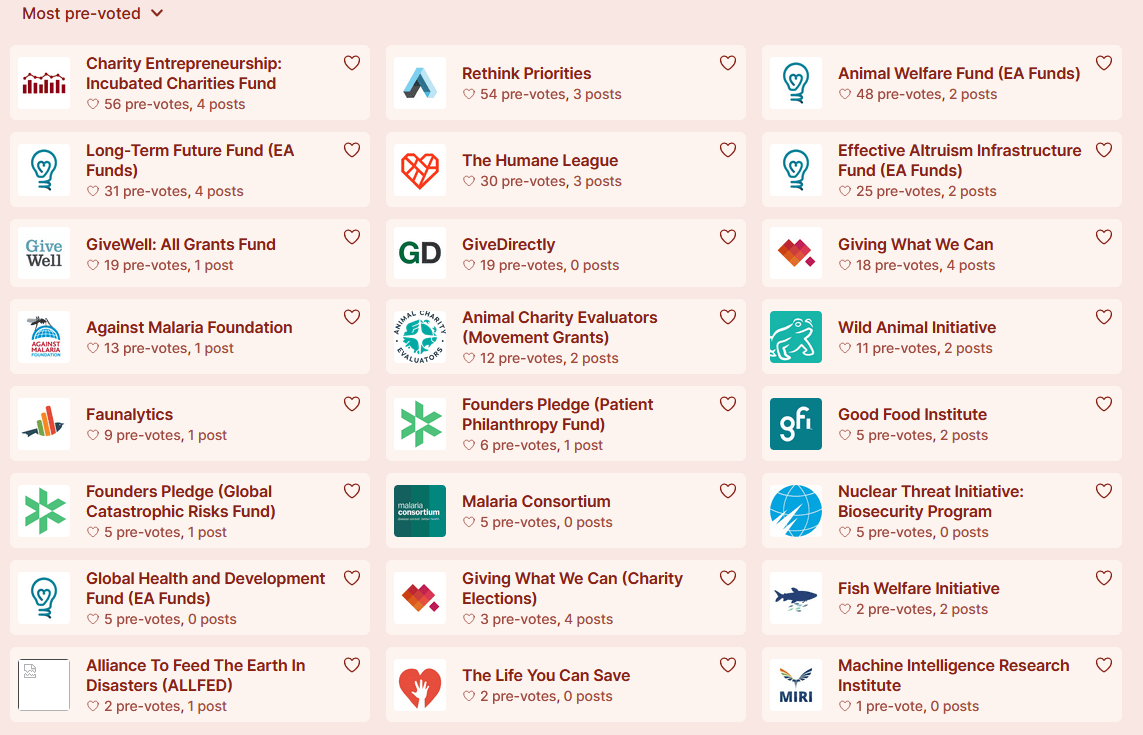

Another thing we can look at is the EA Forum donation election, where we already have "pre-votes". One basic question is, if small EA donors donated in proportion to these pre-votes, would that be good, or would some charities be oversaturated?

(This isn't exactly the right question because the set of EA donors voting in the election will be more correlated than the set of all EA donors, but it's a start.)

Here's a very hacky BOTEC approach we worked out via live collaboration:

- We can use an old estimate of EA Funds' amount of donations processed, and this summary of how much the LTFF got this year, to arrive at a very rough estimate that charities will receive $50,000 per pre-vote.

- We then can guesstimate that this giving season CE will get ~$3M.

- From this we agree on a wild guess that this would leave CE in a "diminishing marginal returns but not severely" situation.

Over the course of talking to you/thinking about this, I think I've gone from "correlation between EA donors is a medium-to-large problem" to "correlation between EA donors is a small-to-medium problem". (I do still feel kinda conceptually confused about how to think about correlation and have a ton of uncertainty.) But probably(?) it would be better if donations to EA orgs were spread more uniformly than the above pre-votes.

A shift in my thinking from this correlation conversation is I've gone from thinking about correlation as a number where it needs to be pretty high to make Eric's argument go through, to:

Is the amount of current donation splitting plus correlation enough that in practice "EA should" donation split more?

The answer to which seems quite plausibly to be yes.

Let's grant for a moment that we believe that currently:

- CE's Incubated Charities Fund is the best target for donations

- After Giving Season this year, CE will probably have done well enough to hit diminishing marginal returns enough to lower it beneath GiveWell's All Grants Fund

I want to defend for the moment the position that you should donate 100% to the All Grants Fund.

Reasoning:

- It's the thing which will causally have the most impact.

- With the situation as you predict it to be, the world where you donate 100% to the AGF will be a better world than the world where you donate 70:30 to CE and the AGF.

- There's not enough (correlation!) evidence to make an evidential or other non-causal decision theorist deviate from the causal strategy.

- Like, in practice there are a bunch of messy humans with pretty different views in pretty different situations weighing pretty different concerns.

Yeah, I think that probably makes sense in a context where you're an individual donor who knows what strategies the other donors are using (whether they're splitting or not, and whether they're thinking about oversaturation or not). But I also think that:

- Insofar as we're in the business of making a recommendation/best guess about how EA donors as a whole ought to behave, and people are listening to us, then coordinating on some sort of mild donation splitting strategy would be reasonable.

- Let's say you're worried that your favorite charity will get oversaturated and so you decide to donate to your second-favorite charity. Are you right, or will other donors reason similarly to you and so your favorite charity will be underfunded? I guess this is just a matter of how much correlation you expect, but this time the thing that matters is correlation in meta-level strategy rather than object level charity opinions.

- (But this argument feels too meta in a way that I feel like is detached from reality, so I probably don't endorse it.)

> Insofar as we're in the business of making a recommendation/best guess about how EA donors as a whole ought to behave, and people are listening to us, then coordinating on some sort of mild donation splitting strategy would be reasonable.

I am kinda interested in a proposal here. I think if you could coordinate with a large enough group of donors, then I do think the donation splitting procedure in Eric's opening dialogue comment would do better than independent actions. Given that this dialogue is a public discussion, this post does seem like a good opportunity to do so.

I haven't (within the time bounds of this dialogue) come up with a specific proposal, but I'd encourage commenters to make them. Maybe an assurance contract?

keller_scholl @ 2023-11-30T01:02 (+17)

This is a good discussion, but I think that you're missing the strongest argument, in this context, against donation-splitting: we're not in a one-shot. Within the context of a one-shot, it makes much more sense to donation-split. But there's a charity that I donate to in part because I am convinced that they are very financially constrained (iteration impact). Furthermore, donors can respond to each other within a year. If most EAs give around December/January, OpenPhil and GWWC can distribute in February, after hearing from relevant orgs how much funding they received. If a charity is credible in saying that they're under their expected funding, again, people can donate in response. So in practice I don't expect donation splitting to have that positive an effect on charity financing uncertainty, particularly compared to something like multi-year commitments.

Vasco Grilo @ 2023-12-01T10:37 (+3)

That makes a lot of sense, Keller! In addition, donation splitting seems to make the most sense within cause areas, but diminishing returns here can be mitigated by donating to funds (e.g. Animal Welfare Fund) instead of particular charities (e.g. The Humane League).

JP Addison @ 2023-11-30T13:28 (+1)

Seems reasonable.

Andrew Gimber @ 2023-11-29T07:09 (+7)

Thanks for this!

If trying to avoid diminishing marginal returns within a cause area was the only reason for donation splitting, then I think pooling donations in a managed fund could be a more effective way of coordinating. Fund managers can specialize in researching where the highest-marginal-value funding gaps are.

But there’s also a funging argument that, given the existence of large funds that are trying to do this, it matters less exactly which high-impact charities smaller donors choose. For example, if smaller donations were suboptimally allocated across GiveWell’s top charities, the GiveWell Top Charities Fund would attempt to balance things out.