Consolidation of EA criticism?

By Joseph @ 2024-09-29T16:51 (+25)

Does some kind of consolidated FAQ of critiques of effective altruism exist?[1] That is my question in one sentence. The following paragraphs are just context and elaboration.

Every now and then I come across some discussion in which effective altruism is critiqued. Some of these critiques are of the EA community as it currently exists (such as a critique of insularity) and some are critiques of deeper ideas (such as inherent difficulties with measurement). But in general I find them to be fairly weak critiques, and often they suggest that the person doesn't have a strong grasp of various EA ideas.

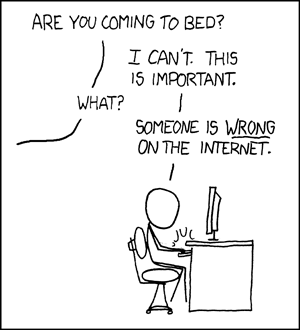

I found myself wishing that there was some sort of FAQ (A Google Doc? A Notion page?) that can be easily linked to. I'd like to have something better than telling people to read these three EA forum posts, and this Scott Alexander piece, and those three blogs, and these fourteen comment threads.

While I don't endorse anyone falling down the rabbit hole of arguing on the internet, it would be nice to have a consolidated package of common critiques and responses all in one place. It would be helpful for refuting common misconceptions. I found Dialogues on Ethical Vegetarianism to be a helpful consolidation of common claims and rebuttals, and there have been blogs that served a similar purpose for the development/aid world; I'd love to have something similar for effective altruism. If nothing currently exists, then I might just end up creating a shared resource with a bunch of links to serve as this sort of FAQ.

Below are a few quotes from several different people in a recent online discussion I observed, which sparked this idea of a consolidated FAQ. I have made minor changes in wording to preserve anonymity.[2] Many of these are not particularly well-thought-out critiques, and they suggest that the writers have a fairly inaccurate or simplified view of EA.

Any philosophy or movement with loads of money and that preaches moral superiority and is also followed by lots of privileged white guys lacking basic empathy I avoid like the plague.

EA is primarily a part of the culture of Silicon Valley's wealthy tech people who want warm fuzzy feelings and to feel like they're doing something good. Many charity evaluating organizations existed before EA, so it is not a concept that Effective Altruism created.

lives saved per dollar is a very myopic and limiting perspective.

EA has been promoted by some of the most ethically questionable individuals in recent memory.

Using evidence to maximize positive impact has been at the core of some horrific movements in the 20th century.

Improving the world seems reasonable in principle, but who gets to decide what counts as positive impact, and who gets to decide how to maximize those criteria? Will these be the same people who amassed resources through exploitation?

One would be hard pressed to find specific examples of humanitarian achievements linked to EA. It is capitalizing on a philosophy rather than implementing it. And philosophically it’s pretty sophomoric: just a bare bones Anglocentric utilitarianism. So it isn't altruistic or effective.

In a Marxist framework, in order to amass resources you exploit labour and do harm. So wouldn't it be better to not do harm in amassing capital, rather than 'solve' social problems with capital you earned by exploiting people and creating social problems?

EA is often a disguise for bad behavior without evaluating the root/source problems that created EA: a few individuals having the majority of wealth, which occurred by some people being highly extractive and exploitative toward others. If someone steals your land and donates 10% of their income to you as an 'altruistic gesture' while still profiting from their use of your land, the fundamental imbalance is still there. EA is not a solution.

It's hard to distinguish EA from the fact that its biggest support (including the origin of the movement) is from the ultra rich. That origin significantly shapes the movement.

It’s basically rehashed utilitarianism with all of the problems that utilitarianism has always had. But EA lacks the philosophical nuance or honesty.

[1] Yes, I know that EffectiveAltruism.org has a FAQ, but I'm envisioning something a bit more specific and detailed. So perhaps a more pedantic version of my question would be "Does some kind of consolidated FAQ of critiques of effective altruism exist, aside from the FAQ on EffectiveAltruism.org?"

[2] If for some reason you really want to know where I read these, send me a private message and I'll share the link with you.

NunoSempere @ 2024-09-29T20:38 (+58)

The EA Forum has tags. The one for criticisms of effective altruism is here: https://forum.effectivealtruism.org/topics/criticism-of-effective-altruism

Beyond that, here are some criticisms I've heard or made. Hope it helps:

Preliminaries:

- EA is both a philosophy and a social movement/group of existing people. Defenders tend to defend the philosophy and in particular the global health part, which is more unambiguously good. However, many of the more interesting things happen in the more speculative parts of the movement.

- A large chunk of non-global health EA or EA-adjacent giving is controlled by Open Philanthropy. Alternatively, there needs to be a name for "the community around Open Philanthropy and its grantees" so that people can model it. Hopefully this sidesteps some definitional counterarguments.

Criticism outlines:

- Open Philanthropy has created a class of grants officers who, by dint of having very high salaries, are invested in Open Philanthropy retaining its current giving structure.

- EA seduces some people into believing that they would be cherished members, but then leaves them unable to find jobs and in a worse position than they would otherwise have been in had they built their career capital elsewhere. cf. https://forum.effectivealtruism.org/posts/2BEecjksNZNHQmdyM/don-t-be-bycatch

- EA the community is much more of a pre-existing clique and mutual admiration society than its emphasis on the philosophy when presenting itself would indicate. This is essentially deceptive, as it leads prospective members, particularly neurodivergent ones, to have incorrect expectations. cf. https://forum.effectivealtruism.org/posts/2BEecjksNZNHQmdyM/don-t-be-bycatch

- It's amusing that the Center for Effective Altruism has taken a bunch of the energy of the EA movement, but itself doesn't seem to be particularly effective cf. https://nunosempere.com/blog/2023/10/15/ea-forum-stewardship/

- EA has tried to optimize movement building naïvely, but its focus on metrics has led it to pursue the most cost-effective interventions for the wrong thing, in a way which is self-defeating cf. https://forum.effectivealtruism.org/posts/xomFCNXwNBeXtLq53/bad-omens-in-current-community-building

- Worldview diversification is an ugly prioritization framework that generally doesn't follow from the mathematical structure of anything but rather from political gerrymandering cf. https://nunosempere.com/blog/2023/04/25/worldview-diversification/

- Leadership strongly endorsed FTX, which upended many of the rank and file's plans when it turned out to be a fraud and its promised funding was recalled

- EA has a narrative about how it searches for the best interventions using tools like cost-effectiveness analyses. But for speculative interventions, you need a lot of elbow grease and judgment calls, which leave a lot of degrees of freedom. This amplifies/enables clique dynamics.

- Leaders have been somewhat hypocritical around optimizing strongly, with a "do what I say not what I do" attitude towards deontological constraints. cf. https://forum.effectivealtruism.org/posts/5o3vttALksQQQiqkv/consequentialists-in-society-should-self-modify-to-have-side

- The community health team lacks many of the good qualities of a (US) court, such as the division of powers between judge, jury and executioner, or the ability to confront one's accuser, or even to know what one has been accused of. It is not resilient to adversarial manipulation, and privileges the first party when both have strong emotions.

- EA/OP doesn't really know how to handle the effects of throwing large amounts of money on people's beliefs. Throwing money at a particular set of beliefs makes it gain more advocates and harder to update away from it. Selection effects will apply at many levels. cf. https://nunosempere.com/blog/2023/01/23/my-highly-personal-skepticism-braindump-on-existential-risk/

- EA is trapped in a narrow conceptual toolkit, which makes critics very hard to understand/slightly schizophrenic once they step away from that toolkit. cf. Milan Griffes

Finally, for global health, something which keeps me up at night is the possibility that sub-Saharan Africa is trapped in a Malthusian equilibrium, where further aid only increases the population, which increases suffering.

NunoSempere @ 2024-09-29T21:53 (+9)

Here are some caveats/counterpoints:

- EA/OP does give large amounts of resources to areas that others find hard to care about, in a way which does seem more earnest & well-meaning than many other people in society

- The alternative framework in which to operate is probably capitalism, which is not perfectly aligned with human values either.

- There is no evil mustache-twirling mastermind. To the extent these dynamics arise, they do so out of some reasonably understandable constraints, like having a tight-knit group of people

- In general, it's pretty harsh to just write a list of negative things about someone/some group

- It's much easier to point out flaws than to operate in the world

- There are many things to do in the world, and limited competent operators to throw at problems. Some areas will just see less love & talent directed to them. There is some meta-prioritization going on in a way which broadly does seem kind of reasonable.

AnonymousTurtle @ 2024-09-29T23:08 (+8)

Another important caveat is that the criticisms you mention are not common from people evaluating the effective altruism framework from the outside when allocating their donations or orienting their careers.

The criticisms you mention come from people who have spent a lot of time in the community, and usually (but not exclusively) from those of us who have been rejected from job applications, denied funding, or had bad social experiences/cultural fit with the social community.

This doesn't necessarily make them less valid, but seems to be a meaningfully different topic from what this post is about. Someone altruistically deciding how much money to give to which charity is unlikely to be worried about whether they will be seduced into believing that they would be cherished members of a community.

People evaluating effective altruism "from the outside" instead mention things like the paternalism and unintended consequences, that it doesn't care about biodiversity, that quantification is perilous, that socialism is better, or that capitalism is better.

Note that I do agree with many of your criticisms of the community[1], but I believe it's important to remember that the vast majority of people evaluating effective altruism are not in the EA social community and don't care much about it, and we should probably flag our potential bias when criticizing an organization after being denied funding or rejected from it (while still expressing that useful criticism.)

[1] I would also add Ben Kuhn's "pretending to try" critique from 11 years ago, which I assume shares some points with your unpublished "My experience with a Potemkin Effective Altruism group"

Josh Piecyk 🔹 @ 2024-10-02T00:58 (+3)

I found one unpopular EA post discussing your last point, the Malthusian risk involved with global health aid in sub-Saharan Africa, and I'm unsure why this topic isn't discussed more frequently on this forum. The post also mentions a study finding that East Africa may currently be in a Malthusian trap, such that a charity contributing to population growth in this region could have negative utility and be doing more harm than good.

NunoSempere @ 2024-10-03T09:39 (+2)

Seems like a pretty niche worry, I wouldn't read too much into it not being discussed much. It's just that if true it does provide a reason to discount global health and development deeply.

DPiepgrass @ 2024-09-29T21:43 (+19)

I haven't seen such a resource. It would be nice.

My pet criticism of EA (forums) is that EAs seem a bit unkind, and that LWers seem a bit more unkind and often not very rationalist. I think I'm one of the most hardcore EA/rationalists you'll ever meet, but I often feel unwelcome when I dare to speak.

Like:

- I see somebody has a comment with -69 karma. An obvious outsider asking a question with some unfair assumptions about EA. Yes, it was brash and rude, but no one but me actually answered him.

- I write an article (that is not critical of any EA ideas) and, after many revisions, ask for feedback. The first two people who come along downvote it, without giving any feedback. If you downvote an article with 104 points and leave, it means you dislike it or disagree. If you downvote an article with 4 points and leave, it means you dislike it, you want the algorithm to hide it from others, you want the author to feel bad, and you don't want them to know why. If you are not aware that it makes people feel bad, you're demonstrating my point.

- I always say what I think is true and I always try to say it reasonably. But if it's critical of something, I often get a downvote instead of a disagree vote (often without comment).

- I describe a pet idea that I've been working on for several years on LW (I built multiple web sites for it with hundreds of pages, published NuGet packages, the works). I think it works toward solving an important problem, but when I share it on LW the only people who comment say they don't like it, and sound dismissive. To their credit, they do try to explain to me why they don't like it, but they also downvote me, so I become far too distraught to try to figure out what they were trying to communicate.

- I write a critical comment (hypocrisy on my part? Maybe, but it was in response to a critical article that simply assumes the worst interpretation of what a certain community leader said, and then spends many pages discussing the implications of the obvious trueness of that assumption.) This one is weird: I get voted down to -12 with no replies, then after a few hours it's up to 16 or so. I understand this one―it was part of a battle between two factions of EA―but man that whole drama was scary. I guess that's just reflective of Bay Area or American culture, but it's scary! I don't want scary!

Look, I know I'm too thin-skinned. I was once unable to work for an entire day due to a single downvote (I asked my boss to take it from my vacation days). But wouldn't you expect an altruist to be sensitive? So, I would like us to work on being nicer, or something. Now if you'll excuse me... I don't know how I'll get back into a working mood so I can get Friday's work done by Monday.

DPiepgrass @ 2024-09-29T22:19 (+4)

Also: critical feedback can be good. Even if painful, it can help a person grow. But downvotes communicate nothing to a commenter except "f**k you". So what are they good for? Text-based communication is already quite hard enough without them, and since this is a public forum I can't even tell if it's a fellow EA/rat who is voting. Maybe it's just some guy from SneerClub―but my amygdala cannot make such assumptions. Maybe there's a trick to emotional regulation, but I've never seen EA/rats work that one out, so I think the forum software shouldn't help people push other people's buttons.

Joseph Lemien @ 2024-09-29T22:54 (+2)

For what it is worth, you are not alone in feeling bad when you get downvotes. People have described me as a fairly confident person, and I view myself as pretty level-headed, but if I get a disagree vote without any comment or response I am also perplexed/frustrated/irritated. I often think something along the lines of "there is nothing in this comment that is stating a claim or making an argument; what could a person disagree with?"

I do wish there were a way to "break" the habit that EA forum users have to conflate up/down votes and agree/disagree votes.

Theoretically, if there was some sort of option for a user to "hide" votes (maybe on all content, or maybe just on the user's own content), do you think that would minimize the negative feelings you have relating to votes?

Ulrik Horn @ 2024-09-30T03:04 (+6)

First of all, I would be careful about seeking to refute all criticisms; I think we should a priori be agnostic about whether each one is true. After carefully investigating a criticism, we can then see whether there is work for us to do or whether we should seek to refute it. Anything else would be in stark opposition to the very principles we might seek to defend. To that end, another source of criticism which for some reason is often not framed as such is the community health surveys: these capture insiders' largest problems with the ideas and, more frequently, with various aspects of the community. To quote the latest such survey, with its excellent and careful analysis:

- Reasons for dissatisfaction with EA:

  - A number of factors were cited a similar number of times by respondents as Very important reasons for dissatisfaction, among those who provided a reason: Cause prioritization (22%), Leadership (20%), Justice, Equity, Inclusion and Diversity (JEID, 19%), Scandals (18%) and excessive Focus on AI / x-risk / longtermism (16%).

  - Including mentions of Important (12%) and Slightly important (7%) factors, JEID was the most commonly mentioned factor overall.

Evan_Gaensbauer @ 2024-09-30T01:04 (+6)

I'm working on some such resources myself. Here's a link to the first one, a list (complete up to now) of posts in the still-ongoing series on the blog Reflective Altruism.

https://docs.google.com/document/d/1JoZAD2wCymIAYY1BV0Xy75DDR2fklXVqc5bP5glbtPg/edit?usp=drivesdk

Richard Y Chappell🔸 @ 2024-09-30T00:19 (+6)

I attempt to survey - and address - what I see as the main criticisms in my academic paper, 'Why Not Effective Altruism?' (summarized here). But it's not as comprehensive as an online FAQ could be.

jordanve @ 2024-10-01T09:14 (+3)

I love that you shared this. I've just finished reading it, you've done a fantastic job. Thank you for so clearly distilling the problems with such widespread objections. My highlights were the revolutionary's dilemma, EA as the minimisation of abandonment, the reputational threat that EA poses to traditional altruists, and the political critique.

titotal @ 2024-10-01T11:44 (+5)

The best EA critic is David Thorstad; his work is compiled at "Reflective Altruism". I also have a lot of critiques that I post here as well as on my blog. There are plenty of other internal EA critics you can find with the criticisms tag. (I'll probably add to this and make it its own post at some point.)

With regard to AI x-risk in particular, there are a few places where you can find frequent critiques. These are not endorsements or anti-endorsements. I like some of them and dislike others. I've ranked them in rough order of how convincing I would expect them to be for the average EA (most convincing first).

First, Magnus Vinding has already prepared an anti-foom reading list, compiling arguments against the "AI foom" hypothesis. His other articles on the subject of foom are also good.

AI Optimism, by Nora Belrose and Quintin Pope, argues that AI will be naturally helpful, by looking at present-day systems and debunking poor x-risk arguments.

The AI Snake Oil blog and book, by computer scientists Arvind Narayanan and Sayash Kapoor, which try to deflate AI hype.

Gary Marcus is a psychologist who has been predicting that deep learning will hit a wall since before the genAI boom, and continues to maintain that position. Blog, Twitter

Yann LeCun is a prestigious deep learning expert who works for Meta AI and is strongly in favour of open source AI and anti-doomerism.

Emily Bender is a linguist and the lead author on the famous "stochastic parrots" paper. She hosts a podcast, "Mystery AI Hype Theater 3000", attacking AI hype.

The effective accelerationists (e/accs) like Marc Andreessen are strongly "full steam ahead" on AI. I haven't looked into them much (they seem dumb) so I don't have any links here.

Nirit Weiss-Blatt runs the AI Panic blog, another anti-AI-hype blog.

The old Tumblr user SU3SU2SU1 was a frequent critic of rationalism and MIRI in particular. Sadly it's mostly been deleted, but his critique of HPMOR has been preserved here

David Gerard is mainly a cryptocurrency critic, but has been criticizing rationalism and EA for a very long time, and runs the "pivot to AI" blog attacking AI hype.

Tech Won't Save Us is a left-wing podcast which attacks the tech sector in general, with many episodes on EA and on AI x-risk figures generally.

Timnit Gebru is the ex-head of AI ethics at Google. She strongly dislikes EA. She often associates with the significantly less credible Émile Torres.

Émile Torres is an ex-EA who is highly worried about longtermism. They are very disliked here for a number of questionable actions.

r/sneerclub has massively dropped off in activity but has been mocking rationalism for more than a decade now, and as such has accumulated a lot of critiques.

Jason @ 2024-09-30T00:07 (+2)

I think one would have to be careful here -- there's a risk of focusing on weak or poorly-presented criticisms (perhaps unintentionally and/or for generally valid reasons). Highlighting bad criticisms and then demolishing them publicly could make the EA reader overconfident.

Other criticisms may be ill-suited to meaningful responses in a brief FAQ -- you're unlikely to say anything in a few hundred words that will update that Marxist critic.

Finally, there probably isn't (and shouldn't be) a "party line" response to many criticisms. One can, for instance, think billionaire philanthropy is inherently problematic (or not) and still think taking the billionaire bucks is better than the alternatives. Writing a response to some criticisms that fairly represents a range of community views without being wishy-washy may be challenging.

None of these are reasons not to do it, just potential issues and limitations.