Report: Artificial Intelligence Risk Management in Spain

By JorgeTorresC, Jaime Sevilla, Guillem Bas, Roberto Tinoco, Mónica Ulloa @ 2023-06-15T16:08

This is a linkpost to https://riesgoscatastroficosglobales.com/articulos/informe-gestin-de-riesgos-de-la-inteligencia-artificial-en-espaa

Download full report

If you are interested in attending the presentation of our research findings on June 23, 2023 at 15:00 (GMT+1), sign up at the following link.

Executive Summary

Artificial Intelligence (AI) is advancing rapidly and brings significant global risks. To manage these risks, the European Union is preparing a regulatory framework that will be tested for the first time in a sandbox hosted by Spain. In this report, we review the risks that must be considered to govern AI effectively and discuss how the European Regulation can be successfully implemented.

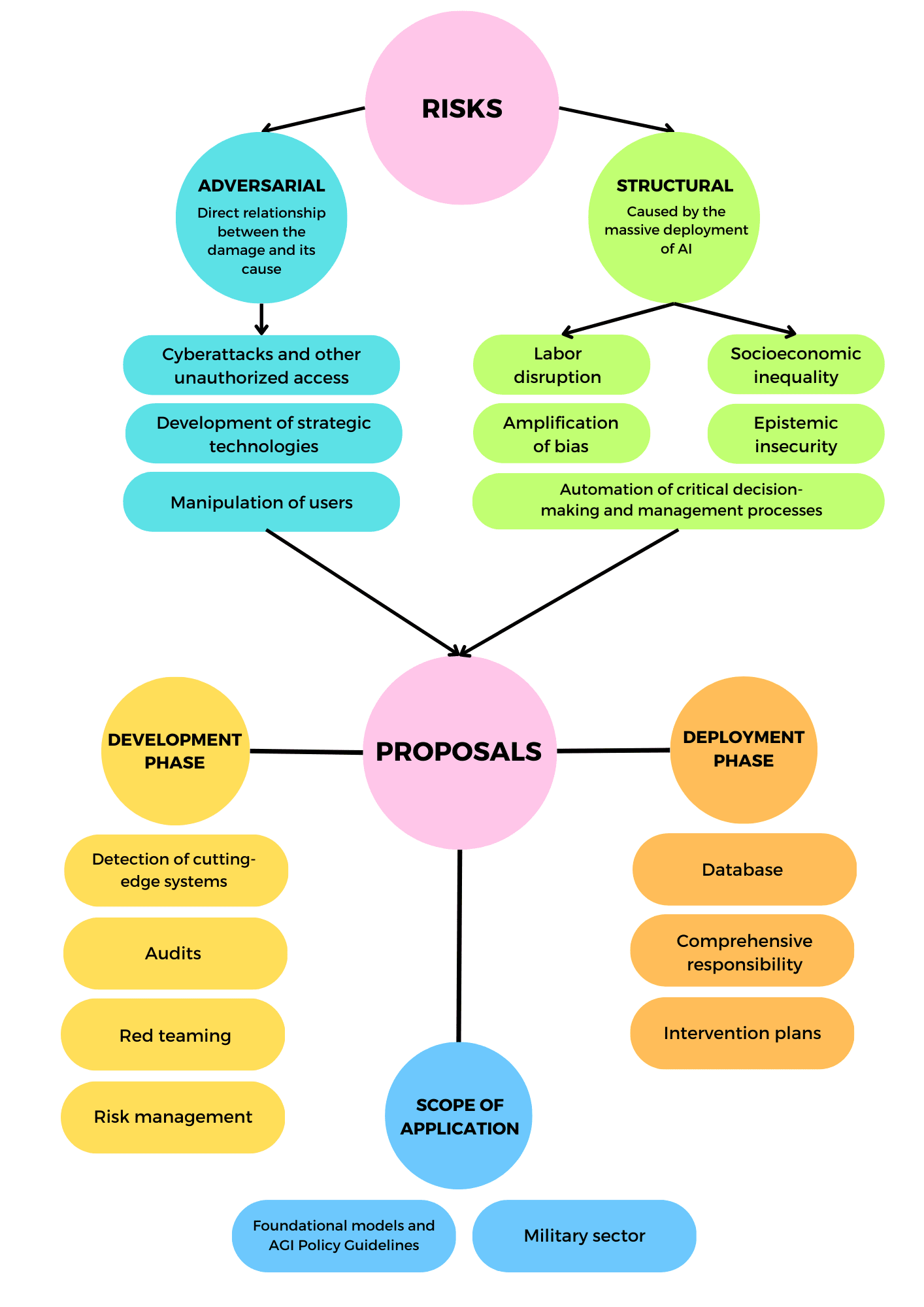

To facilitate the analysis, we have classified AI-related risks into adversarial and structural categories. The first group includes risks where there is a direct relationship between the harm and its cause. Specifically, we have identified two potential sources: malicious actors who intend to misuse AI, and AI systems themselves, which can act in unintended ways if they are not aligned with human objectives. Structural risks, by contrast, are those caused by the widespread deployment of AI; here we focus on the collateral effects that such technological disruption can have on society.

Regarding adversarial risks, we have focused on three types of threats: (i) cyberattacks and unauthorized access, (ii) development of strategic technologies, and (iii) user manipulation. Cyberattacks and unauthorized access involve using AI to carry out offensive cyber operations in order to obtain resources. The development of strategic technologies refers to the misuse of AI to gain competitive advantages in the military or civilian sphere. User manipulation involves using AI to apply persuasion techniques or to present biased or false information.

In terms of structural risks, we focus on five: (i) labor disruption, (ii) economic inequality, (iii) amplification of biases, (iv) epistemic insecurity, and (v) automation of critical decision-making and management processes. Labor disruption entails massive job loss due to automation. Economic inequality refers to the possibility that the accumulation of data and computing power helps AI developers concentrate wealth and power. Amplification of biases concerns the biases that algorithms may incorporate and reproduce in their decision-making. Epistemic insecurity refers to AI making it harder to distinguish true from false information, which can undermine a country's socio-political stability and proper functioning. Finally, the automation of critical processes involves handing over command and control of strategic infrastructure to AI, increasing the risk of accidents.

Building on this analysis of AI risks, we make nine recommendations to strengthen the implementation of the EU AI Act, especially in view of the sandbox being developed in Spain. The proposals are divided into three categories: measures for the development phase of AI systems, measures for the deployment phase, and conceptual clarifications.

Regarding the measures for the development phase, we prioritize four: (i) detection and governance of cutting-edge systems, using training compute as an indicator of a model's capabilities; (ii) audits, with special emphasis on independent evaluations of the model; (iii) red teaming exercises to detect potential misuses and other risks; and (iv) strengthening risk management systems.

To implement these measures, we recommend that public authorities carry out a systematic analysis of the European registry of AI systems, paying particular attention to those that use the most computing power. Frontier models must be subjected to third-party audits focused on model evaluation, while other high-risk systems should pass stronger internal audits led by a dedicated function within the company. In parallel, we recommend carrying out red teaming exercises through a network of professionals to identify risks and test responses. We also ask that these practices feed into a comprehensive and diligent risk management system.

Regarding the deployment phase, we present three proposals: (i) collecting serious incidents and risks associated with the use of high-risk systems in a database; (ii) reinforcing the responsibility of providers to maintain the integrity of their AI systems along the value chain; and (iii) developing intervention plans during post-market monitoring.

Specifically, we propose encouraging transparency and sharing lessons learned through a database that promotes collective learning and supports prevention efforts. We also suggest security measures that original providers can adopt to prevent alterations and misuse, and we lay out safeguards and plans to ensure that harmful AI systems are detected and can be adjusted or withdrawn.

Finally, we call for (i) including foundation models in the scope of the Act and (ii) considering military applications even though they are excluded from the Regulation. For foundation models, we suggest applying the obligations required for high-risk systems, along with all the measures presented here. As for military uses, we urge the development of general norms and principles aligned with international humanitarian law.

Acknowledgments

Special thanks for their assistance and feedback to Pablo Villalobos, Pablo Moreno, Toni Lorente, José Hernández-Orallo, Joe O’Brien, Rose Hadshar, Risto Uuk, Samuel Hilton, Charlotte Siegmann, Javier Prieto, Jacob Arbeid and Malou Estier.