Is EA prepared for an influx of new, medium-sized donors?

By Nathan Young @ 2025-12-09T13:26 (+76)

This is a linkpost to https://nathanpmyoung.substack.com/p/is-ea-prepared-for-an-influx-of-new

Let's imagine a story.

- I am a mid- to upper-ranking member of OpenAI or Anthropic.

- I offload some of my shares and wish to give some money away.

- If I want to give to a specific charity or Coefficient, fair enough.

- If I am so wealthy or determined that I can set up my own foundation, also fair enough.

- What do I do if I wish to give away money effectively, but don't agree with Coefficient’s processes, and haven't done enough research to have chosen a specific charity?

Is EA prepared to deal with these people?

Notably, to the extent that such people are predictable, I feel like we should have solutions before they turn up, not after.

What might some of these solutions look like, and which of them currently exist?

Things that currently exist

Coefficient Giving

It's good to have a large, trusted philanthropy that runs according to predictable processes. If people like those processes, they can just give to that one.[1]

Manifund

It's good to have a regranting system, so that wealthy individuals can regrant to people they trust to do the granting in a transparent and trackable way.

The S-Process

The Survival and Flourishing Fund has something called the “S-process” which allocates money based on a set of trusted regranters and predictions about what things they’ll be glad they funded. I don’t understand it, but it feels like the kind of thing that a more advanced philanthropic ecosystem would use. Good job.

Longview Philanthropy

A donor advisory service that specifically caters to high-net-worth individuals. I haven't ever had their advice, but I assume they help wealthy people find options they endorse giving through.

Things that don’t exist fully

A repository of research (~EA Forum)

The Forum is quite good for this, though I imagine that many publicly available documents (Coefficient's research, etc.) are not on the Forum, so someone has to go and find them.

A philanthropic search/chatbot

It seems surprising to me that there isn't a winsome tool for engaging with research. I built one for 80k but they weren’t interested in implementing it (which is, to be clear, their prerogative).

But let’s imagine that I am a newly minted hundred millionaire. If there were Qualy, a well trusted LLM that would link to pages in the EA corpus and answer questions, I might chat to it a bit? EA is still pretty respected in those circles.

A charity ranker

As Bergman notes, there isn’t some clear ranking of different charities that I know of.

Come on folks, what are we doing? How is our wannabe philanthropist meant to know whether they ought to donate to AI, shrimp welfare or GiveWell? Vibes? [2]

I am in the process of building such a thing, but this seems like an oversight.

EA community notes

Why is there not a page with legitimate criticisms of EA projects, people and charities that only appear if people from diverse viewpoints agree with them?

I think this one is more legitimately my fault, since I'm uniquely placed to build it, so sorry about that. But still, it should exist! It is mechanically appropriate, easy to build, and, with appropriate guardrails, the kind of liberal, self-reflective thing we support.

A way to navigate the ecosystem

There is probably room for something more basic than any of this, which helps people decide whether they probably want to give to GiveWell, to Coefficient Giving, or to something else. My model is that for a lot of people, the reason they don't give more money is that they see the money they're giving as a significant amount: they don't want to give it away badly, but they don't want to put in a huge amount of effort to give it well.

One can imagine a site with BuzzFeed-style questions (or an LLM) that guides people through this process. Consider Anthropic's recent interviewing tool. It's very low friction and elicits opinions. It wouldn't, I think, be that hard to build a tool that, at the end of some elicitation process, gives suggestions or a short set of things worth reading.

This bullet was suggested by Raymond Douglas, though written by me.

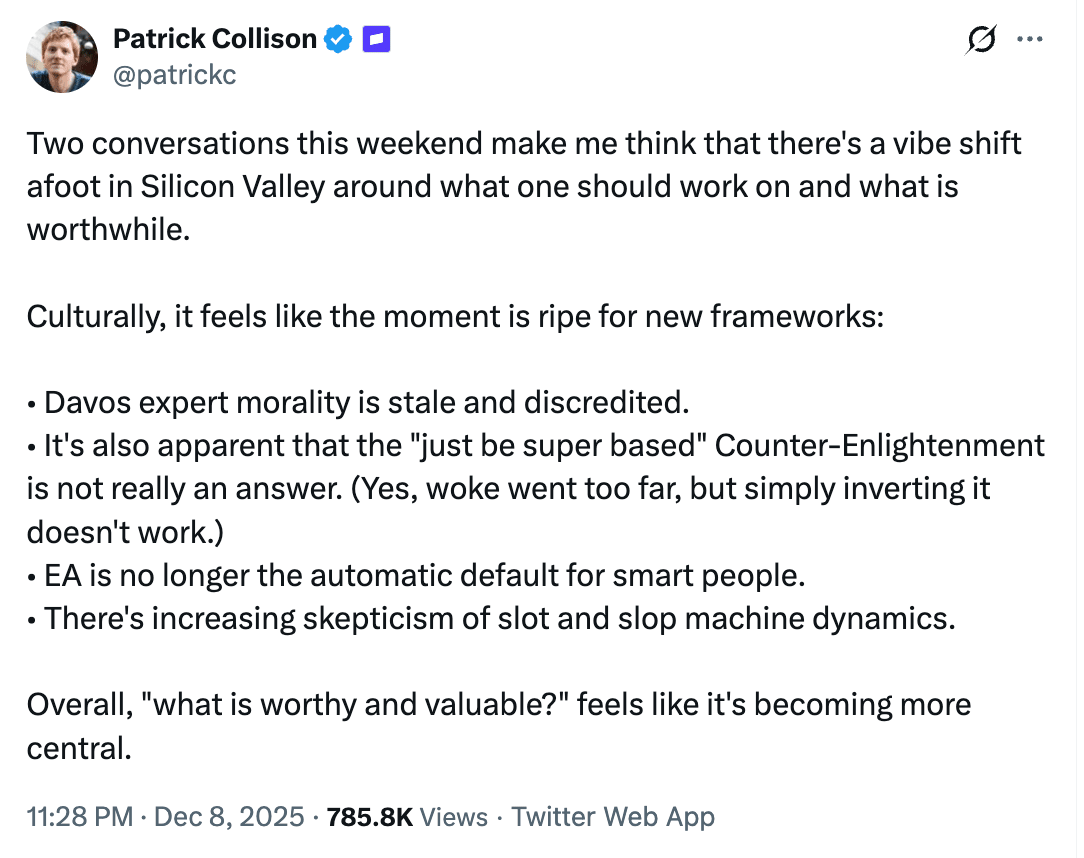

A new approach

Billionaire Stripe founder Patrick Collison wrote the following. I recommend reading and thinking about it. I think he's right to say that EA is no longer the default for smart people. What does that world look like? Is EA intimidated or lacking in mojo? Or is there space for public debates with Progress Studies or whatever appears next? Should global poverty work be split off so it can be funded without the contrary AI safety vibes?

I don’t know, but I am not sure EA knows either and this seems like a problem in a rapidly changing world.

A liberal worldview that is no longer supreme has a notable advantage: we can ask questions whose answers we are scared of, or tell people to come back when they find something. EA not being the only game in town might be a good thing.

Imagine that 10 new EA-adjacent 100 millionaires popped up overnight. What are we missing?

It seems pretty likely that this is the world we are in, so we don't have to wait for it to happen. If you imagine for 5 minutes that you are in this world, what would you like to see?

Can we build it before it happens, not after?

Should you wish to fund work on a ranking site or EA community notes, email me at nathanpmyoung[at]gmail.com or small donors can subscribe to my substack.

- ^

Plausibly there are other large foundations that fit in this same space, feel free to suggest ones I've missed.

- ^

“papers? essays?”

(this is a joke)

ElliotTep @ 2025-12-10T02:57 (+28)

I think the moment you try and compare charities across causes, especially for the ones that have harder-to-evaluate assumptions like global catastrophic risk and animal welfare, it very quickly becomes clear how impossibly crazy any solid numbers are, and how much they rest on uncertain philosophical assumptions, and how wide the error margins are. I think at that point you're either left with worldview diversification or some incredibly complex, as-yet-not-very-well-settled, cause prioritisation.

My understanding is that all of the EA high-net-worth donor advisors, like Longview, GiveWell, Coefficient Giving, Senterra Funders (the org I work at), and many others, are able to pitch their various offers to folks at Anthropic.

What has been missing is a recommended cause prio split and/or resources, though some orgs are starting to work on this now.

I think that any way to systematise this, where you complete a quiz and it gives you an answer, is too superficial to be useful. High net worth funders need to decide for themselves whether or not they trust specific grant makers beyond whether or not those grant makers are aligned with their values on paper.

Nathan Young @ 2025-12-10T08:56 (+11)

Naaaah, seems cheems. Seems worth trying. If we can't then fair enough. But it doesn't feel to me like we've tried.

Edit, for specificity. I think that shrimp QALYs and human QALYs have some exchange rate, we just don't have a good handle on it yet. And I think that if we'd decided that difficult things weren't worth doing we wouldn't have done a lot of the things we've already done.

Also, hey Elliot, I hope you're doing well.

ElliotTep @ 2025-12-11T04:44 (+6)

Oh, this is nice to read as I agree that we might be able to get some reasonable enough answers about Shrimp Welfare Project vs AMF (e.g. RP's moral weights project).

Some rough thoughts: It's when we get to comparing Shrimp Welfare Project to AI safety PACs in the US that I think the task goes from crazy hard but worth it to maybe too gargantuan a task (although some have tried). I also think here the uncertainty is so large that it's harder to defer to experts in the way that one can defer to GiveWell if they care about helping the world's poorest people alive today.

But I do agree that people need a way to decide, and Anthropic staff are incredibly time poor and some of these interventions are very time sensitive if you have short timelines, so that just begs the question: if I'm recommending worldview diversification, which cause areas get attention and how do we split among them?

I am legitimately very interested in thoughtful quantitative ways of going about this (my job involves a non-zero amount of advising Anthropic folks). Right now, it seems like Rethink Priorities is the only group doing this in public (e.g. here). To be honest, I find their work has gone over my head. While I don't want to speak for them, my understanding is that they might be doing more in this space soon.

Vasco Grilo🔸 @ 2025-12-11T19:56 (+3)

Hi Elliot and Nathan.

I [Nathan] think that shrimp QALYs and human QALYs have some exchange rate, we just don't have a good handle on it yet.

I think being able to compare the welfare of shrimps and humans is far from enough. I do not know about any interventions which robustly increase welfare in expectation due to dominant uncertain effects on soil animals. I would be curious to know your thoughts on these.

Oh, this [the point from Nathan quoted above] is nice to read as I agree that we might be able to get some reasonable enough answers about Shrimp Welfare Project vs AMF (e.g. RP's moral weights project).

I believe there is a very long way to robust results from Rethink Priorities' (RP's) moral weight project, and Bob Fischer's book about comparing welfare across species, which contains what RP stands behind now. For example, the estimate in Bob's book for the welfare range of shrimps is 8.0 % that of humans, but I would say it would be quite reasonable for someone to have a best guess of 10^-6, the ratio between the number of neurons of shrimps and humans.

Nathan Young @ 2025-12-12T10:01 (+6)

Maybe I'm being too facile here, but I genuinely think that even just taking all these numbers, making them visible in some place, and then taking the median of them, and giving a ranking according to that, and then allowing people to find things they think are perverse within that ranking, would be a pretty solid start.

I think producing suspect work is often the precursor to producing good work.

And I think there are enough estimates that one could produce a thing that just gathers them all up and displays them. That would be a sort of survey, which wouldn't be bad in itself even if the answers were almost universally agreed to be pretty dubious. But I think it would point to the underlying work that needs to be done.
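A minimal sketch of the "gather the estimates, take the median, rank" idea in Python. It assumes every estimate has already been converted into one shared cost-effectiveness unit, which is itself the hard part this thread is arguing about. The charity names and numbers below are made-up placeholders, not real estimates.

```python
from statistics import median

# Hypothetical placeholder data (NOT real figures): each charity maps to a list of
# cost-effectiveness estimates from different sources, assumed to share one unit.
estimates = {
    "Charity A": [10.0, 40.0, 25.0],
    "Charity B": [5.0, 200.0, 8.0, 12.0],
    "Charity C": [30.0],
}


def rank_by_median(estimates):
    """Return (name, median, n_sources, max/min spread) tuples, highest median first."""
    rows = []
    for name, values in estimates.items():
        if not values:
            continue  # skip charities with no estimates yet
        # Spread between the most and least optimistic source; infinite if any value <= 0.
        spread = max(values) / min(values) if min(values) > 0 else float("inf")
        rows.append((name, median(values), len(values), spread))
    return sorted(rows, key=lambda row: row[1], reverse=True)


for name, med, n, spread in rank_by_median(estimates):
    print(f"{name}: median {med:g} from {n} estimate(s), max/min spread {spread:.1f}x")
```

Showing the spread next to the median is one way to make visible the entries people might flag as perverse, rather than hiding disagreement inside a single number.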

ElliotTep @ 2025-12-17T04:49 (+6)

I think one of the challenges here is for the people who are respected/have a leadershipy role on cause prioritisation, I get the sense that they've been reluctant to weigh in here, perhaps to the detriment of Anthropic folks trying to make a decision one way or another.

Even more speculative: maybe part of what's going on here is that the charity comparison numbers GiveWell produces, and within-cause comparisons in general, are one level of crazy and difficult. But the moment you get to cross-cause comparisons, these numbers become several orders of magnitude more crazy and uncertain. And maybe there's a reluctance to use the same methodology for something so much more uncertain, because it's a less useful tool/there's a risk it is perceived as something more solid than it is.

Overall, I think more people who have insights on cause prio should be saying: if I had a billion dollars, here's how I'd spend it, and why.

Vasco Grilo🔸 @ 2025-12-17T10:44 (+4)

Overall, I think more people who have insights on cause prio should be saying: if I had a billion dollars, here's how I'd spend it, and why.

I see some value in this. However, I would be much more interested in how they would decrease the uncertainty about cause prioritisation, which is super large. I would spend at least 1 %, 10 M$ (= 0.01*1*10^9), decreasing the uncertainty about comparisons of expected hedonistic welfare across species and substrates (biological or not). Relatedly, RP has a research agenda about interspecies welfare comparisons more broadly (not just under expectational total hedonistic utilitarianism).

ElliotTep @ 2025-12-19T02:03 (+4)

I definitely think this should happen too, but reducing uncertainty about cause prio beyond what has already been done to date is a much much bigger and harder ask than 'share your best guess of how you would allocate a billion dollars'.

Vasco Grilo🔸 @ 2025-12-13T11:46 (+6)

How different is that from ranking the results from RP's cross-cause cost-effectiveness model (CCM)? I collected estimates from this in a comment 2 years ago.

Hugh P @ 2025-12-10T13:51 (+1)

When people write about where they donate, aren’t they implicitly giving a ranking?

Nathan Young @ 2025-12-10T17:13 (+2)

Sure but a really illegible and hard to search one.

OscarD🔸 @ 2025-12-09T17:39 (+22)

Longview does advising for large donors like this. Some other orgs I know of are also planning for an influx of money from Anthropic employees, or thinking about how best to advise such donors on comparing cause areas and charities and so forth. This is also relevant: https://forum.effectivealtruism.org/posts/qdJju3ntwNNtrtuXj/my-working-group-on-the-best-donation-opportunities But I agree more work on this seems good!

Chris Leong @ 2025-12-10T09:20 (+16)

There's also Founders Pledge.

Nathan Young @ 2025-12-09T22:44 (+8)

Thanks, someone else mentioned them. Do you think there is anything else I'm missing?

jenn @ 2025-12-10T00:38 (+14)

the other nonprofit in this space is the Effective Institutions Project, which was linked in Zvi's 2025 nonprofits roundup:

They report that they are advising multiple major donors, and would welcome the opportunity to advise additional major donors. I haven't had the opportunity to review their donation advisory work, but what I have seen in other areas gives me confidence. They specialize in advising donors who have broad interests across multiple areas, and they list AI safety, global health, democracy and (peace and security).

from the same post, re: SFF and the S-Process:

SFF does not accept donations but they are interested in partnerships with people or institutions who are interested in participating as a Funder in a future S-Process round. The minimum requirement for contributing as a Funder to a round is $250k. They are particularly interested in forming partnerships with American donors to help address funding gaps in 501(c)(4)’s and other political organizations.

This is a good choice if you’re looking to go large and not looking to ultimately funnel towards relatively small funding opportunities or individuals.

Benevolent_Rain @ 2025-12-10T15:28 (+2)

Do you know if Longview does something like assign a person to the new potential donor? I think, for example, a donor going to their first EAG might not have enough bandwidth themselves to make sense of the whole ecosystem and get the most out of engaging with all donation opportunities.

OscarD🔸 @ 2025-12-10T16:17 (+4)

My understanding is that Longview does a combination of actively reaching out (or getting existing donors to reach out) to possible new donors, and talking to people who express interest to them directly. But I don't know much about their process or plans.

NickLaing @ 2025-12-09T20:41 (+15)

I think giving circles like those that AIM runs are a great option in this kind of case. They are a great way to build understanding around giving, while being well supported and also providing accountability.

I also think lots of people will make statements along the lines of "EA is no longer the default option for smart people" as an excuse/copout for not giving at all, when really the issue is just value drift and greed catching up with them. If you don't want to give "EA style" that's great, as long as you've got another plan where to give it.

I think cross-cause-area comparisons are great to consider, but cross-cause-area rankings are a bit absurd given how big the error bars are around future stuff and animal welfare calculations. I don't mind someone making a ranking list as an interesting exercise that the odd person is going to defer to, but more realistically people are going to anchor on one cause area or another. At least have within-cause rankings before you start cross-cause ranking.

People within the field say they don't even want to do cost-effectiveness analysis within AI safety charities (I feel they could make a bit more of an effort). How on earth then will you do it cross-cause?

GiveWell and HLI basically rank global health/dev charities. If you're that keen on rankings, why not start by making an animal welfare and AI safety rank list first? Then, if people take that seriously, perhaps you can start cross-cause ranking with non-absurdity.

Nathan Young @ 2025-12-09T22:44 (+2)

Could you give a concise explanation of what giving circles are?

jenn @ 2025-12-10T00:41 (+7)

Lydia Laurenson has a non-concise article here.

I once spoke to a philanthropist who told me her earliest mistake was “sitting alone in a room, writing checks.” It was hard for her to trust people because everyone kept trying to get her money. Eventually, she realized isolation wasn’t serving her.

Battery Powered is intended to help with that type of mistake. It’s a philanthropy community where people who are new to philanthropy mix with people who are more familiar with it, so they cross-pollinate and mentor each other. The BP staff — including you, Colleen — has several experts who research issues chosen by the members, and then vet organizations working on those issues. This gives members a chance to learn about the issues before they vote on which organizations get the money.

James Herbert @ 2025-12-10T23:48 (+11)

A couple of effective giving orgs, e.g., Doneer Effectief, have people who specialise in advising HNW individuals. There are also a few international initiatives I know of that keep a very low profile.

Craig Green @ 2025-12-09T21:57 (+8)

I do think some sort of moral-weights quizlet thing could be helpful for people to get to know their own values a bit better. GiveWell's models already do this but only for a narrow range of philanthropic endeavors relative to the OP (and they are actual weights for a model, not a pedagogical tool). To be clear, I do not think this would be very rigorous. As others have noted, the various areas are more-or-less speculative in their proposed effects and have more-or-less complete cost-evaluations. But it might help would-be donors to at least start thinking through their values and, based on their interests, it could then point them to the appropriate authorities.

As others have noted, I feel existing chatbots are pretty sufficient for simple search purposes (I found GiveWell through ChatGPT), and on the other hand, existing literature is probably better than any sort of fine-tuned LLM, IMO.

I have no idea what someone in this income-group would do. If I were in that class, being the respecter of expertise that I am, I would not be looking for a chatbot or a quizlet, and would seek out expert advice, so perhaps it is better to focus on getting these hypothetical expert-advisors more visibility?

Yarrow Bouchard 🔸 @ 2025-12-10T02:16 (+4)

Welcome to the EA Forum!

Snowman Socrates @ 2025-12-12T01:10 (+7)

Thank you for asking this question! I definitely agree we should be exploring EA's treatment of mid-size donors and whether we are ready for them (especially in the context of EA fund diversification). I'm not confident that we need more research tools or rankers for such donors. I think there are already many resources for those (e.g. Giving What We Can provides a wide range of recommendations across cause areas for such donors, not to mention others like GiveWell, Giving Green, Founders Pledge, ACE, Longview Philanthropy, Ultra Philanthropy, Ark Philanthropy, Ellis Impact, and more).

I think perhaps the more important question for preparing for midlevel donors is does EA have the cultivation and stewardship practices (professionalized fundraising) in place to keep these donors giving and increase their giving. I think this is a massive opportunity within the EA ecosystem.

Aaron Bergman @ 2025-12-19T03:03 (+4)

Thanks for the highlight! Yeah I would love better infrastructure for trying to really figure out what the best uses of money are. I don't think it has to be as formal/quantitative as GiveWell. To quote myself from a recent comment (bolding added)

At some level, implicitly ranking charities [eg by donating to one and not another] is kind of an insane thing for an individual to do - not in an anti-EA way (you can do way better than vibes/guessing randomly) but in a "there must be better mechanisms/institutions for outsourcing donation advice than GiveWell and ACE and ad hoc posts/tweets/etc and it's really hard and high stakes" way.

Like what I would love is a lineup of 10-100 very highly engaged and informed people (could create the list simply by number of endorsements/requests) who talk about their strategy and values in a couple pages and then I just defer to them (does this exist?)

Larks @ 2025-12-12T01:26 (+4)

I think Patrick's comments are best interpreted as an attempt at hyperstition.

Nathan Young @ 2025-12-12T10:03 (+2)

Sure, but I think there are also relatively accurate comments about the world.

Vasco Grilo🔸 @ 2025-12-11T20:04 (+4)

Thanks for the relevant post, Nathan!

Come on folks, what are we doing? How is our wannabe philanthropist meant to know whether they ought to donate to AI, shrimp welfare or GiveWell. Vibes? [2]

I am in the process of building such a thing, but this seems like an oversight.

Feel free to get in touch if you think I may be able to help with something.

Nathan Young @ 2025-12-12T10:04 (+4)

I guess I can send you a mediocre prototype.

Vasco Grilo🔸 @ 2025-12-13T11:34 (+2)

Sure!

Mo Putera @ 2025-12-11T11:10 (+2)

I am in the process of building such a thing

Is it available online / open-source / etc by any chance? Even just as a butterfly idea.

Benevolent_Rain @ 2025-12-10T15:30 (+2)

Just a note that if anyone is interested in talking about this, please drop me a DM. I have some experience and think there might be something to do in this space.

Yarrow Bouchard 🔸 @ 2025-12-09T17:36 (+2)

If someone wants to use a chatbot to research EA-endorsed charities they might be interested in donating to, they can just describe to ChatGPT or Claude what they want to know and ask the bot to search the EA Forum and other EA-related websites for info.

titotal @ 2025-12-09T17:57 (+8)

they can just describe to ChatGPT or Claude what they want to know and ask the bot to search the EA Forum and other EA-related websites for info.

I feel like you've written a dozen posts at this point explaining why this isn't a good idea. LLMs are still very unreliable; the best way to find out what people in EA believe is to ask.

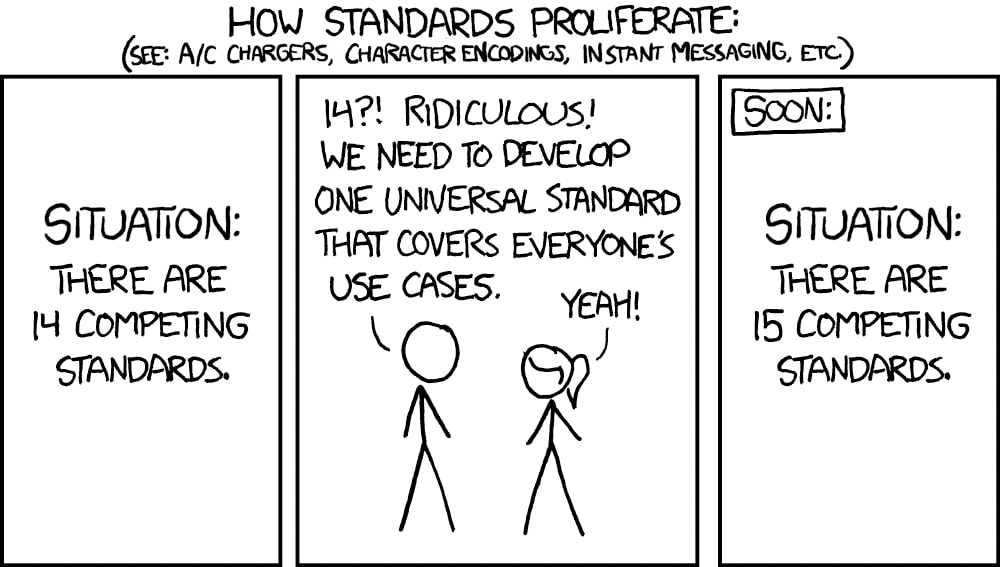

With regard to the ranking of charities, I think it would be totally fine if there were 15 different rankings out there. It would allow people to get a feel for what people with different worldviews value and agree or disagree on. I think this would be preferable to having just one "official" ranking, as there's no way to take massive worldview differences into account in that.

Yarrow Bouchard 🔸 @ 2025-12-09T18:42 (+2)

I was responding to this part:

But let’s imagine that I am a newly minted hundred millionaire. If there were Qualy, a well trusted LLM that would link to pages in the EA corpus and answer questions, I might chat to it a bit?

I agree chatbots are not to be trusted to do research or analysis, but I was imagining someone using a chatbot as a search engine, to just get a list of charities that they could then read about.

I think 15 different lists or rankings or aggregations would be fine too.

If a millionaire asked me right now for a big list of EA-related charities, I would give them the donation election list. And if they wanted to know the EA community’s ranking, I guess I would show them the results of the donation election. (Although I think those are hidden for the moment and we’re waiting on an announcement post.) Edit: the results are now here, although there isn't a ranking beyond the top 3.

Maybe a bunch of people should write their own personal rankings.

Someone should make a Tier List template for EA charities. Something like this:

Nathan Young @ 2025-12-09T22:46 (+2)

Yeah I'm making something like that :)

Nathan Young @ 2025-12-09T22:46 (+5)

I do not see 14 charity ranking tools. I don't really think I see 2? What, other than asking claude/chatGPT/gemini are you suggesting?

Yarrow Bouchard 🔸 @ 2025-12-10T02:15 (+4)

You know what, I don’t mean to discourage you from your project. Go for it.

exopriors @ 2025-12-10T02:34 (+1)

I just built something that can help donors explore the EA/alignment ecosystem: alignment-explorer.exopriors.com.

It's a read-only SQL + vector search API over a continuously growing alignment-adjacent corpus, that Claude Code w/ Opus 4.5 is exceptionally good at using. The compositional vibe search (vector mixing) functionality combined with long query time limits is quite something.