The state of global catastrophic risk research

By FJehn @ 2025-07-22T08:13 (+31)

This is a linkpost to https://esd.copernicus.org/articles/16/1053/2025/esd-16-1053-2025.html

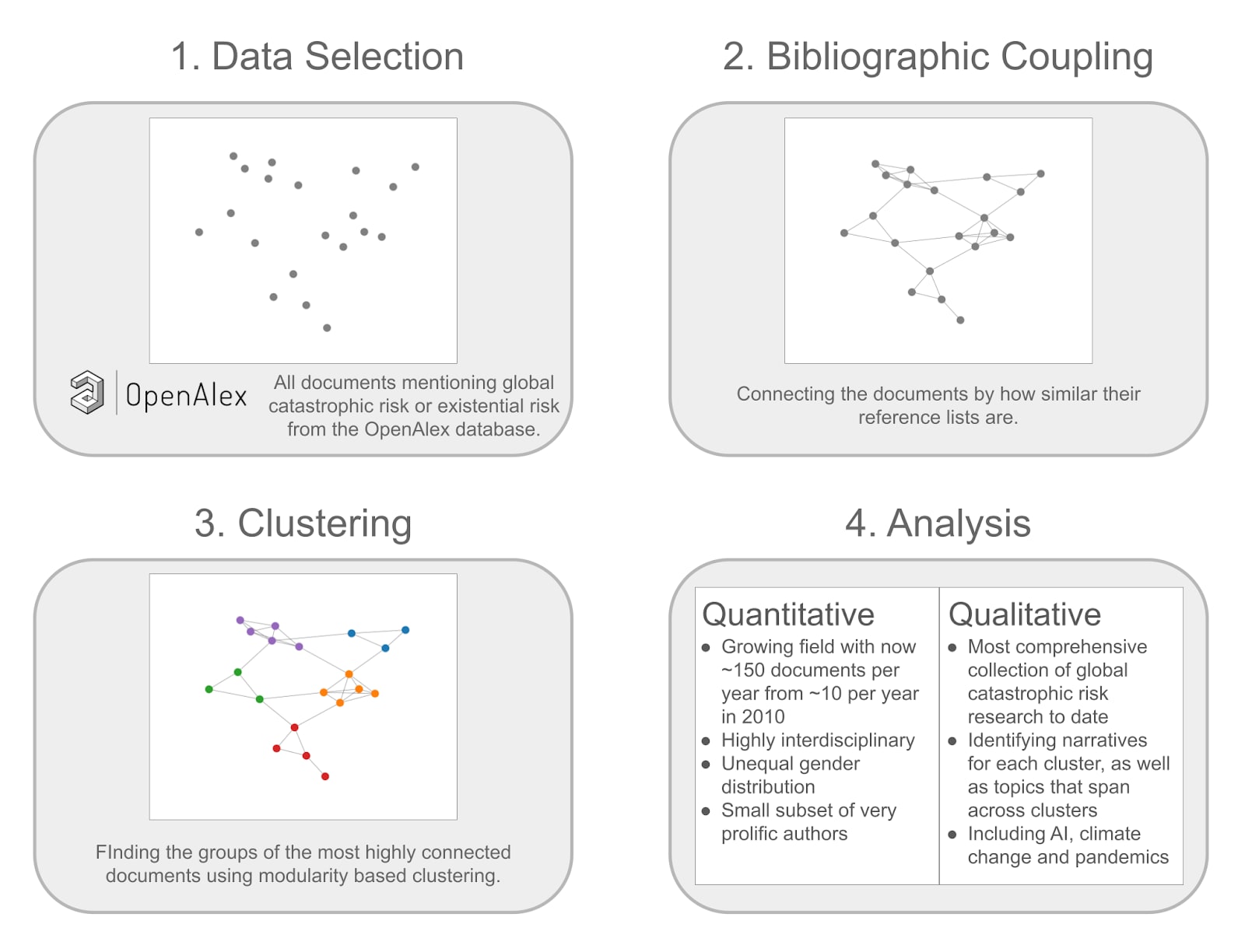

The following text presents a condensed summary of "The state of global catastrophic risk research: a bibliometric review" by Jehn et al. (2025), published in Earth System Dynamics. The paper represents the first systematic bibliometric analysis of global catastrophic risk (GCR) and existential risk (ER) literature, examining a large number of documents to map the field's development, identify research clusters, and assess current challenges. The full paper includes extensive supplementary materials, detailed methodological descriptions, and over 280 references and can be found here (open access): https://esd.copernicus.org/articles/16/1053/2025/

Visual Abstract

Introduction

The field of global catastrophic risk (GCR) and existential risk (ER) research has grown considerably over the past two decades, evolving from a nascent area of study into an established research domain. This first systematic bibliometric analysis of the GCR/ER literature examines a large number of documents to understand how the field has developed, what topics it covers, and what challenges it faces.

GCR refers to risks that could cause the death of a significant fraction of humanity or major loss of global well-being, while ER encompasses risks of human extinction or similarly severe permanent curtailments of humanity's potential. These concepts emerged distinctly in the 2000s, with key foundational texts introducing and refining the terminology. The field's intellectual roots trace back to realizations about nuclear weapons' dangers and climate change threats, which brought awareness of humanity-scale risks into mainstream consciousness.

Growth and Development of the Field

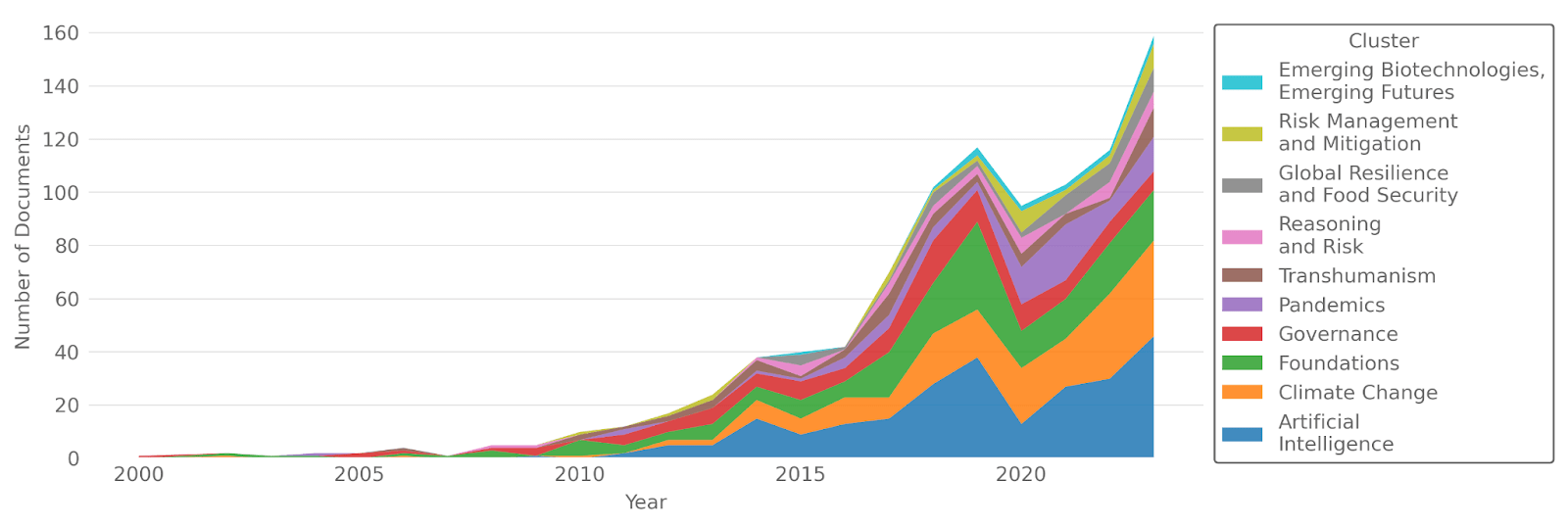

The analysis reveals remarkable growth in GCR/ER research, expanding from approximately 10 documents annually in 2010 to over 150 by 2023. This growth trajectory shows the field's increasing academic legitimacy and societal relevance, though a notable dip occurred in 2020, likely due to researchers pivoting to pandemic-related work during COVID-19.

GCR/ER documents per year by cluster.
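As a rough illustration (our own arithmetic, not a figure reported in the paper), going from about 10 documents in 2010 to about 150 in 2023 corresponds to a compound growth rate of roughly 23% per year. The sketch below assumes smooth compound growth, which ignores the 2020 dip. Because the 2023 figure is "over 150", the result is best read as a lower-bound ballpark. The function name is hypothetical.

```python
def implied_annual_growth(start: float, end: float, years: int) -> float:
    """Compound annual growth rate that takes `start` to `end` over `years` years."""
    return (end / start) ** (1 / years) - 1


# Approximate figures from the summary: ~10 documents in 2010, >150 in 2023.
# Assumes smooth compound growth, which the real series (with its 2020 dip) does not follow.
rate = implied_annual_growth(10, 150, 2023 - 2010)
print(f"Implied growth: {rate:.0%} per year")
```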

Through bibliographic coupling analysis, ten distinct research clusters emerged, each representing different aspects of GCR/ER research: Foundations, Artificial Intelligence, Climate Change, Governance, Pandemics, Transhumanism, Global Resilience and Food Security, Risk Management and Mitigation, Reasoning and Risk, and Emerging Biotechnologies. These clusters demonstrate the field's interdisciplinary nature, touching on disciplines ranging from philosophy and ethics to climate science, agriculture, and technology studies.

Research Clusters and Their Interconnections

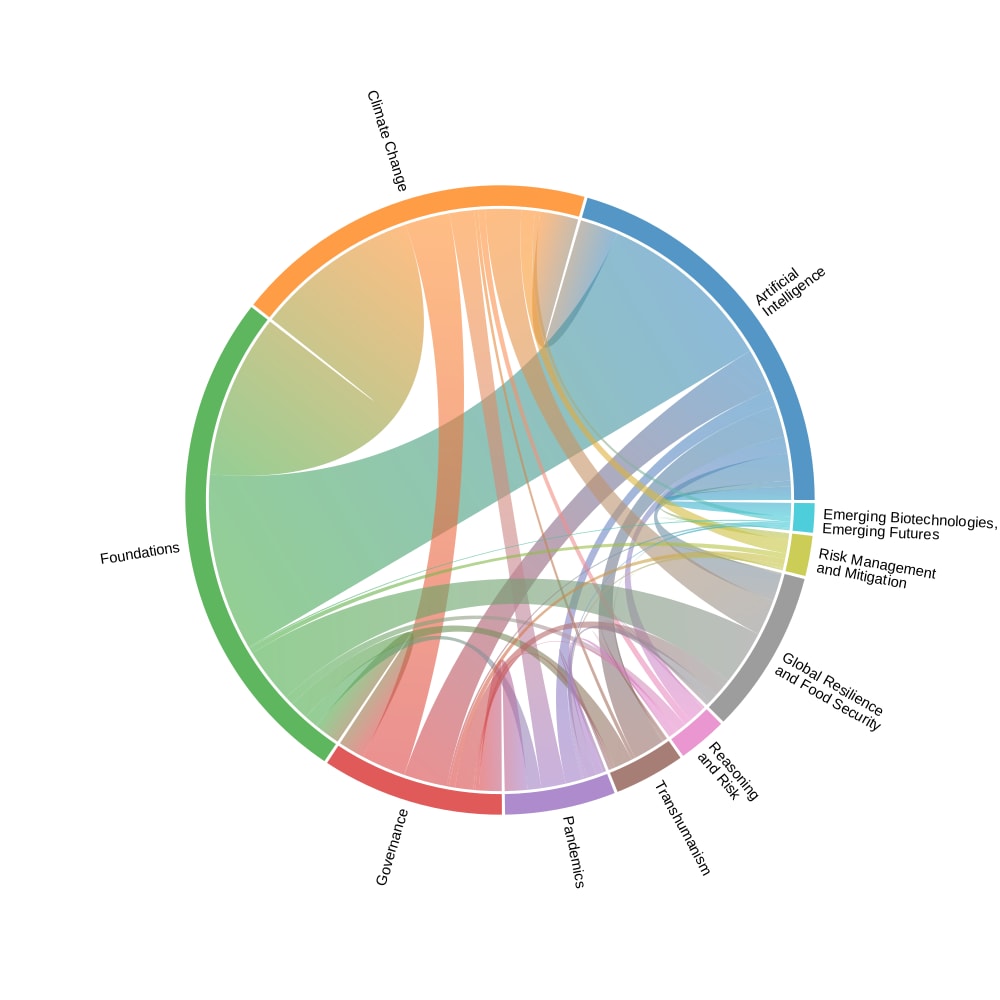

The Foundations cluster contains seminal works defining GCR and ER concepts, establishing methodological approaches, and making philosophical arguments for why preventing these risks should be a global priority. This cluster serves as the intellectual backbone of the field, with other clusters frequently citing its key texts.

The Artificial Intelligence cluster, representing about a quarter of annual publications, examines risks from advanced AI systems. Research here spans from technical analyses of AI alignment problems to philosophical discussions about digital consciousness and the ethics of artificial entities. The dominance of AI-related research reflects both the field's origins and contemporary concerns about rapid technological advancement.

Chord diagram of how the clusters relate to each other. The thicker the line is between two clusters, the more their references overlap.

Climate change research within the GCR/ER framework explores catastrophic warming scenarios, tipping points, and governance challenges. Unlike traditional climate research, this cluster often focuses on worst-case outcomes and their systemic impacts on human civilization. The Pandemics cluster grew rapidly during COVID-19, examining not just biological risks but also the governance failures and societal vulnerabilities exposed by global health crises.

The Global Resilience and Food Security cluster addresses practical interventions for maintaining food systems during catastrophes. This research area emerged around 2015 and focuses on "resilient foods" that could sustain populations following nuclear war, volcanic eruptions, or other sunlight-blocking events.

Geographic and Demographic Patterns

The field exhibits significant geographic concentration, with approximately 60% of publications originating from researchers in the United States and United Kingdom. This concentration reflects where key GCR/ER research institutions are located but also highlights a problematic lack of global diversity in perspectives on truly global risks.

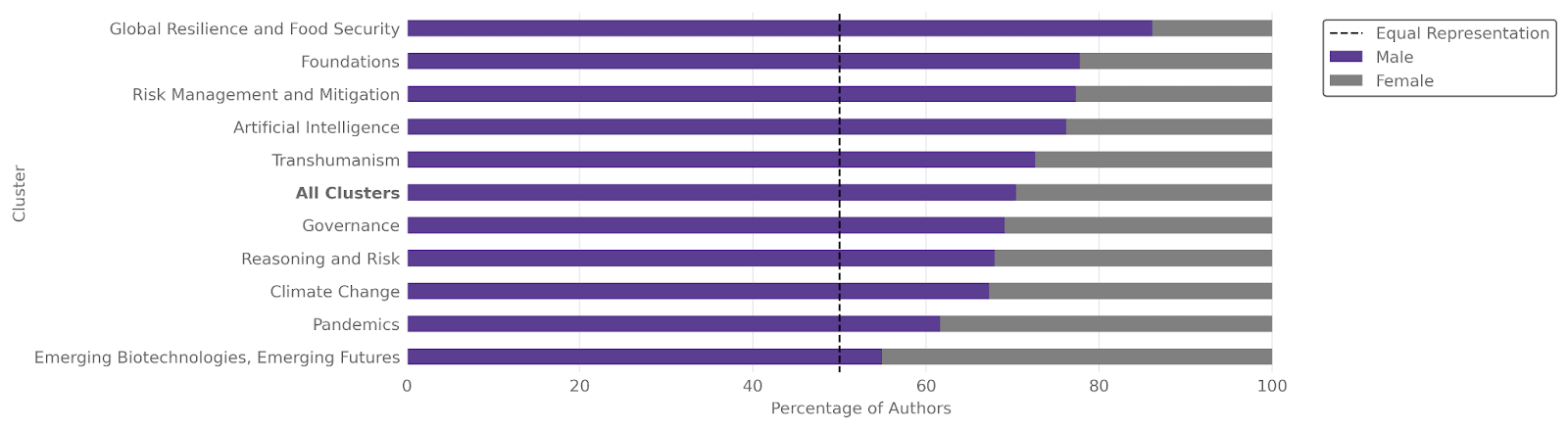

Gender representation shows even starker imbalances, with roughly 75% of authors being male. This disparity is particularly strong in certain clusters such as Global Resilience and Food Security (85% male authors), while only the Pandemics and Emerging Biotechnologies clusters approach gender parity. Among the most prolific researchers in the field, the imbalance is stronger still: none of them are female.

Gender balance in GCR/ER research based on all clusters.

Gaps and Future Directions

Several notable gaps emerge from this analysis. Despite nuclear war often being the first GCR people think of and its clear potential for global catastrophe, it remains underrepresented in GCR/ER literature. This possibly reflects the existence of established nuclear security research communities that predate GCR/ER terminology. Similarly, ecosystem collapse receives surprisingly little attention given its potential scale and likelihood.

The field lacks dedicated publication venues, with research scattered across diverse journals. No single journal serves as a focal point for GCR/ER research, potentially hampering community building and knowledge synthesis. Conference opportunities remain geographically limited, primarily concentrated in the US and UK.

Policy uptake of GCR/ER research also remains limited despite growing academic output. While some progress appears in initiatives like the UN's Pact for the Future and the US Global Catastrophic Risk Management Act, translation from research to policy implementation proceeds slowly.

Implications and Recommendations

This analysis suggests several priorities for advancing GCR/ER research. First, creating dedicated publication venues and geographically distributed conferences would help consolidate the field while improving accessibility. Second, actively addressing gender and geographic imbalances through targeted support and collaboration could enrich perspectives on these global challenges.

The field would also benefit from stronger connections to adjacent research areas like systemic risk, disaster risk reduction, and international relations. These connections could provide methodological tools and policy pathways while avoiding redundant efforts. Greater emphasis on understudied risks like ecosystem collapse and great power conflict could provide more comprehensive risk coverage.

Most critically, improving research-to-policy pipelines requires developing concrete scenarios, standardized assessment frameworks, and regional engagement strategies. The successful integration of climate science into policy frameworks provides a model for how GCR/ER research might achieve similar influence.

While challenges remain in diversity, coordination, and implementation, the field's rapid development and increasing sophistication offer hope for better understanding and mitigating humanity's greatest threats.

FJehn @ 2025-07-22T08:21 (+30)

Now that this paper is finally published, it feels a bit like a requiem to the field. Every non-AI GCR researcher I talked to in the last year or so is quite concerned about the future of the field. A large chunk of all GCR funding now goes to AI, leaving existing GCR orgs without any money. For example, ALLFED is having to cut a large part of their programs (https://forum.effectivealtruism.org/posts/K7hPmcaf2xEZ6F4kR/allfed-emergency-appeal-help-us-raise-usd800-000-to-avoid-1), even though pretty much everyone seems to agree that ALLFED is doing good work and should continue to exist.

I think funders like Open Phil or the Survival and Flourishing Fund should strongly consider putting more money into non-AI GCR research again. I get that many people think that AI risk is very imminent, but I don't think that this justifies leaving the rest of GCR research to die on the vine. It would be quite a bad outcome if, five years from now, AI risk had not materialized but most of the non-AI GCR orgs had ceased to exist because all of the funding dried up.

Vasco Grilo🔸 @ 2025-07-22T17:17 (+2)

Thanks for sharing, Florian! Did you find any detailed quantitative estimates of the probability of the global population dropping by a given fraction? I would say this is a major gap in the global catastrophic risk and existential risk literature. I am only aware of guesses, like those in Carlsmith (2022).

FJehn @ 2025-07-22T17:39 (+3)

Hey Vasco. Haven't seen anything like this. But are you talking about probability estimates across all GCRs at once? My guess would be that the uncertainties would be so large that it would not really tell you anything.

Vasco Grilo🔸 @ 2025-07-22T18:20 (+2)

Thanks, Florian. I was thinking about estimates like the probability of human population in 2050 being 99 % lower than in 2025 conditional on 1 k nuclear detonations in 2026.

In addition, I think it would be good to have estimates of the fraction of the expected damage that is caused by tail events. I got some for conflicts and pandemics.

FJehn @ 2025-07-22T18:42 (+3)

Ah okay, got it. Have you considered asking those questions on Metaculus? Maybe you could get a rough ballpark there. But I am not aware of anything like this in peer-reviewed research.

Vasco Grilo🔸 @ 2025-07-23T06:57 (+2)

There are some similar questions on Metaculus, which I think is good, but I do not trust their forecasts. I believe the ones for extreme events like human extinction are insensitive to small probabilities, and that detailed quantitative modelling would correct for this. I estimated a nearterm annual risk of human extinction from:

- Nuclear war of 5.93*10^-12, which is only 1.19*10^-6 (= 5.93*10^-12/(5*10^-6)) of the 5*10^-6 that I understand Toby Ord guessed in The Precipice.

- Supervolcanoes of 3.38*10^-14, which is only 6.76*10^-8 (= 3.38*10^-14/(5*10^-7)) of the 5*10^-7 that I understand Toby guessed in The Precipice.
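The two ratios above can be checked with a few lines of Python. This is only a sketch that re-does the division. The inputs are exactly the figures quoted in the comment, both the modelled annual risks and the values attributed to The Precipice. The dictionary and function names are hypothetical.

```python
# Re-check the ratio arithmetic quoted in the comment above.
# All inputs are copied from the comment; nothing here is a new estimate.

# Nearterm annual extinction risk from the commenter's own modelling
modelled = {"nuclear war": 5.93e-12, "supervolcanoes": 3.38e-14}

# Annual figures the commenter attributes to Toby Ord's The Precipice
ord_guess = {"nuclear war": 5e-6, "supervolcanoes": 5e-7}


def ratio_to_ord(risk: str) -> float:
    """Return the modelled annual risk as a fraction of the figure attributed to Ord."""
    return modelled[risk] / ord_guess[risk]


for risk in modelled:
    # Three significant figures, matching the precision used in the comment
    print(f"{risk}: {ratio_to_ord(risk):.3g}")
```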