Thoughts on these $1M and $500k AI safety grants?

By defun 🔸 @ 2024-07-11T13:37 (+50)

Open Philanthropy had a request for proposals for "benchmarking LLM agents on consequential real-world tasks".

At least two of the grants went to professors who are developing agents (advancing capabilities).

$1,045,620 grant

From https://www.openphilanthropy.org/grants/princeton-university-software-engineering-llm-benchmark/

Open Philanthropy recommended a grant of $1,045,620 to Princeton University to support a project to develop a benchmark for evaluating the performance of Large Language Model (LLM) agents in software engineering tasks, led by Assistant Professor Karthik Narasimhan.

From Karthik Narasimhan's LinkedIn: "My goal is to build intelligent agents that learn to handle the dynamics of the world through experience and existing human knowledge (ex. text). I am specifically interested in developing autonomous systems that can acquire language understanding through interaction with their environment while also utilizing textual knowledge to drive their decision making."

$547,452 grant

From https://www.openphilanthropy.org/grants/carnegie-mellon-university-benchmark-for-web-based-tasks/

Open Philanthropy recommended a grant of $547,452 to Carnegie Mellon University to support research led by Professor Graham Neubig to develop a benchmark for the performance of large language models conducting web-based tasks in the work of software engineers, managers, and accountants.

Graham Neubig is one of the co-founders of All Hands AI which is developing OpenDevin.

All Hands AI describes itself as follows: "All Hands AI's mission is to build AI tools to help developers build software faster and better, and do it in the open. Our flagship project is OpenDevin, an open-source software development agent that can autonomously solve software development tasks end-to-end."

Webinar

In the webinar announcing the RFPs, Max Nadeau said (minute 19:00): "a lot of the time when you construct the benchmark you're going to put some effort into making the capable LLM agent that can actually demonstrate accurately what existing models are capable of, but for the most part we're imagining, for both our RFPs, the majority of the effort is spent on performing the measurement as opposed to like trying to increase performance on it".

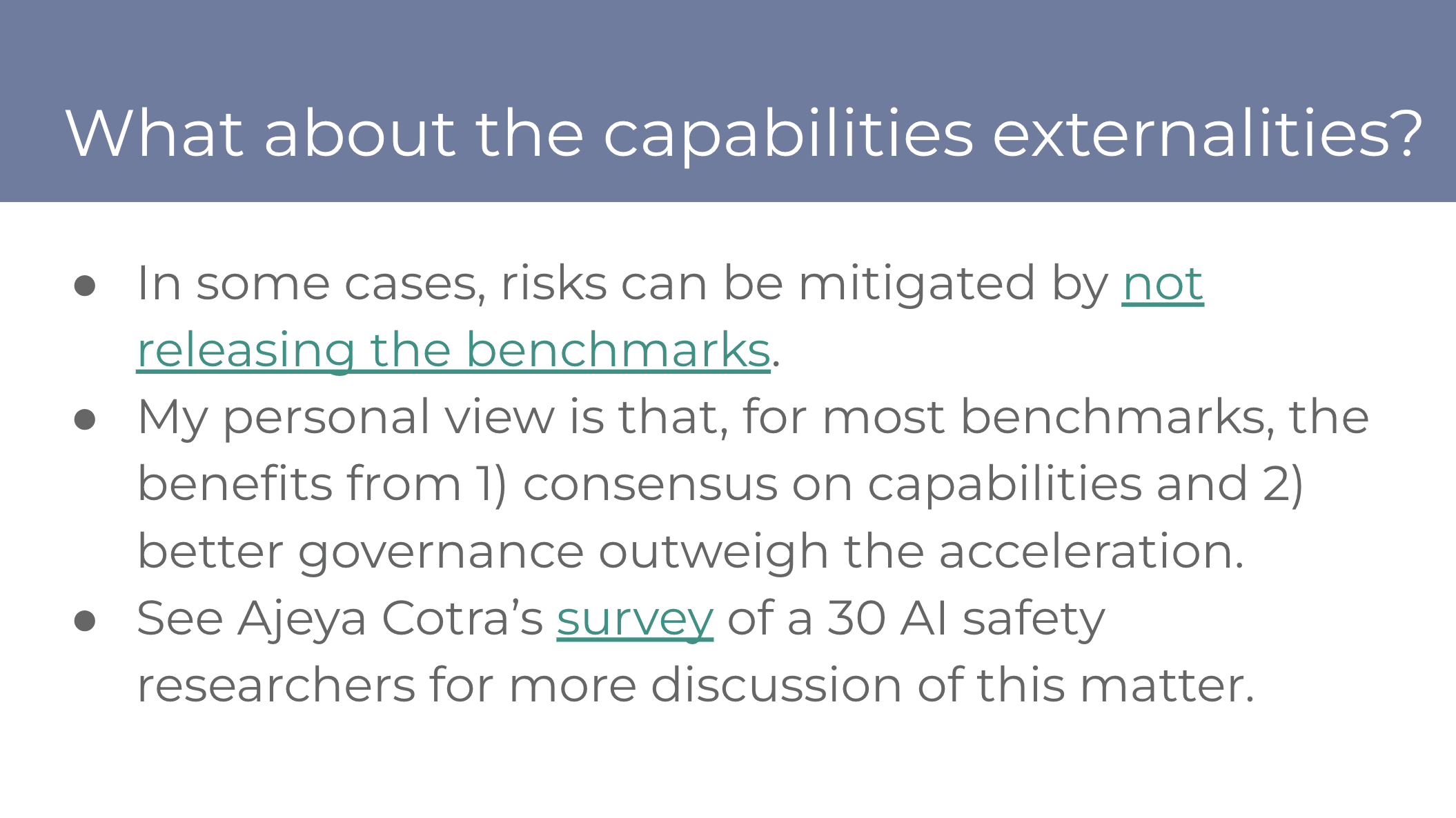

They were already aware that these grants would fund the development of agents and addressed this concern in the same webinar (minute 21:55).

Owen Cotton-Barratt @ 2024-07-11T14:56 (+29)

My thoughts:

- It's totally possible to have someone who does a mix of net-positive and net-harmful work, and for it to be good to fund them to do more of the net-positive work

- In general, one might reasonably be suspicious that grants could subsidize their harmful work, or that working on harmful things could indicate poor taste which might mean they won't do a good job with the helpful stuff

- This is more an issue if you can't tell whether they did the work you wanted, and if you have no enforcement mechanism to ensure they do

- Benchmarks seem unusually far in the direction of "you can tell if people did a good job"

- OTOH in principle funding them to work on positive things might end up pulling attention from the negative things

- However ... I think the ontology of "advancing capabilities" is too crude to be useful here

- I think that some types of capabilities work are among the most in-expectation positive things people could be doing, and others are among the most negative

- It's hard to tell from these descriptions where their work falls

- In any case, this makes me primarily want to evaluate how good it would be to have these benchmarks

- Mostly benchmarks seem like they could be helpful in orienting the world to what's happening

- i.e. I buy that the top-level story for impact has something to it

- Benchmarks could be harmful, via giving something for people to aim towards, and thereby accelerating research

- I think this may be increasingly a concern as we get close to major impacts

- But prima facie I'd expect these benchmarks to substitute for other comparably good benchmarks for that purpose, whereas for helping people orient they are more of a new thing that wouldn't exist otherwise

- My gut take is mildly sceptical that it's a good grant: if you got great benchmarks from these grants, I'd be happy, but I sort of suspect that most things that look like this turn into something kind of underwhelming, and after accounting for that I wonder if it's worthwhile

- I can imagine having my mind changed on this point pretty easily

- I do think there's something healthy about saying "Activity X is one the world should be doing and isn't; we're just going to fund the most serious attempts we can find to do X, and let the chips fall where they may"

defun @ 2024-07-15T11:33 (+3)

Thanks for your thorough comment, Owen.

And do the amounts ($1M and $0.5M) seem reasonable to you?

As a point of reference, Epoch AI is hiring a "Project Lead, Mathematics Reasoning Benchmark". This person will receive ~$100k for a 6-month contract.

Owen Cotton-Barratt @ 2024-07-22T22:49 (+3)

There are different reference classes we might use for "reasonable" here. I believe that just paying the salaries of the researchers doing the key work would usually cost a good deal less (but maybe not if you have to compete with AI lab salaries?). But I don't think that option is very available on the open market (i.e. to funders who aren't putting in the management time), unless someone good happens to want to research this anyway. In the reference class of academic grants, this looks relatively normal.

It's a bit hard from the outside to be second-guessing the funders' decisions, since I don't know what information they had available. The decisions would look better the more there was a good prototype or other reason to feel confident that they'd produce a strong benchmark. It might be that it would be optimal to investigate getting less thorough work done for less money, but it's not obvious to me.

I guess this is all a roundabout way of saying "naively it seems on the high side to me, but I can totally imagine learning information such that it would seem very reasonable".

Yonatan Cale @ 2024-07-14T15:12 (+2)

Any idea if these capabilities were made public or, for example, only used for private METR evals?

defun @ 2024-07-15T07:34 (+5)

In the case of OpenDevin it seems like the grant is directly funding an open-source project that advances capabilities.

I'd like more transparency on this.