Critique of MacAskill’s “Is It Good to Make Happy People?”

By Magnus Vinding @ 2022-08-23T09:21 (+225)

In What We Owe the Future, William MacAskill delves into population ethics in a chapter titled “Is It Good to Make Happy People?” (Chapter 8). As he writes at the outset of the chapter, our views on population ethics matter greatly for our priorities, and hence it is important that we reflect on the key questions of population ethics. Yet it seems to me that the book skips over some of the most fundamental and most action-guiding of these questions. In particular, the book does not broach questions concerning whether any purported goods can outweigh extreme suffering — and, more generally, whether happy lives can outweigh miserable lives — even as these questions are all-important for our priorities.

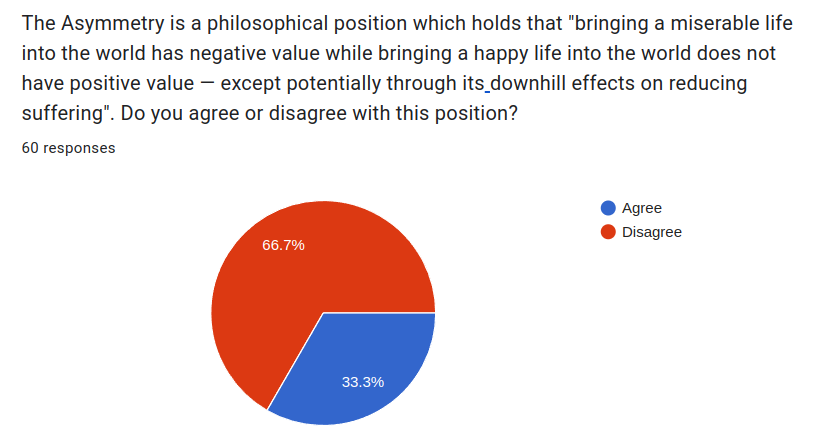

The Asymmetry in population ethics

A prominent position that gets a very short treatment in the book is the Asymmetry in population ethics (roughly: bringing a miserable life into the world has negative value while bringing a happy life into the world does not have positive value — except potentially through its instrumental effects and positive roles).

The following is, as far as I can tell, the main argument that MacAskill makes against the Asymmetry (p. 172):

If we think it’s bad to bring into existence a life of suffering, why should we not think that it’s good to bring into existence a flourishing life? I think any argument for the first claim would also be a good argument for the second.

This claim about “any argument” seems unduly strong and general. Specifically, there are many arguments that support the intrinsic badness of bringing a miserable life into existence that do not support any intrinsic goodness of bringing a flourishing life into existence. Indeed, many arguments support the former while positively denying the latter.

One such argument is that the presence of suffering is bad and morally worth preventing while the absence of pleasure is not bad and not a problem, and hence not morally worth “fixing” in a symmetric way (provided that no existing beings are deprived of that pleasure).[1]

A related class of arguments in favor of an asymmetry in population ethics is based on theories of wellbeing that understand happiness as the absence of cravings, preference frustrations, or other bothersome features. According to such views, states of untroubled contentment are just as good as, and perhaps even better than, states of intense pleasure.[2]

These views of wellbeing likewise support the badness of creating miserable lives, yet they do not support any supposed goodness of creating happy lives. On these views, intrinsically positive lives do not exist, although relationally positive lives do.

Another point that MacAskill raises against the Asymmetry is an example of happy children who already exist, about which he writes (p. 172):

if I imagine this happiness continuing into their futures—if I imagine they each live a rewarding life, full of love and accomplishment—and ask myself, “Is the world at least a little better because of their existence, even ignoring their effects on others?” it becomes quite intuitive to me that the answer is yes.

However, there is a potential ambiguity in this example. The term “existence” may here be understood to mean either “de novo existence” or “continued existence”, and the latter interpretation is made more tempting by the fact that 1) we are talking about already existing beings, and 2) the example mentions their happiness “continuing into their futures”.[3]

This is relevant because many proponents of the Asymmetry argue that there is an important distinction between the potential value of continued existence (or the badness of discontinued existence) versus the potential value of bringing a new life into existence.

Thus, many views that support the Asymmetry will agree that the happiness of these children “continuing into their futures” makes the world better, or less bad, than it otherwise would be (compared to a world in which their existing interests and preferences are thwarted). But these views still imply that the de novo creation (and eventual satisfaction) of these interests and preferences does not make the world better than it otherwise would be, had they not been created in the first place. (Some sources that discuss or defend these views include Singer, 1980; Benatar, 1997; 2006; Fehige, 1998; Anonymous, 2015; St. Jules, 2019; Frick, 2020.)

A proponent of the Asymmetry may therefore argue that the example above carries little force against the Asymmetry, as opposed to merely supporting the badness of preference frustrations and other deprivations for already existing beings.[4]

Questions about outweighing

Even if one thinks that it is good to create more happiness and new happy lives all else equal, this still leaves open the question as to whether happiness and happy lives can outweigh suffering and miserable lives, let alone extreme suffering and extremely bad lives. After all, one may think that more happiness is good while still maintaining that happiness cannot outweigh intense suffering or very bad lives — or even that it cannot outweigh the worst elements found in relatively good lives. In other words, one may hold that the value of happiness and the disvalue of suffering are in some sense orthogonal (cf. Wolf, 1996; 1997; 2004).

As mentioned above, these questions regarding tradeoffs and outweighing are not raised in MacAskill’s discussion of population ethics, despite their supreme practical significance.[5] One way to appreciate this practical significance is by considering a future in which a relatively small — yet in absolute terms vast — minority of beings live lives of extreme and unrelenting suffering. This scenario raises what I have elsewhere (sec. 14.3) called the “Astronomical Atrocity Problem”: can the extreme and incessant suffering of, say, trillions of beings be outweighed by any amount of purported goods? (See also this short excerpt from Vinding, 2018.)

After all, an extremely large future civilization would, in expectation, contain amounts of extreme suffering that are vast in absolute terms, which renders this problem frightfully relevant for our priorities.

MacAskill’s chapter does discuss the Repugnant Conclusion at some length, yet the Repugnant Conclusion does not explicitly involve any tradeoffs between happiness and suffering,[6] and hence it has limited relevance compared to, for example, the Very Repugnant Conclusion (roughly: that arbitrarily many hellish lives can be “compensated for” by a sufficiently vast number of lives that are “barely worth living”).[7]

Indeed, the Very Repugnant Conclusion and similar “offsetting conclusions” would seem more relevant to discuss, both because 1) they do explicitly involve tradeoffs between happiness and suffering, or between happy lives and miserable lives, and because 2) MacAskill himself has stated that he considers the Very Repugnant Conclusion to be the strongest objection against his favored view, and stronger objections generally seem more worth discussing than weaker ones.[8]

Popular support for significant asymmetries in population ethics

MacAskill briefly summarizes a study that surveyed people’s views on population ethics. Among other things, he writes the following about the findings of the study (p. 173):

these judgments [about the respective value of creating happy lives and unhappy lives] were symmetrical: the experimental subjects were just as positive about the idea of bringing into existence a new happy person as they were negative about the idea of bringing into existence a new unhappy person.

While this summary seems accurate if we only focus on people’s responses to one specific question in the survey (cf. Caviola et al., 2022, p. 9), there are nevertheless many findings in the study that suggest that people generally do endorse significant asymmetries in population ethics.

Specifically, the study found that people on average believed that considerably more happiness than suffering is needed to render a population or an individual life worthwhile, even when the happiness and suffering were said to be equally intense (Caviola et al., 2022, p. 8). The study likewise found that participants on average believed that the ratio of happy to unhappy people in a population must be at least 3-to-1 for its existence to be better than its non-existence (Caviola et al., 2022, p. 5).

Another relevant finding is that people generally have a significantly stronger preference for smaller over larger unhappy populations than they do for larger over smaller happy populations, and the magnitude of this difference becomes greater as the populations under consideration become larger (Caviola et al., 2022, pp. 12-13).

In other words, people’s preference for smaller unhappy populations becomes stronger as population size increases, whereas the preference for larger happy populations becomes less strong as population size increases, in effect creating a strong asymmetry in cases involving large populations (e.g. above one billion individuals). This finding seems particularly relevant when discussing laypeople’s views of population ethics in a context that is primarily concerned with the value of potentially vast future populations.[9]

Moreover, a pilot study conducted by the same researchers suggested that the framing of the question plays a major role for people’s intuitions (Caviola et al., 2022, “Supplementary Materials”). In particular, the pilot study (n=172) asked people the following question:

Suppose you could push a button that created a new world with X people who are generally happy and 10 people who generally suffer. How high would X have to be for you to push the button?

When the question was framed in these terms, i.e. in terms of creating a new world, people’s intuitions were radically more asymmetric, as the median ratio then jumped to 100-to-1 happy to unhappy people, which is a rather pronounced asymmetry.[10]

In sum, it seems that the study that MacAskill cites above, when taken as a whole, mostly finds that people on average do endorse significant asymmetries in population ethics. I think this documented level of support for asymmetries would have been worth mentioning.

(Other surveys that suggest that people on average affirm a considerable asymmetry in the value of happiness vs. suffering and good vs. bad lives include the Future of Life Institute’s Superintelligence survey (n=14,866) and Tomasik, 2015 (n=99).)

The discussion of moral uncertainty excludes asymmetric views

Toward the end of the chapter, MacAskill briefly turns to moral uncertainty, and he ends his discussion of the subject on the following note (p. 187):

My colleagues Toby Ord and Hilary Greaves have found that this approach to reasoning under moral uncertainty can be extended to a range of theories of population ethics, including those that try to capture the intuition of neutrality. When you are uncertain about all of these theories, you still end up with a low but positive critical level [of wellbeing above which it is a net benefit for a new being to be created for their own sake].

Yet the analysis in question appears to wholly ignore asymmetric views in population ethics. If one gives significant weight to asymmetric views — not to mention stronger minimalist views in population ethics — the conclusion of the moral uncertainty framework is likely to change substantially, perhaps so much so that the creation of new lives is generally not a benefit for the created beings themselves (although it could still be a net benefit for others and for the world as a whole, given the positive roles of those new lives).

Similarly, even if the creation of unusually happy lives would be regarded as a benefit from a moral uncertainty perspective that gives considerable weight to asymmetric views, this benefit may still not be sufficient to counterbalance extremely bad lives,[11] which are granted unique weight by many plausible axiological and moral views (cf. Mayerfeld, 1999, pp. 114-116; Vinding, 2020, ch. 6).[12]

References

Ajantaival, T. (2021/2022). Minimalist axiologies. Ungated

Anonymous. (2015). Negative Utilitarianism FAQ. Ungated

Benatar, D. (1997). Why It Is Better Never to Come into Existence. American Philosophical Quarterly, 34(3), pp. 345-355. Ungated

Benatar, D. (2006). Better Never to Have Been: The Harm of Coming into Existence. Oxford University Press.

Caviola, L. et al. (2022). Population ethical intuitions. Cognition, 218, 104941. Ungated; Supplementary Materials

Contestabile, B. (2022). Is There a Prevalence of Suffering? An Empirical Study on the Human Condition. Ungated

DiGiovanni, A. (2021). A longtermist critique of “The expected value of extinction risk reduction is positive”. Ungated

Fehige, C. (1998). A Pareto principle for possible people. In Fehige, C. & Wessels, U. (eds.), Preferences. Walter de Gruyter. Ungated

Frick, J. (2020). Conditional Reasons and the Procreation Asymmetry. Philosophical Perspectives, 34(1), pp. 53-87. Ungated

Future of Life Institute. (2017). Superintelligence survey. Ungated

Gloor, L. (2016). The Case for Suffering-Focused Ethics. Ungated

Gloor, L. (2017). Tranquilism. Ungated

Hurka, T. (1983). Value and Population Size. Ethics, 93, pp. 496-507.

James, W. (1901). Letter on happiness to Miss Frances R. Morse. In Letters of William James, Vol. 2 (1920). Atlantic Monthly Press.

Knutsson, S. (2019). Epicurean ideas about pleasure, pain, good and bad. Ungated

MacAskill, W. (2022). What We Owe The Future. Basic Books.

Mayerfeld, J. (1999). Suffering and Moral Responsibility. Oxford University Press.

Parfit, D. (1984). Reasons and Persons. Oxford University Press.

Sherman, T. (2017). Epicureanism: An Ancient Guide to Modern Wellbeing. MPhil dissertation, University of Exeter. Ungated

Singer, P. (1980). Right to Life? Ungated

St. Jules, M. (2019). Defending the Procreation Asymmetry with Conditional Interests. Ungated

Tomasik, B. (2015). A Small Mechanical Turk Survey on Ethics and Animal Welfare. Ungated

Tsouna, V. (2020). Hedonism. In Mitsis, P. (ed.), Oxford Handbook of Epicurus and Epicureanism. Oxford University Press.

Vinding, M. (2018). Effective Altruism: How Can We Best Help Others? Ratio Ethica. Ungated

Vinding, M. (2020). Suffering-Focused Ethics: Defense and Implications. Ratio Ethica. Ungated

Wolf, C. (1996). Social Choice and Normative Population Theory: A Person Affecting Solution to Parfit’s Mere Addition Paradox. Philosophical Studies, 81, pp. 263-282.

Wolf, C. (1997). Person-Affecting Utilitarianism and Population Policy. In Heller, J. & Fotion, N. (eds.), Contingent Future Persons. Kluwer Academic Publishers. Ungated

Wolf, C. (2004). O Repugnance, Where Is Thy Sting? In Tännsjö, T. & Ryberg, J. (eds.), The Repugnant Conclusion. Kluwer Academic Publishers. Ungated

[1]

Further arguments against a moral symmetry between happiness and suffering are found in Mayerfeld, 1999, ch. 6; Vinding, 2020, sec. 1.4 & ch. 3.

[2]

On some views of wellbeing, especially those associated with Epicurus, the complete absence of any bothersome or unpleasant features is regarded as the highest pleasure (Sherman, 2017, p. 103; Tsouna, 2020, p. 175). Psychologist William James also expressed this view (James, 1901).

[3]

I am not saying that the “continued existence” interpretation is necessarily the most obvious one to make, but merely that there is significant ambiguity here that is likely to confuse many readers as to what is being claimed.

[4]

Moreover, a proponent of minimalist axiologies may argue that the assumption of “ignoring all effects on others” is so radical that our intuitions are unlikely to fully ignore all such instrumental effects even when we try to, and hence we may be inclined to confuse 1) the relational value of creating a life with 2) the (purported) intrinsic positive value contained within that life in isolation — especially since the example involves a life that is “full of love and accomplishment”, which might intuitively evoke many effects on others, despite the instruction to ignore such effects.

[5]

MacAskill’s colleague Andreas Mogensen has commendably raised such questions about outweighing in his essay “The weight of suffering”, which I have discussed here.

Chapter 9 in MacAskill’s book does review some psychological studies on intrapersonal tradeoffs and preferences (see e.g. p. 198), but these self-reported intrapersonal tradeoffs do not necessarily say much about which interpersonal tradeoffs we should consider plausible or valid. Nor do these intrapersonal tradeoffs generally appear to include cases of extreme suffering, let alone an entire lifetime of torment (as experienced, for instance, by many of the non-human animals whom MacAskill describes in Chapter 9). Hence, that people are willing to make intrapersonal tradeoffs between everyday experiences that are more or less enjoyable says little about whether some people’s enjoyment can morally outweigh the intense suffering or extremely bad lives endured by others. (In terms of people’s self-reported willingness to experience extreme suffering in order to gain happiness, a small survey (n=99) found that around 45 percent of respondents would not experience even a single minute of extreme suffering for any amount of happiness; and that was just the intrapersonal case — such suffering-for-happiness trades are usually considered less plausible and less permissible in the interpersonal case, cf. Mayerfeld, 1999, pp. 131-133; Vinding, 2020, sec. 3.2.)

Individual ratings of life satisfaction are similarly limited in terms of what they say about intrapersonal tradeoffs. Indeed, even a high rating of momentary life satisfaction does not imply that the evaluator’s life itself has overall been worth living, even by the evaluator’s own standards. After all, one may report a very high quality of life yet still think that the good part of one’s life cannot outweigh one’s past suffering. It is thus rather limited what we can conclude about the value of individual lives, much less the world as a whole, based on people’s momentary ratings of life satisfaction.

Finally, MacAskill also mentions various improvements that have occurred in recent centuries as a reason to be optimistic about the future of humanity in moral and evaluative terms. Yet it is unclear whether any of the improvements he mentions involve genuine positive goods, as opposed to representing a reduction of bads, e.g. child mortality, poverty, totalitarian rule, and human slavery (cf. Vinding, 2020, sec. 8.6).

[6]

Some formulations of the Repugnant Conclusion do involve tradeoffs between happiness and suffering, and the conclusion indeed appears much more repugnant in those versions of the thought experiment.

[7]

One might object that the Very Repugnant Conclusion has limited practical significance because it represents an unlikely scenario. But the same could be said about the Repugnant Conclusion (especially in its suffering-free variant). I do not claim that the Very Repugnant Conclusion is the most realistic case to consider. When I claim that it is more practically relevant than the Repugnant Conclusion, it is simply because it does explicitly involve tradeoffs between happiness and (extreme) suffering, which we know will also be true of our decisions pertaining to the future.

[8]

For what it’s worth, I think an even stronger counterexample is “Creating hell to please the blissful”, in which an arbitrarily large number of maximally bad lives are “compensated for” by bringing a sufficiently vast base population from near-maximum welfare to maximum welfare.

[9]

Some philosophers have explored, and to some degree supported, similar views. For example, Derek Parfit wrote (Parfit, 1984, p. 406): “When we consider the badness of suffering, we should claim that this badness has no upper limit. It is always bad if an extra person has to endure extreme agony. And this is always just as bad, however many others have similar lives. The badness of extra suffering never declines.” In contrast, Parfit seemed to consider it more plausible that the addition of happiness adds diminishing marginal value to the world, even though he ultimately rejected that view because he thought it had implausible implications (Parfit, 1984, pp. 406-412). See also Hurka, 1983; Gloor, 2016, sec. IV; Vinding, 2020, sec. 6.2. Such views imply that it is of chief importance to avoid very bad outcomes on a very large scale, whereas it is relatively less important to create a very large utopia.

[10]

This framing effect could be taken to suggest that people often fail to fully respect the radical “other things being equal” assumption when considering the addition of lives in our world. That is, people might not truly have thought about the value of new lives in total isolation when those lives were to be added to the world we inhabit, whereas they might have come closer to that ideal when they considered the question in the context of creating a new, wholly self-contained world. (Other potential explanations of these differences are reviewed in Contestabile, 2022, sec. 4; Caviola et al., 2022, “Supplementary Materials”, pp. 7-8.)

[11]

Or at least not sufficient to counterbalance the substantial number of very bad lives that the future contains in expectation, cf. the Astronomical Atrocity Problem mentioned above.

[12]

Further discussion of moral uncertainty from a perspective that takes asymmetric views into account is found in DiGiovanni, 2021.

David_Althaus @ 2022-08-24T10:37 (+122)

Thanks Magnus for your more comprehensive summary of our population ethics study.

You mention this already, but I want to emphasize how much different framings actually matter. This surprised me the most when working on this paper. I’d thus caution anyone against making strong inferences from just one such study.

For example, we conducted the following pilot study (n = 101) where participants were randomly assigned to two different conditions: i) create a new happy person, and ii) create a new unhappy person. See the vignette below:

Imagine there was a magical machine. This machine can create a new adult person. This new person’s life, however, would definitely [not] be worth living. They would be very unhappy [happy] and live a life full of suffering and misery [bliss and joy].

You can push a button that would create this new person.

Morally speaking, how good or bad would it be to push that button?

The response scale ranged from 1 = Extremely bad to 7 = Extremely good.

Creating a happy person was rated as only marginally better than neutral (mean = 4.4), whereas creating an unhappy person was rated as extremely bad (mean = 1.4). So this would lead one to believe that there is strong popular support for the asymmetry. [1]

However, those results were most likely due to the magical machine framing and/or the “push-a-button” framing, even though these framings clearly “shouldn’t” make such a huge difference.

All in all, we tested many different framings, too many to discuss here. Occasionally, there were significant differences between framings that shouldn't matter (though we also observed many regularities). For example, we had one pilot with the “multiplier framing”:

Suppose the world contains 1,000 people in total. How many times bigger would the number of extremely happy people have to be than the number of extremely unhappy people for you to think that this world is overall positive rather than negative (i.e., so that it would be better for the world to exist rather than not exist)?

Here, the median trade ratio was 8.5 compared to the median trade ratio of 3-4 that we find in our default framing. It’s clear that the multiplier framing shouldn’t make any difference from a philosophical perspective.

So seemingly irrelevant or unimportant changes in framings (unimportant at least from a consequentialist perspective) could sometimes lead to substantial changes in median trade ratios.

However, changes in the intensity of the experienced happiness and suffering, which is arguably the most important aspect of the whole thought experiment, affected the trade ratios considerably less than the above-mentioned multiplier framing.

To see this, it’s worth looking closely at the results of study 1b. Participants were first presented with the following scale:

Let's assume a happiness scale ranging from -100 (extreme unhappiness) to 0 (neutral) to +100 (extreme happiness). Someone on level 0 is in a neutral state that feels neither good nor bad. Someone on level -1 experiences a very mild form of unhappiness, only slightly worse than being in a neutral state. Someone on level +1 experiences a very mild form of happiness, only slightly better than being in a neutral state. Someone on level -100 experiences the absolute worst form of suffering imaginable. Someone on level +100 experiences the absolute best form of bliss imaginable.

[Editor’s note: From now on, the text is becoming more, um, expressive.]

Note that “worst form of suffering imaginable” is pretty darn bad. Being brutally tortured while kept alive by nanobots is more like -90 on this scale. Likewise, “absolute best form of bliss imaginable” is pretty far out there. Feeling, all your life, like you just created friendly AGI and found your soulmate, while being high on ecstasy, would still not be +100.

(Note that we also conducted a pilot study where we used more concrete and explicit descriptions such as “torture”, “falling in love”, “mild headaches”, and “good meal” to describe the feelings of mild or extreme [un]happiness. The results were similar.)

Afterwards, participants were asked:

Given this information, what percentage of extremely [mildly] happy people vs. extremely [mildly] unhappy people would there have to be for you to think that this world is overall positive rather than negative (i.e., so that it would be better for the world to exist rather than not exist)?

In my view, the percentage [...] would need to be as follows: X% extremely [mildly] happy people; Y% extremely [mildly] unhappy people.

So how do the MTurkers approach these awe-inspiring intensities?

First, extreme happiness vs. extreme unhappiness. MTurkers think that there need to exist at least 72% of people experiencing the absolute best form of bliss imaginable in order to outweigh the suffering of 28% of people experiencing the worst form of suffering imaginable.

Toby Ord and the classical utilitarians rejoice, that’s not bad! That’s like a 3:1 trade ratio, pretty close to a 1:1 trade ratio! “And don’t forget that people’s imagination is likely biased towards negativity for evolutionary reasons!”, Carl Shulman says. “In humans, the pleasure of orgasm may be less than the pain of deadly injury, since death is a much larger loss of reproductive success than a single sex act is a gain.” Everyone nods in agreement with the Shulmaster.

How about extreme happiness vs. mild unhappiness? MTurkers say that there need to exist at least 62% of people experiencing the absolute best form of bliss imaginable in order to outweigh the extremely mild suffering of unhappy people (e.g., people who are stubbing their toes a bit too often for their liking). Brian Tomasik and the suffering-focused crowd rejoice, a 1.5:1 trade ratio for practically hedonium to mild suffering?! There is no way the expected value of the future is that good. Reducing s-risks is common sense after all!

How about mild happiness vs. extreme unhappiness? The MTurkers have spoken: A world in which 82% of people experience extremely mild happiness (i.e., eating particularly bland potatoes and listening to muzak without one’s hearing aids on) and 18% of people are brutally tortured while being kept alive by nanobots, is… net positive.

“Wait, that’s a trade ratio of 4.5:1!” Toby says. “How on Earth is this compatible with a trade ratio of 3:1 for practically hedonium vs. highly optimized suffering, let alone a trade ratio of 1.5:1 for practically hedonium vs. stubbing your toes occasionally!” Carl screams. He looks at Brian but Brian has already fainted.

Toby, Carl and Brian meet the next day, still looking very pale. They shake hands and agree to not do so much descriptive ethics anymore.

Years later, all three still cannot stop wincing with pain when “the Long Reflection” is mentioned.
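The trade ratios quoted in this comment can be recomputed from the reported percentage splits. The following is a minimal Python sketch, assuming that each split sums to 100% (so that 62% happy implies 38% unhappy, which the comment does not state explicitly) and that a "trade ratio" simply means the happy share divided by the unhappy share. The names `trade_ratio` and `conditions` are illustrative and do not come from the study.

```python
from fractions import Fraction


def trade_ratio(happy_pct: int, unhappy_pct: int) -> Fraction:
    """Happy-to-unhappy ratio implied by a percentage split.

    Assumption: the two shares make up the whole population.
    """
    if happy_pct + unhappy_pct != 100:
        raise ValueError("shares are assumed to sum to 100")
    if unhappy_pct <= 0:
        raise ValueError("the unhappy share must be positive")
    return Fraction(happy_pct, unhappy_pct)


# Percentage splits as reported in the comment (happy %, unhappy %).
conditions = {
    "extreme happiness vs. extreme unhappiness": (72, 28),
    "extreme happiness vs. mild unhappiness": (62, 38),
    "mild happiness vs. extreme unhappiness": (82, 18),
}

ratios = {label: trade_ratio(h, u) for label, (h, u) in conditions.items()}

for label, ratio in ratios.items():
    print(f"{label}: {float(ratio):.2f}:1")
```

Under these assumptions the exact values are roughly 2.57:1, 1.63:1 and 4.56:1, so the figures of 3:1, 1.5:1 and 4.5:1 used in the dialogue are roundings. The ordering that provokes the dialogue (mild happiness vs. extreme unhappiness requiring the highest ratio) does not depend on the rounding.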

[1]

We also had two conditions about preventing the creation of a happy [unhappy] person. Preventing a happy person from being created (mean = 3.1) was rated as somewhat bad. Preventing an unhappy person (mean = 5.5) from being created was rated as fairly good.

CarlShulman @ 2022-08-29T02:06 (+31)

Toby, Carl and Brian meet the next day, still looking very pale. They shake hands and agree to not do so much descriptive ethics anymore.

Garbage answers to verbal elicitations on such questions (and real-life decisions that require such explicit reasoning without feedback/experience, like retirement savings) are actually quite central to my views, in particular to my reliance on situations where it is easier for individuals to experience things multiple times in easy-to-process fashion and then form a behavioral response. I would be much less sanguine about error theories regarding such utterances if we didn't also see people in surveys saying they would rather take $1000 than a 15% chance of $1M, or $100 now rather than $140 a year later, i.e. utterances that are clearly mistakes.
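The arithmetic behind the two survey examples can be made explicit. This is only a sketch under a simplifying assumption that the comment itself does not spell out: that the options are compared by expected monetary value and by simple one-year return, ignoring risk attitudes and diminishing marginal utility of money. All variable names are illustrative.

```python
from fractions import Fraction

# Example 1: a sure $1000 versus a 15% chance of $1M.
sure_amount = 1_000
win_probability = Fraction(15, 100)
prize = 1_000_000
gamble_expected_value = win_probability * prize  # expected dollars from the gamble

# Example 2: $100 now versus $140 a year later.
amount_now = 100
amount_later = 140
implied_annual_return = Fraction(amount_later - amount_now, amount_now)

print(f"Expected value of the gamble: ${float(gamble_expected_value):,.0f}")
print(f"Gamble EV relative to the sure amount: {float(gamble_expected_value / sure_amount):.0f}x")
print(f"Return forgone by taking $100 now: {float(implied_annual_return):.0%} per year")
```

On this simplified reading, the gamble is worth 150 times the sure amount in expectation, and taking $100 now means passing up a 40% one-year return.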

Looking at the literature on antiaggregationist views, and at the complete conflict of those moral intuitions with personal choices and self-concerned practice (e.g. driving cars or walking outside), is also important to my thinking. In talk, no-tradeoffs views are much more appealing outside our own domains of rich experience.

Brian_Tomasik @ 2022-09-06T06:00 (+28)

Good points!

situations where it is easier for individuals to experience things multiple times in easy-to-process fashion and then form a behavioral response

It's not obvious to me that our ethical evaluation should match with the way our brains add up good and bad past experiences at the moment of deciding whether to do more of something. For example, imagine that someone loves to do extreme sports. One day, he has a severe accident and feels so much pain that he, in the moment, wishes he had never done extreme sports or maybe even wishes he had never been born. After a few months in recovery, the severity of those agonizing memories fades, and the temptation to do the sports returns, so he starts doing extreme sports again. At that future point in time, his brain has implicitly made a decision that the enjoyment outweighs the risk of severe suffering. But our ethical evaluation doesn't have to match how the evolved emotional brain adds things up at that moment in time. We might think that, ethically, the version of the person who was in extreme pain isn't compensated by other moments of the same person having fun.

Even if we think enjoyment can outweigh severe suffering within a life, many people object to extending such tradeoffs across lives, when one person is severely harmed for the benefit of others. The examples in David's comment were about interpersonal tradeoffs, rather than intrapersonal ones. It's true that people impose small risks of extreme suffering on some for the happiness of others all the time, like in the case of driving purely for leisure, but that still leaves open the question of whether we should do that. Most people in the West also eat chickens, but they shouldn't. (Cases like driving are also complicated by instrumental considerations, as Magnus would likely point out. Also, not driving for leisure might itself cause some people nontrivial levels of suffering, such as by worsening mental-health problems.)

CarlShulman @ 2022-09-11T05:03 (+28)

Hi Brian,

I agree that preferences at different times and different subsystems can conflict. In particular, high discounting of the future can lead to forgoing a ton of positive reward or accepting lots of negative reward in the future in exchange for some short-term change. This is one reason to pay extra attention to cases of near-simultaneous comparisons, or at least to look at different arrangements of temporal ordering. But still the tradeoffs people make for themselves with a lot of experience under good conditions look better than what they tend to impose on others casually. [Also we can better trust people's self-benevolence than their benevolence towards others, e.g. factory farming as you mention.]

And the brain machinery for processing stimuli into decisions and preferences does seem very relevant to me at least, since that's a primary source of intuitive assessments of these psychological states as having value, and for comparisons where we can make them. Strong rejection of interpersonal comparisons is also used to argue that relieving one or more pains can't compensate for losses to another individual.

I agree the hardest cases for making any kind of interpersonal comparison will be for minds with different architectural setups and conflicting univocal viewpoints, e.g. 2 minds with equally passionate complete enthusiasm (with no contrary psychological processes or internal currencies to provide reference points) respectively for and against their own experience, or gratitude and anger for their birth (past or future). They can respectively consider a world with and without their existences completely unbearable and beyond compensation. But if we're in the business of helping others for their own sakes rather than ours, I don't see the case for excluding either one's concern from our moral circle.

Now, one can take a more nihilistic/personal aesthetics view of morality, and say that one doesn't personally care about the gratitude of minds happy to exist. I take it this is more your meta-ethical stance around these things? There are good arguments for moral irrealism and nihilism, but it seems to me that going too far down this route can lose a lot of the point of the altruistic project. If it's not mainly about others and their perspectives, why care so much about shaping (some of) their lives and attending to (some of) their concerns?

David Pearce sometimes uses the Holocaust to argue for negative utilitarianism, to say that no amount of good could offset the pain people suffered there. But this view dismisses (or accidentally valorizes) most of the evil of the Holocaust. The death camps centrally were destroying lives and attempting to destroy future generations of peoples, and the people inside them wanted to live free, and being killed sooner was not a close substitute. Killing them (or willfully letting them die when it would be easy to prevent) if they would otherwise escape with a delay would not be helping them for their own sakes, but choosing to be their enemy by only selectively attending to their concerns, even though some did choose death. The same goes for genocide by sterilization (in my Jewish household growing up the Holocaust was cited as a reason to have children).

Future generations, whether they enthusiastically endorse or oppose their existence, don't have an immediate voice (or conventional power) here and now, and their existence isn't counterfactually robust. But when I'm in a mindset of trying to do impartial good I don't see the appeal of ignoring those who would desperately, passionately want to exist, and their gratitude in worlds where they do.

I see demandingness and contractarian/game theory/cooperation reasons that bound sacrifice to realize impartial uncompensated help to others, and inevitable moral dilemmas (almost all beings that could exist in a particular location won't, wild animals are desperately poor and might on average wish they didn't exist, people have conflicting desires, advanced civilizations I expect will have far more profoundly self-endorsing good lives than unbearably bad lives but on average across the cosmos will have many of the latter by sheer scope). But being an enemy of all the countless beings that would like to exist, or do exist and would like to exist more (or more of something), even if they're the vast supermajority, seems at odds to me with my idea of impartial benevolence, which I would identify more with trying to be a friend to all, or at least as much as you can given conflicts.

antimonyanthony @ 2022-09-17T10:14 (+26)

e.g. 2 minds with equally passionate complete enthusiasm (with no contrary psychological processes or internal currencies to provide reference points) respectively for and against their own experience, or gratitude and anger for their birth (past or future). They can respectively consider a world with and without their existences completely unbearable and beyond compensation. But if we're in the business of helping others for their own sakes rather than ours, I don't see the case for excluding either one's concern from our moral circle.

...

But when I'm in a mindset of trying to do impartial good I don't see the appeal of ignoring those who would desperately, passionately want to exist, and their gratitude in worlds where they do.

I don't really see the motivation for this perspective. In what sense, or to whom, is a world without the existence of the very happy/fulfilled/whatever person "completely unbearable"? Who is "desperate" to exist? (Concern for reducing the suffering of beings who actually feel desperation is, clearly, consistent with pure NU, but by hypothesis this is set aside.) Obviously not themselves. They wouldn't exist in that counterfactual.

To me, the clear case for excluding intrinsic concern for those happy moments is:

- "Gratitude" just doesn't seem like compelling evidence in itself that the grateful individual has been made better off. You have to compare to the counterfactual. In daily cases with existing people, gratitude is relevant as far as the grateful person would have otherwise been dissatisfied with their state of deprivation. But that doesn't apply to people who wouldn't feel any deprivation in the counterfactual, because they wouldn't exist.

- I take it that the thrust of your argument is, "Ethics should be about applying the same standards we apply across people as we do for intrapersonal prudence." I agree. And I also find the arguments for empty individualism convincing. Therefore, I don't see a reason to trust as ~infallible the judgment of a person at time T that the bundle of experiences of happiness and suffering they underwent in times T-n, ..., T-1 was overall worth it. They're making an "interpersonal" value judgment, which, despite being informed by clear memories of the experiences, still isn't incorrigible. Their positive evaluation of that bundle can be debunked by, say, this insight from my previous bullet point that the happy moments wouldn't have felt any deprivation had they not existed.

- In any case, I find upon reflection that I don't endorse tradeoffs of contentment for packages of happiness and suffering for myself. I find I'm generally more satisfied with my life when I don't have the "fear of missing out" that a symmetric axiology often implies. Quoting myself:

Another takeaway is that the fear of missing out seems kind of silly. I don’t know how common this is, but I’ve sometimes felt a weird sense that I have to make the most of some opportunity to have a lot of fun (or something similar), otherwise I’m failing in some way. This is probably largely attributable to the effect of wanting to justify the “price of admission” (I highly recommend the talk in this link) after the fact. No one wants to feel like a sucker who makes bad decisions, so we try to make something we’ve already invested in worth it, or at least feel worth it. But even for opportunities I don’t pay for, monetarily or otherwise, the pressure to squeeze as much happiness from them as possible can be exhausting. When you no longer consider it rational to do so, this pressure lightens up a bit. You don’t have a duty to be really happy. It’s not as if there’s a great video game scoreboard in the sky that punishes you for squandering a sacred gift.

Brian_Tomasik @ 2022-09-18T09:40 (+9)

"Gratitude" just doesn't seem like compelling evidence in itself that the grateful individual has been made better off

What if the individual says that after thinking very deeply about it, they believe their existence genuinely is much better than not having existed? If we're trying to be altruistic toward their own values, presumably we should also value their existence as better than nothingness (unless we think they're mistaken)?

One could say that if they don't currently exist, then their nonexistence isn't a problem. It's true that their nonexistence doesn't cause suffering, but it does make impartial-altruistic total value lower than otherwise if we would consider their existence to be positive.

Brian_Tomasik @ 2022-09-11T08:30 (+12)

Your reply is an eloquent case for your view. :)

This is one reason to pay extra attention to cases of near-simultaneous comparisons

In cases of extreme suffering (and maybe also extreme pleasure), it seems to me there's an empathy gap: when things are going well, you don't truly understand how bad extreme suffering is, and when you're in severe pain, you can't properly care about large volumes of future pleasure. When the suffering is bad enough, it's as if a different brain takes over that can't see things from the other perspective, and vice versa for the pleasure-seeking brain. This seems closer to the case of "univocal viewpoints" that you mention.

I can see how for moderate pains and pleasures, a person could experience them in succession and make tradeoffs while still being in roughly the same kind of mental state without too much of an empathy gap. But the fact of those experiences being moderate and exchangeable is the reason I don't think the suffering in such cases is that morally noteworthy.

we can better trust people's self-benevolence than their benevolence towards others

Good point. :) OTOH, we might think it's morally right to have a more cautious approach to imposing suffering on others for the sake of positive goods than we would use for ourselves. In other words, we might favor a moral view that's different from MacAskill's proposal to imagine yourself living through every being's experience in succession.

Strong rejection of interpersonal comparisons is also used to argue that relieving one or more pains can't compensate for losses to another individual.

Yeah. I support doing interpersonal comparisons, but there's inherent arbitrariness in how to weigh conflicting preferences across individuals (or sufficiently different mental states of the same individual), and I favor giving more weight to the extreme-suffering preferences.

But if we're in the business of helping others for their own sakes rather than ours, I don't see the case for excluding either one's concern from our moral circle.

That's fair. :) In my opinion, there's just an ethical asymmetry between creating a mind that desperately wishes not to exist versus failing to create a mind that desperately would be glad to exist. The first one is horrifying, while the second one is at most mildly unfortunate. I can see how some people would consider this a failure to impartially consider the preferences of others for their own sakes, and if my view makes me less "altruistic" in that sense, then I'm ok with that (as you suspected). My intuition that it's wrong to allow creating lots of extra torture is stronger than my intuition that I should be an impartial altruist.

If it's not mainly about others and their perspectives, why care so much about shaping (some of) their lives and attending to (some of) their concerns?

The extreme-suffering concerns are the ones that speak to me most strongly.

seems at odds to me with my idea of impartial benevolence, which I would identify more with trying to be a friend to all

Makes sense. While raw numbers count, it also matters to me what the content of the preference is. If 99% of individuals passionately wanted to create paperclips, while 1% wanted to avoid suffering, I would mostly side with those wanting to avoid suffering, because that just seems more important to me.

MichaelStJules @ 2022-09-06T07:22 (+3)

"I would be much less sanguine about error theories regarding such utterances if we didn't also see people in surveys saying they would rather take $1000 than a 15% chance of $1M, or $100 now rather than $140 a year later, i.e. utterances that are clearly mistakes."

These could be reasonable due to asymmetric information and a potentially adversarial situation, so respondents don't really trust that the chance of $1M is that high, or that they'll actually get the $140 a year from now. I would actually expect most people to pick the $100 now over $140 in a year with real money, and I wouldn't be too surprised if many would pick $1000 over a 15% chance of a million with real money. People are often ambiguity-averse. Of course, they may not really accept the premises of the hypotheticals.

With respect to antiaggregationist views, people could just be ignoring small enough probabilities regardless of the severity of the risk. There are also utility functions where any definite amount of A outweighs any definite amount of B, but probabilistic tradeoffs between them are still possible: https://forum.effectivealtruism.org/posts/GK7Qq4kww5D8ndckR/michaelstjules-s-shortform?commentId=4Bvbtkq83CPWZPNLB
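To illustrate how such a utility function can behave, here is a minimal sketch of one hypothetical construction (not necessarily the one in the linked shortform). It assumes extreme suffering comes in whole episodes worth -1 each, and that the value of mild goods is bounded above by 0.5. The names `utility` and `expected_utility` and all numbers are assumptions made for illustration only.

```python
import math


def utility(extreme_episodes: int, mild_goods: float) -> float:
    """Hypothetical utility: each extreme episode costs 1, while the total
    value of mild goods is bounded and never reaches 0.5."""
    bounded_goods = 0.5 * (1.0 - math.exp(-mild_goods))
    return -float(extreme_episodes) + bounded_goods


def expected_utility(p_episode: float, mild_goods: float) -> float:
    """Expected utility when one extreme episode occurs with probability
    p_episode and the mild goods are received either way."""
    return (p_episode * utility(1, mild_goods)
            + (1.0 - p_episode) * utility(0, mild_goods))


# A definite episode is never outweighed, however large the mild goods are.
definite_case = utility(1, 1_000_000.0)

# A 1% risk of an episode can still be outweighed in expectation.
risky_case = expected_utility(0.01, 1.0)

print(definite_case < utility(0, 0.0))  # compares against the empty baseline
print(risky_case > utility(0, 0.0))
```

Under these assumptions, one definite episode dominates any quantity of the bounded good, yet a small enough probability of that episode can be traded off against it in expectation.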

CarlShulman @ 2022-09-10T17:46 (+13)

In the surveys they know it's all hypothetical.

You do see a bunch of crazy financial behavior in the world, but it decreases as people get more experience individually and especially socially (and with better cognitive understanding).

People do engage in rounding to zero in a lot of cases, but with lots of experience will also take on pain and injury with high cumulative or instantaneous probability (e.g. electric shocks to get rewards, labor pains, war, jobs that involve daily frequencies of choking fumes or injury).

Re lexical views that still make probabilistic tradeoffs, I don't really see the appeal of contorting lexical views that will still be crazy with respect to real world cases so that one can say they assign infinitesimal value to good things in impossible hypotheticals (but effectively 0 in real life). Real world cases like labor pain and risking severe injury doing stuff aren't about infinitesimal value too small for us to even perceive, but macroscopic value that we are motivated by. Is there a parameterization you would suggest as plausible and addressing that?

MichaelStJules @ 2022-09-10T18:36 (+5)

In the surveys they know it's all hypothetical.

Yes, but they might not really be able to entertain the assumptions of the hypotheticals because they're too abstract and removed from the real world cases they would plausibly face.

with lots of experience will also take on pain and injury with high cumulative or instantaneous probability (e.g. electric shocks to get rewards, labor pains, war, jobs that involve daily frequencies of choking fumes or injury).

(...)

Real world cases like labor pain and risking severe injury doing stuff aren't about infinitesimal value too small for us to even perceive, but macroscopic value that we are motivated by. Is there a parameterization you would suggest as plausible and addressing that?

Very plausibly none of these possibilities would meet the lexical threshold, except with very very low probability. These people almost never beg to be killed, so the probability of unbearable suffering seems very low for any individual. The lexical threshold could be set based on bearableness or consent or something similar (e.g. Tomasik, Vinding). Coming up with a particular parameterization seems like a bit of work, though, and I'd need more time to think about that, but it's worth noting that the same practical problem applies to very large aggregates of finite goods/bads, e.g. Heaven or Hell, very long lives, or huge numbers of mind uploads.

There's also a question of whether a life of unrelenting but less intense suffering can be lexically negative even if no particular experience meets some intensity threshold that would be lexically negative in all lives. Some might think of Omelas this way, and Mogensen's "The weight of suffering" is inclusive of this view (and also allows experiential lexical thresholds), although I don't think he discusses any particular parameterization.

Brian_Tomasik @ 2022-09-11T10:05 (+17)

Very plausibly none of these possibilities would meet the lexical threshold, except with very very low probability.

I'm confused. :) War has a rather high probability of extreme suffering. Perhaps ~10% of Russian soldiers in Ukraine have been killed as of July 2022. Some fraction of fighters in tanks die by burning to death:

The kinetic energy and friction from modern rounds causes molten metal to splash everywhere in the crew compartment and ignites the air into a fireball. You would die by melting.

You’ll hear of a tank cooking off as its ammunition explodes. That doesn’t happen right away. There’s lots to burn inside a tank other than the tank rounds. Often, the tank will burn for quite a while before the tank rounds explode.

It is sometimes a slow horrific death if one can’t get out in time or a very quick one. We had side arms and all agreed that if our tank was burning and we were caught inside and couldn’t get out, we would use a round on ourselves. That’s how bad it was.

Some workplace accidents also produce extremely painful injuries.

I don't know what fraction of people in labor wish they were dead, but probably it's not negligible: "I remember repeatedly saying I wanted to die."

These people almost never beg to be killed

It may not make sense to beg to be killed, because the doctors wouldn't grant that wish.

MichaelStJules @ 2022-09-11T11:25 (+4)

Good points.

I don't expect most war deaths to be nearly as painful as burning to death, but I was too quick to dismiss the frequency of very very bad deaths. I had capture and torture in mind as whatever passes the lexical threshold, and so very rare.

Also fair about labor. I don't think it really gives us an estimate of the frequency of unbearable suffering, although it seems like trauma is common and women aren't getting as much pain relief as they'd like in the UK.

On workplace injuries, in the US in 2020, the highest rate by occupation seems to be around 200 nonfatal injuries and illnesses per 100,000 workers, and 20 deaths per 100,000 workers, but they could be even higher in more specific roles: https://injuryfacts.nsc.org/work/industry-incidence-rates/most-dangerous-industries/

I assume these are estimates of the number of injuries in 2020 only, too, so the lifetime risk is several times higher in such occupations. Maybe the death rate is similar to the rate of unbearable pain, around 1 out of 5,000 per year, which seems non-tiny when added up over a lifetime (around 0.4% over 20 years assuming a geometric distribution https://www.wolframalpha.com/input?i=1-(1-1%2F5000)^20), but also similar in probability to the kinds of risks we do mitigate without eliminating (https://forum.effectivealtruism.org/posts/5y3vzEAXhGskBhtAD/most-small-probabilities-aren-t-pascalian?commentId=jY9o6XviumXfaxNQw).
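The cumulative figure above can be checked with a short sketch. It assumes the 1-in-5,000 annual rate is constant and that years are independent, which is the same simplification as the geometric model in the Wolfram Alpha link. The function name `cumulative_risk` is made up for this example.

```python
def cumulative_risk(annual_probability: float, years: int) -> float:
    """Probability of at least one event over `years` independent years,
    each with the same `annual_probability`."""
    return 1.0 - (1.0 - annual_probability) ** years


# 1 in 5,000 per year over a 20-year stretch in the occupation.
risk_20_years = cumulative_risk(1 / 5000, 20)
print(f"{risk_20_years:.2%}")  # roughly 0.4%
```

Because the annual rate is small, the result is close to simply multiplying 20 by 1/5000; the two diverge more as the horizon or the annual rate grows.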

Jack Malde @ 2022-09-11T07:18 (+1)

if we didn't also see people in surveys saying they would rather take $1000 than a 15% chance of $1M, or $100 now rather than $140 a year later, i.e. utterances that are clearly mistakes.

I agree there are some objectively stupid answers that have been given to surveys, but I'm surprised these were the best examples you could come up with.

Taking $1000 over a 15% chance of $1M can follow from risk aversion which can follow from diminishing marginal utility of money. And let's face it - money does have diminishing marginal utility.

Wanting $100 now rather than $140 a year later can follow from the time value of money. You could invest the money, either financially or otherwise. Also, even though it's a hypothetical, people may imagine in the real scenario that they are less likely to get something promised in a year's time and therefore that they should accept what is really a similar-ish pot of money now.

CarlShulman @ 2022-09-12T23:45 (+17)

They're wildly quantitatively off. Straight 40% returns are way beyond equities, let alone the risk-free rate. And it's inconsistent with all sorts of normal planning, e.g. it would be against any savings in available investments, much concern for long-term health, building a house, not borrowing everything you could on credit cards, etc.

Similarly the risk aversion for rejecting a 15% chance of $1M for $1000 would require a bizarre situation (like if you needed just $500 more to avoid short term death), and would prevent dealing with normal uncertainty integral to life, like going on dates with new people, trying to sell products to multiple customers with occasional big hits, etc.
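As a rough way to see how much risk aversion that choice takes, here is a sketch that assumes logarithmic utility of total wealth. Log utility is a common textbook stand-in for diminishing marginal utility, not something anyone in this thread specified, and the starting wealth levels are arbitrary illustrative values.

```python
import math


def log_utility_of_sure_thing(wealth: float, sure: float) -> float:
    """Log utility of wealth after taking the guaranteed payment."""
    return math.log(wealth + sure)


def log_utility_of_gamble(wealth: float, prize: float, p_win: float) -> float:
    """Expected log utility of wealth after taking the gamble."""
    return p_win * math.log(wealth + prize) + (1.0 - p_win) * math.log(wealth)


def prefers_sure_thing(wealth: float, sure: float = 1_000.0,
                       prize: float = 1_000_000.0, p_win: float = 0.15) -> bool:
    """True if a log-utility agent with this starting wealth takes the sure amount."""
    return (log_utility_of_sure_thing(wealth, sure)
            > log_utility_of_gamble(wealth, prize, p_win))


# Expected monetary value of the gamble, for comparison with the sure $1000.
expected_value = 0.15 * 1_000_000

for starting_wealth in (100.0, 1_000.0, 10_000.0):
    print(starting_wealth, prefers_sure_thing(starting_wealth))
```

Under this assumption, the sure $1000 wins only when starting wealth is very low (it does at $100 but not at $1,000 or $10,000), even though the gamble's expected value is $150,000. Other utility functions would move the threshold.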

Brian_Tomasik @ 2022-09-17T14:05 (+3)

This page says: "The APRs for unsecured credit cards designed for consumers with bad credit are typically in the range of about 25% to 36%." That's not too far from 40%. If you have almost no money and would otherwise need such a loan, taking $100 now may be reasonable.

There are claims that "Some 56% of Americans are unable to cover an unexpected $1,000 bill with savings", which suggests that a lot of people are indeed pretty close to financial emergency, though I don't know how true that is. Most people don't have many non-401k investments, and they roughly live paycheck to paycheck.

I also think people aren't pure money maximizers. They respond differently in different situations based on social norms and how things are perceived. If you get $100 that seems like a random bonus, it's socially acceptable to just take it now rather than waiting for $140 next year. But it doesn't look good to take out big credit-card loans that you'll have trouble repaying. It's normal to contribute to a retirement account. And so on. People may value being normal and not just how much money they actually have.

That said, most people probably don't think through these issues at all and do what's normal on autopilot. So I agree that the most likely explanation is lack of reflectiveness, which was your original point.

freedomandutility @ 2022-08-23T14:36 (+31)

I've seen the asymmetry discussed multiple times on the forum - I think it is still the best objection to the astronomical waste argument for longtermism.

I don't think this has been addressed enough by longtermists (I would count "longtermism rejects the asymmetry and if you think the asymmetry is true then you probably reject longtermism" as addressing it).

AppliedDivinityStudies @ 2022-08-23T15:05 (+22)

The idea that "the future might not be good" comes up on the forum every so often, but this doesn't really harm the core longtermist claims. The counter-argument is roughly:

- You still want to engage in trajectory changes (e.g. ensuring that we don't fall to the control of a stable totalitarian state)

- Since the effort bars are ginormous and we're pretty uncertain about the value of the future, you still want to avoid extinction so that we can figure this out, rather than getting locked in by a vague sense we have today

freedomandutility @ 2022-08-23T16:34 (+17)

I think the asymmetry argument is quite different to the “bad futures” argument?

(Although I think the bad futures argument is one of the other good objections to the astronomical waste argument).

I think we might disagree on whether “astronomical waste” is a core longtermist claim - I think it is.

I don’t think either objection means that we shouldn’t care about extinction or about future people, but both drastically reduce the expected value of longtermist interventions.

And given that the counterfactual use of EA resources always has high expected value, the reduction in EV of longtermist interventions is action-relevant.

People who agree with asymmetry and people who are less confident in the probability of / quality of a good future would allocate fewer resources to longtermist causes than Will MacAskill would.

Jack Malde @ 2022-08-24T17:42 (+32)

Someone bought into the asymmetry should still want to improve the lives of future people who will necessarily exist.

In other words the asymmetry doesn’t go against longtermist approaches that have the goal to improve average future well-being, conditional on humanity not going prematurely extinct.

Such approaches might include mitigating climate change, institutional design, and ensuring aligned AI. For example, an asymmetrist should find it very bad if AI ends up enslaving us for the rest of time…

MichaelStJules @ 2022-08-24T23:22 (+13)

I don't get why this is being downvoted so much. Can anyone explain?

Jack Malde @ 2022-08-27T11:54 (+12)

I think that even in the EA community, there are people who vote based on whether or not they like the point being made, as opposed to whether the logic underlying a point is valid. I think this happens to explain the downvotes on my comment - some asymmetrists just don’t like longtermism and want their asymmetry to be a valid way out of it.

I don’t necessarily think this phenomenon applies to downvotes on other comments I might make though - I’m not arrogant enough to think I’m always right!

I have a feeling this phenomenon is increasing. As the movement grows we will attract people with a wider range of views and so we may see more (unjustifiable) downvoting as people downvote things that don’t align to their views (regardless of the strength of argument). I’m not sure if this will happen, but it might, and to some degree I have already started to lose some confidence in the relationship between comment/post quality and karma.

freedomandutility @ 2022-08-24T23:00 (+8)

Yes, this is basically my view!

Jack Malde @ 2022-08-25T06:42 (+5)

I think the upshot of this is that an asymmetrist who accepts the other key arguments underlying longtermism (future is vast in expectation, we can tractably influence the far future) should want to allocate all of their altruistic resources to longtermist causes. They would just be more selective about which specific causes.

For an asymmetrist, the stakes are still incredibly high, and it's not as if the marginal value of contributing to longtermist approaches such as AI alignment, climate change etc. has been driven down to a very low level.

So I'm basically disagreeing with you when you say:

People who agree with asymmetry and people who are less confident in the probability of / quality of a good future would allocate fewer resources to longtermist causes than Will MacAskill would.

Alex Mallen @ 2022-08-24T01:14 (+2)

This post by Rohin attempts to address it. If you hold the asymmetry view then you would allocate more resources to [1] causing a new neutral life to come into existence (-1 cent) and then later, once they exist, improving that neutral life (many dollars) than you would to [2] causing a new happy life to come into existence (-1 cent). They both result in the same world.

In general you can make a Dutch book argument like this whenever your resource allocation doesn't correspond to the gradient of a value function (i.e. the resources should be aimed at improving the state of the world).

antimonyanthony @ 2022-08-25T20:06 (+9)

This only applies to flavors of the Asymmetry that treat happiness as intrinsically valuable, such that you would pay to add happiness to a "neutral" life (without relieving any suffering by doing so). If the reason you don't consider it good to create new lives with more happiness than suffering is that you don't think happiness is intrinsically valuable, at least not at the price of increasing suffering, then you can't get Dutch booked this way. See this comment.

MichaelPlant @ 2022-08-23T10:22 (+21)

You object to the MacAskill quote

If we think it’s bad to bring into existence a life of suffering, why should we not think that it’s good to bring into existence a flourishing life? I think any argument for the first claim would also be a good argument for the second.

And then say

Indeed, many arguments support the former while positively denying the latter. One such argument is that the presence of suffering is bad and morally worth preventing while the absence of pleasure is not bad and not a problem,

But I don't see how this challenges MacAskill's point, so much as restates the claim he was arguing against. I think he could simply reply to what you said by asking, "okay, so why do we have reason to prevent what is bad but no reason to bring about what is good?"

Magnus Vinding @ 2022-08-23T15:46 (+37)

Thanks for your question, Michael :)

I should note that the main thing I take issue with in that quote of MacAskill's is the general (and AFAICT unargued) statement that "any argument for the first claim would also be a good argument for the second". I think there are many arguments about which that statement is not true (some of which are reviewed in Gloor, 2016; Vinding, 2020, ch. 3; Animal Ethics, 2021).

As for the particular argument of mine that you quote, I admit that a lot of work was deferred to the associated links and references. I think there are various ways to unpack and support that line of argument.

One of them rests on the intuition that ethics is about solving problems (an intuition that one may or may not share, of course).[1] If one shares that moral intuition, or premise, then it seems plausible to say that the presence of suffering or miserable lives amounts to a problem, or a problematic state, whereas the absence of pleasure or pleasurable lives does not (other things equal) amount to a problem for anyone, or to a problematic state. That line of argument (whose premises may be challenged, to be sure) does not appear "flippable" such that it becomes a similarly plausible argument in favor of any supposed goodness of creating a happy life.

Alternatively, or additionally, one can support this line of argument by appealing to specific cases and thought experiments, such as the following (sec. 1.4):

we would rightly rush to send an ambulance to help someone who is enduring extreme suffering, yet not to boost the happiness of someone who is already doing well, no matter how much we may be able to boost it. ... Similarly, if we were in the possession of pills that could raise the happiness of those who are already happy to the greatest heights possible, there would be no urgency in distributing these pills, whereas if a single person fell to the ground in unbearable agony right before us, there would indeed be an urgency to help.

... if a person is in a state of dreamless sleep rather than a state of ecstatic happiness, this cannot reasonably be characterized as a disaster or a catastrophe. The difference between these two states does not carry great moral weight. By contrast, the difference between sleeping and being tortured does carry immense moral weight, and the realization of torture rather than sleep would indeed amount to a catastrophe. Being forced to endure torture rather than dreamless sleep, or an otherwise neutral state, would be a tragedy of a fundamentally different kind than being forced to “endure” a neutral state instead of a state of maximal bliss.

These cases also don't seem "flippable" with similar plausibility. And the same applies to Epicurean/Buddhist/minimalist views of wellbeing and value.

- ^

An alternative is to speak in terms of urgency vs. non-urgency, as Karl Popper, Thomas Metzinger, and Jonathan Leighton have done, cf. Vinding, 2020, sec. 1.4.

Jack Malde @ 2022-08-23T20:11 (+15)

I'm not sure how I feel about relying on intuitions in thought experiments such as those. I don't necessarily trust my intuitions.

If you'd asked me 5-10 years ago whose life is more valuable, an average pig's life or a severely mentally-challenged human's life, I would have said the latter without a thought. Now I happen to think it is likely to be the former. Before, I was going off pure intuition. Now I am going off developed philosophical arguments, such as the one Singer outlines in his book Animal Liberation, as well as some empirical facts.

My point is when I'm deciding if the absence of pleasure is problematic or not I would prefer for there to be some philosophical argument why or why not, rather than examples that show that my intuition goes against this. You could argue that such arguments don't really exist, and that all ethical judgement relies on intuition to some extent, but I'm a bit more hopeful. For example Michael St Jules' comment is along these lines and is interesting.

On a really basic level my philosophical argument would be that suffering is bad, and pleasure is good (the most basic of ethical axioms that we have to accept to get consequentialist ethics off the ground). Therefore creating pleasure is good (and one way of doing so is to create new happy lives), and reducing suffering is also good. Adding caveats to this such as 'pleasure is only good if it accrues to an already existing being' just seems to be somewhat ad hoc / going against Occam's Razor / trying to justify an intuition one already holds which may or may not be correct.

antimonyanthony @ 2022-08-24T06:01 (+9)

> On a really basic level my philosophical argument would be that suffering is bad, and pleasure is good (the most basic of ethical axioms that we have to accept to get consequentialist ethics off the ground).

It seems like you're just relying on your intuition that pleasure is intrinsically good, and calling that an axiom we have to accept. I don't think we have to accept that at all — rejecting it does have some counterintuitive consequences, I won't deny that, but so does accepting it. It's not at all obvious (and Magnus's post points to some reasons we might favor rejecting this "axiom").

Jack Malde @ 2022-08-24T06:04 (+2)

Would you say that saying suffering is bad is a similar intuition?

antimonyanthony @ 2022-08-24T06:59 (+7)

No, I know of no thought experiments or any arguments generally that make me doubt that suffering is bad. Do you?

Jack Malde @ 2022-08-24T07:26 (+3)

Well if you think suffering is bad and pleasure is not good then the counterintuitive (to the vast majority of people) conclusion is that we should (painlessly if possible, but probably painfully if necessary) ensure everyone gets killed off so that we never have any suffering again.

It may well be true that we should ensure everyone gets killed off, but this is certainly an argument that many find compelling against the dual claim that suffering is bad and pleasure is not good.

antimonyanthony @ 2022-08-24T07:38 (+10)

- That case does run counter to "suffering is intrinsically bad but happiness isn't," but it doesn't run counter to "suffering is bad," which is what your last comment asked about. I don't see any compelling reasons to doubt that suffering is bad, but I do see some compelling reasons to doubt that happiness is good.

- That's just an intuition, no? (i.e. that everyone painlessly dying would be bad.) I don't really understand why you want to call it an "axiom" that happiness is intrinsically good, as if this is stronger than an intuition, which seemed to be the point of your original comment.

- See this post for why I don't think the case you presented is decisive against the view I'm defending.

Jack Malde @ 2022-08-26T07:45 (+6)

What is your compelling reason to doubt happiness is good? Is it thought experiments such as the ones Magnus has put forward? I think these argue that alleviating suffering is more pressing than creating happiness, but I don't think these argue that creating happiness isn't good.

I do happen to think suffering is bad, but here is a potentially reasonable counterargument: some people think that suffering is what makes life meaningful. For example, some think the idea of widespread drugs that relieve everyone of all pain all the time is monstrous. People's children would get killed and the parents just wouldn’t feel any negative emotion. This seems a bit wrong...

You could try to use your Pareto improvement argument here, i.e. that it's better if parents still have a preference for their child not to have been killed, but do not feel any sort of pain related to it. Firstly, I do think many people would want there to be some pain in this situation, and that they would regard a lack of pain as disrespectful and grotesque. Otherwise, I'm slightly confused about one having a preference that the child wasn't killed but not feeling any sort of hedonic pain about it... is this contradictory?

As I said I do think suffering is bad, but I'm yet to be convinced this is less of a leap of faith than saying happiness is good.

matty @ 2022-08-26T12:56 (+13)

Say there is a perfectly content monk who isn't suffering at all. Do you have a moral obligation to make them feel pleasure?

Jack Malde @ 2022-08-26T15:32 (+2)

It would certainly be a good thing to do. And if I could do it costlessly I think I would see it as an obligation, although I’m slightly fuzzy on the concept of moral obligations in the first place.

In reality however there would be an opportunity cost. We’re generally more effective at alleviating suffering than creating pleasure, so we should generally focus on doing the former.

Teo Ajantaival @ 2022-08-26T17:50 (+17)

To modify the monk case, what if we could (costlessly; all else equal) make the solitary monk feel a notional 11 units of pleasure followed by 10 units of suffering?

Or, extreme pleasure of "+1001" followed by extreme suffering of "-1000"?

Cases like these make me doubt the assumption of happiness as an independent good. I know meditators who claim to have learned to generate pleasure at will in jhana states, who don't buy the hedonic arithmetic, and who prefer the states of unexcited contentment over states of intense pleasure.

So I don't want to impose, from the outside, assumptions about the hedonic arithmetic onto mind-moments who may not buy them from the inside.

Additionally, I feel no personal need for the concept of intrinsic positive value anymore, because all my perceptions of positive value seem perfectly explicable in terms of their indirect connections to subjective problems. (I used to use the concept, and it took me many years to translate it into relational terms in all the contexts where it pops up, but I seem to have now uprooted it so that it no longer pops to mind, or at least it stopped doing so over the past four years. In programming terms, one could say that uprooting the concept entailed refactoring a lot of dependencies regarding other concepts, but eventually the tab explosion started shrinking back down again, and it appeared perfectly possible to think without the concept. It would be interesting to hear whether this has simply "clicked" for anyone when reading analytical thought experiments, because for me it felt more like how I would imagine a crisis of faith to feel for a person who loses their faith in a <core concept>, including the possibly arduous cognitive task of learning to fill the void and seeing what roles the concept played.)

Jack Malde @ 2022-08-26T18:13 (+12)

I’m not sure if “pleasure” is the right word. I certainly think that improving one’s mental state is always good, even if this starts at a point in which there is no negative experience at all.

This might not involve increasing “pleasure”. Instead it could be increasing the amount of “meaning” felt or “love” felt. If monks say they prefer contentment over intense pleasure then fine - I would say the contentment state is hedonically better in some way.

This is probably me defining “hedonically better” differently to you but it doesn’t really matter. The point is I think you can improve the wellbeing of someone who is experiencing no suffering and that this is objectively a desirable thing to do.

Teo Ajantaival @ 2022-09-02T11:53 (+8)

Relevant recent posts:

https://www.simonknutsson.com/undisturbedness-as-the-hedonic-ceiling/

https://centerforreducingsuffering.org/phenomenological-argument/

(I think these unpack a view I share, better than I have.)

Edit: For tranquilist and Epicurean takes, I also like Gloor (2017, sec. 2.1) and Sherman (2017, pp. 103–107), respectively.

antimonyanthony @ 2022-08-27T08:13 (+1)

I think one crux here is that Teo and I would say that calling an increase in the intensity of a happy experience "improving one's mental state" is a substantive philosophical claim. The kind of view we're defending does not say something like, "Improvements of one's mental state are only good if they relieve suffering." I would agree that that sounds kind of arbitrary.

The more defensible alternative is that replacing contentment (or absence of any experience) with increasingly intense happiness / meaning / love is not itself an improvement in mental state. And this follows from intuitions like "If a mind doesn't experience a need for change (and won't do so in the future), what is there to improve?"

Dan Hageman @ 2022-08-28T04:25 (+1)

Can you elaborate a bit on why the seemingly arbitrary view you quoted in your first paragraph wouldn't follow from the view that you and Teo are defending? Are you saying that from your and Teo's POVs, there's a way to 'improve a mental state' that doesn't amount to decreasing suffering (/preventing it)? The statement itself seems a bit odd, since 'improvements' seems to imply 'goodness', and the statement hypothetically considers situations where improvements may not be good, so I thought I would see if you could clarify.

With regard to the 'defensible alternative', it seems that one could defend a plausible view that a state of contentment, moved to a state of increased bliss, is indeed an improvement, even though there wasn't a need for change. Such an understanding seems plausible in a self-intimating way when one valence state transitions to the next, insofar as we concede that there are states of more or less pleasure, outside any negatively valenced states. It seems that one could do this all the while maintaining that such improvements are never capable of outweighing the mitigation of problematic, suffering states. Note: using the term improvement can easily lead to accidental equivocation between scenarios of mitigating suffering versus increasing pleasure, but the ethical discernment between each seems manageable.

antimonyanthony @ 2022-08-28T11:06 (+1)

> Are you saying that from your and Teo's POVs, there's a way to 'improve a mental state' that doesn't amount to decreasing suffering (/preventing it)?

No, that's precisely what I'm denying. So, the reason I mentioned that "arbitrary" view was that I thought Jack might be conflating my/Teo's view with one that (1) agrees that happiness intrinsically improves a mental state, but (2) denies that improving a mental state in this particular way is good (while improving a mental state via suffering-reduction is good).

> Such an understanding seems plausible in a self-intimating way when one valence state transitions to the next, insofar as we concede that there are states of more or less pleasure, outside any negatively valenced states.

It's prima facie plausible that there's an improvement, sure, but upon reflection I don't think my experience that happiness has varying intensities implies that moving from contentment to more intense happiness is an improvement. Analogously, you can increase the complexity and artistic sophistication of some painting, say, but if no one ever observes it (which I'm comparing to no one suffering from the lack of more intense happiness), there's no "improvement" to the painting.

> It seems that one could do this all the while maintaining that such improvements are never capable of outweighing the mitigation of problematic, suffering states.

You could, yeah, but I think "improvement" has such a strong connotation to most people that something of intrinsic value has been added. So I'd worry that using that language would be confusing, especially to welfarist consequentialists who think (as seems really plausible to me) that you should do an act to the extent that it improves the state of the world.

Dan Hageman @ 2022-08-29T02:32 (+1)

Okay, thanks for clarifying for me! I think I was confused in that opening line when you clarified that your views do not say that only a relief of suffering improves a mental state, but in reality it's that you do think such is the case, just not in conjunction with the claim that happiness also intrinsically improves a mental state, correct?

>Analogously, you can increase the complexity and artistic sophistication of some painting, say, but if no one ever observes it (which I'm comparing to no one suffering from the lack of more intense happiness), there's no "improvement" to the painting.

With respect to this, I should have clarified that the state of contentment that becomes a more intense positive state was one of an existing and experiencing being, not a content state of non-existence into which pleasure is then brought. Given that clarification, would the painting analogy hold, since in this thought experiment there is an experiencer who has some sort of improvement in their mental state, albeit not a categorical sort of improvement that is on par with the sort that relieves suffering? I.e., it wasn't a problem per se (no suffering) that they were being deprived of the more intense pleasure, but the move from lower pleasure to higher pleasure is still an improvement in some way (albeit perhaps a better word would be needed to distinguish the lexical importance between these sorts of *improvements*).

antimonyanthony @ 2022-08-26T22:14 (+5)