Project idea: AI for epistemics

By Benjamin_Todd @ 2024-05-19T19:36 (+45)

This is a linkpost to https://benjamintodd.substack.com/p/the-most-interesting-startup-idea

If transformative AI might come soon and you want to help that go well, one strategy you might adopt is building something that will improve as AI gets more capable.

That way if AI accelerates, your ability to help accelerates too.

Here’s an example: organisations that use AI to improve epistemics (our ability to know what’s true) and make better decisions on that basis.

This was the most interesting impact-oriented entrepreneurial idea I came across when I visited the Bay Area in February. (Thank you to Carl Shulman, who first suggested it.)

Navigating the deployment of AI is going to involve successfully making many crazy hard judgement calls, such as “what’s the probability this system isn’t aligned” and “what might the economic effects of deployment be?”

Some of these judgement calls will need to be made under a lot of time pressure — especially if we’re seeing 100 years of technological progress in under 5.

Being able to make these kinds of decisions a little bit better could therefore be worth a huge amount. And that’s true given almost any future scenario.

Better decision-making can also potentially help with all other cause areas, which is why 80,000 Hours recommends it as a cause area independent of AI.

So the idea is to set up organisations that use AI to improve forecasting and decision-making in ways that can be eventually applied to these kinds of questions.

In the short term, you can apply these systems to conventional problems, potentially in the for-profit sector, like finance. We seem to be just approaching the point where AI systems might be able to help (e.g. a recent paper found GPT-4 was pretty good at forecasting if fine-tuned). Starting here allows you to gain scale, credibility and resources.

But unlike what a purely profit-motivated entrepreneur would do, you can also try to design your tools such that in an AI crunch moment they’re able to help.

For example, you could develop a free-to-use version for political leaders, so that if a huge decision about AI regulation suddenly needs to be made, they’re already using the tool for other questions.

There are already a handful of projects in this space, but it could eventually be a huge area, so it still seems like very early days.

These projects could have many forms:

- One concrete proposal is using AI to make forecasts, or otherwise to get better at truth-finding in important domains. On the more qualitative side, we could imagine an AI “decision coach” or consultant that aims to augment human decision-making. Any techniques that make it easier to extract the truth from AI systems could also count, such as relevant kinds of interpretability research, and the AI debate or weak-to-strong generalisation approaches to AI alignment.

- I could imagine projects in this area starting in many ways, including as a research service within a hedge fund, a research group within an AI company (e.g. focused on optimising systems for truth-telling and accuracy), an AI-enabled consultancy (trying to undercut the Big Three strategy consultancies), or a non-profit focused on policymakers.

- Most likely you’d try to fine-tune and build scaffolding around existing leading LLMs, though there are also proposals to build LLMs from the bottom up for forecasting. For example, you could create an LLM that only has data up to 2023, and then train it to predict what happens in 2024.

- There’s a trade-off to be managed between maintaining independence and trustworthiness on the one hand, and having access to leading models and decision-makers in AI companies and making money on the other.

- Some ideas could advance frontier capabilities, so you’d want to think carefully about how to handle that: either avoid it, stick to ideas that differentially boost the more safety-enhancing aspects of the technology, or be confident that any contribution to general capabilities is outweighed by other benefits (when this is justified is a controversial topic with a lot of disagreement). To be a bit more concrete, finding ways to tell when existing frontier models are telling the truth seems less risky than developing new kinds of frontier models optimised for forecasting.

- You’ll need to develop an approach that won’t be made obsolete by the next generation of leading models, but will instead benefit from further progress at the cutting edge.
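The bottom-up backtesting idea above (a model that only has data up to 2023, evaluated on what happens in 2024) hinges on a strict temporal split. Here is a minimal Python sketch. It assumes a hypothetical `Question` record with a resolution date and a yes/no outcome. The names and the cutoff date are illustrative and do not come from any existing project. A real setup would also need to check that the underlying model's pretraining data contains nothing from after the cutoff.

```python
from dataclasses import dataclass
from datetime import date


@dataclass(frozen=True)
class Question:
    """A hypothetical resolved forecasting question (illustrative only)."""
    text: str
    resolved_on: date
    outcome: bool  # True if the event happened


def temporal_split(questions, cutoff):
    """Split questions by resolution date around a cutoff.

    Training only sees questions resolved strictly before the cutoff, and
    evaluation only uses questions resolved on or after it. This mirrors
    'train on data up to 2023, test on 2024': no evaluation-period outcome
    is visible during training.
    """
    train = [q for q in questions if q.resolved_on < cutoff]
    test = [q for q in questions if q.resolved_on >= cutoff]
    return train, test


if __name__ == "__main__":
    # Placeholder questions, not real data.
    questions = [
        Question("Will event A happen by mid-2023?", date(2023, 6, 30), True),
        Question("Will event B happen by end of 2023?", date(2023, 12, 31), False),
        Question("Will event C happen by mid-2024?", date(2024, 6, 30), True),
    ]
    train, test = temporal_split(questions, cutoff=date(2024, 1, 1))
    print("train:", [q.text for q in train])
    print("test:", [q.text for q in test])
```

The key design choice is splitting on resolution date rather than at random. A random split would let the model train on outcomes from the same period it is later evaluated on.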

I don’t have a fleshed-out proposal; this post is more an invitation to explore the space.

The founding team would ideally cover the bases of: (i) forecasting / decision-making expertise, (ii) AI expertise, (iii) product and entrepreneurial skills, and (iv) knowledge of an initial user type. Bear in mind, though, that if you have a gap now, you could probably fill it within a year.

If you already see an angle on this idea, it could be best just to try it on a small scale, and then iterate from there.

If not, then my normal advice would be to get started by joining an existing project in the same or an adjacent area (e.g. a forecasting organisation, an AI applications company) that will expose you to ideas and people with relevant skills. Then keep your eyes out for a more concrete problem you could solve. The best startup ideas usually emerge more organically over time in promising areas.

Existing projects:

- FutureSearch

- Philip Tetlock’s Lab at UPenn

- Danny Halawi at UC Berkeley

- Ought

- Elicit

- (Let me know about more!)

Learn more:

- Mantic Monday 3/11/24 and 2/19/24 by Astral Codex Ten, which summarise some recent developments in this space.

- Project founder, by me on 80,000 Hours, with some general advice on entrepreneurial careers.

If interested, I’d suggest talking to the 80,000 Hours team (who can introduce you to others).

Habryka @ 2024-05-19T22:24 (+25)

The other people working on Lightcone + LW and I are pretty interested in working in this space (and LW + the AI Alignment Forum put us, IMO, in a great position to get ongoing feedback from users on our work in the space; we've also collaborated a good amount with Manifold historically). However, we currently don't have funding for it, which is our biggest bottleneck for working on this.

AI engineering tends to be particularly expensive in terms of talent and capital expenditure. If anyone knows of funders who might be interested in funding us for this kind of work, letting me know would be greatly appreciated.

JamesN @ 2024-05-25T10:23 (+3)

The funding bottleneck is the biggest obstacle to unlocking the potential in this space imo, and to improving decision-making more broadly.

I find the gap quite huge between the importance that cause-prioritisation researchers such as 80k, and the EA community as a whole, place on improving reasoning and decision-making, and funders’ appetite to invest in it. A colleague and I have struggled to get even relatively small amounts of funding, despite having existing users/clients that the funding would allow us to scale from.

That’s not a complaint; funders are free to determine what they want to fund. But it seems to be a consistent challenge for people working on improving institutional decision-making (IIDM).

It strengthens my view that such endeavours, though incredibly important, should focus on earning a profit, as the ability to scale as a non-profit will be limited.

Lukas_Gloor @ 2024-05-19T21:33 (+24)

Somewhat relatedly, what about using AI to improve not your own (or your project's) epistemics, but improve public discourse? Something like "improve news" or "improve where people get their info on controversial topics."

Edit: To give more context, I was picturing something like training LLMs to pass ideological Turing tests and then to create summaries of the strongest arguments for and against, as well as takedowns of each side's common arguments that are clearly bad. And maybe combine that with commenting on current events as they unfold (to gain traction), handling the tough balance of having to compete in the attention landscape while still adhering to high epistemic standards. The goal would then be something like a "trusted source of balanced reporting," which you can later direct to the issues that matter most (after gaining traction earlier by discussing all sorts of things).

Owen Cotton-Barratt @ 2024-05-20T08:43 (+9)

Note that writing on this topic submitted by July 14th could be eligible for the essay prize on the automation of wisdom and philosophy.

Ozzie Gooen @ 2024-05-20T01:34 (+9)

Happy to see conversation and excitement on this!

Some quick points:

- Eli Lifland and I recorded a podcast episode about this topic a few weeks back. It goes into some detail on the specifics and viability of forecasting+AI as a cost-effective EA intervention.

- We at QURI have been investigating a certain thread of ambitious forecasting (which would require a lot of AI) for the last few years. We're a small group, but I think our writing and work would be interesting for people in this area.

- Our post Prioritization Research for Advancing Wisdom and Intelligence from 2021 described much of this area as "Wisdom and Intelligence" interventions, and there I similarly came to the conclusion that AI+epistemics was likely the most exciting generic area there. I'm still excited for more prioritization work and direct work in this area.

- The FTX Future Fund made epistemics and AI+epistemics a priority. I'd be curious to see other funders research this area more. (Hat tip to the new OP forecasting team)

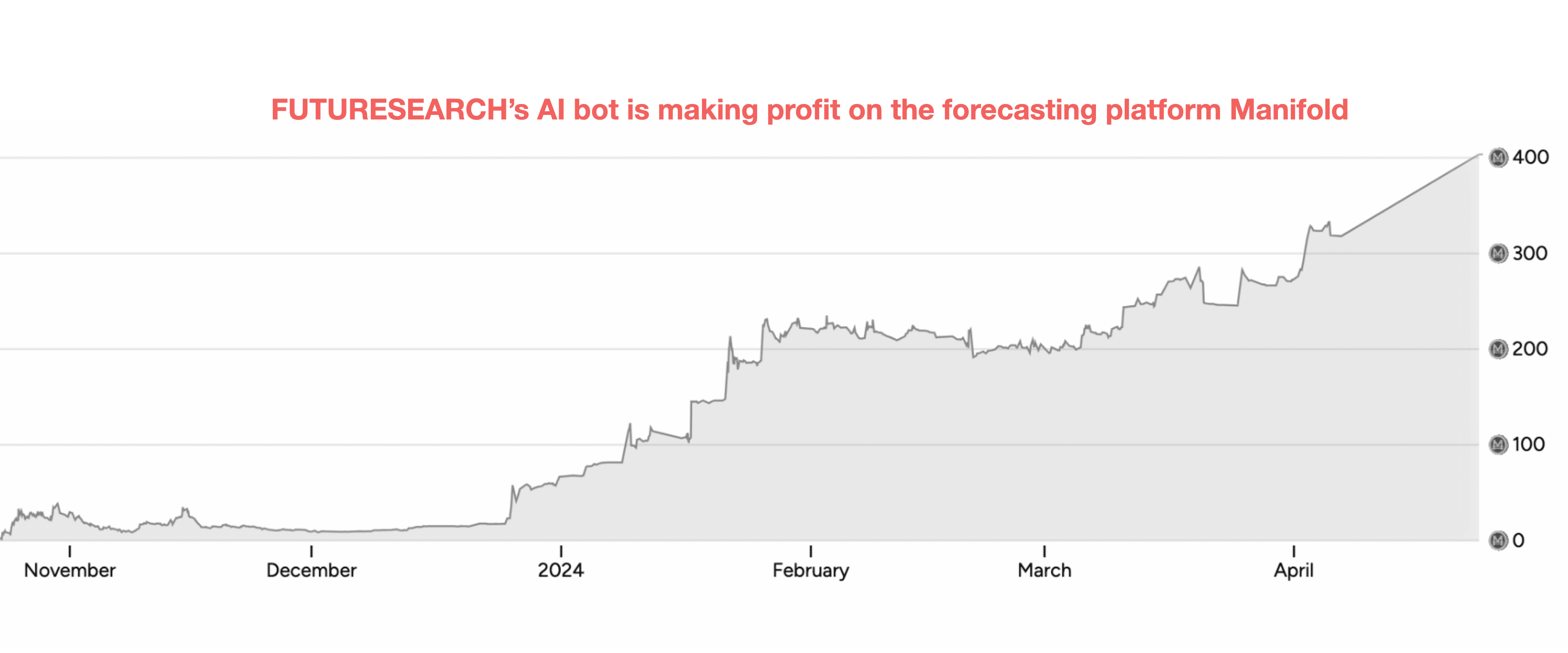

- "A forecasting bot made by the AI company FutureSearch is making profit on the forecasting platform Manifold. The y-axis shows profit. This suggests it’s better even than collective prediction of the existing human forecasters." -> I want to flag here that it's not too hard for a smart human to do as well or better. Strong human forecasters are expected to make a substantial profit. A more accurate statement here is, "This suggests that automation can add value to a forecasting platform and outperform some human forecasters", which is a lower bar. I expect it will be a long time until AIs beat humans+AIs in forecasting, but I agree AIs will add value.
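The distinction drawn above, between making a profit on a platform and beating the crowd's collective forecast, can be made concrete by scoring both sets of forecasts on the same resolved questions. The Python sketch below uses the Brier score (mean squared error of probabilities against 0/1 outcomes) as one illustrative choice of scoring rule. The function names are hypothetical, and the numbers are made-up placeholders rather than FutureSearch or Manifold data.

```python
def brier_score(probabilities, outcomes):
    """Mean squared error between forecast probabilities and 0/1 outcomes.

    Lower is better: perfect forecasts score 0.0, and always forecasting
    0.5 scores 0.25.
    """
    if not probabilities or len(probabilities) != len(outcomes):
        raise ValueError("need equal-length, non-empty inputs")
    total = sum((p - float(o)) ** 2 for p, o in zip(probabilities, outcomes))
    return total / len(outcomes)


def beats_crowd(bot_probs, crowd_probs, outcomes):
    """True if the bot's Brier score is strictly lower (better) than the
    crowd's on the same set of resolved questions."""
    return brier_score(bot_probs, outcomes) < brier_score(crowd_probs, outcomes)


if __name__ == "__main__":
    # Illustrative placeholder numbers only, not real platform data.
    outcomes = [1, 0, 1, 0]
    crowd = [0.8, 0.3, 0.6, 0.2]
    bot = [0.7, 0.4, 0.9, 0.1]
    print("crowd Brier:", brier_score(crowd, outcomes))
    print("bot Brier:", brier_score(bot, outcomes))
    print("bot beats crowd:", beats_crowd(bot, crowd, outcomes))
```

Market profit and a lower average score measure different things. A trader can profit on a subset of questions without forecasting better than the crowd across all of them.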

Benjamin_Todd @ 2024-05-20T08:43 (+4)

Thanks that's helpful!

David_Althaus @ 2024-05-23T10:22 (+5)

I'm excited about work in this area.

Somewhat related may also be this recent paper by Costello and colleagues who found that engaging in a dialogue with GPT-4 stably decreased conspiracy beliefs (HT Lucius).

Perhaps social scientists can help with research on how to best design LLMs to improve people's epistemics; or to make sure that interacting with LLMs at least doesn't worsen people's epistemics.

Marcel D @ 2024-05-20T04:56 (+4)

I've been advocating for something like this for a while (more recently, here and here), but have only ever received lukewarm feedback at best. I'd still be excited to see this take off, and would probably like to hear what other work is happening in this space!

Adam Binks @ 2024-05-22T11:45 (+3)

AI for epistemics/forecasting is something we're considering working on at Sage - we're hiring technical members of staff. I'd be interested to chat to other people thinking about this.

Depending on the results of our experiments, we might integrate this into our forecasting platform Fatebook, or build something new, or decide not to focus on this.

Ozzie Gooen @ 2024-05-23T00:31 (+2)

Note: I just made an EA Forum tag for Algorithmic forecasting, which covers AI-generated forecasting and so would include some of this. I'd be excited to see more posts on this!

https://forum.effectivealtruism.org/topics/algorithmic-forecasting

JamesN @ 2024-05-25T10:43 (+1)

I’m a strong believer that AI can be of massive assistance in this area, especially in areas such as improving forecasting ability, where the science is fairly well understood/evidenced (i.e. improving the reasoning process to improve forecasting and prediction).

My point of caution would be that exploration here, if not done with sufficient scientific rigour, can result in superficially useful tools that add to AI misuse risks and/or worse decision-making. For more info, do see this research paper: https://arxiv.org/abs/2402.01743

L Rudolf L @ 2024-05-21T21:18 (+1)

I've been thinking about this space. I have some ideas for hacky projects in the direction of "argument type-checkers"; if you're interested in this, let me know.