Climate Advocacy and AI Safety: Supercharging AI Slowdown Advocacy

By Matthew McRedmond🔹 @ 2024-07-25T12:08 (+8)

Epistemic Status: Exploratory. This is just a brief sketch of an idea that I thought I’d post rather than do nothing with. I’ll expand on it if it gains traction.

As such, feedback and commentary of all kinds are encouraged.

TL;DR

- There is a looming energy crisis in AI Development.

- It is unlikely that this crisis can be solved without using copious amounts of fossil fuels.

- This scenario presents a strategic opportunity for the AI slowdown advocacy movement to benefit from the substantial influence of the climate advocacy movement.

- Misalignment of goals between the movements is a risk.

- This strategy is a high-stakes bet that requires careful thought but likely demands immediate action.

The Situation

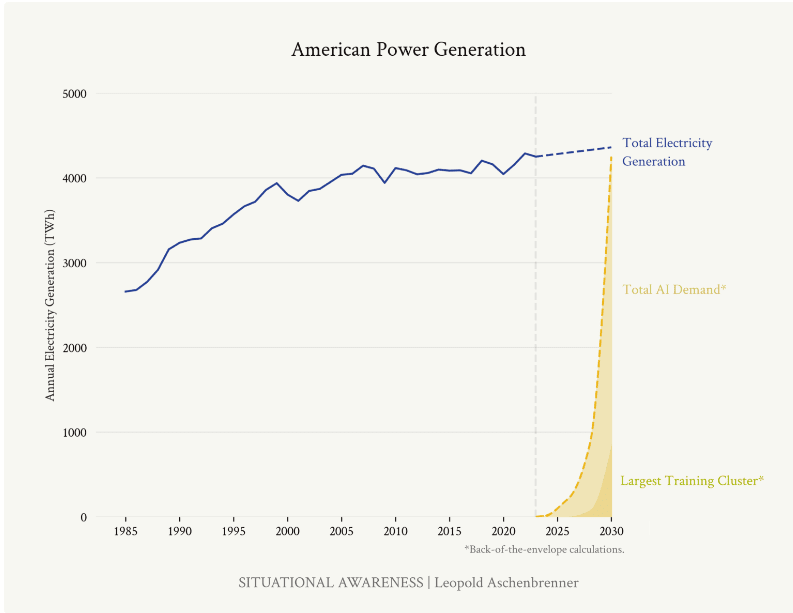

There is a looming energy crisis in AI Development. Recent projections about the energy requirements for the next generations of frontier AI systems are nothing short of alarming. Consider the estimates from former OpenAI researcher Leopold Aschenbrenner's article on the next likely training runs:

- By 2026, compute for frontier models could consume about 5% of US electricity production.

- By 2028, this could rise to 20%.

- By 2030, it might require the equivalent of 100% of current US electricity production.
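As a rough check on how steep these figures are, the sketch below computes the year-on-year growth they imply. It assumes smooth exponential growth between the quoted years, which is an assumption of this illustration and not something the estimates themselves state; the function and variable names are hypothetical.

```python
# Shares of current US electricity production quoted in the list above.
projected_share = {2026: 0.05, 2028: 0.20, 2030: 1.00}


def implied_annual_growth(shares):
    """Return {(start, end): per-year growth factor} between consecutive projection years.

    Assumes constant exponential growth within each interval (an illustrative assumption).
    """
    years = sorted(shares)
    return {
        (a, b): (shares[b] / shares[a]) ** (1 / (b - a))
        for a, b in zip(years, years[1:])
    }


growth = implied_annual_growth(projected_share)
for (a, b), factor in growth.items():
    print(f"{a}-{b}: about {factor:.2f}x per year")
```

Under that assumption, the quoted figures correspond to energy demand for frontier compute at least doubling every year through 2030.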

The implications are stark: the rapid advancement of AI is on a collision course with energy infrastructure and, by extension, climate goals.

It is unlikely that this crisis can be solved without using copious amounts of fossil fuels. Given the projected timelines for AI development and the current state of renewable energy infrastructure, it's highly improbable that this enormous energy demand can be met without heavy reliance on fossil fuels. This presents a critical dilemma:

1. Rapid AI advancement could significantly increase fossil fuel consumption, directly contradicting global climate goals.

2. Attempts to meet this energy demand with renewables would require an unprecedented and likely unfeasible acceleration in green energy infrastructure development.

This scenario creates a natural alignment of interests between climate advocates and those concerned about the risks of rapid AI development.

The Opportunity

This scenario presents a strategic opportunity for AI slowdown advocacy. The climate advocacy movement has spent decades building powerful infrastructure for public awareness, policy influence, and corporate pressure. This existing framework presents a unique opportunity for AI slowdown advocates:

- Megaphone Effect: By framing AI development as a major climate issue, we can tap into the vast reach and resources of climate advocacy groups.

- Policy Pressure: Climate advocates have experience in pushing for regulations on high-emission industries. This expertise could be redirected towards policies that limit large-scale AI training runs.

- Corporate Accountability: Many tech companies have made public commitments to sustainability. Highlighting the conflict between these commitments and energy-intensive AI development could create internal pressure for a slowdown.

- Public Awareness: The climate crisis is well-understood by the general public (and policymakers). Linking AI development to increased emissions could rapidly build support for AI regulation.

The Risk

Misalignment of goals is a risk. While this strategy offers significant potential, it's important to consider possible drawbacks from a misalignment of goals: climate advocates may push for solutions (like rapid green energy scaling) that don't align with AI slowdown goals. In reality, these potential misalignments seem manageable compared to the strategic benefits. The climate advocacy movement is closely linked to the sustainability movement which would find the idea of doubling energy consumption by any means anathema. Nevertheless, the fact that both movements have different world views and goals should be kept in mind when pursuing this strategy.

Conclusion

Collaborating with the climate advocacy movement is a high-stakes bet that requires careful thought but likely demands immediate action. With potentially short AI development timelines and the current intractability of AI slowdown advocacy, we must seriously consider high-payoff strategies like this – and fast.

Stephen McAleese @ 2024-07-25T18:49 (+5)

I've never heard this idea proposed before so it seems novel and interesting.

As you say in the post, the AI risk movement could gain much more awareness by associating itself with the climate risk advocacy movement which is much larger. Compute is arguably the main driver of AI progress, compute is correlated with energy usage, and energy use generally increases carbon emissions so limiting carbon emissions from AI is an indirect way of limiting the compute dedicated to AI and slowing down the AI capabilities race.

This approach seems viable in the near future until innovations in energy technology (e.g. nuclear fusion) weaken the link between energy production and CO2 emissions, or algorithmic progress reduces the need for massive amounts of compute for AI.

The question is whether this indirect approach would be more effective than or at least complementary to a more direct approach that advocates explicit compute limits and communicates risks from misaligned AI.

Oisín Considine @ 2024-07-26T13:01 (+4)

(Apologies in advance for the messiness from my lack of hyperlinks/footnotes as I'm commenting from my phone and I can't find how to include them using my phone. If anyone knows how to, please let me know)

This is something which has actually briefly crossed my mind before. Looking (briefly) into the subject, I believe an alliance with the climate advocacy movement could be a plausible route of action for the AI safety movement. Though this of course would depend on whether AI would be net-positive or net-negative for the climate. I am not sure yet which it will be, but it is something I believe is certainly worth looking into.

This topic has actually gained some recent media attention (https://edition.cnn.com/2024/07/03/tech/google-ai-greenhouse-gas-emissions-environmental-impact/index.html), although most of the media focus on the intersection of AI and climate change appears to be around how AI can be used as a benefit to the climate.

A few recent academic articles (1. https://www.sciencedirect.com/science/article/pii/S1364032123008778#b111, 2. https://drive.google.com/uc?id=1Wt2_f1RB7ylf7ufD8LviiD6lKkQpQnWZ&export=download, 3. https://link.springer.com/article/10.1007/s00146-021-01294-x, 4. https://wires.onlinelibrary.wiley.com/doi/full/10.1002/widm.1507) go in depth on the topic of how beneficial/harmful AI will be for the climate, and basically the conclusions appear somewhat mixed (I admittedly didn't look into them in too much detail), with there being a lot of emissions from ML training and servers, though this paper (https://arxiv.org/pdf/2204.05149) has shown that

"published studies overestimated the cost and carbon footprint of ML training because they didn’t have access to the right information or because they extrapolated point-in-time data without accounting for algorithmic or hardware improvements."

There could also be reasons to think that this may not be the best move, particularly if its association with the climate advocacy movement fuels a larger right-wing x E/Acc counter-movement, though I personally feel it would be better to (at least partly) align with the climate movement to bring the AI slowdown movement further into the mainstream.

Another potential drawback might be if the climate advocates see many in the AI safety crowd as not being as concerned about climate change as they may hope, and they may see the AI safety movement as using the climate movement simply for their own gains, especially given the tech-sceptic sentiment in much of the climate movement (though definitely not all of it). However, I believe the intersection of the AI safety movement with the climate movement would be net-positive as there would be more exposure of the many potential issues associated with the expansion of AI capabilities owing to the climate movement being a very well established lobbying force, and in a sense the tech-scepticism in the climate movement may also be helpful in slowing down progress in AI capabilities.

Also, this alliance may spread more interest in and sympathy towards AI safety within the climate movement (as well as more climate-awareness among AI safety people), as has been done with the climate and animal movements, as well as the AI safety and animal welfare movements.

Another potential risk is if the AI industry is able to become much more efficient (net-zero, net-negative, or even just slightly net-positive in GHG emissions) and/or come up with many good climate solutions (whether aligned or not), which may make climate advocates more optimistic about AI (though this may also be a reason to align with the climate movement to prevent this case from occurring).

jackva @ 2024-07-25T18:07 (+2)

I disagree with the substance, but I don't understand why it gets downvoted.

Matthew McRedmond🔹 @ 2024-07-26T07:29 (+3)

I would be curious to hear what the nature of your disagreement is : )

jackva @ 2024-07-26T08:26 (+4)

Something like this:

- I think an obvious risk to this strategy is that it would further polarize AI risk discourse and make it more partisan, given how strongly the climate movement is aligned with Democrats.

- I think pro-AI forces can reasonably claim that the long-term impacts of accelerated AI development are good for climate -- increased tech acceleration & expanded industrial capacity to build clean energy faster -- so I think the core substantive argument is actually quite weak and transparently so (I think one needs to have weird assumptions if one really believes short-term emissions from getting to AGI would matter from a climate perspective - e.g. if you believed the US would need to double emissions for a decade to get to AGI you would probably still want to bear that cost given how much easier it would make global decarbonization, even if you only looked at it from a climate maximalist lens).

- If one looks at how national security / competitiveness considerations regularly trump climate considerations and this was true even in a time that was more climate-focused than the next couple of years, then it seems hard to imagine this would really constrain things -- I find it very hard to imagine a situation where a significant part of US policy makers decide they really need to get behind accelerating AGI, but then they don't do it because some climate activists protest this.

So, to me, it seems like a very risky strategy with limited upside, but plenty of downside in terms of further polarization and calling a bluff on what is ultimately an easy-to-disarm argument.

Matthew McRedmond🔹 @ 2024-07-26T11:25 (+4)

Thanks for the details of your disagreement : )

1. Yeah I think this is a fair point. However, my understanding is that climate action is reasonably popular with the public - even in the US (https://ourworldindata.org/climate-change-support). It's only really when it comes to taking action that the parties differ. So if you advocated for restrictions on large training runs for climate reasons I'm not sure it is obvious that it would necessarily have a downside risk, only that you might get more upside benefits with a Democratic administration.

2. Yes, I think the argument doesn't make sense if you believe large training runs will be beneficial. Higher emissions seem like a reasonable price to pay for an aligned superintelligence. However, if you think large training runs will result in huge existential risks or otherwise not have upside benefits then that makes them worth avoiding - as the AI slowdown advocacy community argues - and the costs of emissions are clearly not worth paying.

I think in general most people (and policymakers) are not bought into the idea that advanced AI will cause a technological singularity or be otherwise transformative. The point of this strategy would be to get those people (and policymakers) to take a stance on this issue that aligns with AI safety goals without having to be bought into the transformative effects of AI.

So while a "Pro-AI" advocate might have to convince people of the transformative power of AI to make a counter-argument, we as "Anti-AI" advocates would only have to point non-AI affiliated people towards the climate effects of AI without having to "AI pill" the public and policymakers. (PauseAI apparently has looked into this already and has a page which gives a sense of what the strategy in this post might look like in practice (https://pauseai.info/environmental))

3. Yes but the question - as @Stephen McAleese noted - "is whether this indirect approach would be more effective than or at least complementary to a more direct approach that advocates explicit compute limits and communicates risks from misaligned AI." So yes national security / competitiveness considerations may regularly trump climate considerations, but if they are trumped less than by safety considerations then they're the better bet. I don't know what the answer to this is but I don't think it's obvious.

jackva @ 2024-07-26T12:09 (+4)

Thanks, spelling these kinds of things out is what I was trying to get at; working through them could make the case stronger.

I don't have time to go through these points here one by one, but I think the one thing I would point out is that this strategy should be risk-reducing in those cases where the risk is real, i.e. not arguing from current public opinion etc.

I.e. in the worlds where we have the buy-in and commercial interest to scale up AI so much that it will meaningfully matter for electricity demand, I think in those worlds climate advocates will be side-lined. Essentially, I buy the Shulmanerian point that if the prize from AGI is observably really large then things that look inhibiting now - like NIMBYism and environmentalists - will not matter as much as one would think if one extrapolated from current political economy.