Yglesias on EA and politics

By Richard Y Chappell🔸 @ 2022-05-23T12:37 (+79)

This is a linkpost to https://www.slowboring.com/p/understanding-effective-altruisms

[Updated to a full cross-post, with thanks to Matt for permitting this.]

Last Tuesday, the most expensive open House primary in history wrapped up with Andrea Salinas, a well-qualified state legislator, winning handily against a large field in which the second-place contender was a political neophyte named Carrick Flynn.

Millions of dollars were spent on behalf of Salinas, largely by Bold PAC, the super PAC for Hispanic House Democrats. But a much larger sum of money was spent on Flynn’s behalf by a super PAC called Protect Our Future, which is largely funded by Sam Bankman-Fried, CEO of the cryptocurrency exchange FTX.

While Flynn and SBF had never met before the campaign, Flynn is a longtime effective altruist and member of the EA community (see his interview with Vox’s EA vertical Future Perfect), and SBF has recently emerged as the biggest donor in the EA space.

Daniel Eth of the Oxford Future of Humanity Institute wrote a good piece on lessons for the EA movement from the campaign, but speaking from a media and politics perspective, I want to underline with the boldest possible stroke that I think the most important lesson from the race is the extent to which Flynn was understood to be the “crypto candidate” rather than the “EA candidate.”

That’s in part because of hostile messaging from his opponents (which is fair enough, it’s politics) but also because Flynn didn’t really talk about effective altruism until quite late. His campaign did discuss pandemic prevention — the top current priority for SBF and a topic Flynn has a background in — but not much about the movement or the ideas until the final weeks of the race. The judgment that EA is unfamiliar to most voters and sounds a bit weird was perfectly reasonable. But you can’t dump $12 million into a random House race without people wondering what’s up.

I think SBF’s ideas, as I understand them, are pretty good, and I hope that his efforts to spend money to influence American politics succeed. But to succeed, I think ultimately he’s going to have to persuade people that his agenda really is what he says it is.

Part of that will require fostering a broader familiarity with EA, and to that end, I wanted to write about the space as I understand it and its significance and insights.

Consequentialism briefly explained

I can’t speak to contemporary academia, but when I was in college, the family of moral theories known as consequentialism was pretty unfashionable in philosophy departments. My understanding is that this has been true for most of the past two or three generations.

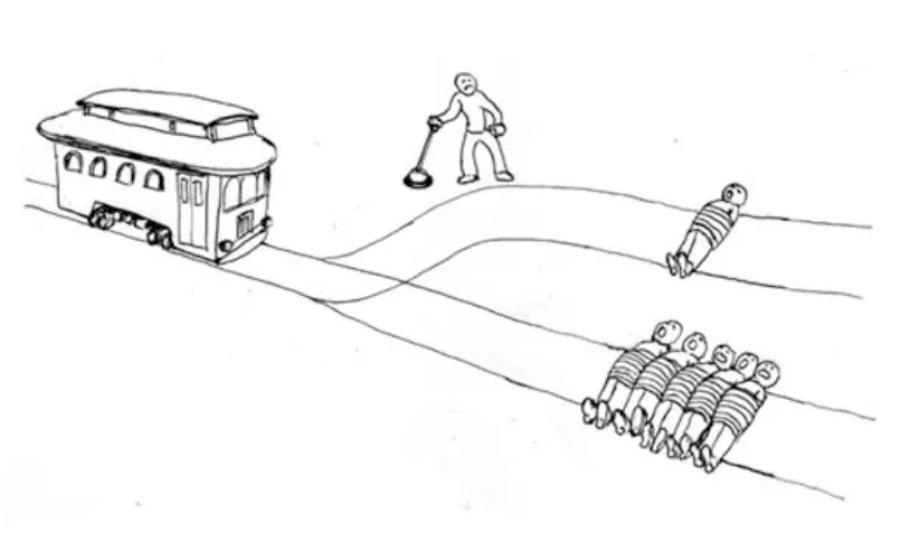

Probably the easiest way to gloss consequentialism is with reference to the famous trolley problem thought experiment: a consequentialist is someone who wants to throw the switch and cause one person to be run over in order to avoid the deaths of five people.

Perhaps more scandalously, opponents of consequentialism often argue that it follows from this position that it is morally appropriate (perhaps even obligatory) to murder innocent people and harvest their organs in order to save multiple lives.

Barbara Fried, a Stanford legal theorist and philosopher who also happens to be Bankman-Fried’s mother, has a great paper arguing that we should talk less about trolley problem scenarios. Her point is that the many variations of trolley problems share an artificial certainty about outcomes that is very unusual for a policy choice. A real-world trolley problem is more like the dilemma that the Washington Metropolitan Area Transit Authority recently faced when it found out that many of its train drivers were out of compliance with certification rules. For the sake of safety, the agency took a bunch of drivers out of rotation and therefore needed to cut service. This decision provided a benefit in the form of a statistical reduction in the likelihood of a train accident. But it also came with the possibility of a range of harms: less convenience for some, more automobile congestion for others, a small and diffuse increase in air pollution, and increased automobile risk.

On both sides, you have a lot of uncertainty. And Fried’s claim, which I think is correct, is that you simply can’t address these kinds of issues without making the kind of interpersonal tradeoffs that non-consequentialist theorists say you shouldn’t make. And if these allegedly bad interpersonal tradeoffs are unavoidable, they must actually not be bad, and in fact we should just be consequentialists.

A lot of people have asked me over the past six weeks what I think about Liam Kofi Bright’s April 4 post, “Why I Am Not A Liberal,” and mostly I think that it indicates that consequentialism is still held in low enough regard by academic philosophers that he doesn’t even mention it as an option.

This is too bad, because Fried is right.

EA is applied consequentialism

Consequentialist moral theory has been around for a long time, but I think the Effective Altruist movement really came into being when people started trying to use rationalist concepts to generate an applied form of consequentialism.

This is to say it’s easy to sit around in your armchair and say we should set superstition aside and pay people to donate kidneys because the consequences are good. But the tougher question is what should you, a person who is not allowed to unilaterally decide what the kidney rules are, do in a world where what matters most is the consequences of your actions? For Dylan Matthews, the answer was (among other things) to give one of his kidneys to a stranger for free.

The philosopher Will MacAskill tackles this sort of question in his book “Doing Good Better,” a foundational text for the EA movement. One of the most broadly accepted answers in the EA space is that if you’re a middle-class person living in a rich country, you should be giving more of your money away to those with much greater needs. The classic argument here is that if you were walking down the road and saw a child drowning in a pond, you’d jump in and try to save him. And you’d do that even if you happened to be wearing a nice shirt that would be ruined by the water because saving a child is more important than a nice shirt. So how can you in good conscience be walking around buying expensive shirts when you could be giving the money to save lives in poor countries?

An adjacent idea is “earning to give.” Typically we think that an idealistic young college grad might go work for some do-gooding organization, whereas a greedy materialist might go work at a hedge fund. But what if the best thing to do is to go work at a hedge fund, make a lot of money, and then make large charitable donations?

I first learned about EA from my college acquaintance Holden Karnofsky, who co-founded GiveWell with Elie Hassenfeld because they were, in fact, working at a hedge fund and earning to give but noticed a lack of critical scrutiny of where to give. Because we find their arguments convincing, we at Slow Boring give 10 percent of our subscription revenue to GiveWell’s Maximum Impact Fund. We are not 100 percent bought in on the full-tilt EA idea that you should ignore questions of community ties and interpersonal obligation, so we also give locally in D.C.

But I think the world would be greatly improved if a lot more people became a little more EA in their thinking, so I always encourage everyone to check out GiveWell and the Giving What We Can Pledge.

Protecting our future

Another core EA concept is that we should care about the very long-term future and that it is irrational to greatly discount the interests of people alive 100 or 100,000 or 100 million years from now.[1]
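A quick calculation shows why heavy discounting looks irrational over these horizons. The sketch below assumes a constant exponential discount rate of 1% per year; that rate and the function names are illustrative assumptions, not anything the post specifies. Even this modest rate cuts the weight given to someone alive 100 years from now to roughly a third, and at 100,000 years it shrinks that weight by over 400 orders of magnitude.

```python
import math

def discount_factor(rate, years):
    """Relative weight of a life `years` from now under a constant annual
    exponential discount rate (an illustrative assumption)."""
    return (1 + rate) ** -years

def orders_of_magnitude_discounted(rate, years):
    """log10 of how many times less a future life counts. Computed in log
    space because the raw factor underflows to 0.0 for long horizons."""
    return years * math.log10(1 + rate)

RATE = 0.01  # hypothetical 1% per year, chosen only for illustration

for years in (100, 100_000, 100_000_000):
    w = discount_factor(RATE, years)
    oom = orders_of_magnitude_discounted(RATE, years)
    print(f"{years:>11,} years: weight {w:.3g}, "
          f"discounted by ~{oom:,.1f} orders of magnitude")
```

Working in log space is a deliberate choice: the raw factor for 100,000 years is about 10^-432, far below the smallest positive double, so it prints as 0 and hides how extreme the discount actually is.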

A number of things follow from that idea, but a major one is that it’s extremely important to try to identify and avoid low-probability catastrophes. This involves setting a reasonably high bar. Climate change, for example, is a big problem. The odds of worst-case scenario outcomes have gone down a lot recently, which is great, but we’re still on track for a really disruptive scenario. Over a billion people living on the Indian Subcontinent are going to face frequent punishing levels of heat that could easily generate repeated mass-death scenarios. Places that people are farming now will become unviable, and as they try to move off that land into new places there will be conflicts. Coastal cities will need to spend large sums of money on flood control or else abandon large amounts of fixed capital.

This is all very bad, but as Kelsey Piper argues, almost nobody who looks at this rigorously thinks it will actually lead to human extinction or the total collapse of civilization. I think it’s important not to take too much solace from this. The reality is just that in a world of eight billion people, you can unleash an incredible amount of suffering and death without provoking extinction. Even a full-scale nuclear war between the U.S. and Russia probably wouldn't do that, though the odds of civilizational collapse and massive technological regression are pretty good there.

Preoccupation with this kind of question is called longtermism, and the way that it has impacted me most personally is trying to get people to think more seriously about asteroid or comet collisions or supervolcano eruptions. These are things that we know with total certainty are very real possibilities that just happen to be unlikely on any given day, so it’s easy to ignore them. But we shouldn’t ignore them!

Probably the most distinctive EA idea in this space, though, is the idea that humanity faces a high likelihood of being wiped out by rogue AI. That’s the kind of thing that people give you funny looks for if you say it at a party. But while it’s obviously not directly implied by the basic philosophical tenets of EA, it is something that has come to be widely believed in the community. If you tell the leading EA people that you are really only interested in helping save children’s lives in Africa, they will give you funny looks. I tend to go back and forth a little on what I think about this in part because it’s never really clear to me what sharing this worry actually commits you to in practice.

The last big bucket of existential risk, the one Sam Bankman-Fried has prioritized with his spending (and something I’ve written about frequently), is preventing pandemics.

SBF is for real

Sam Bankman-Fried’s life choices are unconventional, and I think that has made it easy to dismiss Carrick Flynn as a “crypto-backed” candidate or Protect Our Future as a crypto play. But there’s a pretty clear and plausible argument that this is simply not the case:

- SBF was raised by a leading consequentialist moral theorist.

- While attending MIT, SBF went to a Will MacAskill lecture on Effective Altruism and got lunch with him.

- At this point in time, MacAskill was heavily promoting “earn to give” as a life plan, specifically promoting the virtues of the then-unfashionable life choice of going to work in finance.

- SBF then went to work at Jane Street Capital, a proprietary trading firm notorious for employing smart weirdos, giving half his salary away to animal welfare charities.

- He left Jane Street to briefly work directly for the Centre for Effective Altruism, which MacAskill founded, but while there hit upon a crypto trading arbitrage opportunity.

- He left CEA to focus on crypto trading, starting a company called Alameda Research focused on making money through crypto arbitrage and other trading strategies.

- He then left Alameda to found FTX. Now the co-CEO of Alameda Research is Caroline Ellison, also an EA who writes posts on EA Forum explaining how much weight[2] she puts on ensuring that potential hires are aligned with EA values.

- FTX has been incredibly successful, and Bankman-Fried is now a multi-billionaire.

The whole SBF vibe seems weird to most people because although the billionaire philanthropist is a well-known character in our society, we normally expect the billionaire to come to philanthropy over time as part of a maturing process. When you’re young, you’re just focused on building the business and getting rich. Then you start doing charity as a form of personal/corporate PR, and then maybe you get more serious about it or potentially make the Bill Gates turn where you have a whole second career as a philanthropist. But SBF is still very young and still very much running his business.

But I think when you consider SBF’s literally life-long investment in consequentialist and EA ideas (he was blogging about utilitarianism as a college student), it’s clear that far from his political spending being a front for cryptocurrency, the cryptocurrency businesses just exist to finance effective altruism.

Now in the spirit of full disclosure in case you think I’m full of shit, I have never met the guy, but I am friendly with some of the people who work for him on the politics side. Kate worked for a bit at GiveWell. So we are not 100 percent arm’s length observers of this space, but we are not receiving money from the EAverse.

Of course if you’re very skeptical of cryptocurrency you might not find this reassuring — a person running a cryptocurrency exchange for the larger purpose of saving all of humanity might actually be more ruthless in his lobbying practices than someone doing it for something as banal as money. But I think it’s pretty clear that Bankman-Fried is genuinely trying to get the world to invest in pandemic prevention because it’s an important and underrated topic, and he’s correct about that.

Consequences are what matter

The most important part of all of this, the part that ties back into my more mainstream political commentary, is the importance of trying to do detached critical thinking about the consequences of our actions.

Max Weber, this blog’s namesake, talked about the “ethic of responsibility” as an important part of politics. You can’t just congratulate yourself on having taken a righteous stand; you need to take a stand that generates a righteous outcome. That means a position on abortion rights that wins elections and stops people from enacting draconian bans. It means crafting a politically sustainable approach to increasing immigration rather than just foot-dragging on enforcement. It means taking “Black lives matter” seriously during the 2020-to-present murder surge. And, yes, it means trying to drag our Covid-19 politics out of the domain of culture wars and into the world of pandemic prevention.

I don’t know whether all or most of the current EA conventional wisdom will stand the test of time, but I think the ethic of trying to make sure your actions have the desired consequences rather than being merely expressive is incredibly important.

[1] The “longtermist” view that has become ascendant recently and is articulated in MacAskill’s new book “What We Owe The Future” (and dates back at least to a 1992 paper by Derek Parfit and Tyler Cowen) says we shouldn't discount the future at all, but it's not clear to me how widely shared this opinion is or whether the main longtermist policy priorities really hinge on this detail.

[2] As it happens, the answer is not that much weight, but for reasons that specifically relate to the nature of Alameda’s business.

Alex Mallen @ 2022-05-23T15:53 (+66)

I'm concerned about getting involved in politics on an explicitly EA framework when currently only 6.7% of Americans have heard of EA (https://forum.effectivealtruism.org/posts/qQMLGqe4z95i6kJPE/how-many-people-have-heard-of-effective-altruism). There is a serious risk that many people's first impressions of EA will be bad or politicized, with bad consequences for the long-term potential of the movement, because political opponents will be incentivized to attack EA directly when attacking a candidate running on an EA platform. If people are exposed to EA in other, more faithful ways first, EA is likely to be more successful in the long term.

RyanCarey @ 2022-05-23T16:23 (+15)

Agreed. By the way, the survey is not representative, and people often say they've heard of things that they have not. I think the true number is an order of magnitude lower than the survey suggests.

Lorenzo @ 2022-05-23T19:38 (+8)

“If you tell the leading EA people that you are really only interested in helping save children’s lives in Africa, they will give you funny looks.”

I'm really surprised to read this!

I guess this points strongly towards EA being just longtermism now, at least in how "the leading EA people" present it.

timunderwood @ 2022-05-24T14:34 (+9)

It's definitely not just longtermism. At least before SBF's money started becoming a huge thing, there was still an order of magnitude more money going to children in Africa than to anything else. For that matter, I'm mostly longtermist mentally, and most of what I do (partly because of inertia, partly because it's easier to promote) is saving-children-in-Africa-style donations.

Lorenzo @ 2022-05-24T16:14 (+1)

Not sure how visible it is, but I was linking to this post: https://forum.effectivealtruism.org/posts/LRmEezoeeqGhkWm2p/is-ea-just-longtermism-now-1

Erich_Grunewald @ 2022-05-23T23:48 (+7)

For what it's worth, something like one fifth of EAs don't identify as consequentialist.

Nathan Young @ 2022-05-23T15:01 (+4)

I would recommend crossposting the entire article, since it's a public one.

(Matt, if you're reading this and would prefer us not to crosspost your articles, please say, but, uh, I think you'll get more clicks this way anyway.)

Matthew Yglesias @ 2022-05-23T19:21 (+13)

Feel free to cross-post!

Richard Y Chappell @ 2022-05-23T19:33 (+2)

Done, thanks!

Kevin Lacker @ 2022-05-23T14:42 (+4)

It is really annoying for Flynn to be perceived as “the crypto candidate”. Hopefully future donations encourage candidates to position themselves more explicitly as favoring EA ideas. The core logic that we should invest more money in preventing pandemics seems like it should make political sense, but I am no political expert.

Matthew Yglesias @ 2022-05-23T19:23 (+37)

This is also just an example of how growing and diversifying the EA funding base can be useful even if EA is not on the whole funding constrained ... a longtermist super PAC that raised $1 million each from 12 different rich guys who got rich in different ways would arguably be more credible than one with a single donor.