Improving Institutional Decision-Making: Which Institutions? (A Framework)

By IanDavidMoss @ 2021-08-23T02:26 (+86)

Summary

- Why we wrote this article: In support of our work to build a global community of practice around improving institutional decision-making (IIDM), the Effective Institutions Project has created a framework to help us make thoughtful judgments about which institutions’ decisions we should most prioritize improving as well as what strategies are most likely to succeed at improving them. We are publishing the framework as a way of fostering transparency and understanding of our approach.

- What’s in the article: This document describes a generalized theory of the mechanisms through which institutional improvement efforts can lead to globally desirable outcomes using both a neartermist and a longtermist lens. It offers guidance for analyzing the cost-effectiveness of such efforts in one of two ways: via a specific-strategy model that assumes the user has both a particular institution in mind and a concrete idea of how to improve its decision-making; or a generic-strategy model that is better suited for comparing many different institutions at once or gauging the overall upside of engaging with an institution. We demonstrate the use of these models with two contrasting case studies focusing on the City of Zürich and the United States Food and Drug Administration, respectively.

- Who should read this article: While we developed this framework primarily for use in our own projects, we expect it may be of interest to a range of other audiences, including:

    - Funders interested in deploying resources toward improving the performance of important institutions around the world (or some subset thereof)

    - Advocates and practitioners seeking to evaluate the potential of specific strategies to improve the decisions of a specific institution

    - Individuals seeking to build an impactful career focused on institutional effectiveness

    - Researchers exploring the intersection between a given cause area and the institutional/policy landscapes connected to it

- How to use this article: We welcome both feedback on the framework and adaptations of it for readers’ own purposes. Experienced readers will likely find the “Estimating engagement potential for a given institution” section and the case studies most content-rich.

Background

Actions taken by powerful institutions—such as central governments, multinational corporations, influential media outlets, and wealthy philanthropic funders—shape our lives in myriad and often hard-to-perceive ways. Most institutions prioritize the interests of a relatively narrow set of stakeholders, but the indirect effects of their decisions can influence outcomes far beyond the realm of their target audience. Even when institutions’ goals are fully aligned with the common good, moreover, the process of translating those goals into concrete actions is often plagued with errors of judgment and failures of imagination. If we could find ways to increase both the technical quality and alignment with the public interest of important decisions made by powerful decision-making bodies, we would greatly improve our collective capacity to address global challenges.

Making meaningful progress on this challenge requires a strong understanding of which institutions’ decisions we should prioritize improving and what strategies are most likely to succeed at improving them. This document offers an analytical framework to help readers make thoughtful judgments about both.

The framework presented here is the work of a particular group of people (largely English-speaking professionals from industrialized countries with representative governments), and our assumptions about how best to advance the common good draw from a particular set of inspirations (including consequentialism, the scientific method, and many ideas from effective altruism). With that said, we have tried to construct this framework to be flexible enough to accommodate a range of worldviews, and welcome adaptations to represent alternative ways of conceptualizing the common good.

Throughout this document, we refer variously to “institutions,” “key institutions,” and “powerful institutions.” The definition of an institution is not completely standardized across disciplines or academic fields, but in this context we mean a formally organized group of people, usually delineated by some sort of legal entity (or an interconnected set of such entities) in the jurisdiction(s) in which that group operates. In tying our definition to explicit organizations, we are not including more nebulous concepts like “the media” or “the public,” even as we recognize how broader societal contexts (such as behavior norms, history, etc.) help define and constrain the choices available to institutions and the people working within them. By “key” or “powerful” institutions, we are referring in particular to institutions whose actions can significantly influence the circumstances, attitudes, beliefs, and/or behaviors of a large number of morally relevant beings.

Finally, it’s important to state that this framework is an experiment. We intend to treat it as a living document, and expect it to evolve—or perhaps transform into something else entirely—as a wider range of interested parties encounter it and as we have more opportunity to use it in practice. We ask that you read the document with this caveat in mind, and invite you to share any criticisms or suggestions for improvement you may have with us.

This framework is one of several initiatives the Effective Institutions Project (formerly the improving institutional decision-making working group) has undertaken to better understand and make progress on the challenge of improving institutional decision-making. In addition to this document, we are also creating a directory of resources related to institutional decision-making, a synthesis of research findings relevant to the area, and community collaboration spaces for individuals and organizations interested in helping to build the field. Please contact improvinginstitutions@gmail.com to learn more.

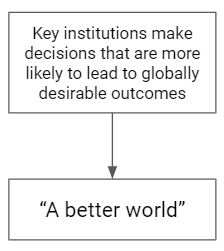

The role of institutional decisions in creating a better world

There would be little point in investing in better decision-making at institutions if we didn’t believe it would, in turn, result in globally desirable outcomes. Thus, it’s not enough for us to simply treat “improving decision-making” itself as the end goal: we must keep our eyes on the prize of a better world and attempt to specify the role that better decision-making can play in making that world possible.

The first challenge, therefore, is to define what we mean by a better world. We would never presume to have all the answers about how to make the world a better place, and there are several active communities of scholars and intellectuals already working to define what constitutes global progress and ways to conceptualize and measure that progress in practice. Rather than join that debate directly, therefore, we feel it is more productive for us to create a framework for connecting institutional decisions to global outcomes that takes moral uncertainty and worldview diversification into account. We currently believe that the best way to do this is to construct our framework in a modular way that allows for the substitution of different end goals that each represent different moral theories.[1] We will demonstrate that idea in this document with two definitions of the common good and their associated metrics.

One of the directions we’ll explore involves prioritizing institutional engagement around the idea of increasing global happiness or quality of life in the near term. In recent decades, scholars from a range of disciplines have increasingly used the broad concept of “wellbeing” as a stand-in for general welfare, creating a range of both subjective and objective wellbeing measures such as the Human Development Index, Social Progress Index, and the World Happiness Report. At present, the most holistic metric for wellbeing that we know of is the wellbeing-adjusted life year, typically abbreviated as WALY, WELBY, or WELLBY. While the operationalization of wellbeing-adjusted life years is a work in progress, the concept is a simple expansion of the quality-adjusted life year (QALY) metric that is already widely used in health economics to include non-health-based measures of quality of life. Among other practical applications, the What Works Centre for Wellbeing and London School of Economics have explored what it would look like to incorporate wellbeing measures into cost-effectiveness analyses.

Another definition of success draws heavily from the effective altruism movement and specifically from the philosophy of longtermism, or the idea that we should choose our actions based primarily on what would be best for the very long-run future because there could be many, many more sentient beings in the future than there are living today.[2] The challenge of conceptualizing and estimating costs and benefits for such a large and diffuse stakeholder group, the vast majority of whom can’t speak for themselves, is daunting to say the least. Longtermists have partially gotten around that challenge, however, by focusing on “existential risks,” meaning risks of events that would permanently, drastically reduce the potential for value in the future. If the future could contain vast numbers of morally relevant beings with flourishing lives, and those existential risks could irreversibly prevent them from existing or worsen their lives, it may be reasonable to simply focus on the proxy goal of existential risk reduction. In line with that, existential risk reduction is the longtermism-relevant metric we will use in our pilot adaptation of the framework here.[3]

That brings us to our second challenge: defining what we mean by better decisions, or to put it more precisely, articulating how the process of making decisions can change in ways that are more likely to lead to the globally desirable outcomes we’ve just identified. Before we go further, we should clarify that when we speak of “decision-making,” we are using that term broadly to refer to all of the aspects of the process leading up to an institution’s actions over which that institution has agency. Institutional decision-making, therefore, encompasses everything from determining the fundamental purpose of the organization to day-to-day details of program execution.

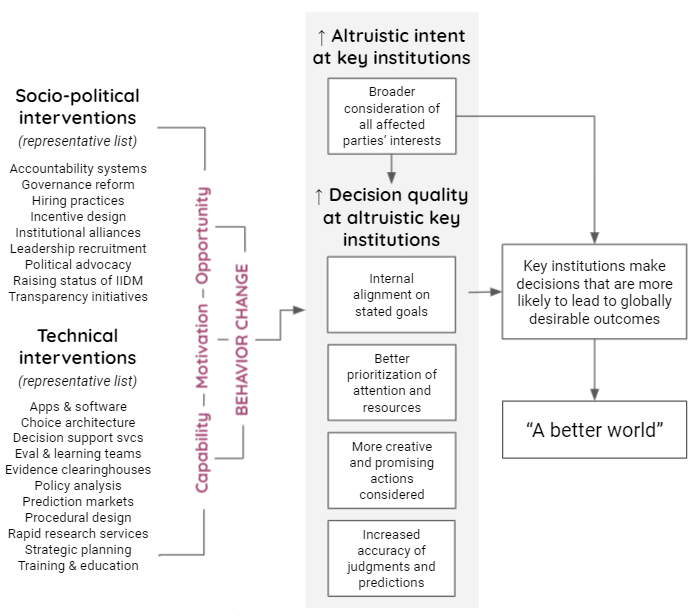

Within that broad scope, we see two overall pathways by which better institutional decisions can lead to better outcomes. First, an institution’s mission or stated goals can become more altruistic, in the sense that they more directly contribute to a vision of a better world. One way in which this can happen is for the institution to cultivate a discipline of caring about the interests and wellbeing of everyone affected by its decisions, not only its most direct or powerful or visible stakeholders. We might think of this principle as a version of moral circle expansion for institutions; the broader the set of stakeholder interests to which the institution is willing to be accountable in practice, the more altruistic we might consider the institution to be.[4]

Second, an institution can take steps to increase the likelihood that its decisions will lead to the outcomes it desires by, for example, enhancing internal alignment and focus on its goals and promoting more accurate team judgments. Because this type of work treats an institution’s goals as a given, we must be careful to avoid scenarios in which improving the technical quality of decision-making at an institution yields outcomes that are beneficial for the institution but harmful by the standards of the “better world” definition above. With that caveat in mind, there are a number of decision-making techniques and strategies that institutions which meet this minimal threshold can deploy to more consistently achieve their desired outcomes over time.

Intervention design for better decisions

There is no standardized definition of what constitutes a “good” decision in the academic literature, and researchers have attempted to operationalize it in various ways including by asking the decision-makers to rate their decisions after the fact, tying the decisions to outcomes such as corporate earnings or profits, etc. Given the role of luck and the diversity of success metrics necessary to capture a notion as broad as world improvement, it seems important to choose a definition for decision quality that is anchored to process rather than outcomes. One useful construct in this vein comes from the corporate consultancy Strategic Decisions Group, which collaborated with pioneering decision analysis researcher Ronald Howard in the 1980s to develop the decision quality (DQ) framework. Our current theory of change uses a modified version of this framework that includes the following four elements:

- Alignment between an institution’s stated goals and revealed preferences. Organizations are made up of people, each of whom has a complicated set of priorities and incentives that can sometimes conflict with those of the institution (this challenge is referred to as the principal-agent problem in management literature). If a cynical disregard for the mission is deeply embedded in an institution’s culture—for example, a nonprofit or government agency pursuing continued access to power over beneficial outcomes for constituents—it may make more sense for our purposes to treat such revealed preferences as the organization’s de facto goals.

- Prioritizing attention and resources for important decisions. This criterion involves ensuring that the amount of time, bandwidth, and other resources devoted to getting a decision “right” is at least roughly proportional to the potential consequences of that decision, taking into account considerations of urgency and other constraints. Among other things, it requires recognizing when there is an important decision for the institution to make and creating structure around that process as needed.

- Range and promise of options under consideration. Research suggests that decision-making teams in professional contexts tend to consider fewer alternatives than would be optimal in high-stakes situations. Deploying tools such as public consultation, human-centered design, brainstorming, blue-sky thinking, adaptive planning, vulnerability identification (for managing extreme risks), etc. can help institutions overcome these limitations and identify a broad set of options. Institutions can also increase the quality of their options by improving their capacity and reach in various ways.

- Accuracy of judgments and predictions. Decisions ultimately require making judgments about the future: what will happen as a result of choosing one path vs. others. Those judgments are informed by our understanding of both the conditions of the world around us and how that world is likely to respond to actions we take, the quality of the evidence and decision-making tools at our fingertips, and our own capacity to implement decisions. Thus, the more accurate our judgments and predictions are, the more likely it is that our decisions will yield the outcomes we want.

Specialists in various fields have developed a variety of methodologies for moving the needle on the decision quality outcomes described above. These can be divided broadly if imperfectly into technical interventions (e.g., training, prediction markets) designed to improve the skills and capabilities of the decision-makers and socio-political interventions (e.g., anti-corruption measures, advocacy campaigns) intended to shift incentives and power dynamics in ways that promote good decision-making. While interventions have varying degrees and quality of evidence behind them, choosing between them often requires attending to case-specific considerations like the organization’s cultural strengths and weaknesses and how likely a particular intervention is to get adopted and implemented faithfully. These ideas roughly map onto a policy development rubric known as the COM-B model, which argues that behavior change among a group of actors results from those actors having sufficient capability, opportunity, and motivation to make the change.

When designing any specific intervention to improve the decisions of a specific institution, we advise considering the intervention at least informally through the lens of the COM-B model in order to judge its prospects for successful adoption/implementation and identify any adjustments that can be made to improve those chances.

Estimating engagement potential for a given institution

With the above premises in place, we can proceed toward the construction of an evaluation framework for assessing the relative benefits of trying to improve the decision-making practices of any institution. We can structure this framework as if we were creating a quantitative model to estimate the costs and benefits of such attempts, even though at this early stage of our work there will necessarily be very wide bands of uncertainty associated with any estimates of those benefits in the resulting analysis.

In this section, we will consider two different ways of going about estimating these costs and benefits. The specific-strategy version of the model assumes that the user has both a particular institution in mind and a very concrete idea of how to improve its decision-making. Ideally, the proposed intervention should be rooted in a strong understanding of the institution’s internal practices and external influences, as it will be difficult to estimate its potential benefits and risks with much fidelity absent that understanding. This version of the model is best suited for comparing the relative potential of different strategies to improve the same institution, evaluating concrete proposals such as grant applications, or iterating on the details of an intervention that is still in the design stage.

By contrast, the generic-strategy version of the model assumes only a high-level familiarity with the institution and its role in the world. Rather than attempt to specify the intervention upfront, it attempts to assess the prospects of finding one or more worthwhile interventions to undertake and the potential upside of doing so. It is best suited for comparing across many institutions at once, especially when not all of them are well known to the user. Such comparisons may be relevant when developing a policy engagement strategy for a particular cause area, considering a portfolio of investments to make an ecosystem of institutions more resilient to unknown future threats, and assessing possible directions for a career in improving institutional decision-making, among other use cases.

Each of these variations in the model, in turn, can be adapted to use either of the definitions of “a better world” outlined in the previous section. Below, we lay out a set of questions designed to generate numeric inputs for the model. It’s critical to emphasize that many of these quantitative estimates will involve an enormous degree of uncertainty. For that reason we strongly recommend using a modeling method such as Monte Carlo simulation that is capable of handling very wide confidence intervals, treating the model outputs as suggestive rather than determinative, and taking care when communicating model results to a general audience not to convey a false level of precision or confidence in either the numbers or the conclusions.[5]
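As a minimal sketch of what a simulation-based approach could look like, the snippet below draws inputs from lognormal distributions fitted to very wide 90% confidence intervals and reports a range of outcomes rather than a single number. The choice of lognormal distributions, the helper names, and all of the input values are illustrative assumptions, not part of the framework itself.

```python
import math
import random

Z_90 = 1.6449  # z-score bounding the central 90% of a normal distribution


def lognormal_from_ci(low, high, rng):
    """Draw one sample from a lognormal whose central 90% interval is [low, high]."""
    mu = (math.log(low) + math.log(high)) / 2
    sigma = (math.log(high) - math.log(low)) / (2 * Z_90)
    return rng.lognormvariate(mu, sigma)


def simulate(n_draws=20_000, seed=0):
    """Simulate benefit per dollar under hypothetical, very uncertain inputs."""
    rng = random.Random(seed)
    draws = []
    for _ in range(n_draws):
        # Hypothetical inputs: benefit spans three orders of magnitude.
        benefit = lognormal_from_ci(1_000, 1_000_000, rng)  # units of the chosen metric
        cost = lognormal_from_ci(50_000, 500_000, rng)      # dollars
        draws.append(benefit / cost)
    return sorted(draws)


def percentile(sorted_draws, p):
    """Return the value at quantile p (0 to 1) of an already sorted list."""
    index = min(len(sorted_draws) - 1, int(p * len(sorted_draws)))
    return sorted_draws[index]


draws = simulate()
summary = {p: percentile(draws, p) for p in (0.05, 0.5, 0.95)}
print(summary)  # report the whole interval, not just the median
```

Reporting the 5th, 50th, and 95th percentiles together is one way to avoid conveying a false level of precision: the spread between the low and high ends is itself part of the result.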

For a user seeking to make casual or fast choices about prioritizing between institutional engagement strategies, for example a small consulting firm choosing among competing client offers, it’s perfectly acceptable to eschew calculations and instead treat the questions as general prompts to add structure and guidance to an otherwise intuitive process. Since institutional engagement can often carry high stakes, however, we recommend where possible at least making a rough, heuristic estimate of how much additional quantification would be useful, if not quantifying the decision more fully.

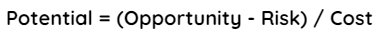

Specific-strategy model

At a conceptual level, the equation we propose for the specific-strategy model[6] combines three parameters: Opportunity, Risk, and Cost.

Below, we’ll examine each of these parameters in turn.

Opportunity

The Opportunity parameter focuses on the potential benefits of the strategy with respect to the outcome of interest. The considerations include some variables that are specific to the “better world” outcome used and others that are relevant no matter the definition of success. As noted above, these questions assume the existence of a concrete strategy to improve an institution’s decision-making, such as advocating for the adoption of a particular decision-making practice or technique, changing its management structure in a specific way, or hiring a strategic planning consultant. The strategy should be endorsed as feasible to implement by people who are at least broadly familiar with how the institution works. If there are multiple such strategies that meet the criteria, the questions below should be considered separately for each.

- Conditional on the change in the institution’s practices being implemented, what is the expected impact on [the metric of interest]?

- Conditional on the success of the strategy, how long are its effects likely to endure?

    - For example, we might expect a reform to be at greater risk of reversal in an environment in which different factions with disparate priorities are struggling for authority than one under stable control.

- If “we” were to proactively support or get involved in the abovementioned strategy, by how much would the strategy’s probability of success increase?

    - The definition of “we” in this question will depend on the user of the framework and the context. It could be specific to a particular organization or interest group, or it could be a much more general statement about a broad and loosely connected network of institutional reformers worldwide.

    - This parameter could either be estimated directly or by breaking down the ways in which the user’s involvement might strengthen implementation of the strategy according to its theory of change.

- If the expected impact of the strategy (not just its chances of success) may change as a result of “our” involvement, what is the magnitude (in [metric of interest]) of that change?

- If there are unusually plausible second-order effects of the strategy (e.g., lessons learned that can be applied to strategies to reform similar institutions in the future), what is the magnitude in [metric of interest] of that expected impact?

    - We recommend being conservative when filling out this term since all interventions can have indirect effects, but it may be justified if the work is especially high-profile or there are known parties closely following its progress.

- (CALCULATE) What impact on [metric of interest] is likely to result from “us” getting involved in this strategy?

Risk

With any intervention, there is always a risk that the desired result won’t be achieved. This section is intended to capture something distinct: the risk that involvement in a strategy will actively make things worse.

Relevant questions:

- What is the probability that “our” involvement in this strategy to make change in this institution would not just fail to help, but reduce either the chances of that strategy succeeding or the impact of the strategy if it did succeed? If this is a concern, what is the potential magnitude of these risks?

- What are the chances that a failed attempt to execute on this strategy at the next available opportunity will crowd out a more successful strategy to change practices at this institution in the next five years (whether the latter involves us or not)? If this is a concern, what is the potential magnitude of these risks, and how much might our involvement exacerbate them?

- What are the chances that our involvement in a failed attempt to execute on this strategy will reduce our or others’ ability to create change at other important institutions in the future?

- What are the chances that a successfully implemented strategy to change decision-making practices at this institution will result in a worse outcome than the alternative due to insufficient concern for indirect stakeholders on the institution’s part (e.g., the institution uses its increased technical sophistication to increase the wellbeing of its direct constituents at the expense of everyone else)? If this is a concern, what is the potential magnitude of this risk?

Cost

Costs are an estimate of upfront and/or ongoing investments required to sustain a strategy and its implementation. We recommend framing costs in purely economic terms (e.g., dollars) for the sake of simplicity, being sure to include implicit costs like staff time at least approximately. By including a cost variable, we can more fairly compare interventions that are expensive but offer high upside, such as those that require influencing public opinion or behavior at scale, with others that are more narrowly scoped. Cost estimates should be confined to the incremental costs incurred by the user getting actively involved in a strategy—for example, if a funder is considering supporting an advocacy campaign to improve anti-corruption protections at the local government level, the funder should include only the cost of the contributions it itself will be making, just as we are only focusing on the marginal impact those funds are likely to have on the success of the strategy.
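To make the three parameters concrete, here is a rough point-estimate sketch of how the answers to the Opportunity, Risk, and Cost questions might be combined. The arithmetic (net benefit per dollar as Opportunity minus Risk, divided by Cost) and the way the Opportunity inputs are multiplied together are illustrative assumptions rather than the framework's official equation, and every field name and number is hypothetical.

```python
from dataclasses import dataclass


@dataclass
class SpecificStrategy:
    """Hypothetical container for answers to the specific-strategy questions."""
    impact_per_year: float   # expected impact on the metric per year, if implemented
    duration_years: float    # how long the effects are expected to endure
    success_uplift: float    # increase in probability of success from "our" involvement
    impact_change: float     # expected change in impact due to "our" involvement
    second_order: float      # expected second-order effects (be conservative)
    expected_harm: float     # Risk: probability-weighted magnitude of making things worse
    cost: float              # incremental cost of "our" involvement, in dollars

    def opportunity(self) -> float:
        # Assumed combination: marginal success probability times total impact,
        # plus the two additive adjustment terms.
        core = self.success_uplift * self.impact_per_year * self.duration_years
        return core + self.impact_change + self.second_order

    def net_benefit_per_dollar(self) -> float:
        # Assumed form of the model: (Opportunity - Risk) / Cost.
        return (self.opportunity() - self.expected_harm) / self.cost


example = SpecificStrategy(
    impact_per_year=2_000,
    duration_years=5,
    success_uplift=0.10,
    impact_change=100,
    second_order=50,
    expected_harm=150,
    cost=200_000,
)
print(example.opportunity(), example.net_benefit_per_dollar())
```

In practice each field would be a distribution rather than a single number, so a calculation like this would sit inside a simulation loop and be compared across candidate strategies for the same institution.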

Generic-strategy model

The generic-strategy model focuses on an institution’s general level of influence on the outcome(s) of interest as well as the degree to which efforts to improve its decision-making are expected to be tractable and neglected. The generic-strategy model is focused on establishing a reasonable upper bound on the potential benefit from engaging with an institution, so we should expect that any concrete intervention modeled for the same institution using the specific-strategy model will most likely not look quite as promising.

While still framed as a cost-effectiveness analysis, the generic-strategy model also takes inspiration from the ITN (Importance, Tractability, Neglectedness) framework commonly used in effective altruism. The questions about the institution’s influence on the world map to Importance, the questions about the potential difference that intentional interventions to improve its decision-making could make cover Tractability, and the question about how much of that difference would be captured anyway by other reformist influences establishes Neglectedness.

The details of how the generic-strategy model is constructed depend on the metric(s) chosen for the “better world” outcome. Below, we present two separate adaptations for WELLBYs and existential risk, respectively.

WELLBYs (for near-term maximization of global welfare)

Relevant questions:

- How many WELLBYs (wellbeing-adjusted life years) are at stake as a result of this institution’s decisions in a typical year?

    - Estimate roughly to an order of magnitude. By “at stake,” we mean the difference between plausible best-case and worst-case scenarios of an institution’s performance during a typical year (or in the case of the next question, an “extreme scenario”). For advice on how to arrive at this estimate, see the “Tips for estimating WELLBYs” inset below.

- How many WELLBYs are at stake as a result of this institution’s decisions in an extreme scenario?

    - Estimate roughly to an order of magnitude. An “extreme” scenario, for the purposes of this model, means a scenario in which the consequences of the institution’s decisions are about as dramatic as they can possibly be. For example, consider the difference between the consequences of the US military’s decisions during a nuclear conflict vs. its decisions during an uneventful peacetime year.

- In expectation, how many of the next 10 years will fit the extreme scenario rather than the typical scenario?

    - For example, if you think there’s roughly a 1 in 100 chance every year of an extreme scenario unfolding, you could use 0.1 for the input. If you have a lot of uncertainty about the likelihood of such a scenario and you’re using a simulation-based modeling method, you could use a range of estimates reflecting an 80% or 90% confidence interval.

    - Note the choice of 10 years is arbitrary, and you can select a different time horizon as long as you use it consistently throughout the model. Using a longer time frame may be important for considering unlikely scenarios, but can also lead to overestimating the stability of institutions.

- (CALCULATE) How many WELLBYs are likely to be at stake from this institution’s decisions over the next 10 years?

- On a scale of 0 to 100 where 0 represents the institution’s worst possible performance and 100 represents its best possible performance over that period of time, where would we expect its average performance to be absent any intentional interventions to improve its decision-making or otherwise change its practices?

- Now imagine that there was a massive and well-designed (yet realistic) effort over 10 years to improve the institution’s decisions. On that same scale of 0 to 100, where would we expect the institution’s average performance to be with these interventions in place?

- What proportion of the benefits from interventions like these do we think the institution would realize over the next 10 years anyway as a result of efforts by other reformers with aligned goals?

- (CALCULATE) What is the potential upside of engaging with the institution to improve its decisions?
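The two (CALCULATE) steps above could be sketched as follows. The formulas are one plausible reading of the questions rather than a specification from the framework: WELLBYs at stake are weighted by the expected number of typical and extreme years, and the upside is the performance gain on the 0 to 100 scale, discounted by the share other reformers would achieve anyway. All input values are hypothetical.

```python
def expected_extreme_years(annual_probability, horizon_years=10):
    """Expected number of years in the horizon that fit the extreme scenario."""
    return annual_probability * horizon_years


def wellbys_at_stake(typical_per_year, extreme_per_year, extreme_years, horizon_years=10):
    """Assumed weighting: typical years plus extreme years over the horizon."""
    typical_years = horizon_years - extreme_years
    return typical_per_year * typical_years + extreme_per_year * extreme_years


def potential_upside(at_stake, baseline_score, improved_score, realized_anyway):
    """Assumed upside: share of the stake unlocked, net of other reformers' efforts."""
    performance_gain = (improved_score - baseline_score) / 100
    return at_stake * performance_gain * (1 - realized_anyway)


# The 1-in-100 annual chance from the example in the text gives 0.1 extreme years.
extreme_years = expected_extreme_years(0.01)

# Hypothetical order-of-magnitude inputs.
at_stake = wellbys_at_stake(
    typical_per_year=1e6,
    extreme_per_year=1e8,
    extreme_years=extreme_years,
)
upside = potential_upside(
    at_stake,
    baseline_score=50,    # expected performance with no intervention
    improved_score=55,    # expected performance with a massive, realistic effort
    realized_anyway=0.4,  # share captured by other reformers regardless
)
print(f"{at_stake:,.0f} WELLBYs at stake; potential upside of about {upside:,.0f}")
```

Note how, with these made-up inputs, the rare extreme scenario contributes about as much to the total stake as the nine-plus typical years combined, which is why the extreme-scenario questions are asked separately.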

[Inset: Tips for estimating WELLBYs]

Existential risk (for long-term maximization of global welfare)

Relevant questions:

- Do this institution’s decisions have material impact on any specifically noteworthy existential risk scenarios (e.g., full-scale nuclear war, artificial general intelligence, global pandemics)?

- For each existential risk scenario:

    - What is our current expectation of the likelihood of the scenario over the next century?

        - Note: the selection of a 100-year time horizon is arbitrary. A different time horizon can be substituted as long as it is used consistently throughout the model.

    - How much of that likelihood do we think the institution could reasonably influence through its actions?

- What is the institution’s expected impact on all other existential risks, including those yet to be identified?

    - As one reference point, Toby Ord’s The Precipice estimates the odds of the world experiencing some kind of existential catastrophe over the next 100 years at roughly 1 in 6, with the odds from “unforeseen” anthropogenic risks at ~1 in 30.

- (CALCULATE) What proportion of existential risk is attributable to the institution’s actions over the next century?

- Conditional on the institution being in a position to determine the fate of humanity at some point in the next 100 years, to what degree should we expect that intentional interventions to improve its decision-making undertaken between now and then might improve the outcome?

- What proportion of the benefits from interventions like these do we think the institution would realize over the next 100 years anyway as a result of efforts by other reformers with aligned goals?

- (CALCULATE) What is the potential upside of engaging with the institution to improve its decisions?
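A comparable sketch for the existential risk adaptation is below. It assumes that the risk attributable to the institution is the sum, across named scenarios, of scenario likelihood times the share the institution could influence, plus a separate term for all other risks, and it expresses the result in absolute probability terms rather than as a share of total risk. These formulas, the scenario names, and every number are hypothetical placeholders, not estimates from the framework or from any cited source.

```python
# Hypothetical scenario inputs: likelihood over the next century and the share of
# that likelihood the institution could reasonably influence through its actions.
scenarios = {
    "scenario_a": {"likelihood": 0.03, "influence": 0.10},
    "scenario_b": {"likelihood": 0.001, "influence": 0.50},
}
other_risk_attributable = 0.0005  # hypothetical impact on all other existential risks


def attributable_risk(scenarios, other):
    """Assumed aggregation: sum of likelihood x influence, plus the catch-all term."""
    named = sum(s["likelihood"] * s["influence"] for s in scenarios.values())
    return named + other


def potential_upside(attributable, improvement, realized_anyway):
    """Assumed upside: risk reduction achievable, net of other reformers' efforts."""
    return attributable * improvement * (1 - realized_anyway)


attributable = attributable_risk(scenarios, other_risk_attributable)
upside = potential_upside(
    attributable,
    improvement=0.10,     # degree to which interventions might improve the outcome
    realized_anyway=0.5,  # share other reformers would achieve regardless
)
print(f"attributable risk: {attributable:.4f}; potential reduction: {upside:.5f}")
```

Because the resulting numbers are tiny probabilities, differences between institutions are easier to compare as ratios or orders of magnitude than as absolute values.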

The notion of arriving at numerical estimates for the above considerations may seem impossibly challenging, but remember that the goal of the generic-strategy version of the framework is only to establish a very rough upper bound on the potential of engaging with the institution in question. It is meant as a starting point for detecting differences between institutions that are large enough to be meaningful but not so large as to be self-evident.

Refining initial estimates

The above model templates should suffice as a first pass at understanding the role that an institution and its decisions can play in shaping the world for better or worse. For many situations in which general guidance is all that’s needed or feasible at that juncture, it’s fine to stop there. When faced with a highly consequential choice and some time to make it, however, it could be valuable to combine the framework with a more robust and issue-specific approach to strategy and policy design.

The biggest limitation of the models presented in the previous section is that they leave the mechanisms between an institution’s actions and the ultimate outcomes of interest unspecified. While we can still estimate the relationship between those two factors even with this limitation, the estimates will necessarily have a lot of uncertainty due to the complex systems in which the institution operates.

When considering whether to invest in specific strategies to leverage an institution’s influence to achieve outcomes of interest (whether that investment looks like deploying funding, orienting a team’s work, planning a career, etc.), it’s helpful to have a detailed understanding of both the broader systems in which the institution operates and the web of causal factors that could facilitate or hinder the quest for the intended outcomes. Each of these assets can help the model-builder break down the overall situation into more component parts, which in turn can foster more precise and accurate estimates for how changes in an institution’s actions might contribute toward the desired goal.

Many tools exist to help answer such questions. There is an established discipline of systems mapping that has found adoption in some corners of the social sector, such as Democracy Fund’s systems map for public trust in US government institutions. The relationships between different elements and levers of such a system can be mapped onto a theory of change, which in turn can form the foundation of a more detailed version of the framework above.

Similarly, users of the framework can improve upon it by integrating issue- or cause-specific resources laying out connections between institutional actions and outcomes of interest. For example, the Sendai Framework, endorsed by the UN General Assembly in 2015 following the Third World Conference on Disaster Risk Reduction, lays out a detailed 15-year road map aimed at “the substantial reduction of disaster risk and losses in lives, livelihoods and health and in the economic, physical, social, cultural and environmental assets of persons, businesses, communities and countries.” For WELLBYs, the UK’s What Works Centre for Wellbeing has put together a living knowledge bank of evidence regarding the drivers of wellbeing and begun working with local authorities on developing overall wellbeing-maximization strategies for their respective jurisdictions.

It bears repeating that when we refer to “the framework” or “the model” in this document, we are not only talking about numbers. While the design of the framework is oriented around a cost-effectiveness analysis, such quantitative treatments are of little value if they are not grounded in a strong qualitative understanding of the situation at hand. Tools like those mentioned above can be as useful for improving that baseline understanding as for improving calculations.

Case studies

To help illustrate how this framework can be used in practice, we’ve provided two contrasting case studies. One, the story of an effort to increase the city of Zürich’s international development aid budget and direct it toward evidence-based interventions, is based on a real-life ballot initiative that became law in 2019. The other, a proposal to bolster the ability of the United States Food and Drug Administration (FDA) to respond to emergencies, is inspired by the important role the agency played during the COVID-19 pandemic in 2020 and 2021. The two case studies differ in several other important ways:

- The Zürich case study uses the neartermist benchmark of wellbeing-adjusted life years, while the FDA case study focuses on reductions to existential risk.

- The Zürich case study is based on an intervention that already took place, while the FDA case study is forward-looking.

- The FDA case study highlights changes that could be undertaken internally by agency leadership, while the Zürich case study involves a public-facing campaign to make changes to the way the city makes decisions.

- The Zürich case study is focused on a specific strategy to make a specific change within a specific department of the institution, whereas the FDA case study is more speculative and applies across the institution as a whole.

These contrasts help show the breadth of contexts to which the framework can be applied.

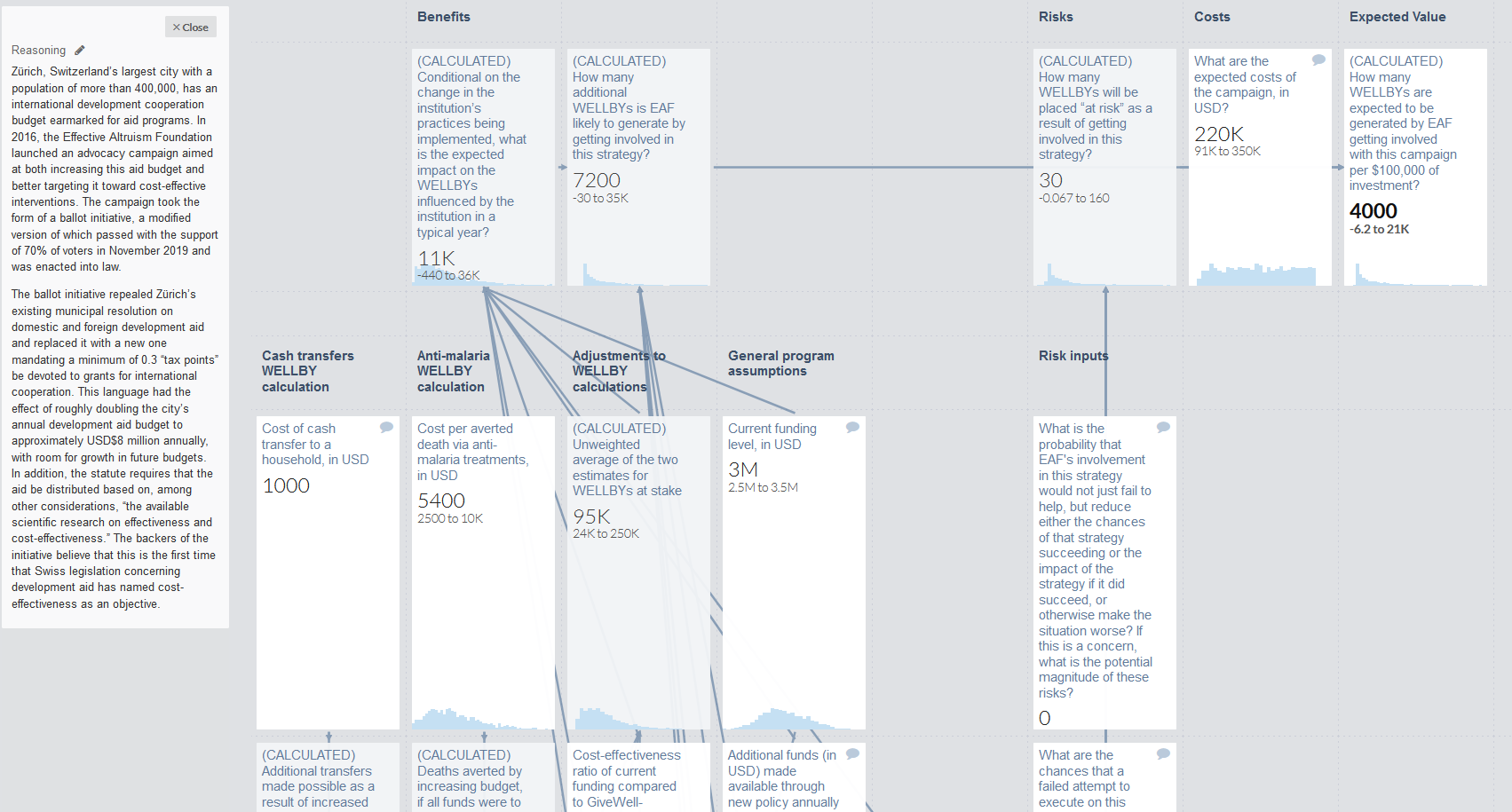

Case study #1: Reforming a city government’s development aid policies

Zürich, Switzerland’s largest city with a population of more than 400,000, has an international development cooperation budget earmarked for aid programs. In 2016, the Effective Altruism Foundation launched a campaign aimed at both increasing this aid budget and better targeting it toward cost-effective interventions. The campaign took the form of a ballot initiative, a modified version of which passed with the support of 70% of voters in November 2019 and was enacted into law.

The ballot initiative repealed Zürich’s existing municipal resolution on domestic and foreign development aid and replaced it with a new one mandating that a minimum of 0.3 “tax percentage points” be devoted to grants for international cooperation. This language had the effect of roughly doubling the city’s development aid budget to approximately USD$8 million annually, with room for growth in future budgets. In addition, the statute requires that the aid be distributed based on, among other considerations, “the available scientific research on effectiveness and cost-effectiveness.” The backers of the initiative believe that this is the first time that Swiss legislation concerning development aid has named cost-effectiveness as an objective.

Why might a ballot reform initiative like this represent an improvement in institutional decision-making? From the perspective of the first definition of “a better world” outlined in this framework, both subjective and objective measures of wellbeing correlate with material wealth, especially for people living below or just above the poverty line. As wealth increases, however, its impact on wellbeing diminishes. Thus, redistributing wealth from the relatively affluent to the world’s poorest people is a way to increase overall global wellbeing as well as to reduce wellbeing inequality. Furthermore, the initiative’s requirement that cost-effectiveness be taken into account when choosing aid recipients ought to result in better outcomes from this redistribution than would otherwise be the case, although there is a risk that poor-quality implementation of this statute could erase any expected benefits (e.g., by creating excessive bureaucracy or perverse incentives among organizations competing for aid).

We can establish an upper bound on the benefits to this redistribution by imagining what would happen if the aid were directed exclusively to the most cost-effective charities fighting global poverty. Since its formation in 2007, GiveWell has specialized in finding such charities and estimating their cost-effectiveness. As of 2021, for example, GiveWell has estimated that GiveDirectly, one of its recommended charities, can roughly double the baseline annual consumption of a household in sub-Saharan Africa via a one-time unconditional cash transfer of about $1,000; meanwhile, a variety of anti-malaria groups can avert a child’s death in the same region for about $4,000. The Happier Lives Institute has, in turn, done pioneering work to translate these outcomes into WELLBYs.

Using these benchmarks as a guide and adjusting for expectations that not all of Zürich’s grants will be this cost-effective in practice, our simulation model suggests that the new ballot initiative will generate approximately 700 to 25,000 WELLBYs a year (using an 80% confidence interval), with the impacts likely to endure for decades.[9]
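The article does not say which distributions its simulation model uses. As a minimal sketch, assuming a lognormal distribution (a common choice for positive quantities that span orders of magnitude), an 80% interval such as 700 to 25,000 can be read as the 10th and 90th percentiles and sampled as shown below. The function names, sample size, and seed are hypothetical choices made for illustration.

```python
import math
import random
from statistics import NormalDist


def lognormal_from_80ci(low, high):
    """Return (mu, sigma) of a lognormal whose 10th/90th percentiles are low/high.

    Assumption: the 80% interval is symmetric in log space.
    """
    z90 = NormalDist().inv_cdf(0.90)  # standard normal 90th percentile
    mu = (math.log(low) + math.log(high)) / 2
    sigma = (math.log(high) - math.log(low)) / (2 * z90)
    return mu, sigma


def sample_annual_wellbys(n, low=700, high=25_000, seed=0):
    """Draw n samples of annual WELLBYs from the assumed lognormal."""
    rng = random.Random(seed)
    mu, sigma = lognormal_from_80ci(low, high)
    return [rng.lognormvariate(mu, sigma) for _ in range(n)]


draws = sorted(sample_annual_wellbys(100_000))
p10 = draws[len(draws) // 10]
p50 = draws[len(draws) // 2]
p90 = draws[9 * len(draws) // 10]
print(f"10th: {p10:,.0f}  median: {p50:,.0f}  90th: {p90:,.0f}")
```

Samples like these can then be multiplied through the other uncertain inputs (duration, probability of success, and so on) draw by draw, which is what lets a simulation report an interval rather than a single point estimate. The shape of the distribution is an assumption here, so any median or mean it implies should not be read as the article's own figure.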

Because this case study involves consideration of a concrete proposal to change an institution’s practices, we will use the specific-strategy version of the model to estimate its costs and benefits. The model inputs are demonstrated below, and you can view a live version of it here.

Opportunity

| Question | Estimate |

|---|---|

| Conditional on the change in the institution’s practices being implemented, what is the expected impact on the WELLBYs influenced by the institution in a typical year? | 700 to 25,000 annually per calculation above. |

| Conditional on the success of the strategy, how long are its effects likely to endure? | The Effective Altruism Foundation estimated a 25-80 year time horizon in its own cost-benefit analysis after the initiative was passed. As a sanity check, the previous law had stood for nearly 50 years at the time EAF launched its ballot initiative, and any future changes to the law are likely to be framed in the context of the current law. |

| If “we” were to proactively support or get involved in the abovementioned strategy, by how much would the strategy’s probability of success increase? | We will approach this and following parameters from the perspective of the Effective Altruism Foundation, which initiated and fundraised for the campaign. There had not been any prior momentum for such a change in city laws, so we can estimate the probability of that change taking place absent EAF’s efforts at roughly zero. At the time of the fundraising campaign, EAF estimated the probability of success at 9%. |

| If the impact of the strategy (not just its chances of success) may change as a result of EAF’s involvement, what is the magnitude in WELLBYs of that expected impact? | Not applicable in this situation since the strategy did not exist prior to EAF’s involvement. |

| If there are unusually plausible indirect effects of the strategy (e.g., lessons learned that can be applied to strategies to reform similar institutions in the future), what is the magnitude in WELLBYs of that expected impact? | EAF has proposed that this model could be replicated in other cities in Switzerland and around the European Union. We know of two other ballot initiatives in Switzerland that have been directly inspired by this effort. Since these were anticipated by the designers of the campaign when launching the initiative, we will model their combined expected impact at 50-150% of that of the Zürich campaign, taking into account the smaller populations involved. We will also take into account that the initiatives would have been unlikely to proceed if the original had been unsuccessful. These calculations yield a present value of additional indirect benefit between 4 and 1,500 WELLBYs. |

| (CALCULATE) How many additional WELLBYs is EAF likely to generate by getting involved in this strategy? | The total present value of EAF’s involvement, before taking costs and downside risks into account, is estimated to be between 40 and 20,000 WELLBYs. |

Risks

| Question | Estimate |

|---|---|

| What is the probability that “our” involvement in this strategy to make change in this institution would not just fail to help, but reduce either the chances of that strategy succeeding or the impact of the strategy if it did succeed, or otherwise make the situation worse? If this is a concern, what is the potential magnitude of these risks? | There was no preexisting strategy to ruin, and according to EAF personnel, it was hard to imagine given the progressive bent of the city’s politics that any backlash would place the city’s existing development aid budget in jeopardy. We will thus estimate this risk at roughly zero. |

| What are the chances that a failed attempt to execute on this strategy at the next available opportunity will crowd out a more successful strategy to change practices at this institution in the next five years (whether the latter involves us or not)? If this is a concern, what is the potential magnitude of these risks, and how much might our involvement exacerbate them? | Since there was no alternative strategy on the table or being contemplated by other parties, the risk here seems minimal. |

| What are the chances that our involvement in a failed attempt to execute on this strategy will reduce our or others’ ability to create change at other important institutions in the future? | Other than the opportunity cost of time and funds put towards this initiative (already covered under “Costs” below), it’s possible that a poor implementation of this campaign might have deterred planned future campaigns in other cities in Switzerland. If we estimate that those campaigns would have had a 5% chance of proceeding later on without the Zürich campaign being tried, and that there was a 10% chance of “avoidable” failure of the Zürich campaign, that yields an estimate of 0 to 80 WELLBYs at risk. |

| What are the chances that a successfully implemented strategy to change decision-making practices at this institution will result in a worse outcome than the alternative due to insufficient concern for indirect stakeholders on the institution’s part (e.g., the institution uses its increased technical sophistication to increase the wellbeing of its direct constituents at the expense of everyone else)? If this is a concern, what is the potential magnitude of this risk? | There are some limitations on the way the funds can be spent which may hinder the city from allocating its resources as cost-effectively as possible (e.g., only Swiss organizations are eligible). However, these limitations would have been in place regardless, and the cost-effectiveness guidelines should help to bring the city’s interests and global beneficiaries’ interests into at least somewhat closer alignment, so there does not seem to be cause for significant concern here. |

| (CALCULATE) How many WELLBYs are “at risk” as a result of getting involved in this strategy? | 0 to 80 |

Costs

The Effective Altruism Foundation predicted that the campaign would cost about USD$100,000 at the time it launched the initiative; the final tab ended up closer to $220,000.

Conclusion

Taking these inputs together, the EAF ballot campaign is estimated to have generated between 40 and 10,000 WELLBYs (median 1,600) for every $100,000 spent. This compares to 180 to 900 WELLBYs (median 400) per $100,000 for cash transfers and 1,500 to 8,500 WELLBYs (median 4,000) per $100,000 for anti-malaria treatments.

Case study #2: Helping a critical regulatory body better prepare for emergencies

The United States Food and Drug Administration (FDA) is one of the most important government regulators in the world, exerting influence over some 25% of consumer spending in the United States. The FDA plays a critical role in American society, approving medical treatments and devices, overseeing food safety, regulating veterinary care, and more.

The FDA’s reach was especially apparent during the COVID-19 pandemic, when agency decisions were central for shaping the containment strategy and, later, vaccine rollout in the United States. While many of these decisions ultimately led to good outcomes (e.g., measures taken to ensure the Pfizer mRNA vaccine was ready to roll out immediately upon approval), the FDA faced criticism for several missteps and missed opportunities throughout the pandemic, including:

- Its failure to expedite the approval process for COVID-19 tests throughout most of February 2020, which substantially contributed to the collapse of the country’s initial containment efforts

- Waiting three weeks to convene a panel to review the emergency use authorization for the Pfizer vaccine after it was submitted in November 2020 during the biggest wave of the pandemic, which plausibly resulted in thousands of unnecessary deaths

- The decision to pause authorization for the Johnson & Johnson vaccine in mid-April 2021 over a very small number of reported blood clots, which may have contributed to the decline in vaccination rates across the country

- Declining to authorize vaccines that had been approved in other countries until a phase 3 trial had been conducted in the United States, leading to a strange situation in which the country allowed a large stockpile of AstraZeneca vaccines to sit unused during a critical period of supply shortage

A common thread to these critiques is that the agency found itself needing to make extraordinarily consequential decisions on a much tighter than usual timeline with imperfect information. These are exactly the sorts of situations that can benefit most from focused efforts to improve institutional decision-making. In particular, a more comprehensive and well-thought-out agency-wide emergency protocol could help guide the FDA toward better bets in analogous future situations.

While the most obvious application of such a protocol would be toward preventing a future pandemic, given the agency's broad regulatory purview, the FDA could plausibly loom large in efforts to prevent or mitigate other existential risk scenarios as well. For example, in the case of a widespread agricultural disaster or food pathogen crisis, the FDA might be responsible for approving and overseeing experimental methods of generating nourishment cost-effectively and at scale. And as AI safety becomes a more and more pressing concern, the FDA’s status as a regulator of products that employ advanced AI is likely to become increasingly salient. Improved decision-making could help the FDA avoid at least two types of mistakes that could contribute to existential risk: 1) approving products, medications, etc. that should not be approved, resulting in an avoidable disaster; and 2) not approving (or not approving fast enough) items that should be approved, and thereby missing an opportunity to stop a crisis from mushrooming into an x-risk scenario.

Since there are many design choices that would need to be made in order to ensure any intervention is fit for purpose, at this stage we will use the generic-strategy version of the framework to estimate the potential benefits of engaging at all. Based on the initial results, we can then determine if the concept seems worth exploring further. Furthermore, as another contrast with the Zürich case study above, we will avoid using a simulation model and instead use simple calculations to get a rough idea of the magnitude of potential impact.

Generic-strategy model

| Question | Estimate |

|---|---|

| Do this institution’s decisions have material impact on any specifically noteworthy existential risk scenarios (e.g., full-scale nuclear war, artificial general intelligence, global pandemics)? | Mainly pandemics, with a large-scale food supply crisis next most likely. |

| For pandemics: | |

| What is our current expectation of the likelihood of the scenario over the next century? | According to Michael Aird’s database of existential risk estimates, estimates of the combined existential risk from natural and engineered pandemics over the next 100 years range from 0.0002% to 3%. |

| How much of that likelihood do we think the FDA could reasonably influence through its actions? | Given its very consequential role in determining the course of the COVID-19 pandemic in one of the world’s largest countries, it seems reasonable to assign a number that is between 0.1% and 5% of the total risk from pandemics estimated above. |

| For food system collapse: | |

| What is our current expectation of the likelihood of the scenario over the next century? | Some related estimates included in Aird’s database cited above include estimates of existential risk from nuclear war, which could include agricultural collapse, at 0.005% to 1%, and from climate change at 0.01% to 1% over the next 100 years. Overall, let’s set the risk of food system collapse regardless of cause between 0.005% and 1%. |

| How much of that likelihood do we think the FDA could reasonably influence through its actions? | There is a greater variety of potential pathways here that might or might not involve the FDA, so we will set this an order of magnitude lower than the estimate for pandemics: 0.01% to 0.5%. |

| For all other existential risks, including those yet to be identified: | |

| What is our current expectation of the likelihood of these risks over the next century? | Estimates of the “total risk” from all scenarios from the Aird database range from roughly 1-20% over the next 100 years. Subtracting out the risks noted above leaves us with a range of 1% to 16%. |

| How much of that likelihood do we think the FDA could reasonably influence through its actions? | While it’s certainly plausible that the FDA could play a role, the mechanisms by which that could take place are highly speculative. A range of 0.001% to 0.1% seems defensible as a first guess, though further study may be advisable before proceeding with any major commitments. |

| (CALCULATE) What proportion of existential risk is attributable to the FDA’s actions over the next century? | Since we are not building a simulation model for this back-of-the-envelope example, we’ll calculate a naive range of possibilities from the upper and lower bounds cited earlier. That process yields a range of effectively zero to 0.15 percentage points for pandemics, effectively zero to 0.005 percentage points for food system collapse, and 0.00001 to 0.016 percentage points for all other existential risks, for a sum of 0.00001 to 0.171 percentage points. Put another way, theoretically the actions of the US FDA could be important enough to have as much as a 1-in-600 chance of determining the future of the human race over the next century, although a more plausible estimate would be more like a 1-in-10,000 chance. |

| Conditional on the FDA being in a position to determine the fate of humanity at some point in the next 100 years, to what degree should we expect that intentional interventions to improve its decision-making undertaken between now and then might improve the outcome? | Given that there is a strong public interest in the FDA making optimal decisions, that doing so is consistent with its mission, and that relatively simple interventions such as more effective scenario planning could drive significant improvement, we might surmise that a relatively large proportion—say, 60%—of the FDA’s counterfactual contribution to existential risk scenarios could be eliminated through intentional efforts to improve the agency’s decisions. |

| What proportion of the benefits from interventions like these do we think the institution would realize over the next 100 years anyway as a result of efforts by other reformers with aligned goals? | It seems likely that there will be attention on the FDA’s role in emergencies over the next century, but less certain that the resulting reforms or policy changes will be effective at preventing existential risks absent a clear and specific focus on them. Let’s say about half of the upside will be captured by counterfactual efforts. |

| (CALCULATE) What is the potential upside of engaging with the FDA to improve its decisions? | Roughly combining the figures above, we find that intentional engagement with the FDA to improve its decisions would reduce existential risk probability by at most about 0.05 percentage points over the next century, and more likely something in the range of 0.0001 to 0.001 percentage points. |
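The back-of-the-envelope arithmetic in the table above can be reproduced in a few lines. This is a sketch rather than the authors' own model: the input ranges, the 60% figure, and the "about half" figure come from the table, while the variable and function names are hypothetical. Multiplying lower bounds together and upper bounds together gives the naive range described in the table, not a confidence interval.

```python
# All ranges are (low, high) in percent, taken from the table above.
scenarios = {
    "pandemics": {"risk": (0.0002, 3.0), "fda_share": (0.1, 5.0)},
    "food system collapse": {"risk": (0.005, 1.0), "fda_share": (0.01, 0.5)},
    "all other risks": {"risk": (1.0, 16.0), "fda_share": (0.001, 0.1)},
}
IMPROVABLE = 0.60      # share of the FDA's contribution that better decisions could remove
COUNTERFACTUAL = 0.50  # share of that upside other reformers would capture anyway


def attributable_pp(risk_pct, share_pct):
    """Percentage points of existential risk attributable to the institution."""
    return risk_pct * share_pct / 100


def naive_bounds(scenarios):
    """Sum lower bounds with lower bounds and upper bounds with upper bounds."""
    low = sum(attributable_pp(s["risk"][0], s["fda_share"][0]) for s in scenarios.values())
    high = sum(attributable_pp(s["risk"][1], s["fda_share"][1]) for s in scenarios.values())
    return low, high


low, high = naive_bounds(scenarios)
upside_high = high * IMPROVABLE * (1 - COUNTERFACTUAL)

print(f"Attributable risk: {low:.5f} to {high:.3f} percentage points")
print(f"Upper bound as odds: about 1 in {100 / high:.0f}")
print(f"Maximum upside of engagement: {upside_high:.3f} percentage points")
```

The upper bound works out to 0.171 percentage points (about 1 in 585, which the table rounds to 1 in 600), and the maximum upside to roughly 0.05 percentage points, in line with the figures in the table.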

In the generic-strategy model, we don’t explicitly model the risks of accidental harm from intervening since such risks are highly sensitive to the design of the intervention, the safeguards involved, who's leading it, etc. Thus, it’s important to remember that these estimates only establish a reasonable upper bound on the positive impact of trying to improve an institution’s decisions. To find the most impactful opportunities, one could use the generic-strategy model to find large opportunity spaces first, then use the specific-strategy model to try to find good opportunities within those spaces.

While the inherent fuzziness that comes with considering very unlikely scenarios over a long time horizon can be intimidating, the most likely alternative is an even fuzzier and entirely intuitive impression of the FDA’s overall importance to the world and its capability of being influenced for the better. Adding some structure to how we approach the question as we do here, even if it fails to give us precise and reliable numbers to work with, can at least begin to put some boundaries on what we think might be possible and where our efforts might be best directed.

Next steps

The Effective Institutions Project’s primary use of this framework will be to construct a rough ranking of the world’s most important institutions. We are in the process of creating a “short list” of key institution candidates from a combination of secondary research and expert opinion, and from there we plan to use the generic-strategy version of the model to sort these candidates into tiers. We will undertake that process using both of the outcomes of interest described above, reporting on the top tier separately for each as well as creating a composite ranking that takes worldview uncertainty into account. While we could try to construct a list of the most important institutions in the world by soliciting votes from experts or relying entirely on our intuitions, we expect that using a structured framework like this one will help us discover powerful institutions that we might not have been previously thinking about and combat biases that might arise from having greater familiarity with some institutions vs. others.

We anticipate that the results of this exercise will help guide Effective Institutions Project’s strategy and programming in the years ahead. Depending on the specific results, its influence could be quite dramatic—e.g., if our modeling suggests that the best opportunities to improve institutions are concentrated among only a few organizations, our approach would shift to match that reality—or more subtle. Either way, we expect that the analysis will identify a set of “priority” institutions that it will be important for us to incorporate into our organizational planning. For example, we might use the results to develop relationships with advisors and other connections who are familiar with the institutions in question; more fully understand the scenarios in which those institutions would have outsized influence; seek out evidence of effective interventions that applies well to those institutions’ specific contexts; and chart out career paths for members of our community who are interested in increasing the effectiveness of these organizations from the inside.

We are similarly interested in hearing about your next steps, and how you expect to find the framework useful to you. Please feel free to comment here or contact improvinginstitutions@gmail.com with questions or to let us know how it’s going. We look forward to hearing from you.

With thanks to Michael Aird, Tamara Borine, Victoria Clayton, Owen Cotton-Barratt, Max Daniel, Sara Duncan, Dewi Erwan, Barry Eye, Laura Green, Kit Harris, Samuel Hilton, Arden Koehler, Rassin Lababidi, Shannon Lantzy, Marc Lipsitch, David Manheim, Angus Mercer, Richard Ngo, Aman Patel, Dilhan Perera, Michael Plant, Robin Polding, Maxime Stauffer, and Jonas Vollmer for their valuable feedback throughout the drafting process, and to the EA Infrastructure Fund for its financial support.

Footnotes

[1] We recognize that this will not be a complete or satisfactory solution for all possible worldviews. For example, some social justice thinkers and advocates might object to applying a single definition of the common good across all geographies/communities/etc., even an explicitly justice-oriented one, because they see it as violating the principle of self-determination. We hope to explore issues like these more fully in a future iteration of this work.

[2] Improving institutions might be particularly relevant to the variant of longtermism known as patient longtermism, which argues that we should invest in the long-term future by building long-term capacity in ourselves and in our society. Adherents to this point of view might argue that by strengthening institutions generally (e.g., by ensuring the continuity and development of expertise across generations) we will increase our collective capacity to handle global challenges in the future even if we can’t anticipate what those challenges might be today.

[3] While in principle one could use WELLBYs as a sort of common currency across both neartermist and longtermist perspectives, doing so glosses over some important philosophical issues and uncertainties such as whether it’s better to have a smaller population with great lives or a much larger population with mediocre lives. (WELLBYs would typically favor the latter because of their additive nature.)

[4] This idea is not without complications; for example, if a nonprofit formed to fight for the rights of an oppressed population were to expand its stakeholder accountability map to include the interests of the oppressors as well, it’s counterintuitive to claim that it is more altruistic than it was before. And it is unreasonable to expect that, say, one country’s military will ever care about another country’s citizens as much as its own. Nevertheless, in practice it seems clear that the world would benefit greatly if a significant number of powerful institutions were to expand their moral circles even incrementally.

[5] Given all these caveats, one might reasonably ask why bother using numbers at all. While we don’t know for sure, forecasting experiments and the success of probabilistic simulation tools in other contexts suggest that even in conditions of high uncertainty, well-calibrated and rigorous estimates can still improve upon intuitive assumptions about where the greatest potential for impact may lie.

[6] Risk assessments do not usually feature prominently in cost-benefit analyses, but we feel they are important to call out separately here due to the fact that it is possible for poorly-conceived or -executed institutional engagement strategies to cause widespread harm in their own right. Such downsides can be distinguished from the usual risks of a strategy just failing to achieve the intended outcome, which are more easily integrated into standard cost-benefit models.

[7] There are a number of open philosophical and methodological questions involved in quantitatively estimating WELLBYs for interventions like these, which are laid out by the Happier Lives Institute here.

[8] While WELLBYs were designed for use with human beings, the methodology could in theory be extended further. For example, some animal welfare researchers suggest assigning fractional values to the lives of non-human animals in proportion to the level of suffering they appear to be capable of experiencing.

[9] The potential sacrifice in income or service cuts to Zürich residents due to the new policy is not expected to be significant enough to change the order of magnitude of this estimate, both because of the points raised earlier about the diminishing returns to wealth as well as provisions in the new law that allow for the development cooperation budget to be cut if the city is facing a budget deficit.

C Tilli @ 2021-09-23T11:25 (+4)

Thanks a lot for this post! I really appreciate it and think (as you also noted) that it could be really useful also for career decisions, as well as for structuring ideas around how to improve specific organizations.

we must be careful to avoid scenarios in which improving the technical quality of decision-making at an institution yields outcomes that are beneficial for the institution but harmful by the standards of the “better world”

I think this is a really important consideration that you highlight here. When working in an organization my hunch is that one tends to get relatively immediate feedback on if decisions are good for the organization itself, while feedback on how good decisions are for the world and in the long term is much more difficult to get.

For a user seeking to make casual or fast choices about prioritizing between institutional engagement strategies, for example a small consulting firm choosing among competing client offers, it’s perfectly acceptable to eschew calculations and instead treat the questions as general prompts to add structure and guidance to an otherwise intuitive process. Since institutional engagement can often carry high stakes, however, where possible we recommend at least trying a heuristic quantitative approach to deciding how much additional quantification is useful, if not more fully quantifying the decision.

I'm doing some work on potential improvements to the scientific research system, and after reading this post I'm thinking I should try to apply this framework to specific funding agencies and other meta-organizations in the research system. Do you have any further thoughts since posting this regarding how difficult vs valuable it is to attempt quantification of the values? Approximately how time-consuming is such work in your experience?

IanDavidMoss @ 2021-09-23T23:35 (+2)

Thanks for the comment!

Do you have any further thoughts since posting this regarding how difficult vs valuable it is to attempt quantification of the values? Approximately how time-consuming is such work in your experience?

With the caveat that I'm someone who's pretty pro-quantification in general and also unusually comfortable with high-uncertainty estimates, I didn't find the quantification process to be all that burdensome. In constructing the FDA case study, far more of my time was spent on qualitative research to understand the potential role the FDA might play in various x-risk scenarios than on coming up with and running the numbers. Hope that helps!

Indra Gesink @ 2022-12-12T11:04 (+1)

I think the following part has some problems, which I recently addressed here: "Future people might not exist"

The challenge of conceptualizing and estimating costs and benefits for such a large and diffuse stakeholder group, the vast majority of whom can’t speak for themselves, is daunting to say the least. Longtermists have partially gotten around that challenge, however, by focusing on “existential risks”, meaning risks of events that would permanently, drastically reduce the potential for value in the future. If the future could contain vast numbers of morally relevant beings with flourishing lives, and those existential risks could irreversibly prevent them from existing or worsen their lives, it may be reasonable to simply focus on the proxy goal of existential risk reduction.

Indra Gesink @ 2022-12-12T14:32 (+1)

Also, I don't think reducing existential risk is of intrinsic value; instead it translates into an increase in WELLBYs, in expectation. In that way, the two kinds of measures (nearterm vs. longterm) and their outcomes are also 'in a common currency' and thus, in principle, comparable.

brb243 @ 2022-01-17T15:50 (+1)

A comment on your calculation: Is it

[WELLBYgained*P(WELLBYgained)-WELLBYlost*P(WELLBYlost)]/Cost,

where P() is the probability of the total (across all times) WELLBY gains and losses?

Is there a probability threshold value that can inform whether a strategy is recommended? For example, if there is a 0.1% chance of success (assuming no expected WELLBY loss), would you refrain from endorsing the strategy, regardless of the size of the WELLBY gain? Or, if there is a 3% chance of a significant WELLBY loss, even if that is outweighed by the magnitude of the expected WELLBY gain, would you suggest involvement in that strategy?

Which counterfactuals are you considering? Do you consider the alternative uses of the resources that would go into a strategy (or are you mapping all combinations of involvement and simply selecting the best one), as well as the WELLBYs lost and gained due to inaction or limited involvement in any strategy (this can be especially relevant to institutionalized dystopia, or partial dystopia for some groups)?

Are you converting all non-financial constraints into cost? For example, the cost of paying people to develop networks (for example, by reducing their workload), the cost of developing convincing narratives in a low-risk way, the cost of developing solutions, the amount needed to flexibly gain influence momentum in relevant circles as opportunities arise (if this is needed, maybe this can fall under network development, but more internal as opposed to external advocacy). What else is needed to influence decisions which can be generalizable across different political systems?

How are timeframes considered in your model? For example, if developing different networks takes various amounts of time (assuming equivalent cost and expected WELLBY gains and losses), which one do you choose?

To what extent do you aim for impartiality in wellbeing achieved in terms of individuals? How are you relatively weighting (different amounts of) suffering and wellbeing?

Is continuous optimization assumed? For example, if the predicted WELLBY loss probability increases or decreases after some steps, are you re-running your calculations?

In addition to your calculation, I wanted to ask which institutions you would suggest that EA prioritize in its influence. I think, for example: the UN, because the institution already seeks to benefit others and the risk of reputational loss from offering innovative solutions can be limited (these solutions may just not be passed up to more influential ranks); the advocacy there should be for the One Health approach (which includes non-human animals) and for developing preventive suffering frameworks, in addition to convening decisionmakers when issues escalate. Then developing countries' governments (because they can be highly underresourced and could use skilled volunteer work for various tasks, while EA-related tasks could be prioritized by volunteers), as well as the governments of major global economies (assuming that economic and military power is convertible) and large MNCs (because they influence large proportions of global production).

In summary, what specific strategies are you recommending?

IanDavidMoss @ 2022-02-15T18:51 (+5)

Thanks for the detailed questions! I'll do my best to answer them in turn:

A comment on your calculation: Is it

[WELLBYgained*P(WELLBYgained)-WELLBYlost*P(WELLBYlost)]/Cost,

where P() is the probability of the total (across all times) WELLBY gains and losses?

The best way to understand the calculation is to look at the case study we constructed for the City of Zürich, which has the full Guesstimate model linked.
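For readers who want a concrete starting point, the formula as written in the question can be sketched in a few lines of Python. This is only the questioner's simplified reading, not the Guesstimate model from the case study; the function name and all input values are hypothetical and chosen purely for illustration.

```python
def expected_wellbys_per_cost(gain, p_gain, loss, p_loss, cost):
    """Expected net WELLBYs per unit of cost, following the formula in the question:
    [gain * P(gain) - loss * P(loss)] / cost.
    """
    if cost <= 0:
        raise ValueError("cost must be positive")
    return (gain * p_gain - loss * p_loss) / cost


# Hypothetical inputs (assumptions, not figures from the article)
ratio = expected_wellbys_per_cost(
    gain=10_000,   # WELLBYs gained if the strategy succeeds
    p_gain=0.1,    # probability of that gain
    loss=2_000,    # WELLBYs lost if the strategy backfires
    p_loss=0.03,   # probability of that loss
    cost=50_000,   # cost in any consistent currency unit
)
print(f"Expected net WELLBYs per unit cost: {ratio:.4f}")
```

A point estimate like this hides the uncertainty that a Guesstimate model represents with distributions, so it is best read as a quick sanity check rather than a substitute for the full model.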

Is there a probability threshold value that can inform whether a strategy is recommended? For example, if there is a 0.1% chance of success (assuming no expected WELLBY loss), would you refrain from endorsing the strategy, regardless of the size of the WELLBY gain? Or, if there is a 3% chance of a significant WELLBY loss, even if that is outweighed by the magnitude of the expected WELLBY gain, would you suggest involvement in that strategy?

I don't anticipate that we would refrain from endorsing a strategy with a low probability of success unless we thought there was a possibility for accidental harm (which the model should account for) or there were other strategies with a higher expected value that we want to recommend instead. The question about how to weigh downside risks against expected gains is harder, and I think would involve careful exploration with advisors and potential users of our recommendations (e.g. funders) about the appropriate level of risk tolerance for that specific situation.

Are you converting all non-financial constraints into cost? For example, the cost of paying people to develop networks (for example, by reducing their workload), the cost of developing convincing narratives in a low-risk way, the cost of developing solutions, the amount needed to flexibly gain influence momentum in relevant circles as opportunities arise (if this is needed, maybe this can fall under network development, but more internal as opposed to external advocacy). What else is needed to influence decisions which can be generalizable across different political systems?

Some would likely be converted to cost and others to the success metric of interest (e.g., WELLBYs) -- for example, the challenge of developing convincing narratives in a low-risk way might be better expressed as a component of the overall risk that the project won't meet its objectives given a particular plan of action and level of resources committed to it. The framework in the article can certainly be broken down into more parts and components if helpful for generating the variables needed to make an estimate, and you can see some instances of that in the case study.

How are timeframes considered in your model? For example, if developing different networks takes various amounts of time (assuming equivalent cost and expected WELLBY gains and losses), which one do you choose?

In the specific-strategy model, the user can choose the timeframe they think is most appropriate and add a discount rate if they like. If there is a delay in realizing the benefits of a successful strategy, the cost of that delay is then reflected through the discount rate. Again, see the Guesstimate model for an example.
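As a rough illustration of how a discount rate penalizes delay, here is a minimal sketch assuming a constant annual stream of WELLBYs and standard exponential discounting. The function name, the constant-stream assumption, and the numbers are all hypothetical; the Guesstimate model may structure this differently.

```python
def discounted_wellbys(annual_wellbys, years, discount_rate, delay_years=0):
    """Present value of a constant annual WELLBY stream that begins after a delay.

    Benefits arrive at the end of each year, starting delay_years from now.
    """
    return sum(
        annual_wellbys / (1 + discount_rate) ** (delay_years + t)
        for t in range(1, years + 1)
    )


# Two hypothetical strategies with identical cost and benefits,
# differing only in how long the network takes to develop.
fast = discounted_wellbys(annual_wellbys=100, years=10, discount_rate=0.03, delay_years=1)
slow = discounted_wellbys(annual_wellbys=100, years=10, discount_rate=0.03, delay_years=4)
print(f"fast: {fast:.1f} WELLBYs, slow: {slow:.1f} WELLBYs")
```

With any positive discount rate the faster option comes out ahead, which matches the answer above: all else equal, the delay itself is the cost. With a rate of zero the two options are valued identically.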

To what extent do you aim for impartiality in wellbeing achieved in terms of individuals? How are you relatively weighting (different amounts of) suffering and wellbeing?

The way we (as in Effective Institutions Project) are currently using the model treats all changes in wellbeing as equivalent and doesn't distinguish between populations. But another user could certainly add weights to prioritize certain kinds of changes more than others if they wished.
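To make the weighting option concrete, the sketch below sums WELLBY changes across populations, with weights defaulting to 1.0 so that the unweighted case reproduces the equal treatment described above. The function, the group labels, and the figures are hypothetical and are not part of the published model.

```python
def weighted_wellby_change(changes, weights=None):
    """Sum WELLBY changes across populations, optionally weighted.

    changes: dict mapping a population label to its WELLBY change
    weights: dict mapping a label to a weight; missing labels default to 1.0
    """
    weights = weights or {}
    return sum(delta * weights.get(group, 1.0) for group, delta in changes.items())


# Hypothetical WELLBY changes for two populations
changes = {"group_a": 500.0, "group_b": -120.0}

impartial = weighted_wellby_change(changes)                       # all changes count equally
loss_averse = weighted_wellby_change(changes, {"group_b": 2.0})   # losses to group_b count double
print(impartial, loss_averse)
```

Keeping the weights as an explicit, separate input makes it easy to see how much a conclusion depends on the weighting rather than on the underlying estimates.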

Is continuous optimization assumed? For example, if the predicted WELLBY loss probability increases or decreases after some steps, are you re-running your calculations?

Great question! My current intuition is that we would only re-run the calculations if doing so would be decision-relevant -- e.g., if we're deciding whether to continue recommending the option or not. But there could be some other reason to do it that I haven't considered; happy to hear other perspectives on this.

In addition to your calculation, I wanted to ask which institutions you would suggest that EA prioritize in its influence. I think, for example: the UN, because the institution already seeks to benefit others and the risk of reputational loss from offering innovative solutions can be limited (these solutions may just not be passed up to more influential ranks); the advocacy there should be for the One Health approach (which includes non-human animals) and for developing preventive suffering frameworks, in addition to convening decisionmakers when issues escalate. Then developing countries' governments (because they can be highly underresourced and could use skilled volunteer work for various tasks, while EA-related tasks could be prioritized by volunteers), as well as the governments of major global economies (assuming that economic and military power is convertible) and large MNCs (because they influence large proportions of global production).

Yep, this is exactly where we're headed next. We've spent the last several months conducting a landscape analysis of "opportunity spaces" associated with particular institutions around the world and assessing which ones are most promising to investigate further. We're almost done with our preliminary analysis and should be ready to release it later this month.

In summary, what specific strategies are you recommending?

Stay tuned! :)

brb243 @ 2022-02-16T14:26 (+3)

Ok, thank you!

So, you are basically assuming that the impact of institutional change is the expected gain from a shift to more effective causes (measured by, e.g., economic or health improvements, simplified as GD and AMF WELLBY impact estimates, or using other calculations or other metrics, which can but do not have to be impartial), adjusted by a discount rate (selected by the user), minus risk (which can in some cases be weighted more heavily than gain, and which includes suboptimal or unintended investment outcomes), plus leverage and substitutability effects. I would also add complementarity (the impact of influencing other actors' efficiency in pursuing their objectives), unless that is already covered by leverage.

You would re-run your calculations if it is decision-relevant. I think that re-running the calculation is relevant whenever the people involved in the strategy or external informants identify a possible substantial change in a variable (benefit, risk, leverage, or substitutability), for example due to a substantial change in the government, a relevant press release, a change in general discourse, a scientific finding, or partnership changes.

Yaaay opportunity spaces.