Preliminary investigations on if STEM and EA communities could benefit from more overlap

By elte @ 2023-04-11T16:08 (+31)

TLDR

This post discusses the potential benefits of increased overlap between the EA and STEM communities, particularly for addressing existential risks.

We (at Foresight Institute, a non-profit working to advance technology for good) want to explore if introducing more EA ideas into scientific discussions and further informing the EA dialogue with novel scientific perspectives could increase awareness of potential risks with emerging technologies and identify new areas for differential technology development.

We conducted some very informal and preliminary investigations through conversations with 20 risk-researchers and a survey sent out to the Foresight STEM community, which suggested that most respondents saw a gap between the two communities and believed that more overlap would be beneficial.

Would the STEM and EA communities benefit from more bridge-building?

I currently work at an organization whose primary aim is to advance beneficial technology: the Foresight Institute. We focus on technologies that are too early-stage or interdisciplinary for legacy institutions to support and have a long history of community building within the STEM community. Our network spans various fields of science and technology that seem relevant to several EA cause areas, especially biotech/biorisk, neurotech/neurorisk, nanotech/nanorisk, and AI safety.

Although Foresight has significant overlap with EA, it is not an "EA-org" in itself. We receive very little funding from EA sources, and our approach to identifying worthwhile interventions is broader, encompassing beneficial applications in nanotech, neurotech, longevity, and space tech. However, we have participated in EA events since around 2015 and collaborated with many people and organizations within the EA ecosystem.

Our community at Foresight primarily consists of scientists and entrepreneurs working in the areas mentioned above. While many do not have a distinctly EA perspective, they deeply care about the long-term potential and risks associated with their technologies. Their mindset aligns well with the increasingly popular EA focus on differential technology development (DTD). As a result, we are considering whether it would be beneficial to work on building bridges between EA concepts, projects, and people (particularly those with a DTD focus) and our community.

This would involve:

- Introducing more EA ideas into scientific discussions,

- Further informing the EA dialogue with specific potential near- and long-term capabilities currently explored in the STEM community,

- Increasing long-term coordination amongst scientists/technologists and policy/risk researchers.

We believe that the key benefits of this bridge-building can be roughly summed up as follows:

- Increase awareness among hard science/tech actors regarding potential risks associated with their work, mitigation strategies, and distinctly beneficial applications to potentially prioritize.

- Increase awareness among risk and policy researchers regarding technological capabilities under development, as well as potential technological risk mitigation approaches.

- Foster long-term trust-building across these communities, which may facilitate easier collaboration on realizing risks and pursuing opportunities arising from these technologies as they mature.

- Identify potential areas for differential technology development and project collaborations in these areas.

Shallow Investigations

To explore this opportunity, we have conducted some informal preliminary investigations, including conversations I've had with approximately 20 relevant actors at EAG (identified as people doing or coordinating risk research on EA cause areas, mainly for AI and biorisk). We also sent out a survey to the Foresight community, which generated 41 responses from participants in our technical groups (computation, nanotech, neurotech, longevity, and space tech).

Conversations with risk-researchers

At the last two EAGs I attended, I had around 20 individual meetings with relevant actors working on reducing existential risk via technical research, mainly for AI and biorisk. These were a combination of mid- and senior-level people conducting direct research on these topics and people coordinating programs aimed at reducing existential risk (for example, FHI, BERI, OpenPhil, SERI, as well as individual researchers with EA funding).

Very briefly summarized, I would interpret the results from these conversations to be something like:

- Everyone I talked to agreed that there is a gap between the communities. They believed that some level of collaboration was already happening but could still be improved. Everyone agreed that more overlap would be beneficial, except for one person who did not think additional collaboration would be useful for the field of AI, as they felt the existing cooperation was sufficient.

- Three people said that in working to bridge this gap, it seems important that these meetings need to be facilitated in a way that makes sure it does not lead to a (further) divide between communities. This mainly refers to STEM people interpreting a risk-reducing focus as an attempt to hinder progress. They suggested that open communication, mutual respect, and finding common ground should be prioritized to ensure a healthy exchange of ideas and prevent misunderstandings.

In the conversations, I aimed to ask questions in a way that made them open for discussion, but as the answers were quite homogenous, I believe there is a high risk that the way I phrased my questions during these conversations may have affected the responses and led to a bias of people agreeing with me on these topics.

Survey sent out to Foresight’s STEM community

In addition to these informal EA discussions, we also sent out a survey to the Foresight community, which mainly consists of people operating in the "hard" tech/science field. In terms of demographics, the majority were male and living in the US. A few respondents were based in the UK, and only one woman answered. The survey had the topic "Help Foresight improve our work on existential risks" and consisted of questions asking:

- If they are familiar with the concepts of Effective Altruism, Existential Risk and/or Longtermism

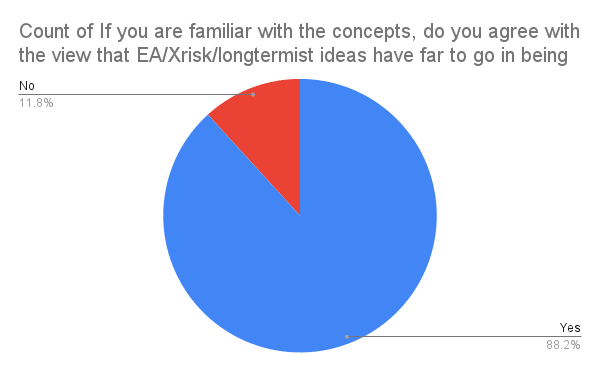

- If they think that EA/X-risk/longtermist ideas have far to go in being integrated into the mainstream science/tech community

- If they think that both communities (The EA/X-risk/longtermist community and the science/tech community) could benefit from more interaction.

You can access the actual survey sent out here.

Survey results

To protect the privacy of respondents, we will not be sharing the raw data here, but readers are welcome to reach out to me should they wish to dig deeper on this topic and the data we collected. However, we will paraphrase quotes from the survey.

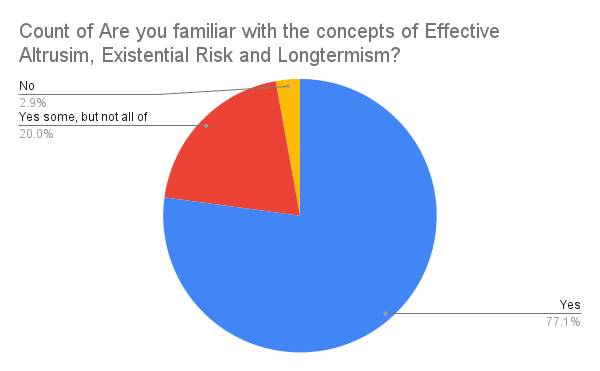

In terms of who responded to this survey, there seemed to be a majority of respondents who were already aware of these concepts. 78% of respondents described themselves as familiar with these concepts, 19% as a little familiar, and only 3% were not aware at all, pointing to some selection bias in survey respondents.

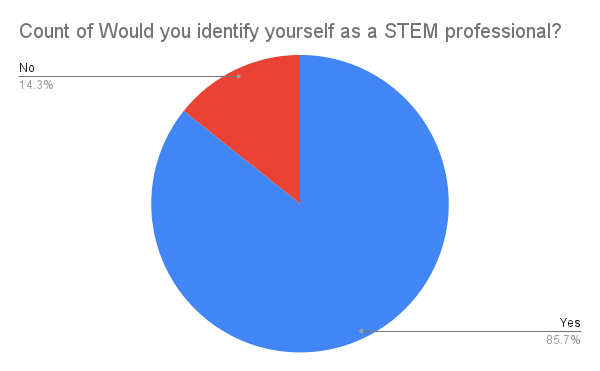

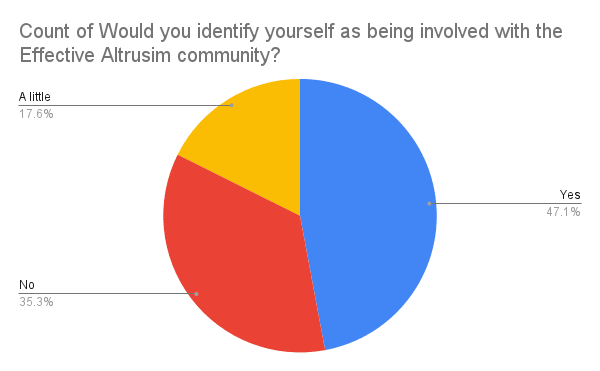

86% self-identified as STEM professionals. 45% said they identified themselves as being "involved with the Effective Altruism community." This, in itself, could be somewhat interesting since the survey was sent out to about ~1800 people. As only 41 of them responded to a survey with the topic "Help Foresight improve our work on existential risks," this could imply that the number of people aware of the idea of Existential Risks in our STEM community is potentially very low.
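As a rough sketch of the arithmetic behind these figures, the snippet below uses only the numbers reported in this post (41 responses, about 1800 recipients, and the 86% and 45% shares). The variable names are illustrative, and the back-calculated counts assume that every respondent answered each question, which the post does not confirm.

```python
# Figures reported in the post; the recipient count is approximate ("~1800").
responses = 41
recipients = 1800

# Share of recipients who answered the survey.
response_rate = responses / recipients
print(f"Response rate: {response_rate:.1%}")

# Approximate respondent counts implied by the reported percentages.
# Assumption: all 41 respondents answered each question, so these are
# back-calculated estimates rather than raw survey data.
reported_shares = {
    "STEM professionals": 0.86,
    "involved with EA": 0.45,
}
implied_counts = {
    label: round(share * responses) for label, share in reported_shares.items()
}
for label, count in implied_counts.items():
    print(f"{label}: about {count} of {responses} respondents")
```

With a response rate this low, the percentages in this section are best read as describing the people who chose to answer rather than the full mailing list.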

- 90% of respondents answered yes to the question of whether they agree that “EA/Xrisk/longtermist ideas have far to go in being integrated into the mainstream science/tech community”.

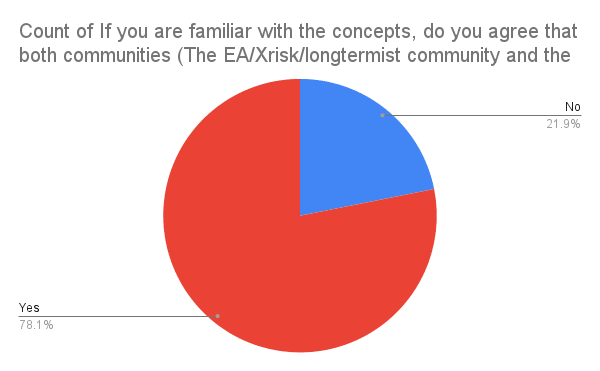

- 80% of respondents answered yes to the question of whether they agree that “both communities (The EA/Xrisk/longtermist community and the science/tech community) could benefit from more interaction”.

I will expand a bit more below on people’s reasoning for their answers, and divide the responses into two different categories, Category 1 being those saying “Yes, these communities would benefit from more overlap” and Category 2 saying “No, these communities would NOT benefit from more overlap”.

Category 1: Yes, these communities would benefit from more overlap

I’d categorize these answers into the following buckets:

- Lack of awareness and need for education: Many respondents believed that people in STEM are not aware of effective altruism (EA) and existential risk (X-risk) ideas. Respondents suggested that more outreach and education are needed to raise awareness of these topics.

- Technology is very important in solving X-risk challenges: Many respondents believe that technology has the potential to help mitigate X-risks. They feel that getting technologists involved in the conversation is essential to developing effective solutions.

- Gap between ideas and execution: Some respondents stated that while STEM may not have a clear understanding of what is worthwhile, EAs may not have the skills to execute their ideas effectively. Bridging this gap could ensure that ideas can be more effectively executed.

- Engagement with policy and long-term strategy: Respondents said there is a need for STEM to engage more with policy, philanthropy, and strategy to make long-term investments and risk mitigation possible. More EA integration would already be a step on the way towards more engagement on these topics.

- Overly focused on short-term gains: Some respondents noted that most science and tech projects and their financial backers focus on short-term gains, rather than long-term interventions and risk mitigation. This could make it more difficult to invest in long-term solutions to X-risks, something that more integration with EA could potentially help avoid.

Paraphrased quotes from the respondents:

- "Throughout my many years in the field, I've seldom encountered dedication to these principles. When I bring them up and advocate for awareness and action, it's typically from a conventional standpoint grounded in the essentials of the UN SDGs."

- "Technological advancements will be key in addressing existential risks, rather than depending on government or regulation. Involving technologists is crucial in this regard."

- "While effective altruists struggle with practical implementation, engineers often lack insight into what's truly valuable."

- "Many researchers, including myself, may not possess a clear or even basic understanding of these concepts. Nonetheless, it is important that we do, as all scientists aim to benefit humanity in some way."

- "It is essential for more individuals in STEM to grasp the importance of effective altruism, long-term thinking, and existential risk. At the same time, those in STEM can contribute significantly to addressing the challenges prioritized by effective altruism, long-term thinking, and existential risk management."

Category 2: No, these communities would NOT benefit from more overlap

I’d categorize these answers into the following buckets:

- There is already significant overlap between the groups: Respondents felt that there is already a large portion of the EA/X-risk/longtermism community composed of STEM professionals, so a rigorous scientific worldview is not missing.

- There is a risk of diluting the potency of energy in the EA community: Some believe that trying to cast too broad a net could weaken the effectiveness of the community by spreading its focus too thin.

- Decentralized development and diversification is antifragile: Some stated that institutions like the Foresight Institute and the Center for Progress Studies are valuable because they are adjacent to, but not rigidly within, EA cause areas. In an uncertain world, diversification is antifragile, and strictly adhering to EA could make both communities more fragile.

- The value of technological foresight is questionable: Some respondents believe that it is impossible to predict how far a technology will go and how it will be used, so discussing extremely long-term possibilities is not productive.

- Trying to address existential risks can increase existential risk: Some respondents are not fans of trying to address existential risk because they believe that focusing too heavily on the issue can lead to actions that inadvertently increase the likelihood of existential risk.

Paraphrased quotes from the respondents:

- "A considerable portion of the effective altruism, existential risk, and long-term thinking community already consists of STEM professionals, ensuring that a rigorous scientific perspective is not absent. Furthermore, there is a potential risk of diluting the community's energy by trying to expand its scope too broadly."

- "I somewhat agree. However, organizations like the Foresight Institute occupy a unique position that allows for increased STEM involvement, discussion, and investigation beyond conventional effective altruism concerns. For instance, expertise in neurotechnology may not qualify someone for EA Global. Thus, it's valuable to have institutions like the Foresight Institute and the Center for Progress Studies that are related but do not strictly adhere to EA efforts. In an unpredictable world, decentralized development and diversification promote resilience; a strict adherence to effective altruism would make us more vulnerable."

- "I believe that there is already considerable overlap between these groups, and there may not be significant benefits to using resources to push for even greater integration."

- "If the Foresight Institute aims to contribute to the existential risk field, it would be more valuable to invest its efforts in debunking widely-accepted existential risk claims and strategies, especially those intending to restrict or slow down technological advancement."

Conclusions and recommended actions

Again, we want to emphasize that this was a very informal and preliminary investigation! But if we decide to work more on bridge building between these communities, here are a few avenues we would think are useful to pursue.

Broad ideas on what we can do to bridge the gap between EA and STEM:

- Have more risk researchers participate in STEM events: Identify risk researchers and invite them to relevant technical events and workshops, to both inform and connect individuals from both communities

- Build out DTD-specific fellowships: Build out our existing fellowship, which already builds community across STEM and risk/policy researchers in these areas, to facilitate more high-trust community-building among specific individuals, including grants for collaborative DTD research projects

- Host intros on risk and mitigation strategies for the STEM audience: Host sessions on existential risks and EA cause areas for the STEM community

- Determine areas for differential technology development and communicate to the STEM audience: Identify a few technologies in different STEM domains that would differentially increase safety in their domain, and encourage further work on them.

We see a good opportunity to integrate all of these examples into the Foresight program. But we would be very interested in hearing if readers of this post have any thoughts on:

- General criticisms of any of the points discussed above

- Avenues for facilitating DTD discussions without increasing info hazards

- Specific technological areas you deem promising for bridge-building

- Additional mechanisms, such as prizes, fellowships, or events, that could be useful

- Pointers to existing efforts with the goals discussed in this post

Please let us know in the comments if you have any thoughts or ideas you would like to share with us.

Jessica Wen @ 2023-04-12T11:41 (+8)

Thanks for putting together this survey and sharing it on the forum! A brief background to me: I co-direct High Impact Engineers, where we aim to help (non-software) engineers do more high-impact work. If the Foresight Institute does decide to go ahead with the outlined ideas/next steps for bridging the gap between EA and STEM, I can see some collaborations being beneficial. I expand on this in my general comments below. (The rest of this comment is in a personal capacity, rather than on behalf of HI-Eng.)

General comments (not very heavily edited, apologies for the length and for any rambling/unclear parts. Main points in bold):

- Although people in STEM share a lot of important attributes (numerical and analytical skills, problem-solving ability, etc.), there isn't really such a thing as a STEM community as they're split out into many different domains of expertise, interests, applications, skills, etc.

- It seems unlikely that the survey respondents are omni-STEM, and I think grouping them all under the "STEM" banner obscures a lot of useful information, e.g. in which fields professionals think EA and STEM are already well-integrated, and which aren't; which skills professionals think would be useful to develop technology to mitigate X-risks/do DTD research projects, etc.

- It would be useful to be able to see what the breakdown of the respondents' fields is – I suspect that life sciences are under-represented here as those fields tend to skew less male-dominated.

- Somewhat related: I think we also need more social scientists in EA (understanding how people behave, especially around new technologies, is probably very useful and important for X-risk mitigation – and I'd argue that psychologists and sociologists belong in the S for Science in the STEM acronym), but I assume there were very few of these scientists in this survey considering the survey was sent to a group of people in mostly "hard" tech/sciences.

> In terms of demographics, the majority were male and living in the US. A few respondents were based in the UK, and only one woman answered.

- If only one woman responded, this survey obviously doesn't cover the whole STEM community. Hot take: this seems to me to be more a survey of men rather than a survey of the "STEM community" (in quotes due to point 1 and also because a survey of the STEM community would probably be too broad to be useful).

- If this is going to become a community-building effort, I think we need to be careful if we spend more time, effort, and money building communities in the respondents' communities. EA is already overwhelmingly white and male; I somewhat worry that increasing overlap in the respondents' communities will cause EA to become a boys' club.

- Since the majority of the respondents were US- (and some UK-) based, I would also be interested in seeing the ethnic breakdown (if this was collected).

- A final demographic that I'd like to see from this survey is the age breakdown – mid-career professionals, in my experience, tend to have important and useful perspectives that EA generally lacks.

Like Linda, I'd also like to know the response rate for this survey. [EDIT]: 41/~1800 seems like an awfully low response rate, so it doesn't seem particularly representative of Foresight's STEM community, which makes me question the validity of these conclusions.

> Technology is very important in solving X-risk challenges: Many respondents believe that technology has the potential to help mitigate X-risks. They feel that getting technologists involved in the conversation is essential to developing effective solutions.

- What does technology mean here? Usually it means information technology, but I think "physical" technologies also have the potential to help mitigate X-risks. It feels obvious to me that getting technologists involved will help solve problems effectively – this is what technologists do!

- I agree with the conclusions and recommended actions, and my intuition says that it's better to focus on the ways to get STEM professionals working on X-risks and EA cause areas without integrating the EA and STEM communities.

- High Impact Engineers has already been doing point 3 of the recommended actions (introducing existential risks and EA cause areas for the STEM community) at universities as a low-risk test of whether people with an engineering background respond well to these ideas – we've found that they do! We plan to do more outreach to professional engineering institutes in the next 6 months, and this is a project where collaboration could be beneficial. Happy to discuss more.

> Specific technological areas you deem promising for bridge-building

- At HI-Eng, common areas that we've found to be in-demand for biosecurity, civilisation resilience, AI governance, differential neurotechnology development, and other X-risk mitigation seem to be electrical/electronic engineers, mechanical engineers, and materials engineers (particularly experts in semiconductors). Bioengineers and biomedical engineers seem to be useful for biosecurity. Happy to discuss this further, and we also expect to have a presentation/poster to share on this soon.

My overall takeaway: a survey like this is probably useful and important, but I would need to know more about the types of people surveyed for the data to be useful and for the actions to be convincing.

elteerkers @ 2023-04-14T08:06 (+1)

Hi Jessica, thank you so much for your thorough read and response! I found it very useful.

I agree there isn’t really such a thing as “the STEM community”, and if I were to write the post now I would want to better reflect the fact that this was asked to the Foresight community, in which most participants are working in one of our technical fields: neurotech, space tech, nanotech, biotech or computation. In the survey I ask if people identify themselves as STEM professionals, a question to which most answered yes (85% of respondents in this v. small survey). So as you point out, most are not in the life sciences.

Regarding the demographics, our community is very male dominated. However, our team is all female, and we are actively trying to improve on this. I would be interested to hear if you at HI Engineers are doing anything on this, and have any learning that you can share? I did not collect any data on ethnicity or age in this survey.

As I stated in the post, and can only state again, this is very preliminary, so I agree one shouldn’t draw too much of a conclusion based on this. But I’m happy I put it out there as is so that I could get this useful feedback from you!

Regarding what technology means in the text, I would say that it refers to both information and "physical" technologies. I’d be very interested to hear more about your outreach work with HI Engineers. Overall, your work looks very interesting, and so I hope you don’t mind if I reach out “off forum”! :)

DavidNash @ 2023-04-12T11:13 (+6)

It seems that there would be more to be gained from building bridges between the STEM and existential risk communities rather than EA more broadly.

EA has a lot of seemingly disconnected ideas that aren't as relevant to most people. Some will be interested in all of them, but most people will be interested in just a subset. Also with x-risk, some people will have much more interest in one of nuclear/AI/bio risks than all of them.

elteerkers @ 2023-04-12T11:55 (+1)

Good point! Are there any other X-risk communities you think we should look at, other than the ones already active within EA?

DavidNash @ 2023-04-12T14:00 (+3)

I meant the communities/organisations that have overlap with EA but focused on a specific cause, but it would be useful to connect people to less EA related orgs like the Nuclear Threat Initiative, CEPI, etc.

It seems like there is less field building for existential risk but also not that much within specific causes compared with the amount of EA specific field building there has been.

This seems to be changing though with things like the Summit on Existential Security this year, and updates being made by people at EA organisations (mentioned by @trevor1 in another comment).

elteerkers @ 2023-04-14T08:09 (+1)

Thank you!

Linda Linsefors @ 2023-04-12T08:30 (+6)

> the survey was sent out to about ~1800 people.

What percentage of those people responded? Apologies if this is already in the text, but I could not find it.

DavidNash @ 2023-04-12T13:34 (+6)

Earlier in the post - 'We also sent out a survey to the Foresight community, which generated 41 responses from participants in our technical groups'

Jessica Wen @ 2023-04-12T15:17 (+13)

41 out of ~1800 seems like an extremely low response rate – one would usually expect ~10% response rate, from what I've heard. Combining that with the singular female respondent, it seems to me that this survey is not particularly representative of their "STEM community".

elteerkers @ 2023-04-14T08:10 (+1)

Agree it doesn't represent “the STEM community”. As in my reply to Jessica's longer comment, I agree there isn’t really such a thing as “the STEM community”, and if I were to write the post now I would want to better reflect the fact that this was asked to the Foresight community, in which most participants are working in one of our technical fields: neurotech, space tech, nanotech, biotech or computation. In the survey I ask if people identify themselves as STEM professionals, a question to which most answered yes (85% of respondents in this v. small survey).

C Tilli @ 2023-04-11T16:33 (+3)

Interesting!

What is your assessment of current risk awareness among the researchers you work with (outside of survey responses), and their interest in such perspectives?

elteerkers @ 2023-04-14T08:27 (+1)

Thanks for the question!

I would say that it's not that people aren't aware of risks; my broad reflection is more about how one relates to them. In the EA/X-risk community it is clear that one should take these things extremely seriously and do everything one can to prevent them. I often feel that even though researchers in general are very aware of potential risks with their technologies, they seem to get swept up in the daily business of just doing their work, and do not reflect very actively on the potential risks of it.

I don't know exactly why that is. It could be that they don't consider it their personal responsibility, or perhaps they feel powerless and that aiming to push progress forward is either the best or the only option? But that is a question that would be interesting to dig deeper into!

Linda Linsefors @ 2023-04-12T08:50 (+2)

> Three people said that in working to bridge this gap, it seems important that these meetings need to be facilitated in a way that makes sure it does not lead to a (further) divide between communities. This mainly refers to STEM people interpreting a risk-reducing focus as an attempt to hinder progress. They suggested that open communication, mutual respect, and finding common ground should be prioritized to ensure a healthy exchange of ideas and prevent misunderstandings.

There is a problem here. Many of us in the EA/X-risk community (including me) think that it would be better if AI progress was significantly slowed down. I think that asking us to play down this disagreement, with at least some of the STEM community, would be very bad.

I'm generally in favour of bridge building, and as you write in the post such interactions can be very beneficial. I do think that a good starting point is to assume everyone has good intentions (mutual respect), but I don't think finding common ground should be prioritized. I think it's more valuable to acknowledge and discuss differences in opinion.

I'm working as an AI Safety field builder, feel free to reach out.

Linda Linsefors @ 2023-04-12T08:57 (+2)

"If the Foresight Institute aims to contribute to the existential risk field, it would be more valuable to invest its efforts in debunking widely-accepted existential risk claims and strategies, especially those intending to restrict or slow down technological advancement."

I think this is written by an EA? It seems like they personally are not in favour of slowing down AI, and I think they share this opinion with many other EAs. I don't think we have a community consensus on this. But saying that it's a myth that should be debunked is just wrong.

There are many EAs who signed this letter, and FLI is arguably an EA org (whether or not they identify as EA, they are part of the EA/X-risk/long-termism network).

Pause Giant AI Experiments: An Open Letter - Future of Life Institute

I know of others in our network who think this letter doesn't go far enough.

elteerkers @ 2023-04-14T08:17 (+1)

That's a good point. I'm unsure of what the best way of facilitating these meetings would be, so that it doesn't downplay the seriousness of the questions. But assuming good intentions, allowing for disagreements, and acknowledging the differences is enough and the best option.