80,000 Hours two-year review: 2021–2022

By 80000_Hours @ 2023-03-08T17:36 (+92)

This is a linkpost to https://80000hours.org/2023/03/80000-hours-two-year-review-2021-and-2022/

80,000 Hours has released a review of our programmes for the years 2021 and 2022. The full document is available to the public, and we’re sharing the summary below.

You can find our previous evaluations here. We have also updated our mistakes page.

80,000 Hours delivers four programmes: website, job board, podcast, and one-on-one. We also have a marketing team that attracts users to these programmes, primarily by getting them to visit the website.

Over the past two years, three of four programmes grew their engagement 2-3x:

- Podcast listening time in 2022 was 2x higher than in 2020

- Job board vacancy clicks in 2022 were 3x higher than in 2020

- The number of one-on-one team calls in 2022 was 3x higher than in 2020

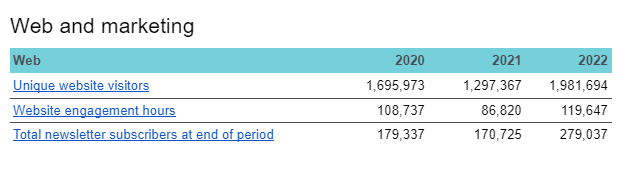

Web engagement hours fell by 20% in 2021, then grew by 38% in 2022 after we increased investment in our marketing.

From December 2020 to December 2022, the core team grew by 78% from 14 FTEs to 25 FTEs.

Ben Todd stepped down as CEO in May 2022 and was replaced by Howie Lempel.

The collapse of FTX in November 2022 caused significant disruption. As a result, Howie went on leave from 80,000 Hours to be Interim CEO of Effective Ventures Foundation (UK). Brenton Mayer took over as Interim CEO of 80,000 Hours. We are also spending substantially more time liaising with management across the Effective Ventures group, as we are a project of the group.[1]

We had previously held up Sam Bankman-Fried as a positive example of one of our highly rated career paths, a decision we now regret and feel humbled by. We are updating some aspects of our advice in light of our reflections on the FTX collapse and the lessons the wider community is learning from these events.

In 2023, we will make improving our advice a key focus of our work. As part of this, we’re aiming to hire for a senior research role.

We plan to continue growing our main four programmes and will experiment with additional projects, such as relaunching our headhunting service and creating a new, scripted podcast with a different host. We plan to grow the team by ~45% in 2023, adding an additional 11 people.

Our provisional expansion budgets for 2023 and 2024 (excluding marketing) are $10m and $15.5m.[2] We’re keen to fundraise for both years and are also interested in extending our runway — though we expect that the amount we raise in practice will be heavily affected by the funding landscape.

[1] The Effective Ventures group is an umbrella term for Effective Ventures Foundation (England and Wales registered charity number 1149828 and registered company number 07962181) and Effective Ventures Foundation USA, Inc. (a section 501(c)(3) tax-exempt organisation in the USA, EIN 47-1988398), two separate legal entities which work together.

[2] Amended March 29, 2023 to our newest provisional figures. (Previously this read $12m and $17m.)

Cillian Crosson @ 2023-03-08T18:32 (+26)

Great work - excited to see so much growth across the podcast, one-on-one service & job board! I'm curious about web engagement though.

Web engagement hours fell by 20% in 2021, then grew by 38% in 2022 after we increased investment in our marketing.

This implies that engagement hours rose by ~10% in 2022 compared to 2020. This is less than I would have expected given the marketing budget rose from $120k in 2021 to $2.65m in 2022. I'm assuming it was also ~$120k in 2020 (but this might not be true). Even if we exclude the free book giveaway (~$1m), there seems to have been a ~10x increase in marketing here that translated to a 10-40% rise in engagement hours (depending on whether you count from 2020 or 2021).
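For anyone who wants to check the arithmetic, here is a minimal sketch. The function names are illustrative only, the spend figures are the ones quoted from 80k's marketing post, and treating the $1.04m book giveaway as cleanly separable from the rest of the budget is an assumption.

```python
def compound_change(*yearly_changes):
    """Combine successive fractional changes (e.g. -0.20, then +0.38) into one overall change."""
    total = 1.0
    for change in yearly_changes:
        total *= 1.0 + change
    return total - 1.0


def spend_multiple(new_spend, old_spend):
    """How many times larger new_spend is than old_spend."""
    return new_spend / old_spend


# Web engagement hours: -20% in 2021, then +38% in 2022 (from the summary).
overall = compound_change(-0.20, 0.38)
print(f"2020 -> 2022 engagement change: {overall:+.1%}")

# Marketing spend in USD: ~$120k in 2021 and $2.65m in 2022,
# of which $1.04m went on the book giveaway.
with_books = spend_multiple(2_650_000, 120_000)
without_books = spend_multiple(2_650_000 - 1_040_000, 120_000)
print(f"Spend multiple incl. giveaway: {with_books:.1f}x")
print(f"Spend multiple excl. giveaway: {without_books:.1f}x")
```

This gives roughly +10% for engagement over the two years, and spend multiples of about 22x with the giveaway and about 13x without it, which is the same order of magnitude as the rough figure above.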

See quote from this recent post for context on marketing spend:

In 2022, the marketing programme spent $2.65m (compared to ~$120k spent on marketing in 2021). The bulk of this spending was on sponsored placements with selected content creators ($910k), giving away free books to people who signed up to our newsletter ($1.04m), and digital ads ($338k).

I can think of a bunch of reasons why this might be the case. For example:

- Maybe the price of acquiring new users / engagement hours increases geometrically or something

- It looks like marketing drove a large increase in newsletter subs. Maybe they're engaging with the content directly in their inbox instead?

- Maybe you expect a lag in time between initial reach & time spent on the 80k website for some reason (e.g. because people become more receptive to the ideas on 80k's website over time, especially if they're receiving regular emails with some info about it)

- Maybe marketing mainly promoted podcast / 1-1 service / job board (or people reached by marketing efforts mainly converted to users of these services)

See screenshot from the full report for extra context on engagement hours, unique visitors & subscribers:

Again, great work overall. I'd be really curious to hear any quick thoughts anyone from 80k has on this?

Bella @ 2023-03-08T20:23 (+24)

Hey Cillian — thanks so much for a really thoughtful/detailed question!

I'll take this one since I was the only staff member on marketing last year :)

The short answer is:

- Marketing ramped up considerably over the second half of 2022. Web engagement time grew a lot more in the second half of 2022 as well: if we just compare Q3 & Q4 2020 and 2022, engagement time grew 50% (rather than 10%).

- But that still doesn't look like web engagement time rising precisely in step with marketing investment, as you point out!

- We don't know all the reasons why, but the reasons you gave might be part of it.

- There are five other reasons I want to mention:

    - marketing didn't focus on engagement time

    - people we find via marketing seem less inclined to use our advice than other users

    - there’s a headwind on engagement time

    - site engagement time doesn’t count the book giveaway

    - 2020 was a bumper year!

The long answer...

- It's true that there's only a 10% rise in engagement time between 2020 and 2022.

- The main thing going on here is that marketing investment didn't rise until the second half of 2022 — I spent a while trying out smaller scale pilots. So the spend is very unevenly distributed.

- If we compare just Q3 and Q4 (i.e. Q3 and Q4 2020 with Q3 and Q4 2021 and Q3 and Q4 2022), there was a 6% fall from 2020 to 2021, and then a 59% rise from 2021 to 2022, resulting in an overall 50% rise from 2020 to 2022!

But our marketing budget still rose by a lot more than 50% — so what's going on there?

I'll start out with the reasons you gave then add my own:

- Maybe the price of acquiring new users / engagement hours increases geometrically or something

Yeah I don't exactly know how the price of finding new users increases, but we should probably expect some diminishing returns from increased investment.

- It looks like marketing drove a large increase in newsletter subs. Maybe they're engaging with the content directly in their inbox instead?

I think this might be a smallish part of it — I've noticed an effect where if the email we send on the newsletter is itself full of content, people click through to the website less than if the newsletter doesn’t itself provide much value.

I don't think this can account for tons of what we're seeing, though, just cos I don't think the emails work as a 1:1 replacement for 80k's site (I can't really imagine there being much of a 'substitution effect' here).

- Maybe you expect a lag in time between initial reach & time spent on the 80k website for some reason (e.g. because people become more receptive to the ideas on 80k's website over time, especially if they're receiving regular emails with some info about it)

I think this might be a pretty big part of what's going on.

There does seem to be a significant 'lag time' from people first hearing about us and people making an important change to their careers (about 2 years on average) and I think there's often a lag before people get really engaged with site content, too.

Also, bear in mind that because of what I said about the 'unevenness' of growth from marketing, people who found out about us this year are mostly still really new.

- Maybe marketing mainly promoted podcast / 1-1 service / job board (or people reached by marketing efforts mainly converted to users of these services)

Yep, I did put some resources directly towards promoting the podcast, and a much smaller amount of resources towards directly promoting 1-1 (about 7% of the budget as a whole, and probably more like 10% of my time). So this could be (a small) part of what's going on.

There are five other main things I think explain this effect:

- Marketing didn't focus on engagement time

    - My focus was really on reaching new users, rather than trying to get people to spend time with our content.

    - For example, last year I spent almost zero effort and resources on trying to get people who already knew about 80,000 Hours to engage with stuff on the site.

- People who find out about us via marketing are, on average, less interested in our advice

    - I talked about this a little bit in my post that you linked.

    - I think this is probably a pretty big factor here!

- There's a headwind on engagement time, i.e. engagement time by default seems to go down over time

    - We think this is because, e.g.:

        - Articles that were last updated longer ago are seen as less relevant by search engines and deprioritised

        - Things we wrote become out of date and less useful to people

        - There might be some broader internet trend where people are spending more time on social media and less time on individual websites

- Our current metric doesn't incorporate engagement time from reading the books in the book giveaway

    - However, we are looking into including it in future reporting!

    - My (still ongoing) analysis of survey results about the book giveaway suggests that this engagement time is at least tens of thousands of hours, possibly >100k hrs.

    - (For context, our average monthly engagement time since Jan 2021 is about 8,000 hours (ha!)).

- 2020 was weird

    - We saw a very large spike in traffic in early 2020, partly because we had content on COVID when many places didn’t, and partly because of the broader trend where tons of sites got a lot more traffic as people were staying home and spending more time online.

    - So 2020 might be an “inappropriate benchmark” or something like that.

Okay I hope that gives you an insight into what I think is going on here! Sorry for length :)

Ardenlk @ 2023-03-08T22:00 (+18)

Hey! Arden here, also from 80,000 Hours. I think I can add a few things here on top of what Bella said, speaking more to the web content side of the question:

(These are additional to the 'there's a headwind on engagement time' part of Bella's answer above, though they're less important, I think, than the points Bella already mentioned about a 'covid spike' in engagement time in 2020 and marketing not getting going strong until the latter half of 2022.)

- The career guide (https://80000hours.org/career-guide/) was very popular. In 2019 we deprioritised it in favour of a new 'key ideas series' that we thought would be more appealing to our target audience and more accurate in some ways. We also stopped updating the career guide and put notes on its pages saying it was out of date. Engagement time on those pages fell dramatically.

This is relevant because, though engagement time fell due to this in 2019, a 'covid spike' in 2020 (especially due to this article, which went viral: https://80000hours.org/2020/04/good-news-about-covid-19/) masked the effect; when the spike ended we had less baseline core content engagement time.

(As a side note: we figured that engagement time would fall some amount when we decided to switch away from the career guide: ultimately our aim is to help solve pressing global problems, and engagement time is only a very rough proxy for that. We decided it'd be worth taking some hit in popularity to have content that we thought would be more impactful for the people who read it. That said, we're actually not sure it was the right call! In particular, our user survey suggests the career guide has been very useful for getting people started in their career journeys, so we now think we may have underrated it. We are actually thinking about bringing an updated version of the career guide back this year.)

- 2021 was also a low year for us in terms of releasing new written content. The most important reason for this was having fewer staff. In particular, Rob Wiblin (who wrote our two most popular pieces in 2020) moved to the podcast full time at the start of the year, we lost two other senior staff members, and we were setting up a new team with a first-time manager (me!). As a result I spent most of the year on team building (hiring and building systems), and we didn't get as much new or updated content out as normal, and definitely nothing with as much engagement time as some of the pieces from the previous year.

- The website's total year-on-year engagement time has historically been greater than that of the other programmes, largely because it's the oldest programme. So it's harder to move its total engagement time in percentage terms.

Also, re the relationship of these figures with marketing, the amount of engagement time with the site due to marketing did go up dramatically over the past 2 years (I'm unsure of the exact figure, but it's many-fold), because it was very low before that. We didn't even have a marketing team before Jan 2022! Though we did do a small amount of advertising, almost all our site engagement time before then was from organic traffic, social media promotions of pieces, word of mouth, etc.

(Noticing I'm not helping with the 'length of answer to your question' issue here, but thought it might be helpful :) )

Vaidehi Agarwalla @ 2023-03-09T01:51 (+24)

(from the full report)

For product, our priorities in 2023 are:

- Increase investment in research and in improving the content substantively

- Consider releasing an updated version of our out-of-date career guide (which continues to be popular with our audience)

- Take low-hanging fruit in a range of other areas — e.g. updating key articles to be more compelling and to better reflect our current views.

I would be really excited for the web team to spend time revamping the 2017 career guide (I personally found it very useful). From the 2022 user survey (which I recommend reading):

- The 2017 career guide is still frequently influential for people who make plan changes, even those who found 80,000 Hours after the 2017 career guide was deprioritised in April 2019. The career guide was mentioned as influential by over 1/4 of CBPCs who found 80,000 Hours in 2021-2022. It was also more likely to be mentioned by plan changes that seemed more impressive. We are now considering releasing an updated version of this career guide.

My hypothesis on why the guide is useful: It teaches skills and approaches, rather than object level views. It gives people tools to compare between options, and encourages people to be more proactive and ambitious in achieving those goals. I think the guide is one of the few complete / comprehensive resources that embodies "EA/EA thinking as a practically useful framework for making decisions about your life". I think we could do a lot more in this space.

Ardenlk @ 2023-03-09T12:36 (+5)

Thanks Vaidehi -- agree! I think another key part of why it's been useful is that it's just really readable/interesting -- even for people who aren't already invested in the ideas.

emre kaplan @ 2023-03-08T20:28 (+20)

This is a huge public service and I really appreciate 80000 Hours publicly sharing this much of its thinking and progress. As a founder, I learnt a lot about how to run an organisation thanks to all those public documents of 80000 Hours. Thank you for setting a high bar for transparency in the community.

Vaidehi Agarwalla @ 2023-03-09T01:53 (+11)

I'd be curious to hear about the team's decision not to publish an annual report in 2021. Based on the 2020 report, it seemed like there were a number of big updates (regarding 80K's impact on the community, and updates in how much impact 80K had) that seemed important to receive updates on.

brentonmayer @ 2023-03-09T11:25 (+18)

Basically: these just take a really long time!

Lumping 2021 and 2022 progress together into a single public report meant that we saved hundreds of hours of staff time.

A few other things that might be worth mentioning:

- I’m not sure whether we’ll use 1 or 2 year cycles for public annual reviews in future.

- This review (14 pages + appendices) was much less in-depth and so much less expensive to produce than 2020 (42 pages + appendices) or 2019 (109 pages + appendices). If we end up thinking that our public reviews should be more like this going forward then the annual approach would be much less costly.

- In 2021, we only did a ‘mini-annual review’ internally, in which we attempted to keep the time cost of the review relatively low and not open up major strategic questions.

- We didn’t fundraise in 2021.

- I regret not publishing a blog post at the time stating this decision.

Vaidehi Agarwalla @ 2023-03-09T01:44 (+10)

It would be helpful to have information on lag metrics (actual change in user behavior) in the summary and more prominently in the full report.

My understanding is that plan changes (previously IASPC's then DIPY's) were a core metric 80K used in previous years to evaluate impact. It seems that there has been a shift to a new metric - CBPC's (see below).

From the 2022 user survey:

Criteria Based Plan Changes

1021 respondents answered that 80,000 Hours had increased their impact. Within those, we identified 266 people as having made a “criteria based plan change” (CBPC) i.e. they answered they’d taken a different job or graduate course of study and there was at least a 30% chance they wouldn’t have if not for 80,000 Hours programs.

The CBPCs are across problem areas. They also vary in how impressive they seem - both in terms of how much of a counterfactual impact 80,000 Hours specifically seems to have had on the change, and how promising the new career choice seems to be as a way of having impact. Most often the website was cited as most important for the change. In reading the responses, we found 19 that seemed the most impressive cases of counterfactual impact and 69 that seemed moderately impressive.
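As a rough illustration of the criterion quoted above, here is a minimal sketch of how such a filter could work. The `Response` fields and the `is_cbpc` helper are hypothetical names, not 80,000 Hours' actual survey schema or analysis code; only the 30% threshold and the headline counts come from the quoted text.

```python
from dataclasses import dataclass


@dataclass
class Response:
    """One survey response (hypothetical fields, for illustration only)."""
    changed_job_or_study: bool    # took a different job or graduate course of study
    counterfactual_chance: float  # stated chance they wouldn't have done so without 80k


CBPC_THRESHOLD = 0.30  # "at least a 30% chance", per the quoted definition


def is_cbpc(response: Response) -> bool:
    """Apply the quoted criteria-based plan change definition to one response."""
    return response.changed_job_or_study and response.counterfactual_chance >= CBPC_THRESHOLD


# Made-up example responses, not real survey data.
sample = [
    Response(changed_job_or_study=True, counterfactual_chance=0.5),
    Response(changed_job_or_study=True, counterfactual_chance=0.1),
    Response(changed_job_or_study=False, counterfactual_chance=0.9),
]
cbpc_count = sum(is_cbpc(r) for r in sample)
print(f"{cbpc_count} of {len(sample)} sample responses count as CBPCs")

# Shares implied by the reported figures: 266 CBPCs among the 1021 respondents
# who said 80,000 Hours had increased their impact, of which 19 were rated
# most impressive and 69 moderately impressive.
cbpc_share = 266 / 1021
impressive_share = (19 + 69) / 266
print(f"CBPC share of those respondents: {cbpc_share:.0%}")
print(f"Most or moderately impressive share of CBPCs: {impressive_share:.0%}")
```

On the reported counts, about a quarter of those respondents met the criterion, and about a third of the CBPCs were rated most or moderately impressive.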

I'd be curious to know the following:

- How does the CBPC differ from the previous metrics you've used?

- How important is the CBPC metric to informing strategy decisions compared to other metrics? How do you see the CBPC metric interacting with other metrics like engagement? Are there other lag metrics you think are directly impactful apart from plan changes (e.g. people being better informed about cause areas, helping high impact orgs recruit, etc.)?

- Were you on track with predicted CBPC's or not (and were there any predictions on this - perhaps with the old metrics)? The 2021 predictions doc doesn't mention them (as compared to the 2020 prediction doc)

- By what process do you rate different plan changes? Does predicted impact vary by cause, or other factors? Are these ratings reviewed by advisors external to 80,000 Hours?

brentonmayer @ 2023-03-09T11:23 (+10)

Hi Vaidehi - I'm answering here as I was responsible for 80k’s impact evaluation until late last year.

My understanding is that plan changes (previously IASPC's then DIPY's) were a core metric 80K used in previous years to evaluate impact. It seems that there has been a shift to a new metric - CBPC's (see below).

This understanding is a little off. Instead, it’s that in 2019 we decided to switch from IASPCs to DIPYs and CBPCs.

The best place to read about the transition is the mistakes page here, and I think the best places to read detail on how these metrics work is the 2019 review for DIPYs and the 2020 review for CBPCs. (There’s a 2015 blog post on IASPCs.)

~~~

Some more general comments on how I think about this:

A natural way to think about 80k’s impact is as a funnel which culminates in a single metric, one we can relate to in the way a for-profit relates to revenue.

I haven’t been able to create a metric which is overall strong enough to make me want to rely on it like that.

The closest I’ve come is the DIPY, but it’s got major problems:

- Lags by years.

- Takes hundreds of hours to put together.

- Requires a bunch of judgement calls - these are hard for people without context to assess and have fairly low inter-rater reliability (between people, but also the same people over time).

- Most (not all) of them come from case studies where people are asked questions directly by 80,000 Hours staff. That introduces some sources of error, including from social-desirability bias.

- The case studies it’s based on can’t be shared publicly.

- Captures a small fraction of our impact.

- Doesn’t capture externalities.

(There’s a bit more discussion on impact eval complexities in the 2019 annual review.)

So, rather than thinking in terms of a single metric to optimise, when I think about 80k’s impact and strategy I consider several sources of information and attempt to weigh each of them appropriately given their strengths and weaknesses.

The major ones are listed in the full 2022 annual review, which I’ll copy out here:

- Open Philanthropy EA/LT survey.

- EA Survey responses.

- The 80,000 Hours user survey. A summary of the 2022 user survey is linked in the appendix.

- Our in-depth case study analyses, which produce our top plan changes (last analysed in 2020). EDIT: this process produces the DIPYs as well. I've made a note of this in the public annual review - apologies, doing this earlier might have prevented you getting the impression that we retired them.

- Our own data about how users interact with our services (e.g. our historical metrics linked in the appendix).

- Our and others' impressions of the quality of our visible output.

~~~

On your specific questions:

- I understand that we didn’t make predictions about CBPCs in 2021.

- Otherwise, I think the above is probably the best general answer to give to most of these - but lmk if you have follow ups :)

Ivy Mazzola @ 2023-03-09T19:10 (+8)

Somebody added the community tag and I think that's wrong and it should be removed. This should be on frontpage. It's a charity review.

Ben Stewart @ 2023-03-08T21:44 (+4)

Thanks, I appreciate this kind of public review! And congratulations on the impressive growth. I was wondering, do you have figures for how many people altered their career plans or were successful in a related job opportunity due to your work? This is much harder to measure, but is much more closely connected to endline goals and what's of value, including potential cost-effectiveness. Apologies if this is in the full report which I only glanced at (though if it is there I would suggest adding it to the summary).

Cody_Fenwick @ 2023-03-09T11:36 (+3)

Hi, thanks for the question! That’s definitely what we care about most, but it’s also unsurprisingly very hard to track, as you say. We have different ways we try to assess our impact along these lines, but the best metrics we can share publicly are in an appendix to our two-year review that summarises the results of our user survey. You can also see Brenton's answer in a separate comment for much more detail about our efforts to track these metrics.

brentonmayer @ 2023-03-09T12:27 (+2)

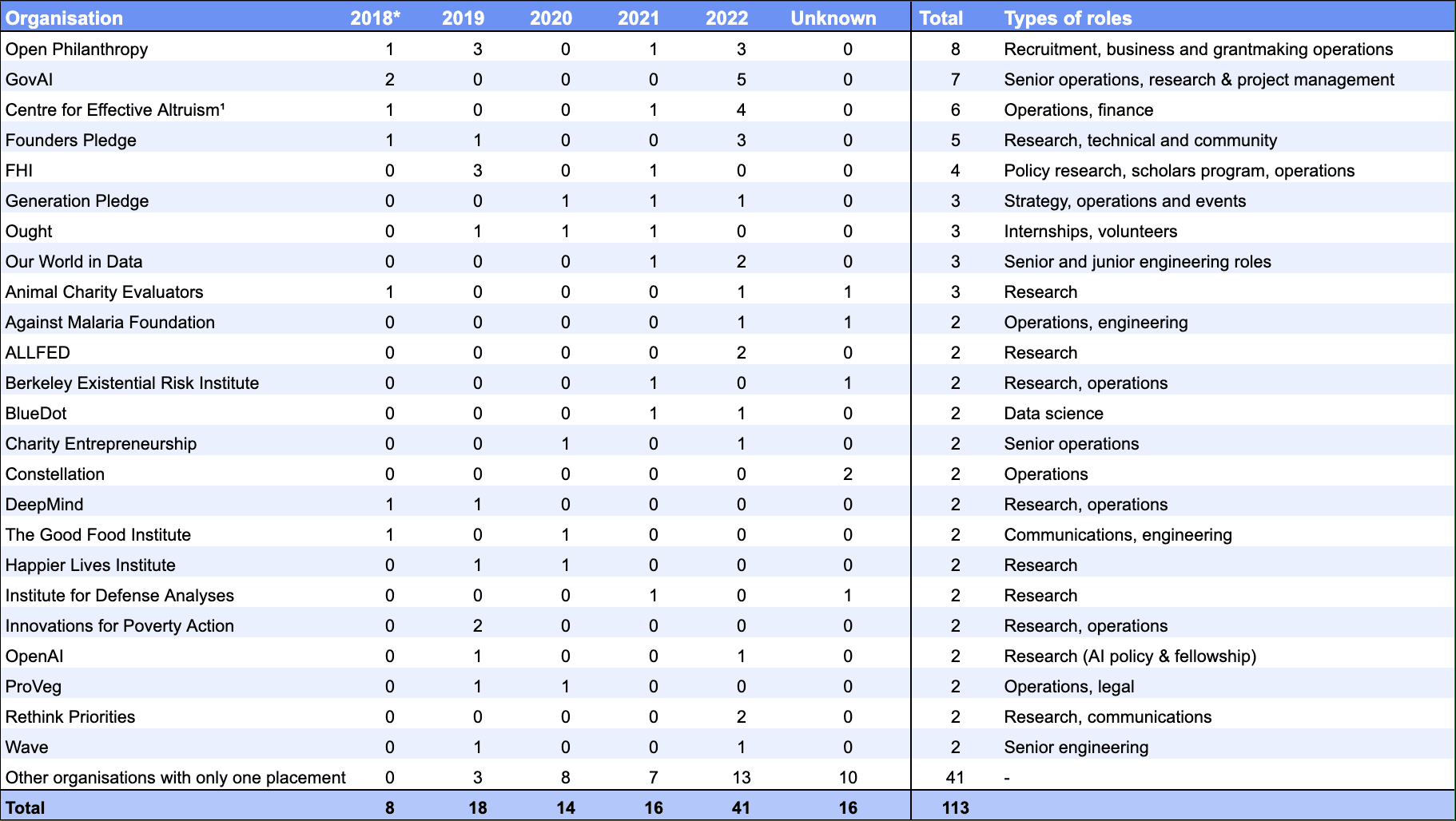

Cody's answer below and mine above give better 'overall' answers to your question, but - if you'd like to see something concrete and incomplete you could look at this appendix of job board placements we're aware of.

Ben Stewart @ 2023-03-16T09:20 (+2)

Thanks Cody and Brenton!

Vasco Grilo @ 2023-03-10T11:11 (+2)

Thanks for sharing!

I think it would be cool to have in the summary some metrics referring to the overall cost-effectiveness of your 4 programmes. I suppose these would have to refer to previous years, as the impact of your programmes is not immediate. I still think they would be helpful, as the metrics you mention now (in the summary above) refer to impact and cost separately.

brentonmayer @ 2023-03-10T14:05 (+3)

Thanks for the thought!

You might be interested in the analysis we did in 2020. To pull out the phrase that I think most closely captures what you’re after:

We also attempted an overall estimate.

This gave the following picture for 2018–2019 (all figures in estimated DIPY per FTE and not robust):

- Website (6.5)

- Podcast (4.1) and advising (3.8)

- Job board (2.9) and headhunting (2.5)

~~~

We did a scrappy internal update to our above 2020 analysis, but haven’t prioritised cleaning it up / coming to agreements internally and presenting it externally. (We think that cost effectiveness per FTE has reduced, as we say in the review, but are not sure how much.)

The basic reasoning for that is:

- We’ve found that these analyses aren’t especially valuable for people who are making decisions about whether to invest their resources in 80k (most importantly - donors and staff (or potential staff)). These groups tend to either a) give these analyses little weight in their decision making, or b) prefer to engage with the source material directly and run their own analysis, so that they’re able to understand and trust the conclusions more.

- The updates since 2020 don’t seem dramatic to me: we haven’t repeated the case studies/DIPY analysis, Open Phil hasn’t repeated their survey, the user survey figures didn’t update me substantially, and the results of the EA survey have seemed similar to previous years. (I’m bracketing out marketing here, which I do think seems significantly less cost effective per dollar, though not necessarily per FTE.)

- Coming to figures that we’re happy to stand behind here takes a long time.

~~~

This appendix of the 2022 review might also be worth looking at - it shows FTEs and a sample of lead metrics for each programme.