A shallow review of what transformative AI means for animal welfare

By Lizka, Ben_West🔸 @ 2025-07-08T00:25 (+163)

Epistemic status: This post — the result of a loosely timeboxed ~2-day sprint[1] — is more like “research notes with rough takes” than “report with solid answers.” You should interpret the things we say as best guesses, and not give them much more weight than that.

Summary

There’s been some discussion of what “transformative AI may arrive soon” might mean for animal advocates.

After a very shallow review, we’ve tentatively concluded that radical changes to the animal welfare (AW) field are not yet warranted. In particular:

- Some ideas in this space seem fairly promising, but in the “maybe a researcher should look into this” stage, rather than “shovel-ready”

- We’re skeptical of the case for most speculative “TAI<>AW” projects

We think the most common version of this argument underrates how radically weird post-“transformative”-AI worlds would be, and how much this harms our ability to predict the longer-run effects of interventions available to us today. Without specific reasons to believe that an intervention is especially robust,[2] we think it’s best to discount its expected value to ~zero.

Here’s a brief overview of our (tentative!) actionable takes on this question[3]:

| ✅ Some things we recommend | ❌ Some things we don’t recommend |

|

|

We also think some interventions that aren’t explicitly focused on animals (or on non-human beings) may be more promising for improving animal welfare in the longer-run future than any of the animal-focused projects we considered. But we basically set this aside for the sprint.

In the rest of the post, we:

- Outline a bit of our approach on the broad question (& why we separate potential interventions by whether they target more “normal” eras/scenarios vs “transformed” ones)

- Discuss some specific proposed AI<>AW interventions

- And list some questions we think may be important

1. Paradigm shifts, how they screw up our levers, and the “eras” we might target

If advanced AI transforms the world, a lot of our assumptions about the world will soon be broken

AI might radically transform the world in the near future. If we’re taking this possibility seriously, we should be expecting that past a certain point,[5] a lot of our assumptions about what’s happening and how we can affect that will be completely off. (For example, a powerful AI agent may perform a global coup, which, among other consequences, would completely invalidate all the work animal advocates had done to secure corporate commitments, change governmental policy, etc. Or: governance and economic structures may get redefined. We might see the emergence of digital minds. Etc.)

Views on when we might see such a “paradigm shift” (or on how quickly things will change) vary:

- We (Ben and Lizka) expect that advanced AI is the most likely driver of massive transformation in the near future,[6] and that by default, AI will continue rapidly progressing. As a result, we think by e.g. 2035,[7] little about the world will be as it is today.

- But it’s still possible that AI progress will stall (or slow to a crawl for a while) — or perhaps AI will just not be a huge deal in the coming century even if capabilities progress continues.

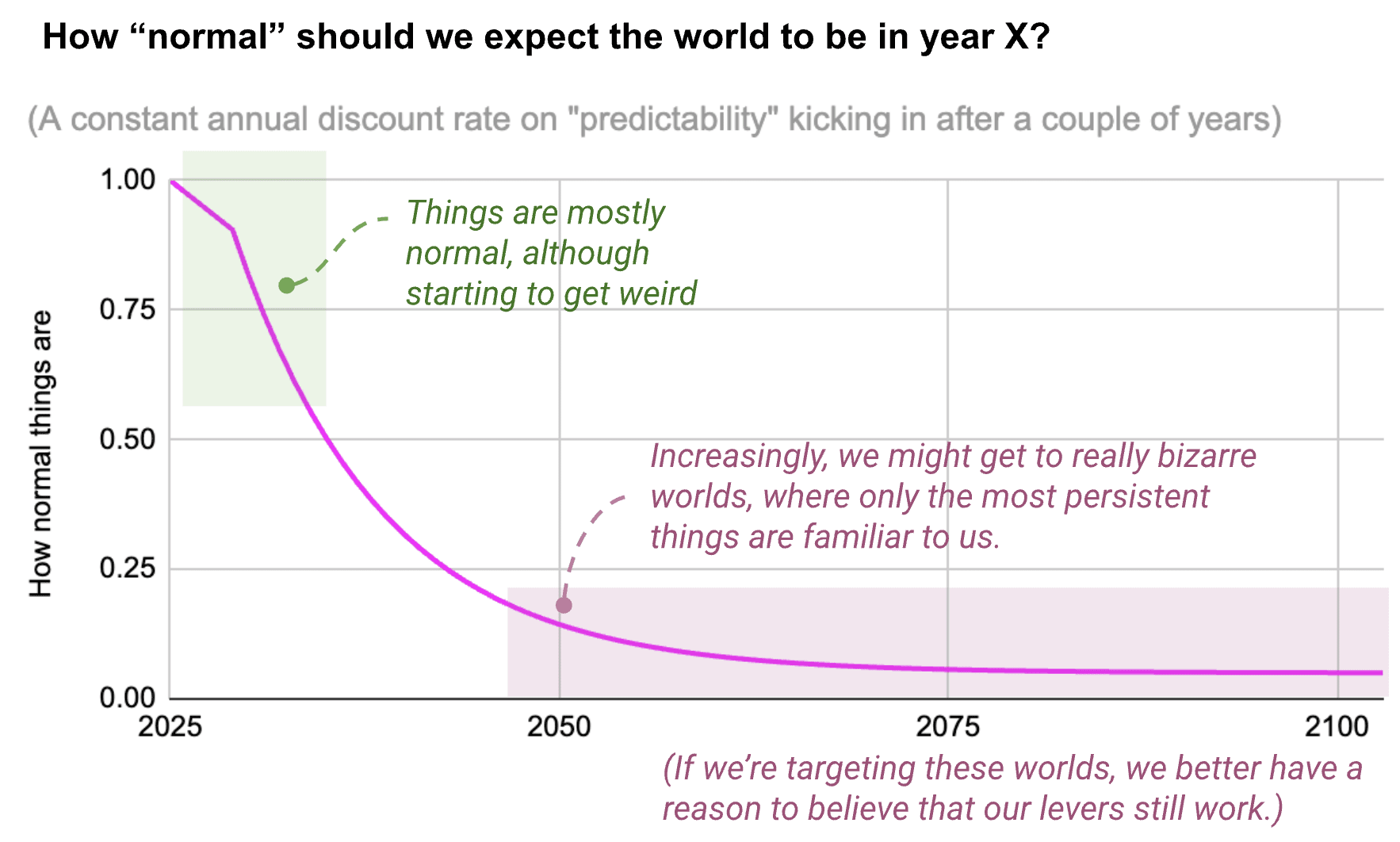

In practice, the “paradigm shift” will probably not be immediate. The change could happen fairly gradually; the “weirdness” of the world (from our POV) just continues going up as we look further into the future — and correspondingly our ability to predict what’s happening goes down:

Should we be aiming to improve animal welfare in the long-run future (in transformed eras)?

We can’t expect (without justification) that the same strategies will work for helping animals before and after a “paradigm shift.”[8] So we think it’s worth splitting strategies by the "kind of world" they try to affect:

- The “pre-paradigm-shift” or “normal(ish) era”, where we can mostly imagine today’s world, with some adjustments based on specific trends or developments we can already foresee

- The “post-paradigm-shift” or “transformed era”, which looks extremely alien to us, as AI (or other things) have upended almost everything

Some people are pessimistic about the viability of trying to reach across these eras and predictably affect what’s happening in the “transformed” world. On this view, we’re pretty “clueless” about how to help animals past a certain point in time, and should just focus on “normal” worlds if we want to help animals.[9]

But if we could actually find levers that reach across the paradigm shift, then we may be able to have an outsized impact. In particular, we may be able to identify more robust trends (“pockets of predictability”) or pivotal transition points (~crucibles) whose outcomes are contingent, persistent, and predictably good or bad for animals.[10]

Ultimately, we think that right now, we don’t know of strong strategies/levers that reach across paradigm shifts, but that some work should go to looking for such strategies (and that occasional high-leverage opportunities may show up, particularly for people in unusual positions — so they’re worth paying attention to).

Here are some of our considerations:

| What era is targeted | Normal(ish) (pre-shift) era | Transformed (post-shift) era (/ the long-term future) |

|---|---|---|

| Sketch of the strategies involved | Pursue interventions that will pay out before the critical shift.

(And as the world starts changing, we’ll know more about what to expect further out.) | Look for interventions whose effects will persist into the “post-shift” era (or otherwise affect the longer-term future in predictable ways)[11]. |

| Key things to remember about these strategies | We should heavily discount the impact of these interventions over time to account for the probability that the world changes and the intervention’s theory of change is disrupted. (See below.) | We should only pursue strategies that are particularly robust — we have specific reasons to believe they’ll be positive across many different worlds, or the “transformed” worlds we expect.

(One way to approach this is to look for AW-relevant “basins of attraction”[12] / “lock-ins” that will persist past the paradigm shift, which we’d be able to steer into or around.) |

| Reasons to penalize our estimates for how promising targeting this era/paradigm would be | These interventions will have less time to pay out (we’re not filtering them for cross-paradigm robustness, so we can’t assume that they’ll stay relevant once AI starts radically transforming the world). Targeting normal(ish) worlds may functionally involve betting on longer AI timelines (or on “AI will not actually be that transformative”[13]).

The period/scenarios in which this world is relevant may also have weaker levers for actually helping animals. (For example, you may think that advanced AI could be a very powerful lever for scaling AW work, but you’re conditioning on “AI isn’t a big deal.” Or you may think that most worlds in which AI development stalls involve a large-scale nuclear war that derails scientific progress, and a large-scale nuclear war may also derail animal advocacy efforts.) | It’ll be harder to be confident that these strategies will be relevant, or that their impact will be positive (we may just be totally off!). This should be viewed as a real penalty on their viability.

It’s also possible that AW problems will be solved (or overdetermined) “by default” in most transformed worlds[14] (which would be a penalty on the scope of these strategies). |

| Reasons to pursue interventions that target this era |

|

|

| ~Random examples as illustrations |

|

|

It’s also possible that the most promising scenarios to target are actually somewhere in between the two options above — e.g. worlds in which AI is “a huge deal, but not utterly transformative” (more like the internet). These could provide us with strong levers (new tech opportunities, maybe large amounts of funding) while being predictable-enough to plan for (and might retain status-quo issues, unfortunately). On the other hand they may be fairly unlikely, or actually sufficiently bizarre... (For now, we’re just listing this as a question to consider exploring.)

A Note on Pascalian Wagers

A possible defense of investing in certain speculative long-run-future-oriented interventions goes like this:

Because the stakes of the post-paradigm-shift world might be so high, even small increases in the probability of better outcomes can outweigh the value of pre-paradigm-shift, more certain work.

In our specific context, this might become:

Giving some of today's AI models a strong stance against factory farming is unlikely to help the long-run future much (even if we manage to do it) — it's unclear if there will be factory farming in the future [see below], or if today's model values will translate to future models' [see below], ... — but if there *are* factory farms, there could be *so many* of them. So even with the reduced chances, it's worth investing in.

We disagree with this stance, and only consider a possible intervention for affecting the post-paradigm-shift world when we have a concrete, articulable reason why it should predictably affect that world.[15] In particular, we think that the uncertainty in most interventions' expected effects builds up incredibly quickly when we're talking about post-paradigm worlds, and it's basically just as easy for the effects of such interventions to be negative[16] — as one example, see the discussion on how making AI values “animal-friendly” may backfire, below. So you really do need a specific reason to believe in your levers.

Perhaps unsurprisingly, this dynamic tends to make us more skeptical of many (consequentialist) interventions that are justified on the basis of helping animals in the long-term future (or in "post-transformation" scenarios).

(But this is a well-worn topic, and we won’t discuss it further in the memo.)

Discounting for obsoletion & the value of "normal-world-targeting" interventions given a coming paradigm shift

These considerations affect how we think about normal interventions, too:

In general, most of the “levers” which exist and work pre-paradigm-shift (now) do not obviously work post-paradigm-shift (the levers will break, the changes they cause will not translate directly). As discussed above, we think this means you should heavily discount the effects of using most levers (based on when you expect the world to be transformed).[17]

- When considering the levers advocates currently pursue (e.g. cage-free campaigns), we don’t immediately see reasons for why these levers' effects would persist post-paradigm-shift.

- For example, it seems extremely unclear that McDonald’s would continue to honor a pledge about cage-free eggs once the economy had been radically transformed, human labor was no longer economically valuable, etc. (“McDonald’s” might no longer exist. Even eggs, cages, etc. might at some point become obsolete. ...)

- So we think that, unless advocates have a specific & compelling story for why a lever’s outcomes will persist post-paradigm-shift, they should only focus on the effects the intervention will have before the paradigm shift, and discount the post-shift impacts to ~0.

- For example, if you believe that McDonald’s will honor its cage-free egg pledges for only the next 10 years, you should only consider suffering averted within the next decade.

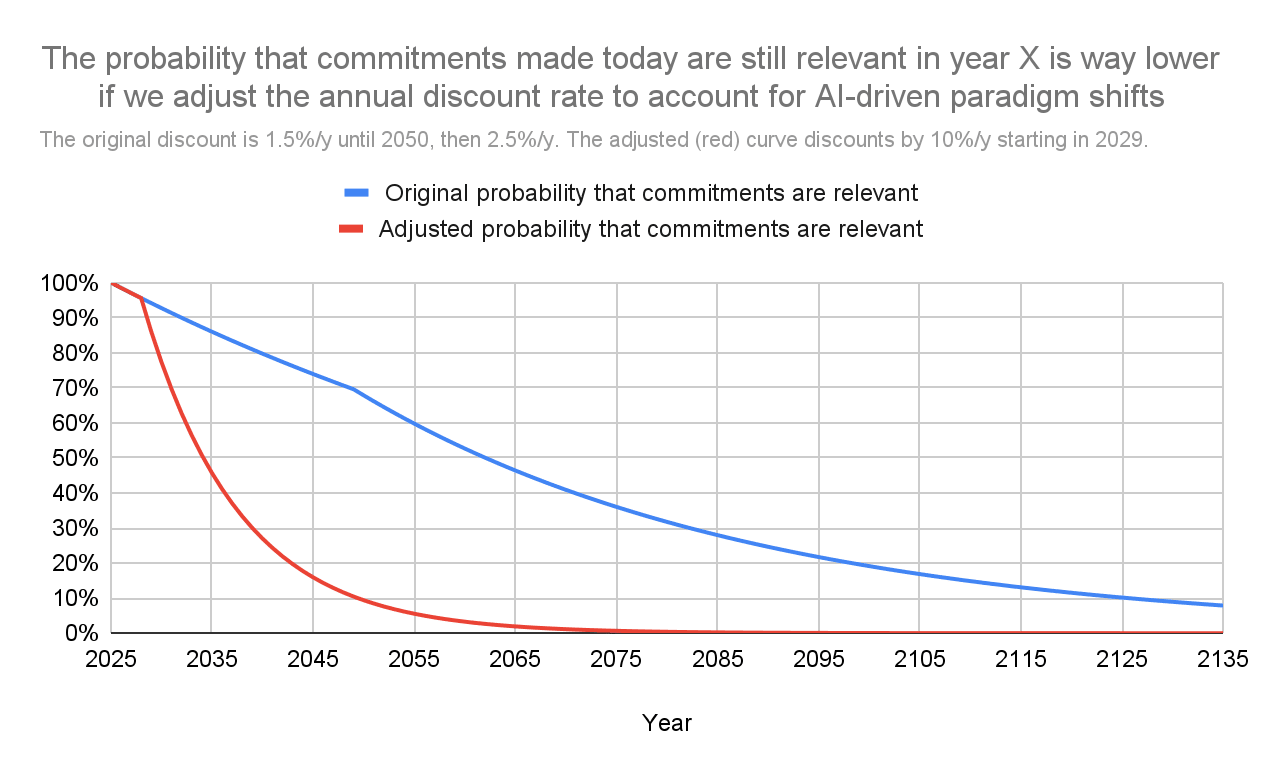

We’re not actually sure how quickly things will change, so we can model this as some (annual) penalty on the expected impact of a given intervention that accounts for the chance that the intervention has become obsolete by time x.
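A minimal way to write this down, purely as an illustrative sketch: the symbols $h_s$ (chance the intervention becomes obsolete during year $s$, given it was still relevant), $S(t)$ (chance it is still relevant after $t$ years) and $b_t$ (benefit in year $t$ if still relevant) are notation introduced here for illustration, not taken from any cited model.

$$
S(t) = \prod_{s=1}^{t} (1 - h_s), \qquad \mathbb{E}[\text{impact}] \approx \sum_{t=1}^{T} S(t)\, b_t
$$

If the annual penalty is assumed constant, $h_s = h$, this simplifies to $S(t) = (1-h)^t$, and the median years of relevancy is the $t$ at which $S(t) = 1/2$:

$$
t_{1/2} = \frac{\ln(1/2)}{\ln(1 - h)}
$$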

As an example of why this matters: Cost-effectiveness of Anima International Poland discounts the impact of commitments via a “Probability that commitments are relevant” curve (shown in blue below), resulting in a median estimate of 37 years of "relevancy". An alternative discount schedule, which we think better accounts for the impact of AI (though we think it is oversimplified and don’t strongly endorse it), results in a median of only 9 years of "relevancy" (see the red curve).[18]

- We fairly strongly feel that this kind of discounting is worth doing, even with something as simple as the “10% per year” model above, though we are unsure how much such discounting would change priorities for AW advocates
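To make the arithmetic behind an annual obsolescence penalty concrete, here is a rough Python sketch. It assumes a constant annual chance of obsolescence, which is a simplification chosen for illustration and is not the curve used in the cost-effectiveness model discussed above; all function names are hypothetical.

```python
import math


def relevance(year: int, annual_hazard: float) -> float:
    """Chance an intervention is still relevant after `year` years,
    ASSUMING a constant annual chance of obsolescence (illustrative only)."""
    return (1.0 - annual_hazard) ** year


def median_relevant_years(annual_hazard: float) -> float:
    """Number of years after which the chance of still being relevant is 50%."""
    return math.log(0.5) / math.log(1.0 - annual_hazard)


def expected_relevant_years(annual_hazard: float, horizon: int) -> float:
    """Expected number of years (out of `horizon`) in which the intervention
    still counts, i.e. the sum of the relevance curve over years 1..horizon."""
    return sum(relevance(t, annual_hazard) for t in range(1, horizon + 1))


def hazard_for_median(median_years: float) -> float:
    """Constant annual hazard that would produce the given median relevancy."""
    return 1.0 - 0.5 ** (1.0 / median_years)


if __name__ == "__main__":
    # Compare the hypothetical "10% per year" penalty with the constant
    # hazards implied by medians of 37 and 9 years.
    for label, h in [
        ("10% per year", 0.10),
        ("median 37 years", hazard_for_median(37)),
        ("median 9 years", hazard_for_median(9)),
    ]:
        print(
            f"{label}: hazard={h:.3f}, "
            f"median={median_relevant_years(h):.1f} years, "
            f"expected relevant years over 100={expected_relevant_years(h, 100):.1f}"
        )
```

Under this constant-hazard assumption, a 10% annual penalty implies a median of roughly 6.6 years of relevancy and about 9 expected relevant years over a long horizon. A schedule whose hazard rises over time would give different numbers, so this should be read as a way to sanity-check orders of magnitude rather than as a reproduction of either curve.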

2. Considering some specific interventions

Below we explore some possibilities for what transformative AI could mean for AW work.[19] We were thinking primarily about whether it makes sense for e.g. established animal welfare charities to start hiring staff in these areas, or for funders to announce support for this work; in many of the places where we come out skeptical, we wouldn't be surprised if it did actually make sense for specific individuals to pursue projects they're well placed for.

2.1. Interventions that target normal(ish) eras

🔹 Leveraging AI progress to boost “standard” animal welfare work

Even before AI starts radically transforming the world, it could provide very promising opportunities for improving the lives of animals.

Several ideas here seem promising, including AI-boosted AW-specific tools (e.g. PLF-for-good, tools for better enforcement of commitments), fundraising from people invested in AI, and simply training people in the AW field to use various AI productivity tools effectively. (See also this recent post on a related topic.)

There could also be some unusually robust (i.e. persistently good, even across the “paradigm shift”) interventions in this space,[20] but we've set this aside for now.

Note: As discussed above, if we're leveraging AI-related developments to boost "standard" animal welfare work (e.g. welfare commitments, alt proteins R&D, policy advocacy, etc.), we should generally focus on interventions that pay off quickly, as the background situation might start changing rapidly and make the investment obsolete.

❌ Trying to prevent near-term uses of AI that worsen conditions in factory farming (“bad PLF”)

We're tentatively skeptical that trying to prevent harmful uses of "contemporary" AI in farming is a promising approach:

- As discussed in the discounting section, we believe that work which pays off in the future should be heavily discounted

- Our initial understanding is that the PLF projects that advocates are currently considering will take years to pay off (e.g. lobbying for PLF regulations)

- We were unable to find specific PLF projects that seemed promising after an extremely shallow review (they generally seem very tied to current "paradigms"), and understand that Anima does not have any PLF projects they believe are worth pursuing independent of AI concerns

- So this mostly feels overdetermined to us: PLF may be very hard/slow to fight, may have limited impacts independent of transformative AI, and in transformative-AI scenarios will likely have only fairly short-term predictable impacts

- So we don’t currently see advocacy against PLF techniques as promising, though we wish to emphasize that we did an extremely shallow review

- As with other sections, “quick wins” are probably still valuable in this space, if they exist

2.2. Interventions that try to improve animal welfare in the long-run (past the paradigm shift)

⭐ Guarding against misguided prohibitions & other bad lock-ins

Advanced AI and other developments in the near future may lead to “lock-in” moments in which certain decisions have unusually persistent impacts. If so, the chance to prevent such lock-ins / improve how they go (assuming we aren’t clueless about their long-run impacts on animals) could be a very important lever for improving animal welfare in the long-run.

One concrete type of lock-in to pay attention to is attempts to enforce the status quo, especially by powerful players (who benefit from it), or via misguided attempts to hard-code certain moral stances/choices in important agreements/documents.

For instance:

- Today, the USDA is instructed to purchase “surplus” agriculture products, thus ensuring that farming animals is revenue-generating even if no one wants to purchase their meat.[21] We could have more laws or decisions like this (perhaps AI-enforced), which could perpetuate factory farming past the paradigm shift, even if no one is actually eating meat or otherwise benefitting from animal farming (which means these decisions might be especially contingent).[22] We should try hard to prevent this kind of thing. (Parts of US law are unusually likely to survive/translate past the paradigm shift.)

- There may also be pushes for well-intentioned-but-misguided policies / international agreements with demands along the lines of “historical practices must be allowed to continue” which result in farming (or e.g. wildlife) continuing post-paradigm-shift in something like its current form. (Many of these policies will presumably not actually last very long — but some might.)

- (Note: some lock-in moments may not involve laws or political agreements.)

We don’t know of concrete opportunities in this area (to be clear: we didn't spend much time looking for any), and don’t confidently recommend allocating significant amounts of funding towards it yet. But we think they would be worth pursuing if viable opportunities were found[23], and such opportunities are worth looking for.

🔹 Exploring wild animal welfare & not over-indexing on farming

We think it’s likely that animals as we know them will at some point stop being economically valuable, and don't expect a huge number of farmed animals in our central examples of an "(AI-)transformed world". Just as oxen were replaced by tractors and messenger pigeons by the telegraph, we expect farmed animals to be outcompeted by some (perhaps unknown-to-us-today) technology.

So we recommend trying not to over-index on our current world, where factory farming is arguably the greatest source of animal suffering, at least as far as we can easily affect it.

The stories we can tell for why a post-paradigm-shift world would include animals generally rely on some sort of sentimentality. This probably means that wild animal suffering is the most likely source of animal suffering in post-paradigm-shift worlds (because decision-makers have sentimental attachment to nature/wild animals, or moral views on this question). We therefore tentatively suggest that most AW work targeting post-paradigm-shift worlds should focus on wild animals (or more generally animals that exist for non-“functional” reasons).

(Still, we can't rule out farming. "Nostalgia" and other factors could lead to the persistence of farming, we might see new “functional” animals or animal practices, and there are unknown unknowns here. We note related questions in the list below.)

💬 “Shaping AI values”

A lot of energy in AI<>AW so far has focused on making the values of (advanced) AI systems more animal-friendly/inclusive. We have mixed feelings here; some approaches to “shaping AI values for AW” seem very promising, but we think that many will make basically no difference, and some could easily be harmful.

We explore this below, but first wanted to caution that we think this discussion uses “AI values” in a pretty messy way, particularly for future AI. We may try to clarify this in the future. For now, we’ve included as-is, as it seemed better than cutting and we didn’t have time to actually untangle things.

a) Can we actually affect the values (or “tendencies”) of future, advanced AIs (not just weaker contemporary models) to make them more AW-friendly?

Let's take for granted that we have reasonably clear mechanisms for influencing the values of current AI models, in at least some respects. (For example, we can suggest or advocate for changes to the “model specs” developers use to grade AI models in post-training, or create evals/benchmarks/datasets that might get used for training future models, etc.)

But the tendencies/values of today's AI systems don’t matter that much, and it’s less clear that the same mechanisms will affect the behavior of future, significantly more powerful AI systems.[24]

So, will the changes persist? The key reasons for "yes" are:

- (1) Changes we can make to AI training may stick around / remain relevant, particularly if...

- ...the intervention targets more robust / long-lasting levers — e.g. getting a strong, long-term commitment from an AI developer or changing regulation may be much better than only changing the constitution of a specific AI system

- ...future transformative AI systems will be trained via quite similar methodologies (e.g. constitutions/“model specs”, evals/benchmarks) and something like inertia carries the changes through to more powerful models (which is more likely if the AW-favoring changes aren’t too costly for developers[25])

- ...or new training methodologies that are heavily influenced (possibly with help from advocacy) by today’s methodologies — e.g. constitutions are straightforwardly copied and used in new AI training paradigms

- (2) Current models’ values may shape future AIs’ values

- The fact that we’ve changed the values/behavior of today’s (relatively weak) models will have flow-through effects on the nature of future AI systems (e.g. because today’s models are used to generate a lot of training data)[26]

However, the above arguments seem fairly fragile, and it’s pretty easy to imagine that, for instance, future AI models will be trained using methodologies completely unrelated to things like constitutions, or that changes will be undone to provide better customer value, etc.

(We haven't really looked at methods for influencing future AI values that don't look like "assume future AIs are trained in ways analogous to the current regime and change how that will work."[27])

How much will “values” of advanced AI actually matter for animal welfare in the long-run?

It seems plausible that, if transformative AI is developed, the “values” of an advanced AI system will (in at least some predictable ways) shape the long-term future, and its treatment of animals.[28]

But there are also substantial reasons to doubt the importance of “values” like this.

For one thing, choices relevant to the treatment of animals may be determined more by “emergent” dynamics (via social norms, various selection forces, etc.) than by any individual entity’s “values”. Today, for instance, economic pressures make it hard to run cruelty-free farms (such farms are not competitive, at least without external support) — so trying to make farmers care more about animals would likely have little or no effect. Similarly, powerful AI entities’ behavior will be, in large part, determined by efficiency and other factors[29] unrelated to “values.” Depending on how much consideration for animals (or the qualities required to enable consideration for animals) weakens “fitness” of AI entities under those forces, animal-friendly AIs may simply be selected out.

In more “multipolar” worlds, individual AIs’ values may also play a more complicated role (see more on “multi-agent risks” here). Alternatively, humans (or human institutions) may remain in control, with AI entities making few relevant decisions independently. (And there’s also a chance that animals will no longer exist by the time AI systems’ values are significantly shaping the world.)

Overall, we're somewhat worried that too much focus is going to a simple story in which one "AI agent"'s values are most important, possibly because this is more natural to think about.

Would giving “animal welfare” values to (advanced) AI systems be good?

Finally, even if we can influence “values” of advanced AIs and they do matter for animal welfare, we think that might play out in messier, more complicated and fragile ways. We don't think it's obvious that making AIs more concerned with “animal welfare” (as we know it) is good / important.

For example:

- Pushing for “animal-friendly” values may be harmful if it skews trajectories that are good for animals

- As an intuition pump, imagine that animal farming (or other functional-animal mistreatment by humans) will be eradicated by default (e.g. because it will stop being economically valuable). If we manage to instill strong animal-related concerns that are not perfectly “wise” (e.g. specific ~beliefs on what is good or bad for farmed animals), then the AI(s) may perpetuate farming in some form even if that choice is unnecessary and harmful.

- Alternatively, advanced AI may start helping humanity[30] improve our moral reasoning (or facilitate legitimate collective decision-making processes) in more general ways. But earlier interventions aimed at giving advanced AI systems specific values may disrupt or worsen that process, and again the results may be worse for animals.

- Animals and how they’re integrated in our society may exist in a form so bizarre (e.g. as cyborg organisms) that today’s “animal welfare” values don’t straightforwardly apply and/or are actively harmful

- There are a variety of arguments that “better” AI values may indirectly result in worse outcomes, particularly during conflict between AI agents[31]

(There’s also some chance that pushing for animal-considering values may increase the chances of AI takeover. We haven’t thought about this too much but can probably say more if helpful.)

Takeaways on "shaping AI values"?

We think it’s probably good for future AIs to have more animal-friendly values, but think this is less obvious and less good than one might expect. We’re also worried that contemporary work will not actually influence the values of post-paradigm-shift AIs.

In terms of a recommendation: we moderately think that work here may be valuable when done by people who are well-placed to do it, but do not think that resources should be reallocated from otherwise-promising projects towards this area, unless projects can make a more compelling case that their work will have persistent and robustly good outcomes. To the extent that it does happen, we’re more positive about more “general”/higher-order values (vs. e.g. “hens should not be...”).

💬 Other kinds of interventions? (/overview of post-paradigm AW interventions)

While thinking through this broad question, we quickly brainstormed / outlined some types of interventions that may be helpful for animal welfare in "transformed" worlds. This list is almost certainly not exhaustive, but we’re sharing it in case it’s useful:

- Building broad/flexible capacity for “AW in AI-transformed worlds”

- Laying foundations / strategy work

- Potentially especially when focusing on preparing for AI-driven research/work

- Growing the field’s resources (more of some kinds of labor, or other resources — compute commitments, money, ..?)

- Guarding against potential harms to the field (e.g. tarnished reputation, or maybe self-fulfilling zero-sum dynamics between human and animal ~rights, burning goodwill in AI spaces...)

- Get the field more AI-ready specifically[32]

- Trajectory changes: Avoiding bad lock-ins, steering towards/away from good/bad basins of attraction

- Working to ensure that radical/singularity-era ~constitutions (the effective values of the “AI nation..”) or decision processes aren’t unjustifiably speciesist (or more generally promoting something like better moral reasoning/ healthier decision-making processes)

- Trying to prevent/delay/improve other animal-relevant lock-ins — e.g. choices about spreading (wild) animals to other planets

- Incentive-shaping / empowering better-for-animals groups

- Maybe: Ensuring that non-human-being-relevant research does not fall behind

- Misc / weird

- Synergies between human-oriented x-risk work and AW (e.g. improving expected/default animal outcomes post-transformative-AI in part to discourage high-risk behavior)

- (And it’s possible that non-animal-specific actions, e.g. things that help Earth-originating biological life, like preventing scary AI takeover scenarios, are important for animals)

- It seems plausible that simply improving animal welfare as much as possible in the near-term is the best approach for improving it in the longer-term

- [Other?]

3. Potentially important questions

Note: These haven’t been edited / processed much!

- Animal outcomes & getting more specific on possible “post-paradigm-shift worlds”

- Are there things we should do in “misaligned/takeover" worlds? (How good/bad would the situation for AW be in takeover vs. non-takeover worlds?)

- Does multipolarity vs not matter, and if so, how? (How likely are multipolar worlds?)

- What “functional” reasons might there be, for keeping animals around post-paradigm-shift?

- E.g. farmed animals currently serve a “function” of providing food to people. Will there be any functions like that post-paradigm-shift (depending on how things go?)?

- Note that “animal” may be something quite bizarre to us, like a living bioreactor for creating some useful protein, or a cyborg drone. (It may end up being debatable whether these beings even count as “animals”.)

- Might humans/decision-makers choose to keep animals around for non-functional reasons? Will those choices actually track what’s good for animals? (Can we make that more likely?)

- Which worlds to target interventions at (and what that implies)

- How important is the pre-paradigm-shift space?

- Considerations: How much (subjective) time should we expect (bet on) here? Also, interventions will take some time to pay off, which eats into the remaining time... Maybe the leverage is actually smaller, because if we end up in these worlds then things have gone badly in some other ways (e.g. wars?)

- How do AI timelines affect this reasoning? Should animal advocates bet on long timeline worlds? Or extremely short ones? (What actually changes in what people should work on?)

- (Does animal welfare work look better than other kinds of work assuming very short timelines? Or: what would you do in incredibly short-timeline worlds?)

- Can we get better answers on which scenarios are actually most worth targeting, including “in-between” worlds relative to the framework above (e.g. scenarios in which AI is “a huge deal, but not utterly transformative” in the next century or so)?

- Related: What (possibly AI-driven but not purely AI) tech developments might be particularly relevant for animals? (Are there developments that are likely very positive in EV for animals, which may not come nearly as quickly as they could?)

- How should we actually think about the “paradigm shift” stuff? E.g. is it coherent to talk about a single “paradigm shift”, or maybe we should be thinking about “partial” shift(s)?

- Maybe agriculture changes slower/faster than other things

- Maybe things change but there will be (enough) pockets of the world that look pretty normal to us (e.g. equivalent of the Amish today), such that interventions today directly translate

- Levers for affecting the long-run future?

- How is it actually possible to predictably affect things post-paradigm-shift? What are the levers that don’t break? Or, if it’s not possible, we should just aim to help animals before the event horizon.

- What might get “locked in” by advanced AI or other forces/factors? (Are there unusually stable/sticky trajectories?)

- Which “windows of opportunity” do we expect? Which ones may close sooner/later?

- Values

- How much will contingent AI values play a role in post-paradigm-shift worlds?

- What are better ways to think about what shapes how advanced AIs will behave (or “make judgment calls”)? How informative is talking about “values of AIs” shaping the world vs. e.g. interactions between AIs, or “world constitution”/laws (see e.g. this paper), or emergent dynamics?

- How contingent are AI values with respect to AW stuff?

- Are there hard-to-affect selection pressures?

- If powerful AIs are “aligned,” will they care about animals “by default”? (See also: value fragility.)

- What about non-animal-specific interventions?

- Which kinds of capacity-building may be most useful to do now? (e.g. commitments of some kind?)

- What aspects of AW-relevant activities (both those that help and that harm animals) are easier to automate with AI? These will become more powerful.

- What is the set of beings we should be focusing on? (Farmed animals, wild animals, digital minds - the boundaries of this memo feel pretty arbitrary and we’ve flip-flopped a bit on which groupings make sense in this context...)

- [Lots more questions!]

Conclusion

We struggled to find many concrete projects that seemed promising, and therefore don’t recommend substantial amounts of money going to this field (yet?). But it seems plausible that the field should receive more attention from researchers.

Our biggest recommendation is: to the extent that you're targeting animal welfare improvements in the normal era, you probably should discount heavily. Discounting to zero work which pays off in more than ten years is maybe one viable but very simplistic model.[33]

To the extent that you are targeting the post-paradigm-shift era: It's truly incredibly easy to forget how weird post-paradigm shift worlds might be. Of these interventions, we tentatively think marginal research effort should go to preventing bad lock-ins. Shaping AI values could also be valuable, though we feel skeptical about some of the proposed interventions.

We also think it's worth remembering that the lines between various beings/work might get blurry. Farming may stop existing or be transformed (or be driven mostly by nostalgia), so "wild animals" or bizarre things might account for most animals here. The lines between organic and not might also get blurry. (For now, it probably makes sense to nurture these fields separately though, to some extent at least.)

This memo is an extremely shallow investigation into a topic which itself seems to have received minimal investigation, so our takes are lightly held. Investigating some of the questions we list above may be a reasonable next step for those who want to push the field forward.

Appendices

Some previous work & how this piece fits in

Several people have discussed what transformative AI would mean for animal welfare advocacy:

- How should we adapt animal advocacy to near-term AGI? lists some arguments and suggests a takeaway of “if we assume a 50% probability of AGI in the next 5-10 years, [our] goal should probably be more like ‘ensure that advanced AI and the people who control it are aligned with animals’ interests by 2030, still do some other work that will help animals if AGI timelines end up being much further off, and align those two strands of work as much as possible’.”

- Transformative AI and wild animals: An exploration suggests some concrete ways TAI should influence Wild Animal Welfare, though also concludes “It seems that investing in WAW science remains valuable under many scenarios despite short TAI timelines.”

- The AI for Animals substack has a variety of posts which we won’t attempt to summarize here.

- Steering AI to care for animals, and soon is a brief post arguing that the field should receive more attention.

- Bringing about animal-inclusive AI lists a handful of ideas that advocates could pursue.

- Speciesist bias in AI: how AI applications perpetuate discrimination and unfair outcomes against animals reports on bias in current ML models.

- Animal Advocacy in the Age of AI “calls on animal advocates to take seriously how the future will be transformed by AI”.

(Due to time constraints we did not do a thorough literature review, and the above list is not comprehensive!)

How our work fits in

Anima International asked us to advise animal advocates on how transformative AI should affect their work.[34] We originally scoped out ~two days to publish a report, though we probably ended up spending closer to three.

This memo should therefore be taken as a very shallow overview. Notably, we are approaching this as people who have mostly thought about (transformative) AI, and are less familiar with animal advocacy (which we understand to be the converse of most people in the AIxAnimals space).

An important caveat is that this memo deals specifically with animal welfare. A theme running throughout this memo is that a world which is radically transformed by AI may either not have animals or have animals in such a bizarre state that it’s unclear whether you would describe them as “animals” and therefore the impact of animal welfare interventions per se is lower. This memo does not comment on the utility of things like digital minds work (and AIxAnimals interventions are sometimes justified on the basis of being useful for preparing for digital minds).

We would like to thank Keyvan Mostafavi, Yip Fai Tse, Constance Li, Adrià Moret, Max Taylor, and Jakub Stencel. (Commenters don’t necessarily agree with what we write here!)

- ^

Neither of us is currently involved in animal welfare work; we both mostly think about AI stuff. (Lizka is employed at Forethought, but did not write this on Forethought time.)

- ^

I.e. “strong reasons it’ll have positive effects across at least a non-trivial chunk of “transformed” worlds, with no similarly strong reasons to believe the opposite”

See also: “pockets of predictability”

- ^

We were exploring the question: “What might extremely advanced AI mean for animal welfare work.” Many other considerations are out of scope here.

In particular, we were not exploring what the possibility of TAI means for comparisons between animal welfare and other causes/work.

- ^

We tentatively think this area — e.g. scanning for hard-to-reverse developments that predictably-in-expectation shift the trajectory of what happens to animals — is the biggest deal in this area, but that it’s not ready to absorb large amounts of money/labor

- ^

the shift may look pretty gradual, or there may be multiple ~paradigm shifts, or more context-specific narrower paradigm shifts (e.g. paradigm shifts for agriculture), etc.

It might be better to use partial/gradual/alternate frameworks to think about the world being transformed, but we've gone with a very simplified model for now, largely without trying out variants.

- ^

Non-AI things (e.g. full-scale nuclear war) may cause “paradigm shifts” like this, too.

- ^

We both have a loose range for how likely it is that the world will look fundamentally different at various points in time. We listed 2035 above, but even by 2030 we think there’s a decent chance that relevant things have been “transformed” (e.g. the difference between now and 2030 may be more than the difference between 1800 and now, in some relevant way). On the other hand, things might change more slowly — there’s some chance that e.g. 2050 is no more different from today than e.g. 1990.

- ^

Some strategies — like flexible capacity-building or high-level strategy research — may be relevant across both of these worlds. But we think they’re the exception.

- ^

See e.g. this post: Leadership change at the Center on Long-Term Risk — EA Forum

- ^

- ^

Especially strategies which benefit from earlier investment, e.g. because there’s a limited window of opportunity. This paper section discusses related considerations.

- ^

Related discussion here: Beyond Maxipok — good reflective governance as a target for action (“Horizons” and “Stable basins” sections in particular)

- ^

Targeting your work at (potential) futures where you think you can actually make a difference is related to the idea of “playing to your outs” (choosing strategies in a game based on the assumption that you can still win, instead of strategies that minimize your losses)

- ^

Either because the resulting global order treats animals well, or because of something more like “all biological life was destroyed so animal welfare is now irrelevant”.

- ^

and discount to zero reasoning which does not rely on concrete articulable reasons, or possible effects on sufficiently transformed worlds

- ^

For more, see e.g. Counterproductive Altruism: The Other Heavy Tail

- ^

- ^

Calculation can be found here

- ^

We didn't try to be very exhaustive here!

- ^

E.g. AI for improving epistemics may be an "asymmetric weapon" that helps humanity actually follow its morals.

- ^

See also how the majority of longshoremen don’t actually work, but instead continue to receive payments for sitting at home after their work was automated, thanks to contracts they negotiated with the docks.

- ^

Something to keep in mind is that e.g. in the US, powerful groups like the farming lobby (or workers’ unions, etc) might actively push for such outcomes (and political power may be concentrating, or at least shifting rapidly).

- ^

Though note that many of these policies would also be worth fighting in pre-paradigm-shift worlds

- ^

(In some ways, this is the problem of ~scalable alignment.)

- ^

e.g. customers may be annoyed if the model refuses to tell it how to cook veal, so changes that demand such behavior are probably quite costly

- ^

See some more discussion of the persistence of changes to AI training/development here (appendix to this piece).

- ^

At the moment, Lizka (at least?) very tentatively thinks that weirder/less direct proposals like "write stories in which benevolent (artificial?) agents (heroically) work towards harmony between humanity and other beings" (or "simply start treating animals better ASAP" or "work towards AI-powered tools that help humans understand their values better and/or act in accordance with them" etc.) are approximately as likely to "shape future AIs' values to be (effectively) more animal-friendly" as targeted shifts to ~current training regimes. We really haven't thought about these ideas, though; they're mostly included in case they help give readers a sense of where we're at.

- ^

This could be because, for example:

- All humans have been killed, so the AI(s) are the sole decision-makers and one system’s values dictate how that goes

- Humans voluntarily delegate decision-making to an AI system

- Humans are nudged towards something like CEV (but influenced by the AI)

- ^

These could include pretty bizarre dynamics, like memetic fitness. In fact, “values” may be a confusing term for what’s driving the AI entities in question (they might be closer to massive organizations or even cultures than to an agent with coherent moral beliefs and preferences).

- ^

Or the relevant set of decision-makers, which could be the AIs themselves.

- ^

Although as far as we understand, this concern seems more related to the suffering of digital beings (which is out of scope of this memo).

- ^

See: AI Tools for Existential Security (“Implications for...”) for a bit more on this. (In particular, it could be good to separate out work streams, get people more familiar with AI, build connections with the AI world, ...)

- ^

10 years is a rough heuristic; a more accurate discount rate might be obtained by commissioning a forecast (or repurposing an existing one)

- ^

Ben was commissioned to work on this; Lizka joined on personal time.

LewisBollard @ 2025-07-10T00:49 (+62)

Thanks Lizka and Ben! I found this post really thought-provoking. I'm curious to better understand the intuition behind discounting the post-AGI paradigm shift impacts to ~0.

My sense is that there's still a pretty wide continuum of future possible outcomes, under some of which we should predictably expect current policies to endure. To simplify, consider six broad buckets of possible outcomes by the year 2050, applied to your example of whether the McDonald's cage-free policy remains relevant.

- No physical humans left. We're all mind uploads or something more dystopian. Clearly the mind uploads won't be needing cage-free McMuffins.

- Humans remain but food is upended. We all eat cultivated meat, or rats (Terminator), or Taco Bell (Demolition Man). I also agree McDonald's policy is irrelevant ... though Taco Bell is cage-free too ;)

- Radical change, people eat similar foods but no longer want McDonald's. Maybe they have vast wealth and McDonald's fails to keep up with their new luxurious tastes, or maybe they can't afford even a cheap McMuffin. Either way, the cage-free policy is irrelevant.

- Radical change, but McDonald's survives. No matter how weird the future is, people still want cheap tasty convenient food, and have long established brand attachments to McDonald's. Even if McDonald's fires all its staff, it's not clear to me why it would drop its cage-free policy.

- AGI is more like the Internet. The cage-free McMuffins endure, just with some cool LLM-generated images on them.

- No AGI.

I agree that scenarios 1-3 are possible, but they don't seem obviously more likely to me than 4-6. At the very least, scenarios 4-6 don't feel so unlikely that we should discount them to ~0. What am I missing?

Mjreard @ 2025-07-10T15:19 (+16)

4-6 seem like compelling reasons to discount the intersection of AI and animals work (which is what this post is addressing), because AI won't be changing what's important for animals very much in those scenarios. I don't think the post makes any comment on the value of current, conventional animal welfare work in absolute terms.

Ben_West🔸 @ 2025-07-10T19:17 (+5)

I actually want to make both claims! I agree that it's true that, if the future looks basically like the present, then probably you don't need to care much about the paradigm shift (i.e. AI). But I also think the future will not look like the present so you should heavily discount interventions targeted at pre-paradigm-shift worlds unless they pay off soon.

Ben_West🔸 @ 2025-07-10T19:15 (+12)

Good question and thanks for the concrete scenarios! I think my tl;dr here is something like "even when you imagine 'normalish' futures, they are probably weirder than you are imagining."

Even if McDonald's fires all its staff, it's not clear to me why it would drop its cage-free policy

I don't think we want to make the claim that McDonald's will definitely drop its cage free policy but rather the weaker claim that you should not assume that the value of a cage-free commitment will remain ~constant by default.

If I'm assuming that we are in a world where all of the human labor at McDonald's has been automated away, I think that is a pretty weird world. As you note, even the existence of something like McDonald's (much less a specific corporate entity which feels bound by the agreements of current-day McDonald's) is speculative.

But even if we grant its existence: a ~40% egg price increase is currently enough that companies feel cover to be justified in abandoning their cage-free pledges. Surely "the entire global order has been upended and the new corporate management is robots" is an even better excuse?

And even if we somehow hold McDonald's to their pledge, I find it hard to believe that a world where McDonald’s can be run without humans does not quickly lead to a world where something more profitable than battery cage farming can be found. And, as a result, the cage-free pledge is irrelevant because McDonald’s isn’t going to use cages anyway. (Of course, this new farming method may be even more cruel than battery cages, illustrating one of the downsides of trying to lock in a specific policy change before we understand what the future will be like.)

To be clear: this is just me randomly spouting, I don't believe strongly in any of the above. I think it's possible someone could come up with a strong argument why present-day corporate pledges will continue post-paradigm-shift. But my point is that you shouldn’t assume that this argument exists by default.

AGI is more like the Internet. The cage-free McMuffins endure, just with some cool LLM-generated images on them.

Yeah I think this world is (by assumption) one where cage free pledges should not receive a massive discount.

No AGI

Note that some worlds where we wouldn't get AGI soon (e.g. large-scale nuclear war setting science back 200 years) are also probably not great for the expected value of cage-free pledges.

(It is good to hear though that even in the maximally dystopian world of universal Taco Bell there will be some upside for animals 🙂.)

Michael St Jules 🔸 @ 2025-07-10T23:57 (+9)

Under 4, we should consider possibilities of massive wealth gains from automation, and that the cage-free shift would have happened anyway or at much lower (relative) cost without our work before the AI transition and paradigm shift. People still want to eat eggs out of habit, food neophobia or for cultural or political or any other reasons. However, consumers become so wealthy that the difference in cost between caged and cage-free is no longer significant to them, and they would just pay it, or are much more open to cage-free (and other high welfare) legislation.

Or, maybe some animal advocates (who invested in AI or even the market broadly) become so wealthy that they could subsidize people or farms to switch to cage-free. If this is "our money", then this looks more like investing to give and just optimal donation timing. If this is not "our money", say, because we're not coordinating that closely with these advocates and have little influence on their choices, then it looks like someone else solving the problem later.

MichaelDickens @ 2025-07-19T00:22 (+8)

I think this is a good way of thinking about it and I like your classification. Also agree that neartermist animal welfare interventions shouldn't be discounted to ~0. I disagree with the claim that scenarios 1–3 are not obviously more likely than 4–6. #6 seems somewhat plausible but I think #4 and #5 are highly unlikely.

The weakest possible version of AGI is something like "take all the technological and economic advances being made by the smartest people in the world, and now rapidly accelerate those advancements because you can run many copies of equally-smart AIs." (That's the minimum outcome; I think the more likely outcome is "AGI is radically smarter than the smartest human".)

RE #5, I can't imagine a world where an innovation like "greatly increase the number of world-class researchers" would be on par with the Internet in terms of impactfulness.

RE #4, if technological change is happening that quickly, it seems implausible that McDonald's will survive. They didn't have anything comparable to McDonald's 1000 years ago. They couldn't have even imagined McDonald's. I predict that a decade after TAI, if we're still alive, then whatever stuff we have will look nothing like McDonald's, in the same way that McDonald's looks nothing like the stuff people had in medieval times.

I don't think outcome #4 is crazy unlikely, but I do think it's clearly less likely than #1–3.

Michael St Jules 🔸 @ 2025-07-19T00:37 (+6)

RE #4, if technological change is happening that quickly, it seems implausible that McDonald's will survive. They didn't have anything comparable to McDonald's 1000 years ago. They couldn't have even imagined McDonald's. I predict that a decade after TAI, if we're still alive, then whatever stuff we have will look nothing like McDonald's, in the same way that McDonald's looks nothing like the stuff people had in medieval times.

If we're still alive, most of the same people will still be alive, and their tastes, habits and values will only have changed so much. Think conservatives, people against alt proteins and others who grew up with McDonald's. 1000 years is enough time for dramatic shifts in culture and values, but 10 years doesn't seem to be. I suspect shifts in culture and values are primarily driven by newer generations just growing up to have different values and older generations with older values dying, not people changing their minds.

And radical life extension might make some values and practices persist far longer than they would have otherwise, although I'm not sure how much people who'd still want to eat conventional meat would opt for radical life extension.

MichaelDickens @ 2025-07-19T00:22 (+5)

I would predict maybe 65% chance of outcome #1 (50% chance everyone dies, 15% chance we get mind uploads or something); 15% chance of #2; 15% chance of #6; low chance of #3 or #4; basically zero chance of #5.

zdgroff @ 2025-09-29T12:50 (+6)

I'd add to this that you do also have the possibility that 1-3 happen, but they happen much later than many people currently think. My personal take is that the probability that 'either AGI's impact comes in more than ten years or it's not that radical' is >50%, certainly far more than 0%.

MichaelDickens @ 2025-07-08T01:31 (+18)

I like this article. I have reservations about some AI-for-animal-welfare interventions, and this article explains my reservations well. Particularly I am glad that you highlighted the importance of (1) potentially short timelines and (2) that the post-TAI world will probably be very weird.

Ariel Simnegar 🔸 @ 2025-07-10T01:10 (+15)

Hi guys, thanks for doing this sprint! I'm planning on making most of my donations to AI for Animals this year, and would appreciate your thoughts on these followup questions:

- You write that "We also think some interventions that aren’t explicitly focused on animals (or on non-human beings) may be more promising for improving animal welfare in the longer-run future than any of the animal-focused projects we considered". Which interventions, and for which reasons?

- Would your tentative opinion be more bullish on AI for Animals' movement-building activities than on work like AnimalHarmBench? Is there anything you think AI for Animals should be doing differently from what they're currently doing?

- Do you know of anyone working (or interested in working) on the movement strategy research questions you discuss?

- Do you have any tentative thoughts on how animal/digital mind advocates should think about allocating resources between (a) influencing the "transformed" post-shift world as discussed in your post and (b) ensuring AI is aligned to human values today?

Constance Li @ 2025-07-14T07:19 (+13)

Hey Ariel! Just to give you a quick update, AI for Animals is rebranding to Sentient Futures to better reflect our overall goal of making the future go well for all sentient beings and avoid committing ourselves to a specific theory of change.

Also, we are running a wargame to figure out how different interventions might end up playing out for animals in the context of AGI. That will give us some insight into what strategies could be promising. In the meantime, we are fairly certain that capacity building (growing a collaborative movement, refining ideas, creating networks with dense talent, and nurturing stakeholder relationships) to be able to adopt/test a promising intervention quickly is a fairly good bet.

MichaelDickens @ 2025-07-19T00:04 (+4)

Also, we are running a wargame to figure out how different interventions might end up playing out for animals in the context of AGI.

This is a great idea!

Ben_West🔸 @ 2025-07-19T16:09 (+6)

These are good questions; unfortunately, I don't feel very qualified to answer them. One thing I did want to say though is that your comment made me realize that we were incorrectly (?) focused on a harm reduction frame. I'm not sure that our suggestions are very good if you want to do something like "maximize the expected number of rats on heroin".

My sense is that most AIxAnimals people are actually mostly focused on the harm reduction stuff so maybe it's fine that we didn't consider upside scenarios very much, but, to the extent that you do want to consider upside for animals, I'm not sure our suggestions hold. (Speaking for myself, not Lizka.)

OscarD🔸 @ 2025-07-12T13:08 (+12)

Growing the field’s resources (more of some kinds of labor, or other resources — compute commitments, money, ..?)

My guess is that for the (perhaps rare) person who has short-ish AI timelines (e.g. <10 years median) and cares in particular about animals, investing money to give later is better than donating now. At least, if we buy the claim that the post-AGI future will likely be pretty weird/wild, and we have a fairly low pure time discount rate, it seems presumptuous to think we will allocate money better now than after we see how things pan out.

And to a (very rough) first approximation, I expect the values of the future to be somewhat in proportion to the wealth/power of present people. Ie if there are more (and more powerful/wealthy) pro-animal people, that seems somewhat robustly good for the future of animals. Though not fully robust.

Alistair Stewart @ 2025-07-08T17:16 (+9)

I'm very pleased more thinking is being done on this – thank you.

I'm not sure I follow this:

Pushing for “animal-friendly” values may be harmful if it skews trajectories that are good for animals

- As an intuition pump, imagine that animal farming (or other functional-animal mistreatment by humans) will be eradicated by default (e.g. because it will stop being economically valuable). If we manage to instill strong animal-related concerns that are not perfectly “wise” (e.g. specific ~beliefs on what is good or bad for farmed animals), then the AI(s) may perpetuate farming in some form even if that choice is unnecessary and harmful.

Would this be an example: we instill a goal in a powerful AI system along the lines of "reduce the suffering of animals who are being farmed". Then the AI system prevents the abolition of animal farming on the grounds that it can't achieve that goal if animal farming ends?

Ben_West🔸 @ 2025-07-10T16:26 (+16)

(Lizka and I have slightly different views here, speaking only for myself.)

This is a good question. The basic point is that, just as lock-in can prevent things from getting worse, it can also prevent things from getting better.

For example, the Universal Declaration of the Rights of Mother Earth says that all beings have the right to "not have its genetic structure modified or disrupted in a manner that threatens its integrity or vital and healthy functioning".

Even though I think this right could reasonably be interpreted as "animal-friendly", my guess is that it would be bad to lock it in because it would prevent us from e.g. genetically modifying farmed animals to feel less pain.

MichaelDickens @ 2025-07-09T19:06 (+8)

Not OP but I would say that if we end up with an ASI that can misunderstand values in that kind of way, then it will almost certainly wipe out humanity anyway.

That is the same category of mistake as "please maximize the profit of this paperclip factory" getting interpreted as "convert all available matter into paperclip machines".

Alistair Stewart @ 2025-07-09T19:25 (+1)

Yes, my example and the paperclip one both seem like a classic case of outer misalignment / reward misspecification.

Vasco Grilo🔸 @ 2025-07-08T09:21 (+5)

Thanks for the post, Lizka and Ben!

Our biggest recommendation is: to the extent that you're targeting animal welfare improvements in the normal era, you probably should discount heavily. Discounting to zero work which pays off in more than ten years is maybe one viable but very simplistic model.

I like Ege Erdil's median time of 20 years until full automation of remote work (bets are welcome), and I would not neglect impact after that. So I think a typical discount rate of 3 % implying 33.3 years of impact is fine. However, I agree with your recommendation conditional on your timelines.

Among the organisations working on invertebrate welfare I recommended, I would only recommend the Shrimp Welfare Project (SWP) ignoring impact after 5 or 10 years. From Vetted Causes' evaluation of SWP, "SWP also informed us that it typically takes 6 to 8 months for SWP to distribute a stunner and have it operational once an agreement has been signed". Assuming 0.583 years (= (6 + 8)/2/12) from agreements to impact, and 1 year from donations to agreements, there would be 1.58 years (= 0.583 + 1) from donations to impact. All my other invertebrate welfare recommendations involve research, which I guess would take significantly longer than 1.58 years from donations to impact. For SWP, there would be 3.42 (= 5 - 1.58) and 8.42 years (= 10 - 1.58) of impact neglecting impact after 5 and 10 years. I assumed "10 years" of impact to estimate the past cost-effectiveness of SWP's Humane Slaughter Initiative (HSI). As a result, supposing SWP's current marginal cost-effectiveness is equal to the past one of HSI including all years of impact, SWP's current marginal cost-effectiveness neglecting impact after 5 and 10 years would be 34.2 % (= 3.42/10) and 84.2 % (= 8.42/10) of the past cost-effectiveness of HSI.

Among the interventions for which I estimated the cost-effectiveness accounting for effects on soil nematodes, mites, and springtails, I would only recommend buying beef ignoring impact after 5 or 10 years. I estimated it is 63.8 % as cost-effective as HSI has been, and I guess it would only take 2 years or so from buying beef to its benefits materialising via an increased agricultural land. For my AI timelines, cost-effectively decreasing human mortality also increases agricultural land cost-effectively. However, for a life expectancy of 70 years, ignoring impact after 5 and 10 years would make the cost-effectiveness 7.14 % (= 5/70) and 14.3 % (= 10/70) as high.
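For anyone who wants to check the arithmetic in this comment, here is a minimal sketch in Python. It assumes impact accrues at a constant rate once it starts, which is the simplification the figures above seem to rely on. The function and variable names are illustrative and do not come from the linked estimates.

```python
def fraction_of_impact_kept(cutoff_years, lag_years, baseline_years):
    """Share of a baseline impact stream that arrives before the cutoff.

    Assumes impact accrues at a constant rate, starts `lag_years` after the
    donation, and that anything after `cutoff_years` is ignored.
    """
    counted_years = max(cutoff_years - lag_years, 0.0)
    return min(counted_years / baseline_years, 1.0)


# A constant annual discount rate r corresponds to roughly 1/r years of impact.
discount_rate = 0.03
effective_years = 1 / discount_rate

# SWP: midpoint of 6 to 8 months from agreement to impact, plus an assumed
# 1 year from donation to agreement (both figures are taken from the comment).
swp_lag = (6 + 8) / 2 / 12 + 1.0
swp_5 = fraction_of_impact_kept(5, swp_lag, baseline_years=10)
swp_10 = fraction_of_impact_kept(10, swp_lag, baseline_years=10)

# Decreasing mortality: benefits spread evenly over a 70-year life expectancy,
# with no lag assumed (a simplification made for this sketch).
life_5 = fraction_of_impact_kept(5, 0.0, baseline_years=70)
life_10 = fraction_of_impact_kept(10, 0.0, baseline_years=70)

print(f"Years of impact at a 3% discount rate: {effective_years:.1f}")
print(f"SWP lag from donation to impact: {swp_lag:.2f} years")
print(f"SWP share kept (5y, 10y cutoff): {swp_5:.1%}, {swp_10:.1%}")
print(f"Mortality share kept (5y, 10y cutoff): {life_5:.2%}, {life_10:.1%}")
```

Changing `cutoff_years` or `swp_lag` shows how sensitive the conclusion is to the chosen horizon: the shorter the cutoff, the more an intervention's delay before impact matters.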

Karen Singleton @ 2025-07-13T03:55 (+3)

Thanks so much for this thoughtful and clear breakdown, it's one of the most useful framings I've seen for thinking about strategy in the face of paradigm shifts.

The distinction between the “normal(ish)” and “transformed” eras is especially helpful, and I appreciate the caution around assuming continuity in our current levers. The idea that most of today’s advocacy tools may simply not survive or translate post-shift feels both sobering and clarifying. The point about needing a compelling story for why any given intervention’s effects would persist beyond the shift is well taken.

I also found the discussion of moral “lock-ins” particularly resonant. The idea that future systems could entrench either better or worse treatment of animals, depending on early influence, feels like a crucial consideration, especially given how sticky some value assumptions can become once embedded in infrastructure or governance frameworks. There’s probably a lot more to map here about what kinds of decisions are most likely to persist and where contingent choices could still go either way.

I’m exploring some of these questions from a different angle, focusing on how animal welfare might (or might not) be integrated into emerging economic paradigms (I hope to post on this soonish) but this post helped clarify the strategic terrain we’re navigating. Thanks again for putting this together.