I'm Buck Shlegeris, I do research and outreach at MIRI, AMA

By Buck @ 2019-11-15T22:44 (+123)

EDIT: I'm only going to answer a few more questions, due to time constraints. I might eventually come back and answer more. I still appreciate getting replies with people's thoughts on things I've written.

I'm going to do an AMA on Tuesday next week (November 19th). Below I've written a brief description of what I'm doing at the moment. Ask any questions you like; I'll respond to as many as I can on Tuesday.

Although I'm eager to discuss MIRI-related things in this AMA, my replies will represent my own views rather than MIRI's, and as a rule I won't be running my answers by anyone else at MIRI. Think of it as a relatively candid and informal Q&A session, rather than anything polished or definitive.

----

I'm a researcher at MIRI. At MIRI I divide my time roughly equally between technical work and recruitment/outreach work.

On the recruitment/outreach side, I do things like the following:

- For the AI Risk for Computer Scientists workshops (which are slightly badly named; we accept some technical people who aren't computer scientists), I handle the intake of participants, and also teach classes and lead discussions on AI risk at the workshops.

- I do most of the technical interviewing for engineering roles at MIRI.

- I manage the AI Safety Retraining Program, in which MIRI gives grants to people to study ML for three months with the goal of making it easier for them to transition into working on AI safety.

- I sometimes do weird things like going on a Slate Star Codex roadtrip, where I led a group of EAs as we travelled along the East Coast going to Slate Star Codex meetups and visiting EA groups for five days.

On the technical side, I mostly work on some of our nondisclosed-by-default technical research; this involves thinking about various kinds of math and implementing things related to the math. Because the work isn't public, there are many questions about it that I can't answer. But this is my problem, not yours; feel free to ask whatever questions you like and I'll take responsibility for choosing to answer or not.

----

Here are some things I've been thinking about recently:

- I think that the field of AI safety is growing in an awkward way. Lots of people are trying to work on it, and many of these people have pretty different pictures of what the problem is and how we should try to work on it. How should we handle this? How should you try to work in a field when at least half the "experts" are going to think that your research direction is misguided?

- The AIRCS workshops that I'm involved with contain a variety of material which attempts to help participants think about the world more effectively. I have thoughts about what's useful and not useful about rationality training.

- I have various crazy ideas about EA outreach. I think the SSC roadtrip was good; I think some EAs who work at EA orgs should consider doing "residencies" in cities without much fulltime EA presence, where they mostly do their normal job but also talk to people.

elle @ 2019-11-19T18:56 (+56)

Reading through some of your blog posts and other writing, I get the impression that you put a lot of weight on how smart people seem to you. You often describe people or ideas as "smart" or "dumb," and you seem interested in finding the smartest people to talk to or bring into EA.

I am feeling a bit confused by my reactions. I think I am both a) excited by the idea of getting the "smart people" together so that they can help each other think through complicated topics and make more good things happen, but b) I feel a bit sad and left out that I am probably not one of the smart people.

Curious about your thoughts on a few things related to this... I'll put my questions as separate comments below.

elle @ 2019-11-19T18:57 (+27)

2) Somewhat relatedly, there seems to be a lot of angst within EA related to intelligence / power / funding / jobs / respect / social status / etc., and I am curious if you have any interesting thoughts about that.

Buck @ 2019-11-19T20:23 (+27)

I feel really sad about it. I think EA should probably have a communication strategy where we say relatively simple messages like "we think talented college graduates should do X and Y", but this causes collateral damage where people who don't succeed at doing X and Y feel bad about themselves. I don't know what to do about this, except to say that I have the utmost respect in my heart for people who really want to do the right thing and are trying their best.

I don't think I have very coherent or reasoned thoughts on how we should handle this, and I try to defer to people who I trust whose judgement on these topics I think is better.

elle @ 2019-11-19T21:56 (+14)

If you feel comfortable sharing: who are the people whose judgment on this topic you think is better?

elle @ 2019-11-19T18:56 (+15)

1) Do you have any advice for people who want to be involved in EA, but do not think that they are smart or committed enough to be engaging at your level? Do you think there are good roles for such people in this community / movement / whatever? If so, what are those roles?

Aidan O'Gara @ 2019-11-20T19:00 (+141)

I used to expect 80,000 Hours to tell me how to have an impactful career. Recently, I've started thinking it's basically my own personal responsibility to figure it out. I think this shift has made me much happier and much more likely to have an impactful career.

80,000 Hours targets the most professionally successful people in the world. That's probably the right idea for them - giving good career advice takes a lot of time and effort, and they can't help everyone, so they should focus on the people with the most career potential.

But, unfortunately for most EAs (myself included), the nine priority career paths recommended by 80,000 Hours are some of the most difficult and competitive careers in the world. If you’re among the 99% of people who are not Google programmer / top half of Oxford / Top 30 PhD-level talented, I’d guess you have slim-to-none odds of succeeding in any of them. The advice just isn't tailored for you.

So how can the vast majority of people have an impactful career? My best answer: A lot of independent thought and planning. Your own personal brainstorming and reading and asking around and exploring, not just following stock EA advice. 80,000 Hours won't be a gospel that'll give all the answers; the difficult job of finding impactful work falls to the individual.

I know that's pretty vague, much more an emotional mindset than a tactical plan, but I'm personally really happy I've started thinking this way. I feel less status anxiety about living up to 80,000 Hours's recommendations, and I'm thinking much more creatively and concretely about how to do impactful work.

More concretely, here's some ways you can do that:

- Think of easier versions of the 80,000 Hours priority paths. Maybe you'll never work at OpenPhil or GiveWell, but can you work for a non-EA grantmaker reprioritizing their giving to more effective areas? Maybe you won't end up in the US Presidential Cabinet, but can you bring attention to AI policy as a congressional staffer or civil servant? (Edit: I forgot, 80k recommends congressional staffing!) Maybe you won't run operations at CEA, but can you help run a local EA group?

- The 80,000 Hours job board actually has plenty of jobs that aren’t on their priority paths, and I think some of them are much more accessible for a wider audience.

- 80,000 Hours tries to answer the question “Of all the possible careers people can have, which ones are the most impactful?” That’s the right question for them, but the wrong question for an individual. For any given person, I think it’s probably much more useful to think, “What potentially impactful careers could I plausibly enter, and of those, which are the most impactful?” Start with what you already have - skills, connections, experience, insights - and think outwards from there: how you can transform what you already have into an impactful career?

- There are tons of impactful charities out there. GiveWell has identified some of the top few dozen. But if you can get a job at the 500th most effective charity in the world, you’re still making a really important impact, and it’s worth figuring out how to do that.

- Talk to people working in the most important problems who aren't top 1% of professional success - seeing how people like you have an impact can be really motivating and informative.

- Personal donations can be really impactful - not earning to give millions in quant trading, just donating a reasonable portion of your normal-sized salary, wherever it is that you work.

- Convincing people you know to join EA is also great - you can talk to your friends about EA, or attend/help out at a local EA group. Converting more people to EA just multiplies your own impact.

Don't let the fact that Bill Gates saved a million lives keep you from saving one. If you put some hard work into it, you can make a hell of a difference to a whole lot of people.

brentonmayer @ 2019-11-27T22:12 (+53)

Hi Aidan,

I’m Brenton from 80,000 Hours - thanks for writing this up! It seems really important that people don’t think of us as “tell[ing] them how to have an impactful career”. It sounds absolutely right to me that having a high impact career requires “a lot of independent thought and planning” - career advice can’t be universally applied.

I did have a few thoughts, which you could consider incorporating if you end up making a top level post. The most substantive two are:

- Many of the priority paths are broader than you might be thinking.

- A significant amount of our advice is designed to help people think through how to approach their careers, and will be useful regardless of whether they’re aiming for a priority path.

Many of the priority paths are broader than you might be thinking:

Most people won’t be able to step into an especially high impact role directly out of undergrad, so unsurprisingly, many of the priority paths require people to build up career capital before they can get into high impact positions. We’d think of people who are building up career capital focused on (say) AI policy as being ‘on a priority path’. We also think of people who aren’t in the most competitive positions as being within the path

For instance, let’s consider AI policy. We think that path includes graduate school, all the options outlined in our writeup on US AI policy and the 161 roles currently on the job board under the relevant filter. It’s also worth remembering that the job board has still left most of the relevant roles out: none of them are congressional staffers for example, which we’d also think of as under this priority path.

A significant amount of our advice is designed to help people think through how to approach their careers, and will be useful regardless of whether they’re aiming for a priority path.

In our primary articles on how to plan your career, we spend a lot of time talking about general career strategy and ways to generate options. The articles encourage people to go through a process which should generate high impact options, of which only some will be in the priority paths:

- The career strategy and planning and decision making sections of key ideas

- This article on high impact careers

- Career planning

Unfortunately, there’s something in the concreteness of a list of top options which draws people in particularly strongly. This is a communication challenge that we’ve worked on a bit, but don’t think we have a great answer to yet. We discussed this in our ‘Advice on how to read our advice’. In the future we’ll add some more ‘niche’ paths, which may help somewhat.

A few more minor points:

- Your point about Bill Gates was really well put. It reminded me of my colleague Michelle’s post on ‘Keeping absolutes in mind’, which you might enjoy reading.

- We don’t think that the priority paths are the only route through which people can affect the long term future.

- I found the tone of this comment generally great, and two of my colleagues commented the same. I appreciate that going through this shift you’ve gone through would have been hard and it’s really impressive that you’ve come out of it with such a balanced view, including being able to acknowledge the tradeoffs that we face in what we work on. Thank you for that.

- If you make a top level post (which I’d encourage you to do), feel free to quote any part of this comment.

Cheers, Brenton

Khorton @ 2019-11-20T23:00 (+51)

I think this comment is really lovely, and a very timely message. I'd support it being turned into a top-level post so more people can see it, especially if you have anything more to add.

jpaddison @ 2019-11-21T03:58 (+11)

Seconded.

Aidan O'Gara @ 2019-11-21T20:57 (+17)

Thank you both very much, I will do that, and I almost definitely wouldn't have without your encouragement.

If anyone has more thoughts on the topic, please comment or reach out to me, I'd love to incorporate them into the top-level post.

DavidNash @ 2019-11-21T11:01 (+10)

I think similar areas were covered in these two posts as well 80,000 Hours - how to read our advice and Thoughts on 80,000 Hours’ research that might help with job-search frustrations.

Sean_o_h @ 2019-11-21T10:14 (+35)

I agree this is a very helpful comment. I would add: these roles in my view are not *lesser* in any sense, for a range of reasons and I would encourage people not to think of them in those terms.

- You might have a bigger impact on the margins being the only - or one of the first few - people thinking in EA terms in a philanthropic foundation than by adding to the pool of excellence at OpenPhil. This goes for any role that involves influencing how resources are allocated - which is a LOT, in charity, government, industry, academic foundations etc.

- You may not be in the presidential cabinet, or a spad to the UK prime minister, but those people are supported and enabled by people building up the resources, capacity, overton window expansion elsewhere in government and civil service. The 'senior person' on their own may not be able to achieve purchase with key policy ideas and influence.

- A lot of xrisk research, from biosecurity to climate change, draws on and depends on a huge body of work on biology, public policy, climate science, renewable energy, insulation in homes, and much more. Often there are gaps in research on extreme scenarios due to lack of incentives for this kind of work, and other reasons - and this may make it particularly impactful at times. But that specific work can't be done well without drawing on all the underlying work. E.g., biorisk mitigation needs not just the people figuring out how to defend against the extreme scenarios, but also everything from people testing birds in vietnam for H5N1 and seals in the north sea for H7, to people planning for overflow capacity in regional hospitals, to people pushing for the value of preparedness funds in the reinsurance industry to much more. Same for climate+environment, same will be true for AI policy etc.

- I think there's probably a good case to be made that in many or perhaps most instances the most useful place for the next generally capable EA to be is *not* an EA org. And for all 80k's great work, they can't survey and review everything, nor tailor to personal fit for the thousands, or hundreds of thousands of different-skillset people who can play a role in making the future better.

For EA to really make the future better to the extent that it has the potential, it's going to need a *much* bigger global team. And that team's going to need to be interspersed everywhere, sometimes doing glamorous stuff, sometimes doing more standard stuff that is just as important in that it makes the glamorous stuff possible. To annoy everyone with a sports analogy, the defense and midfield positions are every bit as important as the glamorous striker positions, and if you've got a team made up primarily of star strikers and wannabe star strikers, that team's going to underperform.

Milan_Griffes @ 2019-11-21T18:14 (+6)

To annoy everyone with a sports analogy, the defense and midfield positions are every bit as important as the glamorous striker positions, and if you've got a team made up primarily of star strikers and wannabe star strikers, that team's going to underperform.

But the marginal impact of becoming a star striker is so high!

(Just kidding – this is a great analogy & highlights a big problem with reasoning on the margin + focusing on maximizing individual impact.)

jpaddison @ 2019-11-21T23:15 (+11)

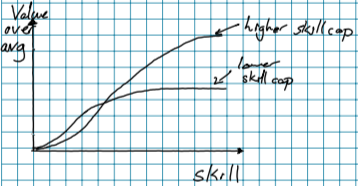

I also like the analogy, let's run with it. Suppose I'm reasoning from the point of view of the movement as a whole, and we're trying to put together a soccer team. Suppose also that there are two types of positions, midfield and striker. I'm not sure if this is true for strikers in what I would call soccer, but suppose the striker has a higher skillcap than midfield.[1] I'll define skillcap as the amount of skill with the position before the returns begin to diminish.

Where skill is some product of standard deviation of innate skill and hours practiced.

Back to the problem of putting together a soccer team, if you're starting with a bunch of players of unknown innate skill, you would get a higher expected value to tell 80% of your players to train to be strikers, and 20% to be midfielders. Because you have a smaller pool, your midfielders will have less innate talent for the position. You can afford to lose this however, as the effect will be small compared to the gain in the increased performance of the strikers.

That's not to say that you should fill your entire team with wannabe strikers. When you select your team you'll undoubtedly leave out some very dedicated strikers in favor of someone who trained for midfield. Still, compared to the percentage that end up playing on the team, the people you'd want training for the role leans more towards the high-skillcap positions.

There are all sorts of ways this analogy doesn't apply directly to the real world, but it might help pump intuitions.

[1] For American football, the quarterback position definitely exhibits this effect. The effect can be seen clearly in this list of highest-paid players.

Milan_Griffes @ 2019-11-21T23:37 (+6)

There are all sorts of ways this analogy doesn't apply directly to the real world, but it might help pump intuitions.

Yeah, I think this model misses that people who are aiming to be strikers tend to have pretty different dispositions than people aiming to be midfielders. (And so filling a team mostly with intending-to-be-strikers could have weird effects on team cohesion & function.)

Interesting to think about how Delta Force, SEAL Team Six, etc. manage this, as they select for very high-performing recruits (all strikers) then meld them into cohesive teams. I believe they do it via:

1. having a very large recruitment pool

2. intense filtering out of people who don't meet their criteria

3. breaking people down psychologically + cultivating conformity during training

I found it interesting to cash this out more... thanks!

jpaddison @ 2019-11-21T23:56 (+3)

Ah, so like, in the "real world", you don't have a set of people, you end up recruiting a training class of 80% would-be-strikers, which influences the culture compared to if you recruited for the same breakdown as the eventually-selected-team?

Sean_o_h @ 2019-11-22T17:58 (+14)

I really enjoy the extent to which you've both taken the ball and run with it ;)

Elityre @ 2019-11-22T00:47 (+20)

I think a lot of this is right and important, but I especially love:

Don't let the fact that Bill Gates saved a million lives keep you from saving one.

We're all doing the best we can with the privileges we were blessed with.

Buck @ 2019-11-20T06:30 (+10)

"Do you have any advice for people who want to be involved in EA, but do not think that they are smart or committed enough to be engaging at your level?"--I just want to say that I wouldn't have phrased it quite like that.

One role that I've been excited about recently is making local groups be good. I think that having better local EA communities might be really helpful for outreach, and lots of different people can do great work with this.

elle @ 2019-11-20T09:47 (+3)

"...but do not think that they are smart or committed enough to be engaging at your level?" was intended to be from a generic insecure (or realistic) EA's perspective, not yours. Sorry for my confusing phrasing.

elle @ 2019-11-19T18:58 (+11)

4) You seem like you have had a natural strong critical thinking streak since you were quite young (e.g., you talk about thinking that various mainstream ideas were dumb). Any unique advice for how to develop this skill in people who do not have it naturally?

Buck @ 2019-11-19T20:41 (+27)

For the record, I think that I had mediocre judgement in the past and did not reliably believe true things, and I sometimes had made really foolish decisions. I think my experience is mostly that I felt extremely alienated from society, which meant that I looked more critically on many common beliefs than most people do. This meant I was weird in lots of ways, many of which were bad and some of which were good. And in some cases this meant that I believed some weird things that feel like easy wins, eg by thinking that people were absurdly callous about causing animal suffering.

My judgement improved a lot from spending a lot of time in places with people with good judgement who I could learn from, eg Stanford EA, Triplebyte, the more general EA and rationalist community, and now MIRI.

I feel pretty unqualified to give advice on critical thinking, but here are some possible ideas, which probably aren't actually good:

- Try to learn simple models of the world and practice applying them to claims you hear, and then being confused when they don't match. Eg learn introductory microeconomics and then whenever you hear a claim about the world that intro micro has an opinion on, try to figure out what the simple intro micro model would claim, and then inasmuch as the world doesn't seem to look like intro micro would predict, think "hmm this is confusing" and then try to figure out what about the world might have caused this. When I developed this habit, I started noticing that lots of claims people make about the world are extremely implausible, and when I looked into the facts more I found that intro micro seemed to back me up. To learn intro economics, I enjoyed the Cowen and Tabarrok textbook.

- I think Katja Grace is a master of the "make simple models and then get confused when the world doesn't match them" technique. See her novel opinions page for many examples.

- Another subject where I've been doing this recently is evolutionary biology--I've learned to feel confused whenever anyone makes any claims about group selection, and I plan to learn how group selection works, so that when people make claims about it I can assess them accurately.

- Try to find the simplest questions whose answers you don't know, in order to practice noticing when you believe things for bad reasons.

- For example, some of my favorite physics questions:

- Why isn't the Sun blurry?

- What is the fundamental physical difference between blue and green objects? Like, what equations do I solve to find out that an object is blue?

- If energy is conserved, why we so often make predictions about the world by assuming that energy is minimized?

- I think reading Thinking Physics might be helpful at practicing noticing your own ignorance, but I'm not sure.

- Try to learn a lot about specific subjects sometimes, so that you learn what it's like to have detailed domain knowledge.

elle @ 2019-11-19T18:58 (+11)

3) I've seen several places where you criticize fellow EAs for their lack of engagement or critical thinking. For example, three years ago, you wrote:

I also have criticisms about EAs being overconfident and acting as if they know way more than they do about a wide variety of things, but my criticisms are very different from [Holden's criticisms]. For example, I’m super unimpressed that so many EAs didn’t know that GiveWell thinks that deworming has a relatively low probability of very high impact. I’m also unimpressed by how many people are incredibly confident that animals aren’t morally relevant despite knowing very little about the topic.

Do you think this has improved at all? And what are the current things that you are annoyed most EAs do not seem to know or engage with?

Buck @ 2019-11-19T20:28 (+12)

I no longer feel annoyed about this. I'm not quite sure why. Part of it is probably that I'm a lot more sympathetic when EAs don't know things about AI safety than global poverty, because learning about AI safety seems much harder, and I think I hear relatively more discussion of AI safety now compared to three years ago.

One hypothesis is that 80000 Hours has made various EA ideas more accessible and well-known within the community, via their podcast and maybe their articles.

edoarad @ 2019-11-18T05:47 (+47)

In the 80k podcast episode with Hilary Greaves she talks about decision theory and says:

Hilary Greaves: Then as many of your listeners will know, in the space of AI research, people have been throwing around terms like ‘functional decision theory’ and ‘timeless decision theory’ and ‘updateless decision theory’. I think it’s a lot less clear exactly what these putative alternatives are supposed to be. The literature on those kinds of decision theories hasn’t been written up with the level of precision and rigor that characterizes the discussion of causal and evidential decision theory. So it’s a little bit unclear, at least to my likes, whether there’s genuinely a competitor to decision theory on the table there, or just some intriguing ideas that might one day in the future lead to a rigorous alternative.

I understand from that that there is little engagement of MIRI with the academia. What is more troubling for me is that it seems that the cases for the major decision theories are looked upon with skepticism from academic experts.

Do you think that is really the case? How do you respond to that? It would personally feel much better if I knew that there are some academic decision theorists who are exited about your research, or a compelling explanation of a systemic failure that explains this which can be applied to MIRI's work specifically.

[The transition to non-disclosed research happend after the interview]

Buck @ 2019-11-19T03:09 (+43)

Yeah, this is an interesting question.

I’m not really sure what’s going on here. When I read critiques of MIRI-style decision theories (eg from Will or from Wolfgang Schwartz), I feel very unpersuaded by them. This leaves me in a situation where my inside views disagree with the views of the most obvious class of experts, which is always tricky.

- When I read those criticisms by Will MacAskill and Wolfgang Schwartz, I feel like I understand their criticisms and find them unpersuasive, as opposed to not understanding their criticisms. Also, I feel like they don’t understand some of the arguments and motivations for FDT. I feel a lot better disagreeing with experts when I think I understand their arguments and when I think I can see particular mistakes that they’re making. (It’s not obvious that this is the right epistemic strategy, for reasons well articulated by Gregory Lewis here.)

- Paul’s comments on this resolved some of my concerns here. He thinks that the disagreement is mostly about what questions decision theory should be answering. He thinks that the updateless decision theories are obviously more suitable to building AI than eg CDT or EDT.

- I think it’s plausible that Paul is being overly charitable to decision theorists; I’d love to hear whether skeptics of updateless decision theories actually agree that you shouldn’t build a CDT agent. (Also, when you ask a CDT agent what kind of decision theory it wants to program into an AI, you get a class of decision theory called "Son of CDT", which isn't UDT.)

- I think there’s a systematic pattern where philosophers end up being pretty ineffective at answering the philosophy questions that I care about (based eg on my experience seeing the EA community punch so far above its weight thinking about ethics), and so I’m not very surprised if it turns out that in this specific case, the philosophy community has priorities that don’t match mine.

- I think there’s also a pattern where philosophers have some basic disagreements with me, eg about functionalism and how much math intuitions should feed into our philosophical intuitions. This decision theory disagreement reminds me of that disagreement.

- Schwartz has a couple of complaints that the FDT paper doesn’t engage properly with the mainstream philosophy literature (eg the Justin Fisher and the David Gauthier papers). My guess is that these complaints are completely legitimate.

On his blog, Scott Aaronson does a good job of describing what I think might be a key difference here:

But the basic split between Many-Worlds and Copenhagen (or better: between Many-Worlds and “shut-up-and-calculate” / “QM needs no interpretation” / etc.), I regard as coming from two fundamentally different conceptions of what a scientific theory is supposed to do for you. Is it supposed to posit an objective state for the universe, or be only a tool that you use to organize your experiences?

Also, are the ultimate equations that govern the universe “real,” while tables and chairs are “unreal” (in the sense of being no more than fuzzy approximate descriptions of certain solutions to the equations)? Or are the tables and chairs “real,” while the equations are “unreal” (in the sense of being tools invented by humans to predict the behavior of tables and chairs and whatever else, while extraterrestrials might use other tools)? Which level of reality do you care about / want to load with positive affect, and which level do you want to denigrate?

My guess is that the factor which explains academic unenthusiasm for our work is that decision theorists are more of the “tables and chairs are real” school than the “equations are real” school--they aren’t as oriented by the question of “how do I write down a decision theory which would have good outcomes if I created an intelligent agent which used it”, and they don’t have as much of an intuition as I do that that kind of question is fundamentally simple and should have a lot of weight in your choices about how to think about reality.

---

I am really very curious to hear what people (eg edoarad) think of this answer.

bmg @ 2019-11-20T20:21 (+30)

I think it’s plausible that Paul is being overly charitable to decision theorists; I’d love to hear whether skeptics of updateless decision theories actually agree that you shouldn’t build a CDT agent.

FWIW, I could probably be described as a "skeptic" of updateless decision theories; I’m pretty sympathetic to CDT. But I also don’t think we should build AI systems that consistently take the actions recommended by CDT. I know at least a few other people who favor CDT, but again (although small sample size) I don’t think any of them advocate for designing AI systems that consistently act in accordance with CDT.

I think the main thing that’s going on here is that academic decision theorists are primarily interested in normative principles. They’re mostly asking the question: “What criterion determines whether or not a decision is ‘rational’?” For example, standard CDT claims that an action is rational only if it’s the action that can be expected to cause the largest increase in value.

On the other hand, AI safety researchers seem to be mainly interested in a different question: “What sort of algorithm would it be rational for us to build into an AI system?” The first question doesn’t seem very relevant to the second one, since the different criteria of rationality proposed by academic decision theorists converge in most cases. For example: No matter whether CDT, EDT, or UDT is correct, it will not typically be rational to build a two-boxing AI system. It seems to me, then, that it's probably not very pressing for the AI safety community to think about the first question or engage with the academic decision theory literature.

At the same time, though, AI safety writing on decision theory sometimes seems to ignore (or implicitly deny?) the distinction between these two questions. For example: The FDT paper seems to be pitched at philosophers and has an abstract that frames the paper as an exploration of “normative principles.” I think this understandably leads philosophers to interpret FDT as an attempt to answer the first question and to criticize it on those grounds.

they aren’t as oriented by the question of “how do I write down a decision theory which would have good outcomes if I created an intelligent agent which used it”

I would go further and say that (so far as I understand the field) most academic decisions theorists aren't at all oriented by this question. I think the question they're asking is again mostly independent. I'm also not sure it would even make sense to talk about "using" a "decision theory" in this context, insofar as we're conceptualizing decision theories the way most academic decision theorists do (as normative principles). Talking about "using" CDT in this context is sort of like talking about "using" deontology.

[[EDIT: See also this short post for a better description of the distinction between a "criterion of rightness" and a "decision procedure." Another way to express my impression of what's going on is that academic decision theorists are typically talking about critera of rightness and AI safety decision theorists are typically (but not always) talking about decision procedures.]]

RobBensinger @ 2019-11-21T22:41 (+20)

The comments here have been very ecumenical, but I'd like to propose a different account of the philosophy/AI divide on decision theory:

1. "What makes a decision 'good' if the decision happens inside an AI?" and "What makes a decision 'good' if the decision happens inside a brain?" aren't orthogonal questions, or even all that different; they're two different ways of posing the same question.

MIRI's AI work is properly thought of as part of the "success-first decision theory" approach in academic decision theory, described by Greene (2018) (who also cites past proponents of this way of doing decision theory):

[...] Consider a theory that allows the agents who employ it to end up rich in worlds containing both classic and transparent Newcomb Problems. This type of theory is motivated by the desire to draw a tighter connection between rationality and success, rather than to support any particular account of expected utility. We might refer to this type of theory as a "success-first" decision theory.

[...] The desire to create a closer connection between rationality and success than that offered by standard decision theory has inspired several success-first decision theories over the past three decades, including those of Gauthier (1986), McClennen (1990), and Meacham (2010), as well as an influential account of the rationality of intention formation and retention in the work of Bratman (1999). McClennen (1990: 118) writes: “This is a brief for rationality as a positive capacity, not a liability—as it must be on the standard account.” Meacham (2010: 56) offers the plausible principle, “If we expect the agents who employ one decision making theory to generally be richer than the agents who employ some other decision making theory, this seems to be a prima facie reason to favor the first theory over the second.” And Gauthier (1986: 182–3) proposes that “a [decision-making] disposition is rational if and only if an actor holding it can expect his choices to yield no less utility than the choices he would make were he to hold any alternative disposition.” In slogan form, Gauthier (1986: 187) calls the idea “utility-maximization at the level of dispositions,” Meacham (2010: 68–9) a “cohesive” decision theory, McClennen (1990: 6–13) a form of “pragmatism,” and Bratman (1999: 66) a “broadly consequentialist justification” of rational norms.

[...] Accordingly, the decision theorist’s job is like that of an engineer in inventing decision theories, and like that of a scientist in testing their efficacy. A decision theorist attempts to discover decision theories (or decision “rules,” “algorithms,” or “processes”) and determine their efficacy, under certain idealizing conditions, in bringing about what is of ultimate value.

Someone who holds this view might be called a methodological hypernaturalist, who recommends an experimental approach to decision theory. On this view, the decision theorist is a scientist of a special sort, but their goal should be broadly continuous with that of scientific research. The goal of determining efficacy in bringing about value, for example, is like that of a pharmaceutical scientist attempting to discover the efficacy of medications in treating disease.

For game theory, Thomas Schelling (1960) was a proponent of this view. The experimental approach is similar to what Schelling meant when he called for “a reorientation of game theory” in Part 2 of A Strategy of Conflict. Schelling argues that a tendency to focus on first principles, rather than upshots, makes game-theoretic theorizing shockingly blind to rational strategies in coordination problems.

The FDT paper does a poor job of contextualizing itself because it was written by AI researchers who are less well-versed with the philosophical literature.

MIRI's work is both advocating a particular solution to the question "what kind of decision theory satisfies the 'success' criterion?", and lending some additional support to the claim that "success-first" is a coherent and reasonable criterion for decision theorists to orient towards. (In a world without ideas like UDT, it was harder to argue that we should try to reduce decision theory to 'what decision-making approach yields the best utility?', since neither CDT nor EDT strictly outperforms the other; whereas there's a strong case that UDT does strictly outperform both CDT and EDT, to the extent it's possible for any decision theory to strictly outperform another; though there may be even-better approaches.)

You can go with Paul and say that a lot of these distinctions are semantic rather than substantive -- that there isn't a true, ultimate, objective answer to the question of whether we should evaluate decision theories by whether they're successful, vs. some other criterion. But dissolving contentious arguments and showing why they're merely verbal is itself a hallmark of analytic philosophy, so this doesn't do anything to make me think that these issues aren't the proper province of academic decision theory.

2. Rather than operating in separate magisteria, people like Wei Dai are making contrary claims about how humans should make decisions. This is easiest to see in contexts where a future technology comes along: if whole-brain emulation were developed tomorrow and it was suddenly trivial to put CDT proponents in literal twin prisoner's dilemmas, the CDT recommendation to defect (one-box, etc.) suddenly makes a very obvious and real difference.

I claim (as someone who thinks UDT/FDT is correct) that the reason it tends to be helpful to think about advanced technologies is that it draws out the violations of naturalism that are often implicit in how we talk about human reasoning. Our native way of thinking about concepts like "control," "choice," and "counterfactual" tends to be confused, and bringing in things like predictors and copies of our reasoning draws out those confusions in much the same way that sci-fi thought experiments and the development of new technologies have repeatedly helped clarify confused thinking in philosophy of consciousness, philosophy of personal identity, philosophy of computation, etc.

3. Quoting Paul:

Most causal decision theorists would agree that if they had the power to stop doing the right thing, they should stop taking actions which are right. They should instead be the kind of person that you want to be.

And so there, again, I agree it has implications, but I don't think it's a question of disagreement about truth. It's more a question of, like: you're actually making some cognitive decisions. How do you reason? How do you conceptualize what you're doing?"

I would argue that most philosophers who feel "trapped by rationality" or "unable to stop doing what's 'right,' even though they know they 'should,'" could in fact escape the trap if they saw the flaws in whatever reasoning process led them to their current idea of "rationality" in the first place. I think a lot of people are reasoning their way into making worse decisions (at least in the future/hypothetical scenarios noted above, though I would be very surprised if correct decision-theoretic views had literally no implications for everyday life today) due to object-level misconceptions about the prescriptions and flaws of different decision theories.

And all of this strikes me as very much the bread and butter of analytic philosophy. Philosophers unpack and critique the implicit assumptions in different ways of modeling the world (e.g., "of course I can 'control' physical outcomes but can't 'control' mathematical facts", or "of course I can just immediately tell that I'm in the 'real world'; a simulation of me isn't me, or wouldn't be conscious, etc."). I think MIRI just isn't very good at dialoguing with philosophers, and has had too many competing priorities to put the amount of effort into a scholarly dialogue that I wish were being made.

4. There will obviously be innumerable practical differences between the first AGI systems and human decision-makers. However, putting a huge amount of philosophical weight on this distinction will tend to violate naturalism: ceteris paribus, changing whether you run a cognitive process in carbon or in silicon doesn't change whether the process is doing the right thing or working correctly.

E.g., the rules of arithmetic are the same for humans and calculators, even though we don't use identical algorithms to answer particular questions. Humans tend to correctly treat calculators naturalistically: we often think of them as an extension of our own brains and reasoning, we freely switch back and forth between running a needed computation in our own brain vs. in a machine, etc. Running a decision-making algorithm in your brain vs. in an AI shouldn't be fundamentally different, I claim.

5. For similar reasons, a naturalistic way of thinking about the task "delegating a decision-making process to a reasoner outside your own brain" will itself not draw a deep philosophical distinction between "a human building an AI to solve a problem" and "an AI building a second AI to solve a problem" or for that matter "an agent learning over time and refining its own reasoning process so it can 'delegate' to its future self".

There will obviously be practical differences, but there will also be practical differences between two different AI designs. We don't assume that switching to a different design within AI means that the background rules of decision theory (or arithmetic, etc.) go out the window.

(Another way of thinking about this is that the distinction between "natural" and "artificial" intelligence is primarily a practical and historical one, not one that rests on a deep truth of computer science or rational agency; a more naturalistic approach would think of humans more as a weird special case of the extremely heterogeneous space of "(A)I" designs.)

bmg @ 2019-11-22T14:01 (+19)

"What makes a decision 'good' if the decision happens inside an AI?" and "What makes a decision 'good' if the decision happens inside a brain?" aren't orthogonal questions, or even all that different; they're two different ways of posing the same question.

I actually agree with you about this. I have in mind a different distinction, although I might not be explaining it well.

Here’s another go:

Let’s suppose that some decisions are rational and others aren’t. We can then ask: What is it that makes a decision rational? What are the necessary and/or sufficient conditions? I think that this is the question that philosophers are typically trying to answer. The phrase “decision theory” in this context typically refers to a claim about necessary and/or sufficient conditions for a decision being rational. To use different jargon, in this context a “decision theory” refers to a proposed “criterion of rightness.”

When philosophers talk about “CDT,” for example, they are typically talking about a proposed criterion of rightness. Specifically, in this context, “CDT” is the claim that a decision is rational only if taking it would cause the largest expected increase in value. To avoid any ambiguity, let’s label this claim R_CDT.

We can also talk about “decision procedures.” A decision procedure is just a process or algorithm that an agent follows when making decisions.

For each proposed criterion of rightness, it’s possible to define a decision procedure that only outputs decisions that fulfill the criterion. For example, we can define P_CDT as a decision procedure that involves only taking actions that R_CDT claims are rational.

My understanding is that when philosophers talk about “CDT,” they primarily have in mind R_CDT. Meanwhile, it seems like members of the rationalist or AI safety communities primarily have in mind P_CDT.

The difference matters, because people who believe that R_CDT is true don’t generally believe that we should build agents that implement P_CDT or that we should commit to following P_CDT ourselves. R_CDT claims that we should do whatever will have the best effects -- and, in many cases, building agents that follow a decision procedure other than P_CDT is likely to have the best effects. More generally: Most proposed criteria of rightness imply that it can be rational to build agents that sometimes behave irrationally.

MIRI's AI work is properly thought of as part of the "success-first decision theory" approach in academic decision theory.

One possible criterion of rightness, which I’ll call R_UDT, is something like this: An action is rational only if it would have been chosen by whatever decision procedure would have produced the most expected value if consistently followed over an agent’s lifetime. For example, this criterion of rightness says that it is rational to one-box in the transparent Newcomb scenario because agents who consistently follow one-boxing policies tend to do better over their lifetimes.

I could be wrong, but I associate the “success-first approach” with something like the claim that R_UDT is true. This would definitely constitute a really interesting and significant divergence from mainstream opinion within academic decision theory. Academic decision theorists should care a lot about whether or not it’s true.

But I’m also not sure if it matters very much, practically, whether R_UDT or R_CDT is true. It’s not obvious to me that they recommend building different kinds of decision procedures into AI systems. For example, both seem to recommend building AI systems that would one-box in the transparent Newcomb scenario.

You can go with Paul and say that a lot of these distinctions are semantic rather than substantive -- that there isn't a true, ultimate, objective answer to the question of whether we should evaluate decision theories by whether they're successful, vs. some other criterion.

I disagree that any of the distinctions here are purely semantic. But one could argue that normative anti-realism is true. In this case, there wouldn’t really be any such thing as the criterion of rightness for decisions. Neither R_CDT nor R_UDT nor any other proposed criterion would be “correct.”

In this case, though, I think there would be even less reason to engage with academic decision theory literature. The literature would be focused on a question that has no real answer.

[[EDIT: Note that Will also emphasizes the importance of the criterion-of-rightness vs. decision-procedure distinction in his critique of the FDT paper: "[T]hey’re [most often] asking what the best decision procedure is, rather than what the best criterion of rightness is... But, if that’s what’s going on, there are a whole bunch of issues to dissect. First, it means that FDT is not playing the same game as CDT or EDT, which are proposed as criteria of rightness, directly assessing acts. So it’s odd to have a whole paper comparing them side-by-side as if they are rivals."]]

RobBensinger @ 2019-11-22T22:23 (+12)

I agree that these three distinctions are important:

- "Picking policies based on whether they satisfy a criterion X" vs. "Picking policies that happen to satisfy a criterion X". (E.g., trying to pick a utilitarian policy vs. unintentionally behaving utilitarianly while trying to do something else.)

- "Trying to follow a decision rule Y 'directly' or 'on the object level'" vs. "Trying to follow a decision rule Y by following some other decision rule Z that you think satisfies Y". (E.g., trying to naïvely follow utilitarianism without any assistance from sub-rules, heuristics, or self-modifications, vs. trying to follow utilitarianism by following other rules or mental habits you've come up with that you expected to make you better at selecting utilitarianism-endorsed actions.)

- "A decision rule that prescribes outputting some action or policy and doesn't care how you do it" vs. "A decision rule that prescribes following a particular set of cognitive steps that will then output some action or policy". (E.g., a rule that says 'maximize the aggregate welfare of moral patients' vs. a specific mental algorithm intended to achieve that end.)

The first distinction above seems less relevant here, since we're mostly discussing AI systems and humans that are self-aware about their decision criteria and explicitly "trying to do what's right".

As a side-note, I do want to emphasize that from the MIRI cluster's perspective, it's fine for correct reasoning in AGI to arise incidentally or implicitly, as long as it happens somehow (and as long as the system's alignment-relevant properties aren't obscured and the system ends up safe and reliable).

The main reason to work on decision theory in AI alignment has never been "What if people don't make AI 'decision-theoretic' enough?" or "What if people mistakenly think CDT is correct and so build CDT into their AI system?" The main reason is that the many forms of weird, inconsistent, and poorly-generalizing behavior prescribed by CDT and EDT suggest that there are big holes in our current understanding of how decision-making works, holes deep enough that we've even been misunderstanding basic things at the level of "decision-theoretic criterion of rightness".

It's not that I want decision theorists to try to build AI systems (even notional ones). It's that there are things that currently seem fundamentally confusing about the nature of decision-making, and resolving those confusions seems like it would help clarify a lot of questions about how optimization works. That's part of why these issues strike me as natural for academic philosophers to take a swing at (while also being continuous with theoretical computer science, game theory, etc.).

The second distinction ("following a rule 'directly' vs. following it by adopting a sub-rule or via self-modification") seems more relevant. You write:

My understanding is that when philosophers talk about “CDT,” they primarily have in mind R_CDT. Meanwhile, it seems like members of the rationalist or AI safety communities primarily have in mind P_CDT.

The difference matters, because people who believe that R_CDT is true don’t generally believe that we should build agents that implement P_CDT or that we should commit to following P_CDT ourselves.

Far from being a distinction proponents of UDT/FDT neglect, this is one of the main grounds on which UDT/FDT proponents criticize CDT (from within the "success-first" tradition). This is because agents that are reflectively inconsistent in the manner of CDT -- ones that take actions they know they'll regret taking, wish they were following a different decision rule, etc. -- can be money-pumped and can otherwise lose arbitrary amounts of value.

A human following CDT should endorse "stop following CDT," since CDT isn't self-endorsing. It's not even that they should endorse "keep following CDT, but adopt a heuristic or sub-rule that helps us better achieve CDT ends"; they need to completely abandon CDT even at the meta-level of "what sort of decision rule should I follow?" and modify themselves into purely following an entirely new decision rule, or else they'll continue to perform poorly by CDT's lights.

The decision rule that CDT does endorse loses a lot of the apparent elegance and naturalness of CDT. This rule, "son-of-CDT", is roughly:

- Have whatever disposition-to-act gets the most utility, unless I'm in future situations like "a twin prisoner's dilemma against a perfect copy of my future self where the copy was forked from me before I started following this rule", in which case ignore my correlation with that particular copy and make decisions as though our behavior is independent (while continuing to take into account my correlation with any copies of myself I end up in prisoner's dilemmas with that were copied from my brain after I started following this rule).

The fact that CDT doesn't endorse itself (while other theories do), the fact that it needs self-modification abilities in order to perform well by its own lights (and other theories don't), and the fact that the theory it endorses is a strange frankenstein theory (while there are simpler, cleaner theories available) would all be strikes against CDT on their own.

But this decision rule CDT endorses also still performs suboptimally (from the perspective of success-first decision theory). See the discussion of the Retro Blackmail Problem in "Toward Idealized Decision Theory", where "CDT and any decision procedure to which CDT would self-modify see losing money to the blackmailer as the best available action."

In the kind of voting dilemma where a coalition of UDT agents will coordinate to achieve higher-utility outcomes, an agent who became a son-of-CDT agent at age 20 will coordinate with the group insofar as she expects her decision to be correlated with other agents' due to events that happened after she turned 20 (such as "the summer after my 20th birthday, we hung out together and converged a lot in how we think about voting theory"). But she'll refuse to coordinate for reasons like "we hung out a lot the summer before my 20th birthday", "we spent our whole childhoods and teen years living together and learning from the same teachers", and "we all have similar decision-making faculties due to being members of the same species". There's no principled reason to draw this temporal distinction; it's just an artifact of the fact that we started from CDT, and CDT is a flawed decision theory.

Regarding the third distinction ("prescribing a certain kind of output vs. prescribing a step-by-step mental procedure for achieving that kind of output"), I'd say that it's primarily the criterion of rightness that MIRI-cluster researchers care about. This is part of why the paper is called "Functional Decision Theory" and not (e.g.) "Algorithmic Decision Theory": the focus is explicitly on "what outcomes do you produce?", not on how you produce them.

(Thus, an FDT agent can cooperate with another agent whenever the latter agent's input-output relations match FDT's prescription in the relevant dilemmas, regardless of what computations they do to produce those outputs.)

The main reasons I think academic decision theory should spend more time coming up with algorithms that satisfy their decision rules are that (a) this has a track record of clarifying what various decision rules actually prescribe in different dilemmas, and (b) this has a track record of helping clarify other issues in the "understand what good reasoning is" project (e.g., logical uncertainty) and how they relate to decision theory.

bmg @ 2019-11-23T00:50 (+9)

I agree that these three distinctions are important

"Picking policies based on whether they satisfy a criterion X" vs. "Picking policies that happen to satisfy a criterion X". (E.g., trying to pick a utilitarian policy vs. unintentionally behaving utilitarianly while trying to do something else.)

"Trying to follow a decision rule Y 'directly' or 'on the object level'" vs. "Trying to follow a decision rule Y by following some other decision rule Z that you think satisfies Y". (E.g., trying to naïvely follow utilitarianism without any assistance from sub-rules, heuristics, or self-modifications, vs. trying to follow utilitarianism by following other rules or mental habits you've come up with that you expected to make you better at selecting utilitarianism-endorsed actions.)

"A decision rule that prescribes outputting some action or policy and doesn't care how you do it" vs. "A decision rule that prescribes following a particular set of cognitive steps that will then output some action or policy". (E.g., a rule that says 'maximize the aggregate welfare of moral patients' vs. a specific mental algorithm intended to achieve that end.)

The second distinction here is most closely related to the one I have in mind, although I wouldn’t say it’s the same. Another way to express the distinction I have in mind is that it’s between (a) a normative claim and (b) a process of making decisions.

“Hedonistic utilitarianism is correct” would be a non-decision-theoretic example of (a). “Making decisions on the basis of coinflips” would be an example of (b).

In the context of decision theory, of course, I am thinking of R_CDT as an example of (a) and P_CDT as an example of (b).

I now have the sense I’m probably not doing a good job of communicating what I have in mind, though.

The main reason is that the many forms of weird, inconsistent, and poorly-generalizing behavior prescribed by CDT and EDT suggest that there are big holes in our current understanding of how decision-making works, holes deep enough that we've even been misunderstanding basic things at the level of "decision-theoretic criterion of rightness".

I guess my view here is that exploring normative claims will probably only be pretty indirectly useful for understanding “how decision-making works,” since normative claims don’t typically seem to have any empirical/mathematical/etc. implications. For example, to again use a non-decision-theoretic example, I don’t think that learning that hedonistic utilitarianism is true would give us much insight into the computer science or cognitive science of decision-making. Although we might have different intuitions here.

It's that there are things that currently seem fundamentally confusing about the nature of decision-making, and resolving those confusions seems like it would help clarify a lot of questions about how optimization works. That's part of why these issues strike me as natural for academic philosophers to take a swing at (while also being continuous with theoretical computer science, game theory, etc.).

I agree that this is a worthwhile goal and that philosophers can probably contribute to it. I guess I’m just not sure that the question that most academic decision theorists are trying to answer -- and the literature they’ve produced on it -- will ultimately be very relevant.

The fact that CDT doesn't endorse itself (while other theories do), the fact that it needs self-modification abilities in order to perform well by its own lights (and other theories don't), and the fact that the theory it endorses is a strange frankenstein theory (while there are simpler, cleaner theories available) would all be strikes against CDT on their own.

The fact that R_CDT is “self-effacing” -- i.e. the fact that it doesn’t always recommend following P_CDT -- definitely does seem like a point of intuitive evidence against R_CDT.

But I think R_UDT also has an important point in its disfavor. It fails to satisfy what might be called the “Don’t Make Things Worse Principle,” which says that: It’s not rational to take decisions that will definitely make things worse. Will’s Bomb case is an example of a case where R_UDT violates the this principle, which is very similar to his “Guaranteed Payoffs Principle.”

There’s then a question of which of these considerations is more relevant, when judging which of the two normative theories is more likely to be correct. The failure of R_UDT to satisfy the “Don’t Make Things Worse Principle” seems more important to me, but I don’t really know how to argue for this point beyond saying that this is just my intuition. I think that the failure of R_UDT to satisfying this principle -- or something like it -- is also probably the main reason why many philosophers find it intuitively implausible.

(IIRC the first part of Reasons and Persons is mostly a defense of the view that the correct theory of rationality may be self-effacing. But I’m not really familiar with the state of arguments here.)

In the kind of voting dilemma where a coalition of UDT agents will coordinate to achieve higher-utility outcomes, an agent who became a son-of-CDT agent at age 20 will coordinate with the group insofar as she expects her decision to be correlated with other agents' due to events that happened after she turned 20 (such as "the summer after my 20th birthday, we hung out together and converged a lot in how we think about voting theory"). But she'll refuse to coordinate for reasons like "we hung out a lot the summer before my 20th birthday", "we spent our whole childhoods and teen years living together and learning from the same teachers", and "we all have similar decision-making faculties due to being members of the same species". There's no principled reason to draw this temporal distinction; it's just an artifact of the fact that we started from CDT, and CDT is a flawed decision theory.

I actually don’t think the son-of-CDT agent, in this scenario, will take these sorts of non-causal correlations into account at all. (Modifying just yourself to take non-causual correlations into account won’t cause you to achieve better outcomes here.) So I don’t think there should be any weird “Frankenstein” decision procedure thing going on.

….Thinking more about it, though, I’m now less sure how much the different normative decision theories should converge in their recommendations about AI design. I think they all agree that we should build systems that one-box in Newcomb-style scenarios. I think they also agree that, if we’re building twins, then we should design these twins to cooperate in twin prisoner’s dilemmas. But there may be some other contexts where acausal cooperation considerations do lead to genuine divergences. I don’t have very clear/settled thoughts about this, though.

RobBensinger @ 2019-11-23T03:06 (+7)

But I think R_UDT also has an important point in its disfavor. It fails to satisfy what might be called the “Don’t Make Things Worse Principle,” which says that: It’s not rational to take decisions that will definitely make things worse. Will’s Bomb case is an example of a case where R_UDT violates the this principle, which is very similar to his “Guaranteed Payoffs Principle.”

I think "Don't Make Things Worse" is a plausible principle at first glance.

One argument against this principle is that CDT endorses following it if you must, but would prefer to self-modify to stop following it (since doing so has higher expected causal utility). The general policy of following the "Don't Make Things Worse Principle" makes things worse.

Once you've already adopted son-of-CDT, which says something like "act like UDT in future dilemmas insofar as the correlations were produced after I adopted this rule, but act like CDT in those dilemmas insofar as the correlations were produced before I adopted this rule", it's not clear to me why you wouldn't just go: "Oh. CDT has lost the thing I thought made it appealing in the first place, this 'Don't Make Things Worse' feature. If we're going to end up stuck with UDT plus extra theoretical ugliness and loss-of-utility tacked on top, then why not just switch to UDT full stop?"

A more general argument against the Bomb intuition pump is that it involves trading away larger amounts of utility in most possible world-states, in order to get a smaller amount of utility in the Bomb world-state. From Abram Demski's comments:

[...] In Bomb, the problem clearly stipulates that an agent who follows the FDT recommendation has a trillion trillion to one odds of doing better than an agent who follows the CDT/EDT recommendation. Complaining about the one-in-a-trillion-trillion chance that you get the bomb while being the sort of agent who takes the bomb is, to an FDT-theorist, like a gambler who has just lost a trillion-trillion-to-one bet complaining that the bet doesn't look so rational now that the outcome is known with certainty to be the one-in-a-trillion-trillion case where the bet didn't pay well.

[...] One way of thinking about this is to say that the FDT notion of "decision problem" is different from the CDT or EDT notion, in that FDT considers the prior to be of primary importance, whereas CDT and EDT consider it to be of no importance. If you had instead specified 'bomb' with just the certain information that 'left' is (causally and evidentially) very bad and 'right' is much less bad, then CDT and EDT would regard it as precisely the same decision problem, whereas FDT would consider it to be a radically different decision problem.

Another way to think about this is to say that FDT "rejects" decision problems which are improbable according to their own specification. In cases like Bomb where the situation as described is by its own description a one in a trillion trillion chance of occurring, FDT gives the outcome only one-trillion-trillion-th consideration in the expected utility calculation, when deciding on a strategy.

[...] This also hopefully clarifies the sense in which I don't think the decisions pointed out in (III) are bizarre. The decisions are optimal according to the very probability distribution used to define the decision problem.

There's a subtle point here, though, since Will describes the decision problem from an updated perspective -- you already know the bomb is in front of you. So UDT "changes the problem" by evaluating "according to the prior". From my perspective, because the very statement of the Bomb problem suggests that there were also other possible outcomes, we can rightly insist to evaluate expected utility in terms of those chances.

Perhaps this sounds like an unprincipled rejection of the Bomb problem as you state it. My principle is as follows: you should not state a decision problem without having in mind a well-specified way to predictably put agents into that scenario. Let's call the way-you-put-agents-into-the-scenario the "construction". We then evaluate agents on how well they deal with the construction.

For examples like Bomb, the construction gives us the overall probability distribution -- this is then used for the expected value which UDT's optimality notion is stated in terms of.

For other examples, as discussed in Decisions are for making bad outcomes inconsistent, the construction simply breaks when you try to put certain decision theories into it. This can also be a good thing; it means the decision theory makes certain scenarios altogether impossible.

bmg @ 2019-11-23T12:12 (+6)

One argument against this principle is that CDT endorses following it if you must, but would prefer to self-modify to stop following it (since doing so has higher expected causal utility).

A more general argument against the Bomb intuition pump is that it involves trading away larger amounts of utility in most possible world-states, in order to get a smaller amount of utility in the Bomb world-state.

This just seems to be the point that R_CDT is self-effacing: It says that people should not follow P_CDT, because following other decision procedures will produce better outcomes in expectation.

I definitely agree that R_CDT is self-effacing in this way (at least in certain scenarios). The question is just whether self-effacingness or failure to satisfy "Don't Make Things Worse" is more relevant when trying to judge the likelihood of a criterion of rightness being correct. I'm not sure whether it's possible to do much here other than present personal intuitions.

The point that R_UDT only violates the "Don't Make Things Worse" principle only infrequently seems relevant, but I'm still not sure this changes my intuitions very much.

If we're going to end up stuck with UDT plus extra theoretical ugliness and loss-of-utility tacked on top, then why not just switch to UDT full stop?

I may just be missing something, but I don't see what this theoretical ugliness is. And I don't intuitively find the ugliness/elegance of the decision procedure recommend by a criterion of rightness to be very relevant when trying to judge whether the criterion is correct.

[[EDIT: Just an extra thought on the fact that R_CDT is self-effacing. My impression is that self-effacingness is typically regarded as a relatively weak reason to reject a moral theory. For example, a lot of people regard utilitarianism as self-effacing both because it's costly to directly evaluate the utility produced by actions and because others often react poorly to people who engage in utilitarian-style reasoning -- but this typically isn't regarded as a slam-dunk reasons to believe that utilitarianism is false. I think the SEP article on consequentialism is expressing a pretty mainstream position when it says: "[T]here is nothing incoherent about proposing a decision procedure that is separate from one’s criterion of the right.... Criteria can, thus, be self-effacing without being self-refuting." Insofar as people don't tend to buy self-effacingness as a slam-dunk argument against the truth of moral theories, it's not clear why they should buy it as a slam-dunk argument against the truth of normative decision theories.]]

ESRogs @ 2019-11-26T01:31 (+7)

is more relevant when trying to judge the likelihood of a criterion of rightness being correct

Sorry to drop in in the middle of this back and forth, but I am curious -- do you think it's quite likely that there is a single criterion of rightness that is objectively "correct"?

It seems to me that we have a number of intuitive properties (meta criteria of rightness?) that we would like a criterion of rightness to satisfy (e.g. "don't make things worse", or "don't be self-effacing"). And so far there doesn't seem to be any single criterion that satisfies all of them.

So why not just conclude that, similar to the case with voting and Arrow's theorem, perhaps there's just no single perfect criterion of rightness.

In other words, once we agree that CDT doesn't make things worse, but that UDT is better as a general policy, is there anything left to argue about about which is "correct"?

EDIT: Decided I had better go and read your Realism and Rationality post, and ended up leaving a lengthy comment there.

bmg @ 2019-11-26T23:03 (+5)

Sorry to drop in in the middle of this back and forth, but I am curious -- do you think it's quite likely that there is a single criterion of rightness that is objectively "correct"?

It seems to me that we have a number of intuitive properties (meta criteria of rightness?) that we would like a criterion of rightness to satisfy (e.g. "don't make things worse", or "don't be self-effacing"). And so far there doesn't seem to be any single criterion that satisfies all of them.

So why not just conclude that, similar to the case with voting and Arrow's theorem, perhaps there's just no single perfect criterion of rightness.

Happy to be dropped in on :)

I think it's totally conceivable that no criterion of rightness is correct (e.g. because the concept of a "criterion of rightness" turns out to be some spooky bit of nonsense that doesn't really map onto anything in the real world.)

I suppose the main things I'm arguing are just that:

-

When a philosopher expresses support for a "decision theory," they are typically saying that they believe some claim about what the correct criterion of rightness is.

-

Claims about the correct criterion of rightness are distinct from decision procedures.

-

Therefore, when a member of the rationalist community uses the word "decision theory" to refer to a decision procedure, they are talking about something that's pretty conceptually distinct from what philosophers typically have in mind. Discussions about what decision procedure performs best or about what decision procedure we should build into future AI systems [[EDIT: or what decision procedure most closely matches our preferences about decision procedures]] don't directly speak to the questions that most academic "decision theorists" are actually debating with one another.

I also think that, conditional on there being a correct criterion of rightness, R_CDT is more plausible than R_UDT. But this is a relatively tentative view. I'm definitely not a super hardcore R_CDT believer.

It seems to me that we have a number of intuitive properties (meta criteria of rightness?) that we would like a criterion of rightness to satisfy (e.g. "don't make things worse", or "don't be self-effacing"). And so far there doesn't seem to be any single criterion that satisfies all of them.

So why not just conclude that, similar to the case with voting and Arrow's theorem, perhaps there's just no single perfect criterion of rightness.

I guess here -- in almost definitely too many words -- is how I think about the issue here. (Hopefully these comments are at least somewhat responsive to your question.)

It seems like following general situation is pretty common: Someone is initially inclined to think that anything with property P will also have property Q1 and Q2. But then they realize that properties Q1 and Q2 are inconsistent with one another.

One possible reaction to this situation is to conclude that nothing actually has property P. Maybe the idea of property P isn't even conceptually coherent and we should stop talking about it (while continuing to independently discuss properties Q1 and Q2). Often the more natural reaction, though, is to continue to believe that some things have property P -- but just drop the assumption that these things will also have both property Q1 and property Q2.

This obviously a pretty abstract description, so I'll give a few examples. (No need to read the examples if the point seems obvious.)

Ethics: I might initially be inclined to think that it's always ethical (property P) to maximize happiness and that it's always unethical to torture people. But then I may realize that there's an inconsistency here: in at least rare circumstances, such as ticking time-bomb scenarios where torture can extract crucial information, there may be no decision that is both happiness maximizing (Q1) and torture-avoiding (Q2). It seems like a natural reaction here is just to drop either the belief that maximizing happiness is always ethical or that torture is always unethical. It doesn't seem like I need to abandon my belief that some actions have the property of being ethical.

Theology: I might initially be inclined to think that God is all-knowing, all-powerful, and all-good. But then I might come to believe (whether rightly or not) that, given the existance of evil, these three properties are inconsistent. I might then continue to believe that God exists, but just drop my belief that God is all-good. (To very awkwardly re-express this in the language of properties: This would mean dropping my belief that any entity that has the property of being God also has the property of being all-good).

Politician-bashing: I might initially be inclined to characterize some politician both as an incompetent leader and as someone who's successfully carrying out an evil long-term plan to transform the country. Then I might realize that these two characterizations are in tension with one another. A pretty natural reaction, then, might be to continue to believe the politician exists -- but just drop my belief that they're incompetent.

To turn to the case of the decision-theoretic criterion of rightness, I might initially be inclined to think that the correct criterion of rightness will satisfy both "Don't Make Things Worse" and "No Self-Effacement." It's now become clear, though, that no criterion of rightness can satisfy both of these principles. I think it's pretty reasoanble, then, to continue to believe that there's a correct criterion of rightness -- but just drop the belief that the correct criterion of rightness will also satisfy "No Self-Effacement."

ESRogs @ 2019-11-27T03:31 (+7)

Thanks! This is helpful.

It seems like following general situation is pretty common: Someone is initially inclined to think that anything with property P will also have property Q1 and Q2. But then they realize that properties Q1 and Q2 are inconsistent with one another.

One possible reaction to this situation is to conclude that nothing actually has property P. Maybe the idea of property P isn't even conceptually coherent and we should stop talking about it (while continuing to independently discuss properties Q1 and Q2). Often the more natural reaction, though, is to continue to believe that some things have property P -- but just drop the assumption that these things will also have both property Q1 and property Q2.